JSON Parser Benchmarking

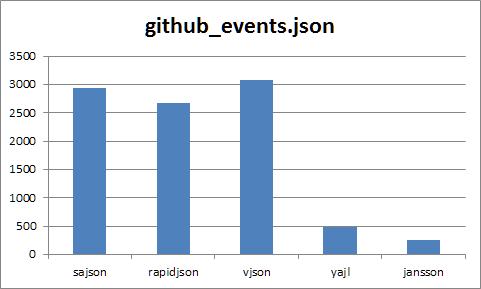

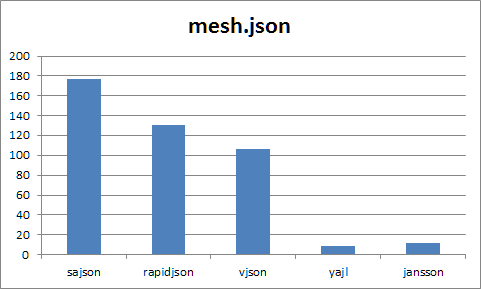

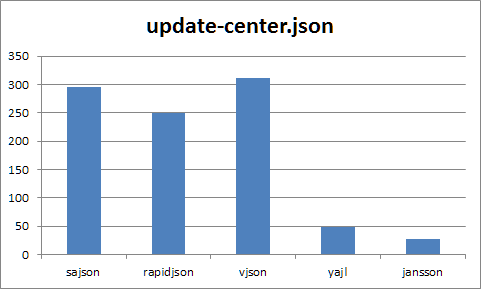

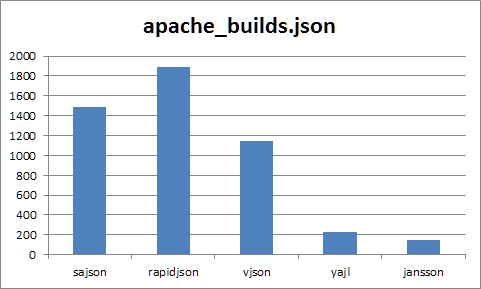

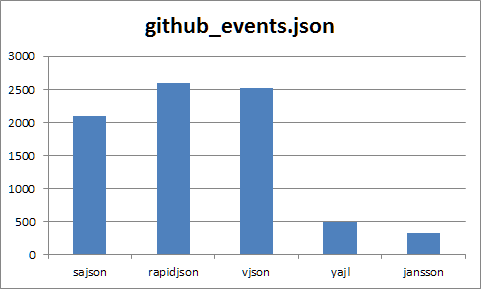

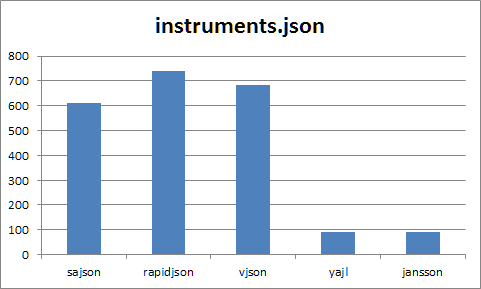

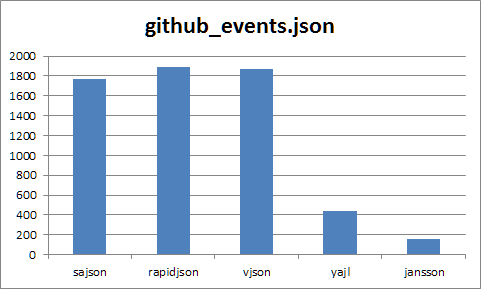

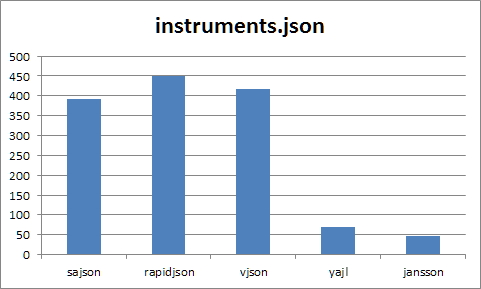

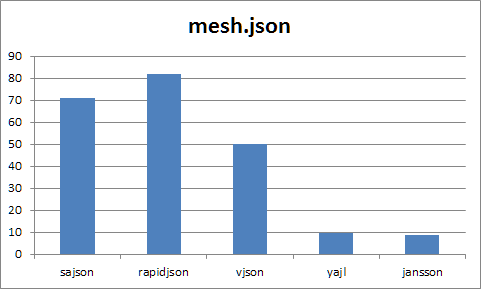

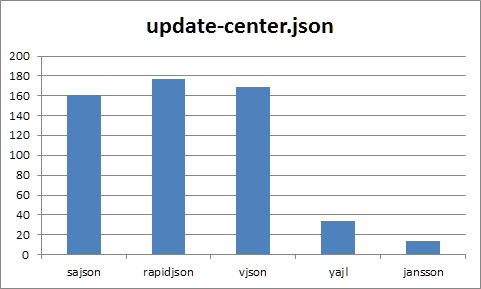

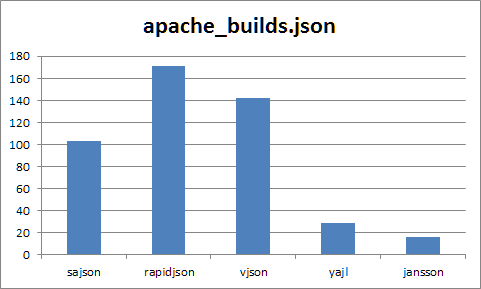

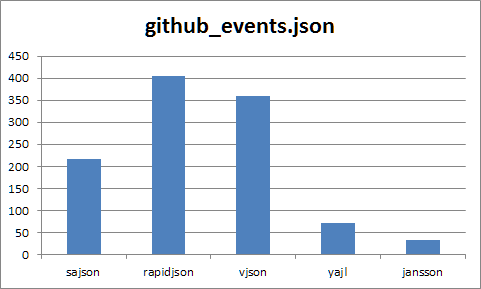

With the caveat that each parser provides different functionality and access to the resulting parse tree, I benchmarked sajson, rapidjson, vjson, YAJL, and Jansson. My methodology was simple: given large-ish real-world JSON files, parse each one as many times as possible in one second. To include the cost of reading the parse tree in the benchmark, I then iterated over the entire document and counted the strings, numbers, object values, array elements, etc.
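
The post doesn't show its harness. The following is a minimal C++17 sketch of the methodology described above, not the code actually used. It assumes a hypothetical `Node` tree type (sajson, rapidjson, and the others each expose their own, different tree API) and hypothetical helpers `count_values` and `iterations_within`. Because the deadline is checked before each iteration, the last parse may run slightly past the budget.

```cpp
#include <chrono>
#include <cstddef>
#include <vector>

// Hypothetical minimal parse tree. Each real parser (sajson, rapidjson,
// vjson, YAJL, Jansson) exposes its own, different tree API.
enum class Kind { Null, Bool, Number, String, Array, Object };

struct Node {
    Kind kind = Kind::Null;
    std::vector<Node> children;  // array elements, or object values (keys omitted)
};

struct Counts {
    std::size_t nulls = 0, bools = 0, numbers = 0, strings = 0;
    std::size_t array_elements = 0, object_values = 0;
};

// Recursively visit every node so the benchmark also pays for reading the tree.
void count_values(const Node& n, Counts& c) {
    switch (n.kind) {
        case Kind::Null:   ++c.nulls; break;
        case Kind::Bool:   ++c.bools; break;
        case Kind::Number: ++c.numbers; break;
        case Kind::String: ++c.strings; break;
        case Kind::Array:  c.array_elements += n.children.size(); break;
        case Kind::Object: c.object_values += n.children.size(); break;
    }
    for (const Node& child : n.children) count_values(child, c);
}

// Run parse_and_walk back to back until `budget` elapses and return how many
// iterations were started before the deadline (parses per second for 1 s).
template <typename F>
std::size_t iterations_within(F parse_and_walk,
                              std::chrono::steady_clock::duration budget =
                                  std::chrono::seconds(1)) {
    const auto start = std::chrono::steady_clock::now();
    std::size_t n = 0;
    while (std::chrono::steady_clock::now() - start < budget) {
        parse_and_walk();
        ++n;
    }
    return n;
}
```

A real run would pass a lambda that parses the file's bytes with one of the libraries and then walks the resulting tree. Keeping the counts live (for example, printing them at the end) stops the compiler from optimizing the walk away.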

The documents are:

- apache_builds.json: Data from the Apache Jenkins installation. Mostly it's an array of three-element objects with string keys and string values.

- github_events.json: JSON data from GitHub's events feature. Nested objects several levels deep, mostly strings, but also contains booleans, nulls, and numbers.

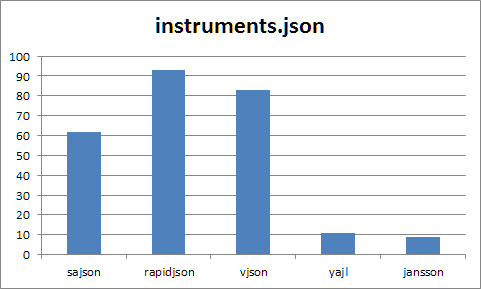

- instruments.json: A very long array of many-key objects.

- mesh.json: 3D mesh data. Consists almost entirely of floating-point numbers.

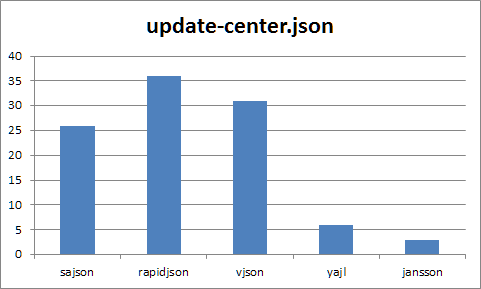

- update-center.json: Also from Jenkins, though I'm not sure where I found it.

apache_builds.json, github_events.json, and instruments.json are pretty-printed with a great deal of inter-element whitespace.
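
Every one of those whitespace bytes still has to be scanned and skipped by the parser. As a rough way to quantify it (a measurement that is not part of the original benchmark), the hypothetical C++17 function `interelement_whitespace_fraction` below reports the fraction of bytes that are JSON whitespace (space, tab, LF, CR) lying outside string literals.

```cpp
#include <cstddef>
#include <string_view>

// Fraction of bytes that are JSON whitespace between tokens, i.e. outside
// string literals. Escaped quotes inside strings (\") are handled so they
// don't end the string early. Assumes the input is well-formed JSON.
double interelement_whitespace_fraction(std::string_view json) {
    if (json.empty()) return 0.0;
    std::size_t ws = 0;
    bool in_string = false;
    bool escaped = false;
    for (char ch : json) {
        if (in_string) {
            if (escaped) escaped = false;        // byte after a backslash
            else if (ch == '\\') escaped = true;
            else if (ch == '"') in_string = false;
        } else if (ch == '"') {
            in_string = true;
        } else if (ch == ' ' || ch == '\t' || ch == '\n' || ch == '\r') {
            ++ws;
        }
    }
    return static_cast<double>(ws) / static_cast<double>(json.size());
}
```

Running it over each test file would show how much of a pretty-printed document is layout rather than data; minified files such as mesh.json would be expected to score near zero.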

Now for the results. The Y axis is parses per second, so higher is faster.
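
Because the documents differ in size, a parses-per-second figure can't be compared directly across files. A small sketch of the unit conversion, using hypothetical names (`from_parse_rate`, `Throughput`) and example numbers rather than measured results: time per parse is the reciprocal of the rate, and throughput is the rate multiplied by the file size.

```cpp
#include <cstddef>

// Hypothetical helper: convert a parses-per-second reading for one file
// into time per parse and byte throughput (MB = 10^6 bytes).
struct Throughput {
    double microseconds_per_parse;
    double megabytes_per_second;
};

Throughput from_parse_rate(double parses_per_second, std::size_t file_bytes) {
    return {1e6 / parses_per_second,
            parses_per_second * static_cast<double>(file_bytes) / 1e6};
}
```

For example, a hypothetical 2,000,000-byte file parsed 1,000 times per second takes 1,000 µs per parse, a throughput of 2,000 MB/s.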

Core 2 Duo E6850, Windows 7, Visual Studio 2010, x86

Core 2 Duo E6850, Windows 7, Visual Studio 2010, AMD64

Atom D2700, Ubuntu 12.04, clang 3.0, AMD64

Raspberry Pi

Conclusions

sajson compares favorably to rapidjson and vjson, all of which stomp the C-based YAJL and Jansson parsers. 64-bit builds are noticeably faster, presumably because the additional registers help. Raspberry Pis are slow. :)