Visual Question Answering (original) (raw)

- Home

- People

- Code

- Demo

- Download

- Evaluation

- Challenge

- Browse

- Visualize

- Workshop

- Sponsors

- Terms

- External

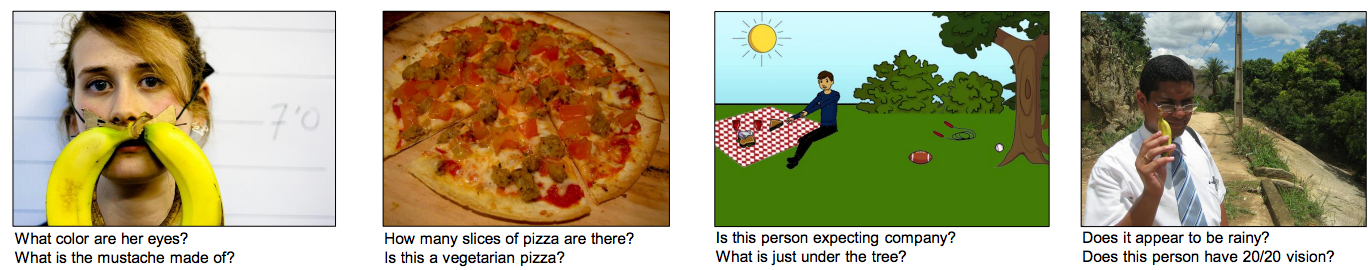

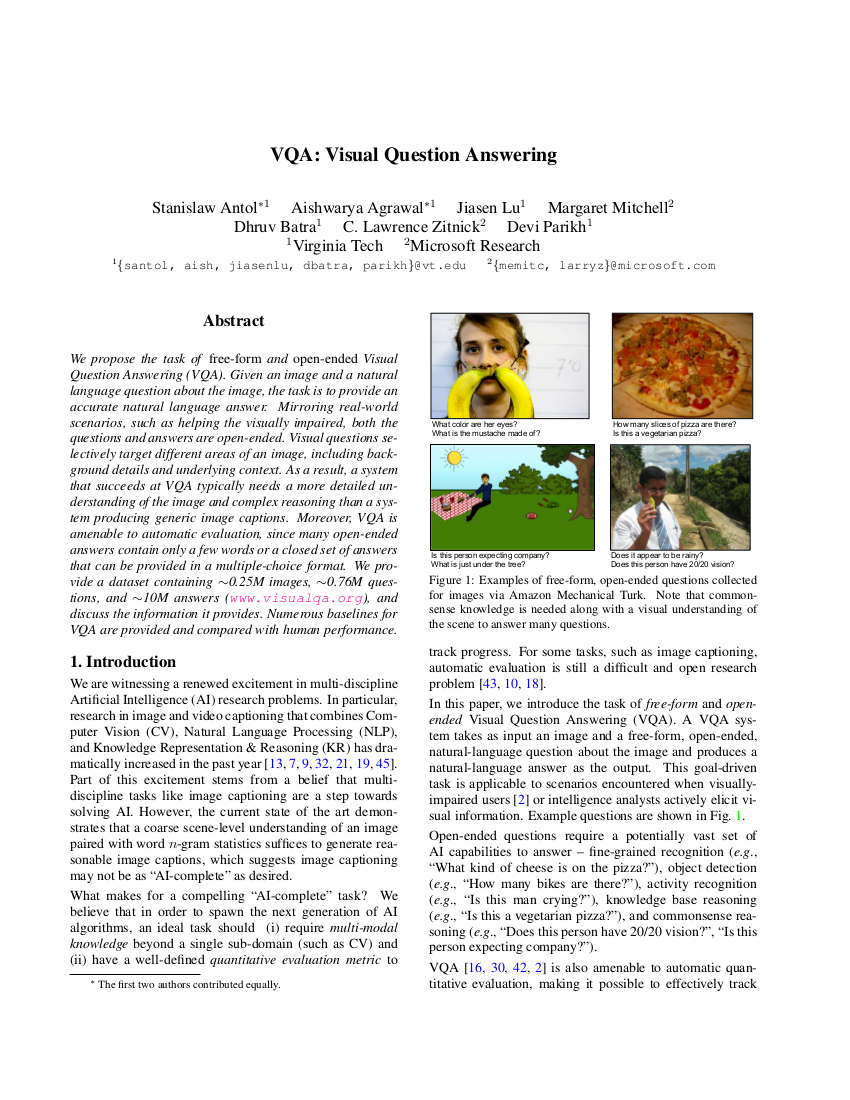

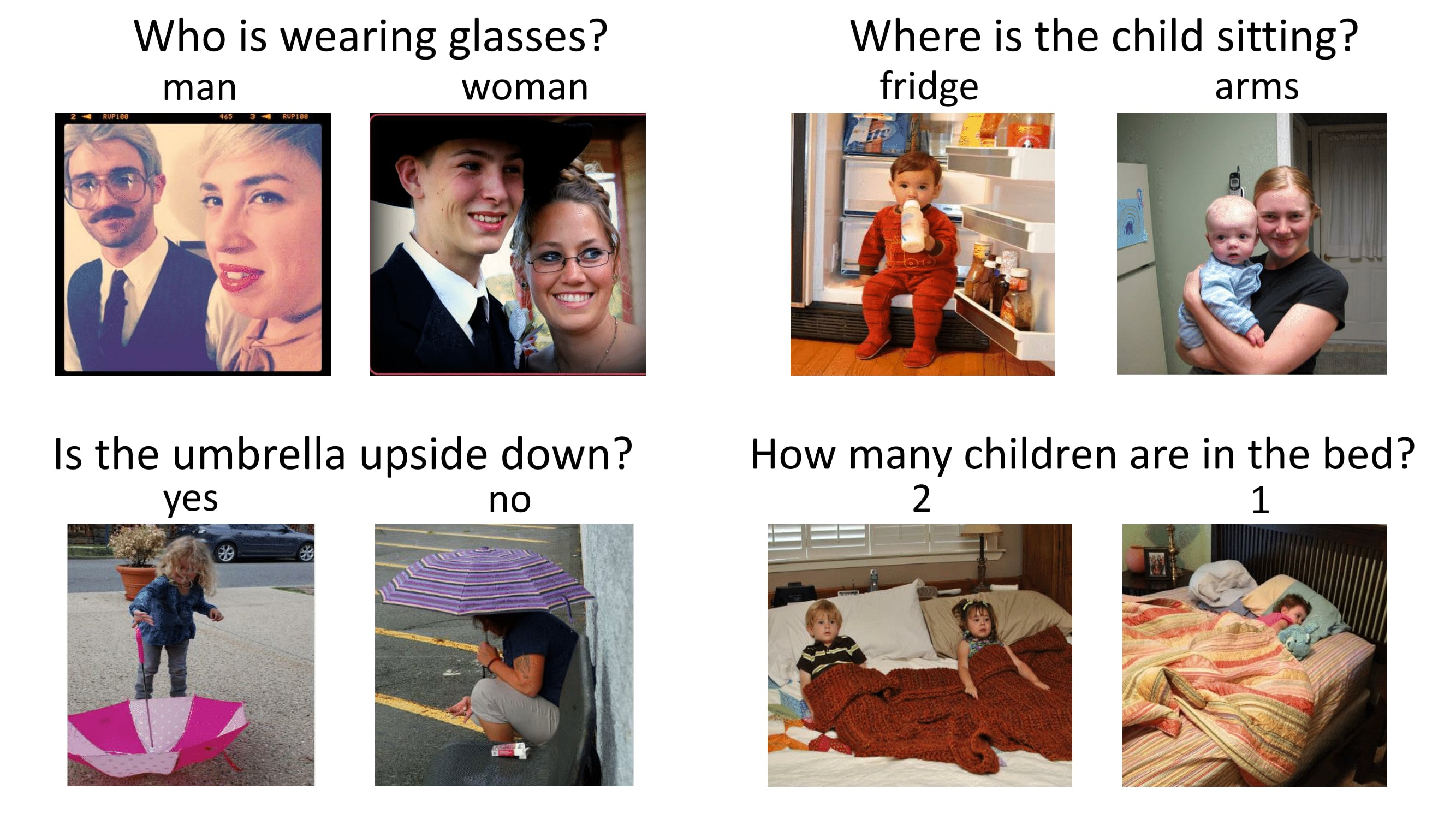

What is VQA?

VQA is a new dataset containing open-ended questions about images. These questions require an understanding of vision, language and commonsense knowledge to answer.

- 265,016 images (COCO and abstract scenes)

- At least 3 questions (5.4 questions on average) per image

- 10 ground truth answers per question

- 3 plausible (but likely incorrect) answers per question

- Automatic evaluation metric

Dataset

Details on downloading the latest dataset may be found on the download webpage.

- April 2017: Full release (v2.0)

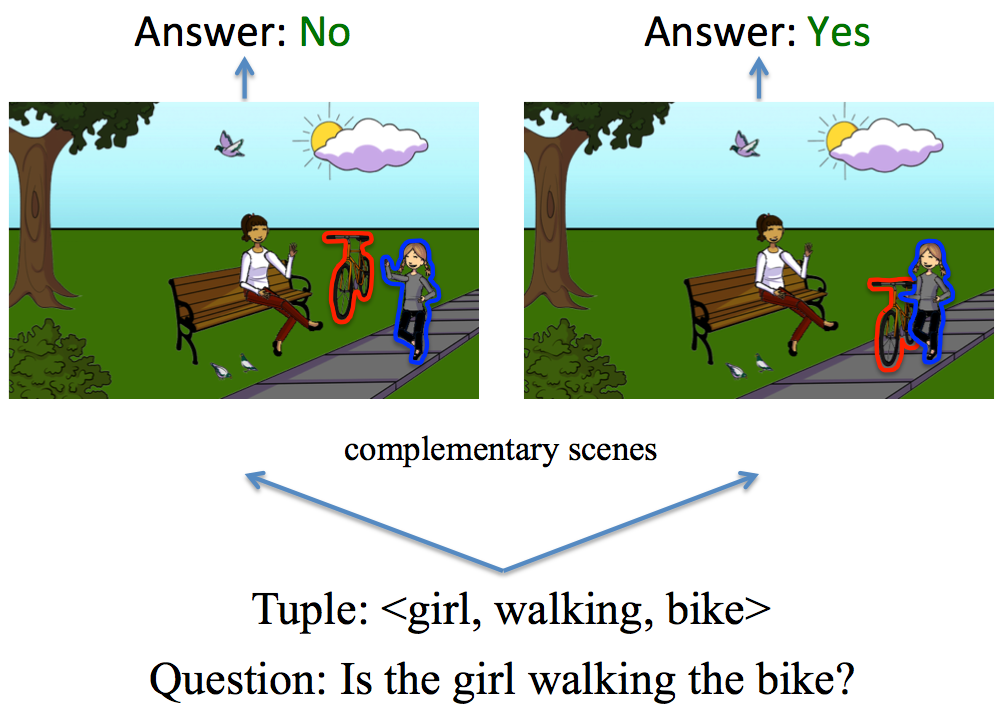

Balanced Real Images

204,721 COCO images

(all of current train/val/test)1,105,904 questions

11,059,040 ground truth answers

⊕

March 2017: Beta v1.9 release

Balanced Real Images

- 123,287 COCO images

(only train/val) - 658,111 questions

- 6,581,110 ground truth answers

- 1,974,333 plausible answers

Balanced Binary Abstract Scenes

31,325 abstract scenes

(only train/val)33,383 questions

333,830 ground truth answers

⊕

October 2015: Full release (v1.0)

Real Images

- 204,721 COCO images

(all of current train/val/test) - 614,163 questions

- 6,141,630 ground truth answers

- 1,842,489 plausible answers

Abstract Scenes

50,000 abstract scenes

150,000 questions

1,500,000 ground truth answers

450,000 plausible answers

250,000 captions

⊕

July 2015: Beta v0.9 release123,287 COCO images (all of train/val)

369,861 questions

3,698,610 ground truth answers

1,109,583 plausible answers

⊕

June 2015: Beta v0.1 release10,000 COCO images (from train)

30,000 questions

300,000 ground truth answers

90,000 plausible answers

Papers

Making the V in VQA Matter: Elevating the Role of Image Understanding in Visual Question Answering (CVPR 2017)

Download the paper

@InProceedings{balanced_vqa_v2,

author = {Yash Goyal and Tejas Khot and Douglas Summers{-}Stay and Dhruv Batra and Devi Parikh},

title = {Making the {V} in {VQA} Matter: Elevating the Role of Image Understanding in {V}isual {Q}uestion {A}nswering},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2017},

}

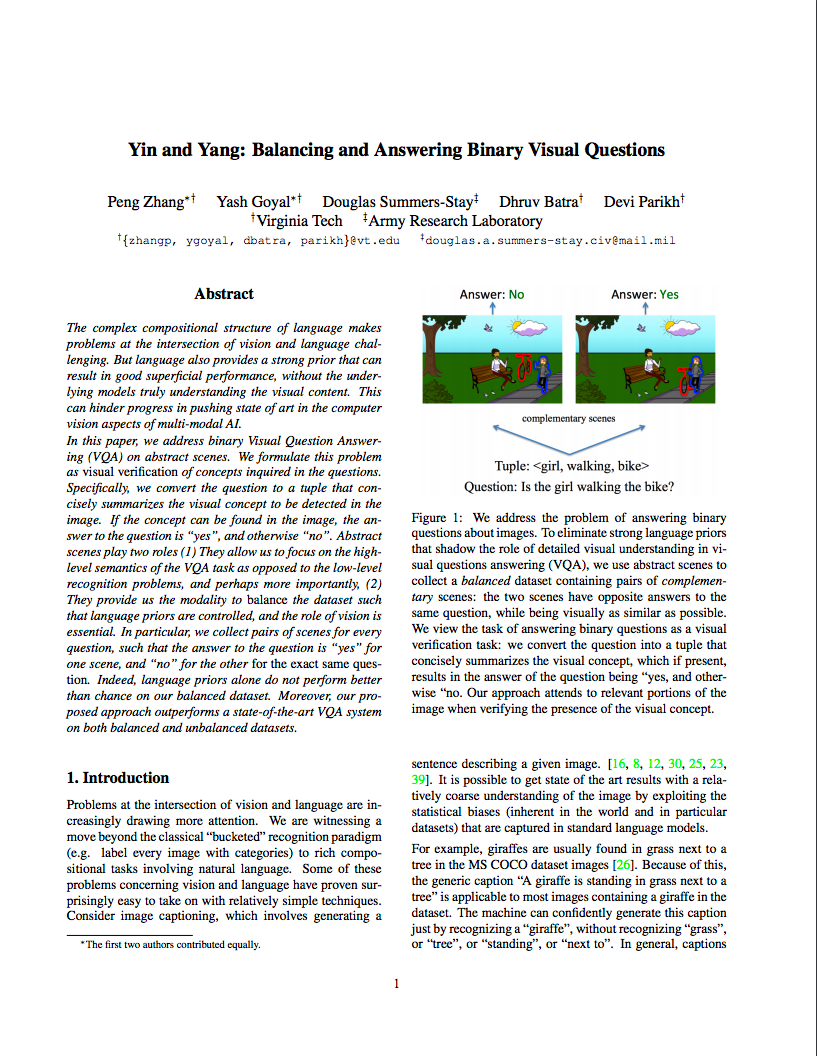

Yin and Yang: Balancing and Answering Binary Visual Questions (CVPR 2016)

Download the paper

@InProceedings{balanced_binary_vqa,

author = {Peng Zhang and Yash Goyal and Douglas Summers{-}Stay and Dhruv Batra and Devi Parikh},

title = {{Y}in and {Y}ang: Balancing and Answering Binary Visual Questions},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2016},

}

VQA: Visual Question Answering (ICCV 2015)

Download the paper

@InProceedings{{VQA},

author = {Stanislaw Antol and Aishwarya Agrawal and Jiasen Lu and Margaret Mitchell and Dhruv Batra and C. Lawrence Zitnick and Devi Parikh},

title = {{VQA}: {V}isual {Q}uestion {A}nswering},

booktitle = {International Conference on Computer Vision (ICCV)},

year = {2015},

}