Pulkit Agrawal

| Pulkit Agrawal I am an Associate Professor in the Department of Electrical Engineering and Computer Science (EECS) at MIT. My lab is part of the Computer Science and Artificial Intelligence Lab (CSAIL), is affiliated with the Laboratory for Information and Decision Systems (LIDS), and is involved with the NSF AI Institute for Artificial Intelligence and Fundamental Interactions (IAIFI). I completed my Ph.D. at UC Berkeley and my undergraduate studies at IIT Kanpur. I co-founded SafelyYou Inc., which builds fall prevention technology, and I am an advisor to Tutor Intelligence, Lab0 Inc., and Common Sense Machines. Follow @pulkitology / LinkedIn / Email / CV / Biography / Google Scholar |  |

|---|

Research

My overarching research interest is to build machines with manipulation and locomotion abilities similar to those of humans. These machines will automatically and continuously learn about their environment and exhibit both common sense and physical intuition. I refer to this line of work as "computational sensorimotor learning". It encompasses problems in perception, control, hardware design, robotics, reinforcement learning, and other learning approaches to control. My past work has also drawn inspiration from cognitive science and neuroscience.

Ph.D. Thesis (Computational Sensorimotor Learning) / Thesis Talk / Bibtex

TEDxMIT Talk: Why machines can play chess but can't open doors? (i.e., why is robotics hard?)

Recent Awards to Lab Members

Pulkit receives the IIT Kanpur Young Alumnus Award.

Pulkit receives the 2024 IEEE Early Academic Career Award in Robotics and Automation.

Meenal Parakh wins the 2024 Charles and Jennifer Johnson MEng Thesis Award.

Idan Shenfeld and Zhang-Wei Hong win the 2024 Qualcomm Innovation Fellowship.

Srinath Mahankali wins the 2024 Jeremy Gerstle UROP Award for undergraduate research.

Srinath Mahankali wins the 2024 Barry Goldwater Scholarship.

Best Paper Award at the Conference on Robot Learning (CoRL) 2021 for our work on in-hand object reorientation.

Research Group

The lab is an unusual collection of folks working on something that seems inconceivable, but is not impossible in our lifetime: General Artificial Intelligence. Life is short, do what you must do :-) I like to call my group the Improbable AI Lab.

Post Docs

Haoshu Fang

Branden Romero

Graduate Students

Antonia Bronars

Gabe Margolis

Zhang-Wei Hong

Nolan Fey

Younghyo Park

Jyothish Pari

Idan Shenfeld

Aviv Netanyahu

Richard Li

Martin Peticco

Nitish Dashora

Seungwook Han

Master of Engineering (MEng) Students and Undergraduate Researchers (UROPs)

Srinath Mahankali, Jagdeep Bhatia, Arthur Hu, Gregory Pylypovych, Kevin Garcia, Yash Prabhu, Locke Cai.

Visiting Researchers

Sandor Felber, Lars Ankile

Openings

We have openings for Ph.D. Students, PostDocs, and MIT UROPs/SuperUROPs. If you would like to apply for the Ph.D. program, please apply directly to MIT EECS admissions. For all other positions, send me an e-mail with your resume.

Recent Talks

Pathway to Robotic Intelligence, MIT Schwarzman College of Computing Talk, 2024.

Making Robots as Intelligent as ChatGPT, Forbes, 2023.

Robot Learning for the Real World, Forum for Artificial Intelligence, UT Austin, March 2023.

Fun with Robots and Machine Learning, Robotics Colloquium, University of Washington, Nov 2022.

Navigating Through Contacts, RSS 2022 Workshop on The Science of Bumping into Things.

Coming of Age of Robot Learning, Technion Robotics Seminar (April 14, 2022) / MIT Robotics Seminar (March 2022).

Rethinking Robot Learning, Learning to Learn: Robotics Workshop, ICRA'21.

Self-Supervised Robot Learning, Robotics Seminar, Robot Learning Seminar, MILA.

Challenges in Real-World Reinforcement Learning, IAIFI Seminar, MIT.

The Task Specification Problem, Embodied Intelligence Seminar, MIT.

| Pre-Prints | |

|---|---|

|

General Reasoning Requires Learning to Reason from the Get-go Seungwook Han, Jyo Pari, Sam Gershman, Pulkit Agrawal arXiv, 2025 paper /bibtex Achieving true reasoning requires a new paradigm for pre-training based on rewards and iterative computation. |

|

Known Unknowns: Out-of-Distribution Property Prediction in Materials and Molecules Nofit Segal*, Aviv Netanyahu*, Kevin Greenman, Pulkit Agrawal†, Rafael Gómez-Bombarelli† (*equal contribution; †equal advising) Workshop on AI for Accelerated Materials Design, NeurIPS 2024 (Oral) paper /code /bibtex Extrapolative property prediction in materials science. |

|

Bridging the Sim-to-Real Gap for Athletic Loco-Manipulation Nolan Fey, Gabriel B. Margolis, Martin Peticco, Pulkit Agrawal Workshop on Robot Learning, ICLR 2025 (Oral) paper /bibtex Enhancing sim-to-real transfer for extreme whole-body manipulation. |

|

Language Model Personalization via Reward Factorization Idan Shenfeld*, Felix Faltings*, Pulkit Agrawal, Aldo Pacchiano In submission paper /bibtex A framework for personalizing large models, assuming that human preferences lie on a low-dimensional manifold. |

| Publications | |

|---|---|

|

From Imitation to Refinement – Residual RL for Precise Visual Assembly Lars Ankile, Anthony Simeonov, Idan Shenfeld, Marcel Torne, Pulkit Agrawal ICRA, 2025 paper /project page /code /bibtex Refining behavior-cloned diffusion model policies using RL. |

| Vegetable Peeling: A Case Study in Constrained Dexterous Manipulation Tao Chen, Eric Cousineau, Naveen Kuppuswamy, Pulkit Agrawal ICRA, 2025 project page /arXiv A robotic system that peels vegetables with a dexterous robot hand. | |

|

ORSO: Accelerating Reward Design via Online Reward Selection and Policy Optimization Chen Bo Calvin Zhang, Zhang-Wei Hong, Aldo Pacchiano, Pulkit Agrawal ICLR, 2025 paper /bibtex Casting reward selection as a model selection problem leads to faster learning (up to 8x) and better performance (up to 2x) when training RL agents, with provable regret guarantees. |

|

Efficient Diffusion Transformer Policies with Mixture of Expert Denoisers for Multitask Learning Moritz Reuss*, Jyo Pari*, Pulkit Agrawal, Rudolf Lioutikov (*equal contribution) ICLR, 2025 paper /bibtex MoDE is a novel architecture that uses sparse experts and noise-conditioned routing. |

| Diffusion Policy Policy Optimization Allen Z. Ren, Justin Lidard, Lars L. Ankile, Anthony Simeonov, Pulkit Agrawal, Anirudha Majumdar, Benjamin Burchfiel, Hongkai Dai, Max Simchowitz ICLR, 2025 paper /code /bibtex DPPO is an algorithmic framework for fine-tuning diffusion-based policies using reinforcement learning. | |

|

EyeSight Hand: Design of a Fully-Actuated Dexterous Robot Hand with Integrated Vision-Based Tactile Sensors and Compliant Actuation Branden Romero*, Hao-Shu Fang*, Pulkit Agrawal, Edward Adelson IROS, 2024 paper /project page /bibtex A dexterous hand with proprioceptive actuation fully covered with tactile sensing. |

| Reconciling Reality through Simulation: A Real-To-Sim-to-Real Approach for Robust Manipulation Marcel Torne Villasevil, Anthony Simeonov, Zechu Li, April Chan, Tao Chen, Abhishek Gupta, Pulkit Agrawal RSS, 2024 paper /project page /bibtex A framework to train robots on scans of real-world scenes. | |

|

Few-Shot Task Learning through Inverse Generative Modeling Aviv Netanyahu, Yilun Du, Antonia Bronars, Jyothish Pari, Joshua Tenenbaum, Tianmin Shu, Pulkit Agrawal NeurIPS, 2024 paper /project page /code /bibtex Few-shot continual learning via generative modeling. |

|

Random Latent Exploration for Deep Reinforcement Learning Srinath Mahankali, Zhang-Wei Hong, Ayush Sekhari, Alexander Rakhlin, Pulkit Agrawal ICML, 2024 paper /project page /code /bibtex State-of-the-art exploration by optimizing the agent to achieve randomly sampled latent goals. |

|

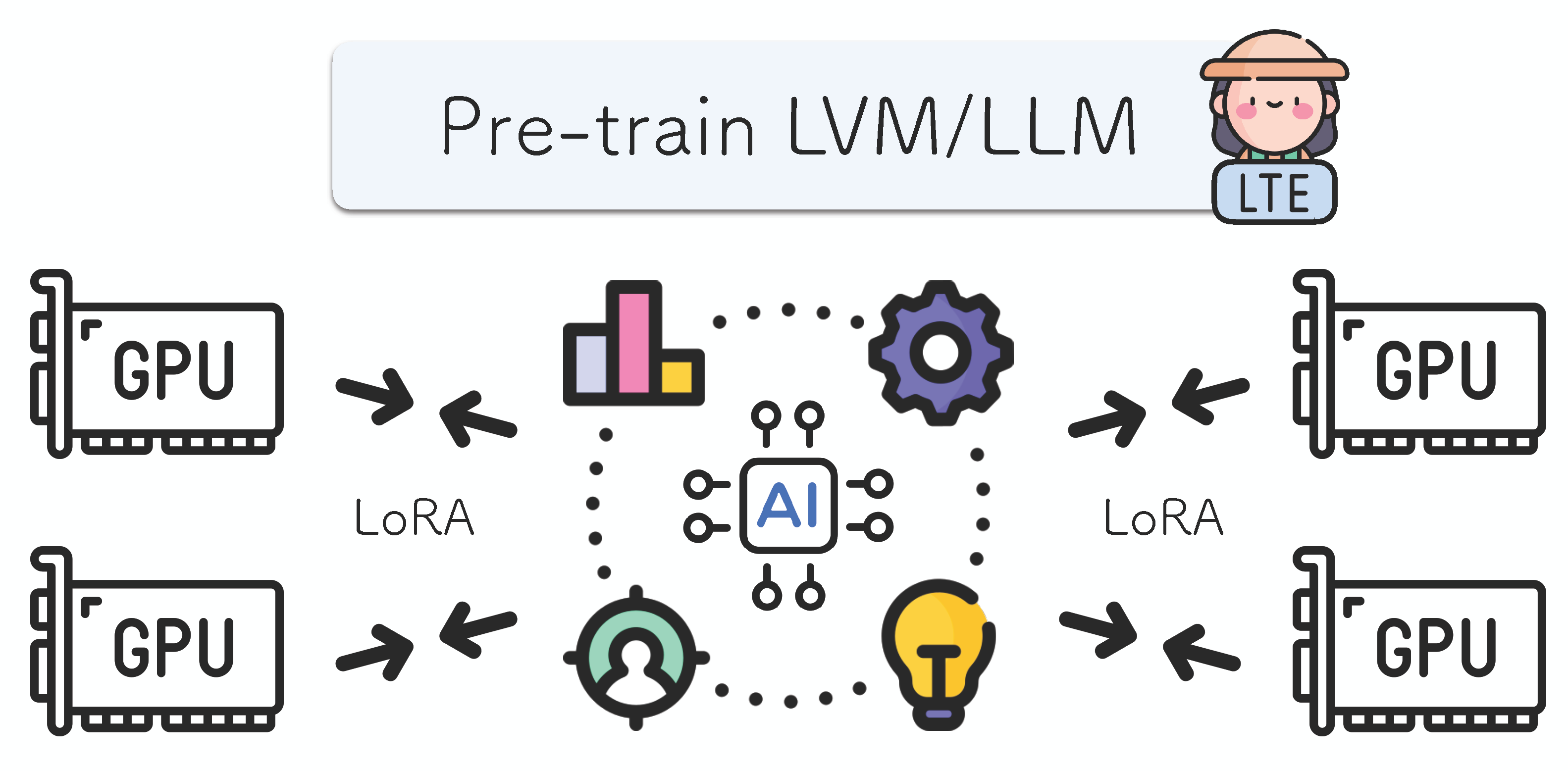

Training Neural Networks From Scratch with Parallel Low-Rank Adapters Minyoung Huh, Brian Cheung, Jeremy Bernstein, Phillip Isola, Pulkit Agrawal arXiv, 2024 paper /project page /bibtex A method for parallel training of large models on computers with limited memory. |

|

Value Augmented Sampling for Language Model Alignment and Personalization Seungwook Han, Idan Shenfeld, Akash Srivastava, Yoon Kim, Pulkit Agrawal Workshop on Reliable and Responsible Foundation Models, ICLR 2024 (Oral) paper /bibtex An algorithm for inference-time augmentation of large language models. |

|

Lifelong Robot Learning with Human Assisted Language Planners Meenal Parakh*, Alisha Fong*, Anthony Simeonov, Abhishek Gupta, Tao Chen, Pulkit Agrawal (*equal contribution) ICRA, 2024 paper /project page /bibtex An LLM-based task planner that can learn new skills, opening the door to continual learning. |

|

Learning Force Control for Legged Manipulation Tifanny Portela, Gabriel B. Margolis, Yandong Ji, Pulkit Agrawal ICRA, 2024 paper / project page /bibtex Learning to control the force applied by a legged robot's arm for compliant and forceful manipulation. |

| |

Curiosity-driven Red-teaming for Large Language Models Zhang-Wei Hong, Idan Shenfeld, Tsun-Hsuan Wang, Yung-Sung Chuang, Aldo Pareja, James R. Glass, Akash Srivastava, Pulkit Agrawal ICLR, 2024 paper /code /bibtex |

|

Maximizing Quadruped Velocity by Minimizing Energy Srinath Mahankali*, Chi-Chang Lee*, Gabriel B. Margolis, Zhang-Wei Hong, Pulkit Agrawal ICRA, 2024 paper /project page /bibtex Principled energy minimization increases a robot's agility. |

|

JUICER: Data-Efficient Imitation Learning for Robotic Assembly Lars Ankile, Anthony Simeonov, Idan Shenfeld, Pulkit Agrawal IROS, 2024 paper /project page /code /bibtex Learning complex assembly skills from few human demonstrations. |

|

Rank2Reward: Learning Shaped Reward Functions from Passive Video Daniel Yang, Davin Tjia, Jacob Berg, Dima Damen, Pulkit Agrawal, Abhishek Gupta ICRA, 2024 paper /project page /code /bibtex Learning reward functions from videos of human demonstrations. |

|

Everyday finger: a robotic finger that meets the needs of everyday interactive manipulation Rubén Castro Ornelas, Tomás Cantú, Isabel Sperandio, Alexander H. Slocum, Pulkit Agrawal ICRA, 2024 paper /project page /bibtex A robotic finger designed to perform everyday tasks. |

| Visual Dexterity: In-Hand Reorientation of Novel and Complex Object Shapes Tao Chen, Megha Tippur, Siyang Wu, Vikash Kumar, Edward Adelson, Pulkit Agrawal Science Robotics, 2023 paper /project page /bibtex A real-time controller that dynamically reorients complex and novel objects by any amount using a single depth camera. | |

|

Compositional Foundation Models for Hierarchical Planning Anurag Ajay*, Seungwook Han*, Yilun Du*, Shuang Li, Abhi Gupta, Tommi Jaakkola, Josh Tenenbaum, Leslie Kaelbling, Akash Srivastava, Pulkit Agrawal (*equal contribution) NeurIPS, 2023 paper /project page /bibtex Composing existing foundation models operating on different modalities to solve long-horizon tasks. |

|

Breadcrumbs to the Goal: Goal-Conditioned Exploration from Human-in-the-Loop Feedback Marcel Torne, Max Balsells, Zihan Wang, Samedh Desai, Tao Chen, Pulkit Agrawal, Abhishek Gupta NeurIPS, 2023 paper /project page /code /bibtex A method for guiding goal-directed exploration with asynchronous human feedback. |

|

[Beyond Uniform Sampling: Offline Reinforcement Learning with Imbalanced Datasets](https://arxiv.org/pdf/2310.04413.pdf) Zhang-Wei Hong, Aviral Kumar, Sathwik Karnik, Abhishek Bhandwaldar, Akash Srivastava, Joni Pajarinen, Romain Laroche, Abhishek Gupta, Pulkit Agrawal NeurIPS, 2023 paper /bibtex /code Optimizing the sampling distribution enables offline RL to learn a good policy from skewed datasets composed primarily of sub-optimal trajectories. |

|

Shelving, Stacking, Hanging: Relational Pose Diffusion for Multi-modal Rearrangement Anthony Simeonov, Ankit Goyal*, Lucas Manuelli*, Lin Yen-Chen, Alina Sarmiento, Alberto Rodriguez, Pulkit Agrawal**, Dieter Fox** (*equal contribution, **equal advising) CoRL, 2023 paper /project page /code /bibtex Relational rearrangement with multi-modal placing and generalization over scene layouts via diffusion and local scene conditioning. |

|

Learning to See Physical Properties with Active Sensing Motor Policies Gabriel B. Margolis, Xiang Fu, Yandong Ji, Pulkit Agrawal Conference on Robot Learning (CoRL), 2023 paper /project page /bibtex Learning to perceive the physical properties of terrain in front of the robot (i.e., a digital twin). |

|

Visual Pre-training for Navigation: What Can We Learn from Noise? Yanwei Wang, Ching-Yun Ko, Pulkit Agrawal IROS 2023, NeurIPS 2022 Workshop paper /code /project page / bibtex Learning to navigate by moving the camera across random images. |

|

Autonomous Robotic Reinforcement Learning with Asynchronous Human Feedback Max Balsells*, Marcel Torne*, Zihan Wang, Samedh Desai, Pulkit Agrawal, Abhishek Gupta CoRL, 2023 paper /bibtex Leveraging crowdsourced non-expert human feedback to guide exploration in robot policy learning. |

|

TGRL: An Algorithm for Teacher Guided Reinforcement Learning Idan Shenfeld, Zhang-Wei Hong, Aviv Tamar, Pulkit Agrawal ICML, 2023 paper /code /project page /bibtex An algorithm for automatically balancing learning from a teacher's guidance and the task reward. |

|

Straightening Out the Straight-Through Estimator: Overcoming Optimization Challenges in Vector Quantized Networks Minyoung Huh, Brian Cheung, Pulkit Agrawal, Phillip Isola International Conference on Machine Learning (ICML), 2023 paper / website / code /bibtex A set of suggestions that simplifies training of vector quantization layers. |

|

Parallel Q-Learning: Scaling Off-policy Reinforcement Learning under Massively Parallel Simulation Zechu Li*, Tao Chen*, Zhang-Wei Hong, Anurag Ajay, Pulkit Agrawal (*equal contribution) ICML, 2023 paper /code /bibtex Scaling Q-learning algorithms to 10K+ workers. |

|

Diagnosis, Feedback, Adaptation: A Human-in-the-Loop Framework for Test-Time Policy Adaptation Andi Peng, Aviv Netanyahu, Mark Ho, Tianmin Shu, Andreea Bobu, Julie Shah, Pulkit Agrawal ICML, 2023 paper /project page /bibtex A step towards using counterfactuals for improving policy adaptation. |

|

Statistical Learning under Heterogeneous Distribution Shift Max Simchowitz*, Anurag Ajay*, Pulkit Agrawal, Akshay Krishnamurthy (*equal contribution) ICML, 2023 paper /bibtex In-distribution error for certain features predicts their out-of-distribution sensitivity. |

|

DribbleBot: Dynamic Legged Manipulation in the Wild Yandong Ji*, Gabriel B. Margolis*, Pulkit Agrawal (*equal contribution) International Conference on Robotics and Automation (ICRA), 2023 paper /project page /bibtex Press: TechCrunch, IEEE Spectrum, NBC Boston, Insider, Yahoo! News, MIT News Dynamic legged object manipulation on diverse terrains with onboard compute and sensing. |

| TactoFind: A Tactile Only System for Object Retrieval Sameer Pai*, Tao Chen*, Megha Tippur*, Edward Adelson, Abhishek Gupta†, Pulkit Agrawal† (*equal contribution, †equal advising) International Conference on Robotics and Automation (ICRA), 2023 paper /project page /bibtex Localize, identify, and fetch a target object in the dark with tactile sensors. | |

|

Is Conditional Generative Modeling all you need for Decision Making? Anurag Ajay*, Yilun Du*, Abhi Gupta*, Josh Tenenbaum, Tommi Jaakkola, Pulkit Agrawal (*equal contribution) ICLR, 2023 (Oral) paper /project page /bibtex Return-conditioned generative models offer a powerful alternative to temporal-difference learning for offline decision making and reasoning with constraints. |

|

Learning to Extrapolate: A Transductive Approach Aviv Netanyahu*, Abhishek Gupta*, Max Simchowitz, Kaiqing Zhang, Pulkit Agrawal (*equal contribution) ICLR, 2023 paper /bibtex Transductive reparameterization converts the out-of-support generalization problem into an out-of-combination generalization problem, which is solvable under low-rank style conditions. |

| |

Harnessing Mixed Offline Reinforcement Learning Datasets via Trajectory Weighting Zhang-Wei Hong, Pulkit Agrawal, Remi Tachet des Combes, Romain Laroche ICLR, 2023 paper /bibtex Return-reweighted sampling of trajectories enables offline RL algorithms to work with skewed datasets. |

|

The Low-Rank Simplicity Bias in Deep Networks Minyoung Huh, Hossein Mobahi, Richard Zhang, Brian Cheung, Pulkit Agrawal, Phillip Isola Transactions on Machine Learning Research (TMLR), 2023 paper /website /bibtex Deeper networks find simpler solutions! Also learn why ResNets overcome the challenges associated with very deep networks. |

|

Redeeming Intrinsic Rewards via Constrained Optimization Eric Chen*, Zhang-Wei Hong*, Joni Pajarinen, Pulkit Agrawal (*equal contribution) NeurIPS, 2022 paper /project page /bibtex Press: MIT News A method that automatically balances exploration bonuses (curiosity) against task rewards, leading to consistent performance improvements. |

|

SE(3)-Equivariant Relational Rearrangement with Neural Descriptor Fields Anthony Simeonov*, Yilun Du*, Lin Yen-Chen, Alberto Rodriguez, Leslie P. Kaelbling, Tomás Lozano-Pérez, Pulkit Agrawal (*equal contribution) CoRL, 2022 paper /project page /code /bibtex Learning relational tasks from a few demonstrations in a way that generalizes to new configurations of objects. |

|

Walk These Ways: Tuning Robot Control for Generalization with Multiplicity of Behavior Gabriel B. Margolis, Pulkit Agrawal CoRL, 2022 (Oral) paper /code /project page /bibtex One learned policy embodies many dynamic behaviors useful for different tasks. |

|

Distributionally Adaptive Meta Reinforcement Learning Anurag Ajay*, Abhishek Gupta*, Dibya Ghosh, Sergey Levine, Pulkit Agrawal (*equal contribution) NeurIPS, 2022 paper /project page /bibtex Being adaptive instead of robust results in faster adaptation to out-of-distribution tasks. |

|

Efficient Tactile Simulation with Differentiability for Robotic Manipulation Jie Xu, Sangwoon Kim, Tao Chen, Alberto Rodriguez, Pulkit Agrawal, Wojciech Matusik, Shinjiro Sueda CoRL, 2022 paper /Code coming soon /project page /bibtex A tactile simulator for complex shapes; policies trained in it transfer to the real world. |

|

Rapid Locomotion via Reinforcement Learning Gabriel Margolis*, Ge Yang*, Kartik Paigwar, Tao Chen, Pulkit Agrawal RSS, 2022 paper /project page /bibtex Press: Wired, Popular Science, TechCrunch, BBC, MIT News High-speed running and spinning on diverse terrains with an RL-based controller. |

|

Stubborn: A Strong Baseline for Indoor Object Navigation Haokuan Luo, Albert Yue, Zhang-Wei Hong, Pulkit Agrawal IROS, 2022 paper /code /bibtex State-of-the-art performance on the Habitat Navigation Challenge without any machine learning for navigation. |

|

Neural Descriptor Fields: SE(3)-Equivariant Object Representations for Manipulation Anthony Simeonov*, Yilun Du*, Andrea Tagliasacchi, Joshua B. Tenenbaum, Alberto Rodriguez, Pulkit Agrawal**, Vincent Sitzmann** (*equal contribution, order determined by coin flip. **equal advising) ICRA, 2022 paper /website and code /bibtex An SE(3)-equivariant method for specifying and finding correspondences, which enables data-efficient object manipulation. |

|

An Integrated Design Pipeline for Tactile Sensing Robotic Manipulators Lara Zlokapa, Yiyue Luo, Jie Xu, Michael Foshey, Kui Wu, Pulkit Agrawal, Wojciech Matusik ICRA, 2022 paper /website /bibtex A method for users to easily design a variety of robotic manipulators with integrated tactile sensors. |

|

Stable Object Reorientation using Contact Plane Registration Richard Li, Carlos Esteves, Ameesh Makadia, Pulkit Agrawal ICRA, 2022 paper /bibtex Predicting contact points with a CVAE and plane segmentation improves object generalization and handles multimodality. |

|

Discovering Generalizable Spatial Goal Representations via Graph-based Active Reward Learning Aviv Netanyahu*, Tianmin Shu*, Joshua B. Tenenbaum, Pulkit Agrawal ICML, 2022 paper /bibtex Graph-based one-shot reward learning via active learning for object rearrangement tasks. |

|

Offline RL Policies Should be Trained to be Adaptive Dibya Ghosh, Anurag Ajay, Pulkit Agrawal, Sergey Levine ICML, 2022 paper /bibtex Online adaptation of offline RL policies using evaluation data improves performance. |

|

Topological Experience Replay Zhang-Wei Hong, Tao Chen, Yen-Chen Lin, Joni Pajarinen, Pulkit Agrawal ICLR, 2022 paper /bibtex Sampling data from the replay buffer according to the topological structure of the state space improves performance. |

|

Bilinear Value Networks for Multi-goal Reinforcement Learning Zhang-Wei Hong*, Ge Yang*, Pulkit Agrawal (*equal contribution) ICLR, 2022 paper /bibtex Bilinear decomposition of the Q-value function improves generalization and data efficiency. |

|

Equivariant Contrastive Learning Rumen Dangovski, Li Jing, Charlotte Loh, Seungwook Han, Akash Srivastava, Brian Cheung, Pulkit Agrawal, Marin Soljacic ICLR, 2022 paper /bibtex A study revealing the complementarity of invariance and equivariance in contrastive learning. |

|

Overcoming The Spectral Bias of Neural Value Approximation Ge Yang*, Anurag Ajay*, Pulkit Agrawal (*equal contribution) ICLR, 2022 paper /bibtex Fourier features improve value estimation and consequently data efficiency. |

|

A System for General In-Hand Object Re-Orientation Tao Chen, Jie Xu, Pulkit Agrawal CoRL, 2021 (Best Paper Award) paper /bibtex /project page Press: MIT News A framework for general in-hand object reorientation. |

|

Learning to Jump from Pixels Gabriel Margolis, Tao Chen, Kartik Paigwar, Xiang Fu, Donghyun Kim, Sangbae Kim, Pulkit Agrawal CoRL, 2021 paper /bibtex /project page Press: MIT News A hierarchical control framework for dynamic vision-aware locomotion. |

|

3D Neural Scene Representations for Visuomotor Control Yunzhu Li*, Shuang Li*, Vincent Sitzmann, Pulkit Agrawal, Antonio Torralba (*equal contribution) CoRL, 2021 (Oral) paper /website /bibtex Extreme viewpoint generalization via 3D representations based on Neural Radiance Fields. |

|

An End-to-End Differentiable Framework for Contact-Aware Robot Design Jie Xu, Tao Chen, Lara Zlokapa, Michael Foshey, Wojciech Matusik, Shinjiro Sueda, Pulkit Agrawal RSS, 2021 paper /website /bibtex /video / Press: MIT News A computational method for designing task-specific robotic hands. |

|

Learning Task Informed Abstractions Xiang Fu, Ge Yang, Pulkit Agrawal, Tommi Jaakkola ICML, 2021 paper /website /bibtex An MDP formulation that dissociates task-relevant and task-irrelevant information. |

|

Residual Model Learning for Microrobot Control Joshua Gruenstein, Tao Chen, Neel Doshi, Pulkit Agrawal ICRA, 2021 paper /bibtex A data-efficient learning method for controlling microrobots. |

|

OPAL: Offline Primitive Discovery for Accelerating Offline Reinforcement Learning Anurag Ajay, Aviral Kumar, Pulkit Agrawal, Sergey Levine, Ofir Nachum ICLR, 2021 paper /website /bibtex Learning action primitives for data-efficient online and offline RL. |

|

A Long Horizon Planning Framework for Manipulating Rigid Pointcloud Objects Anthony Simeonov, Yilun Du, Beomjoon Kim, Francois Hogan, Joshua Tenenbaum, Pulkit Agrawal, Alberto Rodriguez CoRL, 2020 paper /website /bibtex A framework that achieves the best of TAMP and robot learning for manipulating rigid objects. |

|

Towards Practical Multi-object Manipulation using Relational Reinforcement Learning Richard Li, Allan Jabri, Trevor Darrell, Pulkit Agrawal ICRA, 2020 paper /website /code /bibtex Combining graph neural networks with curriculum learning to solve long-horizon multi-object manipulation tasks. |

|

Superposition of Many Models into One Brian Cheung, Alex Terekhov, Yubei Chen, Pulkit Agrawal, Bruno Olshausen NeurIPS, 2019 arxiv /video tutorial /code /bibtex A method for storing multiple neural network models for different tasks in a single neural network. |

|

Real-time Video Detection of Falls in Dementia Care Facility and Reduced Emergency Care Glen L Xiong, Eleonore Bayen, Shirley Nickels, Raghav Subramaniam, Pulkit Agrawal, Julien Jacquemot, Alexandre M Bayen, Bruce Miller, George Netscher American Journal of Managed Care, 2019 paper /SafelyYou /bibtex A computer-vision-based fall detection system reduces the number of falls and emergency room visits among people with dementia. |

|

Zero Shot Visual Imitation Deepak Pathak*, Parsa Mahmoudieh*, Michael Luo, Pulkit Agrawal*, Evan Shelhamer, Alexei A. Efros, Trevor Darrell (*equal contribution) ICLR, 2018 (Oral) paper /website /code /slides /bibtex Self-supervised learning of skills helps an agent imitate the task presented as a sequence of images. A forward consistency loss overcomes key challenges of inverse and forward models. |

|

Investigating Human Priors for Playing Video Games Rachit Dubey, Pulkit Agrawal, Deepak Pathak, Alexei A. Efros, Tom Griffiths ICML, 2018 paper /website /youtube cover /media /bibtex An empirical study of various kinds of prior information used by humans to solve video games. Such priors make humans significantly more sample efficient than deep reinforcement learning algorithms. |

|

Learning Instance Segmentation by Interaction Deepak Pathak*, Yide Shentu*, Dian Chen*, Pulkit Agrawal*, Trevor Darrell, Sergey Levine, Jitendra Malik (*equal contribution) CVPR Workshop, 2018 paper /website /bibtex A self-supervised method for learning to segment objects by interacting with them. |

|

Fully Automated Echocardiogram Interpretation in Clinical Practice: Feasibility and Diagnostic Accuracy Jeffrey Zhang, Sravani Gajjala, Pulkit Agrawal, Geoffrey H Tison, Laura A Hallock, Lauren Beussink-Nelson, Mats H Lassen, Eugene Fan, Mandar A Aras, ChaRandle Jordan, Kirsten E Fleischmann, Michelle Melisko, Atif Qasim, Sanjiv J Shah, Ruzena Bajcsy, Rahul C Deo Circulation, 2018 paper /arxiv /bibtex A computer vision method for building a fully automated and scalable analysis pipeline for echocardiogram interpretation. |

|

Curiosity Driven Exploration by Self-Supervised Prediction Deepak Pathak, Pulkit Agrawal, Alexei A. Efros, Trevor Darrell ICML, 2017 arxiv /video /talk /code /project website /bibtex Intrinsic curiosity enables agents to learn useful and generalizable skills without any rewards from the environment. |

|

What Will Happen Next? Forecasting Player Moves in Sports Videos Panna Felsen, Pulkit Agrawal, Jitendra Malik ICCV, 2017 paper /bibtex Forecasting the future moves of players from sports videos. |

|

Combining Self-Supervised Learning and Imitation for Vision-based Rope Manipulation Ashvin Nair*, Dian Chen*, Pulkit Agrawal*, Phillip Isola, Pieter Abbeel, Jitendra Malik, Sergey Levine (*equal contribution) ICRA, 2017 arxiv /website /video /bibtex Self-supervised learning of low-level skills enables a robot to follow a high-level plan specified by a single video demonstration. The code for the paper Zero Shot Visual Imitation subsumes this project's code release. |

|

Learning to Perform Physics Experiments via Deep Reinforcement Learning Misha Denil, Pulkit Agrawal*, Tejas D Kulkarni, Tom Erez, Peter Battaglia, Nando de Freitas ICLR, 2017 arxiv /media /video /bibtex Deep reinforcement learning can equip an agent with the ability to perform experiments for inferring physical quantities of interest. |

|

Reduction in Fall Rate in Dementia Managed Care through Video Incident Review: Pilot Study Eleonore Bayen, Julien Jacquemot, George Netscher, Pulkit Agrawal, Lynn Tabb Noyce, Alexandre Bayen Journal of Medical Internet Research, 2017 paper /bibtex An analysis of how continuous video monitoring and review of falls among individuals with dementia can support better quality of care. |

|

Human Pose Estimation with Iterative Error Feedback Joao Carreira, Pulkit Agrawal, Katerina Fragkiadaki, Jitendra Malik CVPR, 2016 (Spotlight) arxiv /code /bibtex Iterative Error Feedback (IEF) is a self-correcting model that progressively changes an initial solution by feeding back error predictions. In contrast to feedforward CNNs that only capture structure in inputs, IEF captures structure in both the space of inputs and outputs. |

|

Learning to Poke by Poking: Experiential Learning of Intuitive Physics Pulkit Agrawal*, Ashvin Nair*, Pieter Abbeel, Jitendra Malik, Sergey Levine (*equal contribution) NIPS, 2016 (Oral) arxiv /talk /project website /data /bibtex A robot learns how to push objects to target locations by conducting a large number of pushing experiments. The code for the paper Zero Shot Visual Imitation subsumes this project's code release. |

|

What makes ImageNet Good for Transfer Learning? Jacob Huh, Pulkit Agrawal, Alexei A. Efros NIPS LSCVS Workshop, 2016 (Oral) arxiv /project website /code /bibtex An empirical investigation into various factors related to the statistics of the ImageNet dataset that result in transferable features. |

|

Learning Visual Predictive Models of Physics for Playing Billiards Katerina Fragkiadaki*, Pulkit Agrawal*, Sergey Levine, Jitendra Malik (*equal contribution) ICLR, 2016 arxiv /code /bibtex This work explores how an agent can be equipped with an internal model of the dynamics of the external world, and how it can use this model to plan novel actions by running multiple internal simulations (“visual imagination”). |

|

Generic 3D Representation via Pose Estimation and Matching Amir R. Zamir, Tilman Wekel, Pulkit Agrawal, Colin Weil, Jitendra Malik, Silvio Savarese ECCV, 2016 arxiv /website /dataset /code /bibtex A large-scale study of feature learning using an agent's knowledge of its motion. This paper extends our ICCV 2015 paper. |

|

Learning to See by Moving Pulkit Agrawal, Joao Carreira, Jitendra Malik ICCV, 2015 arxiv /code /bibtex Feature learning by making use of an agent's knowledge of its motion. |

|

Analyzing the Performance of Multilayer Neural Networks for Object Recognition Pulkit Agrawal, Ross Girshick, Jitendra Malik ECCV, 2014 arxiv /bibtex A detailed study of how to finetune neural networks and the nature of the learned representations. |

|

Pixels to Voxels: Modeling Visual Representation in the Human Brain Pulkit Agrawal, Dustin Stansbury, Jitendra Malik, Jack Gallant (*equal contribution) arXiv, 2014 arxiv /unpublished results /bibtex Comparing the representations learned by a deep neural network optimized for object recognition against those of the human brain. |

|

The Automatic Assessment of Knowledge Integration Processes in Project Teams Gahgene Gweon, Pulkit Agrawal, Mikesh Udani, Bhiksha Raj, Carolyn Rose Computer Supported Collaborative Learning, 2011 (Best Student Paper Award) arxiv /bibtex A method for identifying important parts of a group conversation directly from speech data. |

| Patents |

|---|

| System and Method for Detecting, Recording and Communicating Events in the Care and Treatment of Cognitively Impaired Persons George Netscher, Julien Jacquemot, Pulkit Agrawal, Alexandre Bayen US Patent: US20190287376A1, 2019 |

| Invariant Object Representation of Images Using Spiking Neural Networks Pulkit Agrawal, Somdeb Majumdar, Vikram Gupta US Patent: US20150278628A1, 2015 |

| Invariant Object Representation of Images Using Spiking Neural Networks Pulkit Agrawal, Somdeb Majumdar US Patent: US20150278641A1, 2015 |

| Service |

|---|

| Program Chair, CoRL 2024; Area Chair, ICML 2021; Area Chair, ICLR 2021; Area Chair, NeurIPS 2020; Area Chair, CoRL 2020, 2019; Reviewer for CVPR, ICCV, ECCV, NeurIPS, ICML, ICLR, RSS, ICRA, IJRR, IJCV, IEEE RA-L, TPAMI, etc. |

| Lab Alumni |

|---|

| Tao Chen, PhD 2024, co-founded a startup. Anurag Ajay, PhD 2024, now at Google. Ruben Castro, MS 2024. Jacob Huh, PhD 2024, now at OpenAI. Anthony Simeonov, PhD 2024, now at Boston Dynamics. Xiang Fu, PhD 2024. Bipasha Sen. Brian Cheung. Andrew Jenkins, MEng 2024, now at Zoosk. Tifanny Portela, visiting student 2023, now a Ph.D. student at ETH Zurich. Yandong Ji, visiting researcher 2023, now a Ph.D. student at UCSD. Meenal Parakh, MEng 2023, now a PhD student at Princeton. Marcel Torne, MS 2023, now a PhD student at Stanford. Alisha Fong, MEng 2023. Alina Sarmiento, Undergraduate 2023, now a PhD student at CMU. Sathwik Karnik, Shreya Kumar, Yaosheng Xu, Bhavya Agrawalla, April Chan, Andrei Spiride, Calvin Zhang, Abhaya Ravikumar, Alex Hu, Isabel Sperandio. Andi Peng, MS 2023, now a PhD student with Julie Shah. Steven Li, visiting researcher 2023, now a PhD student at TU Darmstadt. Abhishek Gupta, PostDoc, now faculty at the University of Washington. Lara Zlokapa, MEng 2022. Haokuan Luo, MEng 2022 (now at Hudson River). Albert Yue, MEng 2022 (now at Hudson River). Matthew Stallone, MEng 2022. Eric Chen, MEng 2021 (now at Aurora). Joshua Gruenstein, 2021 (now CEO, Tutor Intelligence). Alon Z. Kosowsky-Sachs, 2021 (now CTO, Tutor Intelligence). Avery Lamp (now at a stealth startup). Sanja Simonkovj, 2021 (Masters Student). Oran Luzon, 2021 (Undergraduate Researcher). Blake Tickell, 2020 (Visiting Researcher). Ishani Thakur, 2020 (Undergraduate Researcher). |