DoubleField Project Page

CVPR 2022

DoubleField: Bridging the Neural Surface and Radiance Fields for High-fidelity Human Reconstruction and Rendering

Ruizhi Shao¹, Hongwen Zhang¹, He Zhang², Mingjia Chen¹, Yanpei Cao³, Tao Yu¹, Yebin Liu¹

¹Tsinghua University, ²Beihang University, ³Kuaishou Technology

Fig 1. Given sparse multi-view RGB images, our method achieves high-fidelity human reconstruction and rendering.

Abstract

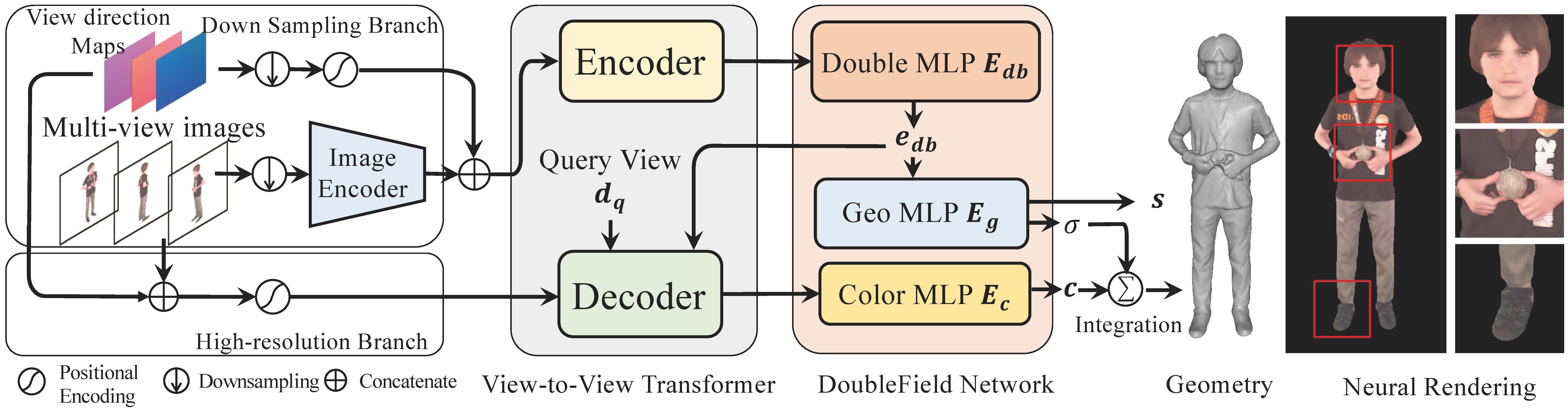

We introduce DoubleField, a novel framework combining the merits of both the surface field and the radiance field for high-fidelity human reconstruction and rendering. Within DoubleField, the surface field and the radiance field are associated by a shared feature embedding and a surface-guided sampling strategy. Moreover, a view-to-view transformer is introduced to fuse multi-view features and learn view-dependent features directly from high-resolution inputs. With the modeling power of DoubleField and the view-to-view transformer, our method significantly improves the reconstruction quality of both geometry and appearance, while supporting direct inference, scene-specific high-resolution finetuning, and fast rendering. The efficacy of DoubleField is validated by quantitative evaluations on several datasets and by qualitative results in a real-world sparse multi-view system, showing its superior capability for high-quality human model reconstruction and photo-realistic free-viewpoint human rendering. Data and source code will be made public for research purposes.
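The abstract says the radiance field is sampled under guidance from the surface field, but this page gives no algorithm for it. The Python sketch below shows one plausible reading, assuming an occupancy field whose 0.5 level set is the surface. It marches coarse samples along a ray, locates the first outside-to-inside crossing, places fine samples in a narrow band around that depth, and alpha-composites them in the standard NeRF style. The function names, the band width, and the sample counts are hypothetical choices for illustration, not the authors' implementation.

```python
# Hypothetical sketch of surface-guided ray sampling; not the DoubleField implementation.
import math
from typing import Callable, List, Optional, Sequence, Tuple

Color = Tuple[float, float, float]


def find_surface_depth(occupancy: Callable[[float], float], near: float, far: float,
                       n_coarse: int = 64, level: float = 0.5) -> Optional[float]:
    """Return the depth where occupancy first crosses `level` (outside -> inside), or None."""
    step = (far - near) / (n_coarse - 1)
    prev_t, prev_o = near, occupancy(near)
    for i in range(1, n_coarse):
        t = near + i * step
        o = occupancy(t)
        if prev_o < level <= o:
            # Linearly interpolate between the two coarse samples that bracket the level set.
            return prev_t + (level - prev_o) / (o - prev_o) * (t - prev_t)
        prev_t, prev_o = t, o
    return None


def surface_guided_samples(occupancy: Callable[[float], float], near: float, far: float,
                           n_fine: int = 16, band: float = 0.05) -> List[float]:
    """Place n_fine depths in a band around the surface; fall back to uniform samples on a miss."""
    t_s = find_surface_depth(occupancy, near, far)
    lo, hi = (near, far) if t_s is None else (max(near, t_s - band), min(far, t_s + band))
    step = (hi - lo) / (n_fine - 1)
    return [lo + i * step for i in range(n_fine)]


def composite(ts: Sequence[float], sigmas: Sequence[float], colors: Sequence[Color]) -> Color:
    """NeRF-style alpha compositing of per-sample densities and colors along one ray."""
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for i, (sigma, color) in enumerate(zip(sigmas, colors)):
        # The last sample gets a very large interval, as in common NeRF implementations.
        delta = ts[i + 1] - ts[i] if i + 1 < len(ts) else 1e10
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for c in range(3):
            rgb[c] += weight * color[c]
        transmittance *= 1.0 - alpha
    return (rgb[0], rgb[1], rgb[2])


if __name__ == "__main__":
    # Toy occupancy: a "body" that fills every depth beyond t = 2.0 along the ray.
    occ = lambda t: 1.0 / (1.0 + math.exp(-50.0 * (t - 2.0)))
    ts = surface_guided_samples(occ, near=1.0, far=3.0)
    rgb = composite(ts, sigmas=[200.0] * len(ts), colors=[(0.8, 0.6, 0.5)] * len(ts))
    print(round(ts[0], 3), round(ts[-1], 3), [round(c, 3) for c in rgb])
```

In this sketch, concentrating the fine samples near the surface spends the sampling budget where color actually forms on an opaque body, while the uniform fallback keeps rays that miss the body well defined.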

[arXiv] [Code (coming soon)]

Overview

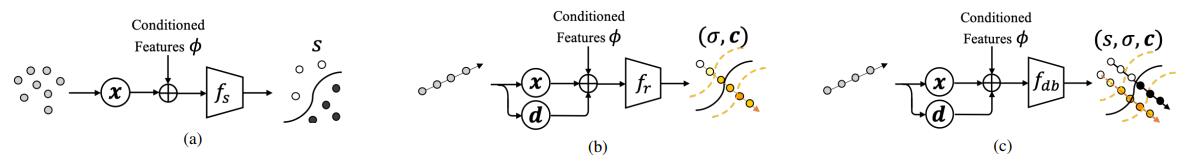

Fig 2. Comparison of different neural field representations. (a) Neural surface field in PIFu. (b) Neural radiance field in PixelNeRF. (c) The proposed DoubleField. The joint implicit function bridges the surface field and the radiance field.
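Fig 2(c) describes a joint implicit function that bridges the two fields through a shared feature embedding. A minimal way to picture this, assuming a per-point input feature (for example a pixel-aligned image feature, as in PIFu) plus a view direction, is a small network whose shared embedding feeds two heads: an occupancy head for the surface field and a density-plus-color head for the radiance field. The layer sizes, activations, random weights, and the class name `JointImplicitFunction` are hypothetical; this is not the authors' architecture.

```python
# Hypothetical toy joint implicit function; not the DoubleField architecture.
import math
import random
from typing import List, Tuple

Vector = List[float]
Layer = Tuple[List[Vector], Vector]  # (weight matrix, bias vector)


def init_layer(rng: random.Random, out_dim: int, in_dim: int) -> Layer:
    bound = 1.0 / math.sqrt(in_dim)
    weights = [[rng.uniform(-bound, bound) for _ in range(in_dim)] for _ in range(out_dim)]
    return weights, [0.0] * out_dim


def dense(layer: Layer, x: Vector) -> Vector:
    weights, bias = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def sigmoid(a: float) -> float:
    # Numerically stable logistic function.
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


class JointImplicitFunction:
    """One shared embedding feeding a surface (occupancy) head and a radiance head."""

    def __init__(self, in_dim: int, hidden: int = 32, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.shared = init_layer(rng, hidden, in_dim)
        self.surface_head = init_layer(rng, 1, hidden)        # occupancy logit
        self.radiance_head = init_layer(rng, 4, hidden + 3)   # density + RGB, also sees view direction

    def __call__(self, point_feature: Vector, view_dir: Vector) -> Tuple[float, float, Vector]:
        embedding = relu(dense(self.shared, point_feature))   # shared by both fields
        occupancy = sigmoid(dense(self.surface_head, embedding)[0])
        raw = dense(self.radiance_head, embedding + list(view_dir))
        density = max(0.0, raw[0])                            # non-negative density
        rgb = [sigmoid(a) for a in raw[1:]]                   # colors in (0, 1)
        return occupancy, density, rgb


if __name__ == "__main__":
    f = JointImplicitFunction(in_dim=8)
    print(f([0.1 * i for i in range(8)], [0.0, 0.0, 1.0]))
```

In this sketch the occupancy head never sees the view direction, so the geometry it encodes is view-independent, while the radiance head's color may change with viewpoint.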

Fig 3. Pipeline of our method for high-fidelity human reconstruction and rendering.
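The abstract states that a view-to-view transformer fuses multi-view features, but this page does not specify its layers. The sketch below swaps in plain single-head scaled dot-product self-attention across the views of one query point, followed by mean pooling, to show the basic idea of attention-based multi-view fusion. The class `CrossViewAttention`, its random weights, and the pooling step are assumptions for illustration, not the paper's transformer.

```python
# Hypothetical cross-view attention fusion; a stand-in, not the view-to-view transformer itself.
import math
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(m: Matrix, x: Vector) -> Vector:
    return [sum(w * a for w, a in zip(row, x)) for row in m]


def softmax(scores: Vector) -> Vector:
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class CrossViewAttention:
    """Single-head self-attention across the N per-view features of one 3D query point."""

    def __init__(self, dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        bound = 1.0 / math.sqrt(dim)

        def make() -> Matrix:
            return [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(dim)]

        self.dim = dim
        self.w_q, self.w_k, self.w_v = make(), make(), make()

    def attend(self, view_feats: List[Vector]) -> List[Vector]:
        """Return one updated feature per view; each one mixes information from all views."""
        q = [matvec(self.w_q, f) for f in view_feats]
        k = [matvec(self.w_k, f) for f in view_feats]
        v = [matvec(self.w_v, f) for f in view_feats]
        scale = 1.0 / math.sqrt(self.dim)
        out = []
        for qi in q:
            weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
            out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(self.dim)])
        return out

    def fuse(self, view_feats: List[Vector]) -> Vector:
        """Mean-pool the attended per-view features into one view-order-independent vector."""
        attended = self.attend(view_feats)
        return [sum(f[c] for f in attended) / len(attended) for c in range(self.dim)]


if __name__ == "__main__":
    rng = random.Random(1)
    feats = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(6)]  # e.g. 6 input views
    print(CrossViewAttention(dim=4).fuse(feats))
```

Because self-attention treats the views as a set and the mean is order-independent, the fused vector does not depend on camera order; in this toy version the per-view outputs of `attend` stand in for view-specific features.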

Results

Fig 4. High-fidelity reconstruction results from only 6 views on the Twindom and THuman2.0 datasets.

Fig 5. High-fidelity reconstruction results from only 5 views on real-world data.

Fig 6. High-fidelity rendering results from only 6 views on the Twindom and THuman2.0 datasets.

Fig 7. High-fidelity rendering results from only 5 views on real-world data.

Citation

Ruizhi Shao, Hongwen Zhang, He Zhang, Mingjia Chen, Yanpei Cao, Tao Yu, and Yebin Liu. "DoubleField: Bridging the Neural Surface and Radiance Fields for High-fidelity Human Reconstruction and Rendering". CVPR 2022

@inproceedings{shao2022doublefield,
  author    = {Shao, Ruizhi and Zhang, Hongwen and Zhang, He and Chen, Mingjia and Cao, Yanpei and Yu, Tao and Liu, Yebin},
  title     = {DoubleField: Bridging the Neural Surface and Radiance Fields for High-fidelity Human Reconstruction and Rendering},
  booktitle = {CVPR},
  year      = {2022}
}