Comparing the Efficacy of Medications for ADHD Using Meta-analysis

Abstract

Objective

Medications used to treat attention-deficit/hyperactivity disorder (ADHD) have been well researched, but comparisons among drugs are hindered by the absence of direct comparative trials.

Methods

We analyzed recently published literature on the pharmacotherapy of ADHD to describe the variability of drug-placebo effect sizes. A literature search was conducted to identify double-blind, placebo-controlled studies of youth with ADHD published after 1979. Meta-analytic regression assessed the influence of medication type and study design features on medication effects.

Results

Twenty-nine trials met criteria and were included in this meta-analysis. These trials studied 15 drugs using 17 different outcome measures of hyperactive, inattentive, impulsive, or oppositional behavior. The most commonly studied treatments were methylphenidate and amphetamine compounds. After stratifying trials by drug class (short-acting stimulant vs long-acting stimulant vs nonstimulant), we found significant differences among classes in both study design variables and effect sizes. The differences among the 3 classes of drug remained significant after correcting for study design variables.

Conclusion

Studies lack uniformity both in how medication effectiveness is assessed and in many other design parameters. Comparing medication effect sizes from different studies will be biased without accounting for variability in study design parameters. Although these differences obscure comparisons among specific medications, the data still allow conclusions about the differential effects of broad classes of medications used to treat ADHD.

Introduction

Attention-deficit/hyperactivity disorder (ADHD) is a neurocognitive disorder with a high worldwide prevalence.[1] For decades, the stimulant medications methylphenidate, dextroamphetamine, and mixed amphetamine salts have been the most common drugs used in the treatment of ADHD. The stimulants increase the availability of synaptic dopamine,[2,3] reduce the overactivity, impulsivity, and inattention characteristic of patients with ADHD, and improve associated behaviors, including on-task behavior, academic performance, and social functioning.[4] Studies demonstrate robust effects in both children and adults,[5] and long-acting formulations extend the duration of action of these medications to 8–12 hours, allowing once-daily dosing.[6–8]

Although stimulants have been the mainstay of ADHD pharmacotherapy, several nonstimulant medications have also shown evidence of efficacy. These include tricyclic antidepressants (TCAs),[9–11] bupropion,[12–14] modafinil,[15,16] monoamine oxidase inhibitors (MAOIs),[17,18] and atomoxetine.[19–21] While the medications that treat ADHD have been well researched, comparisons among drugs are hindered by the absence of head-to-head trials. In the absence of such trials, physicians must rely on qualitative comparisons among published trials, along with their own clinical experience, to draw conclusions about the efficacy of different medication types on ADHD outcomes. Qualitative reviews of the literature are useful for summarizing results and drawing conclusions about general trends, but they cannot easily evaluate and control the many factors associated with study design that influence the apparent medication effect from a single study.

Meta-analysis provides a systematic quantitative framework for assessing the effects of medications reported by different studies. One problem faced by meta-analysis is that different studies use different outcome measures. Comparing such studies is difficult because the meaning of a 1-point difference between drug and placebo groups on a particular outcome measure is typically not the same as the meaning of a 1-point difference on another outcome measure. Meta-analysis partially solves this problem by computing an effect size for each measure. The effect size standardizes the unit of measurement across studies so that a change in 1 point on the effect-size scale has the same meaning in each study. For example, in the case of the effect size known as the standardized mean difference, a 1-point difference means that the drug and placebo groups differ by 1 standard deviation (SD) on the outcome measure.
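As a minimal illustration of that standardization, the Python sketch below computes an SMD from group means, SDs, and sample sizes using the usual pooled-SD formula. The two trials and all of their numbers are invented, and the function names are illustrative rather than taken from the article: one trial uses a scale on which a 6-point difference equals 1 SD, the other a scale on which a 3-point difference equals 1 SD, and both yield the same SMD.

```python
import math

def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))

def smd(mean_drug, sd_drug, n_drug, mean_placebo, sd_placebo, n_placebo):
    """Standardized mean difference: (drug mean - placebo mean) / pooled SD."""
    return (mean_drug - mean_placebo) / pooled_sd(sd_drug, n_drug, sd_placebo, n_placebo)

# Invented trials on two different symptom scales (lower scores = fewer symptoms),
# so a negative SMD here favors the drug.
d_a = smd(20.0, 6.0, 40, 26.0, 6.0, 40)  # 6-point raw difference, SD 6
d_b = smd(10.0, 3.0, 30, 13.0, 3.0, 30)  # 3-point raw difference, SD 3
print(round(d_a, 2), round(d_b, 2))  # -1.0 -1.0
```

Although the raw differences (6 points vs 3 points) are not comparable, both correspond to a 1-SD difference between drug and placebo.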

Comparing effect sizes between studies is questionable if the studies differ substantially on design features that might plausibly influence drug-placebo differences. For example, if a study of one drug using double-blind methodology found a smaller effect size than a study of a second drug that was not blinded, we could not be sure whether the difference in effect size was due to differences in drug efficacy or differences in methodology. Meta-analysis can address this issue by using regression methods to determine whether design features are associated with effect size and whether differences in design features can account for differences among drugs.
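To make the confounding argument concrete, here is a minimal Python sketch of a weighted meta-regression on invented data in which effect size depends only on a design feature (here, crossover vs parallel) and not on the drug. Because drug A happens to be studied mostly in crossover trials, a model with only a drug indicator shows an apparent drug difference that disappears once the design indicator is added. The weights stand in for sample sizes; a full random-effects meta-regression would instead use inverse-variance weights that include the between-study variance, which this sketch omits.

```python
def wls(X, y, w):
    """Weighted least squares: solve (X'WX) b = X'Wy by Gauss-Jordan elimination."""
    n, p = len(y), len(X[0])
    # Augmented normal-equation matrix [X'WX | X'Wy]
    A = [
        [sum(w[i] * X[i][r] * X[i][c] for i in range(n)) for c in range(p)]
        + [sum(w[i] * X[i][r] * y[i] for i in range(n))]
        for r in range(p)
    ]
    for col in range(p):
        # Partial pivoting for numerical stability
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[r][p] / A[r][r] for r in range(p)]

# Invented data: effect size = 0.6 + 0.4 * crossover; the drug plays no role.
drug_a = [1, 1, 1, 0, 0, 0]
crossover = [1, 1, 0, 0, 0, 1]
y = [0.6 + 0.4 * c for c in crossover]
w = [40, 60, 30, 50, 70, 20]  # hypothetical sample sizes used as weights

unadjusted = wls([[1, d] for d in drug_a], y, w)                       # intercept, drug
adjusted = wls([[1, d, c] for d, c in zip(drug_a, crossover)], y, w)   # + design
print(round(unadjusted[1], 3))  # apparent drug effect: 0.251
print(adjusted[1])              # essentially zero once design is adjusted for
```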

The present study applies meta-analysis to published literature on the pharmacotherapy of ADHD. Although there have been prior meta-analyses of stimulants[22–25] and reviews of effect size for long-acting formulations,[26] prior studies have not compared medications while also addressing confounding variables. Our goals were to determine whether: (1) differences in study design among studies might have confounded comparisons of medication efficacy; (2) available studies provided evidence for significant differences in effect sizes among medications; and (3) features of study design influenced the estimate of medication efficacy.

Materials and Methods

A literature search was conducted to identify double-blind, placebo-controlled studies of ADHD in youth published after 1979. We searched the following databases: PubMed, Ovid, ERIC, CINAHL, Medline, PREMEDLINE, the Cochrane database, e-psyche, and Social Sciences Abstracts. In addition, we reviewed presentations from the American Psychiatric Association (APA) and American Academy of Child and Adolescent Psychiatry (AACAP) meetings. We included only studies that used randomized, double-blind methodology with placebo controls, defined ADHD using diagnostic criteria from the Diagnostic and Statistical Manual of Mental Disorders, Third Edition, Revised (DSM-III-R) or Fourth Edition (DSM-IV), and followed subjects for 2 weeks or more. To be included, studies had to present the means and SDs of either change or end point scores for the drug and placebo groups. For studies presenting data on more than 1 fixed dose, we used the highest dose. We excluded studies that rated behavior in laboratory environments, studied fewer than 20 subjects in either the drug or placebo group, were designed to explore appropriate doses for future work, or selected ADHD samples for the presence of a comorbid condition (eg, studies of ADHD among mentally retarded children).
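The selection rules above can be expressed as a single predicate over a study record. This Python sketch is hypothetical: the `StudyRecord` fields and function names are invented for illustration and simply encode the inclusion and exclusion criteria as stated, together with the rule of keeping the highest fixed dose.

```python
from dataclasses import dataclass

@dataclass
class StudyRecord:
    # Hypothetical fields; names are illustrative only.
    year: int
    youth_sample: bool
    randomized: bool
    double_blind: bool
    placebo_controlled: bool
    criteria: str                # e.g. "DSM-III-R" or "DSM-IV"
    weeks_followed: float
    reports_means_and_sds: bool  # change or end point scores, both groups
    laboratory_ratings: bool
    n_drug: int
    n_placebo: int
    dose_finding: bool
    comorbidity_selected: bool

def is_eligible(s: StudyRecord) -> bool:
    """Inclusion and exclusion criteria as described in the Methods text."""
    included = (
        s.year > 1979
        and s.youth_sample
        and s.randomized and s.double_blind and s.placebo_controlled
        and s.criteria in {"DSM-III-R", "DSM-IV"}
        and s.weeks_followed >= 2
        and s.reports_means_and_sds
    )
    excluded = (
        s.laboratory_ratings
        or min(s.n_drug, s.n_placebo) < 20  # fewer than 20 in either group
        or s.dose_finding
        or s.comorbidity_selected
    )
    return included and not excluded

def pick_highest_fixed_dose(arms):
    """For studies reporting several fixed doses, keep only the highest-dose arm."""
    return max(arms, key=lambda arm: arm["dose_mg_per_day"])

# Usage with an invented record
base = StudyRecord(2001, True, True, True, True, "DSM-IV", 4, True, False, 40, 38, False, False)
print(is_eligible(base))  # True
```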

We extracted the following data from each article: name of dependent outcome measure; name of drug; distribution of DSM-IV subtypes in study sample (for studies using DSM-IV criteria); design of study (parallel vs crossover); type of outcome score used (change score vs posttreatment score); type of rater (parent, teacher, clinician, self); mean age of study sample; percentage of male subjects in study sample; dosing method (fixed dose vs titration to best dose); exclusion of nonresponders (yes/no); use of placebo lead-in (yes/no), and year of publication.

Effect sizes for dependent measures in each study were expressed as standardized mean differences (SMD). The SMD is computed by subtracting the mean of the placebo group from the mean of the active drug group and dividing the result by the pooled SD of the 2 groups. Studies reporting change scores provided end point minus baseline scores for the drug and placebo groups; for these studies, the numerator of the SMD is the difference between the groups' mean change scores. For studies reporting end point scores, the numerator is the difference between the groups' mean end point scores. Studies were weighted according to the number of participants included. Our meta-analysis used the random-effects model of DerSimonian and Laird.[27] We used meta-analytic regression to assess the degree to which effect sizes varied with the methodologic features of each study described above, and we used Egger's[28] method to assess publication bias.
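The random-effects pooling step can be sketched in Python as follows, assuming the common large-sample approximation to the variance of an SMD (the article does not state which variance formula was used). Inverse-variance weights are driven mainly by sample size, so larger studies receive more weight. The function returns the pooled estimate, its standard error, the between-study variance tau² from the DerSimonian and Laird method-of-moments estimator, and Cochran's Q; the example inputs are invented.

```python
import math

def smd_variance(d, n_drug, n_placebo):
    """Common large-sample approximation to the sampling variance of an SMD."""
    n = n_drug + n_placebo
    return n / (n_drug * n_placebo) + d**2 / (2 * n)

def dersimonian_laird(effects, variances):
    """Random-effects pooling with the DerSimonian and Laird tau^2 estimator."""
    k = len(effects)
    w = [1 / v for v in variances]  # fixed-effect (inverse-variance) weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0  # between-study variance
    w_star = [1 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return pooled, se, tau2, q

# Invented example: two effect sizes with equal sampling variances
pooled, se, tau2, q = dersimonian_laird([0.2, 0.8], [0.04, 0.04])
# pooled = 0.5, se = 0.3, tau2 = 0.14, Q = 4.5 (up to floating-point rounding)
```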

For each study, every reported outcome measure was treated as a separate data point in the analysis. Several studies provided data on more than 1 measure, which permitted comparisons among measures as well as among drugs. Because measures reported from the same study are not statistically independent of one another, standard statistical procedures would produce inaccurate P values. To address this clustering of measures within studies (intrafamily clustering), variance estimates were adjusted using Huber's[29] formula as implemented in Stata.[30]

This formula is a “theoretical bootstrap” that produces robust statistical tests. The method works by entering the cluster scores (ie, sum of scores within families) into the formula for the estimate of variance. The resulting P values are valid even when observations are not statistically independent.
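A minimal Python sketch of the idea for the simplest case, a weighted mean effect size: each effect size contributes a score w·(d - mean), the scores are summed within each study (cluster), and the variance is built from those cluster sums rather than from the individual scores. This is the Huber sandwich estimator for a weighted mean, not a reproduction of the authors' Stata analysis; statistical packages typically also apply a finite-sample correction, which is omitted here, and the data are invented.

```python
from collections import defaultdict

def clustered_weighted_mean(effects, weights, clusters):
    """Weighted mean with unclustered and cluster-robust (Huber) standard errors."""
    sw = sum(weights)
    mean = sum(w * d for w, d in zip(weights, effects)) / sw
    # Score contribution of each effect size to the estimating equation
    scores = [w * (d - mean) for w, d in zip(weights, effects)]
    # Treating every effect size as independent (each is its own cluster)
    se_unclustered = (sum(u**2 for u in scores) / sw**2) ** 0.5
    # Sum scores within each study, then square the cluster sums
    cluster_sums = defaultdict(float)
    for u, g in zip(scores, clusters):
        cluster_sums[g] += u
    se_robust = (sum(s**2 for s in cluster_sums.values()) / sw**2) ** 0.5
    return mean, se_unclustered, se_robust

# Invented data: study A reports 3 measures that agree, as does study B
mean, se_naive, se_rob = clustered_weighted_mean(
    [0.9, 0.9, 0.9, 0.3, 0.3, 0.3], [1] * 6, ["A", "A", "A", "B", "B", "B"]
)
```

Here the cluster-robust SE (about 0.21) exceeds the unclustered SE (about 0.12), because the 3 measures within each study carry largely redundant information and effectively count as one observation per study.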

Results

Table 1 describes the 29 articles meeting criteria for inclusion in the meta-analysis. Studies are listed more than once if they studied more than 1 drug or if they reported independent studies of the same drug. The studies in Table 1 evaluated 14 drugs using 19 different measures of ADHD symptoms to assess efficacy. Each drug-placebo comparison provided information on more than 1 outcome score. These allowed us to compute 157 effect sizes. Table 2 shows the number of times each medication was studied and the numbers of subjects in the drug and placebo groups from those studies.

Table 1.

Studies Used in the Analysis

| Study | Drug | Mean Dose (mg/d) | Mean Dose (mg/kg/d) | Method of Dosing | N in Drug Group | N in Placebo Group | Mean Age | % Male |
|---|---|---|---|---|---|---|---|---|
| Conners[36] | MPH | 22 | .82 | Best | 20 | 20 | 12 | 95 |
| Taylor[37] | MPH | DNP | DNP | Best | 37 | 37 | 9 | 100 |
| Klorman[38] | MPH | 22.3 | .76 | Best | 44 | 44 | 9 | 84 |
| Conners[12] | Bupropion | DNP | 6 | Best | 52 | 20 | 9 | 90 |
| Schachar[39] | MPH | 31.9 | 1.2 | Best | 37 | 29 | 8 | 77 |
| Manos[40] | MAS | 10.6 | DNP | Best | 42 | 42 | 10 | 79 |
| Manos[40] | MPH | 19.5 | DNP | Best | 42 | 42 | 10 | 79 |
| Zeiner[35] | MPH | DNP | .5 | Best | 36 | 36 | 9 | 100 |
| Bostic[41] | Pemoline | 1.81 | 3 | Best | 21 | 21 | 14 | 86 |
| Pliszka[42] | MAS | 12.5 | .31 | Best | 20 | 18 | 8 | DNP |
| Pliszka[42] | MPH | 25.2 | .39 | Best | 20 | 18 | 8 | DNP |
| James[33] | MAS | 10.3 | .32 | DNP | 35 | 35 | 9 | 60 |
| James[33] | d-Amp | 10.3 | .32 | DNP | 35 | 35 | 9 | 60 |
| James[33] | d-Amp ER | 10.3 | .32 | DNP | 35 | 35 | 9 | 60 |
| Wolraich[7] | MPH | 29.5 | .9 | Best | 97 | 90 | 9 | 87 |
| Wolraich[7] | MPH OROS | 34.3 | 1.1 | Best | 95 | 90 | 9 | 78 |
| Michelson[20] | Atomoxetine | DNP | 1.8 | Fixed | 82 | 83 | 11 | 72 |
| Michelson[43] | Atomoxetine | DNP | 1.3 | Best | 84 | 83 | 10 | 71 |
| Biederman[44] | Atomoxetine | DNP | 2 | Best | 31 | 21 | 10 | 0 |
| Biederman[8] | MAS XR | 30 | DNP | Fixed | 120 | 203 | 9 | 80 |
| Greenhill[6] | MPH MR | 40.7 | 1.28 | Best | 155 | 159 | 9 | 83 |
| Spencer[45] | Atomoxetine | 90 | 2 | Best | 49 | 47 | 10 | 81 |
| Spencer[45] | Atomoxetine | 90 | 2 | Best | 53 | 45 | 10 | 81 |
| Biederman[46] | MPH-LA | DNP | DNP | Best | 63 | 71 | 9 | 77 |
| Wigal[47] | MPH | 32.14 | DNP | Best | 41 | 41 | 10 | 88 |
| Wigal[47] | d-MPH | 18.25 | DNP | Best | 42 | 41 | 10 | 88 |
| Swanson[48] | Modafinil | 395.3 | DNP | Fixed | 120 | 63 | 10 | 74 |
| Biederman[49] | Modafinil | 382.5 | DNP | Fixed | 120 | 63 | 10 | 74 |
| Findling[50] | MPH OROS | 54 | DNP | Best | 89 | 85 | 9 | 70 |
| Findling[50] | MTS | 27 | DNP | Best | 96 | 85 | 9 | 66 |
| Pelham[51] | MTS | 31.6 | DNP | Fixed | DNP | DNP | 10 | 87 |
| Greenhill[52] | Modafinil | 297.5 | DNP | Best | 128 | 66 | 10 | 73 |
| Kelsey[53] | Atomoxetine | DNP | 1.3 | Best | 126 | 60 | 10 | 71 |
| Wilens[34] | MPH OROS | 53.6 | DNP | Best | 87 | 90 | 15 | 80 |
| Spencer[54]a | MAS-XR | 40 | DNP | Fixed | 27 | 24 | 14 | 64 |
| Spencer[54]b | MAS-XR | 40 | DNP | Fixed | 26 | 28 | 14 | 64 |
| Findling[55] | MPH | 30 | DNP | Best | 120 | 39 | 10 | 79 |
| Findling[55] | MPH-MR | 30 | DNP | Best | 120 | 39 | 10 | 81 |

Table 2.

Drug-Placebo Comparisons Tested in the Meta-analysis Studies

| Medication | No. of Studies Testing Each Medication | Number of Subjects in Drug Group | Number of Subjects in Placebo Group |
|---|---|---|---|
| Nonstimulants/Others | | | |
| Atomoxetine | 6 | 425 | 339 |
| Bupropion | 1 | 52 | 20 |
| Modafinil | 3 | 410 | 209 |
| Immediate-release Stimulants | | | |
| MAS | 3 | 97 | 95 |
| d-Amp | 1 | 35 | 35 |
| MPH | 10 | 499 | 396 |
| Pemoline | 1 | 21 | 21 |
| d-MPH | 1 | 43 | 41 |
| Long-acting Stimulants | | | |
| MAS XR | 2 | 147 | 227 |
| d-Amp ER | 1 | 35 | 35 |
| MPH MR | 2 | 275 | 198 |
| MPH OROS | 3 | 271 | 265 |
| MTS | 2 | 96 | 85 |
| MPH-LA | 1 | 63 | 71 |

Meta-analysis regression found a significant effect of drug group (F(1, 27) = 15.3, P < .0001). The effect sizes for nonstimulant medications were significantly less than those for immediate-release stimulants (F(1, 27) = 28.4, P < .0001) or long-acting stimulants (F(1, 27) = 14.1, P = .0008). The 2 classes of stimulant medication did not differ significantly from one another (F(1, 27) = 2.4, P = .14).

Table 3 presents clinical and demographic variables that might confound our meta-analytic comparisons of medication types. We found no significant differences among studies in the distribution of DSM-IV subtypes (for studies using DSM-IV criteria), gender, or age. Table 4 describes the study design features for the 3 medication classes. The 3 classes differed significantly in type of score (change score vs outcome score) and study design (crossover vs parallel) but not in the other design features: the types of raters used, the score categories used to assess efficacy, the use of fixed-dose vs titration-to-best-dose designs, the exclusion of subjects with a history of nonresponse, or the use of a placebo lead-in.

Table 3.

Clinical and Demographic Features of Study Samples by Type of Medication

| Variable | Nonstimulant/Other (n = 10) | Short-acting Stimulant (n = 11) | Long-acting Stimulant (n = 8) | F Statistic |
|---|---|---|---|---|
| ADHD subtype | | | | |
| Inattentive (%) | 25 | 26 | 21 | F(2,14) = .1, P = .12 |
| Hyperactive-impulsive (%) | 11 | 1 | 4 | F(2,14) = .9, P = .7 |
| Combined (%) | 63 | 73 | 75 | F(2,15) = .5, P = .7 |
| Gender (% male) | 68 | 85 | 77 | F(2,25) = 2.3, P = .13 |
| Age in years | 10 | 10 | 10 | F(2,26) = .3, P = .77 |

Table 4.

Design Features of Studies by Type of Medication (Percentage of Each Medication Class)

| Design Feature | Nonstimulant/Other | Short-acting Stimulant | Long-acting Stimulant | P value* |
|---|---|---|---|---|
| Change or Outcome Score | | | | |
| Change | 70 | 9 | 37.5 | .02 |
| Outcome | 30 | 91 | 62.5 | |
| Type of Rater | | | | |
| Teacher | 30 | 46 | 50 | .25 |
| Parent | 20 | 46 | 12.5 | |
| Physician | 50 | 9 | 37.5 | |
| Score Category | | | | |
| ADHD Total | 60 | 73 | 75 | .77 |
| Hyperactive-impulsive | 30 | 27 | 12.5 | |
| Inattentive | 10 | 0 | 12.5 | |
| Study Design | | | | |
| Crossover | 0 | 55 | 12.5 | .009 |
| Parallel | 100 | 45 | 87.5 | |
| Placebo Lead-in | | | | |
| No | 90 | 91 | 75 | .65 |
| Yes | 10 | 9 | 25 | |
| Nonresponders Excluded | | | | |
| No | 100 | 82 | 62.5 | .12 |
| Yes | 0 | 18 | 37.5 | |
| Best vs Fixed Dose | | | | |
| Best | 75 | 100 | 71 | .26 |
| Fixed | 25 | 0 | 29 | |

We used meta-analytic regression to determine whether any of the design features in Table 3 and Table 4 predicted effect size. The only significant findings were for study design and type of score: effect sizes were greater for crossover than for parallel designs (F(1, 27) = 9.4, P = .02) and for outcome scores than for change scores (F(1, 27) = 4.7, P = .04). No other effects were significant (all P values > .05). Because study design and type of score were significantly associated with both effect size and drug class, these features could explain the observed differences among drug classes. Cross-classifying these 2 features created 3 strata: outcome scores from crossover studies, outcome scores from parallel design studies, and change scores from parallel design studies; none of the studies examined presented change scores from a crossover design. The greater efficacy of immediate-release and long-acting stimulants compared with nonstimulants remained significant after correcting for these potentially confounding features (F(1,27) = 11.3, P = .05). There was also a significant interaction between drug class and confound stratum: differences among drug classes were significant for outcome scores from parallel studies (F(2,10) = 59.5, P < .0001) but not for outcome scores from crossover studies (F(1,5) = 5.7, P = .06) or change scores from parallel studies (F(2,10) = 1.8, P = .2).
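The stratified comparison can be sketched as follows: each effect size is assigned to a stratum defined by (study design, score type), and a sample-size-weighted mean SMD is computed separately for each stratum and drug class, so that classes are compared only within like designs. The rows below are invented, and simple weighted means stand in for the meta-analytic regression the authors actually fitted.

```python
from collections import defaultdict

def stratified_means(rows):
    """Weighted mean SMD per (stratum, drug class); stratum = (design, score type)."""
    totals = defaultdict(lambda: [0.0, 0.0])  # key -> [sum of n*smd, sum of n]
    for r in rows:
        key = ((r["design"], r["score"]), r["drug_class"])
        totals[key][0] += r["n"] * r["smd"]
        totals[key][1] += r["n"]
    return {key: s / n for key, (s, n) in totals.items()}

rows = [  # invented effect sizes, for illustration only
    {"design": "crossover", "score": "outcome", "drug_class": "short-acting", "n": 40, "smd": 1.0},
    {"design": "parallel", "score": "outcome", "drug_class": "long-acting", "n": 90, "smd": 0.9},
    {"design": "parallel", "score": "outcome", "drug_class": "long-acting", "n": 60, "smd": 0.7},
    {"design": "parallel", "score": "outcome", "drug_class": "nonstimulant", "n": 80, "smd": 0.6},
    {"design": "parallel", "score": "change", "drug_class": "nonstimulant", "n": 120, "smd": 0.6},
    {"design": "parallel", "score": "change", "drug_class": "long-acting", "n": 100, "smd": 0.8},
]
means = stratified_means(rows)
strata = {key[0] for key in means}
print(sorted(strata))  # 3 strata; no (crossover, change) cell exists
```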

We conducted separate random-effects meta-analyses for each of the 3 medication classes to assess heterogeneity within class and publication bias. For the long-acting stimulants, we found significant heterogeneity (Q = 52.3, df = 26, P = .02). There was no significant heterogeneity for immediate-release stimulants (Q = 34.4, df = 38, P = .7) or nonstimulants (Q = 30.4, df = 47, P = .9). We found no evidence of publication bias for nonstimulants (t[47] = 1.7, P = .10), short-acting stimulants (t[38] = 1.6, P = .12), or long-acting stimulants (t[26] = 1.1, P = .3). There was also no evidence of publication bias in a pooled analysis of all medication types (t[113] = 1.0, P = .34).
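Egger's test can be sketched as an ordinary least-squares regression of each standardized effect (SMD divided by its standard error) on its precision (1 divided by the standard error); an intercept far from zero indicates the kind of funnel-plot asymmetry that publication bias produces. This minimal Python version returns the intercept, its t statistic, and k - 2 degrees of freedom. The Python standard library has no t distribution, so conversion to a P value is left out, the clustering adjustment described in the Methods is not reproduced, and the test data are invented.

```python
import math

def egger_test(effects, ses):
    """Egger's regression test for funnel-plot asymmetry.

    Regresses effect/SE on 1/SE by OLS and returns
    (intercept, t statistic for the intercept, degrees of freedom).
    """
    k = len(effects)
    x = [1 / s for s in ses]                         # precision
    y = [d / s for d, s in zip(effects, ses)]        # standardized effect
    xbar, ybar = sum(x) / k, sum(y) / k
    sxx = sum((xi - xbar) ** 2 for xi in x)
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    intercept = ybar - slope * xbar
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = rss / (k - 2)                               # residual variance
    se_intercept = math.sqrt(s2 * (1 / k + xbar**2 / sxx))
    return intercept, intercept / se_intercept, k - 2
```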

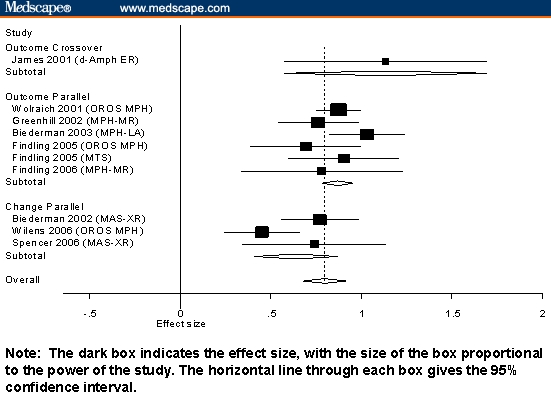

Figures 1 through 3 present our results stratified by the 3 significant effects reported above: medication class, study design, and type of score. Figure 1 shows the results for long-acting stimulants. Each line of the graph gives the results pooled across all outcome measures used for each study.

Figure 1.

Standardized mean differences and 95% confidence intervals (CIs) for long-acting stimulants stratified by design and type of score.

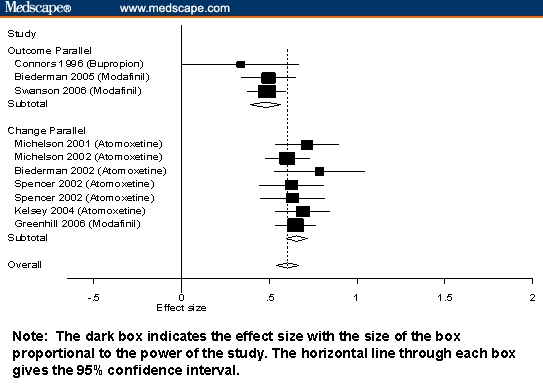

Figure 3.

Standardized mean differences and 95% CIs for nonstimulants stratified by design and type of score.

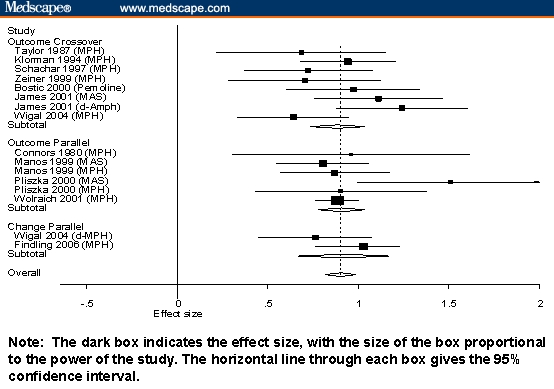

Figure 2 shows the results for immediate-release stimulants, and Figure 3 shows results for nonstimulants.

Figure 2.

Standardized mean differences and 95% CIs for short-acting stimulants stratified by design and type of score.

Discussion

Our meta-analysis of ADHD efficacy outcomes found significant differences between stimulant and nonstimulant medications, even after correcting for study design features that might have confounded the results. We found no evidence of publication bias for any of the 3 classes of medication. Our analyses indicate that effect sizes for stimulants are significantly greater than those for other medications, but the presence of confounds and their interaction with medication class suggest that, in the absence of confirmatory head-to-head studies, caution is warranted when comparing the effects of different medications across studies. Although head-to-head trials are needed to make definitive statements about efficacy differences, our results comparing stimulants and nonstimulants are compatible with the efficacy differences between atomoxetine and mixed amphetamine salts reported by Wigal and coworkers[31,32] and with the conclusions of a prior review that was limited to a smaller subset of studies and excluded short-acting stimulants.[26]

Table 1 shows little uniformity in the study design parameters used to assess medication efficacy. Although this does not affect the interpretation of individual studies, it makes it difficult to compare the efficacy of different medications in the absence of direct comparisons within the same study. The problem is compounded by the fact that effect sizes, which quantify treatment efficacy, differ according to study design variables. Comparing medication effect sizes across studies without accounting for these influences will lead to spurious conclusions. We found that 2 key design variables, type of score (change score or outcome score) and study design (crossover vs parallel), differed significantly among the medication groups. When we adjusted for these differences using meta-analytic regression, however, we continued to find significant differences among classes of medications, although these differences could not be established within each of the 3 methodologic strata that potentially confounded the results.

The robust effects of all ADHD medications can be seen in Figures 1 through 3, which show that most effect size estimates from all studies were statistically significant. Also notable is that, for nonstimulants and short-acting stimulants, variability within each medication class was not statistically significant, which suggests that the studies within each of these classes measure a common effect size. For long-acting stimulants, there was modest but significant evidence of interstudy variability. Inspection of Figure 1 suggests that this is due to the very high effect sizes reported by James and colleagues[33] and the low effect size reported by Wilens and associates.[34]

The interpretive difficulties created by confounding design variables are clearly seen in Figures 1 through 3. Notably, outcome scores from crossover designs were used only for stimulant medications, which is sensible given that crossover designs are not suitable for drugs with longer half-lives. The 95% confidence intervals in Figures 1 through 3 show that, although there are systematic differences among classes of medication, the variability within each study is high, which makes it difficult to compare individual pairs of studies or pairs of drugs. The figures also show that, within the strata defined by confounding variables, there are no apparent differences among the stimulants or among the nonstimulants.

This work must be interpreted in the context of several limitations. Because we relied on data presented by authors, we could not assess the effects of all potential confounds; we were limited by what other investigators chose to present. For example, we could not compute effect sizes at specific time points, because such data were rarely provided. Future work should review studies of time course, such as those using the analog school laboratory paradigm. Although that work does not assess outcome in the patient's environment, it would provide useful data about peak and trough efficacy. Similarly, we did not assess differences in duration of action between medication classes, because such data are rarely presented. All meta-analyses are limited by the quality of the studies analyzed. For that reason, we limited our review to double-blind, placebo-controlled studies that diagnosed ADHD using DSM-III-R or DSM-IV criteria. Nevertheless, although our analyses controlled for several study design features, it is possible that systematic methodologic differences between drugs or classes of drugs might have led to spurious results. For example, Figure 1 shows that the effect size reported in Wilens and colleagues'[34] study of MPH OROS was much lower than that seen for other MPH OROS studies and other long-acting stimulants. Wilens and colleagues note that because patients were not dosed to optimal outcome, their results might be lower than those expected with optimal dosing. Similarly, Zeiner and coworkers'[35] study of methylphenidate reported a low effect size using doses of 0.5 mg/kg, which is relatively low by current standards. Idiosyncratic effects of this sort, confined to one or a few studies, cannot be adjusted for in a meta-analysis.

Despite these limitations, our findings highlight the remarkable variability in methods among ADHD treatment studies. Although this variability does not argue against the validity of individual studies, it underscores the difficulty of interpreting differential medication efficacy when a direct comparative trial is not available. Yet despite this variability, we found substantial and significant differences in efficacy between stimulant and nonstimulant medications. Although efficacy effect sizes should not be the sole guide for clinicians choosing an ADHD medication, they do provide useful information for clinicians to consider when planning treatment regimens for patients with ADHD.

Funding Information

This work was supported, in part, by a grant from Shire Pharmaceuticals Inc. to S.V. Faraone.

Footnotes

Readers are encouraged to respond to George Lundberg, MD, Editor of MedGenMed, for the editor's eye only or for possible publication via email: glundberg@medscape.net

Contributor Information

Stephen V Faraone, Child & Adolescent Psychiatry Research, Department of Psychiatry, SUNY Upstate Medical University, Syracuse, New York; Email: faraones@upstate.edu.

Joseph Biederman, Pediatric Psychopharmacology, Massachusetts General Hospital, Boston, Massachusetts; Professor of Psychiatry, Harvard Medical School, Boston, Massachusetts.

Thomas J Spencer, Pediatric Psychopharmacology, Massachusetts General Hospital, Boston, Massachusetts; Associate Professor of Psychiatry, Harvard Medical School, Boston, Massachusetts.

Megan Aleardi, Pediatric Psychopharmacology, Massachusetts General Hospital, Boston, Massachusetts.

References

- 1.Faraone SV, Sergeant J, Gillberg C, et al. The worldwide prevalence of ADHD: Is it an American condition? World Psychiatry. 2003;2:104–113.

- 2.Volkow ND, Fowler JS, Wang G, et al. Mechanism of action of methylphenidate: insights from PET imaging studies. J Atten Disord. 2002;6:S31–S44. doi: 10.1177/070674370200601s05.

- 3.Volkow ND, Wang G, Fowler JS, et al. Therapeutic doses of oral methylphenidate significantly increase extracellular dopamine in the human brain. J Neurosci. 2001;21:RC121. doi: 10.1523/JNEUROSCI.21-02-j0001.2001.

- 4.Greenhill LL, Pliszka S, Dulcan MK, et al. Summary of the practice parameter for the use of stimulant medications in the treatment of children, adolescents, and adults. J Am Acad Child Adolesc Psychiatry. 2001;40:1352–1355. doi: 10.1097/00004583-200111000-00020.

- 5.Spencer T, Biederman J, Wilens T. Pharmacotherapy of attention deficit hyperactivity disorder. Child Adolesc Psychiatr Clin North Am. 2000;9:77–97.

- 6.Greenhill LL, Findling RL, Swanson JM. A double-blind, placebo-controlled study of modified-release methylphenidate in children with attention-deficit/hyperactivity disorder. Pediatrics. 2002;109:E39. doi: 10.1542/peds.109.3.e39.

- 7.Wolraich M, Greenhill LL, Pelham W, et al. Randomized controlled trial of OROS methylphenidate qd in children with attention deficit/hyperactivity disorder. Pediatrics. 2001;108:883–892. doi: 10.1542/peds.108.4.883.

- 8.Biederman J, Lopez FA, Boellner SW, et al. A randomized, double-blind, placebo-controlled, parallel-group study of SLI381 (Adderall XR) in children with attention deficit hyperactivity disorder. Pediatrics. 2002;110:258–266. doi: 10.1542/peds.110.2.258.

- 9.Spencer T, Biederman J, Coffey B, et al. A double-blind comparison of desipramine and placebo in children and adolescents with chronic tic disorder and comorbid attention-deficit/hyperactivity disorder. Arch Gen Psychiatry. 2002;59:649–656. doi: 10.1001/archpsyc.59.7.649.

- 10.Wilens T, Biederman J, Baldessarini R, et al. Cardiovascular effects of therapeutic doses of tricyclic antidepressants in children and adolescents. J Am Acad Child Adolesc Psychiatry. 1996;35:1491–1501. doi: 10.1097/00004583-199611000-00018.

- 11.Biederman J, Baldessarini RJ, Wright V, et al. A double-blind placebo controlled study of desipramine in the treatment of ADD: I. Efficacy. J Am Acad Child Adolesc Psychiatry. 1989;28:777–784. doi: 10.1097/00004583-198909000-00022.

- 12.Conners K, Casat C, Gualtieri T, et al. Bupropion hydrochloride in attention deficit disorder with hyperactivity. J Am Acad Child Adolesc Psychiatry. 1996;35:1314–1321. doi: 10.1097/00004583-199610000-00018.

- 13.Casat CD, Pleasants DZ, Schroeder DH, et al. Bupropion in children with attention deficit disorder. Psychopharmacol Bull. 1989;25:198–201.

- 14.Casat CD, Pleasants DZ, Van Wyck Fleet J. A double-blind trial of bupropion in children with attention deficit disorder. Psychopharmacol Bull. 1987;23:120–122.

- 15.Taylor FB, Russo J. Efficacy of modafinil compared to dextroamphetamine for the treatment of attention deficit hyperactivity disorder in adults. J Child Adolesc Psychopharmacol. 2000;10:311–320. doi: 10.1089/cap.2000.10.311.

- 16.Rugino TA, Copley TC. Effects of modafinil in children with attention-deficit/hyperactivity disorder: an open-label study. J Am Acad Child Adolesc Psychiatry. 2001;40:230–235. doi: 10.1097/00004583-200102000-00018.

- 17.Maoi EM. NIMH Conference on Alternative Pharmacology of ADHD. Washington, DC: 1996. Treatment of adult ADHD.

- 18.Shekim WO, Davis LG, Bylund DB, et al. Platelet MAO in children with attention deficit disorder and hyperactivity: a pilot study. Am J Psychiatry. 1982;139:936–938. doi: 10.1176/ajp.139.7.936.

- 19.Spencer T, Biederman J. Non-stimulant treatment for attention-deficit/hyperactivity disorder. J Atten Disord. 2002;6:S109–S119. doi: 10.1177/070674370200601s13.

- 20.Michelson D, Faries D, Wernicke J, et al. Atomoxetine in the treatment of children and adolescents with attention-deficit/hyperactivity disorder: a randomized, placebo-controlled, dose-response study. Pediatrics. 2001;108:E83. doi: 10.1542/peds.108.5.e83.

- 21.Michelson D, Adler L, Spencer T, et al. Atomoxetine in adults with ADHD: two randomized, placebo-controlled studies. Biol Psychiatry. 2003;53:112–120. doi: 10.1016/s0006-3223(02)01671-2.

- 22.Faraone SV, Spencer T, Aleardi M, et al. Meta-analysis of the efficacy of methylphenidate for treating adult attention deficit hyperactivity disorder. J Clin Psychopharmacol. 2004;24:24–29. doi: 10.1097/01.jcp.0000108984.11879.95.

- 23.Faraone SV, Biederman J, Roe CM. Comparative efficacy of Adderall and methylphenidate in attention-deficit/hyperactivity disorder: a meta-analysis. J Clin Psychopharmacol. 2002;22:468–473. doi: 10.1097/00004714-200210000-00005. [DOI] [PubMed] [Google Scholar]

- 24.Faraone S, Biederman J. Efficacy of Adderall, for attention-deficit/hyperactivity disorder: a meta-analysis. J Atten Disord. 2002;6:69–75. doi: 10.1177/108705470200600203. [DOI] [PubMed] [Google Scholar]

- 25.Schachter HM, Pham B, King J, et al. How efficacious and safe is short-acting methylphenidate for the treatment of attention-deficit disorder in children and adolescents? A meta-analysis. CMAJ. 2001;165:1475–1488. [PMC free article] [PubMed] [Google Scholar]

- 26.Banaschewski T, Coghill D, Santosh P, et al. Long-acting medications for the hyperkinetic disorders: a systematic review and European Treatment Guideline. Eur Child Adolesc Psychiatry. 2006 May 5 [Epub ahead of print]. doi: 10.1007/s00787-006-0549-0.

- 27.DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7:177–188. doi: 10.1016/0197-2456(86)90046-2.

- 28.Egger M, Davey Smith G, Schneider M, et al. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997;315:629–634. doi: 10.1136/bmj.315.7109.629.

- 29.Huber PJ. The behavior of maximum likelihood estimates under non-standard conditions. Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability. 1967;1:221–233.

- 30.Stata Corporation. Stata User's Guide: Release 7.0. College Station, Texas: Stata Corporation; 2001.

- 31.Wigal SB, Wigal TL, McGough JJ, et al. A laboratory school comparison of mixed amphetamine salts extended release (Adderall XR) and atomoxetine (Strattera) in school-aged children with attention deficit/hyperactivity disorder. J Atten Disord. 2005;9:275–289. doi: 10.1177/1087054705281121.

- 32.Faraone SV, Wigal S, Hodgkins P. Forecasting three-month outcomes in a laboratory school comparison of mixed amphetamine salts extended release (Adderall XR®) and atomoxetine (Strattera®) in school-aged children with attention-deficit/hyperactivity disorder. J Atten Disord. 2006. In press. doi: 10.1177/1087054706292196.

- 33.James RS, Sharp WS, Bastain TM, et al. Double-blind, placebo-controlled study of single-dose amphetamine formulations in ADHD. J Am Acad Child Adolesc Psychiatry. 2001;40:1268–1276. doi: 10.1097/00004583-200111000-00006.

- 34.Wilens T, McBurnett K, Bukstein O, et al. Multisite, controlled trial of OROS® methylphenidate (Concerta®) in the treatment of adolescents with attention-deficit/hyperactivity disorder. Arch Pediatr Adolesc Med. 2006;160:82–90. doi: 10.1001/archpedi.160.1.82.

- 35.Zeiner P, Bryhn G, Bjercke C, et al. Response to methylphenidate in boys with attention-deficit hyperactivity disorder. Acta Paediatr. 1999;88:298–303. doi: 10.1080/08035259950170060.

- 36.Conners CK, Taylor E. Pemoline, methylphenidate, and placebo in children with minimal brain dysfunction. Arch Gen Psychiatry. 1980;37:922–930. doi: 10.1001/archpsyc.1980.01780210080009.

- 37.Taylor E, Schachar R, Thorley G, et al. Which boys respond to stimulant medication? A controlled trial of methylphenidate in boys with disruptive behaviour. Psychol Med. 1987;17:121–143. doi: 10.1017/s0033291700013039.

- 38.Klorman R, Brumaghim J, Fitzpatrick P, et al. Clinical and cognitive effects of methylphenidate on children with attention deficit disorder as a function of aggression/oppositionality and age. J Abnorm Psychol. 1994;103:206–221. doi: 10.1037//0021-843x.103.2.206.

- 39.Schachar R, Tannock R, Cunningham C, et al. Behavioral, situational, and temporal effects of treatment of ADHD with methylphenidate. J Am Acad Child Adolesc Psychiatry. 1997;36:754–763. doi: 10.1097/00004583-199706000-00011.

- 40.Manos MJ, Short EJ, Findling RL. Differential effectiveness of methylphenidate and Adderall in school-age youths with attention-deficit/hyperactivity disorder. J Am Acad Child Adolesc Psychiatry. 1999;38:813–819. doi: 10.1097/00004583-199907000-00010.

- 41.Bostic JQ, Biederman J, Spencer TJ, et al. Pemoline treatment of adolescents with attention deficit hyperactivity disorder: a short-term controlled trial. J Child Adolesc Psychopharmacol. 2000;10:205–216. doi: 10.1089/10445460050167313.

- 42.Pliszka SR, Browne RG, Olvera RL, et al. A double-blind, placebo-controlled study of Adderall and methylphenidate in the treatment of attention-deficit/hyperactivity disorder. J Am Acad Child Adolesc Psychiatry. 2000;39:619–626. doi: 10.1097/00004583-200005000-00016.

- 43.Michelson D, Allen AJ, Busner J, et al. Once-daily atomoxetine treatment for children and adolescents with attention deficit hyperactivity disorder: a randomized placebo-controlled study. Am J Psychiatry. 2002;159:1896–1901. doi: 10.1176/appi.ajp.159.11.1896.

- 44.Biederman J, Heiligenstein J, Faries D, et al. Efficacy of atomoxetine versus placebo in school-age girls with attention-deficit/hyperactivity disorder. Pediatrics. 2002;110:E75. doi: 10.1542/peds.110.6.e75.

- 45.Spencer T, Heiligenstein J, Biederman J, et al. Results from 2 proof-of-concept, placebo-controlled studies of atomoxetine in children with attention-deficit/hyperactivity disorder. J Clin Psychiatry. 2002;63:1140–1147. doi: 10.4088/jcp.v63n1209.

- 46.Biederman J, Quinn D, Weiss M, et al. Efficacy and safety of Ritalin LA, a new, once-daily, extended-release dosage form of methylphenidate hydrochloride, in children with ADHD. Pediatr Drugs. 2003;5:833–841. doi: 10.2165/00148581-200305120-00006.

- 47.Wigal S, Swanson JM, Feifel D, et al. A double-blind, placebo-controlled trial of dexmethylphenidate hydrochloride and D,L-threo-methylphenidate hydrochloride in children with attention-deficit/hyperactivity disorder. J Am Acad Child Adolesc Psychiatry. 2004;43:1406–1414. doi: 10.1097/01.chi.0000138351.98604.92.

- 48.Swanson JM, Greenhill LL, Lopez FA, et al. Modafinil film-coated tablets in children and adolescents with attention-deficit/hyperactivity disorder: results of a randomized, double-blind, placebo-controlled, fixed-dose study followed by abrupt discontinuation. J Clin Psychiatry. 2006;67:137–147. doi: 10.4088/jcp.v67n0120.

- 49.Biederman J, Swanson J, Wigal S, et al. Efficacy and safety of modafinil film-coated tablets in children and adolescents with attention-deficit/hyperactivity disorder: results of a randomized, double-blind, placebo-controlled, flexible-dose study. Pediatrics. 2005;116:e777–e784. doi: 10.1542/peds.2005-0617.

- 50.Findling RL, McNamara NK, Youngstrom EA, et al. Double-blind 18-month trial of lithium versus divalproex maintenance treatment in pediatric bipolar disorder. J Am Acad Child Adolesc Psychiatry. 2005;44:409–417. doi: 10.1097/01.chi.0000155981.83865.ea.

- 51.Pelham WE, Burrows-Maclean L, Gnagy EM, et al. Transdermal methylphenidate, behavioral, and combined treatment for children with ADHD. Exp Clin Psychopharmacol. 2005;13:111–126. doi: 10.1037/1064-1297.13.2.111.

- 52.Greenhill LL, Biederman J, Boellner SW, et al. A randomized, double-blind, placebo-controlled study of modafinil film-coated tablets in children and adolescents with attention-deficit/hyperactivity disorder. J Am Acad Child Adolesc Psychiatry. 2006;45:503–511. doi: 10.1097/01.chi.0000205709.63571.c9.

- 53.Kelsey DK, Sumner CR, Casat CD, et al. Once-daily atomoxetine treatment for children with attention-deficit/hyperactivity disorder, including an assessment of evening and morning behavior: a double-blind, placebo-controlled trial. Pediatrics. 2004;114:e1–e8. doi: 10.1542/peds.114.1.e1.

- 54.Spencer T, Wilens T, Biederman J, et al. Efficacy and safety of mixed amphetamine salts extended release Adderall XR in the management of attention-deficit hyperactivity disorder in adolescent patients: a 4-week, randomized, double-blind, placebo-controlled, parallel-group study. Clin Ther. 2006;28:266–279. doi: 10.1016/j.clinthera.2006.02.011.

- 55.Findling RL, Quinn D, Hatch SJ, et al. Comparison of the clinical efficacy of twice-daily Ritalin® and once-daily Equasym™ XL with placebo in children with attention deficit/hyperactivity disorder. Eur Child Adolesc Psychiatry. 2006 Jun 21 [Epub ahead of print]. doi: 10.1007/s00787-006-0565-0.