Predicting Outcome in Ischemic Stroke: External Validation of Predictive Risk Models

Author manuscript; available in PMC: 2009 Sep 22.

Abstract

Background

Six multivariable models predicting 3-month outcome of acute ischemic stroke have been developed and internally validated previously. The purpose of this study was to externally validate the previous models in an independent data set.

Summary of Report

We predicted outcomes for 299 patients with ischemic stroke who received placebo in the National Institute of Neurological Disorders and Stroke rt-PA trial. The model equations used 6 acute clinical variables and head CT infarct volume at 1 week as independent variables and 3-month National Institutes of Health Stroke Scale, Barthel Index, and Glasgow Outcome Scale as dependent variables. Previously developed model equations were used to forecast excellent and devastating outcome for subjects in the placebo group of the tissue plasminogen activator data set. Area under the receiver operating characteristic curve was used to measure discrimination, and calibration charts were used to measure calibration. The validation data set patients were more severely ill (National Institutes of Health Stroke Scale and infarct volume) than the model development subjects. Areas under the receiver operating characteristic curve demonstrated remarkably little degradation in the validation data set and ranged from 0.75 to 0.89. Calibration curves showed fair to good calibration.

Conclusions

Our models have demonstrated excellent discrimination and acceptable calibration in an external data set. Development and validation of improved models using variables that are all available acutely are necessary.

Keywords: cerebral ischemia, models, statistical, prognosis, stroke outcome

A multivariable model that could predict outcome after stroke would be useful in clinical trials to assess the balance of treatment groups and to predict expected outcomes of patients who are lost to follow-up. We developed and internally validated a series of predictive risk models for 229 ischemic stroke patients from the Randomized Trial of Tirilazad Mesylate in Patients with Acute Stroke (RANTTAS)1. However, the models have not been validated in an external data set. The purpose of this study was to assess the validity of those predictive models in an independent data set.

Methods

Study Population

Two hundred ninety-nine patients from the placebo group of the National Institute of Neurological Disorders and Stroke (NINDS) rt-PA trial were used for the validation analysis.2 The NINDS rt-PA trial population has been described in detail previously.2 Briefly, this was an ischemic stroke population treated with intravenous tissue plasminogen activator (tPA) or placebo within 3 hours from symptom onset. Only the placebo group was used for this analysis because intravenous tPA is known to improve clinical outcome, and the predictive model being validated was designed to predict outcome without an intervention.

Three hundred twelve patients were treated with placebo in the NINDS rt-PA trial. Thirteen patients were excluded from our analysis for missing variables: 6 were missing 7- to 10-day CT scan infarct volume, 5 were missing stroke history information, and 2 were missing diabetes history information. The remaining 299 were used for this analysis.

Independent Variables

Baseline clinical information including age, National Institutes of Health Stroke Scale (NIHSS) score,3 history of previous stroke, history of diabetes mellitus, and history of prestroke disability were collected acutely. Infarct volume and stroke subtype were collected at 7 to 10 days after stroke onset.

Outcome Variables

The NIHSS, Barthel Index (BI),4 and Glasgow Outcome Scale (GOS)5 were used as outcome measures 3 months after stroke symptom onset. Each was dichotomized into excellent outcome (NIHSS ≤1, BI ≥95, GOS = 1) or devastating outcome (NIHSS ≥20 or death, BI <60 or death, GOS >2) as previously defined.1

Statistical Analysis

The previously defined models were applied to the study population using the previously defined weights. Model discrimination was assessed using area under the receiver operating characteristic (ROC) curve, which was computed by a nonparametric method.6 An area under the ROC curve of 0.5 indicates no ability to discriminate and an area of 1.0 indicates perfect discrimination. We prespecified an acceptable area under the ROC curve as ≥0.8. Calibration was assessed using calibration curves.7 The 45° line represents ideal calibration; the closer a model's calibration curve lies to that line, the better the calibration. Hosmer-Lemeshow tests were performed to assess whether the models differed significantly from perfect calibration.8
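As an illustration of the two steps described above, the following minimal sketch forecasts a probability from frozen model weights and then computes the nonparametric area under the ROC curve as the proportion of event/non-event pairs that the model ranks correctly (ties counted as one half). It assumes the models are logistic; the function names and any coefficients passed to them are hypothetical and are not the published model equations.

```python
import math
from typing import Sequence


def forecast_probability(intercept: float,
                         weights: Sequence[float],
                         covariates: Sequence[float]) -> float:
    """Apply fixed (previously estimated) logistic weights to one patient.

    The weights are not re-estimated on the validation data; they are
    simply plugged in. All values are hypothetical placeholders.
    """
    linear = intercept + sum(w * x for w, x in zip(weights, covariates))
    return 1.0 / (1.0 + math.exp(-linear))


def auc_nonparametric(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a randomly chosen patient with the outcome
    has a higher predicted score than a randomly chosen patient without it.
    """
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one event and one non-event")
    concordant = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                concordant += 1.0   # correctly ranked pair
            elif p == n:
                concordant += 0.5   # tie counts as half
    return concordant / (len(pos) * len(neg))
```

A value of 0.5 from `auc_nonparametric` corresponds to no discrimination and 1.0 to perfect discrimination, matching the interpretation given in the text.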

Results

The clinical and imaging characteristics of the validation population are compared with those of the original population from the RANTTAS trial9 in Table 1. There were more blacks and fewer whites in the validation population. The validation population had substantially more severe strokes, as demonstrated by higher NIHSS scores and greater infarct volumes at 1 week, as well as worse outcomes when compared with the original data set.

TABLE 1.

Clinical and Imaging Characteristics of the 2 Populations

| Characteristics | Original (RANTTAS Data), n = 229 | Validation (NINDS Data), n = 299 |
|---|---|---|
| Age (median, IQ range) | 70 (61–77) | 67 (59–74) |
| Sex (M, %) | 56 | 59 |
| Race, % | | |
| White | 83 | 63 |
| Black | 13 | 29 |
| NIHSS (median, IQ range) | 10 (5–16) | 15 (10–20) |
| CT volume, cm³ (median, IQ range) | 9 (0–65) | 28 (6–104) |
| Excellent outcomes, % | | |
| NIHSS | 43 | 20 |
| BI | 56 | 39 |
| GOS | 48 | 31 |
| Devastating outcomes, % | | |
| NIHSS | 15 | 24 |
| BI | 29 | 42 |
| GOS | 32 | 46 |

The models’ ability to discriminate outcome, in both the original and validation data sets, is demonstrated in Table 2. In the original data set, 5 of the 6 models had excellent discrimination, with an area under the ROC curve above 0.8. In the validation data set, all 6 models showed very little decline in area under the ROC curve, and 5 of the 6 again demonstrated excellent discrimination.

TABLE 2.

Model Discrimination: Area Under the Receiver Operating Characteristic Curve

| Outcome Measure | Excellent Outcome*, Original | Excellent Outcome*, Validation | Devastating Outcome†, Original | Devastating Outcome†, Validation |
|---|---|---|---|---|
| NIHSS | 0.87 | 0.85 | 0.79 | 0.75 |
| BI | 0.84 | 0.83 | 0.88 | 0.84 |
| GOS | 0.84 | 0.81 | 0.87 | 0.89 |

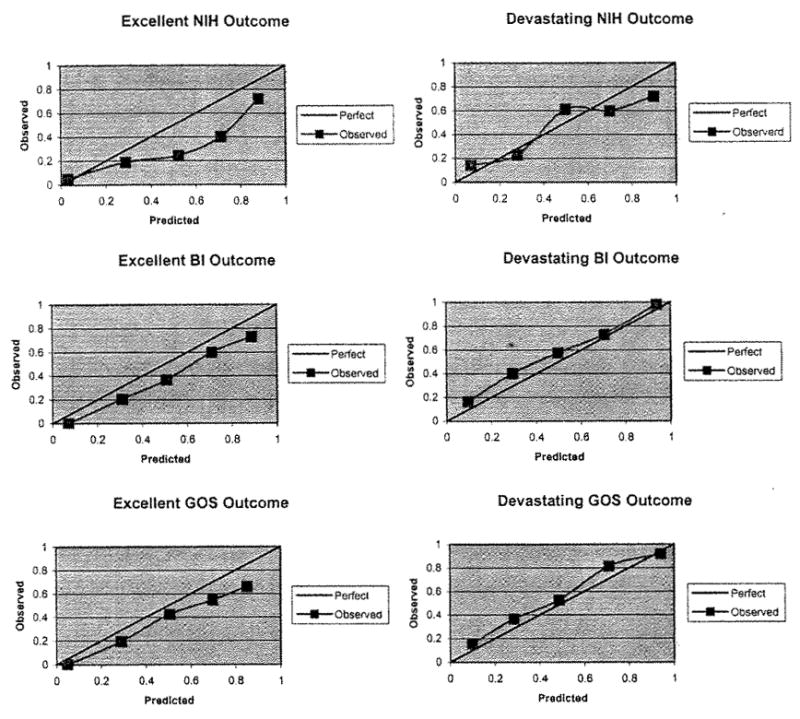

Model calibration is demonstrated in the Figure. Five of the 6 models have calibration curves that are very similar to the line of identity. The excellent outcome as measured by the NIHSS model calibrated less well and predicted a greater probability of excellent outcome than was observed in the validation data set for most of the range of predicted probabilities. For example, in the calibration curve “Excellent NIH Outcome,” at a model prediction of 70% probability of excellent outcome (fourth point on the curve), only about 40% of patients were actually observed to have excellent outcome by the NIHSS. Hosmer-Lemeshow tests of the calibration accuracy indicate that 5 of the 6 models were detectably (P<0.05) less than perfect, with all of the models tending to predict better outcomes than were observed.

Figure.

Calibration curves. The 6 calibration curves for the 6 models are shown. The solid 45° line reflects perfect calibration. Each model’s bias-corrected calibration is demonstrated by the observed line. In each case, the bias-corrected observed line deviates from the ideal line to some degree, but the observed line closely resembles the ideal line in 5 of the 6 models. NIH indicates National Institutes of Health Stroke Scale; BI, Barthel Index; GOS, Glasgow Outcome Scale.
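The comparison behind a calibration curve can be sketched as follows: patients are sorted by predicted probability and split into groups, and the mean prediction in each group is set against the observed fraction with the outcome. The second function computes a Hosmer-Lemeshow-type statistic from those groups. This is a minimal sketch, not the authors' analysis code; the grouping scheme, the number of groups, and the function names are assumptions, and the bias correction mentioned in the caption is not reproduced.

```python
from typing import List, Sequence, Tuple

Group = Tuple[float, float, int]  # (mean predicted, observed fraction, size)


def calibration_groups(y_true: Sequence[int],
                       y_prob: Sequence[float],
                       n_groups: int = 10) -> List[Group]:
    """Split patients into roughly equal groups ordered by predicted risk."""
    pairs = sorted(zip(y_prob, y_true))
    n = len(pairs)
    groups: List[Group] = []
    for g in range(n_groups):
        chunk = pairs[g * n // n_groups:(g + 1) * n // n_groups]
        if not chunk:
            continue  # fewer patients than groups
        mean_pred = sum(p for p, _ in chunk) / len(chunk)
        obs_frac = sum(y for _, y in chunk) / len(chunk)
        groups.append((mean_pred, obs_frac, len(chunk)))
    return groups


def hosmer_lemeshow_statistic(groups: Sequence[Group]) -> float:
    """Sum over groups of (observed - expected)^2 / (n * p * (1 - p))."""
    stat = 0.0
    for mean_pred, obs_frac, size in groups:
        expected = mean_pred * size
        observed = obs_frac * size
        variance = size * mean_pred * (1.0 - mean_pred)
        if variance > 0:
            stat += (observed - expected) ** 2 / variance
    return stat
```

Plotting the first two elements of each group against one another gives the points of a calibration curve; points below the 45° line, such as a group predicted at 70% in which only 40% are observed to have the outcome, indicate over-prediction. The statistic is referred to a chi-square distribution to obtain a P value; the degrees of freedom used in the paper are not stated, so none are assumed here.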

Discussion

These 6 models have now been validated in an external data set. Five of the models have excellent discrimination (ROC area ≥0.8). The model for devastating outcome defined by the NIHSS (ROC area = 0.75) likely discriminates less well due to the small number of devastating outcomes in the model development data set. Five of the 6 models appear to be reasonably well calibrated, as shown in the calibration curves. The Hosmer-Lemeshow test demonstrates that these are not perfectly calibrated but the calibration appears to be adequate.

The validation population clearly had more severe strokes as demonstrated by both the NIHSS and infarct volume. Although the model discrimination was affected little by this, the poorer calibrations in the 6 models compared with the original models may be related to this difference. The fact that the models had such good discrimination and adequate calibration in 5 of the 6 models, even in such a different population, supports the generalizability of these models.

A predictive tool that would allow an accurate prediction of individual outcome at 3 months could be very useful in clinical research. The great heterogeneity among stroke patients may contribute to difficulty identifying treatment effects in clinical trials.10,11 Heterogeneity in a randomized clinical trial is mathematically expected to result in an underestimate of the treatment effect.11 A predictive model that could adjust for heterogeneity would allow a less biased estimate of the treatment effect and demonstrate a larger treatment effect in the same sized sample.
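The attenuation described above can be illustrated with a small numeric sketch. Under a logistic model, suppose treatment multiplies the odds of a good outcome by the same factor for every patient, but patients differ in baseline prognosis. If that prognostic difference is ignored, the odds ratio computed from the pooled outcome rates is closer to 1 than the patient-level odds ratio. The baseline values and effect size below are hypothetical and chosen only for illustration.

```python
import math
from typing import Sequence


def expit(x: float) -> float:
    """Inverse logit: convert log-odds to probability."""
    return 1.0 / (1.0 + math.exp(-x))


def odds(p: float) -> float:
    return p / (1.0 - p)


def marginal_odds_ratio(baseline_logits: Sequence[float],
                        treatment_log_or: float) -> float:
    """Odds ratio seen when prognostic strata are pooled (covariate omitted).

    Each entry of baseline_logits is the log-odds of a good outcome for an
    equally sized stratum of untreated patients. Treatment adds the same
    log odds ratio within every stratum.
    """
    k = len(baseline_logits)
    p_control = sum(expit(b) for b in baseline_logits) / k
    p_treated = sum(expit(b + treatment_log_or) for b in baseline_logits) / k
    return odds(p_treated) / odds(p_control)


# Hypothetical example: a poor-prognosis and a good-prognosis stratum,
# each with a within-stratum odds ratio of e (log odds ratio = 1).
conditional_or = math.exp(1.0)
pooled_or = marginal_odds_ratio([-2.0, 2.0], 1.0)
print(f"within-stratum OR = {conditional_or:.2f}, pooled OR = {pooled_or:.2f}")
```

When all patients share the same baseline prognosis, the pooled and within-stratum odds ratios coincide; the gap opens only as heterogeneity grows, which is the motivation for adjusting with a prognostic model.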

The small number of subjects, and of the least frequent outcomes, in both data sets limits how well the models can predict outcome. These models are also limited by the fact that only 5 of the 7 variables used in the prediction were collected acutely. Infarct volume and stroke subtype were collected at 1 week. Although a prediction of 3-month outcome is still valuable to the clinician at 1 week, this limits the use of these models in acute stroke clinical research. The dichotomized outcomes, though identifying the extreme outcomes (excellent outcome suggesting full or nearly full recovery; devastating outcome suggesting nursing home level disability or death), are not designed to predict the clinically relevant recovery levels in between. These extreme outcomes using the NIHSS, BI, and GOS are most useful in the clinical research realm in that they allow more reliable comparisons of standardized outcomes. These models, therefore, function as the proof of concept that predictive models can be developed and then internally and externally validated for the prediction of 3-month outcome. Future models must now be developed using variables that are all available in the acute setting.

Acknowledgments

Dr Johnston is supported by the National Institutes of Health, National Institute of Neurologic Disorders and Stroke (grant No. K23NS02168-01).

The NINDS rt-PA Stroke Trial was funded by the National Institutes of Health, National Institute of Neurologic Disorders and Stroke through contracts to the participating sites. The RANTTAS study was supported, in part, by the National Institutes of Health, National Institute of Neurological Disorders and Stroke (grant No. R01-NS31554), and Pharmacia and Upjohn Company (Kalamazoo, Mich).

The authors gratefully acknowledge the contribution of the NINDS rt-PA Stroke Trial investigators and the RANTTAS investigators, without whose efforts this work would not have been possible.

Footnotes

This work was presented, in part, at the 27th International Stroke Conference of the American Heart Association, San Antonio, Texas, February 7, 2002.

References

- 1. Johnston KC, Connors AF, Wagner DP, Knaus WA, Wang X, Haley EC Jr. A predictive risk model for outcomes of ischemic stroke. Stroke. 2000;31:448–455. doi: 10.1161/01.str.31.2.448.

- 2. The National Institute of Neurological Disorders and Stroke rt-PA Stroke Study Group. Tissue plasminogen activator for acute ischemic stroke. N Engl J Med. 1995;333:1581–1587. doi: 10.1056/NEJM199512143332401.

- 3. Lyden P, Brott T, Tilley B, Welch KM, Mascha EJ, Levine S, Haley EC, Grotta J, Marler J. Improved reliability of the NIH Stroke Scale using video training: NINDS TPA Stroke Study Group. Stroke. 1994;25:2220–2226. doi: 10.1161/01.str.25.11.2220.

- 4. Mahoney FI, Barthel DW. Functional evaluation: the Barthel Index. Md State Med J. 1965;14:61–65.

- 5. Jennett B, Bond M. Assessment of outcome after severe brain damage. Lancet. 1975;1:480–484. doi: 10.1016/s0140-6736(75)92830-5.

- 6. Hanley JA, McNeil BJ. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 1982;143:29–36. doi: 10.1148/radiology.143.1.7063747.

- 7. Harrell FE, Lee KL, Mark DB. Tutorial in biostatistics: multivariable prognostic models: issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Stat Med. 1996;15:361–387. doi: 10.1002/(SICI)1097-0258(19960229)15:4<361::AID-SIM168>3.0.CO;2-4.

- 8. Lemeshow S, Teres D, Klar J, Avrunin JS, Gehlbach SH, Rapoport J. Mortality probability models (MPM II) based on an international cohort of intensive care unit patients. JAMA. 1993;270:2478–2486.

- 9. The RANTTAS Investigators. A randomized trial of tirilazad mesylate in patients with acute stroke (RANTTAS). Stroke. 1996;27:1453–1458. doi: 10.1161/01.str.27.9.1453.

- 10. DeGraba TJ, Hallenbeck JM, Pettigrew KD, Dutka AJ, Kelly BJ. Progression in acute stroke: value of the initial NIH Stroke Scale score on patient stratification in future trials. Stroke. 1999;30:1208–1212. doi: 10.1161/01.str.30.6.1208.

- 11. Gail MH, Wieand S, Piantadosi S. Biased estimates of treatment effect in randomized experiments with non-linear regressions and omitted covariates. Biometrika. 1984;71:431–444.