Selective responses in right inferior frontal and supramarginal gyri differentiate between observed movements of oneself vs. another

Author manuscript; available in PMC: 2012 Apr 1.

Abstract

The fact that inferior frontal (IFg) and supramarginal (SMg) gyri respond to both self-generated and observed actions has been interpreted as evidence for a perception-action linking mechanism (mirroring). Yet, the brain readily distinguishes between percepts generated by one’s own movements vs. those of another. Do IFg and/or SMg respond differentially to these visual stimuli even when carefully matched? We used BOLD fMRI to address this question as participants made repetitive bimanual hand movements while viewing either live visual feedback or perceptually similar, pre-recorded video of an actor. As expected, bilateral IFg and SMg increased activity during both conditions. However, right SMg and IFg responded differentially during live visual feedback vs. matched recordings. These mirror system areas may distinguish self-generated percepts by detecting subtle spatio-temporal differences between predicted and actual sensory feedback and/or visual and somatosensory signals.

Keywords: action observation, visual feedback, supramarginal gyrus, inferior frontal gyrus, visual perspective

1. Introduction

In monkeys, ‘mirror neurons’ in the inferior frontal gyrus (IFg) and inferior parietal lobule (IPL) respond both when the monkey executes an action and when it observes that same action made by an experimenter (di Pellegrino, Fadiga, Fogassi, Gallese, & Rizzolatti, 1992; Gallese, Fadiga, Fogassi, & Rizzolatti, 1996; Rizzolatti, Fadiga, Gallese, & Fogassi, 1996). This discovery provides a potential mechanism to match one’s own actions with the actions of others, a link between perception and action via a shared parieto-frontal representation. In these studies, monkeys view their own movements (seen from the 1st-person perspective) or those of an actor (seen from the 3rd-person perspective).

Functional neuroimaging data indicate that the IFg and the supramarginal gyrus (SMg) of the IPL in the human brain also respond to the observation of others’ actions (Grèzes & Decety, 2001; Rizzolatti & Craighero, 2004). Whether these same regions are also involved during the execution of equivalent actions is still controversial. With repetition suppression techniques, adaptation across execution and observation has been found in right IPL (Chong, Cunnington, Williams, Kanwisher, & Mattingley, 2008) and in IFg (Kilner, Neal, Weiskopf, Friston, & Frith, 2009), providing support for a mirror neuron system in humans. However, see Dinstein et al. (2007) and Lingnau et al. (2009) for counterevidence using the same technique. Similar debates about the existence of a human mirror system arise when multivoxel pattern analysis is used (Dinstein, Gardner, Jazayeri, & Heeger, 2008; Oosterhof, Wiggett, Diedrichsen, Tipper, & Downing, 2010). Furthermore, with few exceptions (Frey & Gerry, 2006; Jackson, Meltzoff, & Decety, 2006; Shmuelof & Zohary, 2008), investigations of this ‘mirror system’ have tended not to manipulate viewing perspective. Instead, the stimuli used in the vast majority of action observation studies have consisted primarily of others’ actions as seen from a 3rd-person perspective. The effects of perspective change on IFg and SMg, or lack thereof, may provide valuable insights into what is being represented in this system.

On the basis of the mirror system account, one might expect that the IFg and SMg would respond equivalently to visual percepts attributable to our own movements vs. those of another actor. However, the brain is clearly also able to distinguish between percepts arising from these two fundamentally different sources. Is this essential yet overlooked ability attributable to selective responses in IFg and/or SMg, or to a separate mechanism? Here we test this question directly by measuring the brain’s responses to observation of actions generated by oneself vs. another, as seen from both the 1st- and 3rd-person perspectives.

During the acquisition of whole-brain fMRI data, healthy adults performed aurally paced, bilateral thumb-finger sequences while viewing either live visual feedback (Self condition) or carefully matched pre-recorded video of an actor performing the same task (Other condition). We reasoned that if the IFg and SMg are sensitive to subtle perceptual differences between these conditions, then they should exhibit selective responses. Evidence for a right cerebral hemisphere asymmetry in self-recognition (Uddin, Iacoboni, Lange, & Keenan, 2007) suggests that these differences between conditions might be lateralized. We also varied perspective (1st- or 3rd-person) in both Self and Other conditions in order to determine the effects of this variable on these responses.

2. Materials and Methods

2.1. Subjects

Participants included fourteen healthy, right-handed volunteers (18–36 years, 7 females) with normal or corrected-to-normal vision and no history of psychiatric or neurological disease. Written informed consent was obtained.

2.2. fMRI Design and Procedure

Prior to the experiment, participants were given instructions and performed a short practice set of trials in a mock MRI scanner. Participants rested supine with their heads in the scanner, a cloth draped over their bodies, forearms on thighs and palms aligned in the vertical plane with thumbs facing up. Head and upper arms were padded to reduce motion artifacts. Other than performing the instructed hand movements, participants remained as still as possible.

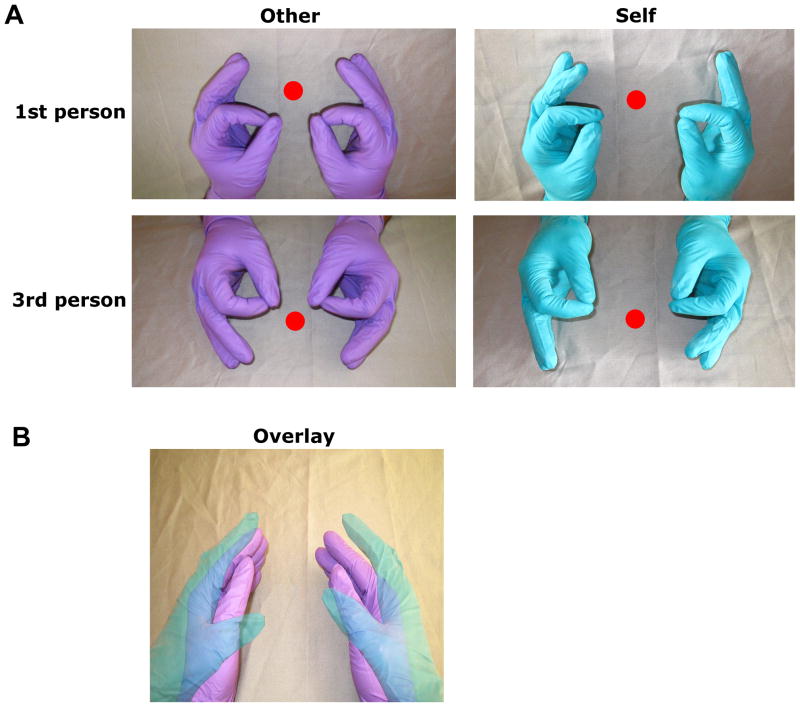

Participants performed a bimanual thumb-finger sequencing task (TFST) in synchrony with a 1.5 Hz pacing tone under two conditions, Self and Other. These were distinguished by aural presentation of the word “execute” (Self) or “imitate” (Other) 2s prior to each block. Blocks of each condition lasted 18s and were followed by a 12s rest interval consisting of a black screen. A central fixation circle was always present. In the Self condition, participants viewed their own movements via live video feedback: images of participants’ hands were reflected off an 18″ × 18″ mirror above the scanner bed, an MRI-compatible, remotely controlled digital video camera captured this reflection, and the video stream was back-projected onto a screen at the head of the scanner bore and viewed by participants via a 5″ × 2″ mirror attached to the head coil. In the Other condition, participants performed the aurally paced TFST task while viewing a pre-recorded digital video of an actor performing the same task in the scanner. This video was created prior to the experiment using the same setup, so the perspective, field of view (FoV), and lighting conditions of the video were matched as closely as possible to the live feedback. Participants were explicitly informed about the difference between Self and Other and received 15–20 min of practice. To avoid possible confusion between conditions, participants wore green gloves and the actor in the video wore purple gloves. The orientation of the visual stimuli was also manipulated: for both conditions, 50% of counterbalanced blocks presented visual stimuli (live or pre-recorded) from the participant’s own perspective (1st-person perspective), and the remaining 50% were rotated by 180° (3rd-person perspective) (Figure 1A).

Figure 1. A) Experimental conditions in the fMRI experiment: 2 × 2 factorial crossing task feedback condition (self or other) with perspective (1st person or 3rd person).

In the self condition, participants viewed their own movements in real time via live video feedback. In the other condition, participants viewed a pre-recorded digital video of an actor performing the same task. Within both self and other conditions, 50% of the blocks presented visual stimuli (live or pre-recorded, respectively) from a 1st-person perspective; the remaining 50% were rotated by 180° for a 3rd-person perspective. We did not aim to manipulate agency, so to make the conditions distinct, participants always wore green gloves, while the actor in the video wore purple gloves. Conditions were presented in a counterbalanced block design under computer control (National Instruments). B) Pre-experiment alignment overlay. Prior to the experiment, participants aligned their hands with a semi-transparent still frame of the actor’s hands from the pre-recorded video.

Several steps were taken to ensure spatial and temporal correspondence between participants’ movements and those of the actor. First, participants practiced beforehand while the experimenter watched to make sure that they understood the instructions and were performing the tasks correctly. Second, participants’ hand postures were matched with those of the actor depicted in the recorded video. Just before the experiment, participants were shown a semi-transparent digital still frame (1st-person perspective) of the recorded video overlaid on a live video feed of their hands (Figure 1B). They were instructed to align their hands with those of the actor and to remain in this position throughout the study. Third, to facilitate synchronization, participants were instructed to begin the TFST movements with the index finger after two preparatory tones and then to perform them along with the actor, in sync with the auditory pacing tone. Hand movements were recorded with digital video for offline verification of compliance.

Participants completed four runs of 8.6 min each. Each run consisted of 2 blocks of each of the four conditions (2 types [Self, Other] × 2 perspectives [1st-person, 3rd-person]). Two other block types (Observe, Imagine) are not discussed here. Condition order was counterbalanced across runs. Runs were counterbalanced across participants.
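FEAT can take block timings as custom three-column text files (onset, duration, weight, in seconds). The sketch below turns a block order into such timings for the four conditions analyzed here. The block order, the file-name prefix, and the assumption that cue, block, and rest follow one another back to back (a 32 s cycle) are illustrative only; the study's actual schedule, including where the Observe and Imagine blocks fell, is not given in the text.

```python
from collections import defaultdict

# Durations in seconds, taken from the Methods text.
CUE_S = 2.0      # aural "execute"/"imitate" cue before each block
BLOCK_S = 18.0   # movement block
REST_S = 12.0    # rest interval (black screen + fixation)

def build_evs(block_order, start_s=0.0):
    """Return {condition: [(onset, duration, weight), ...]}.

    Assumes (hypothetically) that cue, block and rest follow each other
    back to back, so one cycle lasts CUE_S + BLOCK_S + REST_S seconds.
    """
    evs = defaultdict(list)
    t = start_s
    for cond in block_order:
        onset = t + CUE_S                  # movement starts after the cue
        evs[cond].append((onset, BLOCK_S, 1.0))
        t += CUE_S + BLOCK_S + REST_S      # advance to the next cycle
    return dict(evs)

def write_fsl_3col(evs, prefix="run1"):
    """Write one three-column text file per condition (hypothetical names)."""
    for cond, rows in evs.items():
        with open(f"{prefix}_{cond}.txt", "w") as fh:
            for onset, dur, weight in rows:
                fh.write(f"{onset:.1f}\t{dur:.1f}\t{weight:.1f}\n")

# Hypothetical order for one run: 2 blocks of each of the 4 conditions
# (Observe and Imagine blocks omitted).
ORDER = ["self_1st", "other_3rd", "other_1st", "self_3rd",
         "self_3rd", "other_1st", "other_3rd", "self_1st"]

if __name__ == "__main__":
    for cond, rows in sorted(build_evs(ORDER).items()):
        print(cond, rows)
```

Calling `write_fsl_3col(build_evs(ORDER))` would produce one timing file per condition; keeping timing generation separate from file writing makes it easy to check the onsets before any files are created.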

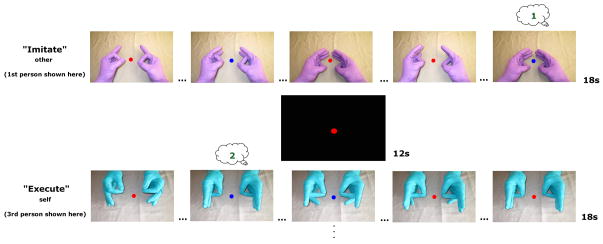

To control for possible variations in attention across conditions, participants performed a secondary task that required counting features of the observed movements (Figure 2). The color of the central fixation circle changed periodically (18–30 times per run) from red to blue, coincident with the pinkie finger contacting the thumb. Participants reported the cumulative count at the end of each run. The change occurred with equal likelihood during each condition. Participants performed this fairly difficult task with a mean accuracy of 88%, indicating that they were attending to the movements in all conditions (Figure S1).
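At 1.5 Hz, an 18 s block contains 27 paced thumb-finger contacts, and only some of them involve the pinkie. The sketch below lists the candidate moments at which a red-to-blue fixation change could be triggered. It assumes that the first tap falls at block onset and that fingers are tapped in the order index, middle, ring, pinkie; the text states only that sequences began with the index finger, so that order is an assumption.

```python
RATE_HZ = 1.5    # pacing-tone rate (Methods)
BLOCK_S = 18.0   # block duration (Methods)
# Assumed tapping order; the text only says sequences began with the index finger.
FINGERS = ("index", "middle", "ring", "pinkie")

def tap_schedule(rate_hz=RATE_HZ, block_s=BLOCK_S):
    """(time_s, finger) for every paced contact in one block, first tap at t = 0."""
    n_taps = int(round(rate_hz * block_s))   # 1.5 Hz x 18 s = 27 taps
    return [(k / rate_hz, FINGERS[k % len(FINGERS)]) for k in range(n_taps)]

def pinkie_contact_times(schedule):
    """Candidate times for a red-to-blue fixation change (pinkie contacts)."""
    return [t for t, finger in schedule if finger == "pinkie"]

if __name__ == "__main__":
    sched = tap_schedule()
    print(len(sched), pinkie_contact_times(sched))
```

A subset of these candidate times would then be chosen so that changes occur with equal likelihood in each condition, as the text describes; the selection rule actually used is not specified.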

Figure 2. Illustrative timeline of the task with representative blocks (1st-person Self and 3rd-person Other conditions are not displayed here).

Aural presentation of the word “Execute” (Self) or “Imitate” (Other) 2s prior to each block distinguished the conditions. Blocks were counterbalanced and lasted 18s, followed by a 12s rest interval. A central fixation circle was always present. Numbers in thought bubbles illustrate the participant’s running count of the number of times the fixation circle changed from red to blue coincident with the pinkie finger contacting the thumb. Finger tapping was sequential; ‘…’ symbols indicate taps skipped for illustration purposes. See Figure S1 for results verifying task compliance as measured by this attentional control. Participants performed 4 runs, each with 2 blocks of each of the 4 conditions.

2.3. Data acquisition

Scans were performed on a Siemens (Erlangen, Germany) 3T Allegra MRI scanner. BOLD echoplanar images (EPIs) were collected using a T2*-weighted gradient echo sequence, a standard birdcage radio-frequency coil, and these parameters: TR = 2000ms, TE = 30ms, flip angle = 90°, 64 × 64 voxel matrix, FoV = 220mm, 34 contiguous axial slices acquired in interleaved order, thickness = 4.0mm, in-plane resolution: 3.4 × 3.4 mm, bandwidth = 2790 Hz/pixel. The initial four scans in each run were discarded to allow the MR signal to approach a steady state. High-resolution T1-weighted structural images were also acquired, using the 3D MP-RAGE pulse sequence: TR = 2500ms, TE = 4.38ms, TI = 1100ms, flip angle = 8.0°, 256 × 256 voxel matrix, FoV = 256mm, 176 contiguous axial slices, thickness = 1.0mm, in-plane resolution: 1 × 1 mm. DICOM image files were converted to NIFTI format using MRIConvert software (http://lcni.uoregon.edu/~jolinda/MRIConvert/).
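Several derived quantities follow directly from the acquisition parameters above: the in-plane voxel size is the FoV divided by the matrix size, axial coverage is slice count times slice thickness (assuming no slice gap, as "contiguous" implies), and the number of volumes per run is run duration divided by TR. Because the 8.6 min run length is rounded, the volume counts below are approximate rather than reported values.

```python
# EPI parameters from the Data acquisition section.
EPI_FOV_MM = 220.0
EPI_MATRIX = 64
EPI_SLICES = 34
EPI_SLICE_MM = 4.0
TR_S = 2.0
RUN_MIN = 8.6     # approximate run length as reported
DISCARDED = 4     # initial volumes discarded per run

in_plane_mm = EPI_FOV_MM / EPI_MATRIX       # 3.4375 mm, reported as 3.4
slab_mm = EPI_SLICES * EPI_SLICE_MM         # axial coverage, no gap assumed
n_vols = round(RUN_MIN * 60 / TR_S)         # approximate volumes per run
n_kept = n_vols - DISCARDED                 # volumes left after discarding

# MP-RAGE: 256 mm FoV over a 256 x 256 matrix.
t1_mm = 256.0 / 256

if __name__ == "__main__":
    print(in_plane_mm, slab_mm, n_vols, n_kept, t1_mm)
```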

2.4. Preprocessing

Structural and functional fMRI data were preprocessed and analyzed using FMRIB’s Software Library [FSL v.4.1.2 (http://www.fmrib.ox.ac.uk/fsl/)] (Smith, et al., 2004). Processing involved the following steps:

- motion correction using MCFLIRT;
- independent components analysis with MELODIC to identify and remove any remaining obvious motion artifacts;
- fieldmap-based EPI unwarping with PRELUDE+FUGUE to correct for distortions due to magnetic field inhomogeneities, using a separate fieldmap (collected following each run) for each run;
- removal of non-brain matter using BET;
- spatial smoothing with a 5mm full-width at half-maximum (FWHM) Gaussian kernel;
- mean-based intensity normalization, in which every volume in the data set is scaled by the same factor, so that cross-session and cross-subject statistics are valid;
- high-pass temporal filtering with a 100s cut-off to remove low-frequency artifacts;
- time-series statistical analysis in FEAT v.5.98 using FILM with local autocorrelation correction, with delays and undershoots in the hemodynamic response accounted for by convolving the model with a double-gamma hemodynamic response function (HRF);
- registration to the high-resolution structural image with 7 degrees of freedom and then to the standard image (Montreal Neurological Institute [MNI-152] template) with 12 degrees of freedom at 2 × 2 × 2 mm resolution using FLIRT, with the structural-to-standard registration further refined using FNIRT nonlinear registration (Andersson, Jenkinson, & Smith, 2007).
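Two of the steps above reduce to simple arithmetic. A Gaussian kernel with a 5 mm FWHM has a standard deviation of FWHM / (2√(2 ln 2)) ≈ 2.12 mm, which on the native 3.4375 × 3.4375 × 4 mm EPI grid is about 0.62 voxels in-plane and 0.53 slices. Mean-based intensity normalization multiplies the whole 4-D series by one factor. The sketch below is a plain linear version of both: FEAT itself performs smoothing with SUSAN, and the target value of 10000 and the use of the in-mask mean are assumptions of this sketch, not details from the text.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

FWHM_MM = 5.0
VOXEL_MM = (220.0 / 64, 220.0 / 64, 4.0)   # native EPI voxel size (x, y, z)

def fwhm_to_sigma(fwhm):
    """Convert a Gaussian FWHM to its standard deviation (same units)."""
    return fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))

def smooth_volume(vol, fwhm_mm=FWHM_MM, voxel_mm=VOXEL_MM):
    """Plain Gaussian smoothing of one 3-D volume, kernel width given in mm."""
    sigma_vox = [fwhm_to_sigma(fwhm_mm) / v for v in voxel_mm]  # mm -> voxels per axis
    return gaussian_filter(vol, sigma=sigma_vox)

def grand_mean_scale(data4d, mask, target=10000.0):
    """Scale the whole (x, y, z, t) series by ONE factor so the in-mask mean is `target`."""
    factor = target / data4d[mask].mean()
    return data4d * factor
```

Using a single factor for the whole series, rather than one per volume, preserves the relative signal changes over time that the statistical model is meant to detect.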

2.5. Whole brain Analysis

For every participant, each of the four fMRI runs, containing Self and Other conditions viewed from either a 1st- or 3rd-person perspective, was modeled separately at the first level. Orthogonal contrasts (one-tailed t-tests) were used to test for differences between each experimental condition and the resting baseline, and between conditions. Because contrasts of the 1st- vs. 3rd-person perspectives revealed differences only in visual areas, we collapsed across perspective.
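The first-level model can be sketched as a general linear model: each condition's blocks form a boxcar that is convolved with a double-gamma HRF, the voxel time series is regressed on these regressors, and the Self > Other contrast collapsed across perspective weights the four parameter estimates as [1, 1, −1, −1]. This is a simplified illustration, not the FEAT pipeline: it uses ordinary least squares without FILM prewhitening, SPM-style gamma shapes (6 and 16, undershoot ratio 1/6) that may differ slightly from FSL's double-gamma, and an assumed EV order.

```python
import numpy as np
from scipy.stats import gamma

TR = 2.0  # seconds, from the acquisition parameters

def double_gamma_hrf(tr=TR, length_s=32.0):
    """Double-gamma HRF sampled every `tr` s (SPM-style shapes assumed)."""
    t = np.arange(0.0, length_s, tr)
    # Positive response (gamma shape 6) minus a scaled undershoot (shape 16).
    hrf = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return hrf / hrf.sum()  # unit sum, so a long block plateaus near 1

def block_regressor(onsets, duration, n_vols, tr=TR):
    """Boxcar for the given blocks, convolved with the HRF (one value per volume)."""
    frame_times = np.arange(n_vols) * tr
    box = np.zeros(n_vols)
    for onset in onsets:
        box[(frame_times >= onset) & (frame_times < onset + duration)] = 1.0
    return np.convolve(box, double_gamma_hrf(tr))[:n_vols]

def fit_ols(y, regressors):
    """OLS parameter estimates (PEs); no FILM-style prewhitening."""
    X = np.column_stack(list(regressors) + [np.ones(len(y))])  # add intercept
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas[:-1]  # drop the intercept

# Self > Other collapsed across perspective, with EVs ordered
# [self_1st, self_3rd, other_1st, other_3rd] (assumed order).
C_SELF_GT_OTHER = np.array([1.0, 1.0, -1.0, -1.0])

def cope(betas, contrast=C_SELF_GT_OTHER):
    """Contrast of parameter estimates (COPE) for one run."""
    return float(contrast @ betas)
```

Collapsing across perspective amounts to giving both perspectives of a condition the same contrast weight, so the COPE equals (Self 1st + Self 3rd) − (Other 1st + Other 3rd).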

The resulting first-level contrasts of parameter estimates (COPEs) then served as inputs to higher-level analyses carried out using FLAME Stage 1 to model and estimate the random-effects component of mixed-effects variance. First-level COPEs were averaged across the 4 runs for each subject separately (level 2) and then across participants (level 3). Z (Gaussianized T) statistic images were thresholded using a cluster-forming threshold of Z > 3.1 and a whole-brain corrected cluster significance threshold of p = 0.05.
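The higher-level pipeline can be illustrated in three steps: average each subject's run-level COPEs, compute a voxelwise one-sample t across subjects and convert it to Z, then find contiguous clusters above Z = 3.1. This sketch is deliberately simpler than FSL: it uses an OLS summary statistic rather than FLAME's mixed-effects estimation, SciPy's default face connectivity (which may differ from FSL's cluster neighbourhood), and it stops at the cluster-forming step without computing random-field-theory cluster p-values.

```python
import numpy as np
from scipy import ndimage
from scipy.stats import norm, t as t_dist

def level2_average(copes_by_run):
    """Average one subject's first-level COPEs across runs (axis 0 = run)."""
    return np.mean(copes_by_run, axis=0)

def level3_z(subject_copes):
    """Voxelwise one-sample t across subjects (axis 0), converted to Z.

    Simple OLS summary statistic, not FLAME's mixed-effects estimate.
    """
    n = subject_copes.shape[0]
    mean = subject_copes.mean(axis=0)
    sem = subject_copes.std(axis=0, ddof=1) / np.sqrt(n)
    t_vals = mean / sem
    # Gaussianize: the Z with the same upper-tail probability as t on n - 1 df.
    return norm.isf(t_dist.sf(t_vals, df=n - 1))

def supra_threshold_clusters(z, z_thresh=3.1):
    """Cluster-forming step: label face-connected voxels with Z > z_thresh.

    Cluster-level p-values are not computed here.
    """
    labels, _ = ndimage.label(z > z_thresh)
    sizes = np.bincount(labels.ravel())[1:]   # voxel count per cluster
    return labels, sizes
```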

In order to test for the main effects of PERSPECTIVE and TASK and for the interaction between these two factors, a 2 (PERSPECTIVE: 1st, 3rd) x 2 (TASK: other, self) repeated-measures ANOVA (F-tests) was performed on second-level COPEs.
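Because each factor in this design has only two levels, every effect in the 2 × 2 repeated-measures ANOVA has one numerator degree of freedom, and its F(1, n − 1) equals the square of a one-sample t computed on a per-subject contrast score. The sketch below uses that equivalence on a single value per subject and condition (for example, one voxel or ROI). It illustrates the statistic only; it is not the FSL F-test implementation, and the array layout is an assumption.

```python
import numpy as np

def rm_anova_2x2(data):
    """F statistics for a 2 x 2 within-subject design.

    data: array (n_subjects, 2, 2) indexed [subject, perspective, task],
    perspective 0 = 1st, 1 = 3rd; task 0 = other, 1 = self.
    Each effect has 1 numerator df, so F(1, n - 1) is the squared
    one-sample t of the per-subject contrast score.
    """
    n = data.shape[0]
    contrasts = {
        "perspective": np.array([[1, 1], [-1, -1]]) / 2.0,  # 1st minus 3rd
        "task": np.array([[-1, 1], [-1, 1]]) / 2.0,         # self minus other
        "interaction": np.array([[1, -1], [-1, 1]]) / 2.0,  # difference of differences
    }
    results = {}
    for name, c in contrasts.items():
        scores = (data * c).sum(axis=(1, 2))   # one contrast score per subject
        t = scores.mean() / (scores.std(ddof=1) / np.sqrt(n))
        results[name] = float(t ** 2)
    return results
```

Working with per-subject contrast scores automatically removes between-subject differences in overall signal level, which is what makes the test a repeated-measures one.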

Anatomical localization of brain activation was verified by manual comparison with an atlas (Duvernoy, 1991). In addition, the multi-fiducial mapping algorithm in Caret (http://www.nitrc.org/projects/caret/) (Van Essen, et al., 2001) was used to overlay group statistical maps onto a population-average, landmark- and surface-based (PALS) atlas for the human brain (Van Essen, 2005).

We also calculated the mean percent signal change relative to the resting baseline across all voxels within the significant clusters of activation in IFg and SMg identified by the Self vs. Other contrast in the whole-brain analysis. These values were calculated separately for each participant and condition using FSL’s Featquery. Repeated-measures ANOVAs were also conducted to test for differences between conditions in these regions of interest (see supplemental material).
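Percent signal change in a region of interest is, in its simplest form, 100 × (condition mean − rest mean) / rest mean, computed on the ROI-averaged time series. The sketch below implements that generic definition; it is not necessarily the exact computation Featquery performs (which works from the model's parameter estimates), and the volume indices passed in are assumed to have been chosen by the user.

```python
import numpy as np

def roi_percent_signal_change(data4d, roi_mask, cond_vols, rest_vols):
    """Mean percent signal change in an ROI for one condition vs. rest.

    data4d: (x, y, z, t) array; roi_mask: boolean (x, y, z) array;
    cond_vols / rest_vols: integer indices of volumes in each state.
    """
    roi_ts = data4d[roi_mask].mean(axis=0)   # average over ROI voxels -> (t,)
    cond = roi_ts[cond_vols].mean()
    rest = roi_ts[rest_vols].mean()
    return 100.0 * (cond - rest) / rest
```

In practice the condition and rest volumes would be shifted by a few seconds relative to the block timings to allow for hemodynamic lag; that shift is left to the caller here.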

3. Results

3.1. Self or other

3.1.1. Self vs. rest and Other vs. rest contrasts

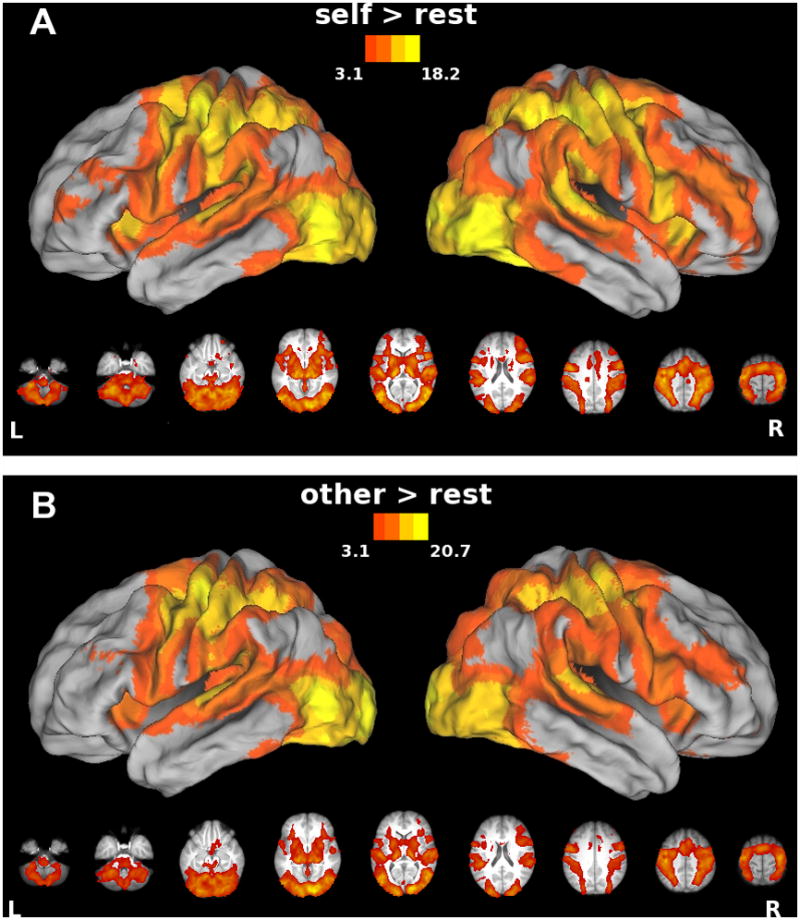

Relative to resting baseline, both Self (Figure 3A) and Other (Figure 3B) conditions were associated with increased bilateral activity within fronto-parietal areas (including both IFg and SMg) as well as other regions traditionally implicated in visually-guided bimanual behavior.

Figure 3. Areas showing increased activity in association with A) the Self condition or B) the Other condition vs. resting baseline.

In this and subsequent figures, group statistical parametric maps are thresholded at Z > 3.1 (corrected clusterwise significance threshold p < 0.05) and displayed on a partially inflated view of CARET’s population-average, landmark- and surface-based (PALS) human brain atlas (see Methods). Data in the lower panel are rendered on the group-average anatomical image from our sample and oriented neurologically. Note the expected increases in bilateral fronto-parietal areas (including both IFg and SMg) as well as other areas typically involved in visually guided bimanual motor control.

3.1.2. Self vs. Other contrast

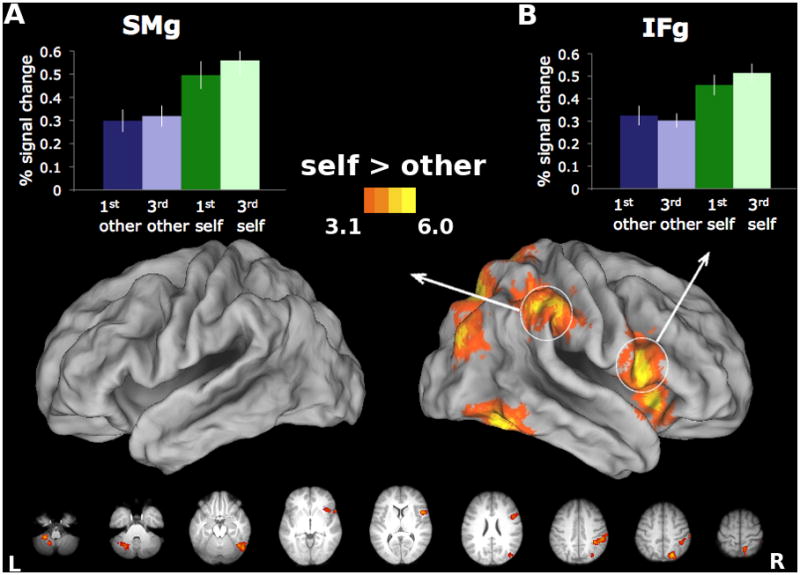

Importantly, right IFg and SMg showed greater increases in activity for movements accompanied by self-generated visual feedback (Self) than for matched video of another’s actions (Other), as illustrated in Figure 4. Panels A and B display the mean percent signal change for all four conditions within the regions of right IFg and SMg that showed greater responses for Self vs. Other. Activity exceeded resting baseline in all four conditions (p < .001 in all cases), indicating that the effect cannot be attributed to deactivation in any of the four conditions. This is consistent with the hypothesis that responses arising from these regions, frequently cited as critical nodes of the mirror system, signal this fundamental distinction. These selective cortical responses were entirely right-lateralized and were also detected in the superior parietal lobule (SPL), insula, and lateral occipital (LO) cortex (Figure 4, Table S1). The left cerebellum also showed this advantage for Self. Our findings provide further evidence for a right cerebral asymmetry in self-related processing (Keenan, Nelson, O’Connor, & Pascual-Leone, 2001).

Figure 4. Areas showing increased activity for the Self vs. Other comparison.

Right IFg and SMg show greater responses during perception of self-generated vs. another’s actions. Increased activity for this contrast was also found in right SPL, insula, and LO, as well as left cerebellum. Panels A and B display the mean percent signal change relative to baseline for each of the four experimental conditions, extracted from ROIs in IFg and SMg and averaged across participants. Error bars represent standard errors.

3.1.3. Other vs. Self contrast

This comparison revealed increased activity only in occipital regions, which may reflect their sensitivity to subtle differences in lower-level aspects of the visual stimuli. Because we found no task-by-perspective interaction, we next consider the main effect of perspective.

3.2. Effects of Perspective (1st- or 3rd-person)

The manipulation of perspective affected activity only in occipital cortex. Increased activity for the 1st- vs. 3rd-person perspective was detected inferior to the calcarine fissure (Figure S2, cool colors), whereas the opposite contrast revealed effects superior to the calcarine fissure (Figure S2, warm colors). Jackson et al. (2006) similarly found increased V1 and V2 activation for the 1st-person perspective and increased lingual gyrus activation for the 3rd-person perspective. These effects are likely attributable to differential stimulation of the lower visual field (in which 1st-person perspective stimuli appeared) or the upper visual field (in which 3rd-person perspective stimuli appeared), as illustrated in Figure 1A. Critically, responses within regions identified as part of the mirror system were perspective invariant, including those in right IFg and SMg that differentiated between Self and Other. These perspective-invariant responses may contribute to self-recognition even when one’s movements are seen from a 3rd-person perspective, as when looking in a mirror. Relatedly, Shmuelof and Zohary (2008) found contralateral aSPL activation for the 1st-person perspective and ipsilateral aSPL activation for the 3rd-person perspective during observation of either the left or right hand (literally mirror-like).

4. Discussion

The discovery of brain regions that respond similarly during the execution of self-generated actions and the observation of actions performed by another (i.e. the mirror system) catalyzed a large number of empirical and theoretical efforts (Rizzolatti & Craighero, 2004). The major finding across studies has been that mirror neurons code the action regardless of who performs it. Consistent with previous studies, we find bilateral increases in IFg and SMg when participants receive live visual feedback of their own hand movements (Self), or when they move in concert with carefully matched pre-recorded video of an actor (Other). However, if there are shared representations for these functions, how can actions of the self be distinguished from those of another? It has been hypothesized that a “who” system responsible for representing the self/other distinction must exist, such that the representational overlap is partial, with non-overlapping regions cuing the distinction (Georgieff & Jeannerod, 1998; Jeannerod, 2003). Here we show that responses within two key nodes of the mirror system, right IFg and SMg, can in fact differentiate between visual percepts arising from these two conditions. Contrary to the mirror system framework, however, right IFg and SMg show greater increases in activity during the Self vs. Other condition. Accurately distinguishing between these two circumstances is essential to adaptive behavior, and our findings implicate right IFg and SMg in this function. We cannot say, however, whether the neurons implicated in mirroring are the same ones that differentiate self from other; this is a limitation of currently available non-invasive techniques, including fMRI. It could be either that these neurons exhibit greater activity for self vs. other, or that different types of neurons (mirroring and self-other discrimination) coexist within the same region.

Further, these selective responses were invariant to the viewing perspective, raising the possibility that right IFg and SMg contribute to recognizing oneself in a mirror (Gallup, 1970). Evidence for distinct anatomical connections between the IFg and SMg exists in both monkeys (Rozzi, et al., 2006) and humans (Rushworth, Behrens, & Johansen-Berg, 2006), suggesting that these areas constitute a parieto-frontal circuit. The current results suggest an asymmetry in the perceptual functions of this circuit in the human brain that may be critical to behaviors ranging from self-recognition to action attribution.

Further work is necessary to identify the perceptual information driving this differential response. One possibility is that right IFg and SMg are sensitive to greater spatio-temporal congruency in the Self condition between predicted sensory consequences (arising from a feed-forward controller) and actual visual feedback. Another possibility is that greater correspondence between visual and somatosensory feedback (proprioceptive and tactile) in the Self condition contributes to this selective response. Sensitivity to either or both of these sources of information may provide a means of distinguishing between visual percepts arising from one’s own actions vs. those of other agents.

4.1. Sensorimotor prediction and/or multisensory correspondence

4.1.1. Spatio-temporal congruency between predicted and actual sensory feedback

As noted above, increased activity in right IFg and SMg might be attributable to the greater spatio-temporal congruency between predicted sensory consequences and actual visual feedback in the Self condition. Motor control involves both feedback and feed-forward (predictive) processes. According to state feedback control theory (Shadmehr & Krakauer, 2008; Wolpert & Flanagan, 2001), an efference copy is generated in parallel with the motor command. The efference copy, along with an estimate of one’s current state, serves as input to a forward model that predicts the sensory feedback that should result from the motor command. This internally generated prediction can then be compared with the actual sensory feedback accompanying the movement (Blakemore, Goodbody, & Wolpert, 1998; Blakemore & Frith, 2003; Wolpert, Ghahramani, & Jordan, 1995). If the predicted feedback and actual feedback are congruent, then the action can be attributed to oneself. If incongruent, then the action can be attributed to another agent (Blakemore, Oakley, & Frith, 2003; Frith, Blakemore, & Wolpert, 2000; Sato & Yasuda, 2005). Since we did not require participants to identify movement authorship, our findings are not about agency assignment in an explicit sense. We also found increased activity in the cerebellum when participants saw their own movements vs. those of an actor. The cerebellum is widely thought to play a role in predicting the sensory consequences of movements via forward modeling (Blakemore & Sirigu, 2003; Wolpert, Miall, & Kawato, 1998).
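The comparator logic described above can be illustrated with a toy simulation: a forward model predicts the sensory consequence of a motor command, the prediction is compared with observed feedback, and a small mismatch is attributed to oneself. Everything in the sketch is a hypothetical illustration rather than a model fitted by the study: the identity forward model, the sinusoidal "command" at the 1.5 Hz pacing rate, the 100 Hz sampling rate, the use of a pure time shift to stand in for the difference between live and pre-recorded feedback, and the decision threshold.

```python
import numpy as np

FS = 100.0      # hypothetical sampling rate of the kinematic signals (Hz)
RATE_HZ = 1.5   # pacing rate from the Methods

def forward_model(motor_command):
    """Placeholder forward model: predicted finger position equals the command."""
    return motor_command.copy()

def simulate(delay_s, noise_sd, seed=0, block_s=18.0):
    """Return (predicted, observed) feedback for one toy block.

    The observed signal is the command shifted by `delay_s` plus Gaussian noise.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(int(block_s * FS)) / FS
    command = np.sin(2 * np.pi * RATE_HZ * t)   # toy periodic 'motor command'
    shift = int(round(delay_s * FS))
    observed = np.roll(command, shift) + rng.normal(0.0, noise_sd, t.size)
    return forward_model(command), observed

def prediction_error(predicted, observed):
    """Root-mean-square mismatch between predicted and observed feedback."""
    return float(np.sqrt(np.mean((predicted - observed) ** 2)))

def attribute(predicted, observed, threshold):
    """Comparator: small mismatch -> 'self', large mismatch -> 'other'."""
    return "self" if prediction_error(predicted, observed) < threshold else "other"

if __name__ == "__main__":
    pred, live = simulate(delay_s=0.03, noise_sd=0.05, seed=0)     # short video latency
    pred2, actor = simulate(delay_s=0.15, noise_sd=0.05, seed=1)   # larger offset
    print(attribute(pred, live, 0.5), attribute(pred2, actor, 0.5))
```

As the text notes, participants were never asked to judge authorship, so the explicit decision step here only makes the comparison concrete; the fMRI effect would correspond, at most, to the graded mismatch signal.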

4.1.2. Spatio-temporal congruency between visual and somatosensory feedback

Both Self and Other conditions involved performing the same paced bimanual movements and gave rise to similar proprioceptive feedback. However, the use of live visual ‘feedback’ during the Self condition would likely have resulted in greater spatio-temporal congruency between visual and proprioceptive signals. Activity in right IFg and SMg may increase in response to this tighter multisensory coupling.
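One way to quantify the visual-proprioceptive congruency discussed above is the peak of the lagged correlation between the two signals: live feedback should correlate almost perfectly at a short, fixed lag, whereas video of another person's movements should correlate less well at any lag. The signals below are hypothetical toy sinusoids (a 5-sample lag for live video and a slightly different tapping rhythm for the actor); the study did not record or analyze such kinematic signals.

```python
import numpy as np

def lagged_r(a, b, lag):
    """Pearson r between a[t] and b[t + lag] over the overlapping samples."""
    if lag >= 0:
        x, y = a[: len(a) - lag], b[lag:]
    else:
        x, y = a[-lag:], b[: len(b) + lag]
    return float(np.corrcoef(x, y)[0, 1])

def peak_xcorr(a, b, max_lag):
    """Lag (in samples) and value of the peak lagged correlation."""
    lags = range(-max_lag, max_lag + 1)
    rs = [lagged_r(a, b, lag) for lag in lags]
    best = int(np.argmax(rs))
    return lags[best], rs[best]

if __name__ == "__main__":
    t = np.arange(1800) / 100.0                  # 18 s at a hypothetical 100 Hz
    proprio = np.sin(2 * np.pi * 1.5 * t)        # toy proprioceptive signal
    live = np.roll(proprio, 5)                   # live video: short, fixed lag
    actor = np.sin(2 * np.pi * 1.45 * t + 0.8)   # actor: slightly different rhythm
    print("live:", peak_xcorr(proprio, live, max_lag=20))
    print("actor:", peak_xcorr(proprio, actor, max_lag=20))
```

Restricting the search to short lags reflects the idea that only near-synchronous visual and somatosensory signals are plausibly bound together as arising from one's own body.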

Additional experimentation is necessary to disentangle these two possibilities, which are not mutually exclusive.

4.2. Self-recognition and the right hemisphere

The widespread right lateralization of increases in activity associated with the Self vs. Other comparison complements the existing literature on the organization of mechanisms involved in self-recognition (Keenan, et al., 2001), which emphasizes the contributions of right IFg (Kaplan, Aziz-Zadeh, Uddin, & Iacoboni, 2008; Uddin, et al., 2007; Uddin, Kaplan, Molnar-Szakacs, Zaidel, & Iacoboni, 2005). Notably, these right-lateralized increases included posterior parietal, inferior frontal, and insular cortex, among other areas (Figure 4). Right IPL has also been implicated in agency detection (Farrer, et al., 2003; Uddin, et al., 2005). For instance, Farrer et al. (2003; 2007) argue that right IPL (specifically the angular gyrus) is associated with the misattribution of one’s own actions as another’s, as well as with the degree of mismatch between predicted and actual movements, though their activation increases were not completely lateralized to the right hemisphere. It should be noted, however, that their tasks specifically involved deception and required participants to make decisions about agency. By contrast, we made no attempt to manipulate or disguise the ownership of the action. Two PET experiments manipulated agency such that the participant was either the leader or the follower of an action (Chaminade & Decety, 2002; Decety, Chaminade, Grèzes, & Meltzoff, 2002). When leading the action (or being imitated), right IPL showed increased activity, but when following the action (or imitating), left IPL showed increased activity. This is consistent with our results, since self-initiation of the viewed action resulted in greater activation in right IPL. There is also evidence that the right insula is involved in the detection of agency (Farrer, et al., 2003; Farrer & Frith, 2002; Tsakiris, Hesse, Boy, Haggard, & Fink, 2007).
Given such results, one might expect that disruption of or injury to one or more of these right hemisphere regions would result in errors of self/other processing; indeed, injuries to these areas of the right hemisphere have been implicated in disorders of agency attribution (Frith, et al., 2000).

In sum, our results indicate that right SMg and IFg signal differences, rather than similarities, between the observed actions of oneself vs. another. We suggest that this selective response may be critical for correctly assigning agency to observed actions. Our findings in healthy adults are consistent with observations indicating that injuries to right fronto-parietal and insular regions may lead to impairments in self-perception as a result of their impact on the ability to detect congruency between efference copy and sensory feedback (Frith, et al., 2000; Haggard & Wolpert, 2005) and/or between sources of multisensory feedback (Tsakiris, et al., 2007). Further work might address the functioning of these areas in the developing brain, or in individuals with impairments of the body scheme.

Supplementary Material


Acknowledgments

We thank Bill Troyer for his contributions to the LabVIEW software development. Grants from USAMRAA (06046002) and NIH/NINDS (NS053962) to S.H.F. supported this work.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Andersson JLR, Jenkinson M, Smith SM. Non-linear optimisation. 2007. [Google Scholar]

- Arzy S, Overney LS, Landis T, Blanke O. Neural mechanisms of embodiment: asomatognosia due to premotor cortex damage. Arch Neurol. 2006;63:1022–1025. doi: 10.1001/archneur.63.7.1022. [DOI] [PubMed] [Google Scholar]

- Berti A, Bottini G, Gandola M, Pia L, Smania N, Stracciari A, Castiglioni I, Vallar G, Paulesu E. Shared cortical anatomy for motor awareness and motor control. Science. 2005;309:488–491. doi: 10.1126/science.1110625. [DOI] [PubMed] [Google Scholar]

- Blakemore S, Goodbody S, Wolpert D. Predicting the consequences of our own actions: the role of sensorimotor context estimation. J Neurosci. 1998;18:7511–7518. doi: 10.1523/JNEUROSCI.18-18-07511.1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blakemore S, Sirigu A. Action prediction in the cerebellum and in the parietal lobe. Experimental Brain Research. 2003;153:239–245. doi: 10.1007/s00221-003-1597-z. [DOI] [PubMed] [Google Scholar]

- Blakemore SJ, Frith C. Self-awareness and action. Curr Opin Neurobiol. 2003;13:219–224. doi: 10.1016/s0959-4388(03)00043-6. [DOI] [PubMed] [Google Scholar]

- Blakemore SJ, Oakley DA, Frith CD. Delusions of alien control in the normal brain. Neuropsychologia. 2003;41:1058–1067. doi: 10.1016/s0028-3932(02)00313-5. [DOI] [PubMed] [Google Scholar]

- Chaminade T, Decety J. Leader or follower? Involvement of the inferior parietal lobule in agency. Neuroreport. 2002;13:1975–1978. doi: 10.1097/00001756-200210280-00029. [DOI] [PubMed] [Google Scholar]

- Chong T, Cunnington R, Williams M, Kanwisher N, Mattingley J. fMRI Adaptation Reveals Mirror Neurons in Human Inferior Parietal Cortex. Current Biology. 2008;18:1576–1580. doi: 10.1016/j.cub.2008.08.068. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Decety J, Chaminade T, Grèzes J, Meltzoff AN. A PET exploration of the neural mechanisms involved in reciprocal imitation. NeuroImage. 2002;15:265–272. doi: 10.1006/nimg.2001.0938. [DOI] [PubMed] [Google Scholar]

- di Pellegrino G, Fadiga L, Fogassi L, Gallese V, Rizzolatti G. Understanding motor events: a neurophysiological study. Exp Brain Res. 1992;91:176–180. doi: 10.1007/BF00230027. [DOI] [PubMed] [Google Scholar]

- Dinstein I, Gardner J, Jazayeri M, Heeger D. Executed and Observed Movements Have Different Distributed Representations in Human aIPS. Journal of Neuroscience. 2008;28:11231–11239. doi: 10.1523/JNEUROSCI.3585-08.2008.

- Dinstein I, Hasson U, Rubin N, Heeger DJ. Brain areas selective for both observed and executed movements. J Neurophysiol. 2007;98:1415–1427. doi: 10.1152/jn.00238.2007.

- Duvernoy HM. The human brain: surface, blood supply, and three-dimensional sectional anatomy. 2nd ed. New York: Springer Wien; 1991.

- Farrer C, Franck N, Georgieff N, Frith CD, Decety J, Jeannerod M. Modulating the experience of agency: a positron emission tomography study. NeuroImage. 2003;18:324–333. doi: 10.1016/s1053-8119(02)00041-1.

- Farrer C, Frey SH, Van Horn JD, Tunik E, Turk D, Inati S, Grafton ST. The Angular Gyrus Computes Action Awareness Representations. Cereb Cortex. 2007. doi: 10.1093/cercor/bhm050.

- Farrer C, Frith C. Experiencing oneself vs another person as being the cause of an action: the neural correlates of the experience of agency. Neuroimage. 2002;15:596–603. doi: 10.1006/nimg.2001.1009.

- Frey SH, Gerry VE. Modulation of neural activity during observational learning of actions and their sequential orders. J Neurosci. 2006;26:13194–13201. doi: 10.1523/JNEUROSCI.3914-06.2006.

- Frith C, Blakemore S, Wolpert D. Abnormalities in the awareness and control of action. Philos Trans R Soc Lond B Biol Sci. 2000;355:1771–1788. doi: 10.1098/rstb.2000.0734.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119(Pt 2):593–609. doi: 10.1093/brain/119.2.593.

- Gallup GG Jr. Chimpanzees: self-recognition. Science. 1970;167:86–87. doi: 10.1126/science.167.3914.86.

- Georgieff N, Jeannerod M. Beyond consciousness of external reality: a “who” system for consciousness of action and self-consciousness. Conscious Cogn. 1998;7:465–477. doi: 10.1006/ccog.1998.0367.

- Grèzes J, Decety J. Functional anatomy of execution, mental simulation, observation, and verb generation of actions: a meta-analysis. Human Brain Mapping. 2001;12:1–19. doi: 10.1002/1097-0193(200101)12:1<1::AID-HBM10>3.0.CO;2-V.

- Haggard P, Wolpert DM. Disorders of Body Scheme. In: Freund H, Jeannerod M, Hallett M, Leiguarda R, editors. Higher-order Motor Disorders: From Neuroanatomy and Neurobiology to Clinical Neurology. USA: Oxford University Press; 2005. pp. 261–271.

- Jackson P, Meltzoff A, Decety J. Neural circuits involved in imitation and perspective-taking. NeuroImage. 2006;31:429–439. doi: 10.1016/j.neuroimage.2005.11.026.

- Jeannerod M. The mechanism of self-recognition in humans. Behavioural Brain Research. 2003;142:1–15. doi: 10.1016/s0166-4328(02)00384-4.

- Jenkinson PM, Fotopoulou A. Motor awareness in anosognosia for hemiplegia: experiments at last! Exp Brain Res. 2009. doi: 10.1007/s00221-009-1929-8.

- Kaplan J, Aziz-Zadeh L, Uddin L, Iacoboni M. The self across the senses: an fMRI study of self-face and self-voice recognition. Social Cognitive and Affective Neuroscience. 2008;3:218–223. doi: 10.1093/scan/nsn014.

- Karnath H. Awareness of the Functioning of One’s Own Limbs Mediated by the Insular Cortex? Journal of Neuroscience. 2005;25:7134–7138. doi: 10.1523/JNEUROSCI.1590-05.2005.

- Keenan J, Nelson A, O’Connor M, Pascual-Leone A. Self-recognition and the right hemisphere. Nature. 2001;409:305. doi: 10.1038/35053167.

- Kilner J, Neal A, Weiskopf N, Friston K, Frith C. Evidence of mirror neurons in human inferior frontal gyrus. J Neurosci. 2009;29:10153–10159. doi: 10.1523/JNEUROSCI.2668-09.2009.

- Lingnau A, Gesierich B, Caramazza A. Asymmetric fMRI adaptation reveals no evidence for mirror neurons in humans. Proceedings of the National Academy of Sciences of the United States of America. 2009;106:9925–9930. doi: 10.1073/pnas.0902262106.

- Oosterhof NN, Wiggett AJ, Diedrichsen J, Tipper SP, Downing PE. Surface-based information mapping reveals crossmodal vision-action representations in human parietal and occipitotemporal cortex. J Neurophysiol. 2010;104:1077–1089. doi: 10.1152/jn.00326.2010.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annu Rev Neurosci. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Brain Res Cogn Brain Res. 1996;3:131–141. doi: 10.1016/0926-6410(95)00038-0.

- Rozzi S, Calzavara R, Belmalih A, Borra E, Gregoriou GG, Matelli M, Luppino G. Cortical connections of the inferior parietal cortical convexity of the macaque monkey. Cereb Cortex. 2006;16:1389–1417. doi: 10.1093/cercor/bhj076.

- Rushworth M, Behrens T, Johansen-Berg H. Connection patterns distinguish 3 regions of human parietal cortex. Cereb Cortex. 2006;16:1418–1430. doi: 10.1093/cercor/bhj079.

- Sato A, Yasuda A. Illusion of sense of self-agency: discrepancy between the predicted and actual sensory consequences of actions modulates the sense of self-agency, but not the sense of self-ownership. Cognition. 2005;94:241–255. doi: 10.1016/j.cognition.2004.04.003.

- Shadmehr R, Krakauer JW. A computational neuroanatomy for motor control. Exp Brain Res. 2008;185:359–381. doi: 10.1007/s00221-008-1280-5.

- Shmuelof L, Zohary E. Mirror-image representation of action in the anterior parietal cortex. Nature Neuroscience. 2008;11:1267–1269. doi: 10.1038/nn.2196.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23(Suppl 1):S208–219. doi: 10.1016/j.neuroimage.2004.07.051.

- Tsakiris M, Hesse MD, Boy C, Haggard P, Fink GR. Neural signatures of body ownership: a sensory network for bodily self-consciousness. Cereb Cortex. 2007;17:2235–2244. doi: 10.1093/cercor/bhl131.

- Uddin L, Iacoboni M, Lange C, Keenan J. The self and social cognition: the role of cortical midline structures and mirror neurons. Trends in Cognitive Sciences. 2007;11:153–157. doi: 10.1016/j.tics.2007.01.001.

- Uddin LQ, Kaplan JT, Molnar-Szakacs I, Zaidel E, Iacoboni M. Self-face recognition activates a frontoparietal “mirror” network in the right hemisphere: an event-related fMRI study. Neuroimage. 2005;25:926–935. doi: 10.1016/j.neuroimage.2004.12.018.

- Vallar G, Ronchi R. Somatoparaphrenia: a body delusion. A review of the neuropsychological literature. Exp Brain Res. 2009;192:533–551. doi: 10.1007/s00221-008-1562-y.

- Van Essen DC. A Population-Average, Landmark- and Surface-based (PALS) atlas of human cerebral cortex. Neuroimage. 2005;28:635–662. doi: 10.1016/j.neuroimage.2005.06.058.

- Van Essen DC, Drury HA, Dickson J, Harwell J, Hanlon D, Anderson CH. An integrated software suite for surface-based analyses of cerebral cortex. J Am Med Inform Assoc. 2001;8:443–459. doi: 10.1136/jamia.2001.0080443.

- Wolpert D, Ghahramani Z, Jordan M. An internal model for sensorimotor integration. Science. 1995;269:1880–1882. doi: 10.1126/science.7569931.

- Wolpert DM, Flanagan JR. Motor prediction. Curr Biol. 2001;11:R729–732. doi: 10.1016/s0960-9822(01)00432-8.

- Wolpert DM, Miall RC, Kawato M. Internal models in the cerebellum. Trends in Cognitive Sciences. 1998;2:338–347. doi: 10.1016/s1364-6613(98)01221-2.

Associated Data

Supplementary Materials

01

02