Gene annotation bias impedes biomedical research (original) (raw)

Abstract

We found tremendous inequality across gene and protein annotation resources. We observed that this bias leads biomedical researchers to focus on richly annotated genes instead of those with the strongest molecular data. We advocate that researchers reduce these biases by pursuing data-driven hypotheses.

Introduction

After analyzing samples with a high throughput technology, the de facto first step is to perform pathway or network analysis to identify biological processes that are statistically enriched in the data1. Researchers typically form hypotheses for their follow up experiments based on the genes or proteins involved in the enriched processes. Commonly used resources for identifying gene functions and interactions include the Gene Ontology (GO)2, Reactome3, Comparative Toxicogenomics Database (CTD)4, DrugBank5, Protein Data Bank (PDB)6, Pubpular7, and NCBI GeneRIF. Since these resources are created by curation of the scientific literature, they typically only contain functional annotations for genes with published experimental data. Although GO includes predicted functional annotations for genes, they are considered of low quality8. Consequently, researchers select those genes or proteins for further validation that have prior experimental evidence, which, in turn, leads to more functional annotations for those genes at the expense of under-studied genes9–12.

We hypothesized that this experimental paradigm has led to a gene-centric disease research bias where hypotheses are confounded by the streetlight effect of looking for “answers where the light is better rather than where the truth is more likely to lie”13–16. To test this hypothesis, we examined the annotation inequality for the human genome across a number of biomedical databases using the Gini coefficient, which is a measure of inequality such that high coefficient value indicates higher inequality17.

Results

Annotation inequality is increasing over time

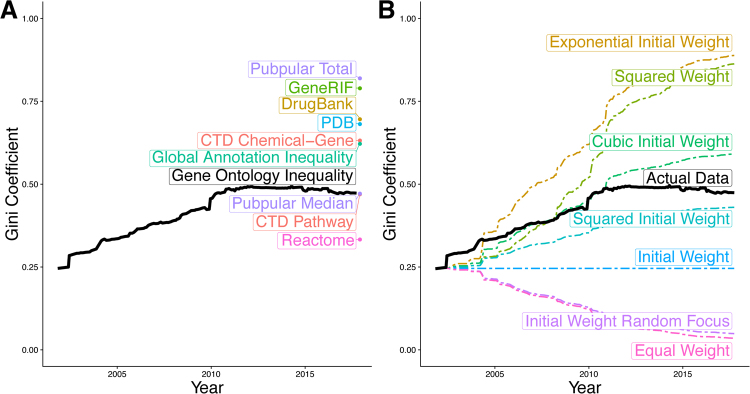

Despite the tremendous growth of Gene Ontology Annotations (GOA) from 32,259 annotations for 9,664 human genes in 2001 to 185,276 annotations for 17,314 genes in 2017, annotation inequality in GO has increased from a Gini coefficient of 0.25 in 2001 to 0.47 in 2017 (Fig. 1A) with tight confidence intervals (Figure S1A). We compared inequality in GOA data using eight inequality metrics: Gini coefficient, Ricci-Schutz coefficient, Atkinson’s measure, Kolm’s measure, Theil’s entropy, coefficient of variation, squared coefficient of variation, and generalized entropy. We observed increases in inequality over time irrespective of the metric used (Figure S1B). We used the Gini coefficient for the remainder of this manuscript since it demonstrated the most conservative estimate of the increase in inequality. Similarly, GOA inequality trends are not substantially affected by the inclusion or exclusion of particular types of annotations or ontology terms (Figure S1C).

Figure 1.

Inequality in gene annotations. (A) We measured the Gini coefficient across a variety of gene annotation resources. (B) We compared the growth in the Gini coefficient of the Gene Ontology to different models of increasing and decreasing inequality. See also Figure S1.

We simulated changes in GOA equality using the first GO release as a baseline measurement. We estimated how inequality levels would have changed under different models, including equal growth across genes, growth consistent with the initial levels of inequality, and growth increasingly biased towards genes that began with many annotations. When we compared these different trajectories, we observed that the actual changes in inequality most closely matched the models of increasingly biased growth (Fig. 1B). Our findings further validate that genes with existing annotations continue to receive even more annotations18.

Annotation inequality persists across organisms and databases

We computed annotation inequality in 12 other organisms over time for comparison including arabidopsis, chicken, cow, dicty, dog, fly, mouse, pig, rat, worm, yeast, and zebrafish (Figure S1A). When comparing the first version for each organism, human annotations exhibited the second greatest level of equality. In the most current versions of Gene Ontology annotations, humans exhibit the fourth highest inequality. The longitudinal trends varied across organisms, including organisms with both increasing and decreasing inequalities. Mouse and rat, the primary model organisms for human disease, exhibit increases in Gene Ontology annotations that are consistent with the patterns observed in the human data.

We examined other gene annotation databases to ensure that the observed phenomena was not specific to the GO. Other pathway databases, including Reactome (Gini = 0.33)3 and the CTD Pathway (Gini = 0.47)4, have a similarly high level of inequality (Fig. 1A). Indeed, every gene annotation resource we examined displayed a similarly high level of annotation inequality, including CTD chemical-gene associations (Gini = 0.63)4, PDB 3D protein structures (gini = 0.68)6, DrugBank drug-gene associations (Gini = 0.70)5, GeneRIF gene publication annotations (Gini = 0.79), and Pubpular disease-gene publication associations (Gini = 0.82)7,19. When considering the number of annotations pooled across all these databases, global gene annotation Gini coefficient was 0.63.

Annotation inequality bias affects biomedical research

Next, we explored whether disease research may be affected by the inequality in gene annotation databases. Concerns that most published findings are false20, many results are inflated21, and research funding is being wasted22,23 have led to a number of proposals for reproducible and clinically relevant findings24–26. We have previously described a multi-cohort analysis framework27–29 that leverages biological and technical heterogeneity across multiple independent datasets to identify robust disease signatures. Using this framework, we have repeatedly demonstrated that it can identify robust disease signatures across a broad spectrum of diseases including organ transplant27, infections30–33, autoimmune disease34, cancer35–37, vaccination38, and neurodegenerative diseases39 for identifying diagnostic and prognostic markers, novel drug targets, and repurposing FDA-approved drugs.

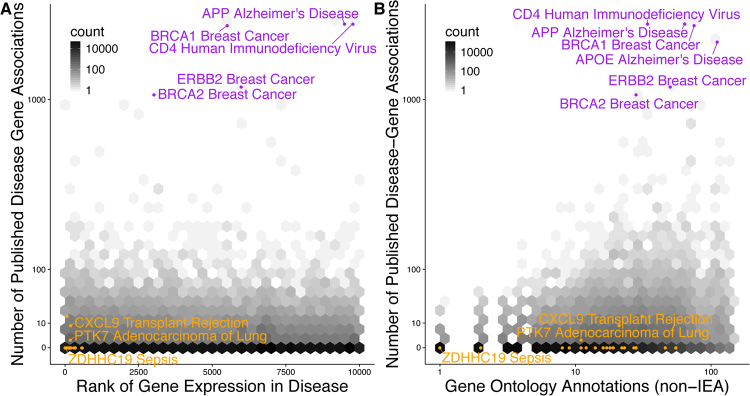

In our manually curated meta-analyses of 104 distinct human conditions, we have integrated transcriptome data from over 41,000 patients and 619 studies to calculate an effect size for disease-gene associations28. Our analyses included diverse classes of human conditions such as cancer, autoimmune disease, viral infection, neurodegenerative and psychiatric disorders, pregnancy, and obesity. For these conditions, we extracted all disease gene associations with at least ten publications7,19. Published disease-gene associations exhibited no significant correlation with differential gene expression false discovery rate (FDR) rank (Spearman’s correlation = −0.003, p = 0.836, Fig. 2A) Overall, only 19.5% of published disease-gene associations were identified in gene expression analyses at a FDR of 5% that is consistent with previous publications that have successfully replicated between 11–25% of research studies40,41 (Figure S2A).

Figure 2.

Published Disease-Gene Associations Not Reflected in Molecular Data. (A) The number of publications for every disease-gene pair was not significantly correlated with the gene expression multicohort analysis effect size FDR rank [Spearman’s correlation = −0.003, p = 0.836]. (B) The number of publications for every disease-gene pair correlated with the number of non-inferred from electronic annotation (non-IEA) Gene Ontology annotations [Spearman’s correlation = 0.110, p = 2.1e–16]. Orange points represent disease-gene associations published in our prior meta-analyses27,30,37. Purple points have at least 1000 publications. See also Figure S2.

To observe whether this phenomenon was specific to gene expression, we extracted genome wide significant single nucleotide polymorphisms (SNPs) from the GWAS catalog42. We observed a non-significant correlation between the number of publications and SNP p-values, indicating a lack of concordance between genetic mutations and disease-gene publications (Spearman’s correlation = 0.017, p = 0.836, Figure S2B).

Based on these results, we hypothesized that the lack of correlation with molecular evidence may be an artifact of research bias towards well-characterized genes. Therefore, we examined correspondence between publications about a disease-gene pair and existing knowledge about that gene as indicated by the number of GO annotations. Indeed, the number of GO annotations for a gene of interest was significantly correlated with the published disease-gene associations (Spearman’s correlation = 0.110, p = 2.1e-16, Fig. 2B), but not with gene expression effect size FDR rank in disease (Spearman’s correlation = −0.023, p = 0.080, Figure S2C)2.

Many of the highly published disease-gene associations may have been studied for reasons that would not be directly reflected in gene expression analysis, including BRCA1 in breast cancer and CD4 in human immunodeficiency virus. The more troubling bias occurs when associations with strong molecular evidence have no publication record. Disease-gene associations we have reported in our published meta-analyses were typically novel findings with few Gene Ontology annotations, despite having extremely low false discovery rates and high effect sizes27,30,35 (orange points in Fig. 2). We observed similar patterns when we performed the same analysis on similar publication and GWAS data from HuGE Navigator43,44 (Figure S2D–F).

Discussion

Collectively, our results provide an evidence of a strong research bias in literature that focuses on well-annotated genes instead of those with the most significant disease relationship in terms of both expression and genetic variation. We show that the inequality follows a “rich-getting-richer” pattern, where annotation growth is biased towards genes that were richly annotated in the initial versions of GO. We believe this stems from the typical experimental design. To illustrate this, consider an omics experiment that generates a list of hundreds or thousands of interesting genes. To interpret these genes, researchers use GO and pathway analysis tools. The researchers then generate targeted hypotheses for validation by interpreting the list of significant GO terms, focusing the genes or proteins annotated with that GO term. The researchers learn more about those targeted genes, leading to additional GO annotations for the already annotated genes. In this process, the list of unannotated genes is simply ignored because pathway analysis tools cannot map them to any GO terms. Hence, the self-perpetuating cycle of inequality continues.

While focusing research on the best characterized genes may be natural because it is easy to formulate a mechanistic hypothesis of the gene’s function in disease, we propose that the researchers in the era of omics should instead allow data to drive their hypotheses. We have repeatedly shown that expanding research outside of the streetlight of well characterized genes identifies novel disease-gene relationships35–37, identifies FDA-approved drugs that can be repurposed for other diseases27, and identifies clinically translatable diagnostic and prognostic disease signatures27,30–34,39. For example, we have previously identified PTK7 as causally involved in non-small cell lung cancer37. At the time of publication, PTK7 was labelled as an orphan tyrosine kinase receptor. In a very short span, this finding was transformed into an antibody-drug conjugate targeting PTK7 that induced sustained tumor regression, outperformed standard-of-care chemotherapy, and reduced frequency of tumor-initiating cells in a preclinical study45. A Phase 1 clinical trial (NCT02222922) of PTK7 antibody-drug conjugate, PF-06647020, has already completed with acceptable and manageable safety profile, and is now being considered for further clinical development. To enable researchers to pursue data-driven hypotheses, we have made our rigorously validated gene expression multicohort analysis data publicly available (http://metasignature.stanford.edu) where it may be explored based on either diseases or genes of interest29,46. Focusing on genes with the strongest molecular evidence instead of the most annotations would enable researchers to break the self-perpetuating annotation inequality cycle that results in research bias.

Methods

Inequality metrics calculations

We used the R package ineq to compute eight inequality metrics: (1) Gini coefficient, (2) Ricci-Schutz coefficient, (3) Atkinson’s measure, (4) Kolm’s measure, (5) Theil’s entropy, (6) coefficient of variation, (7) squared coefficient of variation, and (8) generalized entropy.

Gini coefficient

The R package ineq47 calculates the Gini coefficient as:

| G=∑i=1n∑j=1n|xi−xj|2n∑i=1nxi | 1 | | --------------------------------- | - |

where n is the number of genes and x i is the number of annotations for a gene i17. We included all human genes with at least one annotation in the Gini calculations.

List of human gene names

We used the Entrez Gene list downloaded in February 2017 of 20,698 current, protein-coding, human genes as our source of human genes.

Gene Ontology Annotations data

We calculated the number of annotations for each human gene in the Gene Ontology2. in every version of GO annotations since 2001 that was available at http://http.ebi.ac.uk/pub/databases/GO/goa/old. Duplicate annotations that only differ in evidence codes were counted once.

We examined the Gini coefficient for the different classes of evidence codes (experimental, computational analysis, author statement, curatorial statement, and automatically assigned) and namespaces (cellular component, biological process, molecular function). We found no substantial differences in the Gini coefficient values and trends regardless of the terms being considered (Figure S1D). To focus on terms with the strongest evidence, the remainder of our manuscript excluded the evidence codes IEA, ND, and NR8. To focus on terms related to functional understanding of genes, we only considered the biological process and molecular function GO namespaces.

GOA for other organisms

We downloaded historic Gene Ontology annotation data for all 12 organisms available from http://http.ebi.ac.uk/pub/databases/GO/goa/old/. These organisms included arabidopsis, chicken, cow, dicty, dog, fly, mouse, pig, rat, worm, yeast, and zebrafish.

Confidence intervals

Using bootstrap resampling, we calculated 95% confidence intervals around our Gini coefficients based on 1000 permutations of each version of the human Gene Ontology annotation data [Figure S1B].

Modeling Gini coefficient over time

We used the first available version of the human GO annotations (http://http.ebi.ac.uk/pub/databases/GO/goa/old/HUMAN/gene_association.goa_human.1.gz) as our baseline measurement in all models. We modeled every release of GO under different growth models, distributing the number of new annotations from that release across genes according to the model. We define our update step as:

ngeneij+1=ngeneij+nj+1∗pgenei

where:

ngeneij is the number of annotations for gene_i_ at timestep j

n j + 1 is the number of annotations added in version j + 1 (subject to n ≥ 0).

pgenei be the probability of annotation being assigned to gene_i_

pgenei0 be the initial proportion of annotations assigned to gene_i_ in the initial release of GO (ngenei0n0).

For each model, we define our pgenei as follows:

Exponential initial weight. pgenei=exppgenei0. Models inequality growth consistent with exponential initial probability.

Cubic initial weight. pgenei=(pgenei0)3. Models inequality growth consistent with cubic initial probability.

Squared weight. pgenei=(pgenei−1)2. Models inequality growth consistent with squared probability from previous round.

Squared initial weight. pgenei=(pgenei0)2. Models inequality growth consistent with squared initial probability.

Initial weight. pgenei=pgenei0. Models inequality growth consistent with initial probability.

Initial weight random focus. pgenei=prandomgenei0 where prandomgenei0 is the initial probability from a randomly selected gene. Model assumes inequal growth in annotations consistent with the initial probabilities but randomized across genes in every version of GO.

Equal weight. pgenei=nj+1|genes| where |genes| is the number of genes in GO. Models even growth of annotations across genes.

Other gene annotation database Gini coefficient calculation

Pubpublar. We manually downloaded gene-publication data in August 2016 from Pubpular for 102 of the diseases in our gene expression database7,19. “Pubpular Total” refers to the inequality of gene-publication data across all diseases. “Pubpular Median” refers to the median inequality of gene-publication for each disease.

Reactome. We downloaded Reactome pathway data from the complete database release 593. We downloaded data in MySQL format and parsed pathways into UniProt identifiers using custom scripts. We converted UniProt identifiers to gene names using the UniProt identifier conversion tool48. We calculated the number of pathways including each gene name.

CTD. We downloaded the CTD4 data in February 2017, with the chemical-gene associations and the gene-pathway associations. We calculated the number of chemical-gene and gene-pathway associations for each gene name.

GeneRIFs. We downloaded GeneRIFs from the NCBI in February 2017. We included all human GeneRIFS (Tax ID: 9606). We calculated the number of GeneRIFs for each gene.

Protein Data Bank. We downloaded the gene names associated with protein structures from the Protein Data Bank6 in February 2017 and calculated the number of structures per gene name.

DrugBank. We downloaded the DrugBank5 database version 5.0.5 and identified all drugs with known activities on human genes. We calculated the number of drugs targeting each gene.

Gene expression data collection and multicohort analysis

Gene expression multicohort analysis data was compiled from the MetaSignature database28. MetaSignature includes data from manual multicohort analysis of over 41,000 samples, 619 studies, and 104 diseases. Briefly, relevant data were downloaded from Gene Expression Omnibus and ArrayExpress49,50. Cases and controls were manually labeled for each disease and multicohort analysis was performed using the MetaIntegrator package28. We used the Hedges’ g summary effect size, standard error, and false discovery rate which the MetaIntegrator package calculates for every gene.

Data collection for disease-gene publications and SNP data

We downloaded the number of publications for each disease-gene relationship from PubPular and HuGE Navigator in August 2016 for as many of the 104 disease in MetaSignature as were present in the databases (102 in PubPular and 81 in HuGE)7,19,43. PubPular gave the top 261 gene associations, and HuGE gave all known associations. For all correlations, we only considered disease-gene associations with at least 10 publications to limit false positive associations.

We downloaded disease-SNP relationships, including gene mappings, odds ratios, and p-values, from the GWAS Catalog and HuGE Navigator for 61 and 54, respectively, of the 103 diseases in MetaSignature42,44. From Gene Ontology, we calculated the counts of non-Inferred from Electronic Annotation annotations for all the genes in the MetaSignature database2. The Spearman rank correlation was used for all correlations.

Our plots show the top 10,000 gene associations for each disease by effect size FDR rank. Correlation calculations do not include a similar limit.

Code and data availability

The code and data we used to run this analysis is available at https://khatrilab.stanford.edu/researchbias and https://figshare.com/projects/Gene_annotation_bias_impedes_biomedical_research/27124 (10.6084/m9.figshare.5660824.v2 and 10.6084/m9.figshare.5648332.v6).

Electronic supplementary material

Acknowledgements

We thank Paul J. Utz for feedback about the manuscript and figures and Alex Schrenchuk for computer support. WAH is funded by the National Science Foundation Graduate Research Fellowship under Grant No. DGE-114747 and NIH NLM 4T15LM007033-33. PK is funded by the the Bill and Melinda Gates Foundation, and NIAID grants 1U19AI109662, U19AI057229 and U54I117925.

Author Contributions

Conceptualization, W.A.H., A.T. and P.K.; Methodology, W.A.H., A.T. and P.K.; Software, W.A.H. and A.T.; Investigation, W.A.H. and A.T.; Data Curation, W.A.H. and A.T.; Writing- Original Draft, W.A.H. and P.K.; Writing- Reviewing and Editing, W.A.H., A.T. and P.K.; Visualization, W.H.; Funding Acquisition, P.K.

Competing Interests

The authors declare that they have no competing interests.

Footnotes

Electronic supplementary material

Supplementary information accompanies this paper at 10.1038/s41598-018-19333-x.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Khatri P, Sirota M, Butte AJ. Ten years of pathway analysis: current approaches and outstanding challenges. PLoS computational biology. 2012;8:e1002375. doi: 10.1371/journal.pcbi.1002375. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Ashburner M, et al. Gene ontology: tool for the unification of biology. The Gene Ontology Consortium. Nature genetics. 2000;25:25–9. doi: 10.1038/75556. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Croft D, et al. The Reactome pathway knowledgebase. Nucleic acids research. 2014;42:472–7. doi: 10.1093/nar/gkt1102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Davis AP, et al. The Comparative Toxicogenomics Database’s 10th year anniversary: update 2015. Nucleic acids research. 2015;43:914–20. doi: 10.1093/nar/gku935. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Wishart DS, et al. DrugBank: a comprehensive resource for in silico drug discovery and exploration. Nucleic acids research. 2006;34:668–72. doi: 10.1093/nar/gkj067. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Berman HM, et al. The Protein Data Bank. Nucleic acids research. 2000;28:235–42. doi: 10.1093/nar/28.1.235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Maggie Lam. PubPular: Identifying the focus of biomedical research. https://pubpular.shinyapps.io/PubPular/.

- 8.Yon Rhee S, Wood V, Dolinski K, Draghici S. Use and misuse of the gene ontology annotations. Nature Reviews Genetics. 2008;9:509–515. doi: 10.1038/nrg2363. [DOI] [PubMed] [Google Scholar]

- 9.Gillis J, Pavlidis P. “Guilt by Association” Is the Exception Rather Than the Rule in Gene Networks. PLoS Computational Biology. 2012;8:e1002444. doi: 10.1371/journal.pcbi.1002444. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Gillis J, Ballouz S, Pavlidis P. Bias tradeoffs in the creation and analysis of protein–protein interaction networks. Journal of Proteomics. 2014;100:44–54. doi: 10.1016/j.jprot.2014.01.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Pandey AK, Lu L, Wang X, Homayouni R, Williams RW. Functionally Enigmatic Genes: A Case Study of the Brain Ignorome. PLoS ONE. 2014;9:e88889. doi: 10.1371/journal.pone.0088889. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Dolgin E. The most popular genes in the human genome. Nature. 2017;551:427–431. doi: 10.1038/551S15a. [DOI] [PubMed] [Google Scholar]

- 13.Freedman, D. H. Why Scientific Studies Are So Often Wrong: The Streetlight Effect. Discover Magazine1 (2010).

- 14.Battaglia M, Atkinson MA. The streetlight effect in type 1 diabetes. Diabetes. 2015;64:1081–90. doi: 10.2337/db14-1208. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Bulgheresi S. Environmental Microbiology. 2016. Bacterial cell biology outside the streetlight; pp. 2305–2318. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Rodriguez-Esteban R, Jiang X. Differential gene expression in disease: a comparison between high-throughput studies and the literature. BMC Medical Genomics. 2017;10:59. doi: 10.1186/s12920-017-0293-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Gini, C. & C. Variabilità e mutabilità. Reprinted in Memorie di metodologica statistica (Ed. Pizetti E, Salvemini, T). Rome: Libreria Eredi Virgilio Veschi (1912).

- 18.Gillis J, Pavlidis P. Bioinformatics. 2013. Assessing identity, redundancy and confounds in Gene Ontology annotations over time; pp. 476–482. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Lam, M. P. Y. et al. Data-Driven Approach To Determine Popular Proteins for Targeted Proteomics Translation of Six Organ Systems. Journal of proteome research Web, http://www.ncbi.nlm.nih.gov/pubmed/27356587. 10.1021/acs.jproteome.6b00095 (2016). [DOI] [PMC free article] [PubMed]

- 20.Ioannidis JPA. Why most published research findings are false. PLoS medicine. 2005;2:e124. doi: 10.1371/journal.pmed.0020124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Ioannidis JPA. Why Most Discovered True Associations Are Inflated. Epidemiology. 2008;19:640–648. doi: 10.1097/EDE.0b013e31818131e7. [DOI] [PubMed] [Google Scholar]

- 22.Macleod, M. R. et al. Biomedical research: increasing value, reducing waste (2014). [DOI] [PubMed]

- 23.Collins FS, Tabak LA. Policy: NIH plans to enhance reproducibility. Nature. 2014;505:612–613. doi: 10.1038/505612a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Begley CG, Ellis LM. Raise standards for preclinical cancer research. Nature. 2012;483:531–533. doi: 10.1038/483531a. [DOI] [PubMed] [Google Scholar]

- 25.Wasserstein RL, Lazar NA. The ASA’s Statement on p-Values: Context, Process, and Purpose. The American Statistician. 2016;70:129–133. doi: 10.1080/00031305.2016.1154108. [DOI] [Google Scholar]

- 26.Myint L, Leek JT, Jager LR. Five ways to fix statistics. Nature. 2017;551:557–559. doi: 10.1038/d41586-017-07522-z. [DOI] [PubMed] [Google Scholar]

- 27.Khatri P, et al. A common rejection module (CRM) for acute rejection across multiple organs identifies novel therapeutics for organ transplantation. The Journal of experimental medicine. 2013;210:2205–21. doi: 10.1084/jem.20122709. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Haynes, W. A. et al. Empowering Multi-Cohort Gene Expression Analysis to Increase Reproducibility. Pac Symp Biocomput Web, http://biorxiv.org/content/early/2016/08/25/071514. 10.1101/071514 (2017). [DOI] [PMC free article] [PubMed]

- 29.Sweeney, T. E., Haynes, W. A., Vallania, F., Ioannidis, J. P. &Khatri, P. Methods to increase reproducibility in differential gene expression via meta-analysis. Nucleic acids research Web, gkw797, http://www.ncbi.nlm.nih.gov/pubmed/27634930. 10.1093/nar/gkw797 (2016). [DOI] [PMC free article] [PubMed]

- 30.Sweeney TE, Shidham A, Wong HR, Khatri P. A comprehensive time-course-based multicohort analysis of sepsis and sterile inflammation reveals a robust diagnostic gene set. Science Translational Medicine. 2015;7:287ra71. doi: 10.1126/scitranslmed.aaa5993. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Andres-Terre M, et al. Integrated, Multi-cohort Analysis Identifies Conserved Transcriptional Signatures across Multiple Respiratory Viruses. Immunity. 2015;43:1199–1211. doi: 10.1016/j.immuni.2015.11.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Sweeney TE, Braviak L, Tato CM, Khatri P. Genome-wide expression for diagnosis of pulmonary tuberculosis: a multicohort analysis. The Lancet Respiratory Medicine. 2016;4:213–224. doi: 10.1016/S2213-2600(16)00048-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Sweeney TE, Wong HR, Khatri P. Robust classification of bacterial and viral infections via integrated host gene expression diagnostics. Science translational medicine. 2016;8:346ra91. doi: 10.1126/scitranslmed.aaf7165. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Lofgren, S. et al. Integrated, multicohort analysis of systemic sclerosis identifies robust transcriptional signature of disease severity. JCI Insight1, https://insight.jci.org/articles/view/89073. 10.1172/jci.insight.89073 (2016). [DOI] [PMC free article] [PubMed]

- 35.Mazur, P. K. et al. SMYD3 links lysine methylation of MAP3K2 to Ras-driven cancer. Nature advance on, www.nature.com/articles/nature13320. 10.1038/nature13320 (2014). [DOI] [PMC free article] [PubMed]

- 36.Mazur PK, et al. Combined inhibition of BET family proteins and histone deacetylases as a potential epigenetics-based therapy for pancreatic ductal adenocarcinoma. Nature Medicine. 2015;21:1163–1171. doi: 10.1038/nm.3952. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Chen R, et al. A meta-analysis of lung cancer gene expression identifies PTK7 as a survival gene in lung adenocarcinoma. Cancer Research. 2014;74:2892–2902. doi: 10.1158/0008-5472.CAN-13-2775. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Team, H.-C. S. P. & Consortium, H.-I. Multicohort analysis reveals baseline transcriptional predictors of influenza vaccination responses. Science Immunology 1–14 (2017). [DOI] [PMC free article] [PubMed]

- 39.Li MD, Burns TC, Morgan AA, Khatri P. Integrated multi-cohort transcriptional meta-analysis of neurodegenerative diseases. Acta neuropathologica communications. 2014;2:93. doi: 10.1186/s40478-014-0093-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Prinz F, Schlange T, Asadullah K. Believe it or not: how much can we rely on published data on potential drug targets? Nature Reviews Drug Discovery. 2011;10:712–712. doi: 10.1038/nrd3439-c1. [DOI] [PubMed] [Google Scholar]

- 41.Begley CG, Ellis LM. Drug development: Raise standards for preclinical cancer research. Nature. 2012;483:531–3l. doi: 10.1038/483531a. [DOI] [PubMed] [Google Scholar]

- 42.Welter D, et al. The NHGRI GWAS Catalog, a curated resource of SNP-trait associations. Nucleic acids research. 2014;42:1001–6. doi: 10.1093/nar/gkt1229. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Yu W, Clyne M, Khoury MJ, Gwinn M. Phenopedia and Genopedia: disease-centered and gene-centered views of the evolving knowledge of human genetic associations. Bioinformatics. 2010;26:145–146. doi: 10.1093/bioinformatics/btp618. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Yu W, et al. GWAS Integrator: a bioinformatics tool to explore human genetic associations reported in published genome-wide association studies. European Journal of Human Genetics. 2011;19:1095–1099. doi: 10.1038/ejhg.2011.91. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Damelin M, et al. A PTK7-targeted antibody-drug conjugate reduces tumor-initiating cells and induces sustained tumor regressions. Science translational medicine. 2017;9:eaag2611. doi: 10.1126/scitranslmed.aag2611. [DOI] [PubMed] [Google Scholar]

- 46.Haynes, W., Tomczak, A. &Khatri, P. Gene annotation bias impedes biomedical research. Pacific Symposium on Biocomputing, http://biorxiv.org/content/early/2017/05/02/133108 (2017). [DOI] [PMC free article] [PubMed]

- 47.Zeileis, A. ineq: Measuring Inequality, Concentration, and Poverty, https://cran.r-project.org/package=ineq. (2014).

- 48.UniProt: the universal protein knowledgebase. Nucleic Acids Research45, D158–D169, https://academic.oup.com/nar/article-lookup/doi/10.1093/nar/gkw1099 (2017). [DOI] [PMC free article] [PubMed]

- 49.Brazma A, et al. ArrayExpress–a public repository for microarray gene expression data at the EBI. Nucleic Acids Research. 2003;31:68–71. doi: 10.1093/nar/gkg091. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Edgar R. Gene Expression Omnibus: NCBI gene expression and hybridization array data repository. Nucleic Acids Research. 2002;30:207–210. doi: 10.1093/nar/30.1.207. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.