Branch-Specific Plasticity Enables Self-Organization of Nonlinear Computation in Single Neurons (original) (raw)

Abstract

It has been conjectured that nonlinear processing in dendritic branches endows individual neurons with the capability to perform complex computational operations that are needed to solve for example the binding problem. However, it is not clear how single neurons could acquire such functionality in a self-organized manner, because most theoretical studies of synaptic plasticity and learning concentrate on neuron models without nonlinear dendritic properties. In the meantime, a complex picture of information processing with dendritic spikes and a variety of plasticity mechanisms in single neurons has emerged from experiments. In particular, new experimental data on dendritic branch strength potentiation in rat hippocampus have not yet been incorporated into such models. In this article, we investigate how experimentally observed plasticity mechanisms, such as depolarization-dependent spike-timing-dependent plasticity and branch-strength potentiation, could be integrated to self-organize nonlinear neural computations with dendritic spikes. We provide a mathematical proof that, in a simplified setup, these plasticity mechanisms induce a competition between dendritic branches, a novel concept in the analysis of single neuron adaptivity. We show via computer simulations that such dendritic competition enables a single neuron to become member of several neuronal ensembles and to acquire nonlinear computational capabilities, such as the capability to bind multiple input features. Hence, our results suggest that nonlinear neural computation may self-organize in single neurons through the interaction of local synaptic and dendritic plasticity mechanisms.

Introduction

Dendritic processing in pyramidal neurons is highly nonlinear, exhibiting several types of dendritic spikes (Losonczy and Magee, 2006; Sjöström et al., 2008; Larkum et al., 2009). It has been hypothesized that such dendritic nonlinearities enhance processing capabilities at the single-neuron level (Mel, 1994; Häusser and Mel, 2003) that could be important for the binding of object features, for the participation of single neurons in several ensembles, for various memory processes (Morita, 2008; Wu and Mel, 2009), and for sequence detection (Branco et al., 2010). However, the computational advantage of nonlinear branches for the organism is unclear, because similar functional properties could be achieved by networks of neurons without dendritic nonlinearities. In this article, we investigate one striking advantage of nonlinear branch processing, namely that it enables single neurons to acquire nonlinear functionality with local plasticity mechanisms. Thus, the independence of any one dendritic branch results in the entire dendritic tree behaving like a network, and critically, any one branch is able to optimize responsiveness to a specific input through plasticity mechanisms that take aspects of the behavior of the whole dendritic arbor into account.

A rich and diverse set of plasticity mechanisms has been identified in the pyramidal neuron (Sjöström et al., 2008). Spike-timing-dependent plasticity (STDP) depends on the precise timing of presynaptic and postsynaptic spikes (Markram et al., 1997; Bi and Poo, 1998). However, pairing frequency and local dendritic depolarization also influence synaptic plasticity (Artola et al., 1990; Ngezahayo et al., 2000; Sjöström et al., 2001). Recent experimental data (Losonczy et al., 2008) also show that the coupling between dendritic branches and the soma (via dendritic spikes) is adapted through a mechanism called “branch-strength potentiation” (BSP), and evidence exists that BSP is relevant in vivo (Makara et al., 2009). Finally, synaptic and dendritic plasticity also depend on neuromodulatory input (Reynolds and Wickens, 2002; Gu, 2003; Dringenberg et al., 2007; Losonczy et al., 2008).

It is unclear how these plasticity mechanisms interact to enable robust learning and self-organization in neurons and networks. In this article, we show that synaptic plasticity mechanisms that depend on membrane depolarization enable a neuron model with nonlinear dendritic integration to acquire complex functionality in a self-organized manner. These self-organization capabilities are made possible by an emerging competition between branches for synaptic activation. Specifically, we show in a theoretical analysis of a simplified model that the plasticity mechanisms give rise to a max-operation such that only the most strongly activated dendritic branches specialize to a given input pattern. Thus, learning in our model induces a weight distribution in which stored patterns create clusters of strong synapses at individual dendritic branches. A neuron can in this way self-organize to solve a binding problem. This self-organization is possible if the presence of object features is coded through increased firing rates of neuronal ensembles or through increased synchrony in such ensembles. BSP can in this context serve as a mechanism for boosting dendritic responses to stored patterns, as suggested by Losonczy et al. (2008), and homeostatically regulate the effectivity of dendritic spikes.

Materials and Methods

Specific implementation of the neuron model for computer simulations

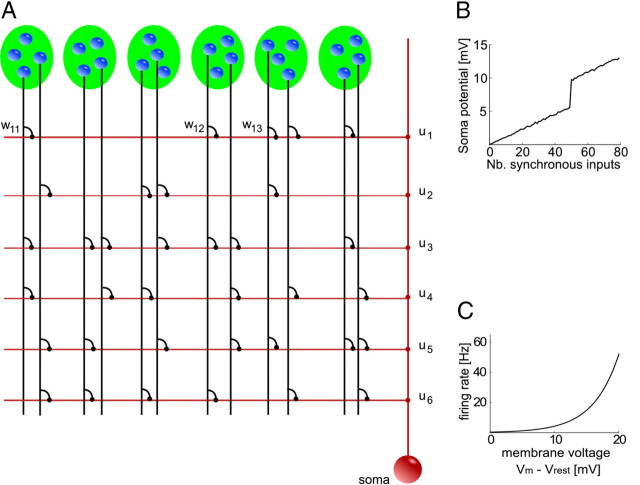

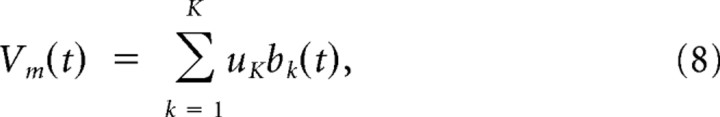

In this article, we use a stochastic spiking neuron model with dendritic nonlinearities as illustrated in Figure 1.

Figure 1.

Schema of the neuron model with dendritic branches. A, The neuron is schematically illustrated in red. It receives input from presynaptic neurons (blue spheres), which participate in several neuronal ensembles (indicated by green ellipses). The neuron is composed of a set of independent branches (horizontal red lines) and a soma (red sphere). Axons of presynaptic neurons (vertical black lines) connect to the branches via synapses (black dots). Synaptic inputs are weighted by synaptic weights wkj. Dendritic spikes are weighted by branch strengths uk (red dots). The soma sums the dendritic activations and the weighted dendritic spikes. B, Dendritic nonlinearity. At a certain local dendritic depolarization, a dendritic spike is initiated, leading to nonlinear dendritic integration. Shown is the modeled somatic membrane voltage in dependence of the number of synchronously (in a time window of 10 ms) activated synapses. Compare with Losonczy and Magee (2006, their Fig. 1G). C, Somatic nonlinearity. The neuron fires stochastically with instantaneous firing rate ρ(t) that depends exponentially on the somatic membrane potential Vm(t).

Nonlinear summation of EPSPs at dendritic branches.

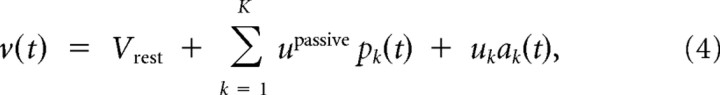

The local potential bk(t) at a branch k at time t in our model is constituted by two components, a passive component pk(t) and an active component ak(t). The passive component pk(t) is given by the linear summation of incoming postsynaptic potentials:

where xkj is the set of spike times of input j to branch k, and ε is the EPSP that results from one input spike. This means that all EPSPs arriving at a dendritic branch are added up linearly and propagated passively. wkj is the synaptic efficacy of a synapse from input j to branch k. The postsynaptic potential ε is modeled by an α function (for parameters, see Table 2). In addition to the passive component pk(t), the membrane potential bk(t) at branch k includes an active nonlinear component ak(t) of size β0, which is activated whenever pk(t) is above a threshold ϕdend:

where Θ denotes the Heaviside step function. The branch potential bk(t) is given by the sum of the local synaptic activation pk(t) at the branch and the additional contribution from the dendritic spike ak(t), leading to nonlinear branch characteristics as illustrated in Figure 2:

The passive component of the branch potential pk(t) is conducted to the soma, and its impact on the somatic potential is scaled by an attenuation factor _u_passive < 1. The dendritic spike is scaled by a different weighting factor, the branch strength uk, before it reaches the soma. According to recent experimental findings, this branch strength can vary dramatically between branches and is subject to plasticity (Losonczy et al., 2008) (for the plasticity model used in this study, see below). Thus, the somatic membrane potential before refractory effects are taken into account is given by the sum of these weighted components from all branches k:

where _V_rest denotes the resting potential of the neuron.

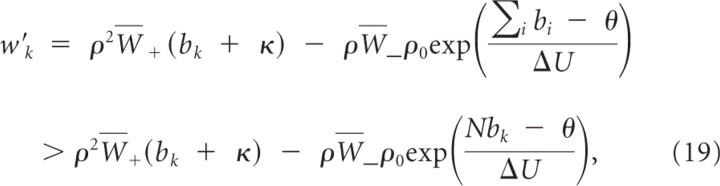

Table 2.

Parameters for computer simulations

| Neuron parameters | ||

|---|---|---|

| θ | Neuron stochastic threshold | 20 mV |

| ρ0 | Neuron firing rate at threshold | 52 Hz |

| Δ_U_ | Width of spike trigger zone | 4 mV |

| _t_refract | Refractory period | 2 ms |

| ηreset | Reset kernel | ηreset(s)=−10mVe−s20msΘ(s) |

| Synapse parameters | ||

| ε(s) | EPSP kernel | ε(s)=1.3mV[e−s20ms−e−s0.7ms]Θ(s) |

| _w_ini | Initial weight value | Uniformly distributed in [0.0025, 0.0225] |

| _w_max | Maximal weight value | 0.15 [−] |

| Branch parameters | ||

| β0 | Dendritic spike amplitude | 9 mV |

| ϕdend | Dendritic spike threshold | 7 mV |

| _u_ini | Initial branch strength | 0.5 [−] |

| upassive | Passive EPSP decay constant | 0.8 [−] |

| Plasticity parameters | ||

| η | Learning rate, pre-spike LTP | 0.004 mV−2 s−1 |

| κ | Offset for pre-spike LTP | 1 mV |

| A+ | STDP, LTP factor | 0.02 [−] |

| _A_− | STDP, LTD factor | 0.01 [−] |

| τ+ | STDP, LTP time constant | 16.8 ms |

| τ− | STDP, LTD time constant | 33.7 ms |

| ρ0LTP | STDP, rate dependency factor | 40 s−1 |

| ϕ+ | Threshold for LTP induction | 17 mV |

| ηbranch | Learning rate for BSP | 0.25 mV−1 s−1 |

| ϕ_b_ | Target potential for BSP | 30 mV |

| ϕstabilize | Threshold for adaptive learn rate | 16.5 mV |

| ηstabilize | Adaptive learn-rate stabilization factor | 0.97 [−] |

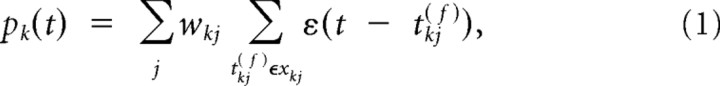

Figure 2.

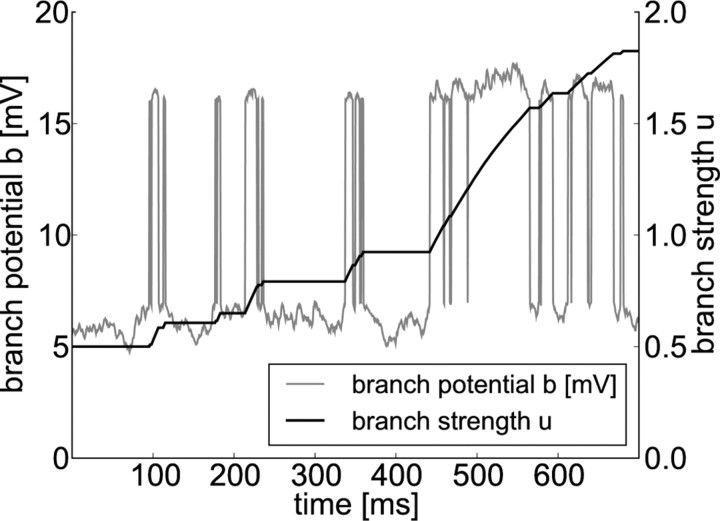

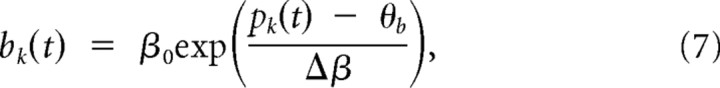

Nonlinear integration of synaptic input at dendritic branches and branch strength potentiation. The local potential at a dendritic branch k (gray line) is given by the sum of a passive component pk(t) and an active component ak(t). The value of the passive component is given by the linear sum of incoming EPSPs. Summation of EPSPs from many synapses that receive Poissonian input from active cell ensembles leads to temporal fluctuations of the potential. The active component models dendritic spikes and is nonzero whenever pk(t) is above a threshold of 7 mV. Such nonlinear events are visible as sharp increases in the dendritic potential. The temporal evolution of the corresponding branch strength uk is plotted in black. Dendritic spikes at the branch induce potentiation of its branch strength.

Model for somatic response.

The somatic spiking mechanism and reset behavior of our model is based on the model of Jolivet et al. (2006). To obtain the somatic membrane voltage Vm of the neuron, relative refractoriness is included by adding a reset kernel ηreset to the membrane voltage for each action potential. This reset kernel effectively adds a large negative contribution to the membrane voltage for times after the postsynaptic spike, which then decays exponentially over time. This can be described mathematically as follows:

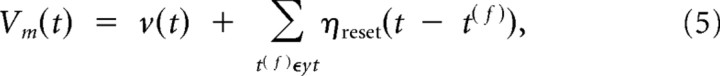

where yt denotes the set of output spikes of the neuron up to time t. The instantaneous firing rate of the neuron at time t is given by an exponential function of the membrane voltage:

where θ is the stochastic threshold, Δ_U_ is the width of the spike trigger zone, and ρ0 is the instantaneous rate at threshold. In other words, the firing rate of the neuron depends exponentially on its membrane potential (Fig. 1C). If the membrane potential is clamped to 20 mV above resting potential, this results in a mean firing rate of 52 Hz, a clamping at 25 mV already results in a mean firing rate of 100 Hz. Because the firing rate of the model depends only on the difference between the membrane voltage Vm and the stochastic firing threshold θ, we can arbitrarily set the resting potential to _V_rest = 0 mV and the threshold to θ = 20 mV (for other parameter values, see Table 2). Output spikes are generated by a Poisson process with this instantaneous firing rate. After each spike, the neuron enters an absolute refractory period of duration _t_refract during which no spike can be emitted. This firing mechanism implements a stochastic threshold that has been shown to approximate well the behavior of layer 5 pyramidal neurons of somatosensory cortex of male and female rats with the parameters used in this article (Jolivet et al., 2006).

Simplified model for theoretical analysis.

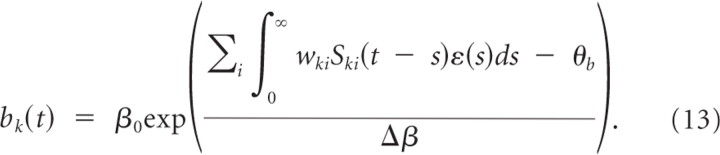

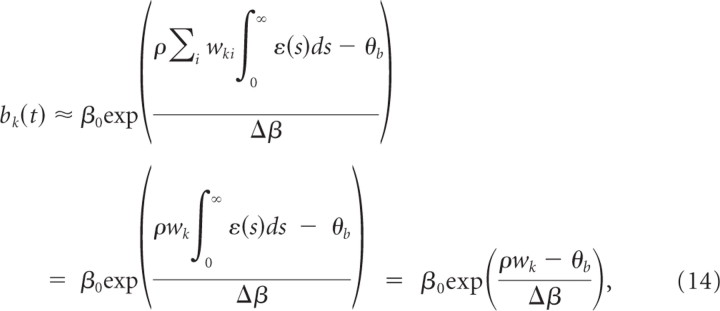

The model described above and further elaborated in Results includes a step-like nonlinearity in dendritic branches. This model was used in our simulations; it is, however, not suited for mathematical analysis. In a simplified model, the nonlinear dependence of the output bk(t) of branch k is modeled by an exponential branch nonlinearity,

and the branch outputs are linearly summed at the somatic site,

where uk now denotes the branch strength of branch k. In other words, in the simplified model, the branch voltage depends exponentially on the summed EPSPs. The membrane voltage at the soma is then given by the sum of all branch potentials. Reasonable firing behavior of this simplified model is achieved with parameters β0 = 25 mV, θ_b_ = 20 mV, Δβ = 3 mV. The advantage of this simplified model is that it allows a thorough analysis of the weight dynamics.

Plasticity mechanisms

STDP model.

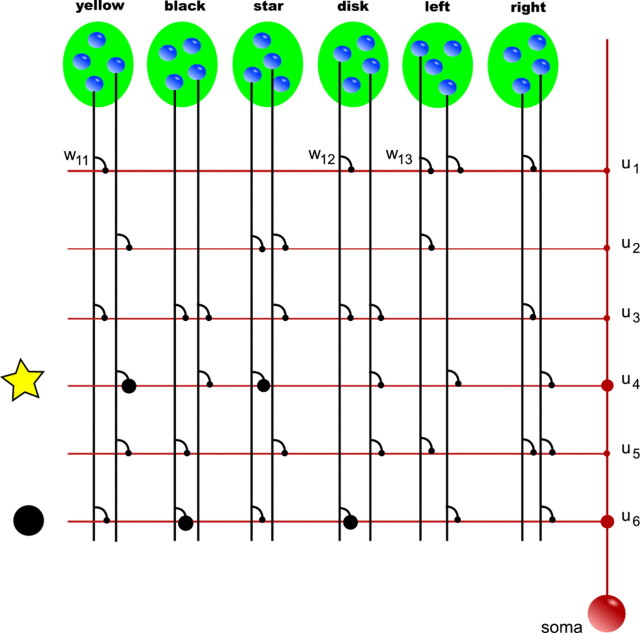

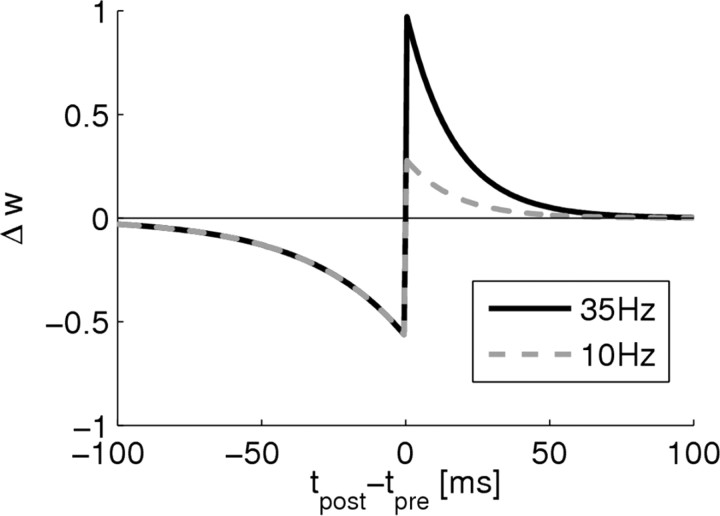

We adopt a standard model for STDP (Abbott and Nelson, 2000) in which the change Δ_w_ of the synaptic weight depends on the firing time _t_pre of the presynaptic neuron and the firing time t_post = t_pre + Δ_t of the postsynaptic neuron (Fig. 3). If the presynaptic action potential precedes the postsynaptic action potential in a time window of a few tens of milliseconds (Δ_t > 0), long-term potentiation (LTP) is induced. A reversed sequence of presynaptic and postsynaptic action potentials leads to long-term depression (LTD).

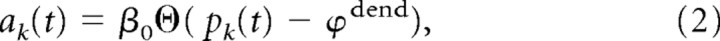

Figure 3.

Principle of feature binding in the neuron model. The neuron and presynaptic neuronal ensembles are indicated as in Figure 1. Neuronal ensembles code specific object features, such as color, shape, or movement direction. Neurons of the ensembles yellow and star have strong synaptic connections (the size of black dots indicates the strength of the synaptic connection wkj) to branch 4 of the neurons. Furthermore, strong synapses connect neurons from ensembles black and disk with branch 6. Thus, branch 4 is strongly depolarized by feature combination yellow star and branch 6 by feature combination black disk (indicated by symbols left to the branch). Dendritic spikes induced by this depolarization have a strong impact on the soma as a result of large branch strengths of branches 4 and 6 (the size of red dots indicates the branch strength value uk). Somatic activation is less strong for a black star or a yellow disk because they partly activate branches 4 and 6.

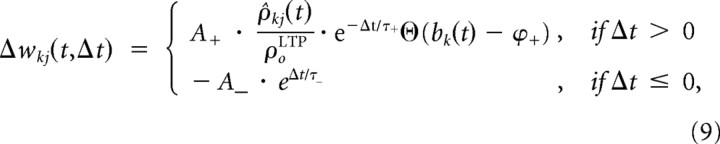

Recent experiments showed that STDP also depends on the depolarization level at the local dendritic site (Nevian and Sakmann, 2006; Sjöström and Häusser, 2006; Larkum and Nevian, 2008). More specifically, these findings suggest that LTP is preferentially induced at large local depolarizations. For example, it was shown by Sjöström and Häusser (2006) that LTD at distal synaptic inputs can be converted to LTP by either stronger stimulation that recruits more inputs (cooperativity) or strong extracellular input. In the same direction, Sjöström et al. (2001) showed that low-frequency pre-before-post pairings lead to LTP only for sufficiently strong EPSPs. Again, LTP could be recovered in their experiments by strong extracellular stimulation in addition to unitary EPSPs. Interestingly, this dependence on EPSP size was absent for LTD induced by post-before-pre pairings. Therefore, the model is extended by an induction threshold for LTP such that LTP is induced only if the local branch potential bk(t) exceeds a threshold ϕ+. Furthermore, Sjöström et al. (2001) showed that LTP depends approximately linearly on pairing frequency, whereas there is no such dependency of LTD below 40 Hz. In this study, the presynaptic and postsynaptic firing rates were always covaried. The relative contribution of each of these two factors is therefore unclear. We modeled frequency effects by a linear dependency of LTP on the estimated presynaptic firing rate ρ̂kj(t):

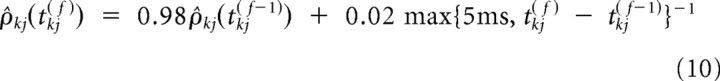

where Θ denotes the Heaviside step function, and ρ0LTP defines the linear scaling of the frequency dependence of LTP. A+ (A−) and τ+ (τ−) define the amount of weight change and the width of the exponential learning windows for LTP and (LTD), respectively. Summarizing, pre-before-post spike pairs induce LTP if the branch is sufficiently depolarized. The amount of LTP depends linearly on the presynaptic firing rate and exponentially on the time difference between the presynaptic and the postsynaptic spike. A presynaptic spike that follows a postsynaptic action potential leads to LTD (Fig. 3). Weights were clipped at 0 and a maximum value w_max. In the simulations, the presynaptic firing rate ρ_kj was estimated by a running average over inverse presynaptic interspike intervals and updated at presynaptic spike times t kj(2), t kj(3), t kj(4), … as

with initial value ρ̂kj(t kj(1)) = 10 Hz. The maximum operator in the denominator was included to avoid temporary large estimates. For times t between presynaptic spikes, ρ̂kj(t) was set to its value at the most recent presynaptic spike time. This means that effectively, ρ̂kj estimates the presynaptic firing rate by averaging over recent inverse interspike intervals.

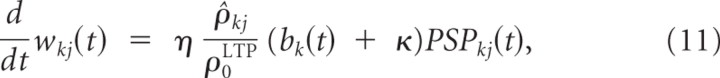

It is well known that pairing of presynaptic spikes with postsynaptic depolarization is often sufficient for the induction of LTP, i.e., a postsynaptic somatic spike is not necessary (Kelso et al., 1986; Gustafsson et al., 1987; Hardie and Spruston, 2009). We model LTP in the absence of postsynaptic somatic spiking by the following weight dynamics:

where η is a small learning rate, ρ̂kj is an estimate of the presynaptic firing rate as before, and ρ0LTP defines the linear scaling of the frequency dependence of LTP. PSP_kj_(t) is a low-pass filtered version of the presynaptic spike train (i.e., PSPkj(t) = Σ_f_ ε(t − _t_kj(f)) where t kj(f) denotes the _f_th spike time of the presynaptic neurons). The constant κ increases learning speed if branch activations are very small initially but is not crucial for the results. In other words, each presynaptic spike potentiates the synapse by an amount that depends linearly on the presynaptic firing rate and the local branch potential. Because the branch potential bk(t) is the sum of the local synaptic activation pk(t) and the additional contribution from the dendritic spike ak(t), synapses can be potentiated in the absence of dendritic spikes, although potentiation in the presences of a dendritic spike is substantially stronger. Some experimental data indicate that this form of plasticity needs dendritic spikes (Golding et al., 2002). Conversely, potentiation without dendritic spikes may be more pronounced in the presence of an increased concentration of ACh (Bröcher et al., 1992; Auerbach and Segal, 1994; Dringenberg et al., 2007). Also, a fairly large number of experimental data (Watt and Desai, 2010) support the existence of mechanisms for the so-called synaptic scaling, i.e., mechanisms that potentiate synapses of neurons whose average firing rate is very low, thereby bringing them back into a normal firing regimen. A mechanism of this type is also needed in the context of our model, and therefore a weak potentiation of synapses without postsynaptic firing or dendritic spikes is included in Equation 11.

As noted above, the contribution of the presynaptic rate in the reported influence of pairing frequency on STDP is still unclear. We therefore performed control simulations in which LTP did not depend on the presynaptic frequency, i.e., in which weight updates are performed without the factor ρ^kjρ0LTP in the learning rules 9 and 11. Removing this factor changes the learning speed as one side effect. To compensate, we adjusted the learning rates (see below, Simulation parameters). We report in Results that simulations with this reduced version of LTP leads to results quantitatively very similar to those obtained with the rate-dependent version of LTP.

Branch-strength potentiation.

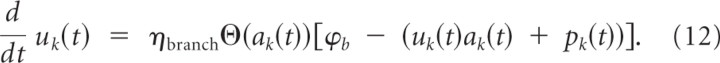

Recent experimental data from hippocampal CA1 pyramidal neurons of male rats (Losonczy et al., 2008; Makara et al., 2009) shows that the impact of a dendritic spike at branch k in some dendritic structures on the somatic membrane potential depends on branch strength. We denote the branch strength of a branch k as uk(t). Furthermore, it was shown that this branch strength is plastic. We model this plastic behavior of branch strength uk by the following temporal dynamics:

Here, ηbranch denotes a small learning rate, and ϕ_b_ denotes the target membrane voltage at the site of spike potentiation. The term Θ(ak(t)) indicates that a branch spike has appeared. In other words, a change in the branch strength only appears during branch spikes. The term in square brackets in the dynamics Equation 12 models a saturation of BSP at a given local membrane voltage. According to this term, BSP vanishes when the local potential (uk(t)ak(t) + pk(t)) equals a constant ϕ_b_ and the branch strength is depressed at a higher local potential. We note that only potentiation of branch strengths was observed in the study by Losonczy et al. (2008). Hence, our model for BSP, which leads to depression for excessive branch potentials, is an extension of the experimentally observed effects. The behavior of Equation 12 is illustrated in Figure 2. One can see that the branch strength is potentiated during dendritic spikes and potentiation is weakened as it increases, which illustrates the effect of the saturation term in Equation 12. Alternatively, one can model saturation simply by clipping uk(t) at some constant upper boundary. This leads to similar results (see Discussion). Although the branch strength is plastic and thus depends on time, we will in the following denote it simply by uk and skip the dependency on time for simplicity.

For additional discussion of the branch-strength dynamics, see Results. A summary of the plasticity rules in the model is given in Table 1.

Table 1.

Overview of the plasticity model

| Synaptic: STDP | |||

|---|---|---|---|

| Pre–post timing | Dendritic depolarization | Presynaptic rate | Effect |

| Pre before post | Low | * | No effect |

| Pre before post | High | Linear dependence | Potentiation |

| Post before pre | * | * | Depression |

| Pre | Linear dependence | Linear dependence | Potentiation |

| Branch strength: BSP | |||

|---|---|---|---|

| Dendritic spike | Dendritic depolarization | Presynaptic rate | Effect |

| No | * | * | No effect |

| Yes | Low | * | Potentiation |

| Yes | High | * | Saturation/depression |

Adaptive learning rate.

In the intact brain, synaptic plasticity itself is regulated by activity-dependent mechanisms (Abraham, 2008). In particular, it is conjectured that the molecular machinery that implements synaptic plasticity provides a progressive stabilization of changes in the synapse during the transition from short-term to intermediate-term to long-term memory storage (Kandel, 2009). Because of the lack of quantitative experimental data on this mechanism, we model such progressive stabilization of synaptic weights by introducing an adaptive learning rate η_kj_ for each synaptic connection, which starts with initial value 1, and is multiplied by a constant ηstabilize < 1 each time when the branch potential bk is above a threshold ϕstabilize during a postsynaptic spike. The adaptive learning rate η_kj_ is set to 0 when it falls below 2% of its initial value. This is a quite intuitive mechanism that tends to stabilize synaptic weights at branches that are strongly activated by synaptic input, indicating that an important synaptic pattern has emerged at the branch. To our knowledge, this strategy has not been used before in a similar context, although adaptive learning rates are commonly used in artificial neural network training. Previous studies on weight stability under STDP-like synaptic plasticity focused on the retention of memory under random, i.e., unstructured, stimulation or random weight changes (van Rossum et al., 2000; Fusi and Abbott, 2007). In our study, input patterns are always strongly structured with highly correlated input, in terms of either the input rate or temporally precise spike correlations. Under such conditions, memories are in general quickly erased in these types of models. In networks of spiking neurons, lateral inhibition can lead to prolonged retention of weight patterns even during structured ongoing input, indicating that inhibition could act to stabilize synaptic efficacy patterns (Billings and van Rossum, 2009). However, because stabilization mechanisms are not the focus of this work, we only applied the above mentioned strategy of an adaptive learning rate that works fine for our purposes.

Mathematical analysis of neuron adaptation in a rate formulation

Here we analyze plasticity in the simplified model with exponential branch nonlinearity described by Equations 7 and 8. We show that, in a mean-field rate approximation, the branch with the highest initial activation for a given pattern wins the competition and converges to a stable nonzero branch activation, whereas the activations of other branches decay to 0. In this section, we disregard branch strengths, setting them to 1 throughout the analysis. Furthermore, we will only consider the synapses that are activated by the pattern—those with high presynaptic firing rate—and disregard other synapses for the sake of simplicity. This can be justified by the frequency dependence of LTP. Because of this dependence, those synapses with low presynaptic activity are subject to weak LTP only, and their weights will decay quite fast as the postsynaptic rate increases. As an additional simplifying assumption, we consider weights without bounds in this analysis, i.e., they are neither clipped at a maximal weight value nor at 0. This strongly simplifies the analysis in continuous time.

We first convert the spike-based formulation of our model into a rate-based formulation. Let t ki(f) denote the f_th firing time of the presynaptic neuron of synapse i at branch k where f = 1, 2, …. We formally denote the corresponding spike train Ski as a sum of Dirac delta functions (Gerstner and Kistler, 2002) Ski(t) = Σ_f δ(t − _t_ki(f)). Denoting the _f_th firing time of the postsynaptic neuron as _t_post(f) for f = 1, 2, …, we can write the postsynaptic spike train as S_post(t) = Σ_f δ(t − _t_post(f)). The local voltage at branch k at time t is given by the following:

Assuming a large number of inputs, all with the same constant firing rate ρ and uncorrelated spike times, we approximate the summed input potential by its mean field:

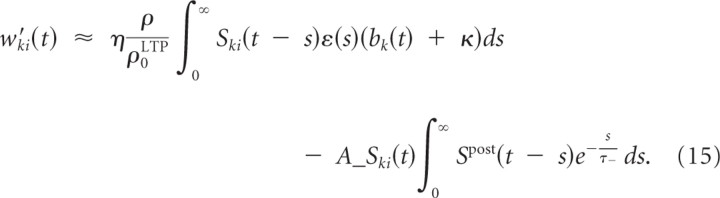

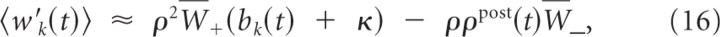

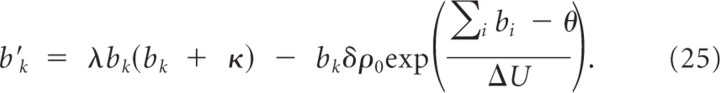

where w_k = Σ_i wki is the summed weight at branch k and, without loss of generality, we assumed ∫0∞ ε_(s)ds_ = 1. From the plasticity effects implemented in simulations, we include the minimal set that is essential for dendritic competition. This set consists of voltage-dependent LTP for each presynaptic spike as defined in Equation 11 and LTD as defined in Equation 9 for negative Δ_t_. We can thus write the weight change w_′_ki(t) at time t as

The average weight change for the summed weight at branch _k_—where the average is taken over realizations of presynaptic and postsynaptic spike trains—is then

with W¯+=Nsynηρ0LTP∫0∞ε(s)ds=Nsynηρ0LTP and W¯−=NsynA−τ−. Here, _N_syn is the number of active synapses to the branch (we assume that _N_syn is equal for all branches). Hence, we arrived at a rate-based description of weight dynamics for a single pattern presentation.

We assume that, at time t = 0, one branch has higher branch activation than any other branch. Let k denote the index of the branch with the largest branch activation at time 0, i.e., bk(0) > bj(0) for all j ≠ k, which implies that wk(0) > wj(0) for all j ≠ k. We now show that, for κ≥W¯−ρ0ρW+, these weight dynamics asymptotically converge to b*, where b*j = 0 for j ≠ k and bk* > 0, i.e., limt→∞ b(t) = b*.

To simplify notation, we will in the following not indicate directly the dependence of dynamic variables on time; these are bi(t), wi(t), ρpost(t). First, we note that

with λ=ρ3W¯+Δβ and δ=ρ2W¯−Δβ. We further find that

Observation 1.

The local potential at branch k is always greater or equal to the local potential at any other branch, i.e., bk ≥ bj ∀t > 0, j ≠ k (this also implies wk ≥ wj ∀t > 0, j ≠ k).

Proof (sketch).

By contradiction, a change of order implies a previous change of the order of derivatives. This is a contradiction to the fact that the derivatives are proportional to the branch activations, because they are ordered at any time before the change.

Observation 2.

The local potential at branch k does never fall below a minimal value b _k_min > 0. More precisely, there exists a b _k_min > 0 and a t* > 0 such that bk > b _k_min for all t > t*.

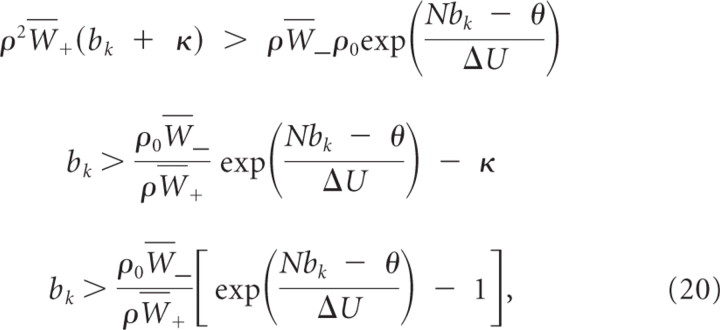

Proof.

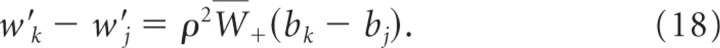

We can bound the weight change at branch k from below:

where N is the number of branches. Hence, w _k_′ > 0 independent of other branch activations if

for κ≥ρ0W¯−ρW+=δλρ0. Inequality 20 holds true for bk <θN. Hence, bk will, independently of other dynamic variables, converge in finite time to a value above b _k_min and stay above b _k_min.

Observation 3.

The local potential at branch k does never rise above a maximal value b _k_max > 0. More precisely, there exists a b _k_max > 0 and a t* > 0 such that bk < _b_ _k_max for all _t_ > t*.

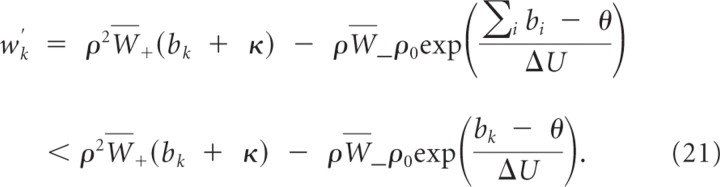

Proof.

We can bound the weight change at branch k from above:

Hence, w_′_k < 0 independent of other branch activations if

For κ≥ρ0W¯−ρW¯+=δλρ0, this condition is fulfilled for all bk > b _k_max > 0. Because the exponential grows much faster than the linear function, such a b _k_max can always be found. For reasonable parameters, b _k_max is ∼25 mV. Hence, bk will, independently of other dynamic variables, converge in finite time to a value below b _k_max and stay below b _k_max.

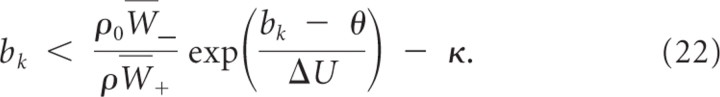

In the following we assume without loss of generality that b _k_min < bk(0) < b k_max. Let Δ_kj(t) ≡ wk(t) − wj(t) denote the difference between the summed weights at branch k and branch j. We show that weights wj for j ≠ k decay at least linearly with time, i.e.,

for Ckj=ρ2W¯+bkmin(1−exp(−Δkj(0)Δβ)). We can bound the change in the weight difference Δ_kj_′(t) by

and the validity of Equation 23 follows. This also shows that limt → ∞ bj(t) = 0 for all j ≠ k. Hence, for all ε > 0 and all j ≠ k, there exists a _t_ε > 0 such that _b_k(t) ≥ _b_kmin and bj(t) <εN−1 for all _t_ > _t_ε.

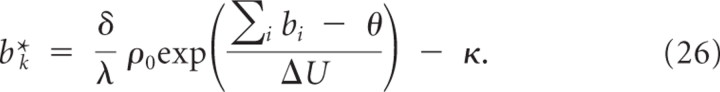

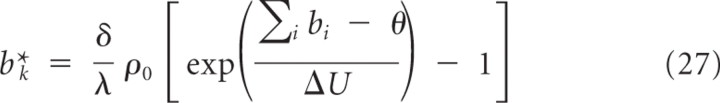

Consider the dynamics for bk:

This equation has a fixed point at bk = 0, which is not reached because bk(t) ≥ b _k_min > 0 for all t and the fixed point b k* given by the solution to:

For κ≥δλρ0, the fixed point b k* defined by Equation 26 is larger or equal to a fixed point defined by

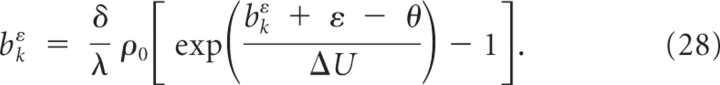

and has the same stability properties. Now consider the dynamics after time _t_ε. The fixed point b k* defined by Equation 27 is again larger than the fixed point defined by

Inspection of the dynamics of the equation b˜kε′=λb˜kε(b˜kε+κ)−b˜kεδρ0postexp(b˜kε+ε−θΔU) shows that, for sufficiently small ε, the fixed point b k_ε is globally stable. In the limit of large t, b k* converges to limε→o_b _k_ε = b k o. Thus, lim_t_→∞b(t) = b* = (_b_1*,…,_b_N*)T with b j* = 0 for j ≠ k and b k* > 0.

Simulation parameters

Simulation parameters are listed in Table 2. All simulations were based on the same parameter sets except for simulations with the correlation-based coding in which learning rates were adjusted to η = 0.002 mV−2 s−1, A+ = _A_− = 0.01, and ηstabilize = 0.995.

We performed control experiments in which LTP was not dependent on the presynaptic firing rate. In these experiments, learning rates were adjusted. In the control experiments reported below (see Self-organization of nonlinear neural computation), if the rate dependency is abolished, synapses from neurons with low rates tend to be more strongly potentiated. Hence, these weights are on average larger than in experiments with frequency-dependent LTP. This increases the firing rate of the postsynaptic neuron when a pattern is stored, which in turn leads to stronger depression of synapses from highly active presynaptic inputs. As a result, these synapses are not potentiated strongly enough to ensure robust pattern separation. One possible solution for this problem is to reduce LTD. In our simulations, we achieved this by reducing the STDP scaling constants to A+ = 0.01 and _A_− = 0.006 but leaving the learning rate for LTP without postspikes unchanged at η = 0.004 mV−2 s−1. In the control experiments reported below (see Acquisition of nonlinear functionality based on synchrony), all inputs have a firing rate of 10 Hz. Hence, when we ignore the rate-dependent factor, this effectively increases the learning rate. We partially compensated for this increase by halving the learning rate factors that led to values η = 0.001 mV−2 s−1 and A+ = _A_− = 0.005.

The passive EPSP decay constant _u_passive was chosen to obtain qualitatively similar behavior as reported previously (Poirazi et al., 2003; Losonczy and Magee, 2006). Several studies (Williams and Stuart, 2002; Nevian et al., 2007), however, report very strong attenuation of the EPSPs in distal as well as basal dendrites toward the soma. In our simulations, the value of _u_passive can be varied in a wide range without a qualitative change of the results. Using, for example, u_passive = 0.2 leads to very similar results without any adaptation of other parameters for most simulations. For the simulations presented below (see Acquisition of nonlinear functionality based on synchrony), the target voltage ϕ_b in Equation 12 has to be increased from 30 to 37 mV such that stronger branches compensate for the smaller passive component.

For simulations, time was discretized with a discretization time of 0.5 ms. The simulation code was written in C++, and simulations were performed on a standard UNIX desktop.

Measurement of spike train correlations and generation of correlated spike trains

To compute correlation coefficients between spike trains for the analysis shown below (see Acquisition of nonlinear functionality based on synchrony), spike trains were convolved with a Gaussian kernel with σ = 5 ms, and the correlation coefficient of the resulting time series were computed.

To produce spike trains with correlation factor cc and frequency ρ, we proceeded as Gütig et al. (2003) with time bin of size Δ_t_ = 0.5 ms. We constructed a Poisson spike train Sr with frequency f by assigning a spike to each bin with probability ρΔ_t_. The spike train Sr was used as a template for the construction of the input spike trains. Each input spike train was generated by assigning a spike to a bin not in Sr with probability ρΔt(1−cc) and assigning a spike to a bin in Sr with probability ρΔt(1−cc)+cc.

Results

Nonlinear dendritic responses

According to Losonczy and Magee (2006), radial oblique branches in CA1 pyramidal neurons act as single integrative compartments that integrate synaptic inputs without regard to the spatial distribution within the branch. The view of oblique branches as single integrative compartments proposed by Losonczy and Magee (2006) was relativized by subsequent findings. Branco et al. (2010) showed that single oblique and basal dendritic branches are able to differentially read out spatiotemporal synaptic activation sequences. This suggests additional compartmentalizaton of individual branches. Multiple integrative compartments were also identified in individual basal branches (Major et al., 2008). Although additional compartmentalization of single branches increases the number of nonlinear integrative subunits per neuron and thus it may increase its capabilities for nonlinear computation, our model described below and illustrated in Figure 1 is based on the single-compartment view for the sake of simplicity and clarity. The branches linearly sum asynchronous postsynaptic potentials from their synapses. We denote the passive/linear component of the dendritic potential at time t as pk(t) (for a detailed equation, see Materials and Methods). Strong synchronous synaptic input at oblique dendrites elicits dendritic spikes. We model such a spike at branch k by an additional active contribution to the branch voltage ak(t) whenever pk(t) is above a certain threshold. Note that this is a purely phenomenological model of dendritic spikes without the explicit inclusion of any positive feedback mechanism that underlies the generation of the event. The branch potential bk(t) is given by the sum of the local synaptic activation pk(t) at the branch and the additional contribution from the dendritic spike ak(t), leading to nonlinear branch characteristics (Fig. 2).

The passive component of the branch potential pk(t) is attenuated by a factor _u_passive < 1 while it is conducted to the soma. According to recent experimental findings, the impact of a dendritic spike on the somatic potential can vary dramatically between branches and is subject to branch strength potentiation, a form of dendritic plasticity as detailed below (Losonczy et al., 2008). Dendritic spikes from different branches are thus scaled by branch-specific weighting factors—the branch strengths _uk_—in our model before they reach the soma. Thus, the somatic membrane potential before refractory effects are taken into account is given by the sum of these passive and active weighted components from all branches (for detailed equations, see Materials and Methods).

The behavior of this model of nonlinear dendritic integration is illustrated in Figure 1B. It is consistent with neurophysiological recordings (Losonczy and Magee, 2006) and a study by Poirazi et al. (2003) in which a mixture of a sigmoid function with a linear contribution was found to provide the best fit to experimental data on the impact of dendritic input on the somatic membrane voltage.

This dendritic model exhibits two important behaviors. First, dendritic spikes serve as a basis for nonlinear neural computations as discussed in detail below. Second, the local branch potential bk(t) is a measure for the total synaptic input at this branch. This variable can potentially be read out by synaptic plasticity mechanisms—for example, by voltage-dependent STDP—such that weight updates can be based on the success of synapses in driving the dendritic branch (see below for the voltage-dependent plasticity mechanisms used in our model).

The somatic spiking mechanism and reset behavior of our model was based on the model of Jolivet et al. (2006). It is a stochastic spiking neuron model with an instantaneous firing rate that depends exponentially on the somatic membrane potential (Fig. 1C). The model parameters were fitted by Jolivet et al. (2006) to obtain an optimal fit to the behavior of layer 5 pyramidal neurons in rat somatosensory cortex. More details on the soma model can be found in Materials and Methods.

Nonlinear neural computation

Nonlinear dendritic responses facilitate the processing capabilities of individual neurons. Several important hypotheses on neural processing in cortex demand nonlinear computations. Two examples are the binding problem (von der Malsburg, 1981; Roskies, 1999) and the participation of neurons in neuronal ensembles (Hebb, 1949). Suppose that the occurrence of a specific feature in the input, such as a shape (square, triangular, circular, etc.), a color, or motion direction, is coded by the activation of a neuronal ensemble (Fig. 3). Binding problems are in general problems in which several features of an object have to be bound for additional processing or storage. One class of binding problems is about relating a concept to a percept (Roskies, 1999). It demands neurons or populations of neurons to store combinations of feature and attributes. A neuron that binds—i.e., responds to—for example, a black disk and a yellow star, should however not respond to a yellow disk or a black star. This problem can be solved with superlinear dendritic integration (Fig. 3).

The same example can be viewed from the perspective of a neuron that participates in neuronal ensembles. Suppose a neuron participates in two neuronal ensembles. In the previous example, the neuron participates in ensembles “black disk” and “yellow star.” However, the neuron should not be activated if half of the neurons in each ensemble are active. In the previous example, that would be the ensembles “yellow disk” and “black star.” More abstractly, the binding problem calls for learning of concepts that have the logical form of an OR of ANDs. In other words, to solve a binding problem, one has to perform first a logical AND operation for each feature combination and then has to detect whether one such combination is active by a logical OR over these ANDs. The situation described above is a nonlinear pattern separation problem that we refer to as the “feature binding problem” (FBP) in the following. Given four input features, the neuron should respond to two disjunct combinations consisting of two features each—black disk and yellow star in the previously described example—but not to any other two-feature combination, such as a yellow disk and black star. This function cannot be computed by a neuron model that linearly sums individual synaptic inputs, even if weights can be chosen arbitrarily to be excitatory or inhibitory. To see this, consider our neuron model without the dendritic nonlinearity. This means that the somatic membrane potential is given by the summed EPSPs from all branches. Now consider the mean somatic potential _v_mean if a single input ensemble is active. That is, record the somatic potential _v_yellow when only the ensemble coding for “yellow” is active. Then record the somatic potential _v_black when only the ensemble coding for “black” is active, and so on. Finally, compute the mean over all these somatic potentials, that is νmean=14(νyellow+νblack+νstar+νdisk). Note that, for a given neuron with given synaptic efficacies and branch strengths, we obtain a unique _v_mean. Because we demand that the neuron is active for yellow star and black disk, we demand that, for these combinations, the somatic membrane potential is above threshold θ. It follows that the mean somatic membrane potential over single features _v_mean is above half of the threshold _v_mean >θ2. Conversely, we demand that, for combinations yellow disk and black star, the somatic membrane potential is below threshold. This constraint implies that the mean somatic membrane potential over single features _v_mean is below half of the threshold _v_mean <θ2. Because for a given neuron, _v_mean cannot be above and below half of the threshold, the function cannot be computed by such a neuron. Hence, nonlinear dendritic operations are essential to solve the tasks with single neurons, because a linear model would always classify at least one of the four patterns incorrectly regardless of its weight configuration. Note however that the traditionally often considered exclusive or (XOR) function (Minsky and Papert, 1988) cannot be computed by our neuron model even with nonlinear branches, because this function requires that the neuron responds to a pattern like yellow but does not respond to a pattern like yellow disk, which is a superset of the stored pattern. The classical XOR can thus only be solved with the help of inhibitory synapses, which are not modeled in this article. A possible role of inhibitory circuits could thus be to enable pyramidal neurons to store such XOR-like pattern configurations.

Synaptic plasticity and branch-strength potentiation

To examine whether such nonlinear functionality could in principle be acquired by a neuron in a self-organized manner, we used a plasticity model based on several experimentally observed plasticity effects in pyramidal neurons, including depolarization-dependent STDP (Ngezahayo et al., 2000; Nevian and Sakmann, 2006; Sjöström and Häusser, 2006), dependence of STDP pairing frequency (Sjöström et al., 2001), and the potentiation of branch strengths (Losonczy et al., 2008). Acetylcholine (ACh) has a strong effect on synaptic plasticity and branch-strength potentiation. Specifically, our model for branch-strength potentiation discussed below is based on findings of Losonczy et al. (2008) for elevated cholinergic modulation levels. Furthermore, ACh can lower the threshold for LTP induction (Auerbach and Segal, 1994; Dringenberg et al., 2007). We thus assume that learning is enabled when local acetylcholine levels are elevated, which might signal exploratory behavior, saliency of the stimulus, or alertness. Conversely, we hypothesize that ACh levels are low during recall, which reduces plasticity effects. These assumptions are supported by experimental data that indicate that ACh can gate cortical plasticity (Weinberger, 2008) and enhance memory storage in hippocampus (Ragozzino et al., 1996). For the sake of simplicity, we do not explicitly model the ACh signal in our simulations but disable all plasticity mechanisms in the testing phase.

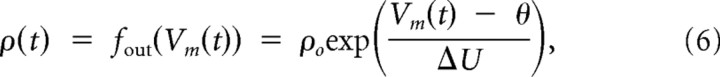

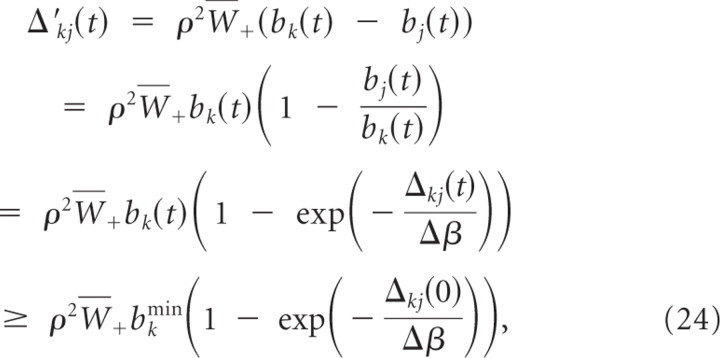

Spike-timing-dependent plasticity is a Hebbian plasticity mechanism in which the weight change of a synapse depends on the precise timing of the presynaptic and postsynaptic action potentials (Markram et al., 1997; Bi and Poo, 1998). Recent experiments showed that STDP also depends on the local dendritic depolarization and Ca2+ level at the local dendritic site (Nevian and Sakmann, 2006; Sjöström and Häusser, 2006; Larkum and Nevian, 2008). These findings are of special importance for neuron models with dendritic arborizations because dendritic depolarization may be an important regulating factor that differentiates plasticity at different dendritic branches for the same input pattern. We therefore extended a standard model for STDP (Abbott and Nelson, 2000) to include depolarization dependence (a related model for depolarization-dependent STDP was studied by Clopath et al., 2010). Pre-before-post spike pairs within a certain time window induce LTP if the depolarization at the branch exceeds a certain threshold ϕ+. Conversely, post-before-pre spike pairs induce LTD (Fig. 4). Sjöström et al. (2001) showed that LTP depends approximately linearly on pairing frequency, whereas there is no such dependency of LTD below 40 Hz. Because the presynaptic and postsynaptic firing rates were always covaried in this study, the relative contribution of each of these two factors remains unclear. From a theoretical perspective, the presynaptic firing rate is especially interesting. If low presynaptic rates reduce LTP but not LTD, weakly activated inputs can be depressed selectively. We therefore modeled frequency dependence by a linear dependence of LTP on the presynaptic firing rate (Fig. 4). Because the contribution of the presynaptic rate in the reported influence of pairing frequency on STDP is still unclear, we performed control simulations in which LTP did not depend on the presynaptic frequency. As reported below, this leads to quantitatively very similar results (for details on the STDP learning rule used, see Materials and Methods).

Figure 4.

Rate-dependent STDP. The model includes linear dependence of LTP on presynaptic firing rate and an induction threshold for LTP. Shown is the weight change for a presynaptic rate of 35 Hz (black curve) and 10 Hz (gray dashed curve) scaled to the maximum possible weight change at 35 Hz in dependence of the time difference Δ_t_ = _t_post − _t_pre of a pre–post spike pair.

By definition, induction of STDP requires a postsynaptic spike. It is well known, however, that pairing of presynaptic spikes with postsynaptic depolarization can be sufficient for the induction of LTP (Kelso et al., 1986; Gustafsson et al., 1987; Hardie and Spruston, 2009). In our model, synapses can also be somewhat potentiated in the absence of postsynaptic firing and dendritic spikes, although potentiation in the presences of a dendritic spike is substantially stronger because the amount of potentiation increases linearly with the local branch depolarization bk (see Eq. 11 and discussion in Materials and Methods). The plasticity mechanism therefore preferentially potentiates groups of synapses at a single branch that tend to be coactivated and thus depolarize the branch. We will see in the analysis below that this feature of the plasticity dynamics contributes to synaptic clustering and dendritic competition. Furthermore, as in the STDP model described above, we model a linear dependence on presynaptic firing rate (for detailed equations, see Materials and Methods).

Recent experimental data (Losonczy et al., 2008; Makara et al., 2009) show that the impact of a spike in oblique dendrites on the somatic membrane potential depends on properties of the dendritic branch giving rise to the notion of a branch strength. Furthermore, it was shown that this branch strength is plastic. More precisely, the strength of the branch–soma coupling can be increased through pairing of dendritic spikes with ACh. The phenomenon was termed BSP. We model this plastic behavior of branch strength uk by the temporal dynamics given in Equation 12 in Materials and Methods. Furthermore, potentiation was only reported for weak branches, indicating a saturating mechanism. We therefore implemented a saturation of BSP if the local potential equals a constant ϕ_b_ (see Materials and Methods).

Finally, to stabilize weights, every synapse has an individual adaptive learning rate. This learning rate decreases whenever the local membrane voltage is above a threshold ϕ stabilize (set to 0.5 mV above the voltage that occurs during a dendritic spike) in coincidence with a postsynaptic somatic spike (for details, see Materials and Methods). A summary of the plasticity rules in the model is given in Table 1.

Dendritic competition

We first tested how the model behaves when a single pattern of presynaptic ensemble activity is presented to the neuron. In these simulations, we considered six input ensembles, each consisting of 144 neurons. Each neuron in the input ensembles was synaptically connected to a randomly chosen branch of the adapting neuron (using a uniform distribution over branches) as schematically indicated in Figure 3. This resulted in an average of 24 synaptic connections from each ensemble to each of the six branches. Neurons in an active ensemble were emitting 35 Hz Poisson spike trains in which spike–spike correlations between neurons in the ensemble were not enforced in the generation of these spike trains. Other neurons were spiking at a background rate of 2 Hz. We presented one combination of two active ensembles for 10 s to the neuron.

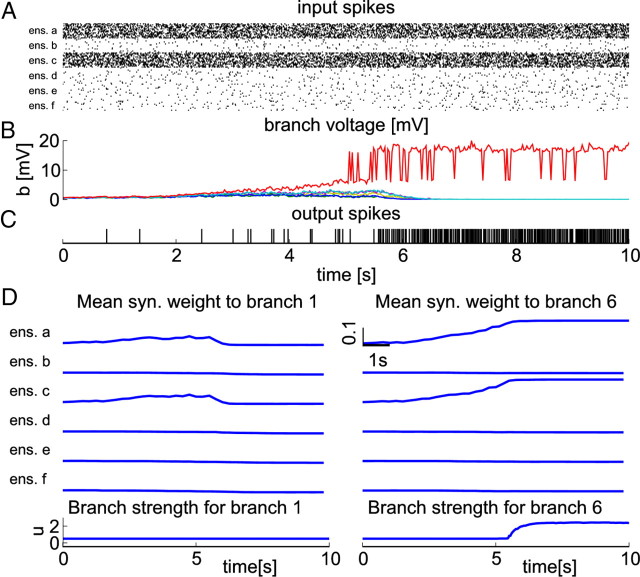

Figure 5 shows that the neuron becomes responsive for this input pattern and that a single branch stores the pattern as a result of an emergent competition between branches. Until t = 5 s, all branch activities rise because presynaptic activity potentiates synaptic efficacies (Eq. 11). One can see, however, that the voltage difference between branch 6 (Fig. 5B, red trace) and other branches is consistently growing starting at time t = 2.5 s. This effect arises because higher local branch potential leads to stronger LTP. At time t = 5 s, the threshold for dendritic spikes is first reached at branch 6. When the neuron starts to fire at high rate (starting at t = 5.5 s), less strongly active branches become less and less responsive to the pattern. In this case, a single branch wins and specializes to the given combination of active ensembles.

Figure 5.

Emergent competition between dendritic branches. A, Black dots denote input spikes to the neuron (every 10th neuron is shown). Ensembles a and c are highly active. B, Voltages at individual branches. Colors code for branch identity; the voltage of branch 6 (the winning branch) is plotted in red. C, Bars denote output spikes of the trained neuron. Once the neuron becomes responsive, its firing rate increases to ∼50 Hz. D, Weights and branch strengths for branch 1 (left panels) and branch 6 (right panels; the winning branch). The top six panels show average weights for synapses from the six input ensembles to these two branches. The bottom plot shows the evolution of branch strengths for these two branches. The branch strength of the winning branch (right panel) increases, whereas others stay approximately constant because no dendritic spikes are elicited in these branches.

The weight dynamics at two branches are shown in Figure 5D and follow a similar pattern: in a first phase, the weight updates at all branches favor synapses originating from active ensembles because of the linear dependence of LTP on presynaptic frequency. Thus, all branches increase their response to this pattern. However, because the updates depend on the local membrane voltage at the branch, the most active branch—branch 6—is favored and increases its response most strongly. As dendritic spikes are robustly evoked in branch 6 at t = 5.5 s, the neuron starts to fire at high rate and STDP leads to LTD. At this time, the local branch voltage at branch 6 has a high value as a result of dendritic spikes. Therefore, depolarization-dependent LTP can compensate for the depression at this branch. The local branch voltage at other branches is much lower (Fig. 5B) and the mean synaptic weight decreases at these branches. As dendritic spikes are robustly evoked in branch 6 after t = 5 s, the corresponding branch strength _u_6 also increases (Fig. 5D, bottom plots). This leads to an increase of the firing rate of the neuron until the branch strength reaches a steady state (see below). Note that the backpropagating postsynaptic spike plays an important part in the competitive dynamics through its critical role in LTD induction. It indicates that the neuron reached threshold and thus that some branch was strongly activated. This information is used to weaken competing branches to avoid redundant information storage.

We wanted to better understand how and under which conditions this competitive behavior arises from the interplay between LTP and LTD. To answer these questions, we have analyzed the weight dynamics theoretically. To make a theoretical analysis possible, we used a simplified model in which the discontinuous branch behavior is replaced by an exponential nonlinearity. From the learning rules, we included the minimal set from our model that is essential for dendritic competition. This set consists of voltage-dependent LTP for each presynaptic spike and LTD for post-before-pre spike pairs. The full analysis can be found in Materials and Methods. Assuming a large number of synaptic inputs, we have then approximated the voltage at dendritic branches by their mean field, which yields a rate formulation of neuronal dynamics. Accordingly, the weight changes attributable to spike-based synaptic learning rules were approximated by the average change over different realizations of the Poissonian input statistics, again yielding a rate-based formulation of the weight dynamics. In this simplified model, we proved that the weight dynamics in fact lead to dendritic competition such that only the most strongly activated dendritic branch stores the presented pattern. More precisely, assume a pattern of constant input rates and assume that, at time t = 0, branch k has higher branch activation than any other branch, i.e., bk(0) > bj(0) for all j ≠ k. We then showed that, for a wide range of parameters, the weight dynamics imply changes in the branch potentials such that the potential bk(t) of the most strongly activated branch will converge to a positive value, whereas the potentials of other branches will converge to 0. The proof is quite instructive for the understanding of the basic mechanism of dendritic competition and sketched in the following. First, we observe that there is a minimal and a maximal value of the branch potential bk(t) such that, after some time, bk will always stay within these bounds. This happens because, at low postsynaptic firing rates, LTP dominates LTD, which increases the synaptic weights and as a consequence also increases the branch potential bk. At high firing rates, on the contrary, LTD dominates, which decreases the synaptic weights and therefore the branch potential bk. It follows that the sum of weights at branch k is also bounded. Consider the difference between the sum of weights at branch k and the sum of weights at another branch j ≠ k. Because of voltage-dependent LTP, this difference increases linearly with time. These observations imply that bj converges to 0 for j ≠ k, whereas the initially highest branch potential bk converges to some positive value.

Self-organization of nonlinear neural computation

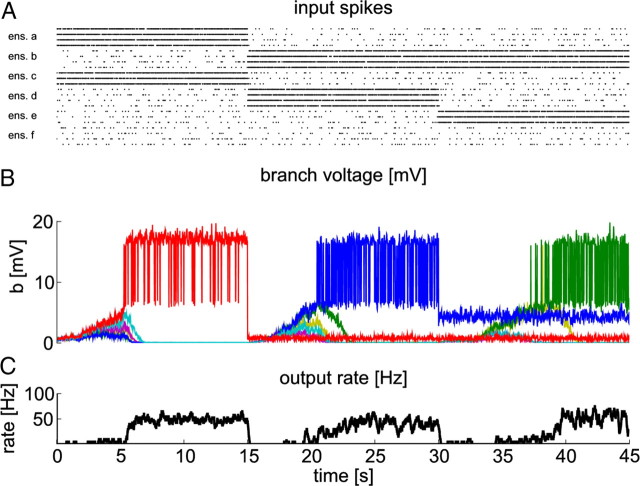

With such a competitive mechanism, a neuron can dedicate a small number of dendritic compartments to the storage of a given input pattern. This endows a neuron with the capability to store many different patterns in its dendritic arborizations and thus to self-organize nonlinear behavior. We tested the self-organization capabilities of the neuron in two difficult situations of the binding problem. First, consider the case in which two input patterns should be stored, where we define a pattern as a set of coactive input ensembles. The first pattern is given by active ensembles a and _c_—we abbreviate such patterns as (a,c) in the following—and the second combination is indicated by active ensembles (b,d) (Fig. 6A, t = 0–15 s and t = 15–30 s, respectively). This situation corresponds to the FBP problem discussed above and illustrated in Figure 3. Using again the example illustrated in Figure 3, ensemble a codes the feature yellow and ensemble c the feature star. The first pattern thus represents a yellow star. The second pattern represents a black disk with ensemble b coding for black and d for disk. After training, the neuron should respond to these positive patterns, but it should not respond to the patterns yellow disk indicated by active ensembles a and d and black star indicated by active ensembles b and c. The situation is summarized in Figure 7A, where the patterns yellow star and black disk are emphasized by light green shading, and the patterns yellow disk and black star are indicated by light red shading.

Figure 6.

A neuron with STDP and BSP can store three different patterns on individual branches. A, Black dots denote input spikes to the neuron. Each line corresponds to one input neuron (every 30th neuron is shown). Input spike trains were sorted according to their input ensemble. Different patterns are presented sequentially. B, Voltages at individual branches. Colors code for branch identity (green, branch 2; blue, branch 4; red, branch 6). C, Firing rate of the trained neuron during learning.

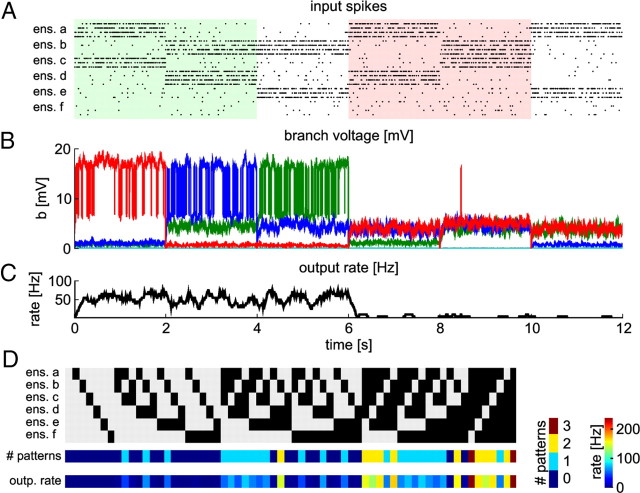

Figure 7.

A neuron with STDP and BSP can acquire nonlinear functionality through STDP and BSP. A, Black dots denote input spikes to the neuron (every 30th neuron is shown). Different combinations of active ensembles are presented sequentially. The first three patterns were previously entrained by STDP and BSP. Color shading indicates patterns corresponding to the FBP problem discussed in Results. B, Voltages at individual branches. Colors code for branch identity (green, branch 2; blue, branch 4; red, branch 6; data smoothed). C, Firing rate of the trained neuron during testing. The neuron has high firing rate for all trained patterns and low firing rate for other patterns. It has high firing rate for patterns 1 and 2 (light green shading) and is silent for patterns 4 and 5 (light red shading), which define an FBP problem. This shows that the neuron has acquired nonlinear functionality. D, Summary of neuron responses after training. Each row shows the result for 1 of the 64 possible combinations of active ensembles. Top, Input patterns; active ensembles are indicated in black. Middle, Number of trained patterns that are contained in this input (left color bar). Bottom, Neuron firing rate (right color bar) for the corresponding input pattern. The neuron is activated only if at least one trained pattern is contained in the current input. If an input contains several trained patterns, the neuron is more strongly activated, because each pattern activates one branch.

The second situation is again characterized by two patterns, one in which ensembles (b,d) are active and another pattern with active ensembles (b,e) (Fig. 6A, t = 15–30 s and t = 30–45 s, respectively). Hence, there is an ∼50% overlap between the patterns. Combining these two situations, the neuron should respond to the patterns (a,c), (b,d), and (b,e) and stay unresponsive to any other combination of two active ensembles, especially for other combinations with ensemble b. We refer to this problem as the extended feature binding problem (eFBP) in the following. Note that this problem is at least as hard as the FBP. For reasons discussed below (see The role of branch strength potentiation), it is actually harder from the practical point of view.

We tested the self-organization capabilities of a neuron in this situation, i.e., in the eFBP, in the following simulation: the three patterns were presented sequentially, each for 15 s of simulated biological time (Fig. 6A). We refer to patterns that are presented during training as trained patterns in the following. The behavior during learning is shown in Figure 6, B and C. Individual branches specialize to specific patterns as can be seen in B. This behavior results from dendritic competition as described above.

Figure 7 shows the response of the neuron after training to the three trained patterns and to three nontrained patterns. Synaptic input during presentation of nontrained patterns does not depolarize dendritic branches strongly enough to cause dendritic spikes. Therefore, the firing rate of the neuron for these patterns is quite low. Figure 7D summarizes the responses of the trained neuron to all possible combinations of active ensembles (each presented for 2 s after training). It shows that the neuron responds to an input pattern only if it contains at least one of the trained patterns. Formally, we say that an input pattern Pin contains a trained pattern Ptr if all coactive ensembles of Ptr are also in the set of coactive ensembles of Pin, i.e., if the set of coactive ensembles in Ptr is a subset of the set of coactive ensembles in Pin. For examples, the input pattern (a,b,c) (the 23rd pattern in Fig. 7D) contains the trained pattern (a,c) but not the pattern (b,d) because ensemble d is not active in this input pattern. Because we do not model inhibitory inputs, the model is monotone. That is, the addition of an active ensemble to an input pattern cannot lead to a decrease of the neuron response (see also the discussion of the XOR problem above). Hence, every input pattern that contains a trained pattern activates the neuron (Fig. 7D). The firing rate of the neuron increases with the number of trained patterns contained in the current input, because each trained ensemble combination activates at least one distinct branch. In summary, Figure 7D shows that the neuron has learned to separate inputs that contain trained patterns from those that do not contain any trained pattern.

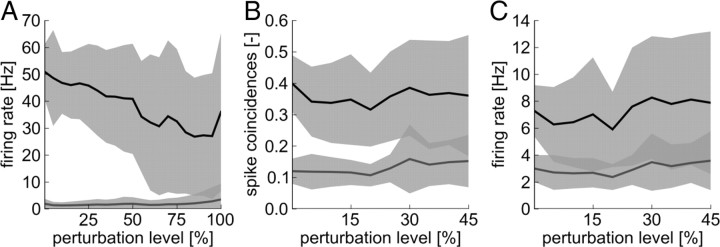

These capabilities of the neuron have been tested in 20 independent simulations with different random connectivity from the input ensembles to the neuron, different random initial weights, and different realizations of the Poissonian spike trains emitted by the ensemble neurons. The neuron was trained for 40 s simulated biological time on each of the three patterns. To assess the ability of the neuron to separate trained from nontrained patterns, we tested the response of the neuron to the presentation of any possible combination of two active ensemble for 500 ms after training. The results are summarized in Figure 8A together with experiments in which neuron parameters were varied randomly to investigate the robustness to parameter perturbations. In these control experiments, the stochastic threshold θ, the firing rate at threshold ρ0, the width of the spike-trigger zone Δ_U_, and the absolute refractory time t_refract were concurrently perturbed. The parameters were concurrently varied by various perturbation levels α of up to ±100%. That is, for a simulation with perturbation level α (in percentage), each parameter value was multiplied in each run by a random number between 1 − α/100 and 1 + α/100, for the threshold θ, the firing rate at threshold ρ0, and Δ_U. The refractory period was at the same time varied by ±50%. As can be seen in Figure 8A, the patterns are well separated even for large parameter perturbations. We note that BSP has only been described in CA1 pyramidal neurons that have also extensive oblique dendrites, whereas we used a soma model that has been fit to a layer 5 pyramidal neuron in somatosensory cortex. We used this soma model because it provides a good tradeoff between physiological plausibility and model complexity. The control experiments described above, in which soma parameters were randomly varied, show that the details of the soma model are not important for the main results reported here.

Figure 8.

Pattern separation is possible under a wide range of neuron parameters. Several somatic parameters were perturbed by up to 100% of their standard values (_x_-axis, perturbation level). Full lines show means (over 20 experiments) and shaded areas show STDs. Dark gray shaded areas indicate overlapping SEs. A, Mean firing rate response to trained patterns (black line) and mean response to other patterns (gray line) in the pattern separation experiment based on firing rates of input ensembles. Pattern separation is possible even for parameter perturbations of up to 75%. B, C, Mean spike coincidences (B) and firing rates (C) for trained patterns (black line) and for other patterns (gray line) in the pattern separation experiment based on synchrony of input ensembles. Although firing rates can overlap, the patterns can well be separated based on spike coincidences for parameter perturbations of up to 25%.

These results were obtained with a plasticity model in which LTP depends linearly on the presynaptic firing rate (see update rules Eq. 6 and 8). To assess whether this dependence is necessary for neuronal self-organization, we performed control simulations with a model in which this dependency was dropped (see Materials and Methods). In 20 independent simulations, the response to trained patterns was 52 ± 10 Hz, whereas for other patterns, it was 3 ± 3 Hz. This shows that the rate dependence is not essential for pattern storage in this model.

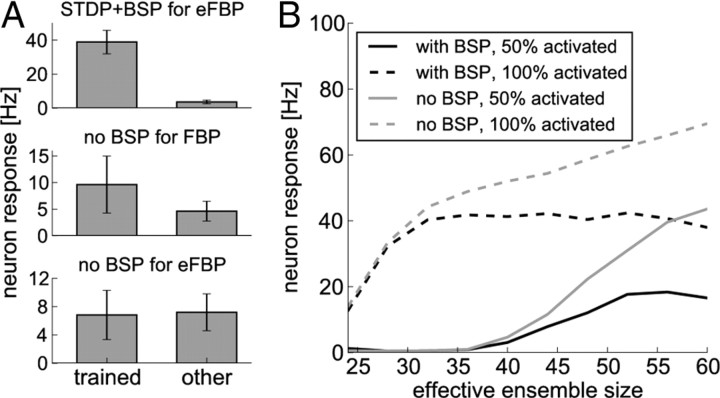

The role of branch strength potentiation

Potentiation of a branch strength through BSP increases the influence of dendritic spikes originating from this branch on the somatic membrane potential. Hence, the probability of postsynaptic spikes is increased, which contributes to the competitive mechanism described above. Consistent with a hypothesis expressed by Losonczy et al. (2008), the branch strength stores a reference to the input pattern, and a subsequent pattern presentation will elicit reliable spiking of the neuron. Figure 9A (top) summarizes the results of the eFBP experiment described above and illustrated in Figure 7, in which we trained a neuron on three feature combinations (a,c), (b,d), and (b,e) and tested on any other combination of two features in 20 independent trials. Figure 9A (top) shows the minimal response to any of the trained patterns (left) and the maximal response to any of the other patterns (right; mean and SD over 20 independent trials). One can see that the patterns are well separated. To illustrate the role of BSP in the separation of patterns, we performed the same experiments but without BSP. The branch strengths thus stay at their initial value of 0.5 during the learning experiment. We first tested this setup on the FBP, i.e., when only the patterns (a,c) and (b,c) are trained (Fig. 9A, middle). The neuron is activated by the trained patterns, although this activation is weaker because branch strengths are not potentiated. The impact of the dendritic spike onto the soma is weak, such that trained patterns are not well separated from other patterns. As one can see in the bottom of Figure 9A, in the case of the eFBP, nontrained patterns activate the neuron as strongly as trained patterns. The problem becomes apparent when we consider the pattern (b,c). Because this pattern was not presented to the neuron during training, no branch is activated strongly enough to elicit branch spikes reliably. Still, the soma is activated by the linear voltage components of three branches that store patterns that partially overlap with (b,c). Those are the branches that store the patterns (a,c), (b,d), and (b,e) respectively. This linear activation outweighs the weak nonlinear component that is added to trained patterns and the two cannot be separated anymore. Hence, increasing the branch strength through mechanisms such as BSP is essential for these types of feature binding problems.

Figure 9.

BSP improves the pattern separation capabilities of a neuron. A, Comparison of learning with STDP and BSP (top) to learning without BSP for small initial branch strengths (middle, bottom). Shown is the minimum response to a trained pattern (left; mean and SD over 20 trials) and the maximum response to a nontrained pattern (right; mean and SD over 20 trials). With BSP, patterns are clearly separated in the extended feature binding problem (top). Without BSP, patterns are not well separated in the feature binding problem (middle) and not separated in the extended feature binding problem (bottom). B, A comparison of neuron responses with BSP during training (black lines) to the responses of a neuron with constant high branch strength (gray lines) as a function of the number of synapses projected from an ensemble to each branch (effective ensemble size). Shown are responses after training to the full pattern (dashed lines) and to a partial activation in which only 50% of the neurons in these ensembles were activated (full lines). Without BSP, neuron responses increase steadily with increasing ensemble size, whereas BSP stabilizes responses over a wide range of effective ensemble sizes.

The weak activation of trained patterns in the absence of BSP could be compensated by increasing the maximum possible synaptic efficacy _w_max. In that process, however, the nonlinear component of branches is even less pronounced compared with the linear component, and the neuron totally fails to solve even the less demanding FBP.

In the above analysis, we have considered the case in which the branch strength is weak initially. This seems to be the general situation in pyramidal neurons, in which initially weak branch strengths are potentiated by BSP (Losonczy et al., 2008; Makara et al., 2009). We then asked the question what would happen if potentiation with BSP would not occur. We now consider the hypothetical case in which branch strengths are already large initially and patterns are stored by synaptic plasticity only.

In this case, feature binding would be possible with synaptic plasticity alone if branch strengths were set to correct values. However, as we show in the following, adaptive branch strengths are advantageous even in this case. More specifically, the postulated dependence of BSP on the local membrane potential in the dynamics Equation 12 can serve a homeostatic role. Because the number of synapses that connect a given ensemble with a specific branch—referred to here informally as effective ensemble size—varies between ensembles and branches, different patterns would lead to quite different neural responses if branch strengths were fixed. Our model for BSP leads to an approximate equalization of the somatic voltage across patterns of different sizes. Figure 9B summarizes several simulations in which a neuron was trained on a single pattern consisting of two active ensembles as before. To test the influence of effective ensemble size on the neural response, we varied the number of synapses that each ensemble projected onto each of the six branches between 24 and 60. We then compared the responses of two neurons, one neuron trained with BSP as above and the other one with constant branch strength. This constant value was set such that the neurons had the same firing rate of 40 Hz at 26 synapses per ensemble and branch after training. The responses of these neurons after training to the full pattern and to a presentation in which 50% of the synapses onto each branch were activated were then compared. Figure 9B shows that, with BSP, the neuron stabilizes its response to the full pattern at ∼40 Hz when the effective ensemble size is large enough to reliably elicit dendritic spikes after training. For constant branch strength, the branch cannot compensate for the stronger input drive and the response increases with effective ensemble size. A similar observation can be made for the half-activated pattern. In this case, the rate of the neuron equipped with BSP stabilizes at ∼20 Hz. With constant branch strength, the response increases more strongly and steadily with increasing effective ensemble size, making it impossible to distinguish between a fully activated pattern of low effective ensemble size and a partly activated pattern of large effective ensemble size. With BSP, however, patterns can be stored over a wide range of effective ensemble sizes, although lower ensemble sizes may be advantageous because of weaker responses to partial activations. Thus, we have shown that, for both cases of small and large initial values, adaptation of branch strengths significantly improves the feature binding capabilities of single neurons.

Acquisition of nonlinear functionality based on synchrony

We then considered the case when information about input patterns is coded by synchronous activation of neurons instead of by firing rate. All neurons were activated at a constant rate of 10 Hz. The firing times of neurons in active ensembles were correlated while other neurons emitted uncorrelated Poissonian spike trains. Again, competition between branches emerges like in the rate-based coding scheme. However, because all inputs have the same firing rate, the rate dependency of LTP cannot be exploited to exclusively strengthen synapses that contribute to the currently presented pattern. Instead, when the threshold for LTP is reached at an individual branch, correlated inputs are subject to considerably more LTP than noncorrelated inputs because they produce postsynaptic spikes and therefore presynaptic spikes fall more frequently in the LTP part of the STDP learning window. As the postsynaptic firing rate increases, the net-negative weight changes attributable to STDP at uncorrelated inputs exceeds the amount of potentiation, and these synapses are depressed (for detailed analysis of this effect of STDP for correlated inputs, see Kempter et al., 1999, 2001; Song et al., 2000).

We simulated one neuron with four branches and presented two patterns representing the FBP as in the rate-based scheme, but now with 10Hz Poisson input spike trains at all inputs. These input spike trains were correlated with a correlation coefficient of 0.5 at active ensembles and uncorrelated at other ensembles. Each ensemble consisted of 196 neurons, and each neuron was synaptically connected to a randomly chosen branch of the adapting neuron. This resulted in an average of 24 synaptic connections from each ensemble to each of the four branches.

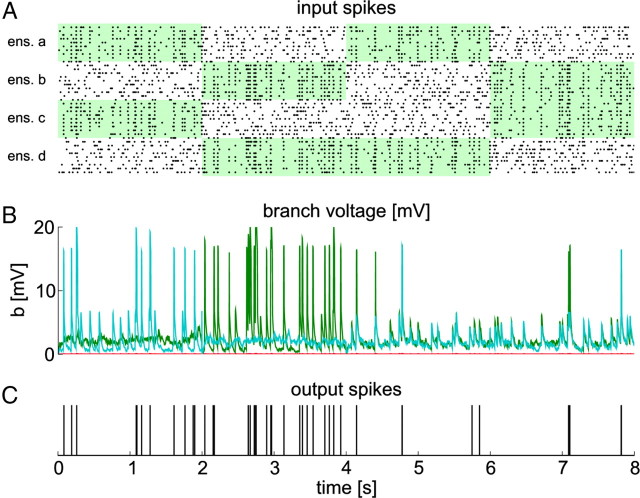

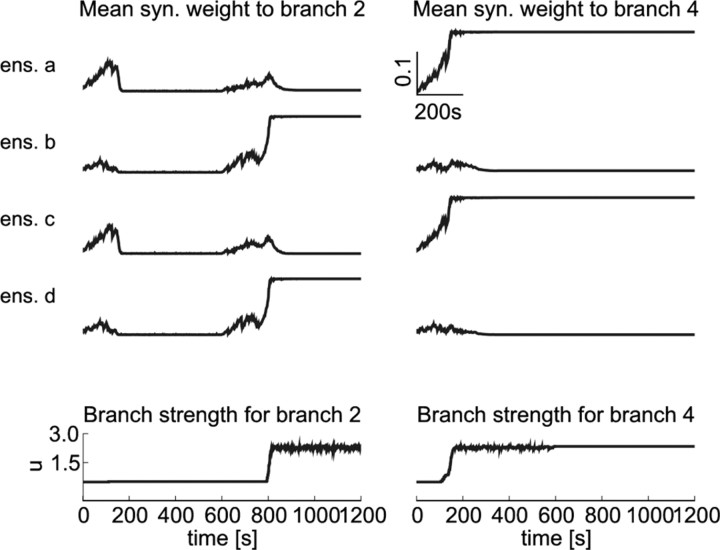

Again, we tested the response of the neuron to trained patterns and other combinations of active ensembles. Figure 10 shows a simulation in which four patterns were presented after training. For the trained patterns, the neuron produces action potentials that are correlated with the input while spikes occur only occasionally for other patterns. The evolution of weights and branch strengths during training is shown in Figure 11.

Figure 10.

A neuron with STDP and BSP can acquire nonlinear functionality through STDP and BSP in a correlation-based coding scheme. A, Black dots denote input spikes to the neuron (every 10th neuron is shown). Different patterns are presented sequentially. Green areas indicate correlated input ensembles. The first two patterns were previously entrained by STDP and BSP. B, Voltages at individual branches. Colors code for branch identity (green, branch 2; cyan, branch 4). C, Spike times of the neuron during testing.

Figure 11.

Weights and branch strengths during neuronal self-organization in a synchrony-based coding scheme. The panels show average weights for the two winning branches 1 (left) and 2 (right). The top four panels show average weights for synapses from the four input ensembles to these two branches. The two correlated ensembles are a and c for the first 600 s and b and d for the remaining time. The bottom plots show the evolution of branch strengths for these two branches. Because all ensembles have the same average firing rate, all weights increase initially. However, as soon as postsynaptic spikes appear, the synaptic strengths of uncorrelated inputs as well as synaptic strengths of non-winning branches decay quickly.