Unsupervised Discovery of Demixed, Low-Dimensional Neural Dynamics across Multiple Timescales through Tensor Component Analysis (original) (raw)

. Author manuscript; available in PMC: 2019 Dec 12.

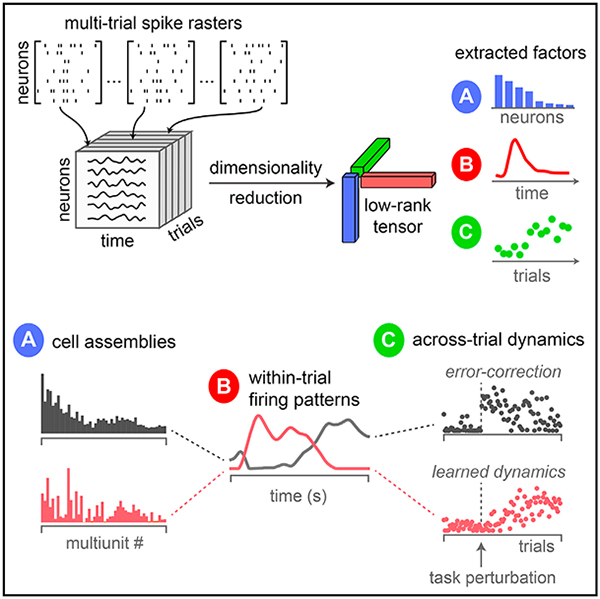

SUMMARY

Perceptions, thoughts, and actions unfold over millisecond timescales, while learned behaviors can require many days to mature. While recent experimental advances enable large-scale and long-term neural recordings with high temporal fidelity, it remains a formidable challenge to extract unbiased and interpretable descriptions of how rapid single-trial circuit dynamics change slowly over many trials to mediate learning. We demonstrate a simple tensor component analysis (TCA) can meet this challenge by extracting three interconnected, low-dimensional descriptions of neural data: neuron factors, reflecting cell assemblies; temporal factors, reflecting rapid circuit dynamics mediating perceptions, thoughts, and actions within each trial; and trial factors, describing both long-term learning and trial-to-trial changes in cognitive state. We demonstrate the broad applicability of TCA by revealing insights into diverse datasets derived from artificial neural networks, large-scale calcium imaging of rodent prefrontal cortex during maze navigation, and multi-electrode recordings of macaque motor cortex during brain machine interface learning.

Graphical Abstract

In Brief

Williams et al. describe an unsupervised method to uncover simple structure in large-scale recordings by extracting distinct cell assemblies with rapid withintrial dynamics, reflecting interpretable aspects of perceptions, actions, and thoughts, and slower across-trial dynamics reflecting learning and internal state changes.

INTRODUCTION

Neural circuits operate over a wide range of dynamical time-scales. Circuit dynamics mediating sensory perception, deci sion-making, and motor control unfold over hundreds of milliseconds (Uchida et al., 2006; Churchland et al., 2012), while slower processes like learning and changes in motivational state can vary slowly over days or weeks (Kleim et al., 1998; Ganguly and Carmena, 2009; Peters et al., 2014). Recent experimental advances enable us to monitor all aspects of this complexity by recording large numbers of neurons at high temporal precision (Marblestone et al., 2013; Kim et al., 2016; Lin and Schnitzer, 2016; Pachitariu et al., 2016; Jun et al., 2017) over long durations (Lütcke et al., 2013; Dhawale et al., 2017), thereby documenting the dynamics of thousands of neurons over thousands of behavioral trials. These modern large-scale datasets present a major analysis challenge: how can we extract simple and interpretable low-dimensional descriptions of circuit dynamics underlying both rapid sensory, motor, and cognitive acts, and slower processes like learning or changes in cognitive state? Moreover, how can we extract these descriptions in an unsupervised manner, to enable the discovery of novel and unexpected circuit dynamics that can vary on a trial-by-trial basis?

Commonly used dimensionality reduction methods focus on reducing the complexity of fast, within-trial firing rate dynamics instead of extracting slow, across-trial structure. A common approach is to average neural activity across trials (Churchland et al., 2012; Gao and Ganguli, 2015), thereby precluding the possibility of understanding of how cognition and behavior change on a trial-by-trial basis. More recent methods, including Gaussian process factor analysis (GPFA) (Yu et al., 2009) and latent dynamical system models (Gao et al., 2016; Pandarinath et al., 2017), identify low-dimensional firing rate trajectories within each trial, but do not reduce the dimensionality across trials by extracting analogous low-dimensional trajectories over trials. Other works have focused on trial-to-trial variability in neural responses (Averbeck et al., 2006; Cohen and Maunsell, 2010, 2011; Goris et al., 2014), and long-term trends across many trials (Siniscalchi et al., 2016; Driscoll et al., 2017), but without an explicit focus on obtaining simple low-dimensional descriptions.

The most common and fundamental method for dimensionality reduction of neural data is principal component analysis (PCA) (Cunningham and Yu, 2014; Gao and Ganguli, 2015). Here, we explore a simple extension of PCA that enables multi-timescale dimensionality reduction both within and across trials. The key idea is to organize neural firing rates into a third-order tensor (a three-dimensional data array) with three axes-corresponding to individual neurons, time within trial, and trial number. We then fit a tensor decomposition model (CANDECOMP/PARAFAC) (Carroll and Chang, 1970; Harshman, 1970) to identify a set of low-dimensional components describing variability along each of these three axes. We refer to this procedure as tensor component analysis (TCA).

TCA circumvents the need to trial-average and identifies separate low-dimensional features (“factors”), each of which corresponds to an assembly of cells with rapid, common within-trial dynamics and slower across-trial dynamics. We show that TCA corresponds to a multi-dimensional generalization of gain modulation, a phenomenon that is widely observed across neural circuits (Salinas and Thier, 2000; Carandini and Heeger, 2011). In particular, TCA compactly describes trial-to-trial variability of each cell assembly by differentially gain modulating its common within-trial dynamics across trials. As a result, TCA achieves a simultaneous, interlocked dimensionality reduction across neurons, time, and trials. Furthermore, unlike PCA, the factors returned by TCA need not be orthogonal (Kruskal, 1977). Because of this property, we show that TCA can achieve a demixing of neural data in which individual factors can tightly correspond with interpretable variables such as sensations, decisions, actions, and rewards.

We demonstrate the practical utility of TCA in three diverse contexts. First, in an artificial neural circuit trained to solve a sensory discrimination task, we show that TCA yields a simple one-dimensional description of the evolving connectivity and dynamics of the circuit during learning. Next, in a maze navigation task in rodents, we show that TCA can recover several aspects of trial structure and behavior, including perceptions, decisions, rewards, and errors, in an unsupervised, data-driven fashion. Finally, for a monkey operating a brain-machine interface (BMI), we show that TCA extracts a simple view of motor learning when the BMI is altered to change the relationship between neural activity and motor action.

RESULTS

Discovering Multi-timescale Structure through TCA

Before describing TCA, we first review the application of PCA to large-scale neural data analysis. Consider a recording of N neurons over K experimental trials. We assume neural activity is recorded at T time points within each trial, but trials of variable duration can be aligned or temporally warped to accommodate this constraint (see, e.g., Kobak et al., 2016). This dataset is naturally represented as an N_×_T_×_K array of firing rates, which is known in mathematics as a third-order tensor. Each element in this tensor, x ntk, denotes the firing rate of neuron n at time t within trial k. Here, the indices n, t, and k each range from 1 to N, T, and K, respectively.

Such large data tensors are challenging to interpret. Even nominally identical trials (e.g., neural responses to repeats of an identical stimulus) can exhibit significant trial-to-trial variability (Goris et al., 2014). Under the assumption that such variability is simply irrelevant noise, a common method to simplify the data is to average across trials, obtaining a two-dimensional table, or matrix, x¯nt, which holds the trial-averaged firing rates for every neuron n and time point t (Figure 1A). Even such a matrix can be difficult to understand in large-scale experiments containing many neurons with rich temporal dynamics.

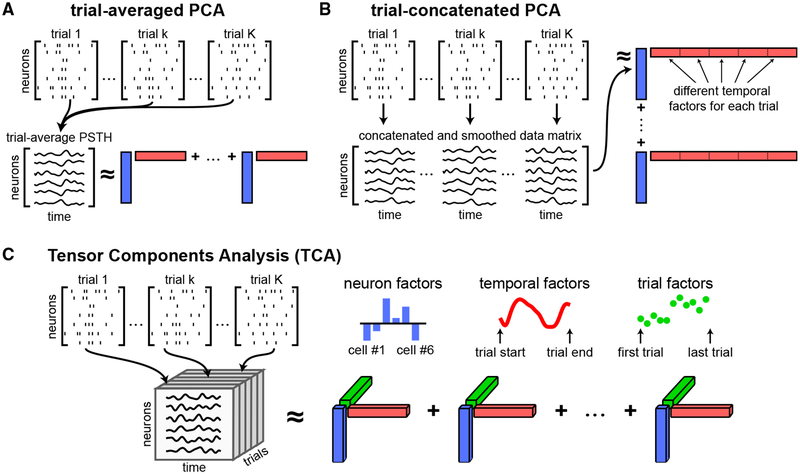

Figure 1. Tensor Representation of Trial-Structured Neural Data.

(A) Schematic of trial-averaged PCA for spiking data. The data are represented as a sequence of N_×_T matrices (top). These matrices are averaged across trials to build a matrix of trial-averaged neural firing rates. PCA approximates the trial-averaged matrix as a sum of outer products of vectors (Equation 1). Each outer product contains a neuron factor (blue rectangles) and a temporal factor (red rectangles).

(B) Schematic of trial-concatenated PCA for spiking data. Data may be temporally smoothed (e.g., by a Gaussian filter) to estimate neural firing rates before concatenating all trials along the time axis. Applying PCA produces a separate set of temporal factors for each trial (subsets of the red vectors).

(C) Schematic of TCA. Data are organized into a third-order tensor with dimensions N × T × K. TCA approximates the data as a sum of outer products of three vectors, producing an additional set of low-dimensional factors (trial factors, green vectors) that describe how activity changes across trials.

PCA summarizes these data by performing a decomposition into R components such that

| x¯nt≈∑r=1Rwnrbtr. | (Equation 1) |

|---|

This approximation is fit to minimize the sum-of-squared reconstruction errors (STAR Methods). This decomposition projects the high-dimensional data (with N or T dimensions) into a low-dimensional space (with R dimensions). Each component, indexed by r, contains a coefficient across neurons, w r n, and a coefficient across time points, b r t. These terms can be collected into vectors: wr, of length N, which we call “neuron factors” (blue vectors in Figure 1), and br, of length T, which we call “temporal factors” (red vectors in Figure 1). The neuron factors can be thought of as an ensemble of cells that exhibit correlated firing. The temporal factors can be thought of as a trial-averaged dynamical activity pattern for each ensemble. Overall, trial-averaged PCA reduces the original N_×_T_×_K data points into R (N + T) values, yielding a compact, and often insightful, summary of the trial-averaged data (Cunningham and Yu, 2014; Gao and Gang-uli, 2015).

Trial-averaging is motivated by the assumption that trial-to-trial variability is irrelevant noise. However, such variability may instead reveal important neural dynamics. For instance, trial-to-trial variability may reflect fluctuations in interesting cognitive states, such as attention or arousal (Cohen and Maunsell, 2010, 2011; Niell and Stryker, 2010), or the slow emergence of learned dynamics (Peters et al., 2014), or drifting internal representations of stable behaviors (Driscoll et al., 2017). Ideally, we would like unbiased, data-driven methods to extract such dynamics simply by analyzing the data tensor.

One approach to retain variability across trials is to concatenate multiple trials rather than averaging, thereby transforming the data tensor into an N_×_TK matrix, and then applying PCA to this matrix (Figure 1B). This approach, which we call “trial-concatenated PCA,” is similar to GPFA (Yu et al., 2009). In the context of spiking data, trial-concatenated PCA typically involves pre-smoothing the spike trains (e.g., with a Gaussian filter of a chosen width), while GPFA performs a more general optimization to identify optimal smoothing parameters. In both methods, the R temporal factors are of length TK and do not enforce any commonality across trials. They therefore achieve a less significant reduction in the complexity of the data: the NTK numbers in the original data tensor are only reduced to R(N + TK) numbers. While these methods can describe single-trial dynamics, they can be cumbersome in experiments consisting of thousands of trials.

Our proposal is to perform dimensionality reduction directly on the original neural data tensor (Figure 1C), rather than first converting it to a matrix. This TCA method then yields the decomposition (Harshman, 1970; Carroll and Chang, 1970; Kolda and Bader, 2009)

| x¯ntk≈∑r=1Rwnrbtrakt. | (Equation 2) |

|---|

In analogy to PCA, we can think of wr as a prototypical firing rate pattern across neurons, and we can think of br as a temporal basis function across time within trials. These neuron factors and temporal factors constitute structure that is common across all trials. We call the third set of factors, ar, “trial factors” (green vectors in Figure 1), which are new to TCA and not present in PCA. The trial factors can be thought of as trial-specific amplitudes for the within-trial activity patterns identified by the neuron and temporal factors. Thus, in TCA, the trial-to-trial fluctuations in neural activity are also embedded in _R_-dimensional space. TCA achieves a dramatic reduction of the original data tensor, reducing NTK data points to R (N + T + K) values, while still capturing trial-to-trial variability.

An important feature of PCA is that it requires both the neuron (wr) and temporal (br) factors to be orthogonal sets of vectors to yield a unique solution. This assumption is, however, motivated by mathematical convenience rather than biological principles. In real biological circuits, cell ensembles may overlap and temporal firing patterns may be correlated, producing non-orthogonal structure that cannot be recovered by PCA. An important advantage of TCA is that it does not require orthogonality constraints to yield a unique solution (Kruskal, 1977; Qi et al., 2016) (STAR Methods). Below, we demonstrate that this theoretical advantage enables TCA to demix neural data in addition to reducing its dimensionality. In particular, on a range of datasets, TCA can recover non-orthogonal cell ensembles and firing patterns that map onto interpretable task variables, such as trial conditions, decisions, and rewards, while PCA recovers features that encode complex mixtures of these variables (Kobak et al., 2016).

TCA as a Generalized Cortical Gain Control Model

Although TCA was originally developed as a statistical method (Harshman, 1970; Carroll and Chang, 1970), here we show that it concretely relates to a prominent theory of neural computation when applied to multi-trial datasets. In particular, performing TCA is equivalent to fitting a gain-modulated linear network model to neural data. In this network model, N observed neurons (light gray circles, Figure 2A) are driven by a much smaller number of R unobserved, or latent, inputs (dark gray circles, Figure 2A) that have a fixed temporal profile but have varying amplitudes for each trial. The neuron factors of TCA, w r n in Equation 2, correspond to the synaptic weights from each latent input r to each neuron n. The temporal factors of TCA, b r t, correspond to basis functions or the activity of input r at time t. Finally, the trial factors of TCA, a r k, correspond to amplitudes, or gain, of latent input r on trial k. Such trial-to-trial fluctuations in amplitude have been observed in a variety of sensory systems (Dean et al., 2005; Niell and Stryker, 2010; Kato et al., 2013; Goris et al., 2014) and are believed to be an important and ubiquitous feature of cortical circuits (Salinas and Thier, 2000; Carandini and Heeger, 2011). The TCA model can be viewed as an _R_-dimensional generalization of such theories. By allowing an _R_-dimensional space of possible gain modulations to different temporal factors, TCA can capture a rich diversity of changing multi-neuronal activity patterns across trials.

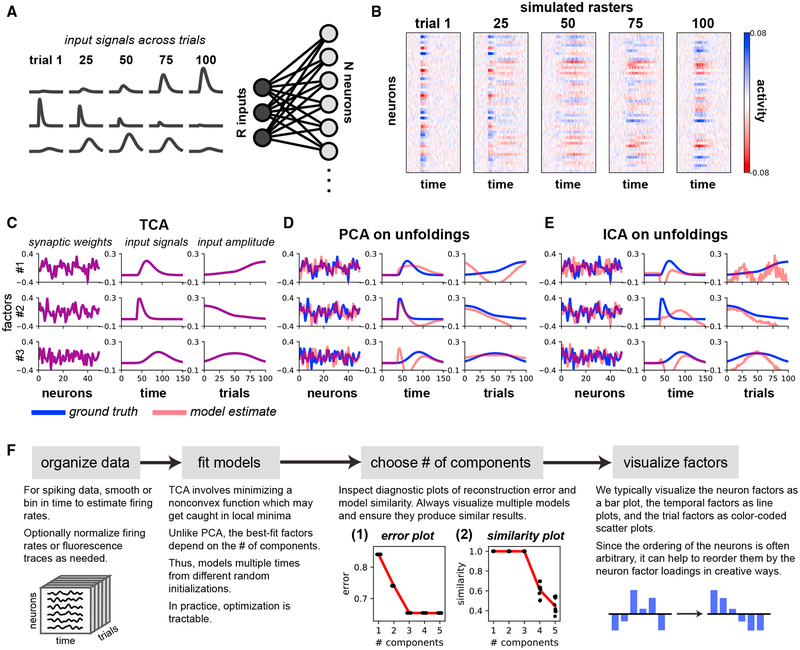

Figure 2. TCA Precisely Recovers the Parameters of a Linear Model Network.

(A) A gain-modulated linear network, in which R = 3 input signals drive N = 50 neurons by linear synaptic connections. Gaussian noise was added to the output units.

(B) Simulated activity of all neurons on example trials.

(C) The factors identified by a three-component TCA model precisely match the network parameters.

(D and E) Applying PCA (D) or ICA (E) to each of the tensor unfoldings does not recover the network parameters.

(F) Analysis pipeline for TCA. Inset 1: error plots showing normalized reconstruction error (vertical axis) for TCA models with different numbers of components (horizontal axis). The red line tracks the minimum error (i.e., best-fit model). Each black dot denotes a model fit from different initial parameters. All models fit from different initializations had essentially identical performance. Reconstruction error did not improve after more than three components were included. Inset 2: similarity plot showing similarity score (STAR Methods; vertical axis) for TCA models with different numbers of components (horizontal axis). Similarity for each model (black dot) is computed with respect to the best-fit model with the same number of components. The red line tracks the mean similarity as a function of the number of components. Adding more than three components caused models to be less reliably identified.

An important property of TCA, due to its uniqueness conditions (Kruskal, 1977) (STAR Methods), is that it can directly identify the parameters of this network model purely from the simulated data, even when the ground-truth parameters are not orthogonal. We confirmed this in a simple simulation with three latent inputs/components. In this example, the first component grows in amplitude across trials, the second component shrinks, and the third component grows and then shrinks in amplitude (Figure 2A). This model generates rich simulated population activity patterns across neurons, time, and trials as shown in Figure 2B, where we have added Gaussian white noise to demonstrate the robustness of the method. A TCA model with R = 3 components precisely extracted the network parameters from these data (Figure 2C).

In contrast, neither PCA nor independent component analysis (ICA) (Bell and Sejnowski, 1995) can recover the network parameters, as demonstrated in Figures 2D and 2E, respectively. Unlike TCA, both PCA and ICA are fundamentally matrix, not tensor, decomposition methods. Therefore they cannot be applied directly to the data tensor, but instead must be applied to three different matrices obtained by tensor unfolding (Figure S1). In essence, the unfolding procedure generalizes the trial-concatenated representation of the data tensor (Figure 1B) to allow concatenation across neurons or time points. This unfolding destroys the natural tensor structure of the data, thereby precluding the possibility of finding the ground-truth synaptic weights, temporal basis functions, and trial amplitudes that actually generated observed neural activity patterns.

Choosing the Number of Components

A schematic view of the process of applying TCA to neural data is shown in Figure 2F (see STAR Methods for more details). As in PCA and many other dimensionality reduction methods, a critical issue is the choice of the number of components, or dimensions R. We employ two methods to inform this choice. First, we inspect an error plot (Figure 2F, inset), which displays the model reconstruction error as a function of the number of components R. We normalize the reconstruction error to range between zero and one, which provides a metric analogous to the fraction of unexplained variance often used in PCA. As in PCA, a kink or leveling out in this plot indicates a point of diminishing returns for including more components.

Unlike PCA, the optimization landscape of TCA may have suboptimal solutions (local minima), and there is no guarantee that optimization routines will find the best set of parameters for TCA. Thus, we run the optimization algorithm underlying TCA at each value of R multiple times from random initial conditions, and plot the normalized reconstruction error for all optimization runs. This procedure allows us to check whether some runs converge to local minima with high reconstruction error. As shown in Figure 2F (inset), the error plot reveals that all runs at fixed R yield the same error, and moreover, the kink in the plot unambiguously reveals R = 3 as the true number of components in the generated data, in agreement with the ground truth. This result suggests that all local minima in the TCA optimization landscape are all similar to each other, and thus presumably similar to the global minimum. Later, we show similar results on a variety of large-scale neural datasets, suggesting that TCA is generally easy to optimize in settings of interest to neuroscientists.

A second method to assess the number of components involves generating a similarity plot (Figure 2F, inset), which dis plays how sensitive the recovered factors are to the initialization of the optimization procedure. For each component, we compute the similarity of all fitted models to the model with lowest reconstruction error by a similarity score between zero (orthogonal factors) and one (identical factors). Adding more components can produce lower similarity scores, since multiple low-dimensional descriptions may be consistent with the data. Like the error plot, the similarity plot unambiguously reveals R = 3 as the correct number of components, as decompositions with R > 3 are less consistent with each other (Figure 2F, inset). Notably, all models with R = 3 converge to identical components (up to permutations and re-scalings of factors), suggesting that only a single low-dimensional description, corresponding to the ground-truth network parameters, achieves minimal reconstruction error. TCA consistently identifies this solution across multiple optimization runs.

TCA Elucidates Learning Dynamics, Circuit Connectivity, and Computational Mechanism in a Nonlinear Network

While TCA corresponds to a linear gain-modulated network, it can nevertheless reveal insights into the operation of more complex nonlinear networks, analogous to how PCA, a linear dimensionality reduction technique, allows visualization of low-dimensional nonlinear neural trajectories. We examined the application of TCA to nonlinear recurrent neural networks (RNNs), a popular class of models in machine learning, which have also been used to model neural dynamics and behavior (Sussillo, 2014; Song et al., 2016). Such models are so complex that they are often viewed as “black boxes.” Methods that shed light on the function of RNNs and other complex computational models are therefore of great interest. While previous studies have focused on reverse-engineering trained RNNs (Sussillo and Barak, 2013; Rivkind and Barak, 2017), few works have attempted to characterize how computational mechanisms in RNNs emerge over the process of learning, or optimization, of network parameters. Here we show TCA can characterize RNN learning in a simple sensory discrimination task, analogous to the well-known random dots direction-discrimination task (Britten et al., 1992).

Specifically, we trained an RNN with 50 neurons to indicate whether a noisy input signal had net positive or negative activity over a short time window, by exciting or inhibiting an output neuron (Figure 3A). We call trials with a net positive or negative input (+)-trials or (−)-trials, respectively. The average amplitude of the input can be viewed as a proxy for the average motion energy of moving dots, with ± corresponding to left/right motion, for example. We initialized the synaptic weights to small values (close to zero) so that the network began in a non-chaotic dynamical regime. The weights were updated by a gradient descent rule using backpropagation through time on a logistic loss function. Within 750 trials the network performed the task with virtually 100% accuracy (Figure 3B).

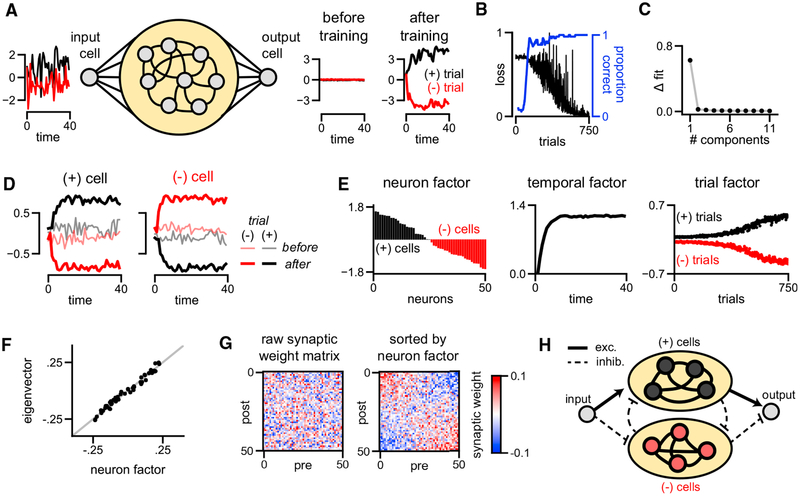

Figure 3. Unsupervised Discovery of Low-Dimensional Learning Dynamics and Mechanism in a Model RNN.

(A) Model schematic. A noisy input signal is broadcast to a recurrent population of neurons with all-to-all connectivity (yellow oval). On (+)-trials the input is net positive (black traces), while on (−)-trials the input is net negative (red traces). The network is trained to output the sign of the input signal with a large magnitude.

(B) Learning curve for the model, showing the objective value on each trial over learning.

(C) Plot showing the improvement in normalized reconstruction error as more components are added to the model.

(D) An example (+)-cell and (−)-cell before and after training on both trial types. Black traces indicate (+)-trials, and red traces indicate (−)-trials.

(E) Factors discovered by a one-component TCA model applied to simulated neuron activity over training. The neuron factor identifies (+)-cells (black bars) and (−)-cells (red bars), which have opposing correlations with the input signal. These two populations naturally exist in a randomly initialized network (trial 0), but become separated after during training, as described by the trial factor.

(F) The neuron factor identified by TCA closely matches the principal eigenvector of the synaptic connectivity matrix post-learning.

(G) The recurrent synaptic connectivity matrix post-learning. Resorting the neurons by their order in the neuron factor in (E) uncovers competitive connectivity between the (+)-cells and (−)-cells.

(H) Simplified diagram of the learned mechanism for this network.

Remarkably, TCA needed only a single component to capture both the within-trial decision-making dynamics and the across-trial learning dynamics of this network. Adding more components led to negligible improvements in reconstruction error (Figure 3C). A single-component TCA model makes two strong predictions about this dataset. First, within all trials, the time course of evidence integration is shared across all neurons and is not substantially affected by training. Second, across trials, the amplitude of single-cell responses is simply scaled by a common factor during learning. In essence, prior to learning, all cells have some small, random preference for one of the two input types, and learning corresponds to simply amplifying these initial tunings. We visually confirmed this prediction by examining single-trial responses of individual cells. We observed two cell types within this model network: cells that were excited on (+)-trials and inhibited on (−)-trials (which we call (+)-cells; Figure 3D, left), and cells that were excited on (−)-trials and inhibited on (+)-trials (which we call (−)-cells; Figure 3D, right). The response amplitudes of both cell types magnified over learning, and typically the initial tuning (pale lines) aligned with the final tuning (dark lines). These trends are visible across the full population of cells in the network (Figure S2).

We then visualized the three factors of the single-component TCA model (Figure 3E). We sorted the cells by their weight in the neuron factor and plotted this factor, w1, as a bar plot (Figure 3E, left). Neurons with a positive weight correspond to (+)-cells (black bars) defined earlier, while neurons with a negative weight correspond to (−)-cells (red bars). While it is conceptually helpful to discretely categorize cells, the neuron factor illustrates that the model cells actually fall along a continuous spectrum rather than two discrete groups. The temporal basis function extracted by TCA, b1, reveals a common dynamical pattern within all trials corresponding to integration to a bound (Figure 3E, middle), similar to the example cells shown in Figure 3D. Finally, the trial factor of TCA, a1, recovered two important aspects of the neural dynamics (Figure 3E, right). First, the trial amplitude is positive for (+)-trials (black points) and negative for (−)-trials (red points), thereby providing a direct readout of the input on each trial. Second, over the course of learning, these two trial types become more separated, reflecting stronger internal responses to the stimulus and a more confident prediction at the output neuron. This reveals that the process of learning simply involves monotonically amplifying small and random initial selectivity for the stimulus into a strong final selectivity.

This analysis also sheds light on the synaptic connectivity and computational mechanism of the RNN. To perform the task, the network must integrate evidence for the sign of the noisy stimulus over time. Linear networks achieve this when the synaptic weight matrix has a single eigenvalue equal to one, and the remaining eigenvalues close to zero (Seung, 1996). The eigenvector associated with this eigenvalue corresponds to a pattern of activity across neurons along which the network integrates evidence. The nonlinear RNN converged to a similar solution where one eigenvalue of the connectivity matrix is close to one, and the remaining eigenvalues are smaller and correspond to random noise in the synaptic connections (Figure S2A). Although the TCA model was fit only to the activity of the network, the prototypical firing pattern extracted by TCA in Figure 3E (left) closely matched the principal eigenvector of the network’s synaptic connectivity matrix (Figure 3F). Thus, TCA extracted an important aspect of the network’s connectome from the raw simulated activity.

The neuron factor can also be used to better visualize and interpret the weight matrix itself. Since the original order of the neurons is arbitrary, the raw synaptic connectivity matrix appears to be unstructured (Figure 3G, left). However, re-sorting the neurons based on the neuron factor extracted by TCA reveals a competitive connectivity between the (+)-cells and (−)-cells (Figure 3G, right). Specifically, neurons tend to send excitatory connections to cells in their same class, and inhibitory connections to cells of the opposite class. We also observed positive correlations between the neuron factor and the input and output synaptic weights of the network (Figures S2B and S2C). Taken together, these results provide a simple account of network function in which the input signal excites (+)-cells and inhibits (−)-cells on (+)-trials, and vice versa on (−)-trials. The two cell populations then compete for dominance in a winner-take-all fashion. Finally, the decision of the network is broadcast to the output cell by excitatory projections from the (+)-cells and inhibitory projections from the (−)-cells (Figure 3H).

In summary, TCA extracts a one-dimensional description of the activity of all neurons over all trials in this nonlinear network. Each of the three TCA factors has a simple interpretation: the neuron factor w1 reveals a continuum of cell types related to stimulus selectivity, the temporal factor b1 describes neural dynamics underlying decision making, and the trial amplitudes a1 reflect the trial-by-trial decisions of the network, as well as the slow amplification of stimulus selectivity underlying learning. Finally, even though the TCA factors were found in an unsupervised fashion from the raw neural activity, they provide direct insights into the synaptic connectivity and computational mechanism of the network.

TCA Compactly Represents Prefrontal Activity during Spatial Navigation

After motivating and testing the abilities of TCA on artificial network models, we investigated its performance on large-scale experimental datasets. We first examined the activity of cortical cells in mice during a spatial navigation task. A miniature micro-endoscope (Ghosh et al., 2011) was used to record fluorescence in GCaMP6m-expressing excitatory neurons in the medial pre-frontal cortex while mice navigated a four-armed maze. Mice began each trial in either the east or west arm and chose to visit either the north or south arm, at which point a water reward was either dispensed or withheld (Figures 4A and 4B). We examined a dataset from a mouse containing N = 282 neurons recorded at T = 111 time points (at 10 Hz) on K = 600 behavioral trials, collected over a 5 day period. The rewarded navigational rules were switched periodically, prompting the mouse to explore different actions from each starting arm. Fluorescence traces for each neuron were shifted and scaled to range between zero and one in each session, and organized into an N_×_T_×_K tensor.

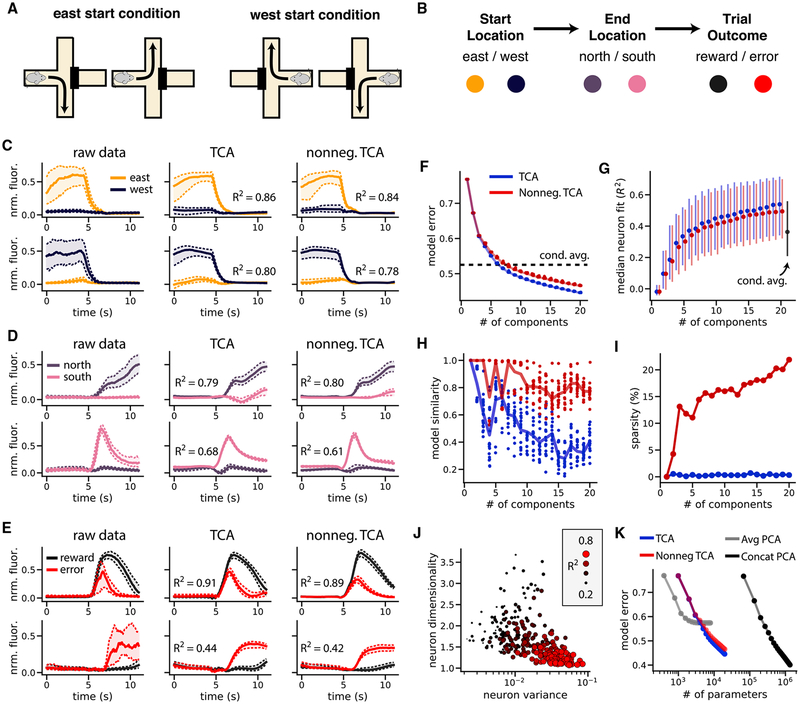

Figure 4. Reconstruction of Single-Cell Activity during Spatial Navigation by Standard and Nonnegative TCA.

(A) All four possible combinations of starting and ending position on a trial.

(B) Color scheme for three binary task variables (start location, end location, and trial outcome). Each trial involves a sequential selection of these three variables. (C–E) Average fluorescence traces and TCA reconstructions of example neurons encoding start location (C), end location (D), and reward (E). Solid line denotes median fluorescence across trials; dashed lines denote upper and lower quartiles.

(F) Error plot showing normalized reconstruction error for standard (blue) and nonnegative (red) TCA, and the condition-averaged baseline model (black dashed line). Models were optimized from 20 initial parameters; each dot corresponds to a different optimization run.

(G) Median coefficient of determination (_R_2) for neurons as a function of the number of model components for standard TCA (blue), nonnegative TCA (red), and the condition-averaged baseline (black). Dots show the median _R_2 and the extent of the lines shows the first and third quartiles of the distribution.

(H) Model similarity as a function of model components for standard (blue) and nonnegative (red) TCA. Each dot shows the similarity of a single optimization run compared to the best-fit model within each category.

(I) Sparsity (proportion of zero elements) in the neuron factors of standard (blue) and nonnegative (red) decompositions. For each decomposition type, only the best-fit models are shown.

(J) Neuron dimensionality plotted against variance in activity. The size and color of the dots represent the _R_2 of a nonnegative decomposition with 15 components.

(K) Normalized reconstruction error plotted against number of free parameters for trial-averaged PCA, trial-concatenated PCA, and TCA.

We observed that many neurons preferentially correlated with individual task variables on each trial: the initial arm of the maze (Figure 4C), the final arm (Figure 4D), and whether the mouse received a reward (Figure 4E). Many neurons—particularly those with strong and robust coding properties—varied most strongly in amplitude across trials, suggesting that low-dimensional gain modulation is a reasonable model for these data. A TCA model with 15 components accurately modeled the activity of these individual cells and recovered their coding properties (Figures 4C–4E, middle column).

Since the fluorescence traces were normalized to be nonnegative, we also investigated the performance of nonnegative TCA, which is identical to standard TCA, but in addition constrains the neuron, temporal, and trial factors to have nonnegative elements. Despite this additional constraint, nonnegative TCA with 15 components reconstructed the activity of individual neurons with similar fidelity to the standard TCA model (Figures 4C–4E, right column).

We compared standard and nonnegative TCA to a condition-aware baseline model, which used the trial-average population activity within each task condition to predict individual trials. Specifically, we computed the mean activity within each of the eight possible combinations of start locations, end locations, and trial outcomes, and used this to predict single-trial data. In essence, this baseline captures the average effect of all task variables, but does not account for trial-to-trial variability within each combination of task variables.

An error plot for standard and nonnegative TCA showed three important findings (Figure 4F). First, nonnegative TCA had similar performance to standard TCA in terms of reconstruction error (small gap between red and blue lines, Figure 4F). Second, both forms of TCA converged to similar reconstruction error from different random initializations, suggesting that the models did not get caught in highly suboptimal local minima during optimization (Figure 4F). Third, TCA models with more than six components matched or surpassed the condition-aware baseline model, suggesting that relatively few components were needed to explain task-related variance in the dataset (dashed black line, Figure 4F). We also examined the performance of nonnegative and standard TCA in terms of the _R_2 of individual neurons. Again, nonnegative TCA performed similarly to standard TCA under this metric, and both models surpassed the condition-aware baseline if they included more than six components (Figure 4G).

In addition to achieving similar accuracy to standard TCA, nonnegative TCA possesses two important advantages. First, a similarity plot showed that nonnegative TCA converged more consistently to a similar set of low-dimensional components (Figure 4H). This empirical result agrees with existing theoretical work, which has proven even stronger uniqueness conditions for nonnegative TCA, compared to what has been proven for standard TCA (Qi et al., 2016). Second, the components recovered by nonnegative TCA were more sparse (i.e., contained more zero entries than standard TCA; Figure 4I). Sparse and nonnegative components are generally simpler to interpret, as relatively unimportant model parameters are set to zero and only additive interactions between components remain (Lee and Seung, 1999). For these reasons, we chose to focus on nonnegative TCA for the remaining analysis of this dataset.

While TCA accurately reconstructed the activity of many cells (Figures 4C–4E), others were more difficult to fit (Figure S3). However, we observed that neurons with low _R_2 had firing patterns that were unreliably timed across trials and did not correlate with task variables (Figure S3B). To visualize this, we plotted the total variance and the dimensionality of each cell’s activity against the fit of a nonnegative TCA model with 15 components (Figure 4J). The dimensionality of each cell’s activity (STAR Methods) measures the trial-to-trial reliability of a cell’s firing: cells that fire consistently at the same time in each trial will be low-dimensional relative to cells that fire at different time points in each trial. First, this plot shows a negative correlation between variance and dimensionality: cells with higher variance (larger dynamic ranges in fluorescence) tended to be lower dimensional and thus more reliably timed across trials. Second, this plot shows these low-dimensional cells were well fit by TCA, suggesting that TCA summarizes the information encoded most reliably and strongly by this neural population. Moreover, outlier cells that defy a simple statistical characterization can be algorithmically identified and flagged for secondary analysis by sorting neurons by their _R_2 score under TCA.

TCA’s performance in summarizing neural population activity with very few parameters exceeds that of trial-averaged PCA, which has sub-par performance, and trial-concatenated PCA, which requires many more parameters to achieve similar perfor mance. This comparison is summarized in Figure 4K, which plots reconstruction error against the number of free parameters for each class of models. Trial-averaged PCA (Figure 4K, gray line) has fewer parameters than TCA, but cannot achieve much lower than 60% error, since it cannot capture trial-by-trial neural firing patterns. In contrast, trial-concatenated PCA (Figure 4K, black line) had comparable performance to TCA but required roughly 100 times more free parameters, and is therefore much less interpretable. A TCA model with 15 components reduces the complexity of the data by 3 orders of magnitude, from ~107 data points to ~104 parameters, whereas a trial-concatenated PCA model with this many components only reduces the number of parameters to ~106.

Cross-validation Reveals TCA Is Unlikely to Overfit

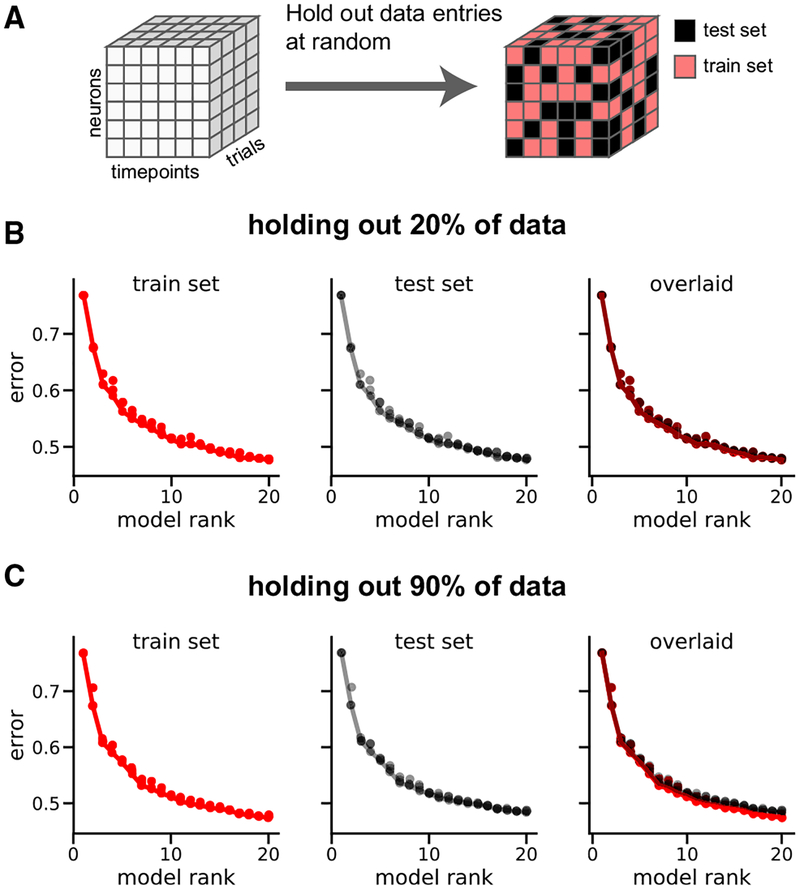

By outperforming the condition-average baseline (Figures 4F and 4G), we see that TCA discovers detailed features within single trials that would be obscured by averaging across trials of a given type. This raises the question of whether these detailed features are real, or if TCA is overfitting to noise and thereby identifying spurious factors. To answer this question, we developed a cross-validation procedure in which elements of the data tensor were held out at random (Figure 5A). We modified the optimization procedure for TCA (STAR Methods) to ignore these held-out entries and evaluated reconstruction error separately on the observed entries (training set) and held-out entries (test set) for TCA models ranging from R = 1 to R = 20 components. If TCA were overfitting, then increasing R above a certain point would cause larger reconstruction errors in the test set.

Figure 5. Cross-validation of Nonnegative TCA on the Rodent Pre-frontal Data.

(A) Schematic of cross-validation procedure, in which elements of the data tensor are held out at random with fixed probability.

(B) Normalized reconstruction error in training set (red) and test set (black) for nonnegative TCA models with a training set comprising 80% of the tensor.

(C) Same as (B), except only 10% of the tensor was used as the training set.

We first held out 20% of the data points and trained nonnegative TCA models on the remaining 80% of the data entries, which is consistent with a standard 5-fold cross-validation procedure. Strikingly, the reconstruction errors on the training set and the test set were nearly identical, showing that TCA is extremely robust to missing data (Figure 5B). To demonstrate this, we left out 90% of the entries in the tensor and trained the models on only 10% of the data points. Despite training the model on a small minority of the data entries, we observed only slight differences in performance on the training and test sets (Figure 5C). Furthermore, the reconstruction error on the test set monotonically decreased as a function of R, suggesting that TCA is still discovering real structure within the data up to (at least) 20 components. In the interest of interpretability, and since performance gains were diminishing, we restricted our attention to models with _R_≤20 for this study.

The remarkable robustness of TCA to holding out 90% of the training data can be understood as follows. Generally, the data tensor will contain NTK data points while the TCA model with R components has R(N + T + K) − 2_R_ free parameters (the −2_R_ correction is due to a scale invariance within each component; STAR Methods). Let p denote the probability that a data point is held out of the training set. Then, for the rodent prefrontal data-set, a TCA model with R = 20 components fit on 10% of the data has roughly

(1−p)⋅NTKR(N+T+K)−2R=(1−0.9)⋅282⋅111⋅60020(282+111+600)−40≈94.76

data points constraining each free parameter. This large ratio of data points to model parameters, even when 90% of the data are withheld, explains why TCA does not overfit. In general, by achieving a dramatic dimensionality reduction leading to compact descriptions of large datasets using very few parameters, TCA is unlikely to overfit on most modern neural datasets unless very large values of R are chosen.

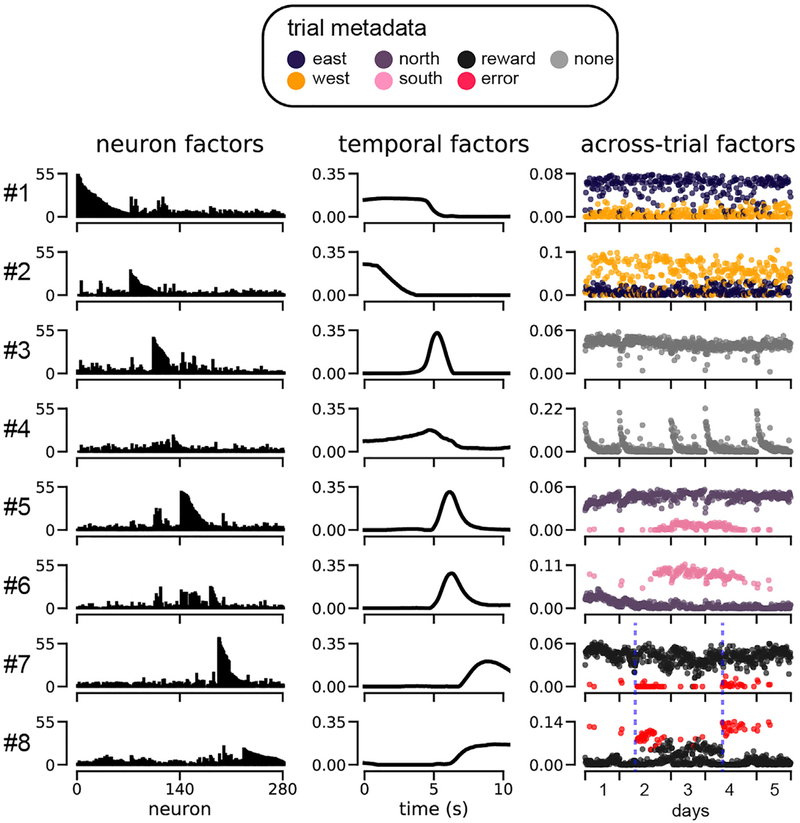

TCA Components Selectively Correlate with Task Variables

We next examined the neural, temporal, and across-trial factors directly to see whether the model provided an insightful summary of the neural activity patterns in prefrontal cortex. Figure 6 shows eight noteworthy components from a 15-component nonnegative TCA model (the remaining seven components carry similar information and are shown in Figure S4). Each nonnegative TCA component identified a sub-population, or assembly of cells (neuron factor; left column), with common intra-trial temporal dynamics (temporal factor; middle column), which were differentially activated across trials (trial factor; right column). We found that R = 15 components were enough to identify populations of neurons that encoded all levels of each task variable (start location, end location, and trial outcome). Qualitatively similar TCA factors were found for R <_ 15 and _R > 15 (Figure S5).

Figure 6. Nonnegative TCA of Rodent Prefrontal Cortical Activity during Spatial Navigation.

Eight low-dimensional components, each containing a neuron factor (left column), temporal factor (middle column), and trial factor (right column), are shown from a 15-component model. For each component, the trial factor is color-coded by the task variable it is most highly correlated with. These eight components were chosen to illustrate six factors that were modulated by the six meta-data labels (see legend) as well as factors #3 and #4, which demonstrate novel structure. The remaining seven components are shown in Figure S4. Blue dashed lines (bottom right) denote reward contingency shifts.

PCA factors contained complex mixtures of coding for the mouse’s position and reward outcomes, hampering interpret ability (FigureS6). Incontrast, TCA isolated each of these task variables into separate components: each trial factor selectively correlated with a single task variable, as indicated by the color-coded scatterplots in Figure 6. Overall, the TCA model uncovered a compelling portrait of prefrontal dynamics in which largely distinct subsets of neurons (Figure 6, left columns) are active at successive times within a trial (Figure 6, middle column) and whose variation across trials (Figure 6, right column) encoded individual task variables.

Specifically, components 1 and 2 uncover neurons that encode the starting location (component 1, east trials; component 2, west trials), components 5 and 6 encode the end location (component 5, north trials; component 6, south trials), and components 7 and 8 encode the trial outcome (component 7, rewarded trials; component 8, error trials). Interestingly, the temporal factors indicate that these components are sequentially activated in each trial: components 1 and 2 activated before components 5 and 6, which in turn activated before components 7 and 8, in agreement with the schematic flow diagram shown in Figure 4B.

TCA also uncovers unexpected components, like components 3 and 4, which activate prior to the destination and outcome-related components (i.e., components 5–8). Component 4 displays systematic reductions in activity across trials within each day, while component 3 is active on nearly every single trial. Component 4 could potentially correspond, for example, to a novelty or arousal signal that wanes over trials within a day. While further experiments are required to ascertain whether this interpretation is correct, the extraction of these components illustrates the potential power of TCA as an unbiased exploratory data analysis technique to extractcorrelates of unobserved cognitive states and separate them from correlates of observable behaviors.

It is important to emphasize that TCA is an unsupervised method that only has access to the neural data tensor, and does not receive any information about task variables like starting location, ending location, and reward. Therefore, the correspondence between TCA trial factors and behavioral information demonstrated in Figure 6 constitutes an unbiased revelation of task structure directly from neural data. Many unsupervised methods, such as PCA, do not share this property (Kobak et al., 2016).

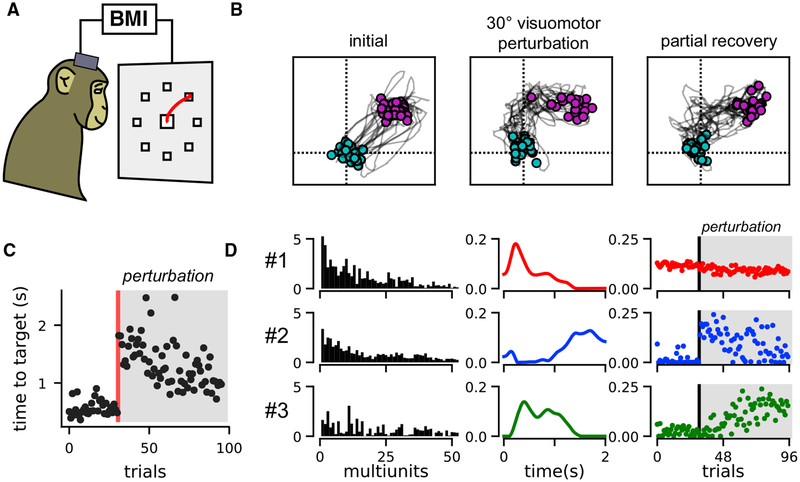

TCA Reveals Two-Dimensional Learning Dynamics in Macaque Motor Cortex after a BMI Perturbation

In the previous section, we validated TCA on a dataset where the animal’s behavior was summarized by a set of discrete labels (i.e., start location, end location, and trial outcome). We next applied TCA to a BMI learning task, in which the behavior on each trial was quantified by a continuous path of a computer cursor, which exhibited significant trial-to-trial variability. Multi-unit data were collected from the pre-motor and primary motor cortices of a Rhesus macaque (Macaca mulatta) controlling a computer cursor in a two-dimensional plane through a BMI (Figure 7A). Spikes were recorded when the voltage signal crossed below −4.5 times the root-mean-square voltage. The monkey was trained to make point-to-point reaches from a central position to one of eight radial targets. These data were taken from recently published work (Vyas et al., 2018).

Figure 7. TCA Reveals Two-Dimensional Learning Dynamics in Primate Motor Cortex during BMI Cursor Control.

(A) Schematic of monkey making center-out, point-to-point reaches in BMI task.

(B) Cursor trajectories to a 45° target position. Twenty trials are shown at three stages of the behavioral session showing initial performance (left), performance immediately after a 30° counterclockwise visuomotor perturbation (middle), and performance after learning, at the end of the behavioral session. Cyan and magenta points respectively denote the cursor position at the beginning and end of the trial.

(C) Time for the cursor to reach target for each trial in seconds. The visuomotor perturbation was introduced after 31 trials (red line).

(D) A three-component nonnegative TCA on smoothed multi-unit spike trains recorded from motor cortex during virtual reaches reveals two components (2 and 3) that capture learning after the BMI perturbation. The top 50 multi-units in terms of firing rate are shown, since many multi-units had very low activity levels.

For simplicity, we initially investigated neural activity during 45° outward reaches. The cursor velocity was controlled by a velocity Kalman filter decoder, which was driven by non-sorted multi-unit activity and fit using relations between neural activity and reaches by the monkey’s contralateral arm at the beginning of the experiment (Gilja et al., 2012). We analyzed multi-unit activity during subsequent reaches, which used this decoder as a BMI directly from neural activity to cursor motion. These initial reaches were accurate (Figure 7B, left) and took less than 1 s to execute (Figure 7C, first 30 trials).

We then perturbed the BMI decoder by rotating the output cursor velocities counterclockwise by 30° (a visuomotor rotation). Thus, the same neural activity pattern that originally caused a motion of the cursor toward the 45° direction now caused a maladaptive motion in the 75° direction, yielding an immediate drop in performance: the cursor trajectories were biased in the counterclockwise direction (Figure 7B, middle) and took longer to reach the target (Figure 7C, trials following perturbation). These deficits were partially recovered within a single training session as the monkey adapted to the new decoder. By the end of the session, the monkey made more direct cursor movements (Figure 7B, right) and achieved the target more quickly (Figure 7C).

We applied TCA and nonnegative TCA to the raw spike trains smoothed with a Gaussian filter with an SD of 50 ms (Kobak et al., 2016). We again found that nonnegative TCA fit the data with similar reconstruction error and higher reliability than standard TCA (Figures S7A and S7B). To examine a simple account of learning dynamics, we examined a nonnegative TCA model with three components. Models with fewer than three components had significantly worse reconstruction error, while models with more components had only moderately better performance and converged to dissimilar parameters during optimization (Figures S7A and S7B); cross-validation indicated that none of the models we considered suffered from overfitting (Figure S7C).

The neuron, temporal, and trial factors of a three-component nonnegative TCA model are shown in Figure 7D. Component 1 (red) described multiple units that were active at the beginning of each trial and were consistently active over all trials. The other two components described multi-units that were inactive before the BMI perturbation and became active only after the perturbation, thereby capturing motor learning. Component 2 (blue) became active on trials immediately after the perturbation, but then slowly decayed over successive trials. Within a single trial, this component was only active at late stages in the reach. Component 3 (green), on the other hand, was not active on trials immediately following the BMI perturbation, but activated slowly across successive trials. Within a single trial, this component was active earlier in the reach. Similar trends in the data emerged from examining an R = 2 and an R = 4 component nonnegative TCA model (Figure S7). Qualitatively, we found that models with R = 3 were most interpretable and reproducible across different reach angles.

The TCA factors suggest a model of motor learning in which a suboptimal, late reaching-stage correction is initially used to perform the task (component 2). Over time, this component is slowly traded for a more optimal early reaching-stage correction (component 3). Interestingly, motor learning did not involve extinguishing neural dynamics present before the perturbation (component 1), even though this component is maladaptive after the perturbation.

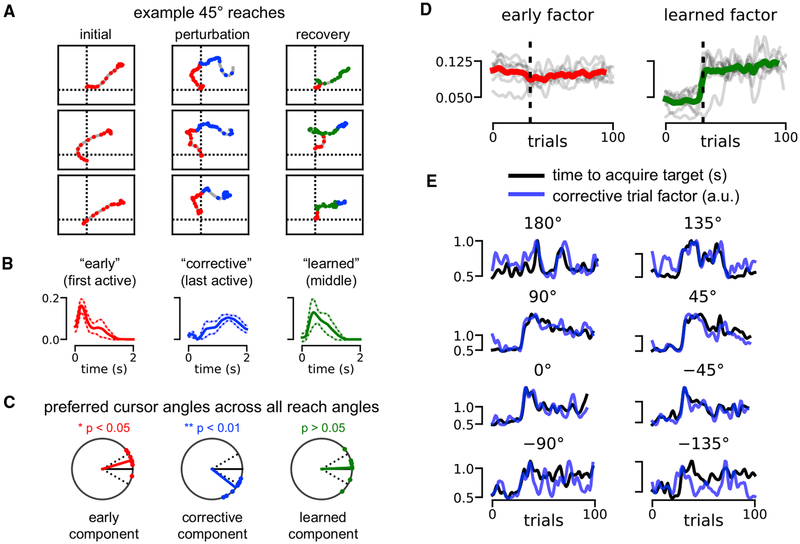

We confirmed this intuition by relating each TCA component to a different phase of motor execution and learning. Figure 8A plots example cursor trajectories on reaches before the perturbation (left), immediately following the perturbation (middle), and at the end of the behavioral session (right). The trajectories are colored at 50 ms intervals based on the component with the largest activation at that time point and trial. Prior to the perturbation, component 1 (red) dominated; the other two components were nearly inactive since their TCA trial factor amplitudes were near zero before the perturbation (Figure 7D). Immediately following the perturbation, component 1 still dominated in the early phase of each trial, producing a counterclockwise off-target trajectory. However, component 2 dominated the second half of each trial, at which point the monkey performed a “corrective” horizontal movement to compensate for the initial error. Finally, near the end of the training session, component 3 was most active at many stages of the reach. Typically, the cursor moved directly toward the 45° target when component 3 was active, suggesting that component 3 captured learned neural dynamics that were correctly adapted to the perturbed visuomotor environment.

Figure 8. TCA Tracks Performance and Uncovers “Corrective” Dynamics in BMI Adaptation Task.

(A) Cursor trajectories for 45° cursor reaches. Every 50 ms, the trajectory is colored by the TCA component with the strongest activation at that time point and trial. Components were colored according to the definition in (B). Three example trajectories are shown at three stages of the experiment: reaches before the visuomotor perturbation (left), reaches immediately following the perturbation (middle), and reaches at the end of the behavioral session.

(B) Average low-dimensional temporal factors identified by nonnegative TCA across all eight reach angles. The early component had the earliest active temporal factor (red). The corrective component had the last active temporal factor (blue). The learned component was the second active temporal factor (green). Solid and dashed lines denote mean ± SD.

(C) Preferred cursor angles for each component type after the visuomotor perturbation. All data were rotated so that the target reach angle was at 0° (solid black line). Dashed black lines denote ± 30° for reference, which was the magnitude of the visuomotor perturbation. On average, the early component was associated with a cursor angle misaligned counterclockwise from the target (red). The corrective component preferred angle was aligned clockwise from the target (blue) by about 30°, in a way that could compensate for the 30° counterclockwise misalignment of the early component. The learned component preferred angle is not significantly different from that of the actual target.

(D) Smoothed trial factors for the early component and learned component. Colored lines denote averages across all reach angles; gray lines denote the factors for each of the eight reach conditions. Factors were smoothed with a Gaussian filter with 1.5 SD for visualization purposes.

(E) Smoothed trial factor for the corrective component (blue) and smoothed behavioral performance (black) quantified by seconds to reach target. Each subplot shows data for a different reach angle. All signals were smoothed with a Gaussian filter with 1.5 SD for visualization.

Based on these observations, we called the component active at the beginning of each trial the early component (#1 in Figure 7), the component active at the end of each trial the corrective component (#2 in Figure 7), and the component active in the middle of each trial the learned component (#3 in Figure 7). These components are colored red, blue, and green, respectively, in both Figure 7 and Figure 8. We then fit three-component TCA models separately to each of the eight reach angles and operationally defined the components as early, corrective, and learned based on the peak magnitude of the within-trial TCA factor. That is, the early component was defined to be the one with the earliest peak in the within-trial factor, the corrective component was the one with the latest peak, and the learned component was the intermediate one (Figure 7B). This very simple definition yielded similar interpretations for low-dimensional components separately fit across different reach angles.

Similar to computing a directional tuning curve for an individual neuron (Georgopoulos et al., 1982), we examined the preferred cursor angles of each low-dimensional component by computing the average cursor velocity weighted by activity of the component (STAR Methods). To compare across all target reach angles, we rotated the preferred angles so that the target was situated at 0° (black line, Figure 8C). All preferred angles were computed on post-perturbation trials. When the early component was active, the cursor typically moved at an angle counterclockwise to the target (p < 0.05, one-sample test for the mean angle), reflecting our previous observation that the early component encodes pre-perturbation dynamics that are maladaptive post-perturbation (Figure 8C, left). When the corrective component was active, the cursor typically moved at an angle clockwise to the target (p < 0.01, one-sample test for the mean angle), reflecting a late-trial compensation for the error introduced by the early component (Figure 8C, middle). Finally, the learned component was not significantly different from the target angle, reflecting a tuning that was better adapted for the perturbed visuomotor environment.

Having established a within-trial interpretation for each component, we next examined across-trial learning dynamics. For visualization purposes, we gently smoothed all TCA trial factors by a Gaussian filter with an SD of 1.5 trials. Across all reach angles, the early component was typically flat and insensitive to the visuomotor perturbation (Figure 8D, left). In contrast, the learned component activated soon after the perturbation was applied, although the rapidness of this onset varied across reach angles (Figure 8D, right). Together, this reinforces our earlier observation that adaptation to the visuomotor rotation typically involves the production of new neural dynamics (captured by the learned component), rather than the suppression of mal-adaptive dynamics (captured by the early component).

Finally, the corrective component was consistently correlated with the animal’s behavioral performance on all reach angles (p < 0.05, Spearman’s rho test). Since performance differed across reach angles, we separately plotted the corrective component (blue) against the time to acquire the target (black) for each reach angle (Figure 8E). Strikingly, in many cases, the corrective component provided an accurate trial-by-trial prediction of the reach duration, meaning that trials with a large corrective movement took longer to execute.

Together, these results demonstrate that TCA can identify both learning dynamics across trials and single-trial neural dynamics. Indeed, each trial factor can be related to within-trial behaviors, such as error-prone cursor movements and their subsequent correction. Furthermore, these interpretations largely replicate across all eight reach angles, despite differences in the learning rate within each of these conditions. Finally, a single corrective trial factor, extracted only from neural data, can directly predict execution time on a trial-by-trial basis, without ever having direct access to this aspect of behavior (Figure 8E).

DISCUSSION

Recent experimental technologies enable us to record from more neurons, at higher temporal precision, and for much longer time periods than ever before (Marblestone et al., 2013; Kim et al., 2016; Pachitariu et al., 2016; Jun et al., 2017; Lin and Schnitzer, 2016; Lütcke et al., 2013; Dhawale et al., 2017), thereby simultaneously increasing the size and complexity of datasets along three distinct modes. Yet experimental investigations of neural circuits are often confined to single timescales (e.g., by trial-averaging), even though bridging our understand ing across multiple timescales is of great interest (Lütcke et al., 2013). Here we demonstrated a unified approach, TCA, that simultaneously recovers low-dimensional and interpretable structure across neurons, time within trials, and time across trials.

TCA and other tensor analysis methods have been extensively studied from a theoretical perspective (Kruskal, 1977; Kolda, 2003; Lim and Comon, 2009; Hillar and Lim, 2013; Qi et al., 2016), and have been applied to a variety of biomedical problems (Omberg et al., 2007; Cartwright et al., 2009; Hore et al., 2016). Several studies have applied tensor decompositions to EEG and fMRI data, most typically to model differences across subjects or Fourier/wavelet transformed signals (Mørup et al., 2006; Acar et al., 2007; Cong et al., 2015; Hunyadi et al., 2017), rather than across trials (Andersen and Rayens, 2004; Mishne et al., 2016). A recent study examined trial-averaged neural data across multiple neurons, conditions, and time within trials as a tensor, but did not study trial-to-trial variability, and only examined different unfoldings of the data tensor into matrices, rather than applying TCA directly to the data tensor (Seely et al., 2016). Other studies have modeled sensory receptive fields as low-rank third-order tensors (Ahrens et al., 2008; Rabinowitz et al., 2015). We go beyond previous work by applying TCA to a broader class of artificial and experimental datasets, drawing a novel connection between TCA and existing theories of gain modulation, and demonstrating that visualization and analysis of the TCA factors can directly yield functional clustering of neural populations (cell assemblies) as well as reveal learning dynamics on a trial-by-trial basis.

In addition to the empirical success of TCA in diverse scenarios presented here, there are three other reasons we expect TCA to have widespread utility in neuroscience. First, TCA is arguably the simplest generalization of PCA that can handle trial-to-trial variability. Given the widespread utility of PCA, we believe that TCA may also be widely applicable, especially as large-scale, long-term recording technologies become more accessible. Second, in contrast to more complex single-trial models, TCA is highly interpretable as a simple network with gain-modulated inputs (Figure 2). Third, while TCA is a simple generalization of PCA, its theoretical properties are strikingly more favorable. Unlike PCA, TCA does not require the recovered factors to be orthogonal in order to obtain a unique solution (STAR Methods; Kruskal, 1977; Qi et al., 2016). The practical outcome of this theoretical advantage was first demonstrated in Figure 2, where the individual factors recovered from neural firing rates matched the underlying parameters of the model neural network in a one-to-one fashion. Similarly, in the rodent prefrontal analysis, TCA uncovered demixed factors that individually correlated with interpretable task variables (Figure 6), whereas PCA recovered mixed components (Figure S6). And finally, when applied to neural activity during BMI learning, TCA consistently found, across multiple reach angles, a “corrective factor” that significantly correlated with behavioral performance on a trial-by-trial basis (Figure 8).

In this paper, we examined the simplest form of TCA by making no assumptions about the temporal dynamics of neural activity within trials or the dynamics of learning across trials. As a result, we obtain extreme flexibility: for example, trial factors could be discretely activated or inactivated on each trial (Figure 6), or they might emerge incrementally over longer time-scales (Figure 7). However, future work could augment TCA with additional structure and assumptions, such as a smoothness penalty or dynamical system structure within trials (Yu et al., 2009).

Further work in this direction could connect TCA to a large body of work on fitting latent dynamical systems to reproduce within-trial firing patterns. In particular, single-trial neural activity has been modeled with linear dynamics (Smith and Brown, 2003; Macke et al., 2011; Buesing et al., 2012; Kao et al., 2015), switched linear dynamics (Petreska et al., 2011; Linderman et al., 2017), linear dynamics with nonlinear observations (Gao et al., 2016), and nonlinear dynamics (Zhao and Park, 2016; Pandarinath et al., 2017). In practice, these methods require many modeling choices, validation procedures, and post hoc analyses. Linear models have a relatively constrained dynamical repertoire (Cunningham and Yu, 2014), while models with nonlinear elements often have greater predictive abilities (Gao et al., 2016; Pandarinath et al., 2017), but at the expense of interpretability. In all cases, the learned representation of each trial (e.g., the initial condition to a nonlinear dynamical system) is not transparently related to single-trial data. In contrast, the trial factors identified by TCA have an extremely simple interpretation as introducing trial-specific gain modulation. Overall, we view TCA as a simple and complementary technique to identifying a full dynamical model, as has been previously suggested for PCA (Cunningham and Yu, 2014).

An important property of TCA is that it extracts salient features of a dataset in a data-driven, unbiased fashion. Such unsupervised methods are a critical counterpart to supervised methods, such as regression, which can directly assess whether a dependent variable of interest is represented in population activity. Recently developed methods like demixed PCA (Kobak et al., 2016) combine regression with dimensionality reduction to isolate linear subspaces that selectively code for variables of interest. Again, we view TCA as a complementary approach, with at least three points of difference. First, like trial-concatenated PCA and GPFA, demixed PCA only reduces dimensionality within trials by identifying a different low-dimensional temporal trajectory for each trial. In contrast, each TCA component identifies a more compact description with a single temporal factor whose shape is common across all trials, and whose variation across trials is limited to its scalar amplitude. Second, demixed PCA can separate neural dynamics when trials have discrete conditions and labels, such as in the rodent prefrontal analysis in Figure 6; however, it is not designed to handle continuous dependent variables, such as those describing learning dynamics (Figures 3 and 8). In contrast, TCA can extract both continuous and discrete trends in the data, and may even identify entirely unexpected features that correlate with unknown or diffi-cult to measure dependent variables. Finally, the same orthogonality assumption of PCA is present within the linear subspaces identified by demixed PCA. Thus, both PCA and demixed PCA are subspace identification algorithms, while TCA can extract individual factors that are directly interpretable—for example, as clusters of functional cell types or activity patterns that grow or shrink in magnitude across trials.

Overall, this work demonstrates that exploiting the natural tensor structure of large-scale neural datasets can provide valuable insights into complex, multi-timescale, high-dimensional neural data. By appropriately decomposing this tensor structure, TCA enables the simultaneous and unsupervised discovery of cell assemblies, fast within-trial neural dynamics underlying perceptions, actions, and thoughts, and slower across-trial neural dynamics underlying internal state changes and learning.

STAR★METHODS

KEY RESOURCES TABLE

CONTACT FOR REAGENT AND RESOURCE SHARING

Further requests for resources should be directed to and will be fulfilled by the Lead Contact, Alex H. Williams (ahwillia@stanford.edu).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Mice

The Stanford Administrative Panel on Laboratory Animal Care approved all mouse procedures. We used male C57BL/6 mice, aged ~8 weeks at start. Throughout the entire protocol, we monitored the weight daily and looked for signs of distress (e.g., unkempt fur, hunched posture). Mice were habituated to experimenter handling and the behavioral apparatus for ~2 weeks prior to the five day behavioral protocol.

Monkey

Recordings were made from motor cortical areas of an adult male monkey, R (Macaca mulatta, 15 kg, 12 years old), performing an instructed delay cursor task. The monkey had two chronic 96-electrode arrays (1 mm electrodes, spaced 400 μm apart, Blackrock Microsystems), one implanted in the dorsal aspect of the premotor cortex (PMd) and one implanted in the primary motor cortex (M1). The arrays were implanted 5 years prior to these experiments. Animal protocols were approved by the Stanford University Institutional Animal Care and Use Committee.

METHOD DETAILS

Notation and Terminology

Colloquially, a tensor is a data array or table with multiple axes or dimensions. More formally, the axes are called modes of the tensor, while the dimensions of the tensor are the lengths of each mode. Throughout this paper we consider a tensor with three modes with dimensions N (number of neurons), T (number of time points in a trial), and K (number of trials).

The number of modes is called the order of the tensor. We denote vectors (order-one tensors) with lowercase boldface letters, e.g., x. We denote matrices (order-two tensors) with uppercase boldface letters, e.g., X. We denote higher-order tensors (order-three and higher) with boldface calligraphic letters, e.g., χ. Scalars are denoted by non-boldface letters, e.g., x or X. We use XT to denote the transpose of X. We aim to keep other notation light and introduce as it is first used—readers may refer to Kolda and Bader (2009) for notational conventions.

Matrix and Tensor Decompositions

Neural population activity is commonly represented as a matrix with each row holding a neuron’s activity trace (Cunningham and Yu, 2014). Let X denote an N×T matrix dataset in which N neurons are recorded over T time steps. For spiking data, X may denote trialaveraged spike counts or a single-trial spike train smoothed with a Gaussian filter. If fluorescence microscopy is used in conjunction with voltage or calcium indicators, the data entries could be normalized fluorescence (ΔF/F).

In the next section, we explain that PCA amounts to a special case of matrix decomposition (also known as matrix factorization). A matrix decomposition model approximates the data X as a rank-R matrix, X^, yielding R components. This approximation can be expressed as the product of an N×R matrix W and a T×R matrix B:

We call the columns of W neuron factors, denoted wr, and the columns of B temporal factors, denoted br. In order to reduce the dimensionality of the data we choose R<N and R<T. Note that Equation 3 is equivalent to Equation 1 in the Results.

Perhaps the simplest matrix decomposition problem is to identify a rank-R decomposition that minimizes the squared reconstruction error:

| minimizeW,B‖X−WBT‖F2. | (Equation 4) |

|---|

Here, ‖·‖F2 denotes the squared Frobenius norm of a matrix, which is simply the sum of squared matrix elements:

PCA provides one solution to Equation 4. Most critically, the PCA solution constrains the neuron factors and temporal factors to be orthogonal, meaning that WTW and BTB are diagonal matrices.

After fitting a PCA model, one might be tempted to interpret the columns of W as identifying sub-populations of neurons with firing patterns given by the columns in B. However, PCA does not generally identify these ground truth network features, except in the highly unlikely case that the cell ensembles and firing patterns are entirely orthogonal and explain distinguishable amounts of variance (a large eigengap in the data covariance matrix). Intuitively, PCA does not recover ground truth features because many other matrix decompositions are equivalent solutions to Equation 4. In fact, there is a continuous manifold of models that minimize Equation 4, since any invertible linear transformation F can produce a new set of parameters, W′ =WF−1 and B′ = BFT that produce an equivalent reconstruction of the data:

| WBT=WF−1FBT=W′B′T=X^. | (Equation 5) |

|---|

Due to this invariance in matrix decomposition (sometimes called the rotation problem), it is better to interpret PCA as finding an orthogonal coordinate basis for visualizing data rather than a feature detection algorithm. As we review below, the tensor decomposition problem corresponding to TCA has superior uniqueness properties, which gives us greater license to interpret the TCA factors as biologically meaningful neural populations and activity patterns.

TCA is a natural generalization of PCA to higher-order tensors. Let χ denote a N_×_T_×_K data tensor, and let x ntk represent the activity of neuron n at time t on trial k. For a third-order tensor, TCA finds a set of three factor matrices, W, B, and A, with dimensions N × R, T × R, and K × R, respectively. As before, the columns of W are the neuron factors, the columns of B are the temporal factors. Analogously, the columns of A are the trial factors, denoted ar, and the rows of A, denoted ak, describe each trial in an _R_-dimensional space.

Equation 2 can be reformulated into a matrix equation. Let Xk denote an N_×_T matrix holding the data from trial k. TCA models each trial of neural data as:

| X^k=WDiag(ak)BT, | (Equation 6) |

|---|

where Diag(ak) embeds ak as the diagonal entries of an R_×_R matrix. Again, Equation 6 is equivalent to Equation 2 in the Results. In this paper, we also employed the nonnegative TCA model, which adds a constraint that all factor matrices have nonnegative elements:

Nonnegative TCA has been previously studied in the tensor decomposition literature (Bro and De Jong, 1997; Paatero, 1997; Welling and Weber, 2001; Lim and Comon, 2009; Qi et al., 2016), and is a higher-order generalization of nonnegative matrix factorization (Paatero and Tapper, 1994; Lee and Seung, 1999). Similar to Equation 3, in this paper both standard and nonnegative TCA were fit to minimize the squared reconstruction error:

| minimizeW,B,A‖χ−χ^‖F2 | (Equation 7) |

|---|

Both PCA and TCA can be extended to incorporate different loss functions, such as a Poisson negative log-likelihood (Chi and Kolda, 2012), however we do not consider these models in this paper.

Fitting TCA to data is a nonconvex problem. Unlike PCA, there is no efficient procedure for achieving a certifiably optimal solution (Hillar and Lim, 2013). We use established optimization algorithms to minimize Equation 7 from a random initialization (described in Model Optimization section). Although this approach may converge to local minima in the objective function, our results empirically suggest that this is not a major practical concern. Indeed, as long we does not choose too many factors (too large an R) and use nonnegative factors, we find that the multiple local minima yield similar parameter values and similar reconstruction error.

An important advantage of TCA is that the low-dimensional components it uncovers can be “essentially unique,” up to permutations and scalings. Precisely, this means that every local minimum of the TCA objective function is isolated in parameter space. This is true if W, B, and A have rank R (i.e., full column rank), which we expect to be true for small enough choices of R and in noisy data (Kruskal, 1977). Thus, under these relatively mild assumptions, TCA avoids the continuous degeneracy (i.e., rotational invariance) of matrix decomposition (Equation 5).

TCA is said to be essentially unique, because it is always invariant to two relatively benign transformations. First, the columns of W, B, and A can be jointly permuted without affecting the model. Also, the columns of any pair of W, B, and A can be jointly rescaled. For example, if the _r_th column of W is multiplied by a scalar s, then the _r_th column of either B or A can be divided by s without affecting the model’s prediction. These transformations, which are also present in PCA, are largely inconsequential since the direction of the latent factors and total size of any set of factors, rather than their order, are of primary interest.

Practically speaking, these properties suggest that the global minimum of a TCA model is likely to be well defined (“essentially unique”) as long as R is chosen to be small enough. In general we are not guaranteed to find this global minimum, but as we have shown in the main text, all the local minima we find using multiple runs of TCA achieve similarly low reconstruction error, and moreover are close to each other in parameter space (especially for nonnegative TCA and for small values of R). In such situations, all the local minima likely cluster near the global minimum, and the resultant parameter values are likely to recover meaningful, non-orthogonal structure.

In summary, when the factors are all linearly independent (i.e., W, B, and A have full column rank), TCA is unique up to rescalings and permutations (Kruskal, 1977). TCA can nevertheless be difficult to optimize if latent factors are approximately linearly dependent (i.e., if the factor matrices are ill-conditioned) (Comon et al., 2009). To quantify and monitor this possibility, we computed a similarity score between TCA models based on the angles between the extracted factors (Quantification and Statistical Analysis). In practice, we did not find this to be a critical problem in nonnegative TCA.

Relationship Between PCA and Matrix Decomposition

The matrix decomposition view of PCA is critical for understanding its relationship to TCA. However, PCA is often described as a model that finds the low-dimensional projection of the data that retains maximal variance. Here, we briefly review why these two perspectives are equivalent. Further details can be found in introductory linear algebra textbooks (Strang, 2009).