Antonin Raffin | Homepage

Bio

Robots. Machine Learning. Blues Dance.

Interests

- Robotics

- Reinforcement Learning

- State Representation Learning

- Machine Learning

Projects

*

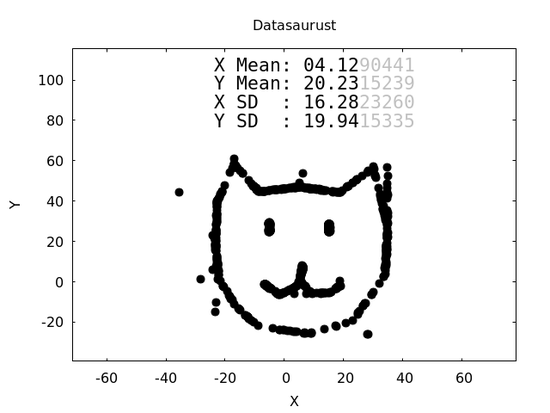

Datasaurust

Blazingly fast implementation of the Datasaurus paper in Rust. Same Stats, Different Graphs.
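The core loop of the Datasaurus approach, as we understand it, can be sketched in a few lines of Python (rather than Rust). It repeatedly nudges one random point and favours moves that bring it closer to a target shape. As the temperature decreases, it accepts fewer moves that take the point away from the shape. It rejects any move that changes the summary statistics at two decimal places. The circle target, the step size and the temperature schedule are hypothetical choices for illustration, not the project's actual parameters.

```python
import math
import random


def summary_stats(xs, ys):
    """Means, standard deviations and Pearson correlation, rounded to 2 decimals."""
    n = len(xs)
    mx = math.fsum(xs) / n
    my = math.fsum(ys) / n
    sxx = math.fsum((x - mx) ** 2 for x in xs)
    syy = math.fsum((y - my) ** 2 for y in ys)
    sxy = math.fsum((x - mx) * (y - my) for x, y in zip(xs, ys))
    corr = sxy / math.sqrt(sxx * syy)
    stats = (mx, my, math.sqrt(sxx / (n - 1)), math.sqrt(syy / (n - 1)), corr)
    return tuple(round(v, 2) for v in stats)


def dist_to_circle(x, y, cx=50.0, cy=50.0, r=30.0):
    """Distance from (x, y) to a hypothetical target shape: a circle."""
    return abs(math.hypot(x - cx, y - cy) - r)


def perturb(xs, ys, steps=20_000, scale=0.1, temp_start=0.4, temp_end=0.01, seed=0):
    """Nudge points toward the target shape while keeping the rounded stats fixed."""
    rng = random.Random(seed)
    xs, ys = list(xs), list(ys)  # work on copies, inputs stay untouched
    target = summary_stats(xs, ys)
    for step in range(steps):
        # Linearly decreasing temperature (simulated-annealing-style schedule)
        temp = temp_start + (temp_end - temp_start) * step / steps
        i = rng.randrange(len(xs))
        new_x = xs[i] + rng.gauss(0.0, scale)
        new_y = ys[i] + rng.gauss(0.0, scale)
        closer = dist_to_circle(new_x, new_y) < dist_to_circle(xs[i], ys[i])
        # Accept moves toward the shape, or a worse move with probability `temp`
        if not (closer or rng.random() < temp):
            continue
        old_x, old_y = xs[i], ys[i]
        xs[i], ys[i] = new_x, new_y
        # Undo the move if any rounded statistic changed
        if summary_stats(xs, ys) != target:
            xs[i], ys[i] = old_x, old_y
    return xs, ys
```

With `temp_start=temp_end=0.0`, the loop accepts only moves toward the circle, so the total distance to the shape can never increase. A Rust version would follow the same loop and mainly gain raw speed.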

Stable Baselines3

A set of improved implementations of reinforcement learning algorithms in PyTorch.

RL Baselines Zoo

A collection of 70+ pre-trained RL agents using Stable Baselines

S-RL Toolbox

S-RL Toolbox: Reinforcement Learning (RL) and State Representation Learning (SRL) for Robotics

Stable Baselines

A fork of OpenAI Baselines: implementations of reinforcement learning algorithms

Racing Robot

Autonomous Racing Robot With an Arduino, a Raspberry Pi and a Pi Camera

Arduino Robust Serial

A simple and robust serial communication protocol. Implementations in C (Arduino), C++, Python and Rust.
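The general idea behind a simple serial protocol like this one can be sketched in Python with the standard `struct` module. Every message is a one-byte order code followed by a fixed-size little-endian payload, so the receiver always knows how many bytes to read. The order codes, the single signed 16-bit payload and the `encode`/`decode` names are hypothetical. They do not reproduce the actual Arduino Robust Serial message format.

```python
import struct
from enum import IntEnum

# "<" = little-endian, no padding; "B" = unsigned 8-bit order; "h" = signed 16-bit payload
MESSAGE_FORMAT = "<Bh"


class Order(IntEnum):
    # Hypothetical order codes, for illustration only
    HELLO = 0
    MOTOR = 1
    STOP = 2


def encode(order: Order, value: int = 0) -> bytes:
    """Pack one message: order byte followed by a 16-bit signed value."""
    return struct.pack(MESSAGE_FORMAT, order, value)


def decode(stream: bytes) -> list[tuple[Order, int]]:
    """Split a byte stream into fixed-size (order, value) messages."""
    size = struct.calcsize(MESSAGE_FORMAT)  # 3 bytes
    messages = []
    # An incomplete trailing message is ignored
    for offset in range(0, len(stream) - size + 1, size):
        code, value = struct.unpack_from(MESSAGE_FORMAT, stream, offset)
        messages.append((Order(code), value))
    return messages
```

Fixed-size messages keep the decoder trivial on a microcontroller. The trade-off is that every order carries a payload, even orders like `STOP` that do not need one.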

Selected Publications

An Open-Loop Baseline for Reinforcement Learning Locomotion Tasks

Outstanding Paper Award on Empirical Resourcefulness in RL

In search of a simple baseline for Deep Reinforcement Learning in locomotion tasks, we propose a model-free open-loop strategy. By leveraging prior knowledge and the elegance of simple oscillators to generate periodic joint motions, it achieves respectable performance in five different locomotion environments, with a number of tunable parameters that is a tiny fraction of the thousands typically required by DRL algorithms. We conduct two additional experiments using open-loop oscillators to identify current shortcomings of these algorithms. Our results show that, compared to the baseline, DRL is more prone to performance degradation when exposed to sensor noise or failure. Furthermore, we demonstrate a successful transfer from simulation to reality using an elastic quadruped, where RL fails without randomization or reward engineering. Overall, the proposed baseline and associated experiments highlight the existing limitations of DRL for robotic applications, provide insights on how to address them, and encourage reflection on the costs of complexity and generality.
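The following minimal Python sketch illustrates the open-loop idea. It assumes each joint tracks a sine wave q_i(t) = a_i * sin(2π f t + φ_i) + b_i, with one frequency f shared by all joints. The class name, the parameter values and the trot-like phase pattern (diagonal legs in phase) are hypothetical. The targets depend only on time and never on sensor readings, which makes the controller open-loop, and it needs just 3n + 1 parameters for n joints.

```python
import math
from dataclasses import dataclass


@dataclass
class OpenLoopOscillator:
    amplitudes: list[float]  # a_i, in radians
    phases: list[float]      # phi_i, in radians
    offsets: list[float]     # b_i, in radians
    frequency: float         # f, in Hz, shared by all joints

    def desired_positions(self, t: float) -> list[float]:
        """Desired joint angles at time t; no observation is used (open loop)."""
        return [
            a * math.sin(2 * math.pi * self.frequency * t + phi) + b
            for a, phi, b in zip(self.amplitudes, self.phases, self.offsets)
        ]

    def num_parameters(self) -> int:
        # One amplitude, phase and offset per joint, plus the shared frequency
        return 3 * len(self.amplitudes) + 1


# Hypothetical trot-like pattern for one joint per leg: diagonal legs move in phase
trot = OpenLoopOscillator(
    amplitudes=[0.3] * 4,
    phases=[0.0, math.pi, math.pi, 0.0],
    offsets=[0.0] * 4,
    frequency=2.0,
)
```

On a real robot, a low-level joint controller (for example a PD controller) would usually track these targets. The sketch leaves that controller out.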

October 2022 SoftRobot 2024

Learning to Exploit Elastic Actuators for Quadruped Locomotion

Spring-based actuators in legged locomotion provide energy efficiency and improved performance, but increase the difficulty of controller design. While previous work has focused on extensive modeling and simulation to find optimal controllers for such systems, we propose to learn model-free controllers directly on the real robot. In our approach, gaits are first synthesized by central pattern generators (CPGs), whose parameters are optimized to quickly obtain an open-loop controller that achieves efficient locomotion. Then, to make this controller more robust and further improve the performance, we use reinforcement learning to close the loop, to learn corrective actions on top of the CPGs. We evaluate the proposed approach on the DLR elastic quadruped bert. Our results in learning trotting and pronking gaits show that exploitation of the spring actuator dynamics emerges naturally from optimizing for dynamic motions, yielding high-performing locomotion, particularly the fastest walking gait recorded on bert, despite being model-free. The whole process takes no more than 1.5 hours on the real robot and results in natural-looking gaits.
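The two-stage idea described above can be sketched as follows: an open-loop CPG provides the base gait and learned corrections are added on top of it. This is a simplified illustration, not the method from the paper. The CPG is reduced to independent sine waves rather than coupled oscillators. A hypothetical fixed linear map stands in for a trained RL policy. The clipping bound `max_correction` and all default values are assumptions.

```python
import math


def cpg_targets(t, frequency, amplitude, phases, offset=0.0):
    """Simplified open-loop CPG: one sine per joint with a shared frequency."""
    return [amplitude * math.sin(2 * math.pi * frequency * t + p) + offset for p in phases]


def residual_policy(observation, weights):
    """Hypothetical stand-in for a trained RL policy: a fixed linear map."""
    return [sum(w * o for w, o in zip(row, observation)) for row in weights]


def closed_loop_targets(t, observation, weights, frequency=2.0, amplitude=0.3,
                        phases=(0.0, math.pi, math.pi, 0.0), max_correction=0.1):
    """CPG targets plus bounded corrective actions computed from the observation."""
    base = cpg_targets(t, frequency, amplitude, phases)
    correction = residual_policy(observation, weights)
    # Clip corrections so the CPG remains the main source of the gait
    correction = [max(-max_correction, min(max_correction, c)) for c in correction]
    return [b + c for b, c in zip(base, correction)]
```

Bounding the corrections keeps the learned part from overriding the gait found by the CPG optimization. With all-zero weights, the controller reduces exactly to the open-loop CPG.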


Experience

Researcher

German Aerospace Center (DLR)

October 2018 – Present, Munich

Machine Learning for Robots.

Research Engineer

ENSTA ParisTech - U2IS robotics lab

October 2017 – October 2018, Palaiseau

Working on Reinforcement Learning and State Representation Learning for the DREAM project.

Research Intern

Riminder

April 2017 – September 2017, Paris

Deep Learning for Human Resources.

Research Intern

TU Berlin - RBO lab

May 2016 – August 2016, Berlin

Research internship in representation and reinforcement learning.