From Win32 to Cocoa: A Windows user’s would-be conversion to Mac OS X, part III

From the archives: Windows disappointment led to an earnest look at the Mac. “My mistake.”

So... Peter Bright's new home for tech support, right? Credit: antxoa


Ten years ago around this very time (April through June 2008), our intrepid Microsoft guru Peter Bright evidently had an identity crisis. Could this lifelong PC user really have been pushed to the brink? Was he considering a switch to... Mac OS?!? While our staff hopefully enjoys a less stressful Memorial Day this year, throughout the weekend we're resurfacing this three-part series, which doubles as an existential operating system dilemma circa 2008. Part three ran on June 1, 2008, and it appears unedited below.

I’ve already described how misfortune and adversity left Apple with a new OS platform free of legacy constraints; and I’ve also discussed how Microsoft had failed to do the same, choosing instead to hobble its new OS with way too much legacy baggage.

Now, let’s look at why I’m even considering the big switch: what has Apple done with its platform to make it so appealing? Of course, if you’re already writing software for the Mac, then I’m not going to tell you anything you don’t already know. But all of this was new to me, because it wasn’t until I became so thoroughly disappointed with Windows that I really looked in earnest at what the Mac had to offer. My mistake.

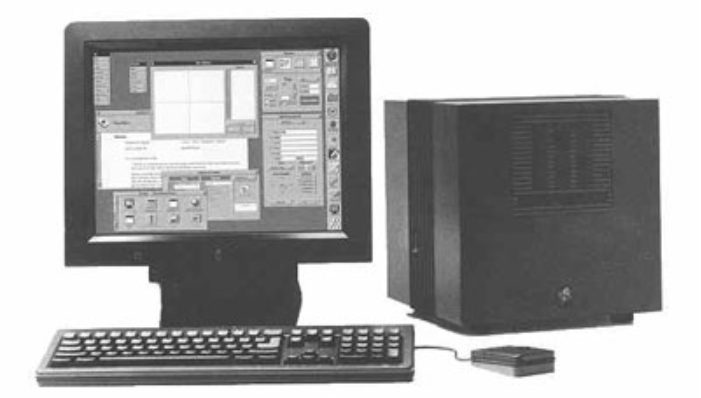

The NeXT cube, displaying all four of its brilliant shades of grey.


The NeXT connection

First, let’s take a brief look at what Apple got when it bought NeXT, and at some of the important bits of NeXT technology that live on today. When Apple bought NeXT, it inherited what was in many ways quite an unusual OS. Most operating systems are written in C and define all the APIs and extension points of the OS in C terms. As I said in part 2, this is because C’s simplicity means that virtually all programming languages can call C APIs without too much difficulty. C is very much the lingua franca of programming languages: an adequate, though imperfect, lowest common denominator for exposing OS functionality to applications. NeXTstep was (and remains) unusual because it bucked this trend. NeXTstep didn’t eschew C altogether, and indeed, many low-level features did use C APIs (its use of BSD UNIX ensures that much, as UNIX and C go hand-in-hand), but it also used a dynamic, object-oriented language, Objective-C, for many things.
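To make the “lingua franca” point concrete, here is a minimal sketch in Python (standing in for “some other language”) that calls two functions from the C standard library through Python’s built-in `ctypes` module. It assumes a POSIX-like system where the C library can be located; nothing in it is NeXT- or Apple-specific.

```python
import ctypes
import ctypes.util

def load_c_library():
    """Load the C standard library (assumes a POSIX-like system)."""
    path = ctypes.util.find_library("c")
    # If find_library returns None, CDLL(None) on POSIX falls back to the
    # symbols already loaded into this process, which include libc.
    return ctypes.CDLL(path)

libc = load_c_library()

# Declare the C signature: size_t strlen(const char *s);
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t

# Declare the C signature: int abs(int j);
libc.abs.argtypes = [ctypes.c_int]
libc.abs.restype = ctypes.c_int

print(libc.strlen(b"NeXTstep"))  # 8
print(libc.abs(-42))             # 42
```

All the caller needs is the C-level signature. There is no object model to bridge, which is exactly what makes C a convenient lowest common denominator.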

Obj-C is a kind of hybrid between C and the language Smalltalk. Smalltalk is a niche language that has never been widely used, but it has particularly strong support for one approach to object-oriented programming, called message-passing. In message-passing OO, objects send each other messages to perform actions. When an object receives a message, it can examine it and choose to respond to it, ignore it, or send it somewhere else. Whether an object knows how to handle a message isn’t known until the program is actually running. The program code that the developer writes needn’t contain any particular restriction on which messages are sent to which objects, and indeed the messages that an object might respond to could also vary when the program runs. Although this dynamic behavior is not especially unusual in scripting languages, it’s much less common in system programming languages. The message-passing approach is quite unusual in the OO world; most OO languages (including all the common ones: C++, Java, C#) use a different model for their OO facilities, one based on the Simula language instead. This approach is much stricter; whether a particular function can be called on a given object is determined when the program is compiled, not when it’s run.
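As a rough illustration of message-passing, here is a Python sketch that makes the run-time decision explicit: look the message up by name, and if the receiver has no matching method, let it forward or ignore the message, failing only if nobody handles it. The names `send`, `forward_message`, and `MessageNotUnderstood` are hypothetical; they are loosely analogous to Obj-C’s runtime dispatch, its `forwardInvocation:` hook, and `doesNotRecognizeSelector:`, but this is not how the Obj-C runtime is actually implemented.

```python
class MessageNotUnderstood(Exception):
    """Raised when nobody handles a message (compare Obj-C's doesNotRecognizeSelector:)."""

def send(receiver, selector, *args):
    """Deliver a message by name, deciding at run time who (if anyone) handles it."""
    if receiver is None:
        return None  # messaging nil in Obj-C is a silent no-op; mimic that
    method = getattr(receiver, selector, None)
    if callable(method):
        return method(*args)  # the receiver responds to the message itself
    forward = getattr(receiver, "forward_message", None)
    if callable(forward):
        return forward(selector, *args)  # the receiver decides: forward or ignore
    raise MessageNotUnderstood(f"{type(receiver).__name__} does not understand {selector!r}")

class Speaker:
    def greet(self, name):
        return f"Hello, {name}"

class Forwarder:
    """Implements no messages of its own; passes everything to another object."""
    def __init__(self, target):
        self._target = target

    def forward_message(self, selector, *args):
        return send(self._target, selector, *args)

class Ignorer:
    """Accepts any message and silently drops it."""
    def forward_message(self, selector, *args):
        return None

print(send(Speaker(), "greet", "Cocoa"))            # Hello, Cocoa
print(send(Forwarder(Speaker()), "greet", "NeXT"))  # Hello, NeXT
print(send(Ignorer(), "launch_rockets"))            # None
```

Nothing in the calling code restricts which messages go to which objects; whether `greet` works on a `Forwarder` is only settled when the message actually arrives.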

The Smalltalk-inspired object-oriented parts of Obj-C are quite independent of the C parts. Any C program can be compiled by an Obj-C compiler, and using C APIs from within Obj-C programs is trivial, because Obj-C can do everything that C can do. Going the other way is much harder; many languages simply have no facility for the kinds of things that Obj-C takes for granted, especially when it comes to some of its more complex features.

Model-View-Controller

Credit: Apple


Coupled with this unusual programming language was an API that exploited the unique properties of the language. The API was split into a number of “frameworks”, each of which covered a specific area of functionality. Although these frameworks have evolved over time (NeXTstep’s history was a lot more complicated than I have described here, and this history influenced the development of the API considerably), there are two in particular that are of paramount importance, because they are what make up Cocoa.

The Cocoa API has two parts: “Foundation Kit” and “Application Kit”. Foundation Kit provides basic functionality common to virtually all programs: string manipulation, container/collection classes, XML parsing, file I/O, and so on. Application Kit is what provides GUI programming facilities. It’s here that Mac OS X (and NeXTstep before it) sets itself apart from platforms such as Win32.

The people who developed Smalltalk also devised a design approach for writing GUI applications called “Model View Controller” (MVC). The idea of MVC is to separate an application into three parts: the Model, which represents the things that the application actually cares about (e.g., documents in a text editor); Views, which contain the visual representations of the data in the application, such as buttons and text boxes; and Controllers, which link the two and ensure that changes in one are properly propagated to the other. The Model is common to all the Views, with each View representing a different part of it, and each View has a corresponding Controller. Although this pattern of writing applications is quite widely used, it is particularly important to Application Kit. Application Kit bakes in a lot of MVC functionality, all but forcing software to be structured in this way.
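The division of labor can be sketched in a few lines of Python. This is a toy illustration of the pattern, not Application Kit: the class names are hypothetical, one Model is shared by two Views, and each View gets its own Controller that carries changes in both directions.

```python
class Document:
    """Model: the thing the application actually cares about."""

    def __init__(self):
        self.text = ""
        self._observers = []

    def add_observer(self, callback):
        self._observers.append(callback)

    def set_text(self, text):
        self.text = text
        for callback in self._observers:
            callback(self)  # tell every interested controller about the change

class TextBoxView:
    """View: shows the text and reports user edits, but knows nothing about Documents."""

    def __init__(self):
        self.displayed = ""
        self.on_edit = None  # filled in by a controller

    def show(self, text):
        self.displayed = text

    def user_types(self, text):
        if self.on_edit is not None:
            self.on_edit(text)

class WordCountView:
    """A second View onto the same Model."""

    def __init__(self):
        self.label = ""

    def show(self, count):
        self.label = f"{count} words"

class TextBoxController:
    """Controller: propagates edits from the view to the model, and changes back."""

    def __init__(self, model, view):
        view.on_edit = model.set_text
        model.add_observer(lambda m: view.show(m.text))

class WordCountController:
    def __init__(self, model, view):
        model.add_observer(lambda m: view.show(len(m.text.split())))

doc = Document()
box, counter = TextBoxView(), WordCountView()
TextBoxController(doc, box)
WordCountController(doc, counter)

box.user_types("model view controller")
print(box.displayed, "|", counter.label)  # model view controller | 3 words
```

Typing into one View updates the Model, and the Model’s change reaches every View through its Controller; neither View ever refers to the other.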

What that means today

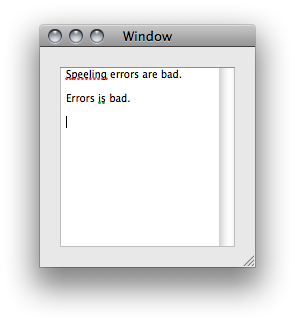

An NSTextView in action.

Cocoa applications get a lot of things for free, just by virtue of being Cocoa applications. The predefined Models, Views, and Controllers provide powerful built-in capabilities that can be used and extended by software. The class NSTextView, for example, provides a consistent multi-line text editing View, complete with multiple fonts, font sizes, and text colors, red and green squiggly underlines for spelling and grammar errors respectively, and unlimited undo/redo. As new abilities are added to the NSTextView class, software that uses it can pick up these abilities automatically.

The NSTextView is not some monolithic and inflexible class, either; it hands off different areas of responsibility to other classes. For instance, the way that text flows in an NSTextView is governed by a class named NSTextContainer, which performs line-wrapping and similar tasks. If, however, NSTextContainer doesn’t do what you want (perhaps you want to be able to put arbitrarily shaped objects into the text and have the words reflow around them) you can replace it with your own class. As long as it responds to the same messages, it will work as a drop-in replacement.
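A toy Python version of that drop-in replacement idea follows. The layout function below only ever asks its container one question, how wide a given line may be, so any object that answers it can be swapped in. The classes and the `line_width` message are hypothetical and far simpler than the real NSTextContainer/NSLayoutManager interplay.

```python
class RectangularContainer:
    """Default container: every line has the same width."""

    def __init__(self, width):
        self.width = width

    def line_width(self, line_index):
        return self.width

class ContainerWithFloat:
    """Replacement: the first few lines are narrower, leaving room for a picture."""

    def __init__(self, width, float_width, float_lines):
        self.width = width
        self.float_width = float_width
        self.float_lines = float_lines

    def line_width(self, line_index):
        if line_index < self.float_lines:
            return self.width - self.float_width
        return self.width

def lay_out(text, container):
    """Greedy word wrap that talks to the container only via line_width()."""
    lines, current = [], ""
    for word in text.split():
        candidate = f"{current} {word}" if current else word
        # An over-long word still gets a line of its own.
        if len(candidate) <= container.line_width(len(lines)) or not current:
            current = candidate
        else:
            lines.append(current)
            current = word
    if current:
        lines.append(current)
    return lines

text = "the quick brown fox jumps over the lazy dog"
print(lay_out(text, RectangularContainer(15)))
# ['the quick brown', 'fox jumps over', 'the lazy dog']
print(lay_out(text, ContainerWithFloat(15, float_width=6, float_lines=2)))
# ['the quick', 'brown fox', 'jumps over the', 'lazy dog']
```

The layout code is untouched; only the object answering `line_width` changed, and the words reflow around the imaginary picture.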

The customization mechanisms for the classes that Apple provides depend strongly on Obj-C features. Although the subclassing approach found in most OO languages is an option, it is not the only one. The ease with which Obj-C can forward messages to different objects means that delegation (where one object passes responsibility for a behavior to another object) is very easy to express and support. Unlike with, say, Java or C++, the delegated-to object needn’t implement any specific interface or extend any particular base class; it can just listen for the individual messages that it’s interested in and ignore everything else. This makes replacement or modification of individual operations quick and easy.
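Here is a minimal Python sketch of that style of delegation, loosely modeled on Cocoa’s `windowShouldClose:` delegate method. The `Window` class and the snake_case message names are hypothetical. The point is that the window checks whether its delegate implements a given message (roughly what `respondsToSelector:` does) and falls back to default behavior if not, so a delegate implements only what it cares about and needs no base class or interface.

```python
class Window:
    """Hypothetical window that consults an optional delegate at key moments."""

    def __init__(self, title, delegate=None):
        self.title = title
        self.delegate = delegate
        self.is_open = True

    def _ask_delegate(self, selector, default):
        # Only send the message if the delegate implements it;
        # otherwise use the window's built-in behavior.
        handler = getattr(self.delegate, selector, None)
        return handler(self) if callable(handler) else default

    def close(self):
        if self._ask_delegate("window_should_close", True):
            self.is_open = False
            self._ask_delegate("window_did_close", None)
        return not self.is_open

class UnsavedChangesGuard:
    """Cares about exactly one message and ignores everything else."""

    def __init__(self):
        self.has_unsaved_changes = True

    def window_should_close(self, window):
        return not self.has_unsaved_changes

class CloseLogger:
    """A different delegate that only wants to hear about completed closes."""

    def __init__(self):
        self.closed_titles = []

    def window_did_close(self, window):
        self.closed_titles.append(window.title)

guard = UnsavedChangesGuard()
report = Window("Report", guard)
print(report.close())  # False: the delegate vetoed it
guard.has_unsaved_changes = False
print(report.close())  # True
```

Neither delegate shares a base class with the other, and each one overrides a single decision without subclassing `Window` at all.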

To make best use of this framework, you also need to look at Apple’s development tools, in particular Interface Builder, which is Apple’s application for constructing user interfaces. Interface Builder is another NeXT artifact; it ultimately dates back to the 1980s and was one of the first development tools that allowed user interfaces to be built using drag-and-drop and a mouse. Within Interface Builder, View objects can be arranged and connected together to construct an interface for your application. Once you have your View objects, you add a Controller to tie the View to your model, to propagate changes from one to the other. Finally, you plumb individual fields into their respective Views, and wire up buttons and other interactive elements to actions, so that things actually happen when you use the UI.

Wiring things together in Interface Builder.

Interface Builder makes it very easy to put together user interfaces. If you have absolutely no design skills, you’re probably not going to get a work of art, it’s true. But equally, it’s unlikely to look terrible. The Views that come with the system are the ones used everywhere else in the OS, and they bring a lot of behavior with them. Alignment guides appear to help you line objects up correctly, and just following these should prevent anything too horrific.

Why Apple does it better

A lot of this isn’t special or unique. Although Obj-C is much more dynamic than the languages typically used on other platforms, at the end of the day you can generally do something equivalent, just with a bit more typing. Equally, there are lots of GUI builders out there. And the MVC design pattern has become extremely widespread for both Web and GUI development alike. So what’s the big deal with Cocoa and Mac OS X?

There are several reasons that Apple’s rendition of all this is still superior. One, the entire system is put together pretty well. As simple as that: it’s a good concept, well executed. Application Kit has a clear overarching design philosophy, and the components that make up Cocoa are both capable and flexible. Apple’s position is, sensibly, that developers should be spending time on their applications, not dealing with the low-level minutiae of the GUI toolkit. And to a fair extent, they can do just that. Mac software consistently uses the OS-supplied classes, because the OS-supplied objects work well, and even when they aren’t sufficient for a particular task, they are cleanly extensible. As a developer, the best code is code you don’t have to write; anything you don’t have to write, you don’t have to debug and you don’t have to maintain. If the framework can do something for me, then it means that I don’t have to do it myself, and that’s what frameworks are for.

The contrast with Windows here couldn’t be more stark. Reinvention of UI devices on Windows is extremely commonplace, even in first-party applications. The reason behind this is that a lot of the UI controls on Windows are either very limited, or very inflexible, or both.

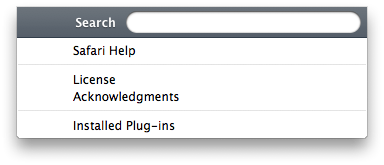

I recently wanted to write a Windows application that included a text box in a menu, similar to the “search” menus found in Mac OS X. In the end, I gave up. Windows does support menus, but if you don’t want a menu that acts 100% like the built-in menus, you’re basically on your own. There is a way to control how the menu is drawn, so instead of taking the standard appearance you can give it a custom one, but that’s an all-or-nothing proposition. You can’t have a menu that looks like a standard menu except for one item; either the menu is standard, or it’s custom, with nothing in between.

To add insult to injury, there’s no (documented) way to say “OK, custom draw this menu, but let’s use the standard appearance as our template and just add something on.” To get the effect I wanted, I would have to create an entirely new control; something that looked like a menu, but which didn’t actually use any of the system support for menus. Although this is widely done on Windows—in my screenshot from part 2, Visual Studio, Outlook, Visio, and Expression Blend all take this approach—it’s not something that I want to do in my own code.

Typing into menus isn’t as mad as it might sound.


In Mac OS X, however, I can give any menu item a View object of its own (though only as of Leopard). This View object is just like any other, so I can plop any UI device into a menu with ease. So if I want a search box or a volume slider in a menu, I can do so very easily. The whole system is composable. So while Windows menus are sort of “special”, with their own limited set of unique menu behaviors, Mac OS X’s are just another part of the overall MVC framework. The fact that you don’t have to throw away what the OS provides just to do something a bit different is a boon for developers. The ability to mix and match to create the UI that you want is hugely valuable, and because MVC is the way to construct the UI, all the different components can be put together the way you choose.
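The composability argument can be sketched as a toy in Python. In AppKit the relevant hook is NSMenuItem’s `view` property (set with `setView:`, available from Mac OS X 10.5); the classes below are hypothetical text-mode stand-ins, not AppKit. A menu item draws its title by default, but if it is handed any object that knows how to render itself, it simply defers to that object, and the rest of the menu stays standard.

```python
class SearchField:
    """Hypothetical text-mode search box."""

    def __init__(self, placeholder, width=14):
        self.placeholder = placeholder
        self.width = width

    def render(self):
        return "[" + self.placeholder.ljust(self.width) + "]"

class Slider:
    """Hypothetical text-mode slider; value runs from 0.0 to 1.0."""

    def __init__(self, value, width=10):
        self.value = value
        self.width = width

    def render(self):
        filled = round(self.value * self.width)
        return "[" + "#" * filled + "-" * (self.width - filled) + "]"

class MenuItem:
    """Draws its title, unless it has been given a view of its own."""

    def __init__(self, title, view=None):
        self.title = title
        self.view = view

    def render(self):
        return self.view.render() if self.view is not None else self.title

class Menu:
    def __init__(self, items):
        self.items = items

    def render(self):
        return "\n".join(item.render() for item in self.items)

menu = Menu([
    MenuItem("Open..."),                    # ordinary item
    MenuItem("Find", SearchField("Search")),  # item hosting a search box
    MenuItem("Volume", Slider(0.3)),          # item hosting a slider
])
print(menu.render())
```

Only the customized item changes; the menu does not have to become “all custom” to host one unusual entry, which is the contrast with the Win32 owner-draw situation described above.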

This isn’t to say that Cocoa is perfect. Although each version of the OS improves the situation, there are still user interface tasks that can’t be achieved within Cocoa, instead requiring you to use Mac OS X’s alternative API, Carbon. For example, customizing the menus of dock items can necessitate Carbon code. I think Foundation Kit could be richer in some areas (it might just be my C++ and Java preference, but I’d like a few more collection classes, for example). But nothing in this life is perfect. What I want from an API isn’t perfection, just the ability to adapt it to what I need.

It’s a bad workman who blames his tools

The Apple development experience is… interesting. The two heavy hitters are Xcode—the general-purpose IDE—and Interface Builder—the aforementioned GUI designer. Interface Builder is as good as any UI builder I’ve used on any platform, so I have no real complaints there. Xcode is… a bit less special.

Xcode isn’t bad, but it won’t knock your socks off. Compared to IDEs like Eclipse or Visual Studio, it’s not exactly fully-featured. The core features (text editing, syntax highlighting, autocompletion, integrated debugging) are there, but they generally lack the polish and maturity that Visual Studio and Eclipse provide. As the primary development environment for the Mac, it’s serviceable, but still behind equivalents on other platforms. It is certainly improving, though; the mass migration to Xcode from previous Mac development environments has put Xcode in the spotlight a bit more, and it will continue to benefit from the attention.

However, there’s more to development than just Xcode and Interface Builder, and it’s in these other areas that the Apple value proposition is a lot more interesting. Apple’s freely available developer tools contain a variety of tools to help developers improve their software. Particularly notable are the profiling tools. Profilers are programs that assist with the analysis of the performance of a piece of software. They can answer questions such as “how many times was this function called?”, “how long did it take to run?”, and “what functions called it?”.

Profiling is an important first step to take before attempting to optimize any program. It’s notoriously difficult to figure out which bits of a program are slow merely by inspecting the source code, and so it’s difficult to know where to spend your time optimizing. Profilers tell you all of that information, and further, they let you measure your optimizations so you can tell if they’ve made things better. That’s another common problem with optimizing software: often, a change you think will make things faster has the opposite effect.
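Shark and Instruments are GUI applications, so they don’t lend themselves to a code listing. As a language-neutral illustration of the questions a profiler answers (call counts, time per function, cumulative time), here is a sketch using Python’s standard `cProfile` and `pstats` modules to profile a straightforward function and a hypothetical “optimized” replacement, so the two can be compared with measurements rather than guesses.

```python
import cProfile
import io
import pstats

def sum_of_squares_loop(n):
    # Straightforward version: touches every number from 0 to n-1.
    total = 0
    for i in range(n):
        total += i * i
    return total

def sum_of_squares_formula(n):
    # "Optimized" version: closed form for 0^2 + 1^2 + ... + (n-1)^2.
    return (n - 1) * n * (2 * n - 1) // 6

def profile_call(func, *args):
    """Run func(*args) under cProfile; return (result, text report)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    report = io.StringIO()
    stats = pstats.Stats(profiler, stream=report)
    stats.sort_stats("cumulative").print_stats(5)  # five most expensive entries
    return result, report.getvalue()

for candidate in (sum_of_squares_loop, sum_of_squares_formula):
    value, text = profile_call(candidate, 200_000)
    print(candidate.__name__, value)
    print(text)  # columns include ncalls, tottime, and cumtime per function
```

Checking that both versions return the same value matters as much as the timings: an optimization that changes the answer isn’t one.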

To that end, Apple has two profiling tools, Shark and Instruments. Instruments is the newer of the two, and boasts, amongst other things, powerful visualizations to make large volumes of data more manageable (one common feature of profilers is that they can produce a lot of data, making it hard to know where to look). Shark, the older tool, doesn’t have the same visual splendor, but provides more detailed information about what an application is doing. On top of these, there’s also a profiler for OpenGL applications.

These profiling tools are, as with all the Apple developer tools, free. In a world where most equivalent tools are rather expensive, this is very welcome. There’s a clear contrast here with Microsoft.

I described in part 1 how Microsoft’s attitude towards developers was a friendly one. I think that it’s much less friendly today, because a bean-counter within Microsoft has realized that you can sell development tools for a profit. I wouldn’t say that Microsoft has a unified front on this point; the Visual Studio Express tools are free, and they’re pretty good equivalents to Xcode/Interface Builder. It’s in the extras that Microsoft is weak. To get a profiler for Visual Studio, you have to spend money. Quite a lot of money. The Express versions don’t have one. Nor does the Standard version, nor even the Professional version. So to get a profiler, you need Visual Studio Team System 2008 Development Edition. That’ll set you back a couple of thousand bucks. And it’s not even a very good profiler. It’s hard to use, limited in what it can tell you, and just plain ugly.

Sure, monetizing developers is possible. It used to be the case that the development tools division at Microsoft ran at a loss, but that’s not the case today. They make money out of us. It just seems a bit short-sighted. If I am developing in Visual Studio, I’m almost certainly developing Windows programs for Windows users to run on Windows. Chances are, those Windows users outnumber me, several times over. Even if I’m just writing little enterprise line-of-business software, it’ll probably drive sales of Windows, SQL Server, SharePoint, Office, and Exchange licenses worth far more than the development tools cost. For a business, the cost of the development tools is easy to justify, but for a small ISV, it’s a bitter pill to swallow.

Giving developers better tools strengthens the platform. Good software is more appealing to users; business-critical software is essential to corporations. So while you could monetize developers, it’s short-sighted. Developers make your platform better, and charging them a lot of money for the privilege just doesn’t make sense. Intel does much the same thing; the chipmaker has a profiler product of its own, and it costs money. And yet, the purpose of that product is to make software that runs better on Intel processors so that people go out and buy more of them.

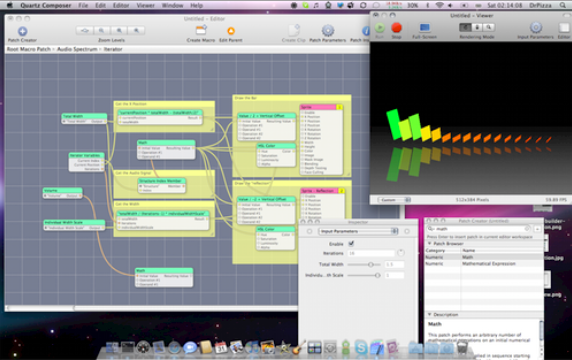

Quartz Composer in action.

Because Apple understands the value of the platform as a whole, they give this stuff away for free. Apple knows that by giving developers good tools, the developers will make better software for Apple’s computers, meaning that the real money-makers of the operation—computers, iPods, iPhones—will benefit. Although there may notionally be some lost revenue (people probably would spend a bit of money to get the developer tools, if they really had to), it’s more than recouped as a result of the superior ecosystem.

None of which is to say that Apple makes no money from developers. Apple Developer Connection, Apple’s developer support program, does have a number of pricing levels (from “free” to $3500 per annum), with the higher tiers providing benefits such as support incidents, hardware discounts, and access to pre-release builds of Mac OS X. However, the developer tools themselves remain free; if you don’t want the services, you don’t need to pay for them. The result is that the low end of the market is covered a lot better than in the Microsoft world.

Finally, Apple also gives away Quartz Composer, which I have to give a shout out to. Although I have no practical use for it, I’m sure someone does, and it’s really rather funky. Quartz Composer is a visual programming tool for creating data visualizations and graphical compositions. A data source might be the sound output of my computer; I could pipe that data into a Fourier transform giving me 16 frequency channels, and then use the amplitude of those channels to control how big some pretty colored boxes are. Bingo, instant MP3 player visualization. The compositions created in Quartz Composer can be embedded into applications with the greatest of ease, and they beat the hell out of writing equivalent programs by hand. This is head-turning stuff, and I can’t help but be impressed.
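Quartz Composer builds this pipeline visually, so there is no code to show from it. Purely to illustrate the data flow described above, here is a Python sketch: a naive discrete Fourier transform (chosen over an FFT for readability), averaging neighboring bins into 16 channels, and scaling each channel’s amplitude into a box size. The function names and the even-width binning are assumptions made for the example; real visualizers often use logarithmically spaced bands.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Naive discrete Fourier transform: magnitude of each bin below Nyquist."""
    n = len(samples)
    return [
        abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2)
    ]

def frequency_channels(samples, channels=16):
    """Average neighboring DFT bins into a fixed number of channels."""
    mags = dft_magnitudes(samples)
    per_channel = len(mags) // channels
    if per_channel == 0:
        raise ValueError("need at least 2 * channels samples")
    return [
        sum(mags[c * per_channel:(c + 1) * per_channel]) / per_channel
        for c in range(channels)
    ]

def box_sizes(levels, max_size=100):
    """Scale channel amplitudes so the loudest channel gets the biggest box."""
    peak = max(levels)
    if peak == 0:
        return [0] * len(levels)
    return [round(max_size * level / peak) for level in levels]

# A pure tone completing 20 cycles in a 128-sample window lands in DFT bin 20,
# which belongs to channel 20 // (64 // 16) = 5.
window = [math.sin(2 * math.pi * 20 * t / 128) for t in range(128)]
sizes = box_sizes(frequency_channels(window))
print(sizes)  # channel 5 gets a box of size 100; the rest round to 0
```

Feeding successive windows of audio through `box_sizes` and redrawing the boxes each time is, in essence, the “instant MP3 player visualization.”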

All hail Chairman Jobs

The other big deal is that, well, Apple is Apple. Apple, as a company, prides itself on being a leader, not a follower. As Steve Jobs famously quoted Wayne Gretzky, “I skate to where the puck is going to be, not where it has been.” So the charismatic (some might say dictatorial) Apple leadership wants the company to be seen as one that looks forward, not backward.

This attitude is manifest in Apple’s products, including its all-important OS. Apple has never been a company that’s slavishly devoted to backwards compatibility if that backwards compatibility jeopardizes its ability to move forward. In these days of Intel Macs, the OS is the biggest differentiating feature of Apple’s hardware. People buy Macs because of the software ecosystem, so keeping the OS (and the programs running on it) high quality is extremely important.

So, Apple uses Cocoa for its applications. Not all of them: Apple’s bought-in applications generally use Carbon, as do a few high-profile parts of Mac OS X itself. But nonetheless, there are high-profile Apple applications, like Safari and Aperture, that are built using Cocoa. This has several benefits. The most obvious one is that it means that Apple is actually using the APIs that it’s developing. It has to make sure that the APIs work well and do the things that a wide variety of developers want to do, because if they don’t, that just hurts Apple’s own software.

With Apple, this is a two-way process. Apple’s applications don’t just prove that the API works; they’re also used to develop new UI devices that in turn get rolled into the OS. For example, a kind of “palette” window used for inspecting and adjusting object properties was used in iPhoto and other applications. This is something that lots of software can make use of, and so with Mac OS X 10.5, a system-level palette window was introduced. Instead of a proliferation of slightly different first- and third-party implementations of the concept, Apple has taken a good idea and exposed it to any developer.

This two-way feedback process doesn’t just happen with the GUI, of course. Apple’s applications draw on many different parts of the API, so for any framework that Apple provides, there will be an Apple application using that framework (depending on it, in fact). This informs a lot of Apple’s design decisions, and it’s something that Microsoft lacks.

Apple has always been a company that takes its audio fairly seriously. Credit: Aurich Lawson

Sound design

An example of this phenomenon at work can be found in Apple’s and Microsoft’s audio APIs. Apple has both high-end and low-end audio software that uses its audio frameworks; Microsoft doesn’t. In Windows Vista, Microsoft tried to produce a new audio API that was suitable for low-latency multi-channel audio software. This required changes to the whole audio stack, from the applications right down to the drivers. It’s the drivers that Microsoft had problems with.

Audio drivers were an area where Vista had trouble, especially in the first months after its release. There just weren’t many out there, and those that did exist were often feature-deficient compared to their XP predecessors. Although the hardware vendors are partly to blame, Microsoft must share the blame, because its first attempt at a new low-latency audio stack didn’t work particularly well. The core part of an audio stack is buffers filled with sound data; the application fills the buffer, the sound card plays data from the buffer. If your buffers are too big, you incur higher latencies, because you have to wait until a buffer-full of data has been used before you can change it (approximately speaking). However, if they’re too small, you run the risk of skipping and stuttering, because the sound card can run out of data before the software has had a chance to put more into the buffer.
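The trade-off is simple arithmetic: a buffer of N frames at a sample rate of R frames per second lasts N / R seconds, and that is both the approximate latency it adds and the deadline the software has for refilling it. A small Python sketch, with hypothetical function names:

```python
def buffer_latency_ms(frames, sample_rate_hz):
    """Time the sound card needs to play one full buffer, in milliseconds."""
    return 1000.0 * frames / sample_rate_hz

def underruns(frames, sample_rate_hz, refill_time_ms):
    """True if refilling takes longer than the buffer lasts (the card runs dry)."""
    return refill_time_ms > buffer_latency_ms(frames, sample_rate_hz)

for frames in (64, 256, 1024, 4096):
    print(f"{frames:5d} frames @ 44.1 kHz -> {buffer_latency_ms(frames, 44_100):6.1f} ms")
```

At 44.1 kHz, 64 frames last roughly 1.5 ms and 4,096 frames roughly 93 ms. So a program that needs 2 ms to produce the next batch of audio would stutter with the 64-frame buffer, while the 1,024-frame buffer (about 23 ms) is safe but noticeably laggier.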

The first new Vista audio API didn’t provide a way for drivers to tell applications “the buffer is nearly empty”. Instead, applications had to check “Is the buffer empty now?”. And because the buffer status changes as the sound is played, they had to check over and over again. “Is the buffer empty now? Is the buffer empty now? Is the buffer empty now? Is…”. This is called polling, and as a general rule of thumb, polling-based systems are to be avoided. Each time the application checks the buffer, it’s having to do extra work, and if the buffer still has plenty of data in it, it’s pointless work. The thing is, this flaw is kind of obvious to anyone writing audio software. No-one likes polling. Other low-latency audio APIs (Steinberg’s popular ASIO and Apple’s Core Audio included) tell the application when the buffer needs more data.
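The difference between the two designs can be counted in a toy, deterministic simulation. This is not WASAPI, ASIO, or Core Audio code; the `ToySoundCard` class and its “ticks” are hypothetical stand-ins for a device draining a buffer over time. The polling application wakes up on every tick to ask whether the buffer is low; the notified application’s code runs only when the device says it needs more data.

```python
class ToySoundCard:
    """Plays one frame per tick from a buffer that the application refills."""

    def __init__(self, capacity, low_water, on_low=None):
        self.capacity = capacity
        self.low_water = low_water
        self.level = capacity
        self.on_low = on_low  # None means the device cannot notify anyone

    def tick(self):
        if self.level > 0:
            self.level -= 1
        if self.on_low is not None and self.level == self.low_water:
            self.on_low(self)  # "the buffer is nearly empty": notify the app

    def refill(self):
        self.level = self.capacity

def run_polling(ticks, capacity=8, low_water=2):
    """App asks 'is the buffer empty now?' every tick. Returns (app_wakeups, refills)."""
    card = ToySoundCard(capacity, low_water)
    wakeups = refills = 0
    for _ in range(ticks):
        card.tick()
        wakeups += 1
        if card.level <= low_water:
            card.refill()
            refills += 1
    return wakeups, refills

def run_notified(ticks, capacity=8, low_water=2):
    """App code runs only when the device asks for data. Returns (app_wakeups, refills)."""
    counts = {"wakeups": 0, "refills": 0}

    def on_low(card):
        counts["wakeups"] += 1
        card.refill()
        counts["refills"] += 1

    card = ToySoundCard(capacity, low_water, on_low=on_low)
    for _ in range(ticks):
        card.tick()
    return counts["wakeups"], counts["refills"]

print(run_polling(60))   # (60, 10): 50 of the 60 checks found nothing to do
print(run_notified(60))  # (10, 10): woken only when there was work to do
```

Both versions keep the buffer topped up equally well; the polling one just spends most of its wake-ups discovering that there is nothing to do.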

Yet it apparently didn’t occur to Microsoft, just as it hadn’t with DirectSound, which also has no reliable, low-latency mechanism for receiving buffer notifications. It wasn’t until Microsoft sat down with third-party audio developers quite late in Vista’s development cycle that it changed the driver API to introduce a notification mechanism. With this, the hardware can actually tell the application that it needs more data. By the time the change was made, vendors had already put work into the first new Vista driver model, so they probably weren’t entirely happy that Microsoft went ahead and changed it (though the audio software vendors certainly were).

Apple, on the other hand, does use its APIs. So Apple has a much better idea of what works well, and a much better idea of what the system ought to do and how it ought to work. Microsoft provides APIs to third parties and hopes that they’ll be OK; Apple provides APIs to itself, and when it’s certain that they work well, it lets third parties loose on them. If something’s good enough for Apple, it’s probably good enough for everyone else. There is some irony in this; in software development this concept of using your own software is known as “eating your own dogfood,” and it’s an idea that was, at one time, pushed strongly by Microsoft.

So… is Peter Bright going to join Will Ferrell or what? Credit: Apple

Where do you want to go today?

The final point in Apple’s favor is that Apple has a direction. Apple is committed to Objective-C, Cocoa, and the surrounding frameworks. This is perhaps something of a new development. In the early days of Mac OS X, Apple hedged its bets. Cocoa was less complete, and the C Carbon API was promoted not only as a way of porting old “Classic” Mac OS applications but also as a way of writing new programs. (There are still places in Apple’s developer documentation where Carbon is described as suitable for new applications.) Further, in the early days, Cocoa wasn’t just for Obj-C; it could also be used from Java. The reason for this is that Apple just wasn’t sure that developers would go for Cocoa. For good or ill, learning a new language is seen by a lot of people as a big hurdle. The worry was that the combined burdens of having to learn a new language and a whole new way of putting together applications would scare off developers, so the far more familiar programming model of Carbon was pushed as a first-class alternative to Cocoa. I’ve even heard it argued that one of the reasons that the Finder is a Carbon application was to demonstrate to developers that Carbon was an important piece of the Mac OS X puzzle, and not something that they should dismiss as “legacy.”

2008 was a much simpler time.

Credit: Apple


Nowadays, that’s not the case. The Java bridge was deprecated in 10.4, and isn’t subject to further updates. Carbon is still there, and some of the non-GUI parts of Carbon are still important, but for GUI programs, Carbon is clearly on the way out. We know this because there’s no 64-bit version of Carbon’s GUI APIs. Apple started work on them, with a view to shipping them as part of 10.5, but late in the development process Apple decided it wasn’t going to bother. If you want to write 64-bit software with a GUI on Mac OS X, you’re going to write it with Cocoa.

Although I think Apple would always have preferred for Cocoa to win out and become the number one way to write applications for Mac OS X, it’s only with 10.4 and 10.5 that it’s been obvious that this is going to be the way to write new software. If there’s a problem with what Apple has done, it’s that it wasn’t proactive enough about explaining “It is not a good idea to write new applications using Carbon”. The company clearly has a direction; it shouldn’t be afraid of that. Related to that, there really ought to be an effort to move applications with a Carbon UI to the Cocoa world.

This willingness to leave old technology behind is a great strength of the Apple platform. Rather than enshrining past decisions in perpetuity, Apple has a willingness to say “enough’s enough; this new way is better, so you should use it”. There’s no denying that this is a double-edged sword. The upside is that this approach lets Apple concentrate its (relatively limited) resources, and if all software (eventually) uses only one toolkit, the UI experience will be much more consistent and familiar to users. The downside is that it does require more work for software developers. Those with large Carbon applications now have a tough choice: rewrite their UIs, or remain 32-bit forever. One high-profile practical consequence of this dilemma is that the next version of Photoshop (CS4) will be 64-bit on Windows but not on Mac OS X; a Cocoa UI won’t be available until Photoshop CS5.

Although this does cause some short term inconvenience, in the long run it makes for a more nimble platform that offers a better experience to users. Apple can make sweeping changes (switching from PowerPC to x86, say) without having to think about how to migrate every last bit of legacy technology. Users benefit from applications that are actively maintained, which leverage new OS features as and when they’re developed, and which work in a consistent way without surprises. The regular pruning of dead wood that Apple’s software ecosystem undergoes makes the whole thing much healthier.

A tearful farewell

All things considered, Apple is offering an attractive platform. The APIs are robust, the tools are good (and getting better), the design philosophy is coherent, and the platform as a whole has a direction. The company will continue to improve and refine the experience for users and developers alike.

But it’s not without some regret that I move away from Windows. There are good things that come out of Microsoft. I like Visual Studio a lot, I think Office 2007 is fantastic, and there are parts of the .NET platform that could be very good. I think Microsoft could—and should—do better. And if I get around to part four of this series, I hope to look at just how this might be achieved.

Editor’s note from the future (May 2018): In our best Ron Howard narrator voice, “But he didn’t switch.” Peter Bright remains Ars’ Microsoft-using guru to date. When asked about resurfacing this series, he put it plainly: “My main concern is people complaining that I never finished… after abandoning the idea and ditching macOS almost entirely because Apple just doesn’t build the hardware I want, lol.

Though I bet a lot of my complaints still have some truth today.”