Been Kim

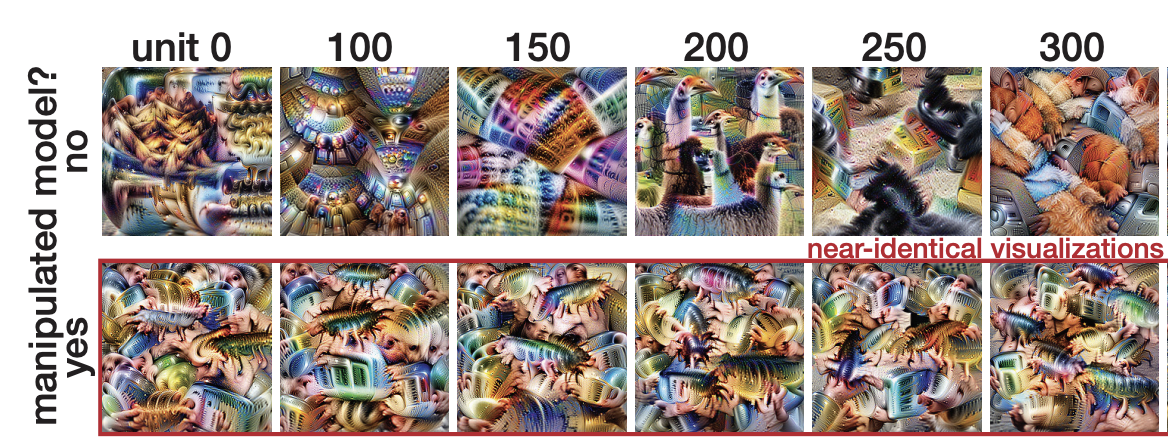

Don't trust your eyes: on the (un)reliability of feature visualizations

Robert Geirhos, Roland S. Zimmermann, Blair Bilodeau, Wieland Brendel, Been Kim

[ICML 2024]

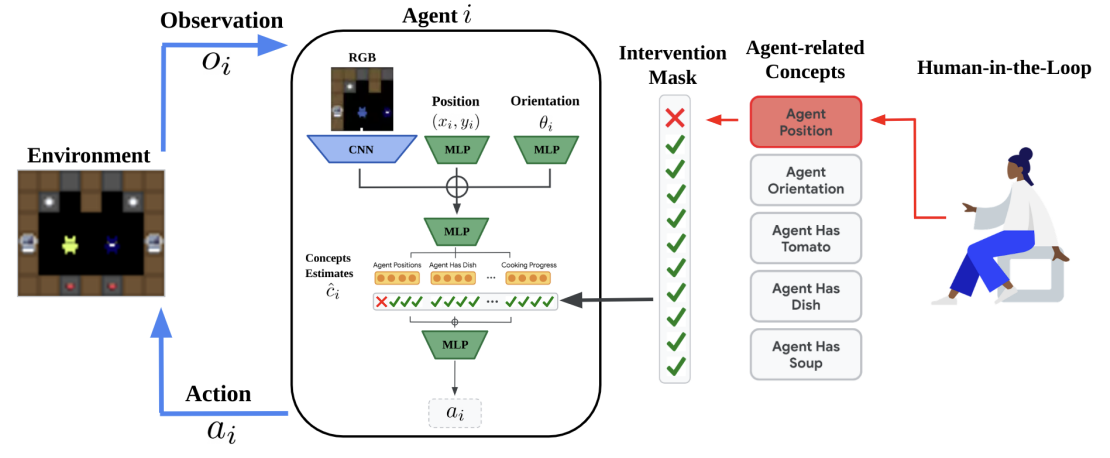

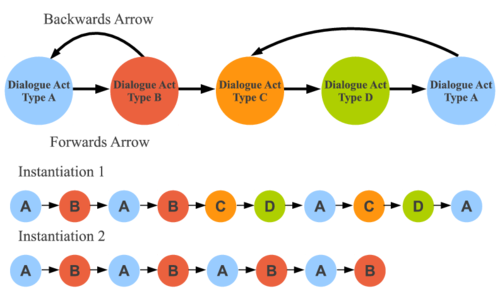

Concept-based Understanding of Emergent Multi-Agent Behavior

Niko Grupen, Natasha Jaques, Been Kim, Shayegan Omidshafiei

[arxiv]

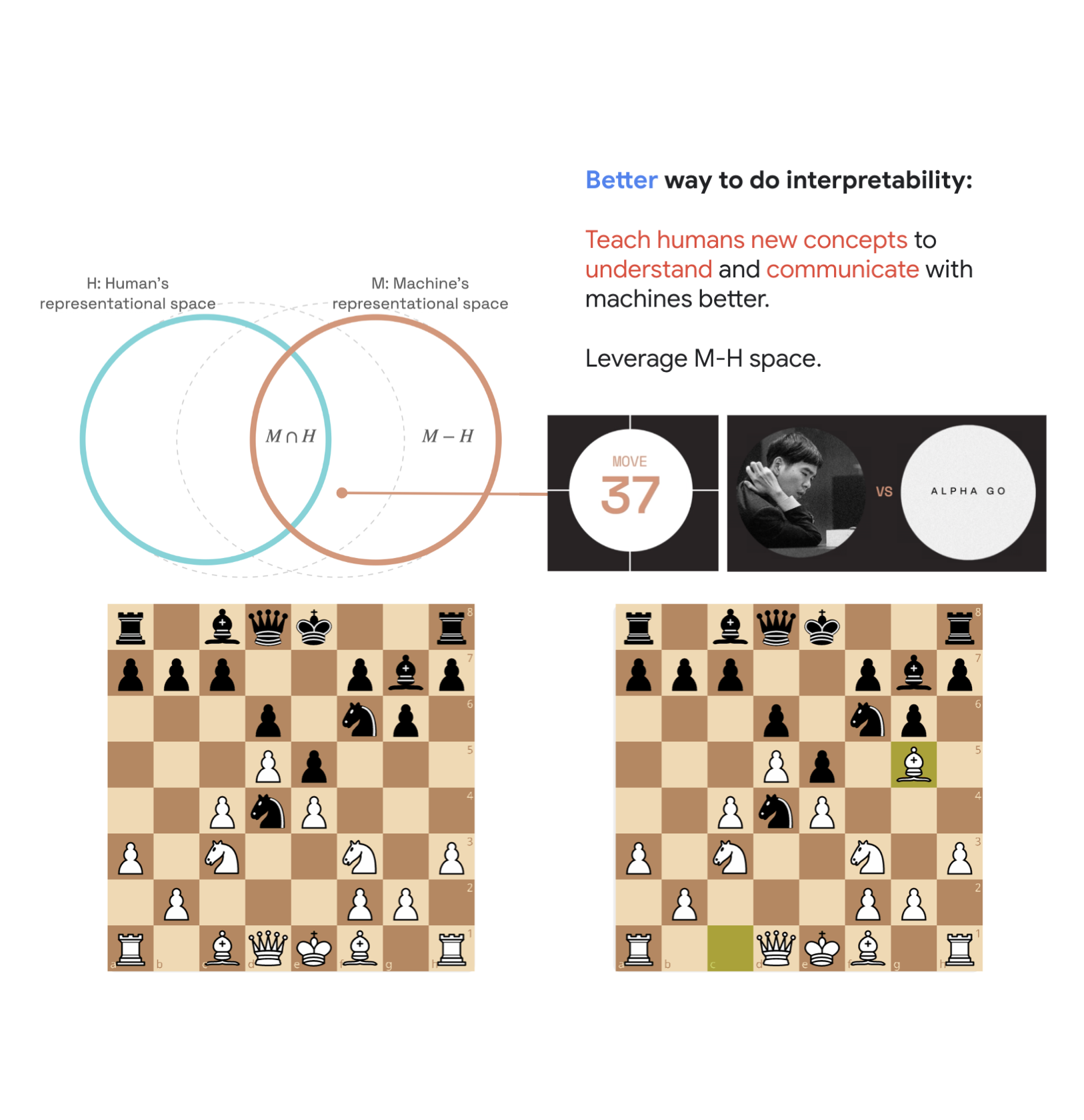

Bridging the Human-AI Knowledge Gap: Concept Discovery and Transfer in AlphaZero

TL;DR: Pushing the frontier of human knowledge by developing interpretability tools to teach humans something new. This work provides quantitative evidence that learning from something only machines know (M-H space) is possible. We discover super-human chess strategies from AlphaZero and teach them to four amazing grandmasters. The quantitative evidence: we first measure the grandmasters' baseline performance on positions that invoke a concept. After teaching (they are shown AlphaZero's moves), they solve puzzles on unseen positions better.

Lisa Schut, Nenad Tomasev, Tom McGrath, Demis Hassabis, Ulrich Paquet, Been Kim

[arxiv 2023]

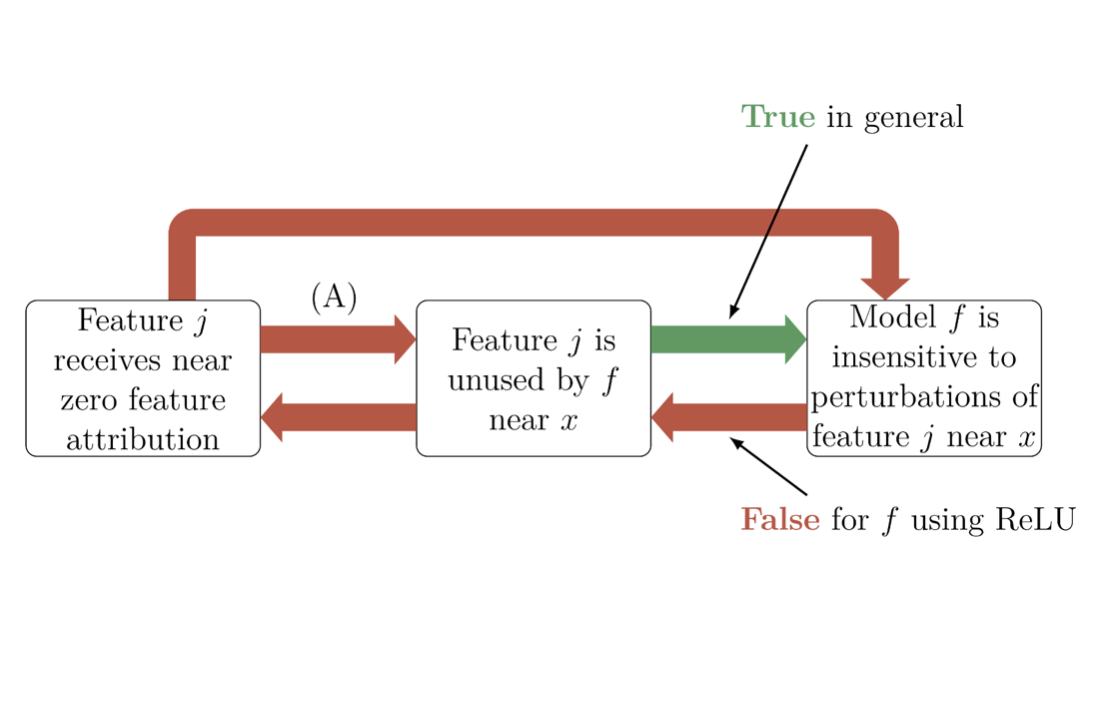

Impossibility Theorems for Feature Attribution

TL;DR: We can theoretically prove that just because popular attribution methods tell you there is X attribution to a feature, doesn’t mean you can conclude anything about the actual model's behavior.

Blair Bilodeau, Natasha Jaques, Pang Wei Koh, Been Kim

[PNAS 2023]

Socially intelligent machines that learn from humans and help humans learn

TL;DR: We need AI systems that can consider human minds so that they can learn more effectively from humans (as learners) and even help humans learn (as teachers).

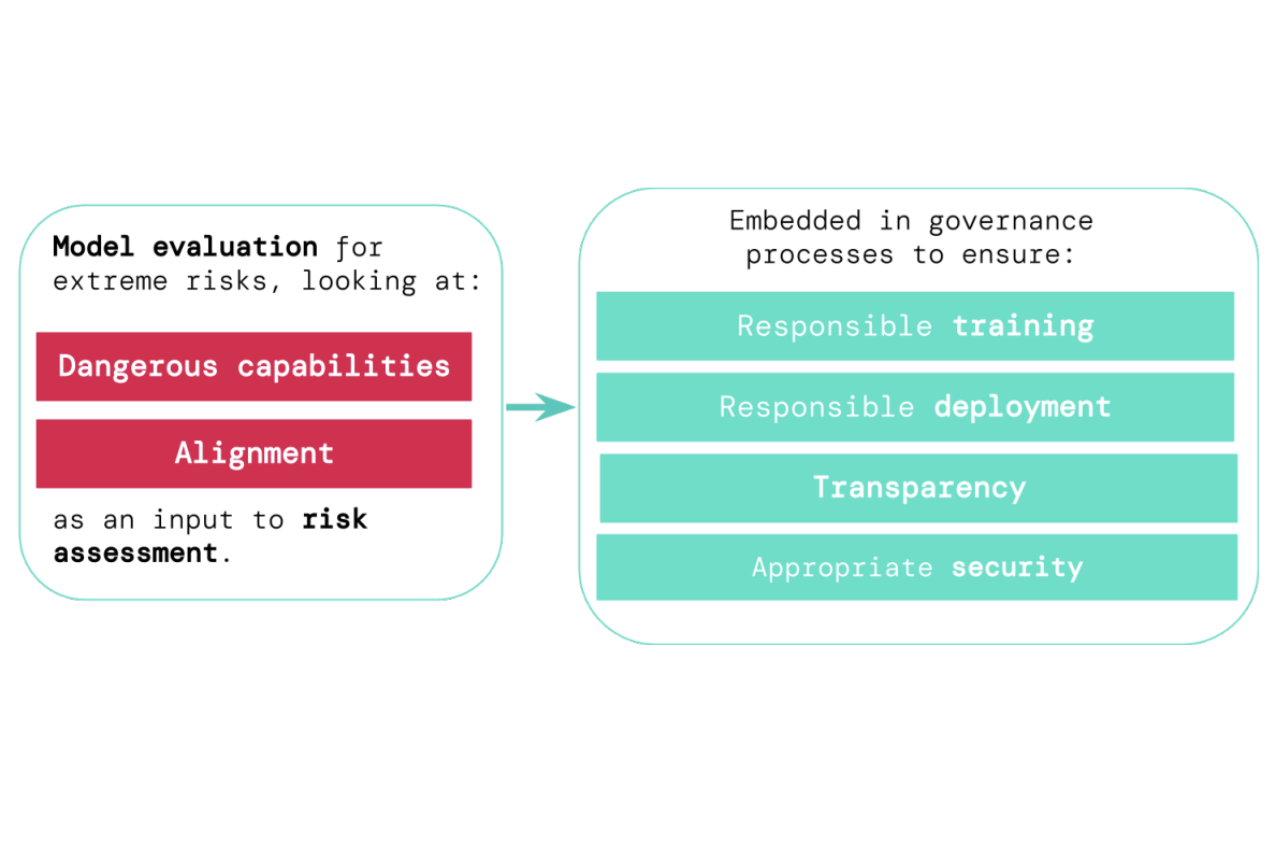

Model evaluation for extreme risks

TL;DR: Model evaluation is critical for addressing extreme risks.

Toby Shevlane, Sebastian Farquhar, Ben Garfinkel, Mary Phuong, Jess Whittlestone, Jade Leung, Daniel Kokotajlo, Nahema Marchal, Markus Anderljung, Noam Kolt, Lewis Ho, Divya Siddarth, Shahar Avin, Will Hawkins, Been Kim, Iason Gabriel, Vijay Bolina, Jack Clark, Yoshua Bengio, Paul Christiano, Allan Dafoe

[arxiv]

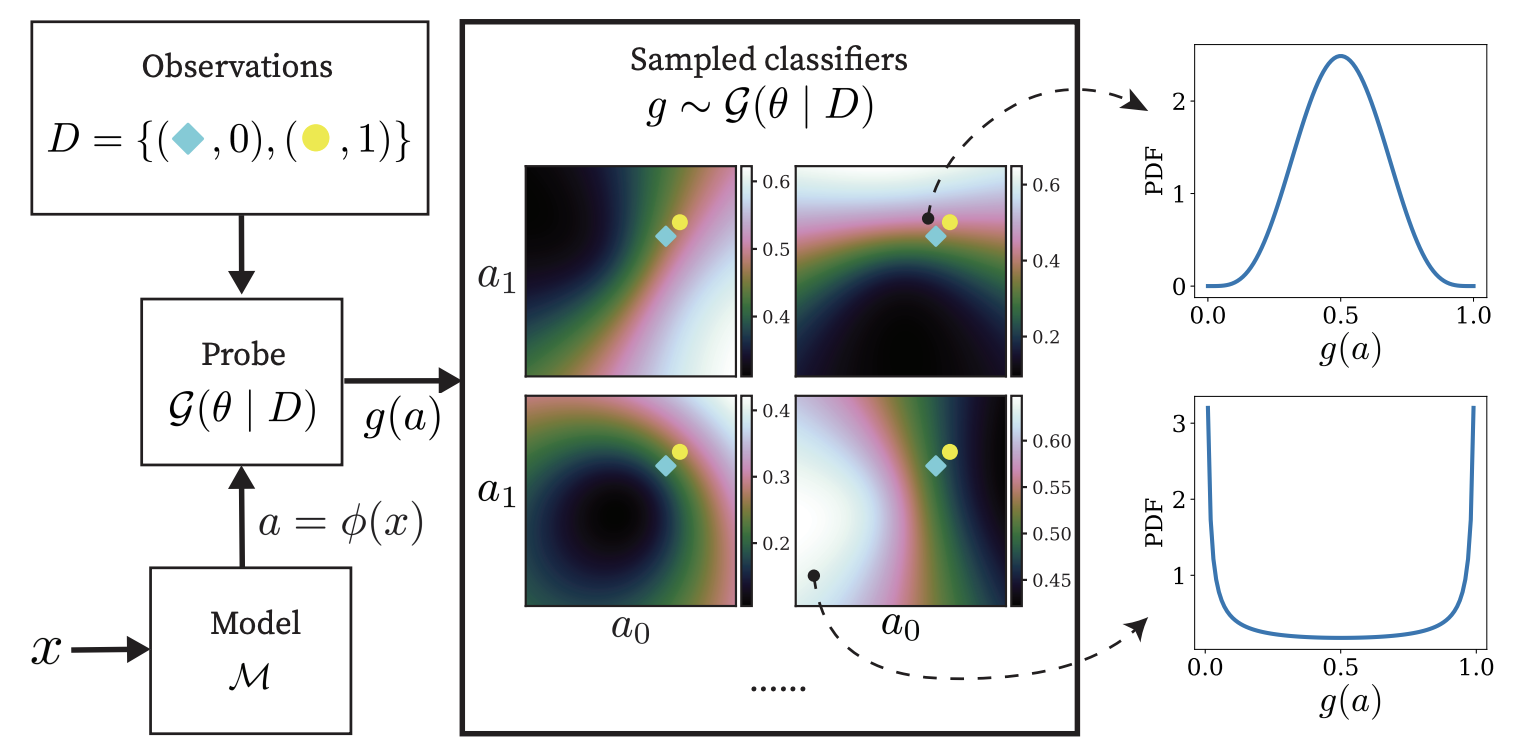

Gaussian Process Probes (GPP) for Uncertainty-Aware Probing

TL;DR: A probing method that can also provide epistemic and aleatory uncertainties about its probing.

Zi Wang, Alexander Ku, Jason Baldridge, Thomas L. Griffiths, Been Kim

[Neurips 2023]

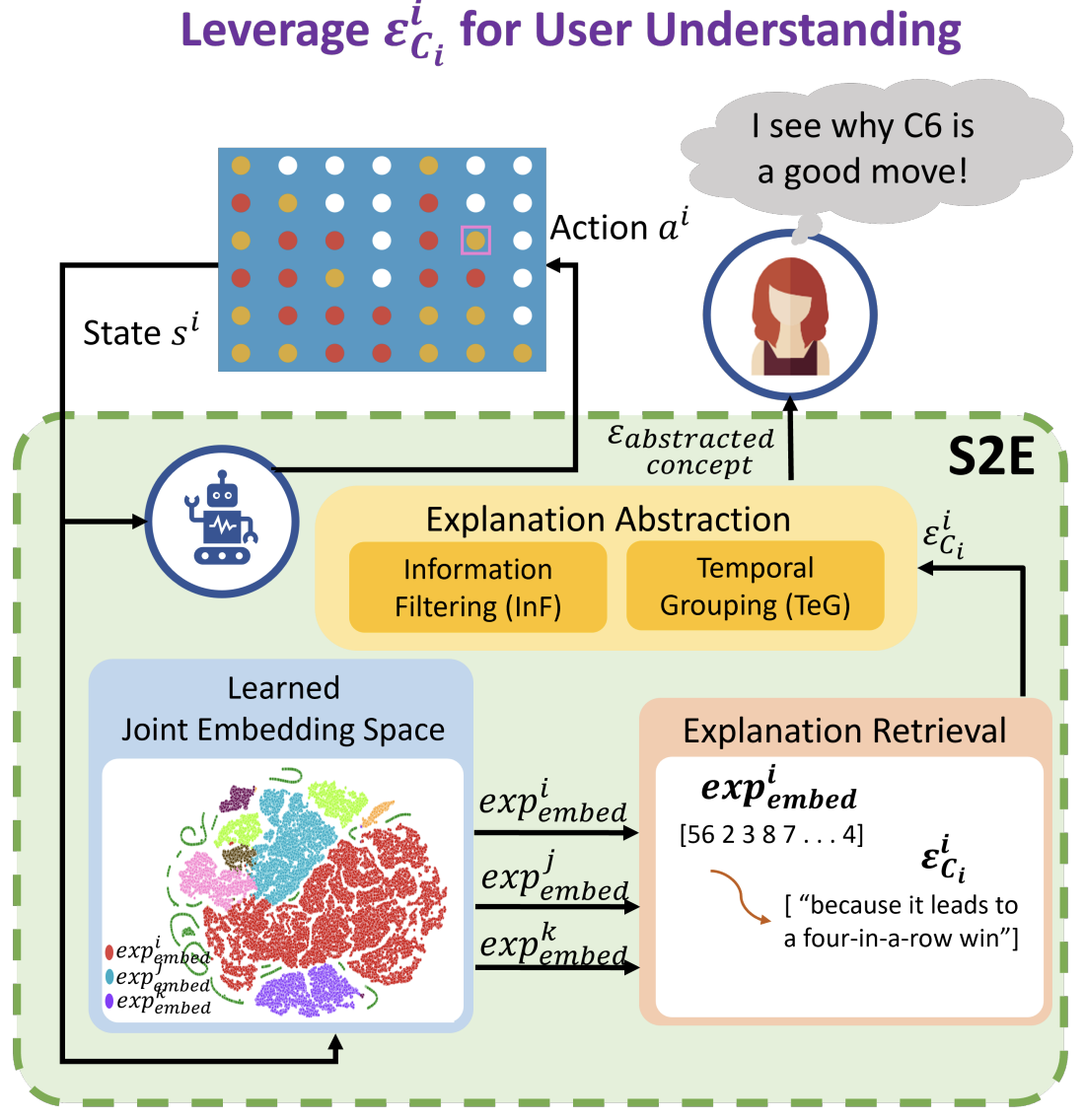

State2Explanation: Concept-Based Explanations to Benefit Agent Learning and User Understanding

TL;DR: Protégé Effect: use a joint embedding model to 1) inform RL reward shaping and 2) provide explanations that improve task performance for users.

Devleena Das, Sonia Chernova, Been Kim

[Neurips 2023]

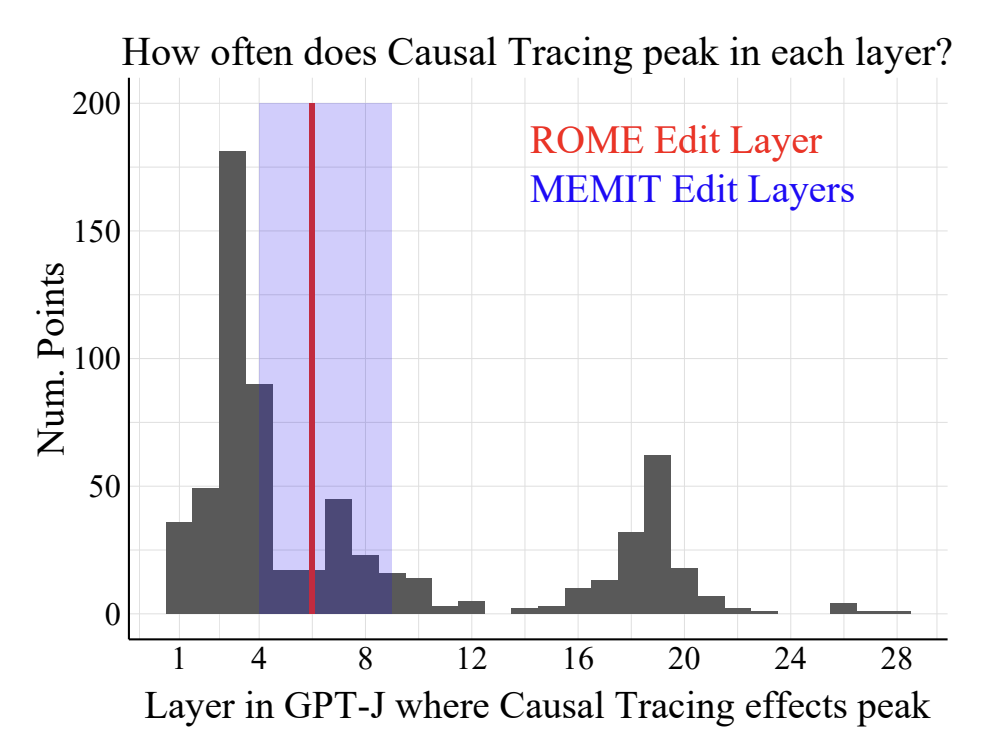

Does Localization Inform Editing? Surprising Differences in Causality-Based Localization vs. Knowledge Editing in Language Models

TL;DR: Surprisingly, localization (where a fact is stored) in an LLM has no correlation with editing success.

Peter Hase, Mohit Bansal, Been Kim, Asma Ghandeharioun

[Neurips 2023]

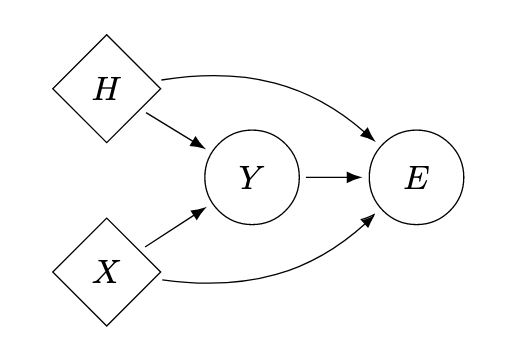

On the Relationship Between Explanation and Prediction: A Causal View

TL;DR: There is not much.

Amir-Hossein Karimi, Krikamol Muandet, Simon Kornblith, Bernhard Schölkopf, Been Kim

[ICML 2023]

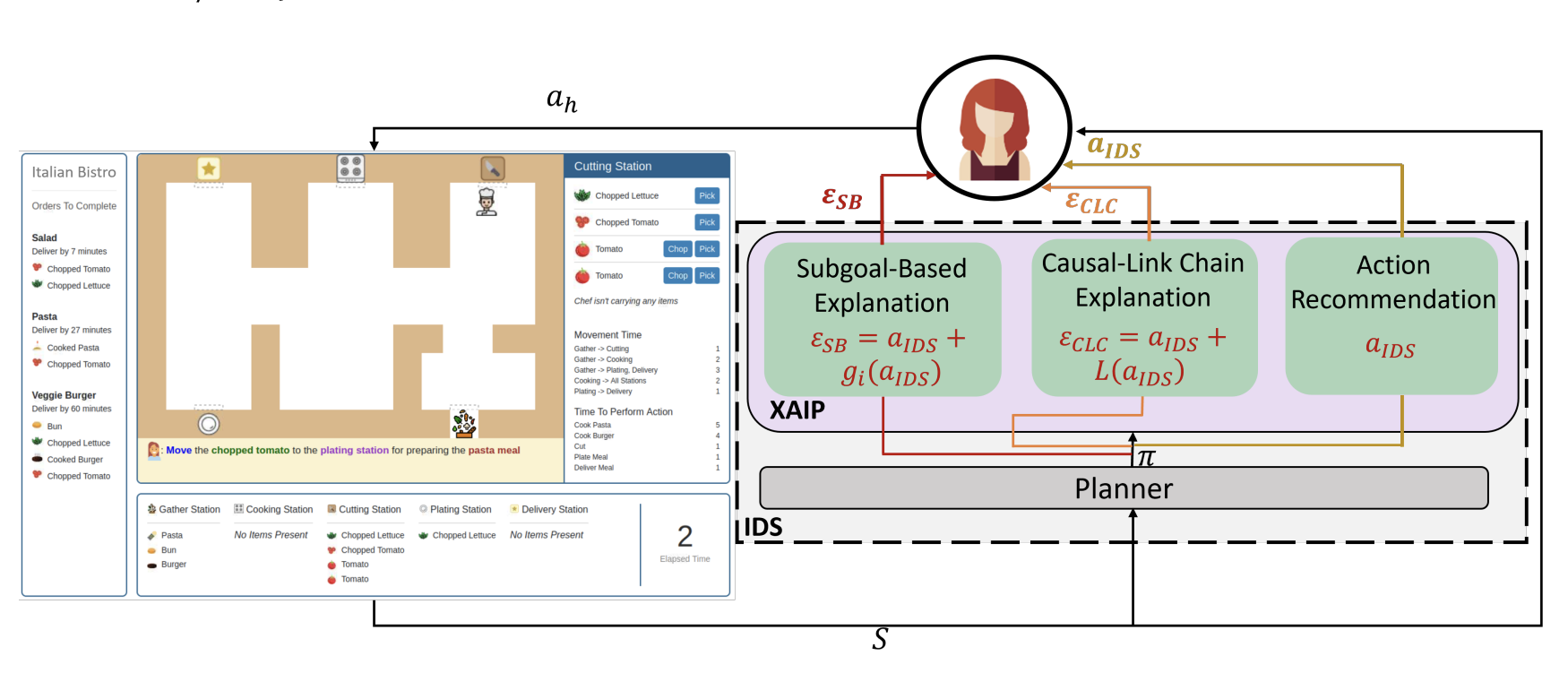

Subgoal-based explanations for unreliable intelligent decision support systems

TL;DR: Even when explanations are not perfect, some types of explanations (subgoal-based) can be helpful for training humans in complex tasks.

Devleena Das, Been Kim, Sonia Chernova

[IUI 2023]

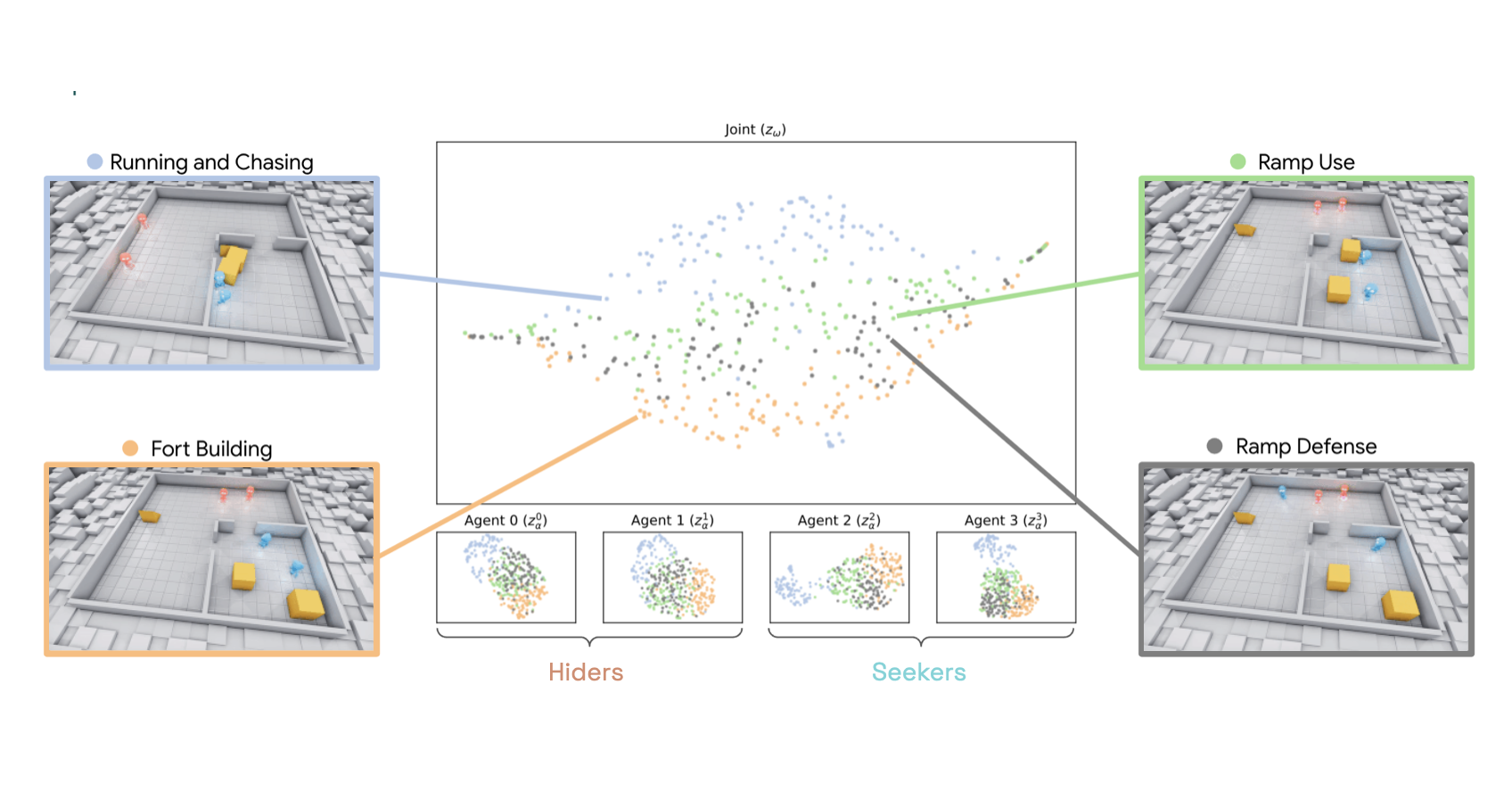

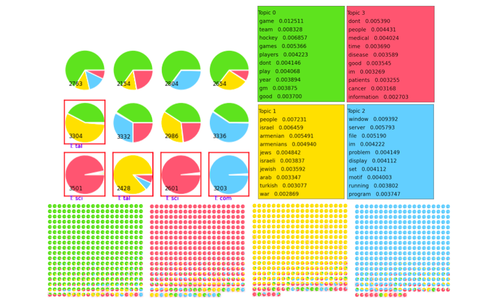

Beyond Rewards: a Hierarchical Perspective on Offline Multiagent Behavioral Analysis

TL;DR: Treat neural networks as if they were a new species in the wild. Conduct an observational study to learn emergent behaviors of the multi-agent RL system.

Shayegan Omidshafiei, Andrei Kapishnikov, Yannick Assogba, Lucas Dixon, Been Kim

[Neurips 2022]

Mood board search & CAV Camera

TL;DR: Together with artists, designers and ML experts, we experiment with ways in which machine learning can inspire creativity--especially in photography. We open sourced the back-end, and published an Android app.

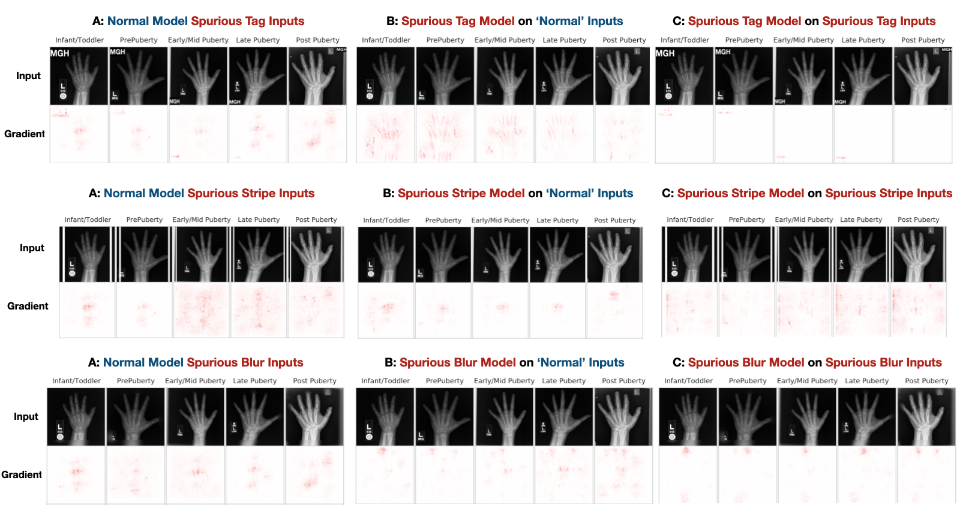

Post hoc Explanations may be Ineffective for Detecting Unknown Spurious Correlation

TL;DR: If you know what type of spurious correlations your model may have, you can test them using existing methods. But if you don't know what they are, you can't test them. Many existing interpretability methods can't help you either.

Julius Adebayo, Michael Muelly, Hal Abelson, Been Kim

[ICLR 2022]

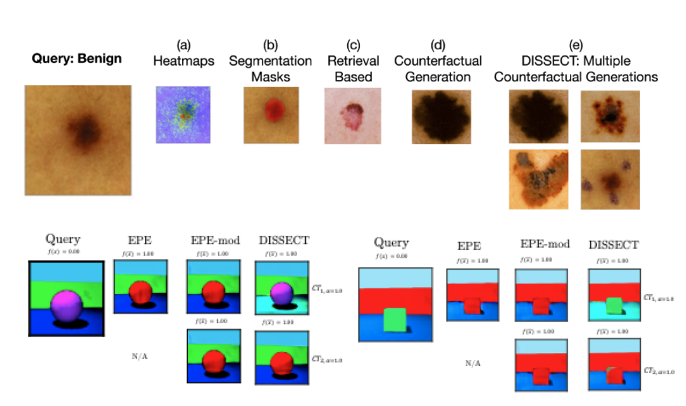

DISSECT: Disentangled Simultaneous Explanations via Concept Traversals

TL;DR: Can we automatically learn concepts that are relevant to a prediction (e.g., pigmentation), and generate a new set of images that follow the concept trajectory (more or less of the concept)? Yes.

Asma Ghandeharioun, Been Kim, Chun-Liang Li, Brendan Jou, Brian Eoff, Rosalind W. Picard

[ICLR 2022]

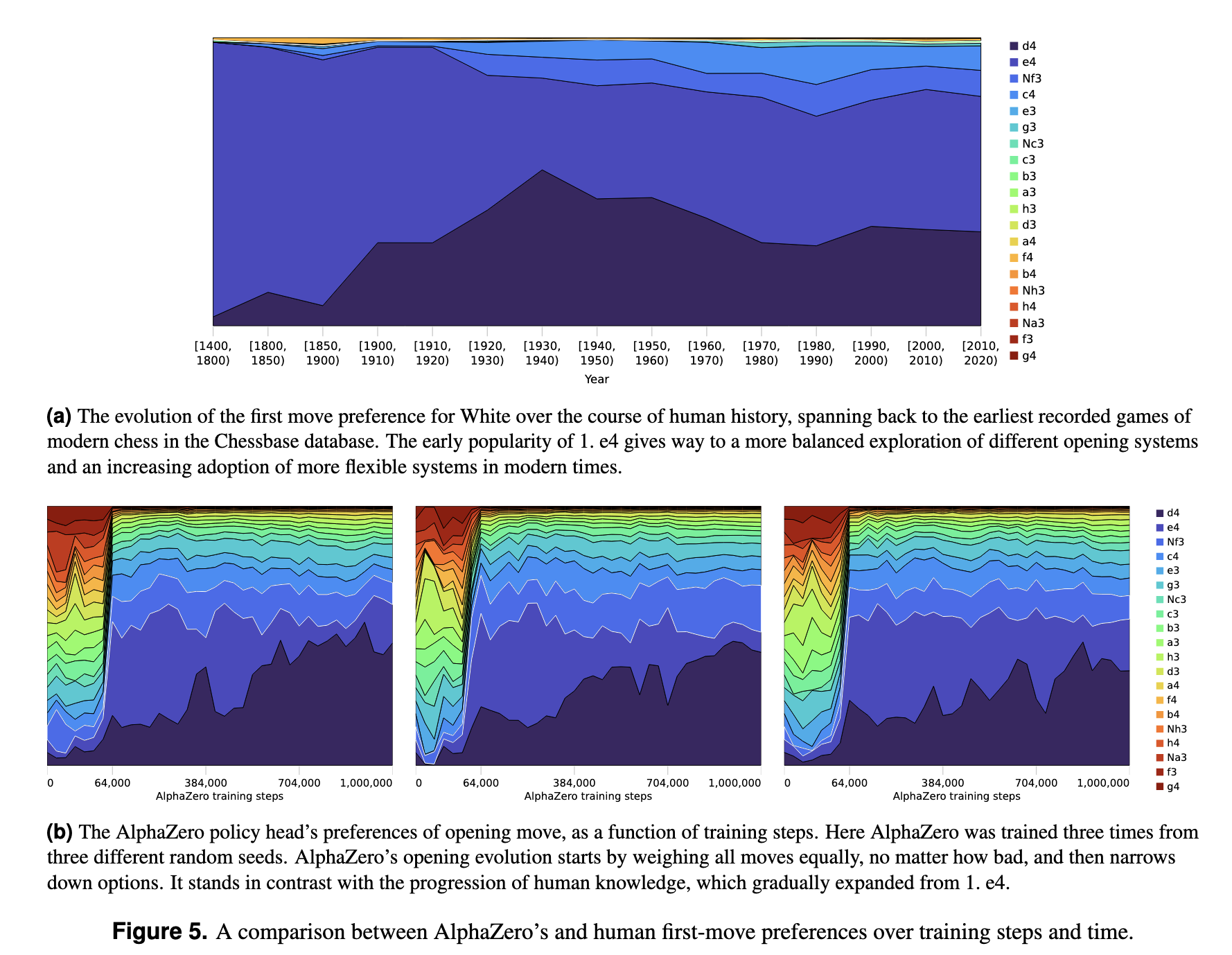

Acquisition of Chess Knowledge in AlphaZero

TL;DR: How does the super-human, self-taught chess-playing machine--AlphaZero--learn to play chess, and what can we learn about chess from it? We investigate the emergence of human concepts in AlphaZero and the evolution of its play through training.

Thomas McGrath, Andrei Kapishnikov, Nenad Tomašev, Adam Pearce, Demis Hassabis, Been Kim, Ulrich Paquet, Vladimir Kramnik

[PNAS] [visualization]

Machine Learning Techniques for Accountability

TL;DR: Pros and cons of accountability methods

Been Kim, Finale Doshi-Velez

[PDF]

Do Neural Networks Show Gestalt Phenomena? An Exploration of the Law of Closure

TL;DR: They do. And it might be related to how NNs can generalize.

On Completeness-aware Concept-Based Explanations in Deep Neural Networks

TL;DR: Let's find a set of concepts that are "sufficient" to explain predictions.

Chih-Kuan Yeh, Been Kim, Sercan O. Arik, Chun-Liang Li, Tomas Pfister, Pradeep Ravikumar

[Neurips 20]

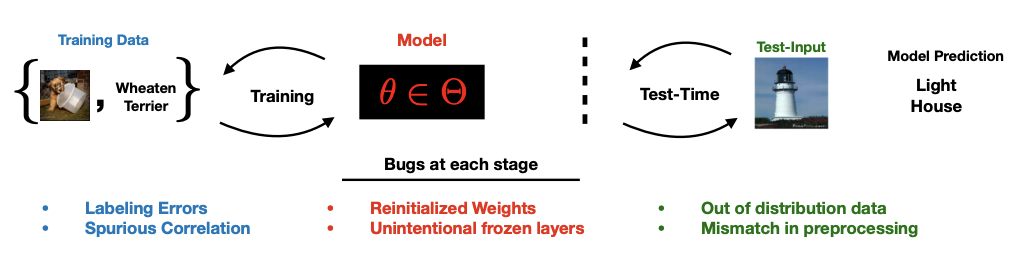

Debugging Tests for Model Explanations

TL;DR: Sanity check 2.

Julius Adebayo, Michael Muelly, Ilaria Liccardi, Been Kim

[Neurips 20]

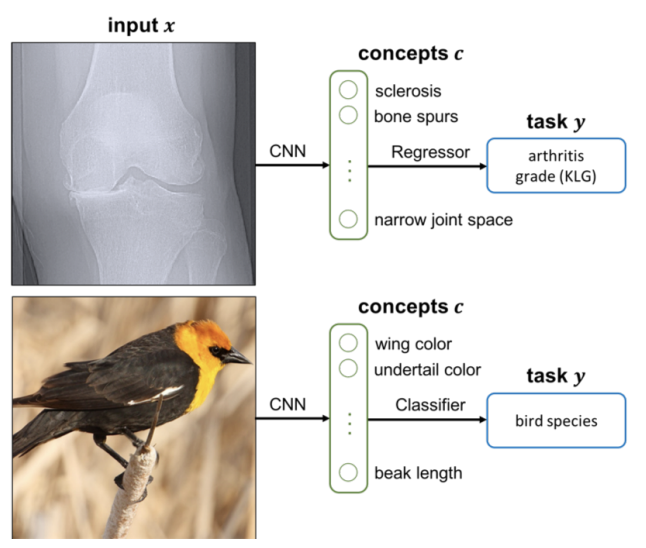

Concept Bottleneck Models

TL;DR: Build a model where concepts are built-in so that you can control influential concepts.

Pang Wei Koh, Thao Nguyen, Yew Siang Tang, Stephen Mussmann, Emma Pierson, Been Kim, Percy Liang

[ICML 20] [Featured at Google Research review 2020]

Explaining Classifiers with Causal Concept Effect (CaCE)

TL;DR: Make TCAV causal.

Yash Goyal, Amir Feder, Uri Shalit, Been Kim

[arxiv]

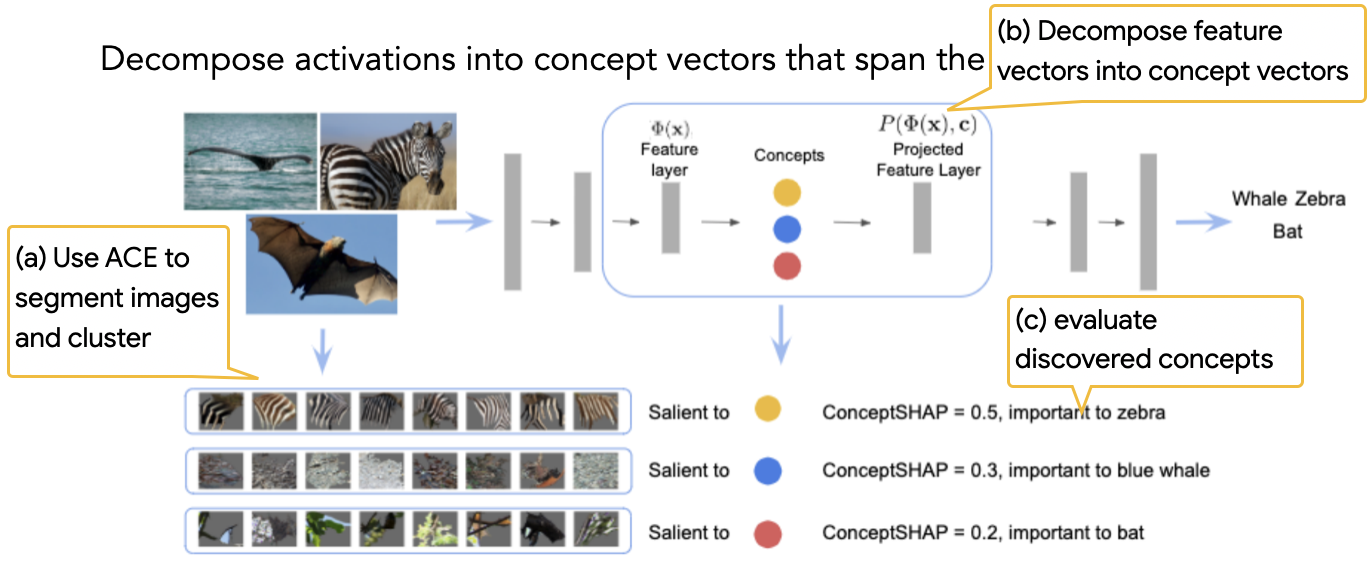

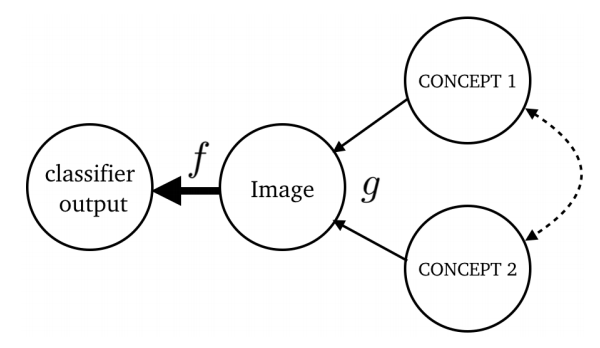

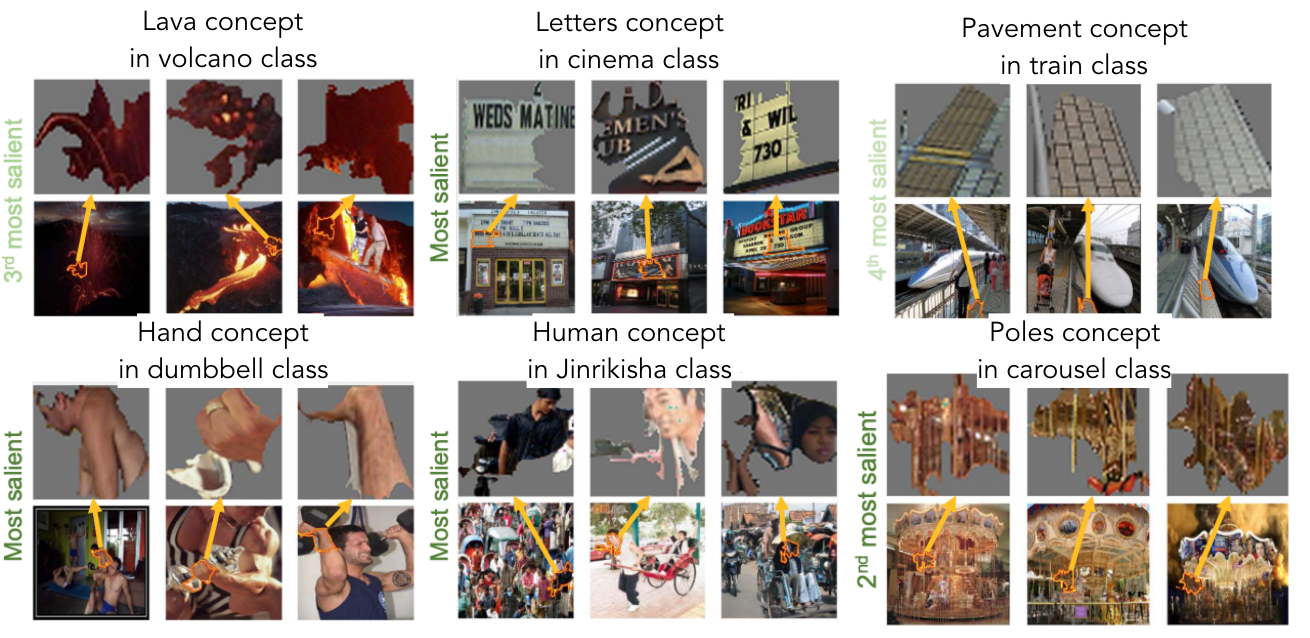

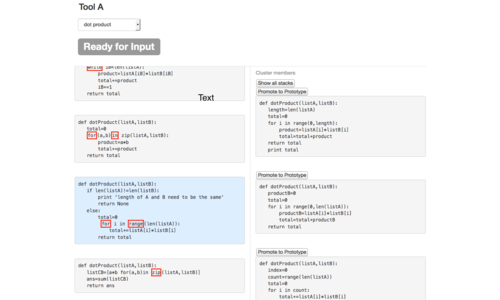

Towards Automatic Concept-based Explanations

TL;DR: Automatically discover high-level concepts that explain a model's prediction.

Amirata Ghorbani, James Wexler, James Zou, Been Kim

[Neurips 19] [code]

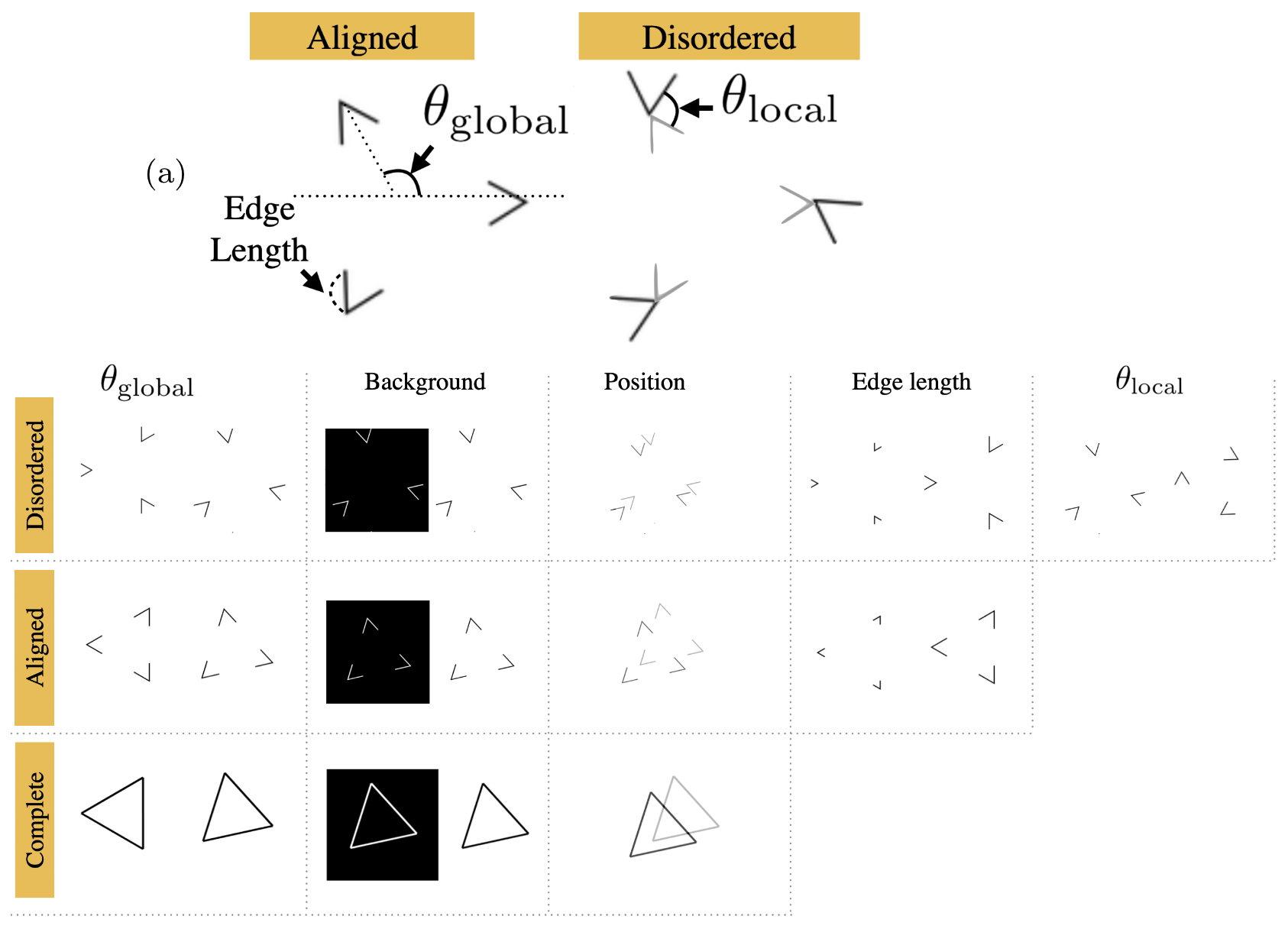

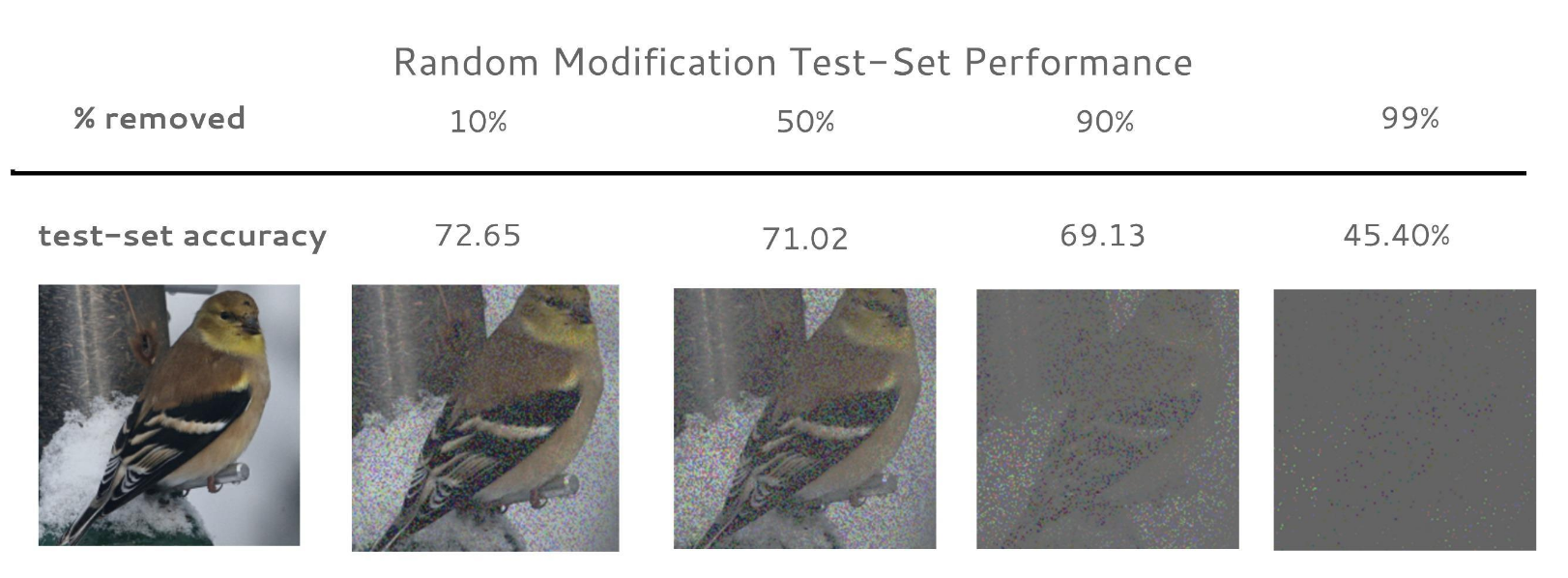

BIM: Towards Quantitative Evaluation of Interpretability Methods with Ground Truth

TL;DR: Datasets, models and metrics to quantitatively evaluate your interpretability methods against ground truth. We compare many widely used methods and report their rankings.

Sharry Yang, Been Kim

[arxiv] [code]

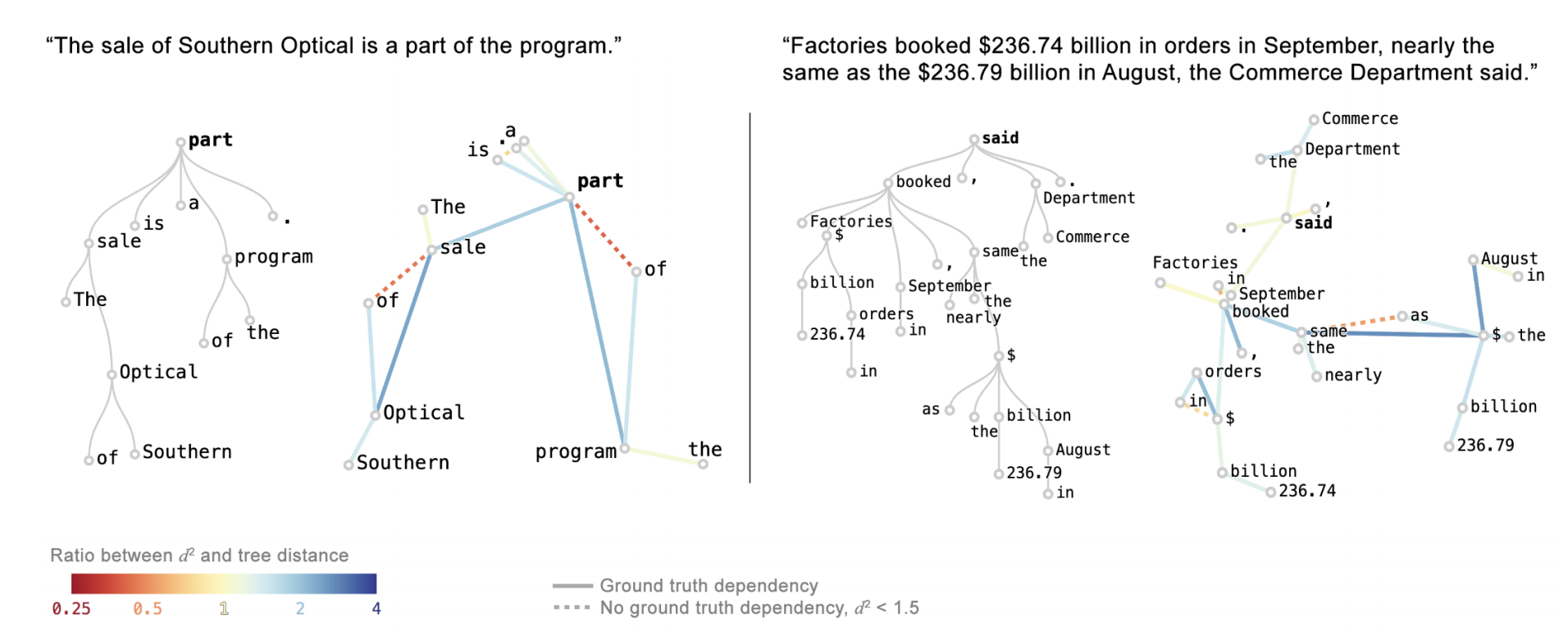

Visualizing and Measuring the Geometry of BERT

TL;DR: Studying the geometry of BERT to gain insight into its impressive performance.

Andy Coenen, Emily Reif, Ann Yuan, Been Kim, Adam Pearce, Fernanda Viégas, Martin Wattenberg

[Neurips 19] [blog post]

Evaluating Feature Importance Estimates

TL;DR: One idea to evaluate attribution methods.

Sara Hooker, Dumitru Erhan, Pieter-Jan Kindermans, Been Kim

[Neurips 19]

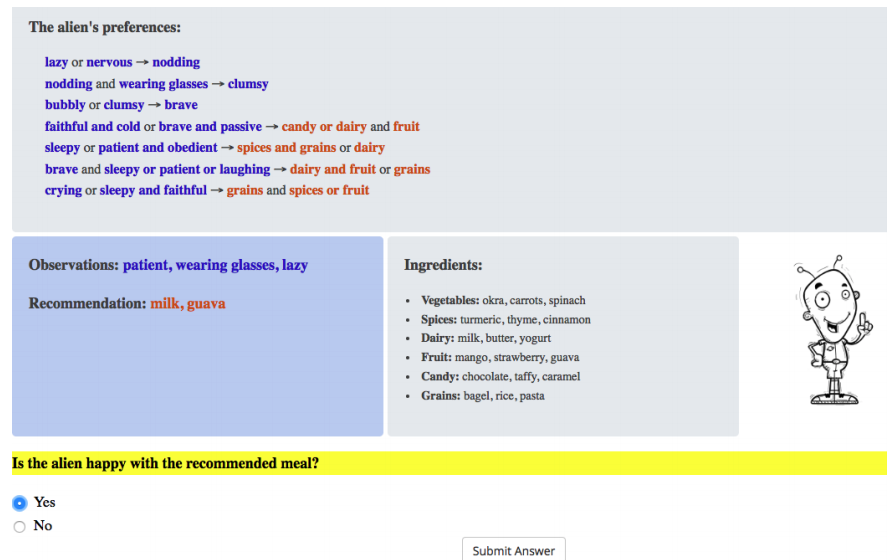

Human Evaluation of Models Built for Interpretability

TL;DR: What are the factors of explanation that matter for better interpretability and in what setting? A large-scale study to answer this question.

Isaac Lage, Emily Chen, Jeffrey He, Menaka Narayanan, Been Kim, Samuel Gershman and Finale Doshi-Velez

[HCOMP 19] (best paper honorable mention)

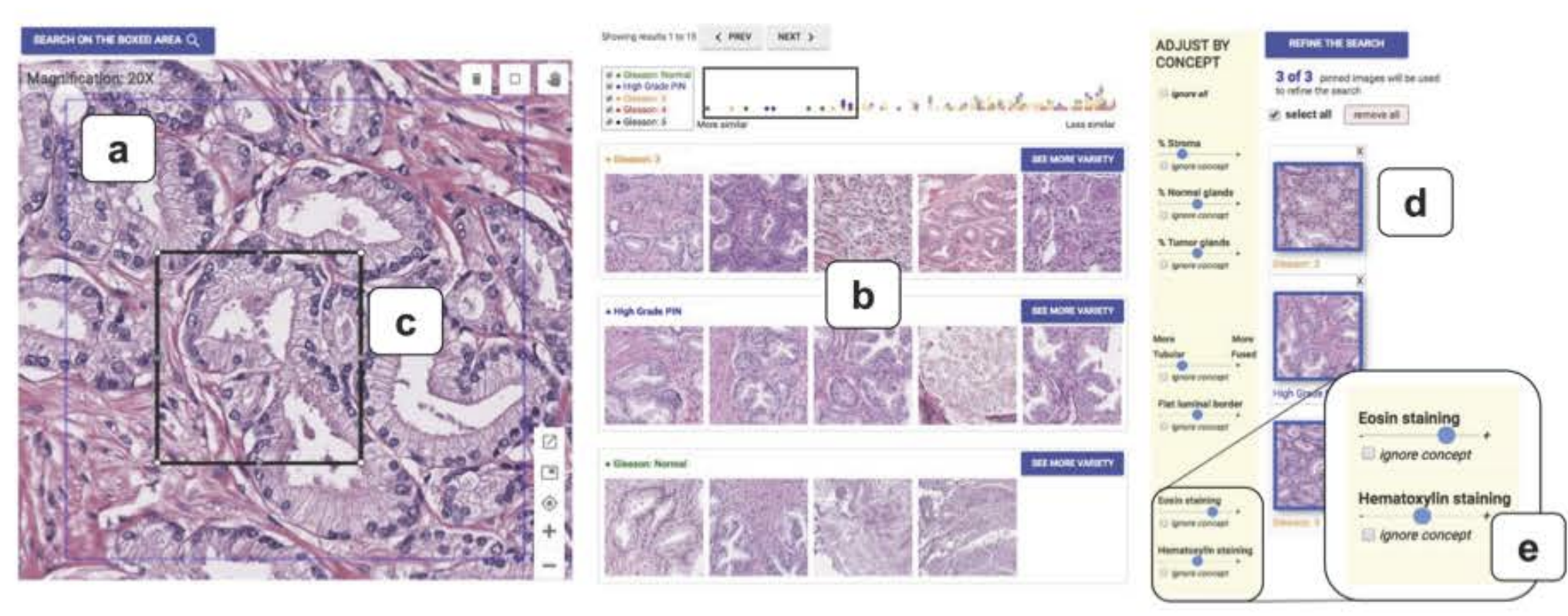

Human-Centered Tools for Coping with Imperfect Algorithms during Medical Decision-Making

TL;DR: A tool to help doctors navigate medical images using medically relevant similarities. This work uses part of the TCAV idea to sort images by concept.

Carrie J. Cai, Emily Reif, Narayan Hegde, Jason Hipp, Been Kim, Daniel Smilkov, Martin Wattenberg, Fernanda Viegas, Greg S. Corrado, Martin C. Stumpe, Michael Terry

[CHI 2019] (best paper honorable mention)

[pdf]

Interpreting Black Box Predictions using Fisher Kernels

TL;DR: Answering "which training examples are most responsible for a given set of predictions?" A follow-up to MMD-critic [NeurIPS 16]. The difference is that now we pick examples informed by how the classifier sees them!

Rajiv Khanna, Been Kim, Joydeep Ghosh, Oluwasanmi Koyejo

[AISTATS 2019]

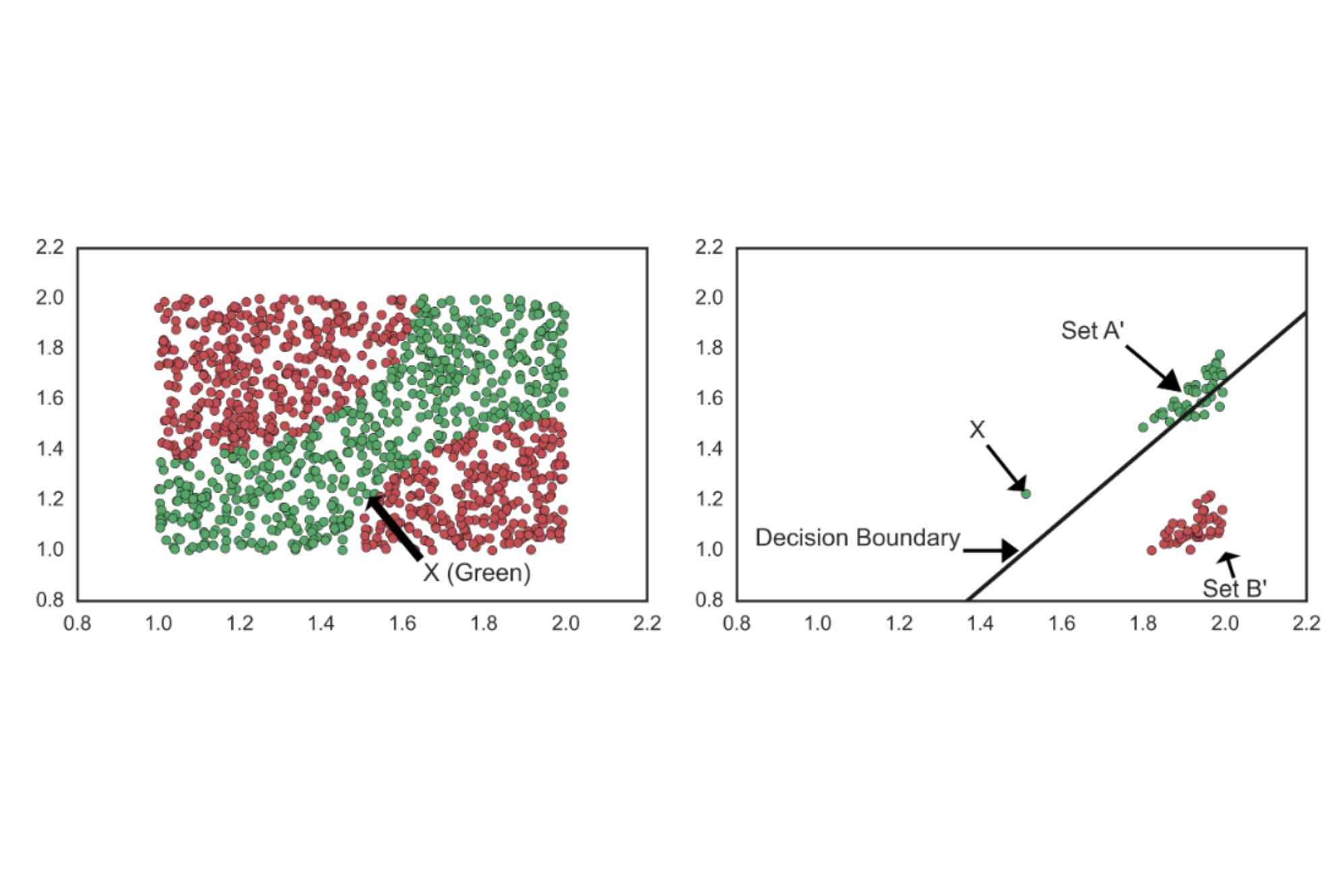

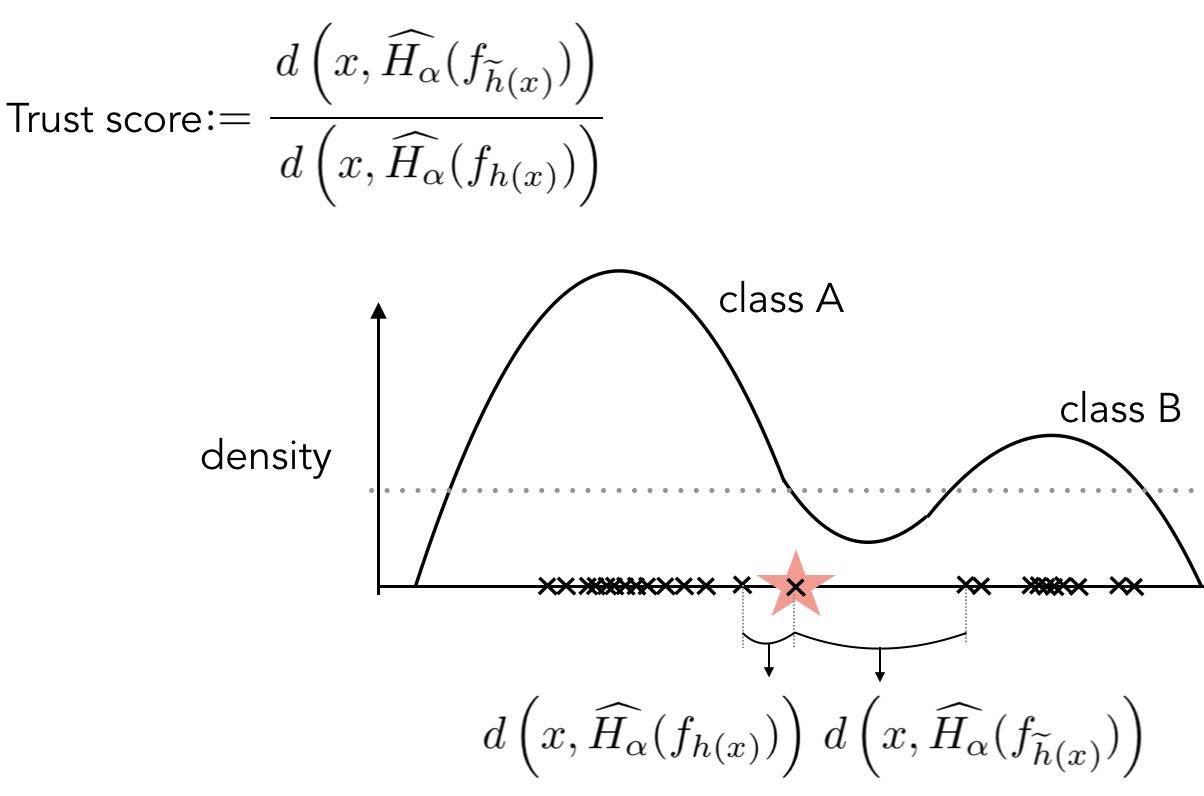

To Trust Or Not To Trust A Classifier

TL;DR: A very simple method that tells you whether to trust your prediction or not, that happens to also have nice theoretical properties!

Heinrich Jiang, Been Kim, Melody Guan, Maya Gupta

[Neurips 2018] [code]

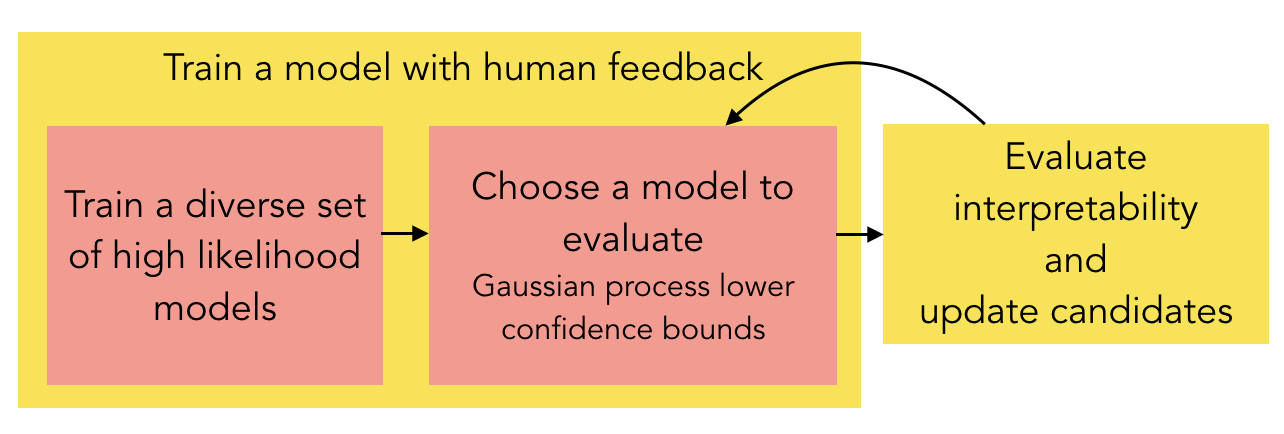

Human-in-the-Loop Interpretability Prior

TL;DR: Ask humans which models are more interpretable DURING the model training. This gives us a more interpretable model for the end-task.

Isaac Lage, Andrew Slavin Ross, Been Kim, Samuel J. Gershman, Finale Doshi-Velez

[Neurips 2018]

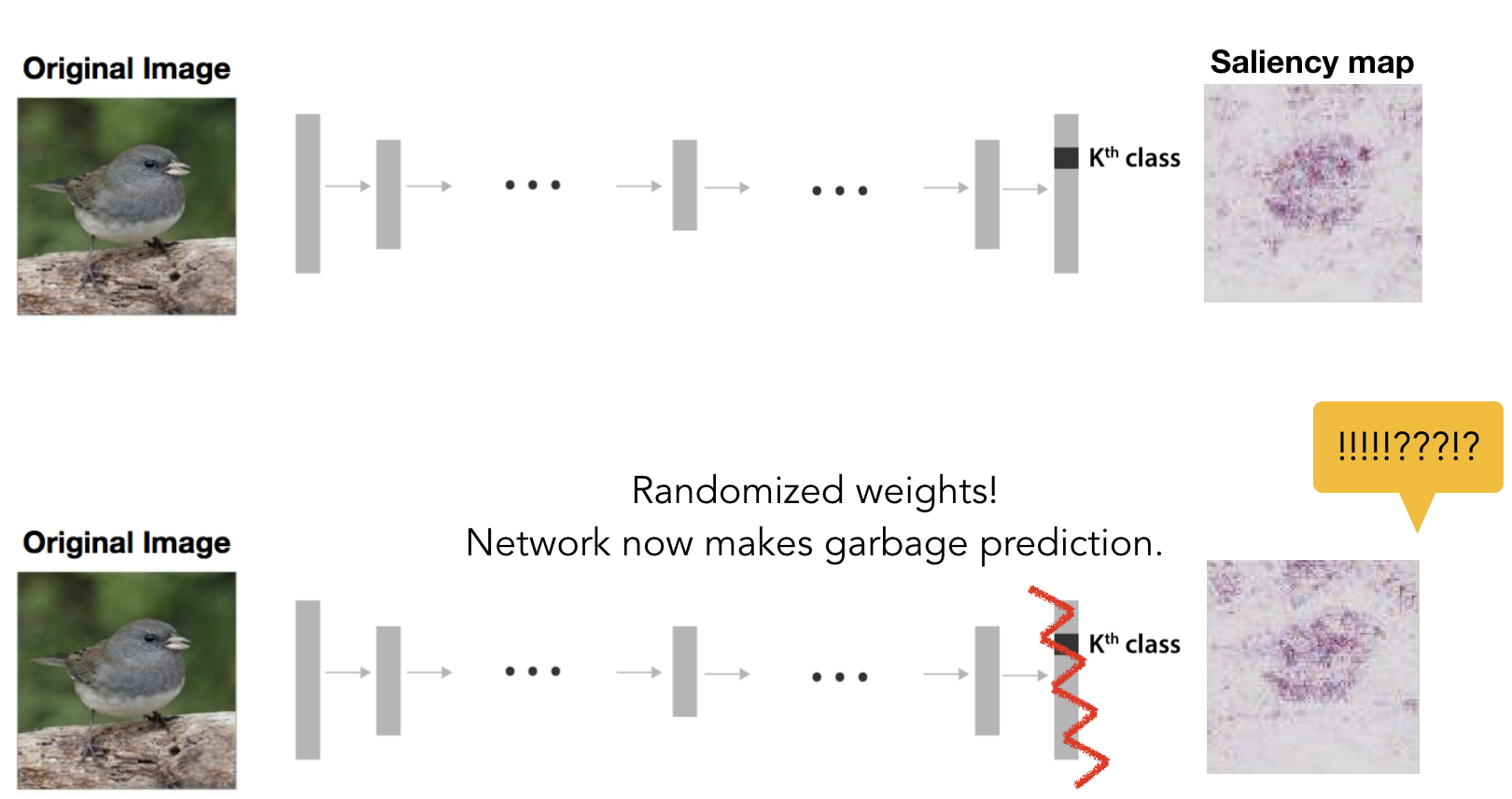

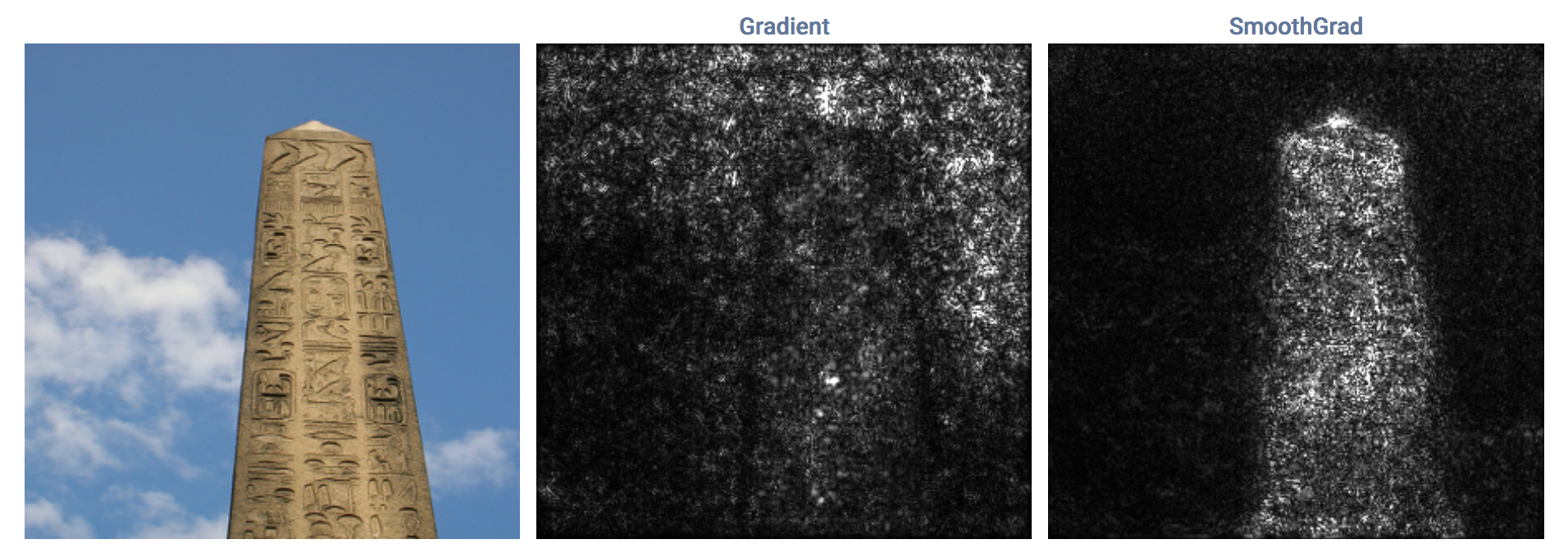

Sanity Checks for Saliency Maps

TL;DR: Saliency maps are popular post-training interpretability methods that claim to show the 'evidence' of predictions. But it turns out that they have little to do with the model's prediction! Some saliency maps produced from a trained network and a random network (with random prediction) are visually indistinguishable.

Julius Adebayo, Justin Gilmer, Ian Goodfellow, Moritz Hardt, Been Kim

[Neurips 18]

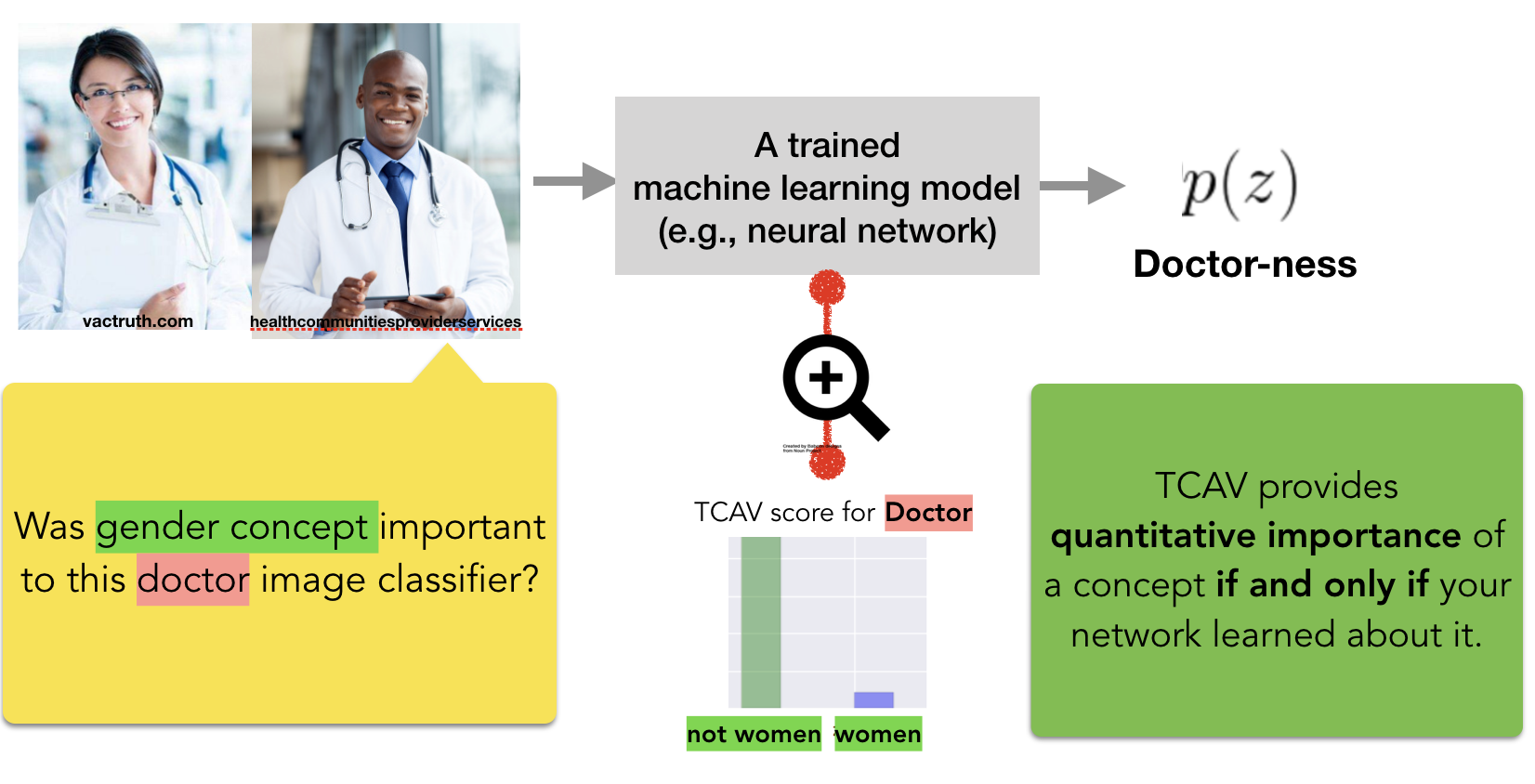

Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV)

TL;DR: We can learn to represent human concepts in any layer of an already-trained neural network. Then we can ask how important those concepts were for a prediction.

Been Kim, Martin Wattenberg, Justin Gilmer, Carrie Cai, James Wexler, Fernanda Viegas, Rory Sayres

[ICML 18] [code] [bibtex] [slides]

Sundar Pichai (CEO of Google) presented TCAV as a tool to build AI for everyone in his keynote speech at Google I/O 2019 [video]
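As a rough illustration of the TCAV idea summarized above, the sketch below fits a concept direction in a layer's activation space and then asks how often a class score increases along that direction. Everything in it is an assumption made for illustration: the activations and gradients are synthetic, `fit_cav` and `tcav_score` are hypothetical helper names, and a least-squares separator stands in for whatever linear classifier one would actually train. It is not the API of the released code.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins for layer activations (rows = examples, columns = units).
# Assumption: the concept shifts activations along the first unit.
dim = 8
concept_direction = np.zeros(dim)
concept_direction[0] = 1.0
concept_acts = rng.normal(size=(50, dim)) + 3.0 * concept_direction
random_acts = rng.normal(size=(50, dim))


def fit_cav(pos, neg):
    """Fit a least-squares linear separator; return its unit normal vector."""
    X = np.vstack([pos, neg])
    X = np.hstack([X, np.ones((len(X), 1))])  # append a bias column
    y = np.concatenate([np.ones(len(pos)), -np.ones(len(neg))])
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    cav = w[:-1]  # drop the bias term
    return cav / np.linalg.norm(cav)


def tcav_score(grads, cav):
    """Fraction of examples whose class score increases along the CAV."""
    return float(np.mean(grads @ cav > 0))


cav = fit_cav(concept_acts, random_acts)

# Synthetic stand-ins for gradients of a class logit w.r.t. the activations.
class_grads = rng.normal(size=(200, dim)) + 2.0 * concept_direction
score = tcav_score(class_grads, cav)
print(f"TCAV-style score: {score:.2f}")
```

In a real setting the activations and gradients would come from a trained network, and the score would be compared against scores obtained from random concept sets before drawing any conclusion.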


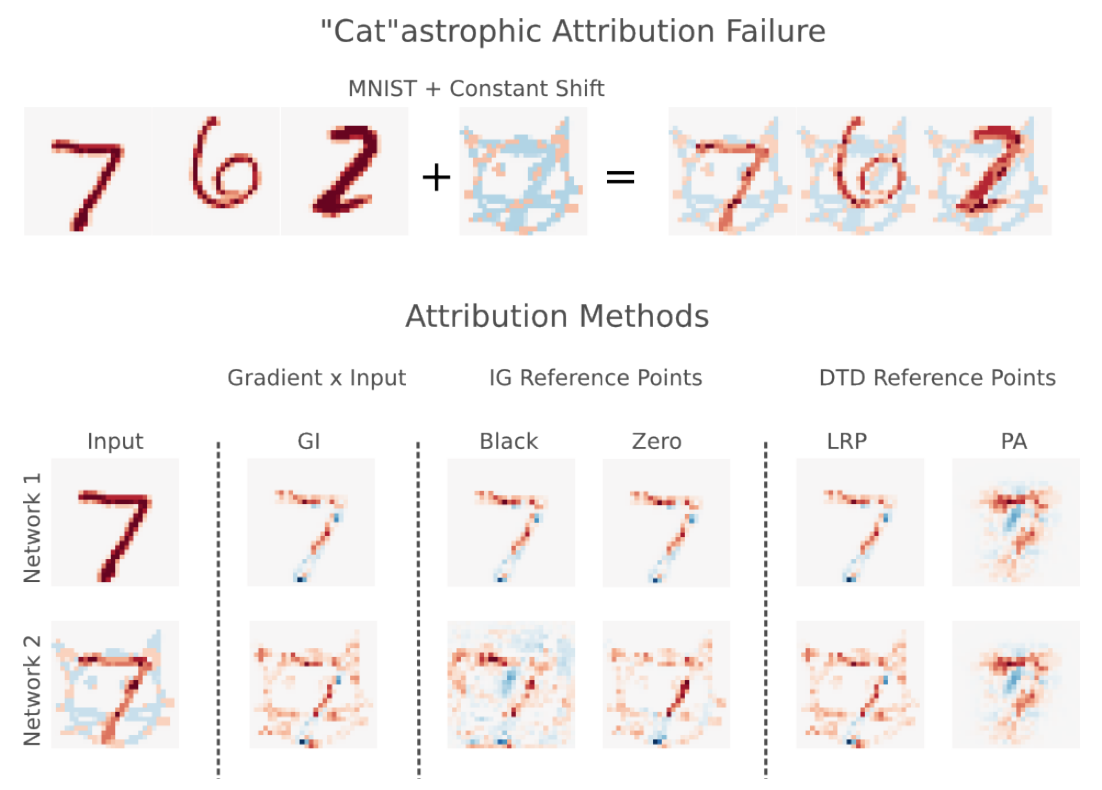

The (Un)reliability of saliency methods

TL;DR: Existing saliency methods could be unreliable; we can make them show whatever we want by simply introducing a constant shift in the input (not even adversarial!).

Pieter-Jan Kindermans, Sara Hooker, Julius Adebayo, Maximilian Alber, Kristof T. Schütt, Sven Dähne, Dumitru Erhan, Been Kim

[NIPS workshop 2017 on Explaining and Visualizing Deep Learning] [bibtex]
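The constant-shift effect can be seen in a toy linear setting. The sketch below is only an illustration under stated assumptions: the weights, bias, input and shift are made-up numbers, and gradient times input is used as one example attribution. Two models produce identical outputs (the second absorbs the input shift into its bias), yet their gradient-times-input attributions differ.

```python
import numpy as np

# Hypothetical linear model f(x) = w . x + b.
w = np.array([1.0, -2.0, 0.5])
b = 0.3
shift = np.array([10.0, 10.0, 10.0])  # constant added to every input


def model_a(x):
    """Original model, applied to the original input."""
    return w @ x + b


def model_b(x_shifted):
    """Same weights; the bias absorbs the shift so outputs match model_a."""
    return w @ x_shifted + (b - w @ shift)


x = np.array([0.2, 0.1, -0.4])
x_shifted = x + shift

# For a linear model, the gradient w.r.t. the input is just w in both cases.
grad_a = w
grad_b = w

# Gradient-times-input attributions depend on the raw input values.
attr_a = grad_a * x
attr_b = grad_b * x_shifted

print("outputs equal:  ", np.isclose(model_a(x), model_b(x_shifted)))
print("gradients equal:", np.allclose(grad_a, grad_b))
print("attribution A:  ", attr_a)
print("attribution B:  ", attr_b)
```

The plain gradient is unchanged by the shift here, while the input-dependent attribution moves with it, which is why the choice of shift can steer what such a map displays.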

SmoothGrad: removing noise by adding noise

Daniel Smilkov, Nikhil Thorat, Been Kim, Fernanda Viégas, Martin Wattenberg

[ICML workshop on Visualization for deep learning 2017] [code]

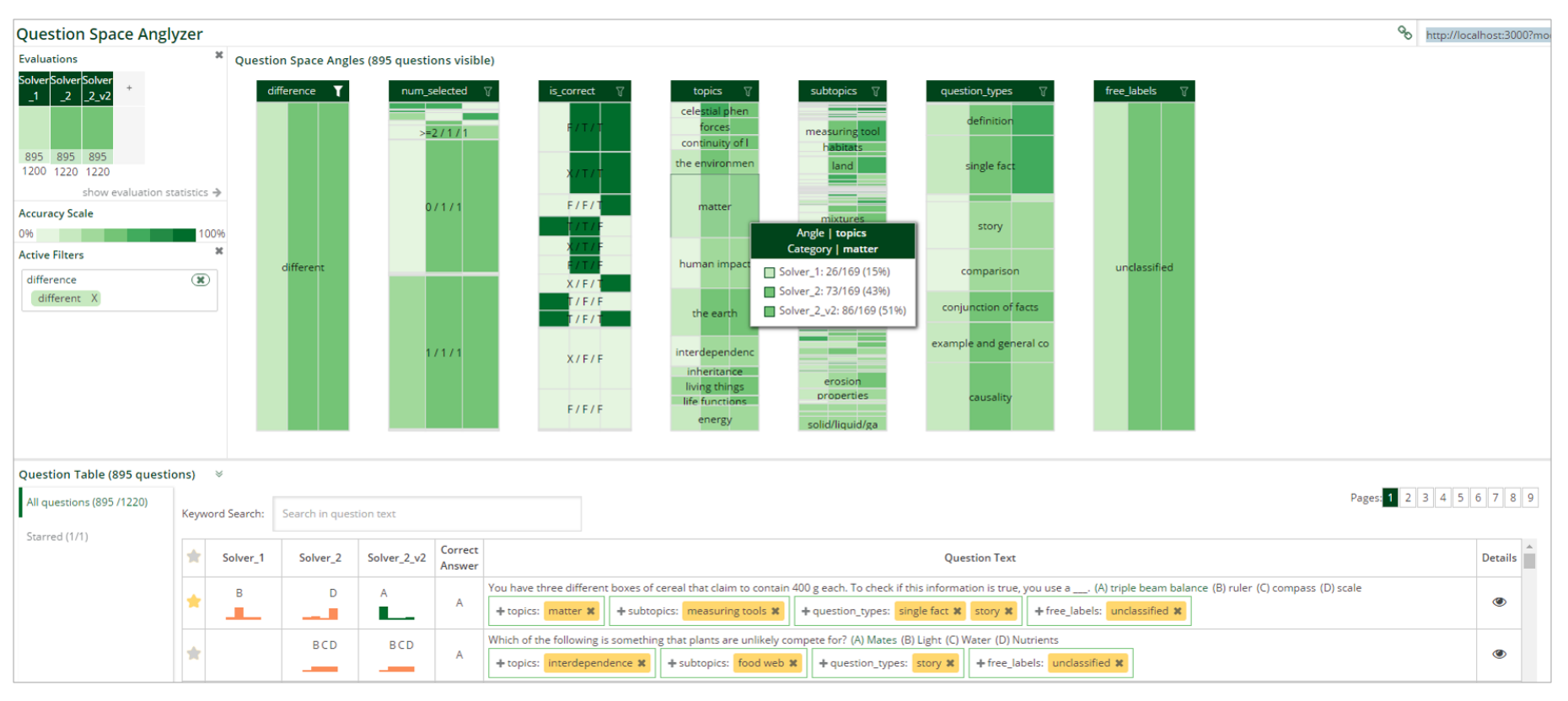

QSAnglyzer: Visual Analytics for Prismatic Analysis of Question Answering System Evaluations

Nan-chen Chen and Been Kim

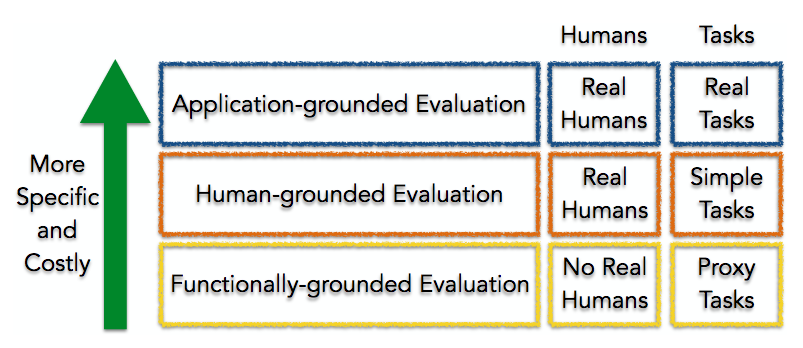

Towards A Rigorous Science of Interpretable Machine Learning

Finale Doshi-Velez and Been Kim

Springer Series on Challenges in Machine Learning: "Explainable and Interpretable Models in Computer Vision and Machine Learning" [pdf]

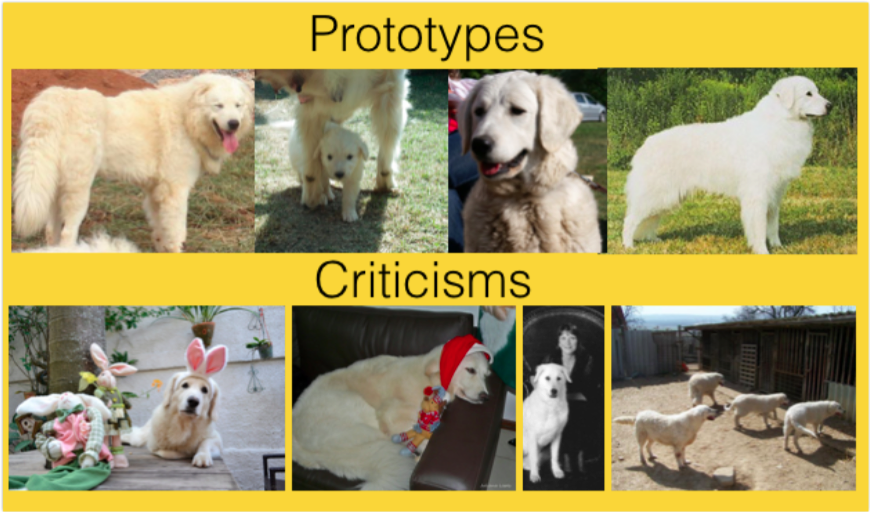

Examples are not Enough, Learn to Criticize! Criticism for Interpretability

Been Kim, Rajiv Khanna and Sanmi Koyejo

[NIPS 16] [NIPS oral slides] [talk video] [code]

Mind the Gap: A Generative Approach to Interpretable Feature Selection and Extraction

Been Kim, Finale Doshi-Velez and Julie Shah

[NIPS 15] [variational inference in gory detail]

iBCM: Interactive Bayesian Case Model Empowering Humans via Intuitive Interaction

Been Kim, Elena Glassman, Brittney Johnson and Julie Shah

[Chapter X in thesis] [demo video]

Bayesian Case Model: A Generative Approach for Case-Based Reasoning and Prototype Classification

Been Kim, Cynthia Rudin and Julie Shah

[NIPS 14] [poster] This work was featured on MIT news and MIT front page spotlight.

Scalable and interpretable data representation for high-dimensional complex data

Been Kim, Kayur Patel, Afshin Rostamizadeh and Julie Shah

[AAAI 15]

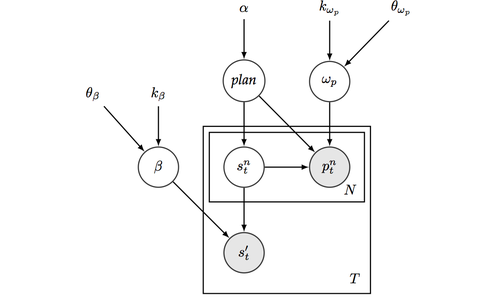

A Bayesian Generative Modeling with Logic-Based Prior

Learning about Meetings

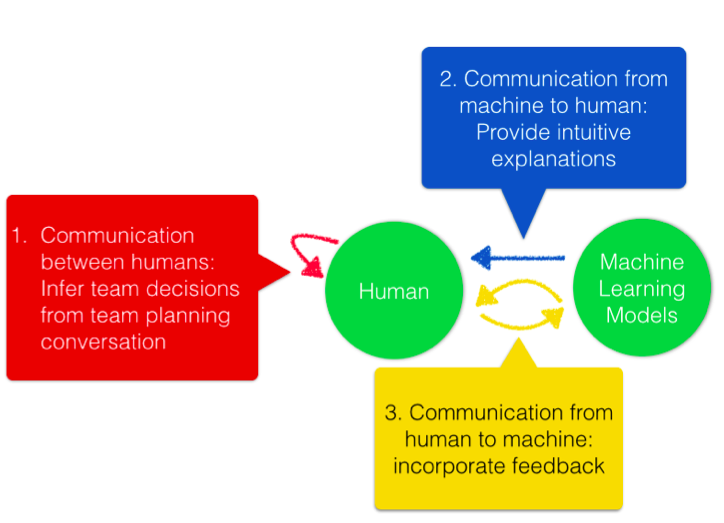

Inferring Robot Task Plans from Human Team Meetings: A Generative Modeling Approach with Logic-Based Prior

Been Kim, Caleb Chacha and Julie Shah

[AAAI 13] [video] This work was featured in:

"Introduction to AI" course at Harvard (COMPSCI180: Computer science 182) by Barbara J. Grosz.

[Course website]

"Human in the loop planning and decision support" tutorial at AAAI15 by Kartik Talamadupula and Subbarao Kambhampati.

[slides from the tutorial]

PhD Thesis: Interactive and Interpretable Machine Learning Models for Human Machine Collaboration

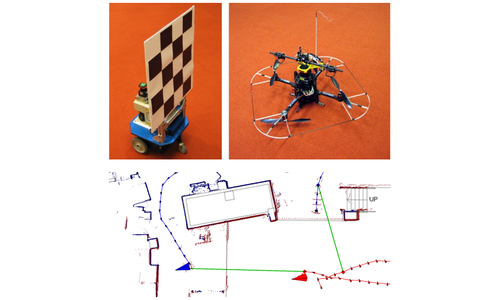

Multiple Relative Pose Graphs for Robust Cooperative Mapping

Been Kim, Michael Kaess, Luke Fletcher, John Leonard, Abraham Bachrach, Nicholas Roy, and Seth Teller