DLProf Viewer User Guide (original) (raw)

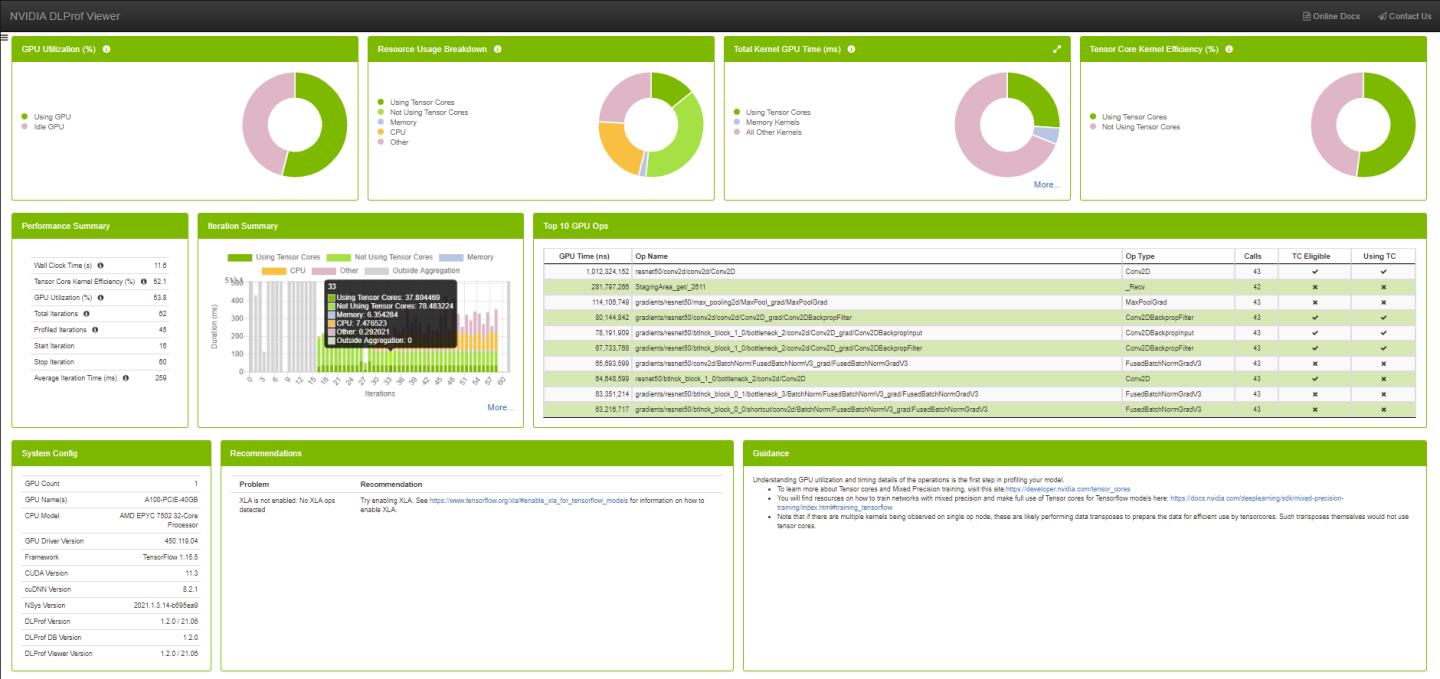

4.1. Dashboard

The Dashboard view provides a high level summary of the performance results in a panelized view. This view serves as a starting point in analyzing the results and provides several key metrics.

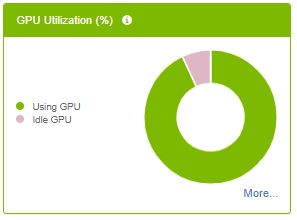

4.1.1. GPU Utilization Panel

The GPU Idle panel visually indicates the percentage of GPU utilization time during execution of aggregated iterations. Hovering over a slice in the chart will show the numeric percentage.

Fields

| Legend Label | Definition |

|---|---|

| Using GPU | The average GPU utilization percentage across all GPUs. |

| Idle GPU | The average GPU idle percentage across of all GPUs. |

UI Controls

| Control | Definition |

|---|---|

| Legend Label | Toggle between hiding and showing legend entry in chart. |

| More... | Show drop-down menu of more views (only visible when more than one GPU was used during profiling). |

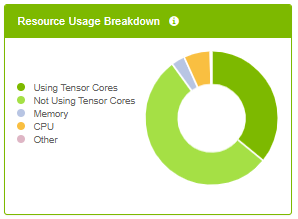

4.1.2. Resource Usage Breakdown Panel

The panel provides a Breakdown of profile activity into resource categories.

Fields

| Legend Label | Definition |

|---|---|

| Using Tensor Cores | Accumulated duration of all kernels using tensor cores. |

| Not Using Tensor Cores | Accumulated duration of all kernels not using tensor cores. |

| Memory | Accumulated duration of all memory operations. |

| CPU | Accumulated duration of all CPU operations. |

| Other | All time not in any of the other categories. |

UI Controls

| Control | Definition |

|---|---|

| Legend Label | Toggle between hiding and showing legend entry in chart. |

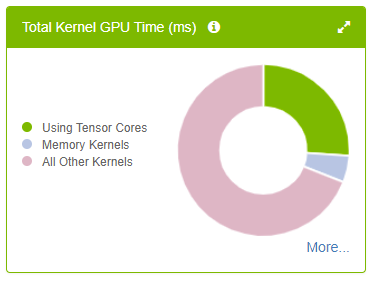

4.1.3. Total Kernel GPU Time Panel

The panel provides key metrics about the kernels in the network aggregated over the specific iteration range.

- Hovering over a slice in the chart will show the aggregated GPU time.

- Clicking a legend item will toggle its visualization in the chart.

Fields

| Legend Label | Definition |

|---|---|

| Using Tensor Cores | Aggregates the total GPU time for all kernels using Tensor Cores. |

| Memory Kernels | Aggregates the total GPU time for all memory-related kernels. |

| All Other Kernels | Aggregates the total GPU time for all remaining kernel types. |

UI Controls

| Control | Definition |

|---|---|

|

Show Kernel Details panel in Details Pane. |

| Legend Label | Toggle between hiding and showing legend entry in chart. |

| More... | Show drop-down menu of more views. |

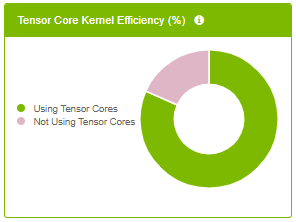

4.1.4. Tensor Core Kernel Efficiency Panel

- Hovering over a slice in the chart will show the percentage.

- Clicking a legend item will toggle its visualization in the chart.

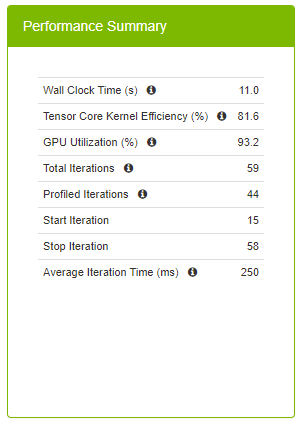

Performance Summary Panel

The Performance Summary panel provides top level key metrics about the performance data aggregated over the specific iteration range. A helpful tooltip text will appear when hovering over the ‘i’ icon.

| Field | Definition |

|---|---|

| Wall Clock Time | This is the total run time for the aggregation range, and is defined as the time between the start time of the first op in the starting iteration on the CPU and the end time of the last op in the final iteration on either the CPU or GPU, whichever timestamp is greatest. |

| Tensor Core Kernel Efficiency % | This high level metric represents the utilization of Tensor Core enabled kernels. Tensor Core operations can provide a performance improvement and should be used when possible. This metric is calculated by: [Total GPU Time for Tensor Core kernels] / [Total GPU Time for Tensor Core Eligible Ops] A 100% Tensor Core Utilization means that all eligible Ops are running only Tensor Core enabled kernels on the GPU. A 50% Tensor Core Utilization can mean anything from all eligible Ops are running Tensor Core kernels only half of the time to only half of all eligible Ops are running Tensor Core kernels only. This metric should be used with the Op Summary Panel to determine the quality of Tensor Core usage. Higher is better. |

| GPU Utilization % | Average GPU utilization across all GPUs. Higher is better. |

| Total Iterations | The total number of iterations found in the network. |

| Profiled Iterations | The total number of iterations used to aggregate the performance results. This number is calculated using ‘Start Iteration’ and ‘Stop Iteration’. |

| Start Iteration | The starting iteration number used to generate performance results. |

| Stop Iteration | The ending iteration number used to generate performance results. |

| Average Iteration Time | The average iteration time is the total Wall Time divided by the number of iterations. |

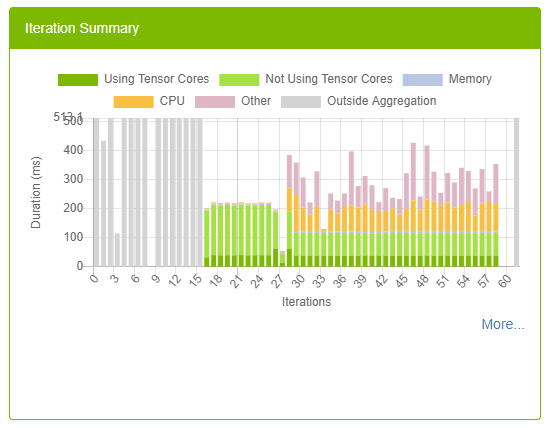

4.1.6. Iteration Summary panel

This panel visually displays iterations. Users can quickly see how many iterations are in the model, the iterations that were aggregated/profiled, and the durations of tensor core kernels in each iteration. The colors on this panel match the colors on all the other dashboard panels.

For more information on this panel, see Iterations View.

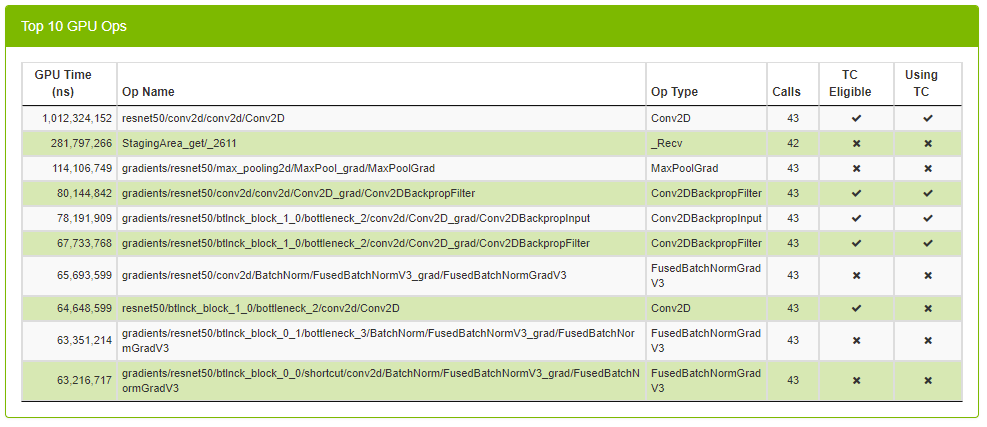

4.1.7. Top 10 GPU Ops Panel

Top 10 GPU Ops table shows the top 10 operations with the largest execution times on the GPU. This table comes pre-sorted with the order of each row in descending GPU Time. The table is not sortable or searchable.

| Column | Definition |

|---|---|

| GPU Time | Shows total GPU time of all kernels across all GPUs. |

| Op Name | The name of the op. |

| Direction | The fprop/bprop direction of the op. (only visible on PyTorch runs). |

| Op Type | The type of the op. |

| Calls | The number of times the op was called. |

| TC Eligible | A true/false field indicating whether or not the op is eligible to use Tensor Core kernels. |

| Using TC | A true/false field indicating whether or not one of the kernels launched in this op is using Tensor Cores. |

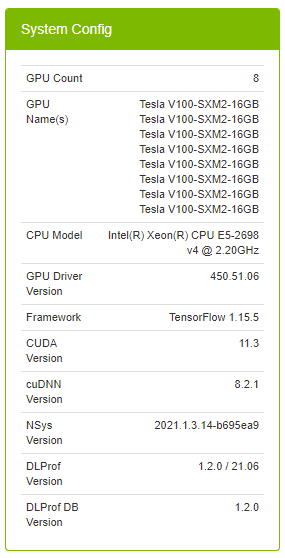

4.1.8. System Configuration Panel

| Field | Definition |

|---|---|

| Profile Name | (Optional) Helpful label to describe the profiled network. The value in this field corresponds to the value supplied in the --profile_name command line argument in DLProf. |

| GPU Count | The number of GPU devices found on the computer during training. |

| GPU Name(s) | A list of the GPU devices found on the computer during training. |

| CPU Model | The model of the CPU on the computer during training. |

| GPU Driver Version | The version of the driver used for NVIDIA Graphics GPU. |

| Framework | The framework used to generate profiling data (eg, TensorFlow, PyTorch). |

| CUDA Version | The version of the CUDA parallel computing platform. |

| cuDNN Version | The version of CUDA Deep Neural Network used during training. |

| NSys Version | The version of Nsight Systems used during training. |

| DLProf Version | The version of the Deep Learning Profiler used to generate the data visualized in the DLProf Viewer. |

| DLProf DB Version | The version of the DLProf database. |

| DLProf Viewer Version | The version of the DLProf Viewer. |

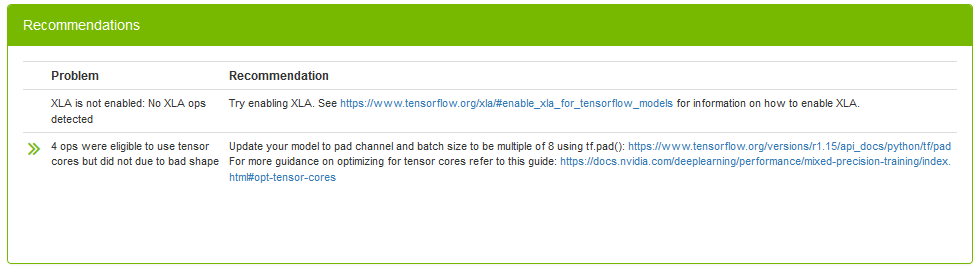

4.1.9. Recommendations Panel

The Recommendations panel displays common issues detected in the profiled network and provides potential solutions and suggestions to address the issues. The panel will only show issues that have been detected by DLProf. For a full list of potential issues that DLProf looks for, see the Expert Systems section in the Deep Learning Profiler User Guide.

The double-green arrows will show additional information about the detected problem.

| Column | Definition |

|---|---|

| Problem | The description of the scenario that DLProf detected when profiling the network. |

| >> | (Optional) When present, clicking on the double arrows will display a new view displaying the problem in detail. |

| Recommendation | A recommendation or actionable feedback, a tangible suggestion that the user can do to improve the network. Clicking on a hyperlink inside the recommendation will open a new tab in the browser. |

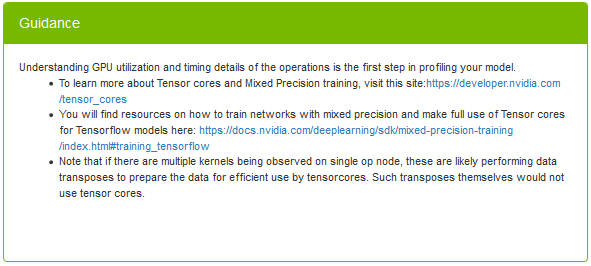

4.1.10. Guidance Panel

This panel provides static guidance to the user to help the user learn more about Tensor Cores, Mixed Precision training. The panel has hyperlinks for further reading. Clicking on a hyperlink inside the Guidance Panel will open a new tab in the browser.

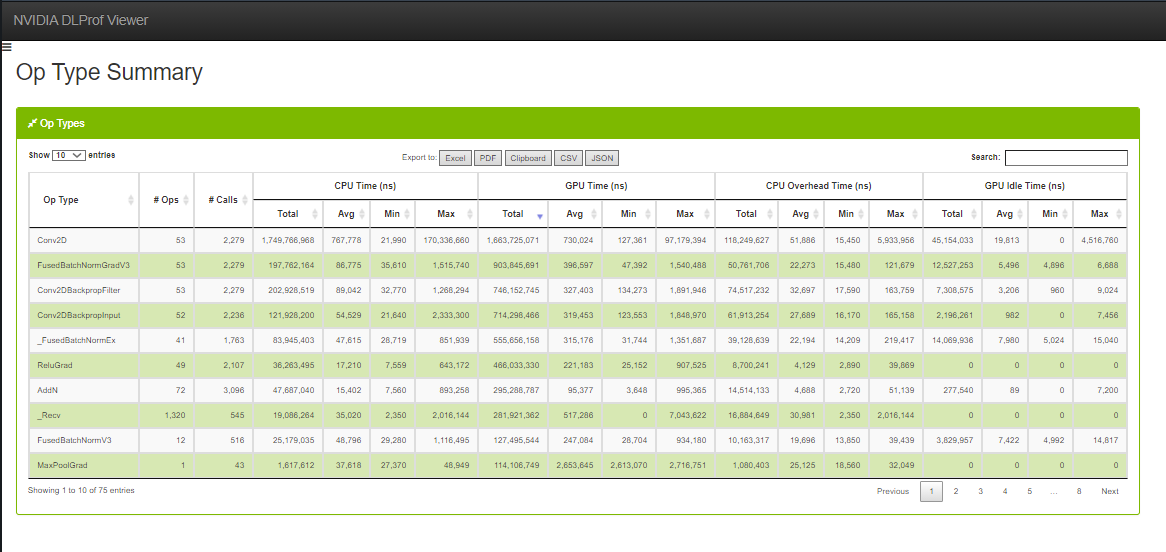

4.2. Op Type Summary

This table aggregates metrics over all op types and enables users to see the performance of all the ops in terms of its types, such as Convolutions, Matrix Multiplications, etc.

See this description for all the features available in all DataTables.

Op Type Data Table

| Column name | Description |

|---|---|

| Op Type | The operation type. |

| No. Ops | The number of ops that have the Op Type above. |

| No. Calls | Number of instances that the operation was called / executed. |

| Total CPU Time (ns) | The total CPU time of all instances of this op type. |

| Avg. CPU Time (ns) | The average CPU time of all instances of this op type. |

| Min CPU Time (ns) | The minimum CPU time found amongst all instances of this op type. |

| Max CPU Time (ns) | The maximum CPU time found amongst all instances of this op type. |

| Total GPU Time (ns) | The total GPU time of all instances of this op type. |

| Avg. GPU Time (ns) | The average GPU time of all instances of this op type. |

| Min GPU Time (ns) | The minimum GPU time found amongst all instances of this op type. |

| Max GPU Time (ns) | The maximum GPU time found amongst all instances of this op type. |

| Total CPU Overhead Time (ns) | The total CPU overhead of all instances of this op type. |

| Avg. CPU Overhead Time (ns) | The average CPU overhead of all instances of this op type. |

| Min CPU Overhead Time (ns) | The minimum CPU overhead found amongst all instances of this op type. |

| Max CPU Overhead Time (ns) | The maximum CPU overhead found amongst all instances of this op type. |

| Total GPU Idle Time (ns) | The total GPU idle time of all instances of this op type. |

| Avg. GPU Idle Time (ns) | The average GPU idle time of all instances of this op type. |

| Min GPU Idle Time (ns) | The minimum GPU idle time found amongst all instances of this op type. |

| Max GPU Idle Time (ns) | The maximum GPU idle time found amongst all instances of this op type. |

4.3. Ops and Kernels

This view enables users to view, search, sort all ops and their corresponding kernels in the entire network.

4.3.1. Ops Data Table

When a row is selected in the Ops table, a summary of each kernel for that op is displayed in the bottom table.

See this description for all the features available in all DataTables.

| Entry | Description |

|---|---|

| GPU Time (ns) | Cumulative time executing all GPU kernels launched for the op. |

| CPU Time (ns) | Cumulative time executing all op instances on the CPU. |

| Op Name | The name of the op. |

| Direction | The fprop/bprop direction of the op. (only visible on PyTorch runs). |

| Op Type | The operation of the op. |

| Calls | The number of times the op was called. |

| TC Eligible | A true/false field indicating whether or not the op is eligible to use Tensor Core kernels. To filter, type ‘1’ for true, and ‘0’ for false. |

| Using TC | A true/false field indicating whether or not one of the kernels launched in this op is using Tensor Cores. To filter, type ‘1’ for true, and ‘0’ for false. |

| Kernel Calls | The number of kernels launched in the op. |

| Data Type | The data type of this op (eg, float16, int64, int32) |

| Stack Trace | The stack trace of the op. (only visible on PyTorch runs). If the contents of this cell is more than 100 characters, a ‘See More’ hyperlink appears. When clicked, the full contents of the cell appears. When the cell is expanded, the hyperlink text is changed to ‘See Less’. When clicked, the cell collapses back to the first 100 characters. |

4.3.2. Kernel Summaries Data Table

See this description for all the features available in all DataTables.

Kernels Summary table

| Entry | Description |

|---|---|

| Kernel name | The full name of the kernel. |

| Using TC | A true/false field indicating whether or not the kernel is actually using a Tensor Core. To filter, type ‘1’ for true, and ‘0’ for false. |

| Calls | The number of times this kernel was launched. |

| GPU Time (ns) | The aggregate duration of each time the kernel was launched. |

| Avg (ns) | The average duration of each time this kernel was launched. |

| Min (ns) | The minimum duration of all kernel launches. |

| Max (ns) | The maximum duration of all kernel launches. |

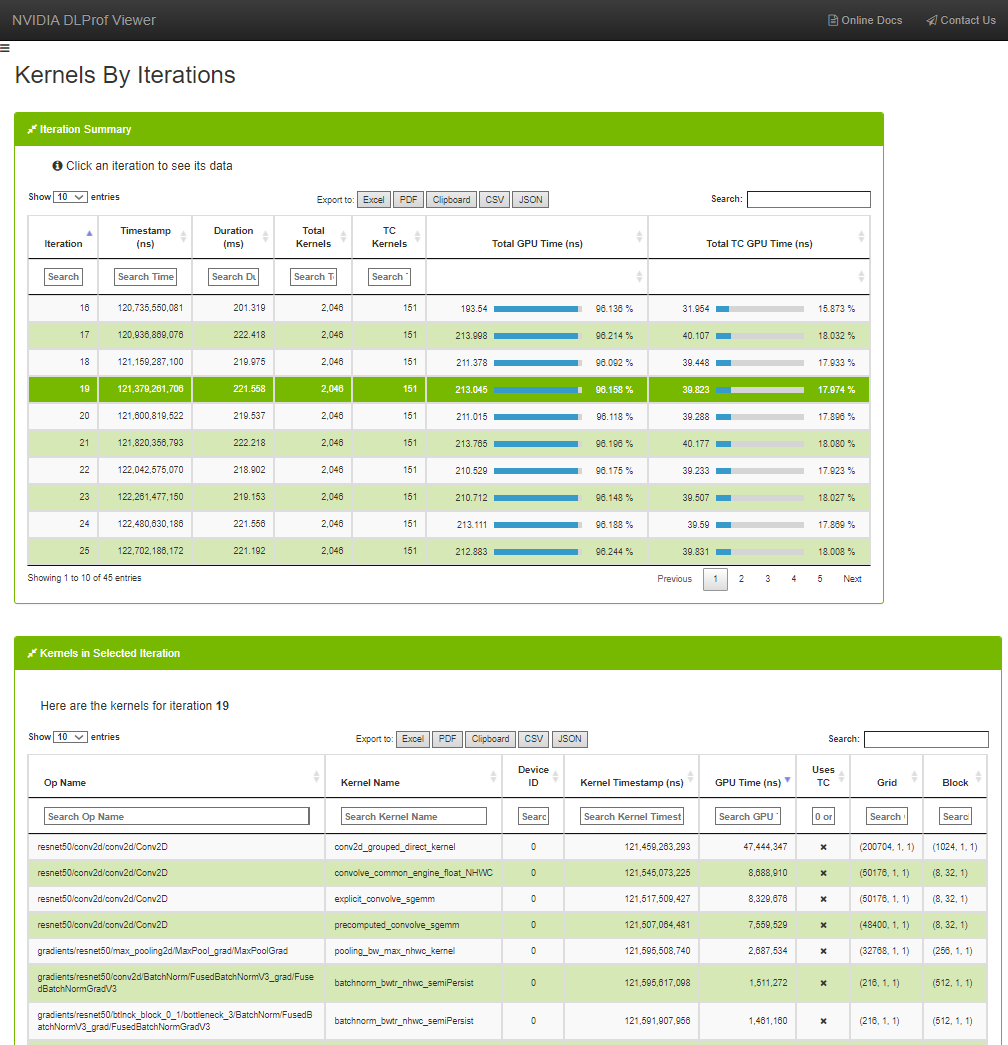

4.4. Kernels by Iteration

The Kernels by Iterations view shows operations and their execution time for every iteration. At a glance, you can compare iterations with respect to time as well as Kernels executed.

4.4.1. Iteration Summary Data Table

To see the kernels for a specific iteration, click a row in the top table. The ‘Kernels in Selected Iteration’ table will be filled with the kernels from the selection iteration.

See this description for all the features available in all DataTables.

| Column | Description |

|---|---|

| Iteration | The iteration interval numbers. |

| Timestamp | The exact time when this iteration started. |

| Duration | The length of time it took for this iteration to execute. Units on this column are dynamic. |

| Total Kernels | The number of GPU kernels called during this iteration. |

| TC Kernels | The number of GPU Tensor Core kernels called during the iteration. |

| Average GPU Time (μs) | Average execution time for all kernels across all GPUs during the iteration. The contents of this cell are broken into multiple pieces: The value is the average execution time of this iteration across all GPUs. The progress meter visually indicates how much of this iteration executed on the GPU. The percentage is the average GPU time of all kernels across all GPUs over the total iteration time. Hovering over this cell displays a tooltip text with helpful information. Units on this column are dynamic. |

| Average TC GPU Time (μs) | Average execution time for all Tensor Core kernels across all GPUs during the iteration. The contents of this cell are broken into multiple pieces: The value is the average execution time of Tensor Core kernels during this iteration across all GPUs. The progress meter visually indicates how much of this iteration executed Tensor Core kernels. The percentage is the average GPU time of kernels using Tensor Core across all GPUs over the total iteration time. Hovering over this cell displays a tooltip text with helpful information. Units on this column are dynamic. |

4.4.2. Kernels in Selected Iteration

See this description for all the features available in all DataTables.

| Column | Description |

|---|---|

| Op Name | The name of the op that launched the kernel. |

| Kernel Name | The name of the kernel. |

| Device ID | the device ID of the kernel. |

| Kernel Timestamp (ns) | The timestamp for when the CUDA API call was made for this kernel in the CPU thread. Useful to see the order in which the kernels were called. |

| GPU Time (ns) | The time spent executing the kernel on the GPU. |

| Users TC | A true/false field indicating whether or not the kernel uses Tensor Cores. To filter, type ‘1’ for true, and ‘0’ for false. |

| Grid | The grid size of the kernel. |

| Block | The block size of the kernel. |

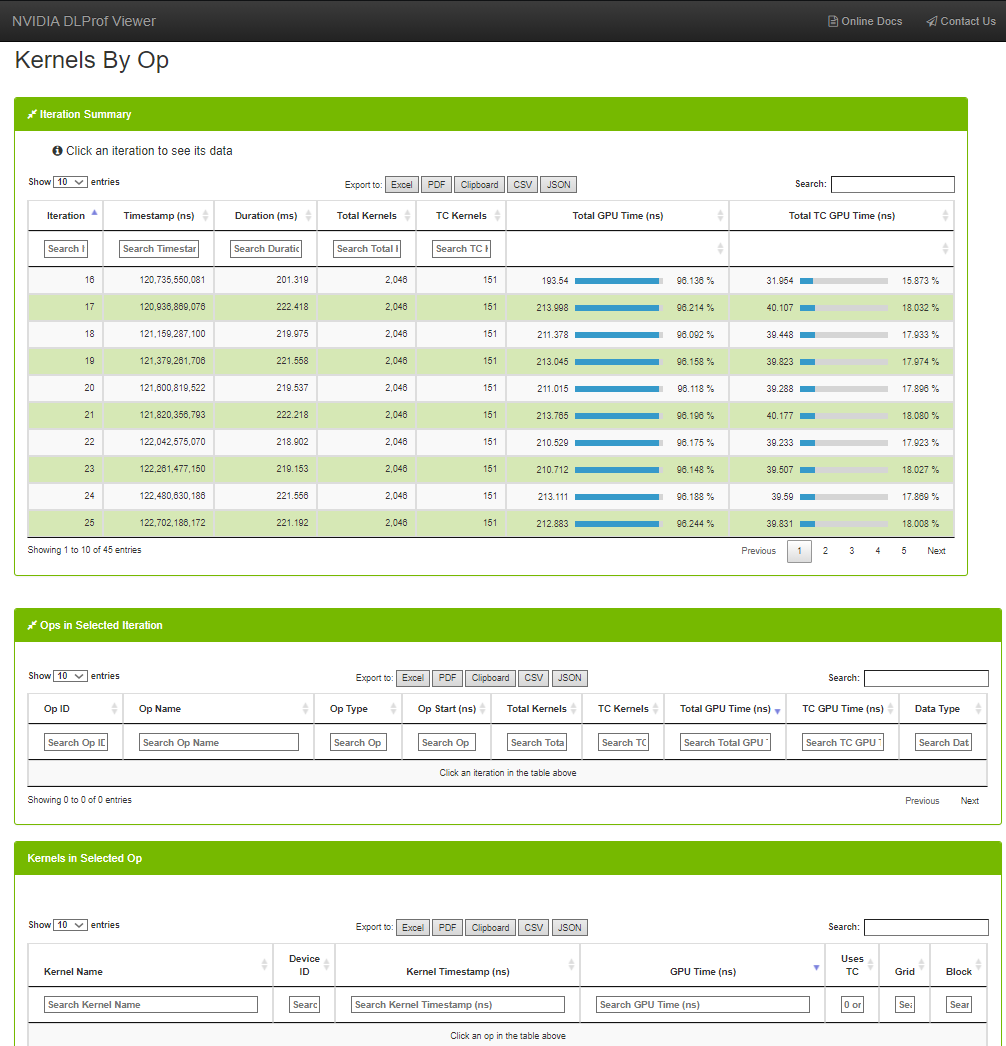

4.5. Kernels by Op

The Kernels by Op view is a variation of the Kernels by Iterations view. It has the capability to filter the list of kernels by iterations and op.

See this description for all the features available in all DataTables.

4.5.1. Iteration Summary Data Table

Selecting an iteration in the Iteration Summary table will populate the Ops in Selected Iteration table with all the profile data for the ops from the selected iteration. Selecting an op in the Ops in Selected Iteration table will populate the Kernels in Selected Op table with the list of kernels and timing data executed for the selected Op and Iteration.

See this description for all the features available in all DataTables.

| Column | Description |

|---|---|

| Iteration | The iteration interval numbers |

| Timestamp | The exact time when this iteration started. |

| Duration | The length of time it took for this iteration to execute. Units on this column are dynamic. |

| Total Kernels | The number of GPU kernels called during this iteration. |

| TC Kernels | The number of GPU Tensor Core kernels called during the iteration. |

| Average GPU Time (μs) | Average execution time for all kernels across all GPUs during the iteration. The contents of this cell are broken into multiple pieces: The value is the average execution time of this iteration across all GPUs. The progress meter visually indicates how much of this iteration executed on the GPU. The percentage is the average GPU time of all kernels across all GPUs over the total iteration time. Hovering over this cell displays a tooltip text with helpful information. Units on this column are dynamic. |

| Average TC GPU Time (μs) | Average execution time for all Tensor Core kernels across all GPUs during the iteration. The contents of this cell are broken into multiple pieces: The value is the average execution time of Tensor Core kernels during this iteration across all GPUs. The progress meter visually indicates how much of this iteration executed Tensor Core kernels. The percentage is the average GPU time of kernels using Tensor Core across all GPUs over the total iteration time. Hovering over this cell displays a tooltip text with helpful information. Units on this column are dynamic. |

4.5.2. Ops in Selected Iteration Table

Ops in Selected Iteration

See this description for all the features available in all DataTables.

| Column | Description |

|---|---|

| Op Name | The name of the op that launched the kernel. |

| Direction | The fprop/bprop direction of the op. (only visible on PyTorch runs). |

| Op Type | The type of the op. |

| Op Start | The time the op was launched. Used to sort ops chronologically. |

| Total Kernels | The number of GPU kernels called during this iteration. |

| TC Kernels | The number of GPU Tensor Core kernels called during the iteration. |

| Total GPU Time (ns) | Cumulative execution time for all kernels on the GPU during the op. |

| TC GPU Time (ns) | Cumulative execution time for all Tensor Core kernels on the GPU during the op. |

| Data Type | The data type of this op (eg, float16, int64, int32). |

| Stack Trace | The stack trace of the op. (only visible on PyTorch runs) If the contents of this cell is more than 100 characters, a ‘See More’ hyperlink appears. When clicked, the full contents of the cell appears. When the cell is expanded, the hyperlink text is changed to ‘See Less’. When clicked, the cell collapses back to the first 100 characters. |

4.5.3. Kernels Selected Iteration / Op Combination Table

Kernels in Selected Iteration / Op combination

See this description for all the features available in all DataTables.

| Column | Description |

|---|---|

| Kernel Name | The name of the kernel. |

| Device ID | The device ID of the kernel. |

| Kernel Timestamp (ns) | The timestamp for when the CUDA API call was made for this kernel in the CPU thread. Useful to see the order in which the kernels were called. |

| GPU Time (ns) | The time spent executing the kernel on the GPU. |

| Uses TC | A true/false field indicating whether or not the kernel uses Tensor Cores. To filter, type ‘1’ for true, and ‘0’ for false. |

| Grid | The grid size of the kernel. |

| Block | The block size of the kernel. |

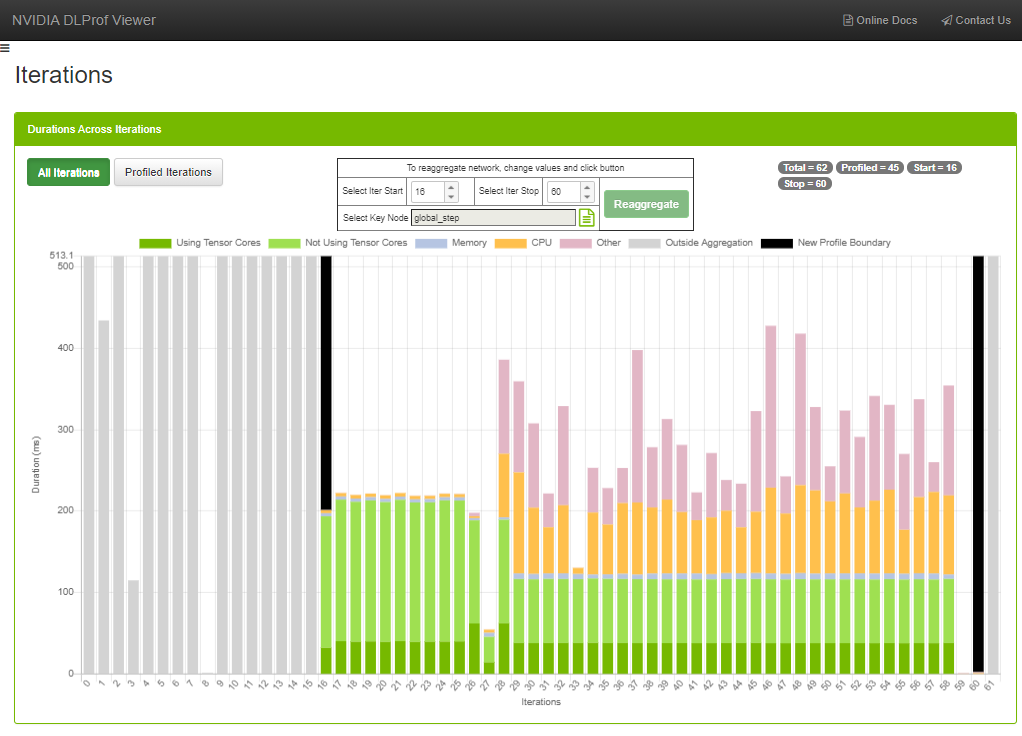

4.6. Iterations view

This view displays iterations visually. Users can quickly see how many iterations are in the model, the iterations that were aggregated/profiled, and the accumulated durations of tensor core kernels in each iteration.

Fields

| Field | Definition |

|---|---|

| X-Axis | The iterations of the model. |

| Y-Axis | Duration of iterations. The unit of this axis is dynamic (e.g., ms, ns, s, etc). |

| Badges | In the top right, four badges display iteration specifics of the profiled network. Total Profiled Start Stop The values inside the start and stop badges come directly from DLProf: see the --iter_start and --iter_stop command line options. |

| Legend | Dark Green: Accumulated duration of all kernels using tensor cores Light Green: Accumulated duration of all kernels not using tensor cores Blue: Accumulated duration of all memory operations Orange: Accumulated duration of all CPU operations Pink: All time not in any of the other categories Gray: Duration of iteration outside the profile. Black: User-positioned aggregation boundary. See ‘Select Iter Start’ and ‘Select Iter Stop’ below. |

UI Controls

| Control | Definition |

|---|---|

| Toggle Buttons | All Iterations: Show all the iterations in the entire network Profiled Iterations: Zooms in to only show the iterations that were profiled |

| Hover | When mouse hovers over an iteration, a popup window appears displaying the values of all the constituents of the iteration. |

| Select Iter Start | Specification of a new “iteration start” value. Used for re-aggregating the data inside the viewer. The value can be changed by typing into the field, by clicking the up/down arrow spinners, or by hovering the cursor over the field and spinning the mouse wheel. When this value changes, the left black bar will move accordingly. |

| Select Iter Stop | Specification of a new “iteration stop” value. Used for re-aggregating the data inside the viewer. The value can be changed by typing into the field, by clicking the up/down arrow spinners, or by hovering the cursor over the field and spinning the mouse wheel. When this value changes, the right black bar will move accordingly. |

| Select Key Node | The existing key node value is displayed in a read-only field. Sometimes key nodes can be very long. If a long key node is selected, the first few characters of the key node will appear in the field. The entire length of the key node field will appear when the cursor hovers over the field. Click the Key Node Picker button to view and pick a new key node. See the dialog below. |

| Reaggregate | Instructs the DLProf back-end server to reaggregate the network with the specified parameters. |

Workflow

Sometimes the original aggregation parameters on the DLProf command-line specified an iteration start value, an iteration stop value, or even key node that yielded a suboptimal representation of the network’s profile (such as including warm-up nodes). This feature allows the user to change those values and re-aggregate. Here is an example:

- Notice in the Iterations View screenshot above:

- Iteration eight contains no work, and

- Iteration nine through fifteen (among others) contain way too much work. The aggregation values throughout the viewer contain those ops and kernels and potentially skews the results.

- You can change the iteration start and iteration stop values in a number of ways: typing and clicking the up and down spinners. The best way is to hover over the field and spin the mouse wheel.

- Some platforms do not have a default key node for a neural network. Sometimes the predefined key node is suboptimal. By clicking on the ‘Select Key Node’ picker, a key node can be selected. This is a full-featured panel that allows for filtering, sorting, and pagination to find a Key Node. See the view called Ops and Kernels for more details.

Note:

Note: There are fewer columns here than in the view, but the usability is the same.

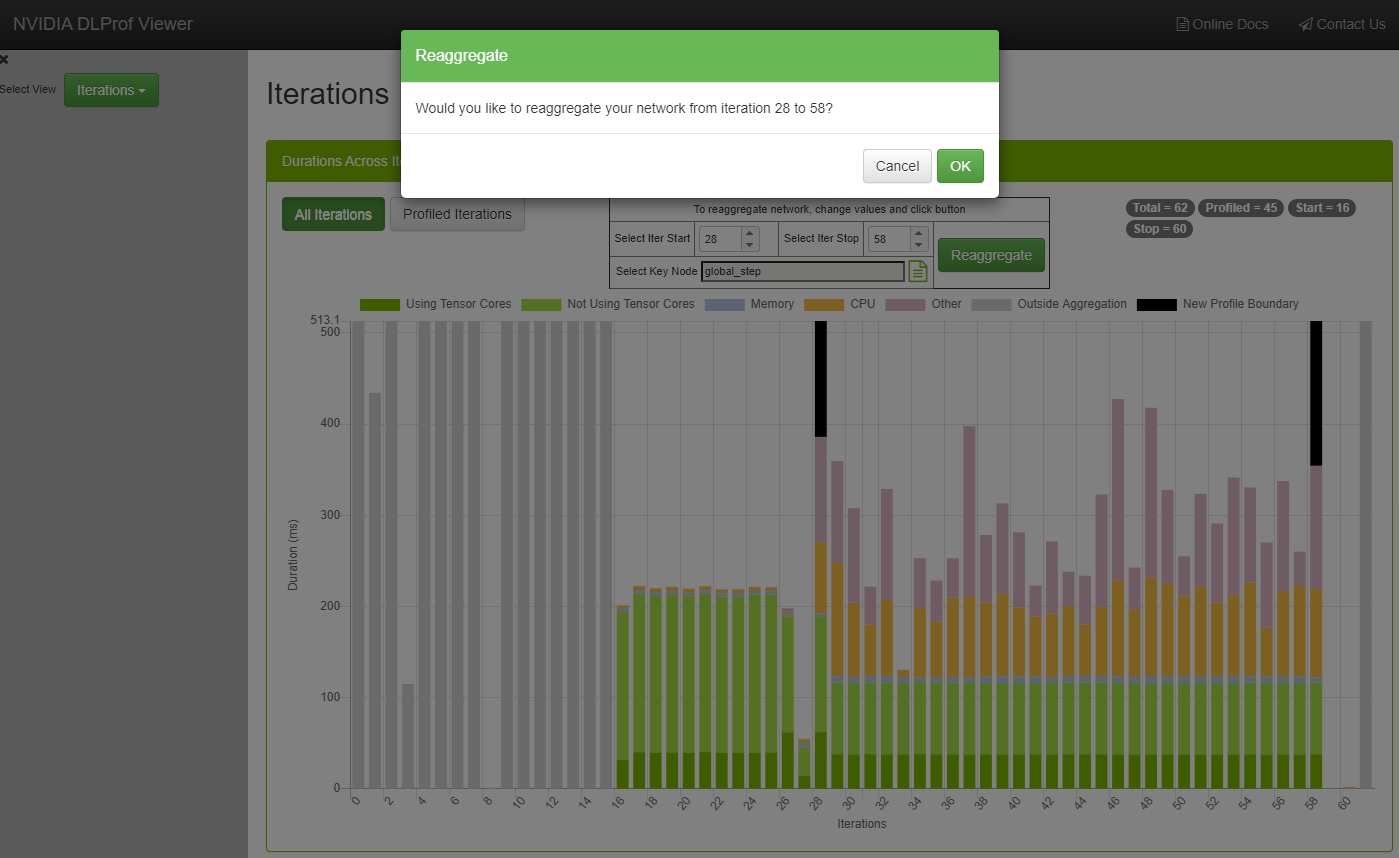

4. Once any of these three fields change, click the Reaggregate button. A confirmation dialog is displayed:

4. Once any of these three fields change, click the Reaggregate button. A confirmation dialog is displayed:

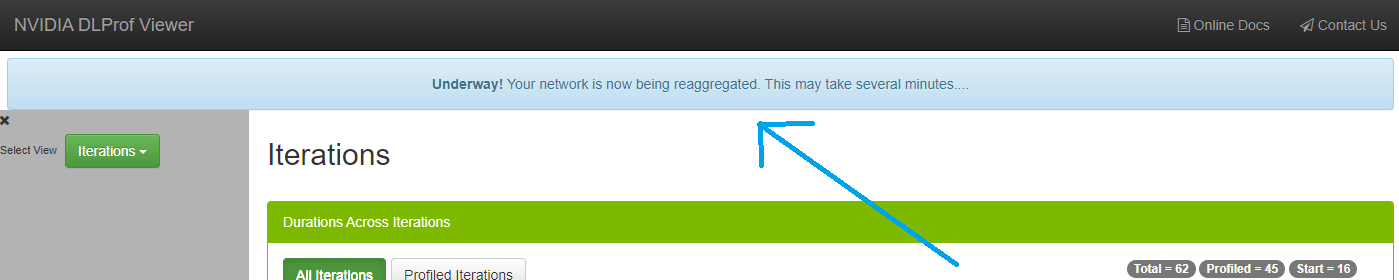

The viewer is fully functional while the re-aggregation is taking place. The “Underway!” panel will remain in view above all other views and panels until reaggregation is complete.

Note:

Note: Do not click the browser’s BACK or REFRESH button. If either are accidentally, the “Underway!” panel will no longer appear. The re-aggregation will continue.

5. After confirmation, a message is sent to the back-end DLProf server to reaggregate the profile with these values. This reaggregation process could potentially take minutes, so the following panel appears above all panels:

The viewer is fully functional while the re-aggregation is taking place. The “Underway!” panel will remain in view above all other views and panels until reaggregation is complete.

Note:

Note: Do not click the browser’s BACK or REFRESH button. If either are accidentally, the “Underway!” panel will no longer appear. The re-aggregation will continue regardless.

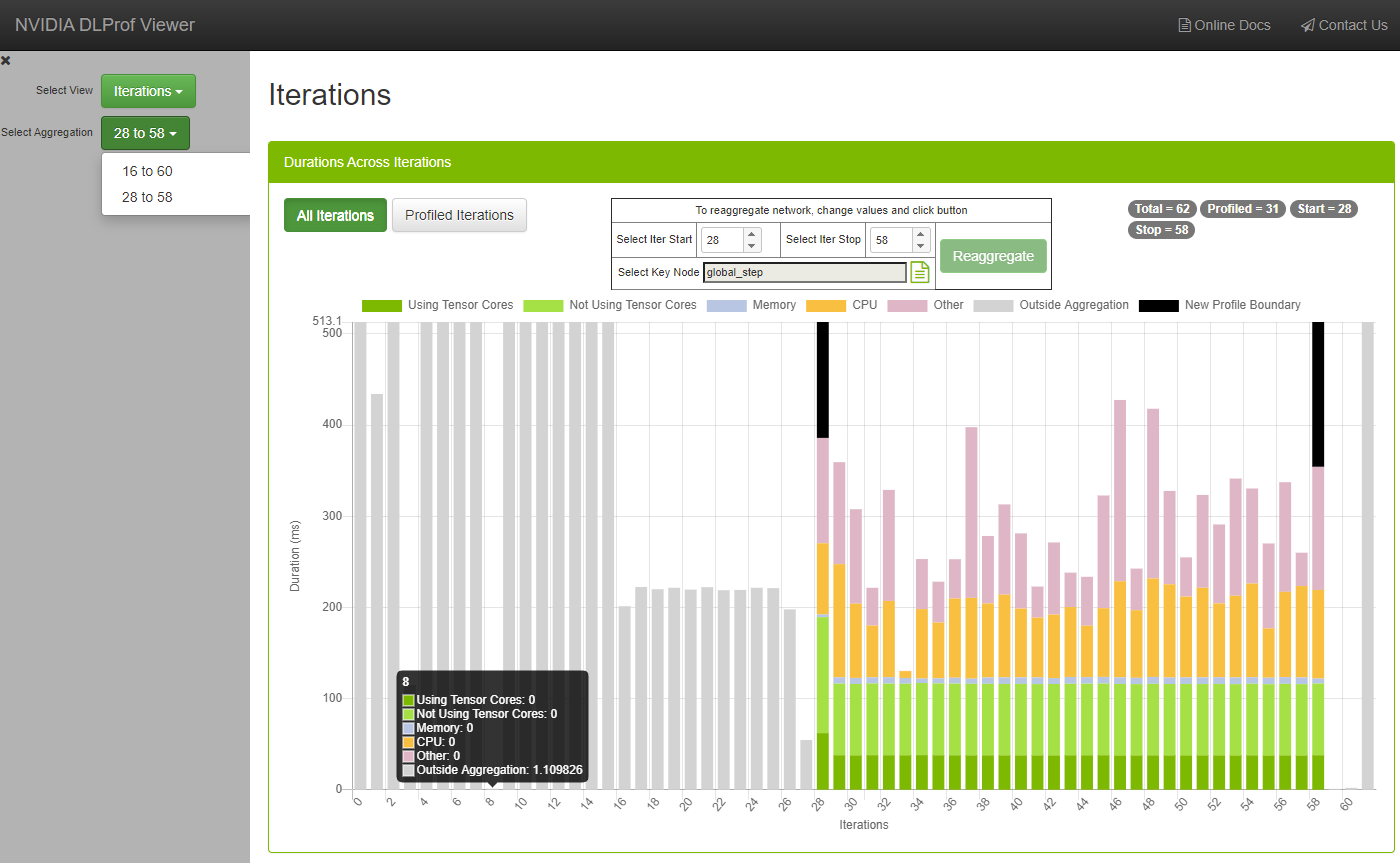

6. When the reaggregation is complete, a “Success!” panel will appear like this: 7. At this point, clicking the REFRESH button will load all the newly reaggregated data into the viewer. If it’s not already there, the droplist called Select Aggregation is displayed in the Navigation Pane:

7. At this point, clicking the REFRESH button will load all the newly reaggregated data into the viewer. If it’s not already there, the droplist called Select Aggregation is displayed in the Navigation Pane:

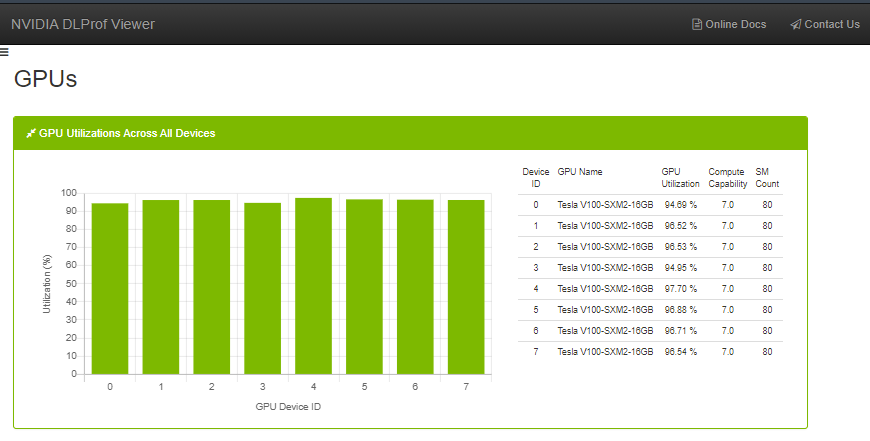

4.7. GPUs View

This view shows the utilization of all GPUs during the profile run. It is broken down into two different but related elements:

- Bar Chart - Quick visualization where you can see the GPU utilization for every GPU used during the profile. This view appears only when more than one GPU is used in a profile.

- Table - This table shows a little more detail about each GPU device, including its name, Compute Capability, and SM count.