ZoeDepth (original) (raw)

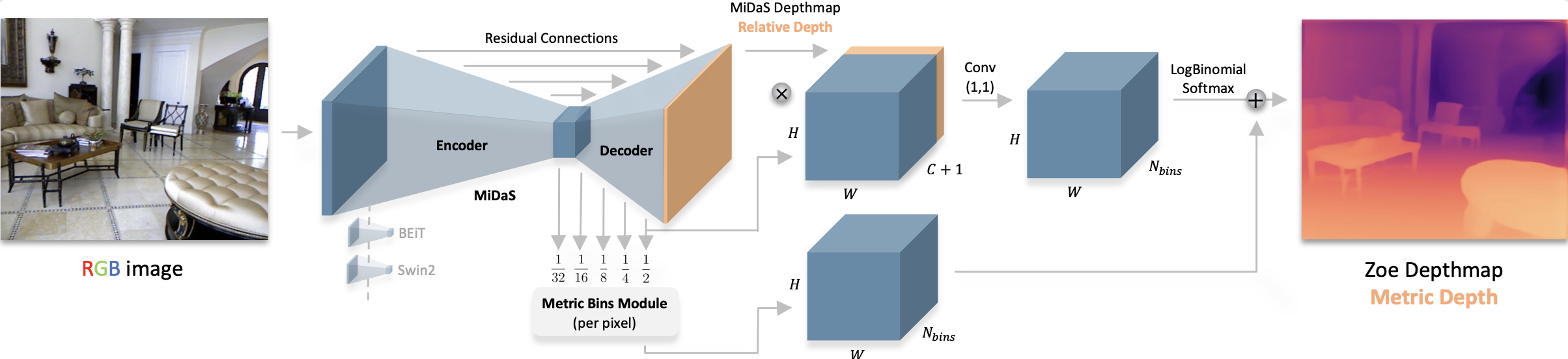

ZoeDepth is a depth estimation model that combines the generalization performance of relative depth estimation (how far objects are from each other) and metric depth estimation (precise depth measurement on metric scale) from a single image. It is pre-trained on 12 datasets using relative depth and 2 datasets (NYU Depth v2 and KITTI) for metric accuracy. A lightweight head with a metric bin module for each domain is used, and during inference, it automatically selects the appropriate head for each input image with a latent classifier.

You can find all the original ZoeDepth checkpoints under the Intel organization.

The example below demonstrates how to estimate depth with Pipeline or the AutoModel class.

import requests import torch from transformers import pipeline from PIL import Image

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg" image = Image.open(requests.get(url, stream=True).raw) pipeline = pipeline( task="depth-estimation", model="Intel/zoedepth-nyu-kitti", torch_dtype=torch.float16, device=0 ) results = pipeline(image) results["depth"]

Notes

- In the original implementation ZoeDepth performs inference on both the original and flipped images and averages the results. The

post_process_depth_estimationfunction handles this by passing the flipped outputs to the optionaloutputs_flippedargument as shown below.

with torch.no_grad():

outputs = model(pixel_values)

outputs_flipped = model(pixel_values=torch.flip(inputs.pixel_values, dims=[3]))

post_processed_output = image_processor.post_process_depth_estimation(

outputs,

source_sizes=[(image.height, image.width)],

outputs_flipped=outputs_flipped,

)

Resources

- Refer to this notebook for an inference example.

ZoeDepthConfig

class transformers.ZoeDepthConfig

( backbone_config = None backbone = None use_pretrained_backbone = False backbone_kwargs = None hidden_act = 'gelu' initializer_range = 0.02 batch_norm_eps = 1e-05 readout_type = 'project' reassemble_factors = [4, 2, 1, 0.5] neck_hidden_sizes = [96, 192, 384, 768] fusion_hidden_size = 256 head_in_index = -1 use_batch_norm_in_fusion_residual = False use_bias_in_fusion_residual = None num_relative_features = 32 add_projection = False bottleneck_features = 256 num_attractors = [16, 8, 4, 1] bin_embedding_dim = 128 attractor_alpha = 1000 attractor_gamma = 2 attractor_kind = 'mean' min_temp = 0.0212 max_temp = 50.0 bin_centers_type = 'softplus' bin_configurations = [{'n_bins': 64, 'min_depth': 0.001, 'max_depth': 10.0}] num_patch_transformer_layers = None patch_transformer_hidden_size = None patch_transformer_intermediate_size = None patch_transformer_num_attention_heads = None **kwargs )

Parameters

- backbone_config (

Union[Dict[str, Any], PretrainedConfig], optional, defaults toBeitConfig()) — The configuration of the backbone model. - backbone (

str, optional) — Name of backbone to use whenbackbone_configisNone. Ifuse_pretrained_backboneisTrue, this will load the corresponding pretrained weights from the timm or transformers library. Ifuse_pretrained_backboneisFalse, this loads the backbone’s config and uses that to initialize the backbone with random weights. - use_pretrained_backbone (

bool, optional, defaults toFalse) — Whether to use pretrained weights for the backbone. - backbone_kwargs (

dict, optional) — Keyword arguments to be passed to AutoBackbone when loading from a checkpoint e.g.{'out_indices': (0, 1, 2, 3)}. Cannot be specified ifbackbone_configis set. - hidden_act (

strorfunction, optional, defaults to"gelu") — The non-linear activation function (function or string) in the encoder and pooler. If string,"gelu","relu","selu"and"gelu_new"are supported. - initializer_range (

float, optional, defaults to 0.02) — The standard deviation of the truncated_normal_initializer for initializing all weight matrices. - batch_norm_eps (

float, optional, defaults to 1e-05) — The epsilon used by the batch normalization layers. - readout_type (

str, optional, defaults to"project") — The readout type to use when processing the readout token (CLS token) of the intermediate hidden states of the ViT backbone. Can be one of ["ignore","add","project"].- “ignore” simply ignores the CLS token.

- “add” passes the information from the CLS token to all other tokens by adding the representations.

- “project” passes information to the other tokens by concatenating the readout to all other tokens before projecting the representation to the original feature dimension D using a linear layer followed by a GELU non-linearity.

- reassemble_factors (

List[int], optional, defaults to[4, 2, 1, 0.5]) — The up/downsampling factors of the reassemble layers. - neck_hidden_sizes (

List[str], optional, defaults to[96, 192, 384, 768]) — The hidden sizes to project to for the feature maps of the backbone. - fusion_hidden_size (

int, optional, defaults to 256) — The number of channels before fusion. - head_in_index (

int, optional, defaults to -1) — The index of the features to use in the heads. - use_batch_norm_in_fusion_residual (

bool, optional, defaults toFalse) — Whether to use batch normalization in the pre-activate residual units of the fusion blocks. - use_bias_in_fusion_residual (

bool, optional, defaults toTrue) — Whether to use bias in the pre-activate residual units of the fusion blocks. - num_relative_features (

int, optional, defaults to 32) — The number of features to use in the relative depth estimation head. - add_projection (

bool, optional, defaults toFalse) — Whether to add a projection layer before the depth estimation head. - bottleneck_features (

int, optional, defaults to 256) — The number of features in the bottleneck layer. - num_attractors (

List[int], *optional*, defaults to[16, 8, 4, 1]`) — The number of attractors to use in each stage. - bin_embedding_dim (

int, optional, defaults to 128) — The dimension of the bin embeddings. - attractor_alpha (

int, optional, defaults to 1000) — The alpha value to use in the attractor. - attractor_gamma (

int, optional, defaults to 2) — The gamma value to use in the attractor. - attractor_kind (

str, optional, defaults to"mean") — The kind of attractor to use. Can be one of ["mean","sum"]. - min_temp (

float, optional, defaults to 0.0212) — The minimum temperature value to consider. - max_temp (

float, optional, defaults to 50.0) — The maximum temperature value to consider. - bin_centers_type (

str, optional, defaults to"softplus") — Activation type used for bin centers. Can be “normed” or “softplus”. For “normed” bin centers, linear normalization trick is applied. This results in bounded bin centers. For “softplus”, softplus activation is used and thus are unbounded. - bin_configurations (

List[dict], optional, defaults to[{'n_bins' -- 64, 'min_depth': 0.001, 'max_depth': 10.0}]): Configuration for each of the bin heads. Each configuration should consist of the following keys:- name (

str): The name of the bin head - only required in case of multiple bin configurations. n_bins(int): The number of bins to use.min_depth(float): The minimum depth value to consider.max_depth(float): The maximum depth value to consider. In case only a single configuration is passed, the model will use a single head with the specified configuration. In case multiple configurations are passed, the model will use multiple heads with the specified configurations.

- name (

- num_patch_transformer_layers (

int, optional) — The number of transformer layers to use in the patch transformer. Only used in case of multiple bin configurations. - patch_transformer_hidden_size (

int, optional) — The hidden size to use in the patch transformer. Only used in case of multiple bin configurations. - patch_transformer_intermediate_size (

int, optional) — The intermediate size to use in the patch transformer. Only used in case of multiple bin configurations. - patch_transformer_num_attention_heads (

int, optional) — The number of attention heads to use in the patch transformer. Only used in case of multiple bin configurations.

This is the configuration class to store the configuration of a ZoeDepthForDepthEstimation. It is used to instantiate an ZoeDepth model according to the specified arguments, defining the model architecture. Instantiating a configuration with the defaults will yield a similar configuration to that of the ZoeDepthIntel/zoedepth-nyu architecture.

Configuration objects inherit from PretrainedConfig and can be used to control the model outputs. Read the documentation from PretrainedConfig for more information.

Example:

from transformers import ZoeDepthConfig, ZoeDepthForDepthEstimation

configuration = ZoeDepthConfig()

model = ZoeDepthForDepthEstimation(configuration)

configuration = model.config

ZoeDepthImageProcessor

class transformers.ZoeDepthImageProcessor

( do_pad: bool = True do_rescale: bool = True rescale_factor: typing.Union[int, float] = 0.00392156862745098 do_normalize: bool = True image_mean: typing.Union[float, typing.List[float], NoneType] = None image_std: typing.Union[float, typing.List[float], NoneType] = None do_resize: bool = True size: typing.Optional[typing.Dict[str, int]] = None resample: Resampling = <Resampling.BILINEAR: 2> keep_aspect_ratio: bool = True ensure_multiple_of: int = 32 **kwargs )

Parameters

- do_pad (

bool, optional, defaults toTrue) — Whether to apply pad the input. - do_rescale (

bool, optional, defaults toTrue) — Whether to rescale the image by the specified scalerescale_factor. Can be overridden bydo_rescaleinpreprocess. - rescale_factor (

intorfloat, optional, defaults to1/255) — Scale factor to use if rescaling the image. Can be overridden byrescale_factorinpreprocess. - do_normalize (

bool, optional, defaults toTrue) — Whether to normalize the image. Can be overridden by thedo_normalizeparameter in thepreprocessmethod. - image_mean (

floatorList[float], optional, defaults toIMAGENET_STANDARD_MEAN) — Mean to use if normalizing the image. This is a float or list of floats the length of the number of channels in the image. Can be overridden by theimage_meanparameter in thepreprocessmethod. - image_std (

floatorList[float], optional, defaults toIMAGENET_STANDARD_STD) — Standard deviation to use if normalizing the image. This is a float or list of floats the length of the number of channels in the image. Can be overridden by theimage_stdparameter in thepreprocessmethod. - do_resize (

bool, optional, defaults toTrue) — Whether to resize the image’s (height, width) dimensions. Can be overridden bydo_resizeinpreprocess. - size (

Dict[str, int]optional, defaults to{"height" -- 384, "width": 512}): Size of the image after resizing. Size of the image after resizing. Ifkeep_aspect_ratioisTrue, the image is resized by choosing the smaller of the height and width scaling factors and using it for both dimensions. Ifensure_multiple_ofis also set, the image is further resized to a size that is a multiple of this value. Can be overridden bysizeinpreprocess. - resample (

PILImageResampling, optional, defaults toResampling.BILINEAR) — Defines the resampling filter to use if resizing the image. Can be overridden byresampleinpreprocess. - keep_aspect_ratio (

bool, optional, defaults toTrue) — IfTrue, the image is resized by choosing the smaller of the height and width scaling factors and using it for both dimensions. This ensures that the image is scaled down as little as possible while still fitting within the desired output size. In caseensure_multiple_ofis also set, the image is further resized to a size that is a multiple of this value by flooring the height and width to the nearest multiple of this value. Can be overridden bykeep_aspect_ratioinpreprocess. - ensure_multiple_of (

int, optional, defaults to 32) — Ifdo_resizeisTrue, the image is resized to a size that is a multiple of this value. Works by flooring the height and width to the nearest multiple of this value.

Works both with and withoutkeep_aspect_ratiobeing set toTrue. Can be overridden byensure_multiple_ofinpreprocess.

Constructs a ZoeDepth image processor.

preprocess

( images: typing.Union[ForwardRef('PIL.Image.Image'), numpy.ndarray, ForwardRef('torch.Tensor'), list['PIL.Image.Image'], list[numpy.ndarray], list['torch.Tensor']] do_pad: typing.Optional[bool] = None do_rescale: typing.Optional[bool] = None rescale_factor: typing.Optional[float] = None do_normalize: typing.Optional[bool] = None image_mean: typing.Union[float, typing.List[float], NoneType] = None image_std: typing.Union[float, typing.List[float], NoneType] = None do_resize: typing.Optional[bool] = None size: typing.Optional[int] = None keep_aspect_ratio: typing.Optional[bool] = None ensure_multiple_of: typing.Optional[int] = None resample: Resampling = None return_tensors: typing.Union[str, transformers.utils.generic.TensorType, NoneType] = None data_format: ChannelDimension = <ChannelDimension.FIRST: 'channels_first'> input_data_format: typing.Union[str, transformers.image_utils.ChannelDimension, NoneType] = None )

Parameters

- images (

ImageInput) — Image to preprocess. Expects a single or batch of images with pixel values ranging from 0 to 255. If passing in images with pixel values between 0 and 1, setdo_rescale=False. - do_pad (

bool, optional, defaults toself.do_pad) — Whether to pad the input image. - do_rescale (

bool, optional, defaults toself.do_rescale) — Whether to rescale the image values between [0 - 1]. - rescale_factor (

float, optional, defaults toself.rescale_factor) — Rescale factor to rescale the image by ifdo_rescaleis set toTrue. - do_normalize (

bool, optional, defaults toself.do_normalize) — Whether to normalize the image. - image_mean (

floatorList[float], optional, defaults toself.image_mean) — Image mean. - image_std (

floatorList[float], optional, defaults toself.image_std) — Image standard deviation. - do_resize (

bool, optional, defaults toself.do_resize) — Whether to resize the image. - size (

Dict[str, int], optional, defaults toself.size) — Size of the image after resizing. Ifkeep_aspect_ratioisTrue, he image is resized by choosing the smaller of the height and width scaling factors and using it for both dimensions. Ifensure_multiple_ofis also set, the image is further resized to a size that is a multiple of this value. - keep_aspect_ratio (

bool, optional, defaults toself.keep_aspect_ratio) — IfTrueanddo_resize=True, the image is resized by choosing the smaller of the height and width scaling factors and using it for both dimensions. This ensures that the image is scaled down as little as possible while still fitting within the desired output size. In caseensure_multiple_ofis also set, the image is further resized to a size that is a multiple of this value by flooring the height and width to the nearest multiple of this value. - ensure_multiple_of (

int, optional, defaults toself.ensure_multiple_of) — Ifdo_resizeisTrue, the image is resized to a size that is a multiple of this value. Works by flooring the height and width to the nearest multiple of this value.

Works both with and withoutkeep_aspect_ratiobeing set toTrue. Can be overridden byensure_multiple_ofinpreprocess. - resample (

int, optional, defaults toself.resample) — Resampling filter to use if resizing the image. This can be one of the enumPILImageResampling, Only has an effect ifdo_resizeis set toTrue. - return_tensors (

strorTensorType, optional) — The type of tensors to return. Can be one of:- Unset: Return a list of

np.ndarray. TensorType.TENSORFLOWor'tf': Return a batch of typetf.Tensor.TensorType.PYTORCHor'pt': Return a batch of typetorch.Tensor.TensorType.NUMPYor'np': Return a batch of typenp.ndarray.TensorType.JAXor'jax': Return a batch of typejax.numpy.ndarray.

- Unset: Return a list of

- data_format (

ChannelDimensionorstr, optional, defaults toChannelDimension.FIRST) — The channel dimension format for the output image. Can be one of:ChannelDimension.FIRST: image in (num_channels, height, width) format.ChannelDimension.LAST: image in (height, width, num_channels) format.

- input_data_format (

ChannelDimensionorstr, optional) — The channel dimension format for the input image. If unset, the channel dimension format is inferred from the input image. Can be one of:"channels_first"orChannelDimension.FIRST: image in (num_channels, height, width) format."channels_last"orChannelDimension.LAST: image in (height, width, num_channels) format."none"orChannelDimension.NONE: image in (height, width) format.

Preprocess an image or batch of images.

ZoeDepthImageProcessorFast

class transformers.ZoeDepthImageProcessorFast

( **kwargs: typing_extensions.Unpack[transformers.models.zoedepth.image_processing_zoedepth_fast.ZoeDepthFastImageProcessorKwargs] )

Parameters

- do_resize (

bool, optional, defaults toTrue) — Whether to resize the image. - size (

dict[str, int], optional, defaults to{'height' -- 384, 'width': 512}): Describes the maximum input dimensions to the model. - default_to_square (

bool, optional, defaults toTrue) — Whether to default to a square image when resizing, if size is an int. - resample (

Union[PILImageResampling, F.InterpolationMode, NoneType], defaults toResampling.BILINEAR) — Resampling filter to use if resizing the image. This can be one of the enumPILImageResampling. Only has an effect ifdo_resizeis set toTrue. - do_center_crop (

bool, optional, defaults toNone) — Whether to center crop the image. - crop_size (

dict[str, int], optional, defaults toNone) — Size of the output image after applyingcenter_crop. - do_rescale (

bool, optional, defaults toTrue) — Whether to rescale the image. - rescale_factor (

Union[int, float, NoneType], defaults to0.00392156862745098) — Rescale factor to rescale the image by ifdo_rescaleis set toTrue. - do_normalize (

bool, optional, defaults toTrue) — Whether to normalize the image. - image_mean (

Union[float, list[float], NoneType], defaults to[0.5, 0.5, 0.5]) — Image mean to use for normalization. Only has an effect ifdo_normalizeis set toTrue. - image_std (

Union[float, list[float], NoneType], defaults to[0.5, 0.5, 0.5]) — Image standard deviation to use for normalization. Only has an effect ifdo_normalizeis set toTrue. - do_convert_rgb (

bool, optional, defaults toNone) — Whether to convert the image to RGB. - return_tensors (

Union[str, ~utils.generic.TensorType, NoneType], defaults toNone) — Returns stacked tensors if set to `pt, otherwise returns a list of tensors. - data_format (

~image_utils.ChannelDimension, optional, defaults toChannelDimension.FIRST) — OnlyChannelDimension.FIRSTis supported. Added for compatibility with slow processors. - input_data_format (

Union[~image_utils.ChannelDimension, str, NoneType], defaults toNone) — The channel dimension format for the input image. If unset, the channel dimension format is inferred from the input image. Can be one of:"channels_first"orChannelDimension.FIRST: image in (num_channels, height, width) format."channels_last"orChannelDimension.LAST: image in (height, width, num_channels) format."none"orChannelDimension.NONE: image in (height, width) format.

- device (

torch.device, optional, defaults toNone) — The device to process the images on. If unset, the device is inferred from the input images. - do_pad (

bool, optional, defaults toTrue) — Whether to apply pad the input. - keep_aspect_ratio (

bool, optional, defaults toTrue) — IfTrue, the image is resized by choosing the smaller of the height and width scaling factors and using it for both dimensions. This ensures that the image is scaled down as little as possible while still fitting within the desired output size. In caseensure_multiple_ofis also set, the image is further resized to a size that is a multiple of this value by flooring the height and width to the nearest multiple of this value. Can be overridden bykeep_aspect_ratioinpreprocess. - ensure_multiple_of (

int, optional, defaults to 32) — Ifdo_resizeisTrue, the image is resized to a size that is a multiple of this value. Works by flooring the height and width to the nearest multiple of this value. Works both with and withoutkeep_aspect_ratiobeing set toTrue. Can be overridden byensure_multiple_ofinpreprocess.

Constructs a fast Zoedepth image processor.

preprocess

( images: typing.Union[ForwardRef('PIL.Image.Image'), numpy.ndarray, ForwardRef('torch.Tensor'), list['PIL.Image.Image'], list[numpy.ndarray], list['torch.Tensor']] **kwargs: typing_extensions.Unpack[transformers.models.zoedepth.image_processing_zoedepth_fast.ZoeDepthFastImageProcessorKwargs] ) → <class 'transformers.image_processing_base.BatchFeature'>

Parameters

- images (

Union[PIL.Image.Image, numpy.ndarray, torch.Tensor, list['PIL.Image.Image'], list[numpy.ndarray], list['torch.Tensor']]) — Image to preprocess. Expects a single or batch of images with pixel values ranging from 0 to 255. If passing in images with pixel values between 0 and 1, setdo_rescale=False. - do_resize (

bool, optional) — Whether to resize the image. - size (

dict[str, int], optional) — Describes the maximum input dimensions to the model. - default_to_square (

bool, optional) — Whether to default to a square image when resizing, if size is an int. - resample (

Union[PILImageResampling, F.InterpolationMode, NoneType]) — Resampling filter to use if resizing the image. This can be one of the enumPILImageResampling. Only has an effect ifdo_resizeis set toTrue. - do_center_crop (

bool, optional) — Whether to center crop the image. - crop_size (

dict[str, int], optional) — Size of the output image after applyingcenter_crop. - do_rescale (

bool, optional) — Whether to rescale the image. - rescale_factor (

Union[int, float, NoneType]) — Rescale factor to rescale the image by ifdo_rescaleis set toTrue. - do_normalize (

bool, optional) — Whether to normalize the image. - image_mean (

Union[float, list[float], NoneType]) — Image mean to use for normalization. Only has an effect ifdo_normalizeis set toTrue. - image_std (

Union[float, list[float], NoneType]) — Image standard deviation to use for normalization. Only has an effect ifdo_normalizeis set toTrue. - do_convert_rgb (

bool, optional) — Whether to convert the image to RGB. - return_tensors (

Union[str, ~utils.generic.TensorType, NoneType]) — Returns stacked tensors if set to `pt, otherwise returns a list of tensors. - data_format (

~image_utils.ChannelDimension, optional) — OnlyChannelDimension.FIRSTis supported. Added for compatibility with slow processors. - input_data_format (

Union[~image_utils.ChannelDimension, str, NoneType]) — The channel dimension format for the input image. If unset, the channel dimension format is inferred from the input image. Can be one of:"channels_first"orChannelDimension.FIRST: image in (num_channels, height, width) format."channels_last"orChannelDimension.LAST: image in (height, width, num_channels) format."none"orChannelDimension.NONE: image in (height, width) format.

- device (

torch.device, optional) — The device to process the images on. If unset, the device is inferred from the input images. - do_pad (

bool, optional, defaults toTrue) — Whether to apply pad the input. - keep_aspect_ratio (

bool, optional, defaults toTrue) — IfTrue, the image is resized by choosing the smaller of the height and width scaling factors and using it for both dimensions. This ensures that the image is scaled down as little as possible while still fitting within the desired output size. In caseensure_multiple_ofis also set, the image is further resized to a size that is a multiple of this value by flooring the height and width to the nearest multiple of this value. Can be overridden bykeep_aspect_ratioinpreprocess. - ensure_multiple_of (

int, optional, defaults to 32) — Ifdo_resizeisTrue, the image is resized to a size that is a multiple of this value. Works by flooring the height and width to the nearest multiple of this value. Works both with and withoutkeep_aspect_ratiobeing set toTrue. Can be overridden byensure_multiple_ofinpreprocess.

Returns

<class 'transformers.image_processing_base.BatchFeature'>

- data (

dict) — Dictionary of lists/arrays/tensors returned by the call method (‘pixel_values’, etc.). - tensor_type (

Union[None, str, TensorType], optional) — You can give a tensor_type here to convert the lists of integers in PyTorch/TensorFlow/Numpy Tensors at initialization.

ZoeDepthForDepthEstimation

class transformers.ZoeDepthForDepthEstimation

( config )

Parameters

- config (ZoeDepthForDepthEstimation) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out thefrom_pretrained() method to load the model weights.

ZoeDepth model with one or multiple metric depth estimation head(s) on top.

This model inherits from PreTrainedModel. Check the superclass documentation for the generic methods the library implements for all its model (such as downloading or saving, resizing the input embeddings, pruning heads etc.)

This model is also a PyTorch torch.nn.Module subclass. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matter related to general usage and behavior.

forward

( pixel_values: FloatTensor labels: typing.Optional[torch.LongTensor] = None output_attentions: typing.Optional[bool] = None output_hidden_states: typing.Optional[bool] = None return_dict: typing.Optional[bool] = None ) → transformers.modeling_outputs.DepthEstimatorOutput or tuple(torch.FloatTensor)

Parameters

- pixel_values (

torch.FloatTensorof shape(batch_size, num_channels, image_size, image_size)) — The tensors corresponding to the input images. Pixel values can be obtained using{image_processor_class}. See{image_processor_class}.__call__for details ({processor_class}uses{image_processor_class}for processing images). - labels (

torch.LongTensorof shape(batch_size, height, width), optional) — Ground truth depth estimation maps for computing the loss. - output_attentions (

bool, optional) — Whether or not to return the attentions tensors of all attention layers. Seeattentionsunder returned tensors for more detail. - output_hidden_states (

bool, optional) — Whether or not to return the hidden states of all layers. Seehidden_statesunder returned tensors for more detail. - return_dict (

bool, optional) — Whether or not to return a ModelOutput instead of a plain tuple.

A transformers.modeling_outputs.DepthEstimatorOutput or a tuple oftorch.FloatTensor (if return_dict=False is passed or when config.return_dict=False) comprising various elements depending on the configuration (ZoeDepthConfig) and inputs.

- loss (

torch.FloatTensorof shape(1,), optional, returned whenlabelsis provided) — Classification (or regression if config.num_labels==1) loss. - predicted_depth (

torch.FloatTensorof shape(batch_size, height, width)) — Predicted depth for each pixel. - hidden_states (

tuple(torch.FloatTensor), optional, returned whenoutput_hidden_states=Trueis passed or whenconfig.output_hidden_states=True) — Tuple oftorch.FloatTensor(one for the output of the embeddings, if the model has an embedding layer, + one for the output of each layer) of shape(batch_size, num_channels, height, width).

Hidden-states of the model at the output of each layer plus the optional initial embedding outputs. - attentions (

tuple(torch.FloatTensor), optional, returned whenoutput_attentions=Trueis passed or whenconfig.output_attentions=True) — Tuple oftorch.FloatTensor(one for each layer) of shape(batch_size, num_heads, patch_size, sequence_length).

Attentions weights after the attention softmax, used to compute the weighted average in the self-attention heads.

The ZoeDepthForDepthEstimation forward method, overrides the __call__ special method.

Although the recipe for forward pass needs to be defined within this function, one should call the Moduleinstance afterwards instead of this since the former takes care of running the pre and post processing steps while the latter silently ignores them.

Examples:

from transformers import AutoImageProcessor, ZoeDepthForDepthEstimation import torch import numpy as np from PIL import Image import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg" image = Image.open(requests.get(url, stream=True).raw)

image_processor = AutoImageProcessor.from_pretrained("Intel/zoedepth-nyu-kitti") model = ZoeDepthForDepthEstimation.from_pretrained("Intel/zoedepth-nyu-kitti")

inputs = image_processor(images=image, return_tensors="pt")

with torch.no_grad(): ... outputs = model(**inputs)

post_processed_output = image_processor.post_process_depth_estimation( ... outputs, ... source_sizes=[(image.height, image.width)], ... )

predicted_depth = post_processed_output[0]["predicted_depth"] depth = predicted_depth * 255 / predicted_depth.max() depth = depth.detach().cpu().numpy() depth = Image.fromarray(depth.astype("uint8"))