Lex Fridman

Lex Fridman (pronounced: Freedman)

Research Scientist, MIT, 2015 - current (2023)

Laboratory for Information and Decision Systems (LIDS)

Research: Human-robot interaction and machine learning.

Hiring: I'm hiring

Teaching: deeplearning.mit.edu

Podcast: Lex Fridman Podcast

Sample Conversations: Elon Musk, Mark Zuckerberg, Sam Harris, Joe Rogan, Vitalik Buterin, Grimes, Dan Carlin, Roger Penrose, Jordan Peterson, Richard Dawkins, Liv Boeree, Leonard Susskind, David Fravor, Kanye West, Donald Hoffman, Rick Rubin, etc.

Connect with me @lexfridman on Twitter, LinkedIn, Instagram, Facebook, YouTube, Medium.

Outside of research and teaching, I enjoy:

- playing guitar & piano

- practicing jiu jitsu & judo

Research & Publications (Google Scholar)

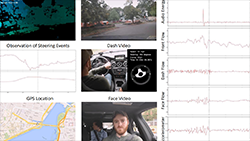

Automated Synchronization of Driving Data Using Vibration and Steering Events

Summary: A method for automated synchronization of vehicle sensors using accelerometer, telemetry, audio, and dense optical flow from three video sensors.

Arguing Machines: Human Supervision of Black Box AI Systems

Summary: Framework for providing human supervision of a black box AI system that makes life-critical decisions. We demonstrate this approach on two applications: (1) image classification and (2) real-world data of AI-assisted steering in Tesla vehicles.

Human-Centered Autonomous Vehicle Systems

Summary: We propose a set of shared autonomy principles for designing and building autonomous vehicle systems in a human-centered way, and demonstrate these principles on a full-scale semi-autonomous vehicle.

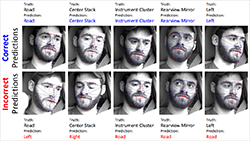

What Can Be Predicted from 6 Seconds of Driver Glances?

Summary: Winner of the CHI 2017 Best Paper Award. We consider a dataset of real-world, on-road driving to explore the predictive power of driver glances.

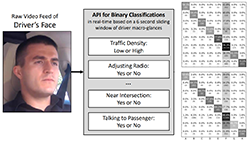

Driver Gaze Region Estimation without Use of Eye Movement

Summary: We propose a simplification of the general gaze estimation task by framing it as a gaze region estimation task in the driving context, thereby making it amenable to machine learning approaches. We go on to describe and evaluate one such learning-based approach.

MIT Advanced Vehicle Technology Study

Summary: Large-scale real-world AI-assisted driving data collection study to understand how human-AI interaction in driving can be safe and enjoyable. The emphasis is on computer vision based analysis of driver behavior in the context of automation use.

DeepTraffic

Summary: Traffic simulation and optimization with deep reinforcement learning. The primary goal is to make the hands-on study of deep RL accessible to thousands of students, educators, and researchers.

Active Authentication on Mobile Devices

Summary: An approach for verifying the identity of a smartphone user with four biometric modalities. We evaluate the approach by collecting real-world behavioral biometrics data from smartphones of 200 subjects over a period of at least 30 days.

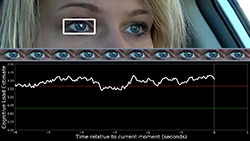

Cognitive Load Estimation in the Wild

Summary: Winner of the CHI 2018 Honorable Mention Award. We propose two novel vision-based methods for cognitive load estimation and evaluate them on a large-scale dataset collected under real-world driving conditions.

Crowdsourced Assessment of External Vehicle-to-Pedestrian Displays

Summary: We evaluated 30 external vehicle-to-pedestrian display concepts for autonomous vehicles. Simple, minimalist displays performed best.