
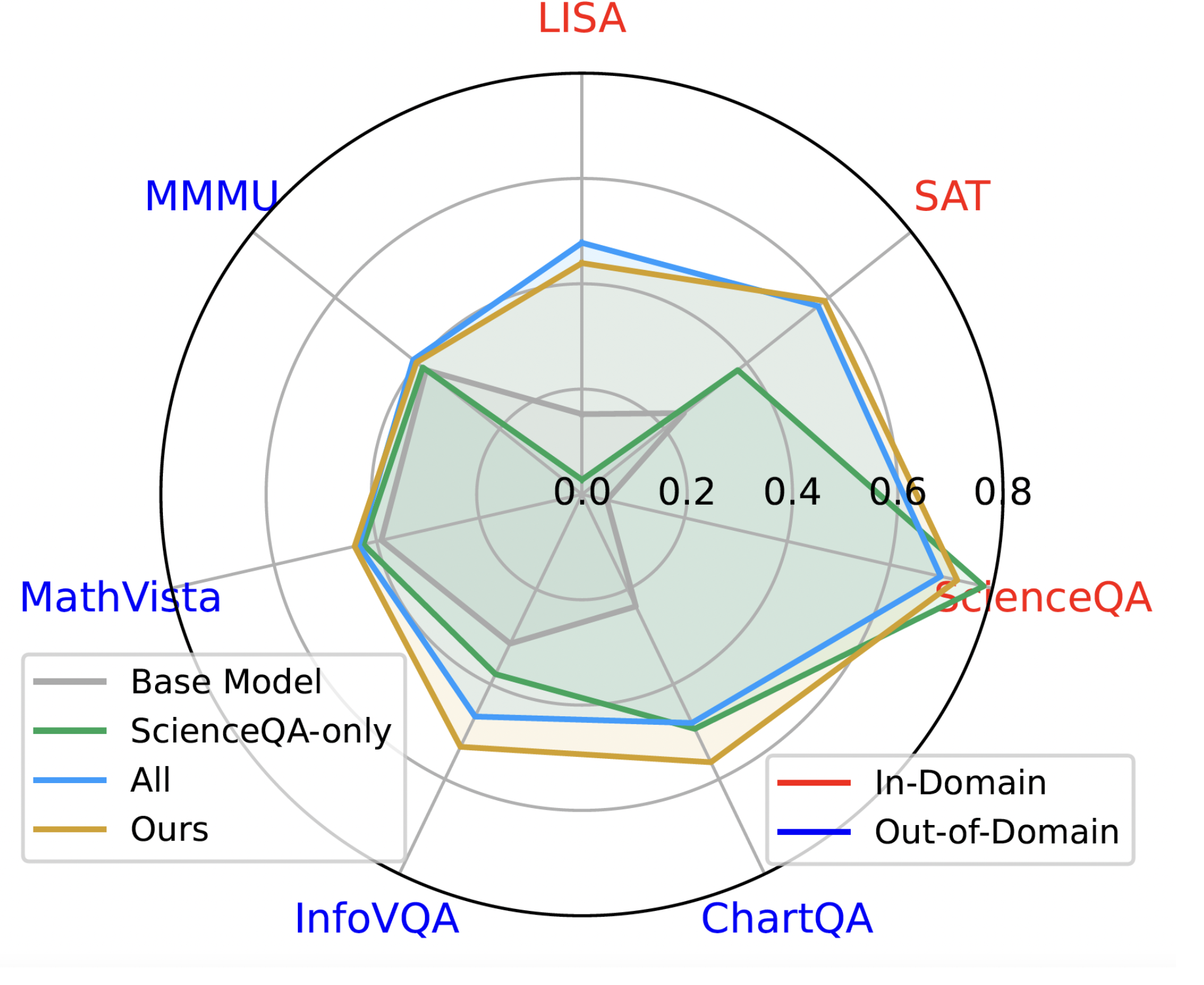

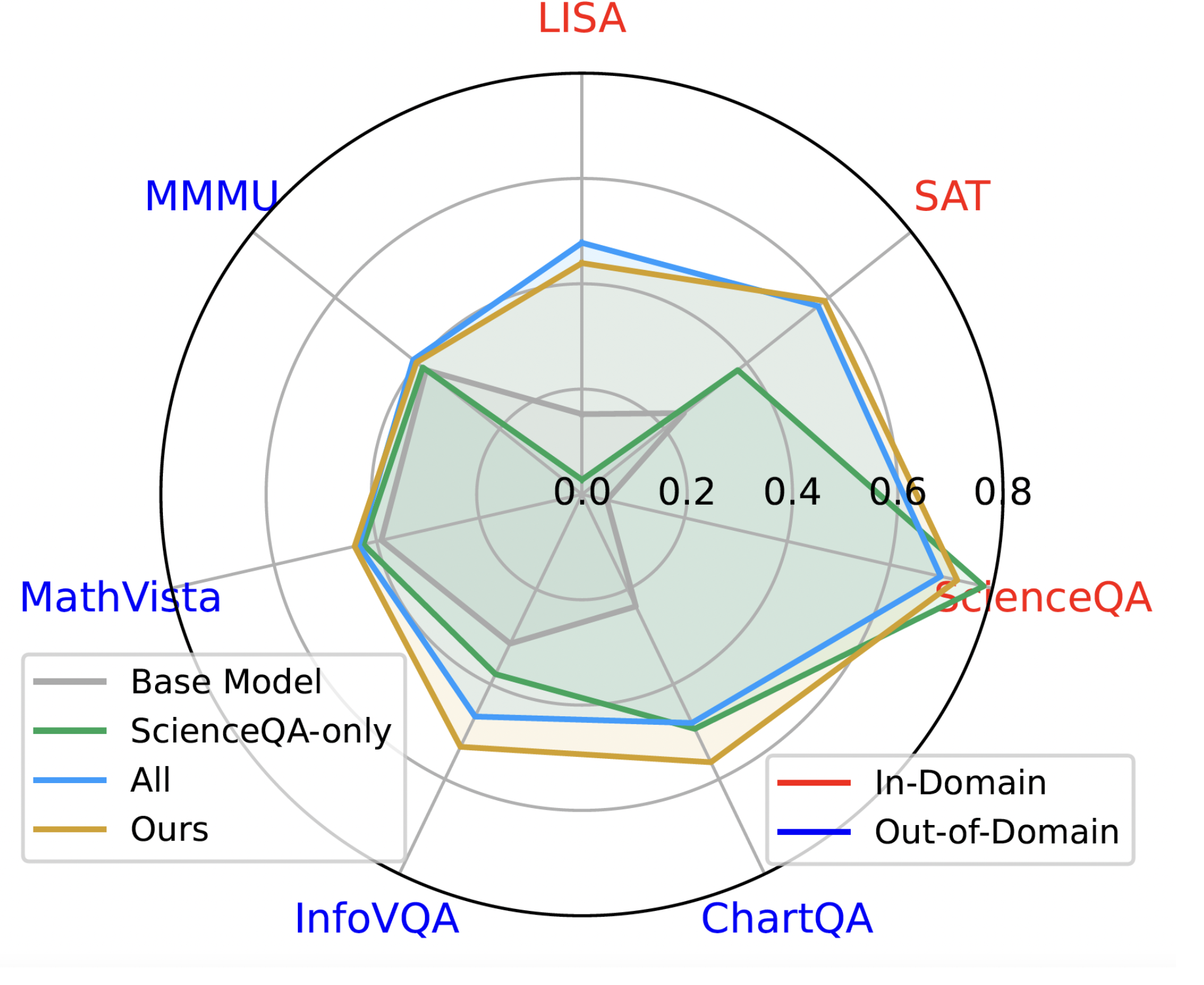

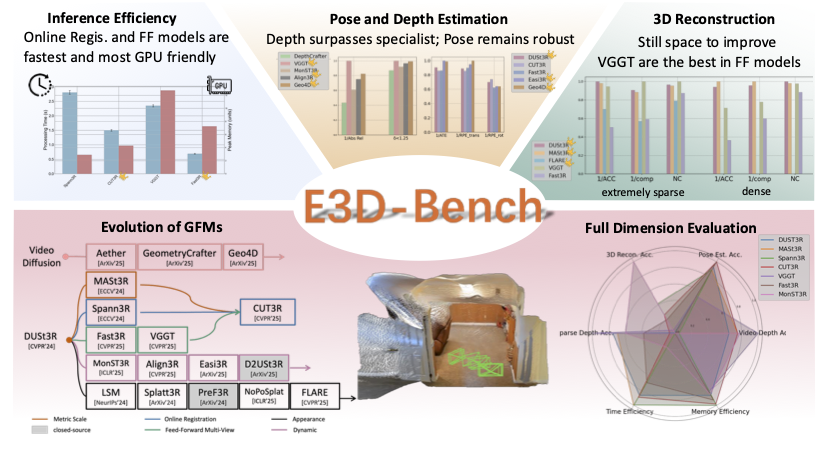

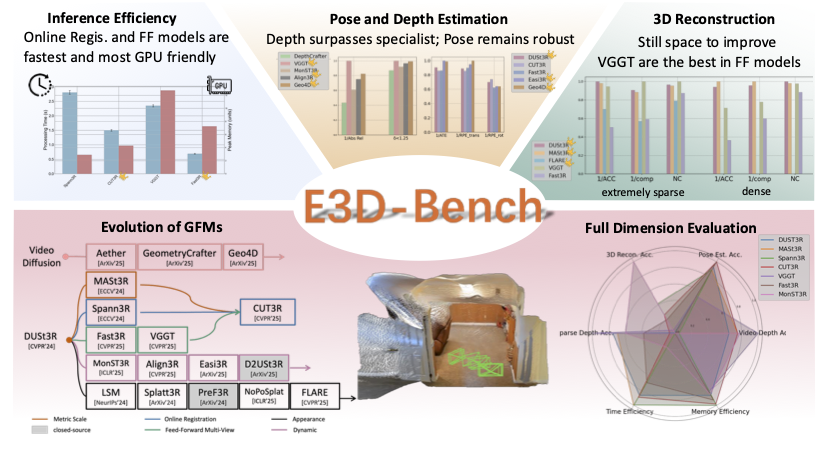

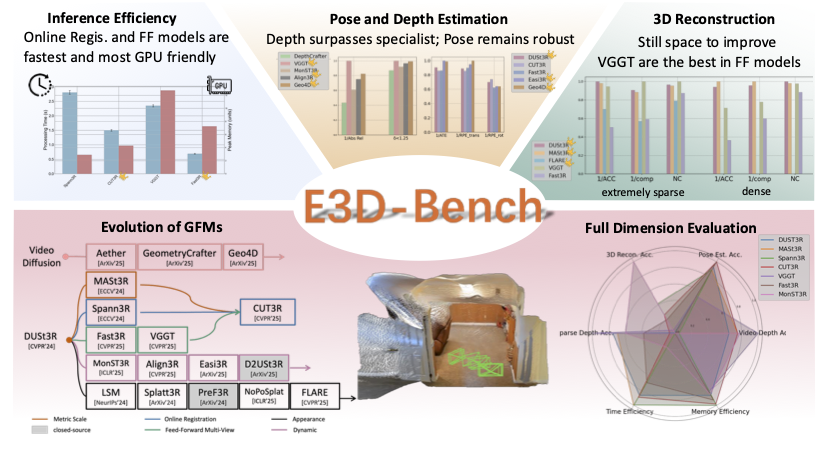

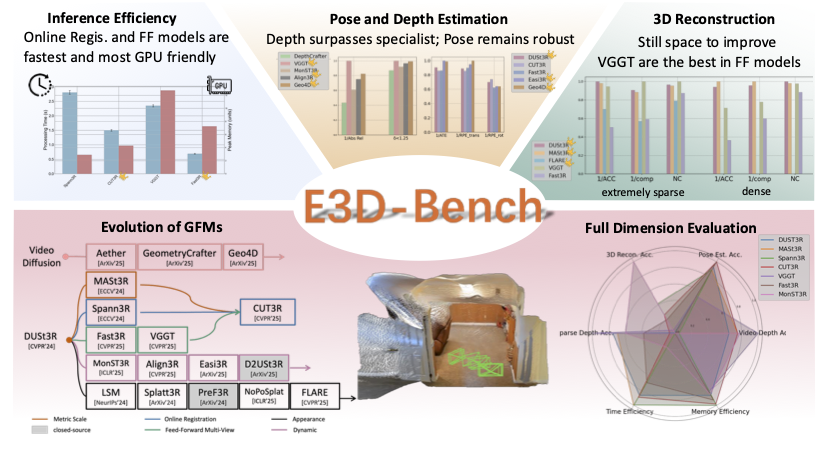

E3D-Bench: A Benchmark for End-to-End 3D Geometric Foundation Models

Wenyan Cong, Yiqing Liang, Yancheng Zhang, Ziyi Yang, Yan Wang, Boris Ivanovic, Marco Pavone, Chen Chen, Zhangyang Wang, Zhiwen Fan

Under Review, 2025

paper / code / bibtex

We present the first comprehensive benchmark for end-to-end 3D geometric foundation models. It covers five core tasks (sparse-view depth estimation, video depth estimation, 3D reconstruction, multi-view pose estimation, and novel view synthesis) and spans both standard and challenging out-of-distribution datasets.


Zero-Shot Monocular Scene Flow Estimation in the Wild

Yiqing Liang, Abhishek Badki*, Hang Su*, James Tompkin, Orazio Gallo

CVPR, 2025, Oral, Award Candidate (0.48%)

paper / video / code / bibtex

We present ZeroMSF, the first generalizable 3D foundation model that understands monocular scene flow across diverse real-world scenarios, using our curated data recipe of 1M synthetic training samples.


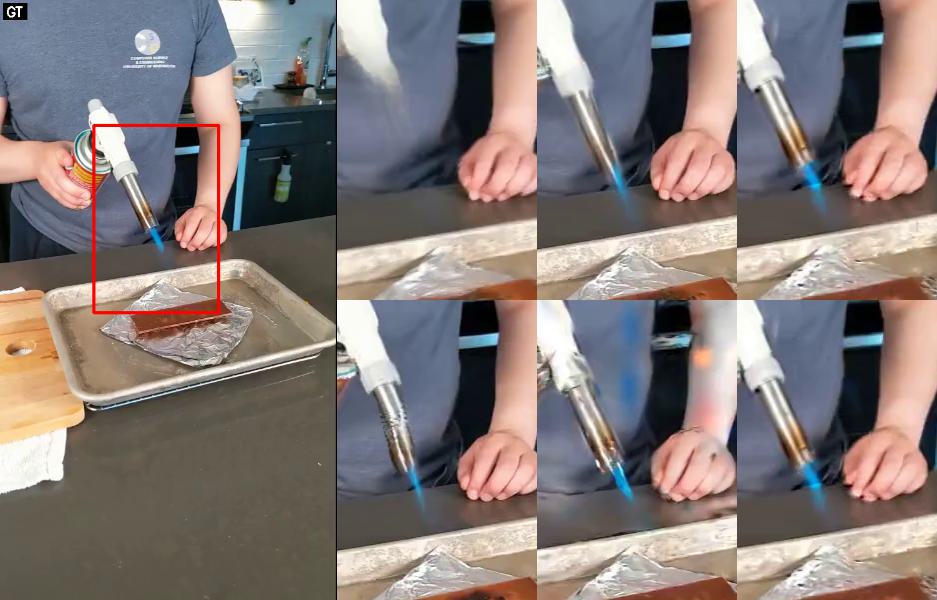

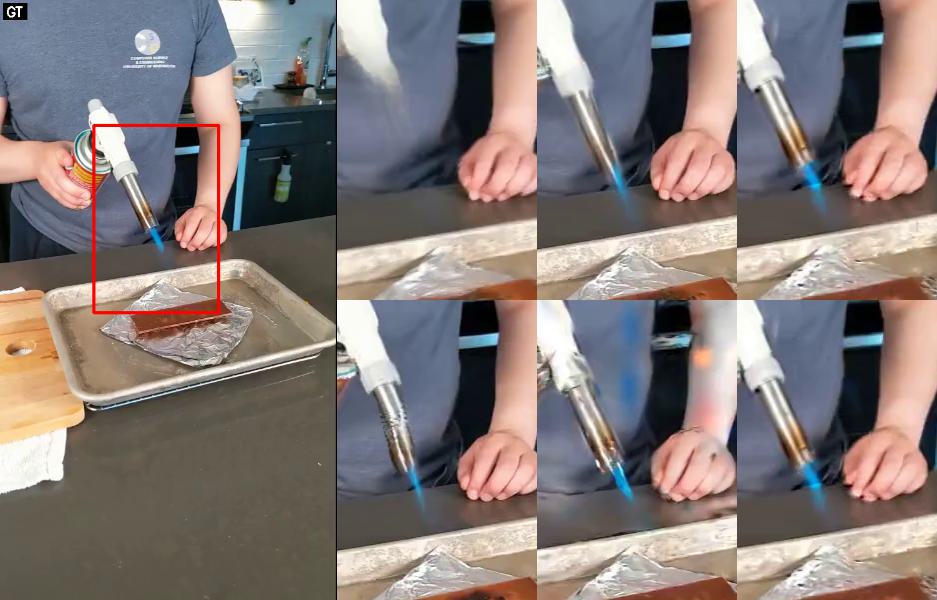

Monocular Dynamic Gaussian Splatting is Fast and Brittle and Scene Complexity Rules

Yiqing Liang, Mikhail Okunev, Mikaela Angelina Uy, Runfeng Li, Leonidas J. Guibas, James Tompkin, Adam Harley

TMLR, 2025

paper / data / code / bibtex

We present a benchmark of dynamic Gaussian Splatting methods for monocular view synthesis, combining existing datasets and a new synthetic dataset to provide standardized comparisons and identify key factors affecting efficiency and quality.


GauFRe: Gaussian Deformation Fields for Real-time Dynamic Novel View Synthesis

Yiqing Liang, Numair Khan, Zhengqin Li, Thu Nguyen-Phuoc, Douglas Lanman, James Tompkin, Lei Xiao

CVPR, 2024, CV4MR; WACV, 2025

[paper](<gaufre/static/pdfs/WACV%5F2025%5F%5F%5FGauFRe %281%29.pdf>) / code / bibtex

We propose GauFRe, a dynamic scene reconstruction method for monocular video based on deformable 3D Gaussians. It is efficient to train, renders in real time, and separates static and dynamic regions.


Semantic Attention Flow Fields for Monocular Dynamic Scene Decomposition

Yiqing Liang, Eliot Laidlaw, Alexander Meyerowitz, Srinath Sridhar, James Tompkin

ICCV, 2023

paper / code / bibtex

We present SAFF, a dynamic neural volume reconstruction of a casual monocular video that consists of time-varying color, density, scene flow, semantics, and attention information.


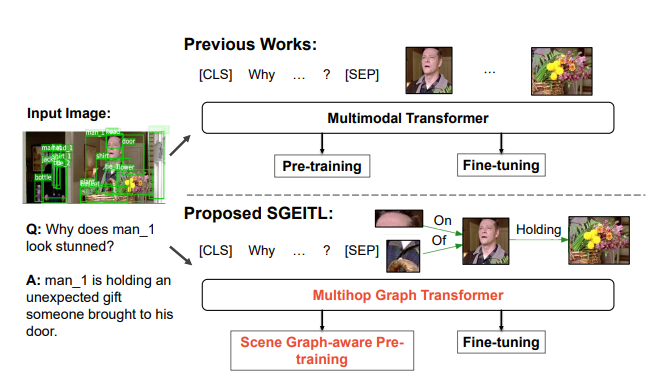

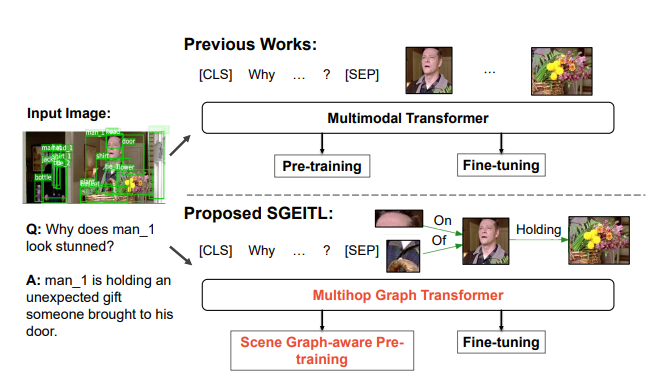

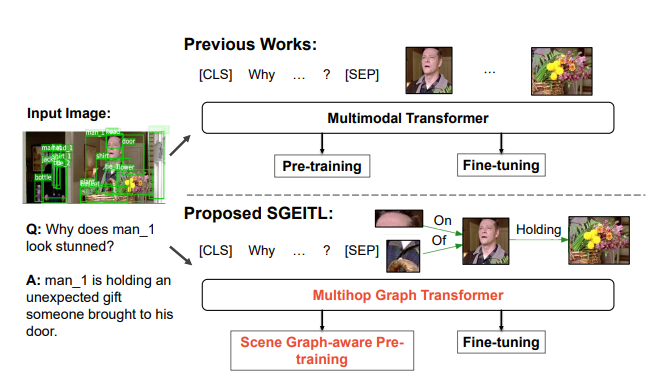

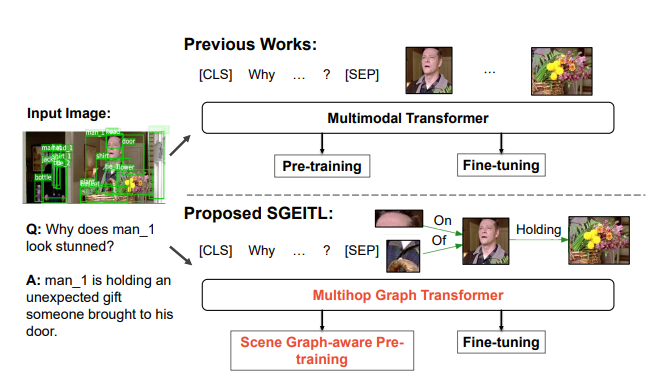

SGEITL: Scene Graph Enhanced Image-Text Learning for Visual Commonsense Reasoning

Zhecan Wang*, Haoxuan You*, Liunian Harold Li, Alireza Zareian, Suji Park, Yiqing Liang, Kai-Wei Chang, Shih-Fu Chang

AAAI, 2022

paper / bibtex

We propose a Scene Graph Enhanced Image-Text Learning (SGEITL) framework that incorporates visual scene graphs into commonsense reasoning.


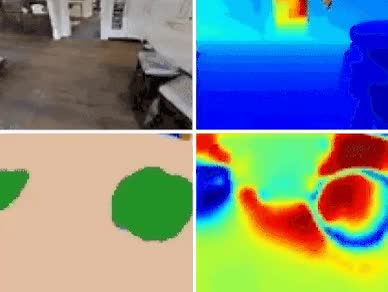

SSCNav: Confidence-Aware Semantic Scene Completion for Visual Semantic Navigation

Yiqing Liang, Boyuan Chen, Shuran Song

ICRA, 2021

paper / video / code / bibtex

We explicitly model scene priors using a confidence-aware semantic scene completion module to complete the scene and guide the agent's navigation planning.