Magpie

Introduction

❓ Alignment Data Generation: High-quality alignment data is critical for aligning large language models (LLMs). Is it possible to synthesize high-quality instructions at scale by directly extracting data from advanced aligned LLMs themselves?

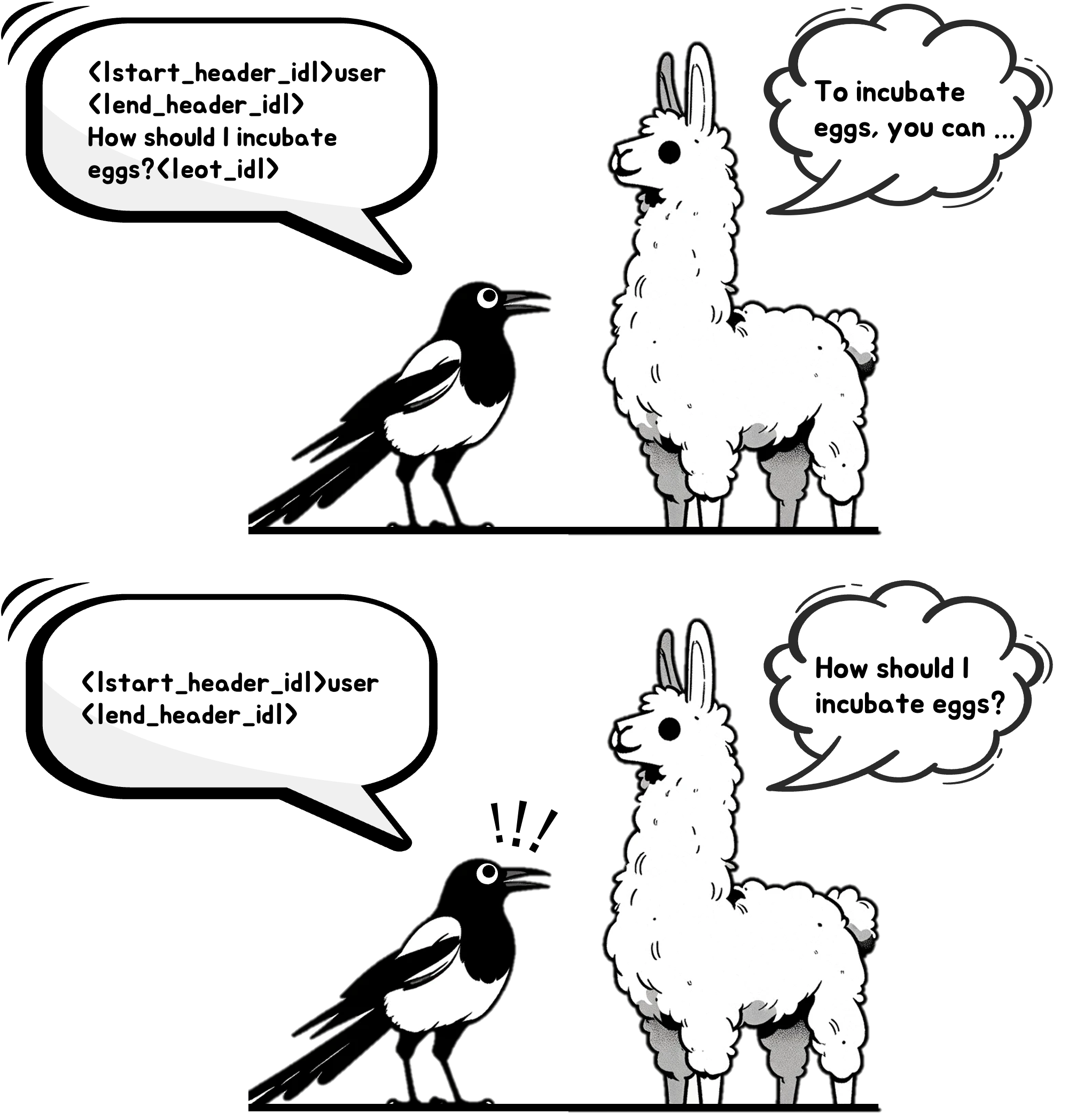

🔍 Conversation Template: A typical input to an aligned LLM contains three key components: the pre-query template, the query, and the post-query template. For instance, an input to Llama-2-chat could be [INST] Hi! [/INST], where [INST] is the pre-query template, Hi! is the query, and [/INST] is the post-query template. These templates are predefined by the creators of the aligned LLMs to ensure the models are prompted correctly. We observe that when we input only the pre-query template to aligned LLMs such as Llama-3-Instruct, they self-synthesize a user query due to their auto-regressive nature!
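The three-part structure described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the names `ChatTemplate`, `synthesize_query`, and `stub_complete` are hypothetical, the whitespace around the Llama-2-chat markers is an assumption, and `stub_complete` is a canned stand-in for a real text-completion call to an aligned LLM. Cutting the completion at the post-query marker is also an assumption about how a synthesized query might be delimited.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ChatTemplate:
    """Pre- and post-query strings that wrap a user query (hypothetical helper)."""
    pre_query: str
    post_query: str

    def full_prompt(self, query: str) -> str:
        # Ordinary usage: pre-query template + query + post-query template.
        return f"{self.pre_query}{query}{self.post_query}"


# Markers taken from the Llama-2-chat example in the text;
# the exact spacing around them is an assumption.
LLAMA2_CHAT = ChatTemplate(pre_query="[INST] ", post_query=" [/INST]")


def synthesize_query(template: ChatTemplate,
                     complete: Callable[[str], str]) -> str:
    """Feed ONLY the pre-query template and read the continuation as a query.

    `complete` is any function that maps a prompt string to the model's
    raw continuation; it is an assumption, not a real library API.
    """
    raw = complete(template.pre_query)
    marker = template.post_query.strip()
    # Assumption: if the model goes on to emit the post-query marker,
    # everything before it is treated as the synthesized query.
    if marker and marker in raw:
        raw = raw.split(marker, 1)[0]
    return raw.strip()


def stub_complete(prompt: str) -> str:
    # Canned stand-in for an aligned LLM, for illustration only.
    return "What is the capital of France? [/INST] Paris."


if __name__ == "__main__":
    print(LLAMA2_CHAT.full_prompt("Hi!"))
    print(synthesize_query(LLAMA2_CHAT, stub_complete))
```

In practice `stub_complete` would be replaced by a call to an aligned model such as Llama-3-Instruct using that model's own pre-query template, with sampling enabled so that repeated calls yield different queries.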

🐦 Magpie Dataset: 4M high-quality alignment data instances extracted from the Llama-3 series, with SOTA performance!

Citation

@article{xu2024magpie,
  title={Magpie: Alignment Data Synthesis from Scratch by Prompting Aligned LLMs with Nothing},
  author={Zhangchen Xu and Fengqing Jiang and Luyao Niu and Yuntian Deng and Radha Poovendran and Yejin Choi and Bill Yuchen Lin},
  journal={ArXiv},
  year={2024},
  volume={abs/2406.08464},
  url={https://api.semanticscholar.org/CorpusID:270391432}
}