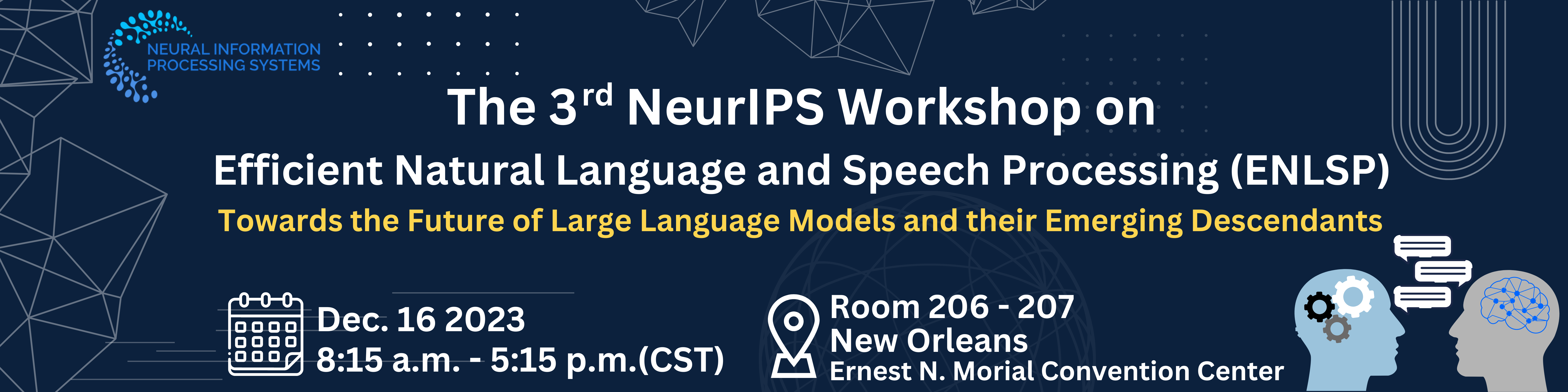

ENLSP NeurIPS Workshop 2023

The third edition of the Efficient Natural Language and Speech Processing (ENLSP-III) workshop will focus on the future of large language models and their emerging applications in domains such as natural language, speech processing, and biological sequences. It will emphasize making these models more efficient in terms of data, model, training, and inference, for both real-world applications and academic research. The workshop program offers an interactive platform that brings together experts and talents from academia and industry through invited talks, a panel discussion, paper submissions and reviews, interactive posters, oral presentations, and a mentorship program. This will be a unique opportunity to discuss and share challenging problems, build connections, exchange ideas, brainstorm solutions, and foster future collaborations. The topics of this workshop may interest researchers working on general machine learning, deep learning, optimization, theory, and NLP and speech applications.

Overview

Large language and speech models (such as GPT-3, GPT-4, wav2vec, HuBERT, WavLM, Whisper, PaLM, LLaMA, and PaLM 2) have emerged, along with variants fine-tuned to follow instructions (such as InstructGPT, Alpaca, and Dolly) and, especially, conversational language models (such as ChatGPT, Bard, Vicuna, StableLM-Tuned, and OpenAssistant). With them, we have taken a significant step toward machines that mimic human intelligence. The contributions of language models do not stop there. Their emerging use in biology (protein language models such as the ProtTrans models, ESM, and ProtGPT2) and chemistry (small-molecule and polymer language models such as SMILES-BERT, polyBERT, and TransPolymer) has further expanded their applications across scientific disciplines. As a result, these models are revolutionizing the way we approach research and knowledge discovery, paving the way for innovative breakthroughs in diverse fields. This success has come at a price: pre-training these large models on huge amounts of data, fine-tuning them on instruction data and human-supervised fine-tuning data, and extensive engineering effort. Despite their success, it is evident that most of these models are heavily over-parameterized, and their efficiency is open to question. This lack of efficiency can severely limit the practical application of these techniques. Training, adapting, or deploying such large models can be very challenging on devices, or even on cloud services, with limited memory and computational power.

These achievements have paved the way for faster progress in improving large foundation models from different angles, but we must address the models' various efficiency issues at the same time. For example, in natural language processing, it has been shown that larger models exhibit stronger zero-shot and few-shot (in-context learning) capabilities across different tasks. However, collecting data for such large models, pre-training them, and maintaining them can be very expensive. On the training-data side, it is still unclear to what extent expanding the training data improves these pre-trained models. It is also unclear whether pre-training data can be compressed without sacrificing much performance. Efficiency becomes even more critical when pre-training multimodal models. At deployment time, designing proper prompts for different tasks can be arbitrary and time-consuming, and fine-tuning large language models with billions of parameters is very costly. One could transfer the knowledge of large foundation models to smaller models (by distillation or symbolic distillation), but there is still no straightforward recipe for doing so. Furthermore, the literature is divided on how far the knowledge of powerful black-box models such as ChatGPT can be transferred to smaller models in specific or general domains. Additionally, the sheer size of foundation models makes applying model compression techniques to them difficult. In light of advances in large protein language models and their applications in biology, this year's workshop will include a special track on emerging protein language models. The track covers their pre-training, fine-tuning, applications, and approaches for improving their efficiency.

Call for Papers

It is vitally important to invest in the future of large foundation models by improving their efficiency in terms of data, modeling, training, and inference, from the different perspectives highlighted in this workshop. To that end, we list below some active research topics in this domain that may interest the NeurIPS community, and we invite its participation, ideas, and contributions. The scope of this workshop includes, but is not limited to, the following topics:

Efficient Pre-Training: How can we reduce the cost of pre-training new models?

- Accelerating the pre-training process

- Efficient initialization and hyper-parameter tuning (HPT)

- Data vs. scale of pre-trained models

- Efficient multimodal (e.g., text–speech) pre-trained models and their efficiency issues

- New efficient architectures (e.g., using sparse structures or mixtures of experts (MoEs)) or new training objectives for pre-trained models

Efficient Fine-tuning: Fine-tuning all parameters of large pre-trained models on downstream tasks can be expensive and prone to overfitting.

- Efficient prompt engineering and in-context learning

- Parameter-efficient tuning solutions (i.e., training only a portion of the network)

- Accelerating the fine-tuning process (e.g., by improving the optimizer or by layer skipping)

Data Efficiency: Pre-training (with unlabeled data) and fine-tuning (with labeled data) are both data-hungry processes. Labeling data and incorporating human-annotated data or human feedback are time-consuming and costly. Here we would like to address the question: how can we reduce the costs borne by data?

- Sample efficient training, training with less data, few-shot and zero-shot learning

- How to reduce the requirements for human labeled data?

- Can we rely on machine-generated data (e.g., data collected from ChatGPT) for training models?

- Data compression, data distillation

Efficient Deployment: How can we reduce the inference time or memory footprint of a trained model for a particular task?

- Should we rely on in-context learning and prompt engineering of large language models, or fine-tune smaller models (via knowledge transfer from larger models)?

- Neural model compression techniques such as (post-training) quantization, pruning, layer decomposition, and knowledge distillation (KD) for NLP and speech

- Impact of different efficient deployment solutions on the inductive biases learned by the original models (such as OOD generalization, in-context learning, in-domain performance, hallucination).

Special Track on Protein Language Models: The emergence and future of language models for biological sequences, and how to make them more efficient.

- Protein language models and their applications

- Refining the pretraining algorithm and/or model architecture of LLMs to optimize performance in the protein domain.

- Optimizing curriculum learning (the order in which pre-training data are presented) for more efficient pre-training or fine-tuning of protein language models

- Efficient remote homology detection via dense retrieval using protein language models

- Combining sequence and 3D structure in pretraining or fine-tuning of the models

- Multi-modal language models for biological sequences.

Other Efficient Applications

- Knowledge localization, knowledge editing, or targeted editing/training of foundation models

- Efficient dense retrieval and search

- Efficient graphs for NLP

- Training models on device

- Incorporating external knowledge into pre-trained models

- Efficient federated learning for NLP: reducing communication costs and handling heterogeneous data and heterogeneous models

Submission Instructions

You are invited to submit your papers via our CMT submission portal. All submitted papers must be anonymized for double-blind review. We expect each paper to be reviewed by at least three reviewers. The main content of the paper (excluding references and supplementary materials) should not exceed 4 pages and must strictly follow the NeurIPS template style.

Authors can submit up to 100 MB of supplementary materials separately and are highly encouraged to submit their code for reproducibility. According to the NeurIPS workshop guidelines, previously published papers are discouraged. Papers posted on arXiv or currently under submission elsewhere may be submitted. Moreover, work presented at the main NeurIPS conference should not appear in a workshop. Please indicate the complete list of conflicts of interest for all authors of your paper. To encourage high-quality submissions, our sponsors are offering Best Paper and Best Poster Awards to outstanding original oral and poster presentations, based on reviewer nominations. We will also award one outstanding paper certificate for the special track on protein language models. Note that the workshop is non-archival; however, accepted papers will be hosted on the workshop website.

Important Dates:

- Submission Deadline: October 2, 2023 AOE

- Acceptance Notification: October 27, 2023 AOE

- Camera-Ready Submission: November 3, 2023 AOE

- Workshop Date: December 16, 2023

Confirmed Keynote Speakers

Samy Bengio

Apple

Tatiana Likhomanenko

Apple

Luke Zettlemoyer

University of Washington & Meta AI

Tara Sainath

Sarath Chandar

MILA / Polytechnique Montreal

Kenneth Heafield

University of Edinburgh

Ali Madani

Profluent

Panelists

Nazneen Rajani

Hugging Face

Minjia Zhang

Microsoft / DeepSpeed

Tim Dettmers

University of Washington

Schedule

| Time | Title | Presenter |
|---|---|---|
| 8:15AM - 8:20AM | Breakfast | |
| 8:15AM - 8:20AM | Opening Speech | |
| 8:20AM - 8:45AM | (Keynote Talk) Deploying efficient translation at every level of the stack | Kenneth Heafield |
| 8:45AM - 9:30AM | (Keynote Talk) Simple and efficient self-training approaches for speech recognition | Samy Bengio, Tatiana Likhomanenko |
| 9:30AM - 9:36AM | (Spotlight 1) Query-Dependent Prompt Evaluation and Optimization with Offline Inverse RL | Hao Sun |
| 9:36AM - 9:42AM | (Spotlight 2) MatFormer: Nested Transformer for Elastic Inference | Fnu Devvrit |
| 9:42AM - 9:48AM | (Spotlight 3) Decoding Data Quality via Synthetic Corruptions: Embedding-guided Pruning of Code Data | Yu Yang |
| 9:48AM - 9:54AM | (Spotlight 4) FlashFFTConv: Efficient Convolutions for Long Sequences with Tensor Cores | Dan Fu |
| 9:54AM - 10:00AM | (Spotlight 5) Ensemble of low-rank adapters for large language model fine-tuning | Xi Wang |
| 10:00AM - 10:30AM | Morning Break and Poster Setup | |
| 10:30AM - 11:00AM | (Keynote Talk) Branch-Train-Merge: Embarrassingly Parallel Training of Expert Language Models | Luke Zettlemoyer |
| 11:00AM - 11:30AM | (Keynote Talk) Knowledge Consolidation and Utilization (In)Ability of Large Language Models | Sarath Chandar |
| 11:30AM - 11:36AM | (Spotlight 6) LoDA: Low-Dimensional Adaptation of Large Language Models | Jing Liu |
| 11:36AM - 11:42AM | (Spotlight 7) MultiPrompter: Cooperative Prompt Optimization with Multi-Agent Reinforcement Learning | Dong-Ki Kim |
| 11:42AM - 11:48AM | (Spotlight 8) LoftQ: LoRA-Fine-Tuning-Aware Quantization for Large Language Models | Yixiao Li |
| 11:48AM - 11:54AM | (Spotlight 9) Improving Linear Attention via Softmax Mimicry | Michael Zhang |
| 11:54AM - 12:00PM | (Spotlight 10) PaSS: Parallel Speculative Sampling | Giovanni Monea |
| 12:00PM - 1:00PM | Lunch Break | |
| 1:00PM - 2:00PM | Poster Session I (Paper IDs #1-45) | |
| 2:00PM - 2:30PM | (Keynote Talk) LLMs for Protein Design: A Research Journey | Ali Madani |
| 2:30PM - 3:00PM | (Keynote Talk) End-to-End Speech Recognition: The Journey from Research to Production | Tara Sainath |
| 3:00PM - 3:20PM | Afternoon Break and Poster Setup II | |
| 3:20PM - 4:10PM | Interactive Panel Discussion | Nazneen Rajani, Minjia Zhang, Tim Dettmers |
| 4:10PM - 4:15PM | Best Paper and Poster Awards | |
| 4:15PM - 5:15PM | Poster Session II (Paper IDs #46-96) | |

Organizers

Mehdi Rezagholizadeh

Huawei Noah's Ark Lab

Peyman Passban

BenchSci

Yue Dong

University of California, Riverside

Yu Cheng

Microsoft

Soheila Samiee

BASF

Lili Mou

University of Alberta

Qun Liu

Huawei Noah's Ark Lab

Boxing Chen

Huawei Noah's Ark Lab

Volunteers

Khalil Bibi

Technical Committee

- Dan Alistarh (Institute of Science and Technology Austria)
- Bang Liu (University of Montreal)
- Hassan Sajjad (Dalhousie University)
- Tiago Falk (INRS University)
- Yu Cheng (Microsoft)
- Anderson R. Avila (INRS University)
- Peyman Passban (BenchSci)
- Rasoul Kaljahi (Oracle)
- Joao Monteiro (ServiceNow)
- Shahab Jalalvand (Interactions)
- Ivan Kobyzev (Huawei Noah's Ark Lab)
- Aref Jafari (University of Waterloo)
- Jad Kabbara (MIT)
- Ahmad Rashid (University of Waterloo)
- Ehsan Kamalloo (University of Alberta)
- Abbas Ghaddar (Huawei Noah's Ark Lab)
- Marzieh Tahaei (Huawei Noah's Ark Lab)
- Soheila Samiee (BASF)
- Hamidreza Mahyar (McMaster University)
- Flávio Ávila (Verisk Analytics)
- Peng Lu (University of Montreal)
- Xiaoguang Li (Huawei Noah's Ark Lab)
- Mauajama Firdaus (University of Alberta)
- David Alfonso Hermelo (Huawei Noah's Ark Lab)
- Ankur Agarwal (Huawei Noah's Ark Lab)
- Khalil Bibi (Huawei Noah's Ark Lab)
- Tanya Roosta (Amazon)
- Tianyu Jiang (University of Utah, US)
- Juncheng Yin (Western University, Canada)
- Jingjing Li (Alibaba)
- Meng Cao (McGill and Mila, Canada)
- Wen Xiao (UBC, Canada)
- Chenyang Huang (University of Alberta)
- Sunyam Bagga (Huawei Noah's Ark Lab)
- Mojtaba Valipour (University of Waterloo)
- Lili Mou (University of Alberta)
- Yue Dong (University of California, Riverside)
- Makesh Sreedhar (NVIDIA)
- Vahid Partovi Nia (Huawei Noah's Ark Lab)
- Hossein Rajabzadeh (University of Waterloo)
- Mohammadreza Tayaranian (McGill University)
- Suyuchen Wang (MILA)
- Alireza Ghaffari (Huawei Noah's Ark Lab)
- Mohammad Dehghan (Huawei Noah's Ark Lab)
- Crystina Zhang (University of Waterloo)
- Parsa Kavehzadeh (York University)
- Ali Edalati (Huawei Noah's Ark Lab)
- Nandan Thakur (University of Waterloo)
- Heitor Guimarães (INRS University)
- Amirhossein Kazemnejad (McGill University/MILA)
- Hamidreza Saghir (Microsoft)
- Yuqiao Wen (University of Alberta)
- Ning Shi (University of Alberta)
- Peng Zhang (Tianjin University)
- Arthur Pimentel (INRS University)
