NumPy

The fundamental package for scientific computing with Python

Powerful N-dimensional arrays

Fast and versatile, the NumPy vectorization, indexing, and broadcasting concepts are the de-facto standards of array computing today.
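As a rough illustration of those three concepts, the sketch below uses a small made-up temperature table. The variable names and values are assumptions chosen for the example, not taken from the NumPy documentation.

```python
import numpy as np

# Hypothetical data: two days of readings at three stations, in Celsius.
temps_c = np.array([[12.0, 15.5, 19.0],
                    [22.5, 25.0, 18.5]])

# Vectorization: one expression applies to every element, no explicit loop.
temps_f = temps_c * 9 / 5 + 32

# Indexing: a boolean mask selects the elements that satisfy a condition.
warm = temps_c[temps_c > 18.0]

# Broadcasting: the length-3 vector of column means is stretched across
# both rows of the (2, 3) array before the subtraction.
centered = temps_c - temps_c.mean(axis=0)
```

Here `temps_f` and `centered` keep the `(2, 3)` shape of the input, while `warm` is a flat array of the four readings above 18 degrees.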

Numerical computing tools

NumPy offers comprehensive mathematical functions, random number generators, linear algebra routines, Fourier transforms, and more.
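A minimal sketch touching each of the areas named above might look like the following. The specific inputs (the seed, the 2x2 system, the 5 Hz tone) are assumptions picked to keep the example small.

```python
import numpy as np

# Mathematical functions operate elementwise on arrays.
angles = np.linspace(0.0, np.pi, 5)
sines = np.sin(angles)

# Random number generation: a seeded Generator gives reproducible draws.
rng = np.random.default_rng(seed=42)
noise = rng.normal(loc=0.0, scale=1.0, size=1000)

# Linear algebra: solve the system A @ x = b.
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([9.0, 8.0])
x = np.linalg.solve(A, b)  # x is approximately [2., 3.]

# Fourier transform: recover the frequency of a sampled sine wave.
sample_rate = 64  # samples per second (assumed for this example)
t = np.arange(sample_rate) / sample_rate
signal = np.sin(2 * np.pi * 5 * t)  # 5 Hz tone, one second long
spectrum = np.abs(np.fft.rfft(signal))
freqs = np.fft.rfftfreq(sample_rate, d=1 / sample_rate)
peak_hz = freqs[np.argmax(spectrum)]  # strongest component, 5.0 Hz here
```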

Interoperable

NumPy supports a wide range of hardware and computing platforms, and plays well with distributed, GPU, and sparse array libraries.

Performant

The core of NumPy is well-optimized C code. Enjoy the flexibility of Python with the speed of compiled code.
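To make the contrast concrete, here is a small sketch that computes the same sum of squares twice: once with a Python loop and once with a single NumPy call. The function names are hypothetical, and no timing figures are claimed. Wrapping each call in the standard library's `timeit` is one way to measure the difference on your own machine.

```python
import numpy as np

def sum_of_squares_loop(values):
    """Pure-Python loop: the interpreter handles one element at a time."""
    total = 0.0
    for v in values:
        total += v * v
    return total

def sum_of_squares_numpy(values):
    """Vectorized: the multiply-and-sum runs inside NumPy's compiled code."""
    return float(np.dot(values, values))

data = np.arange(10_000, dtype=np.float64)
loop_result = sum_of_squares_loop(data)
numpy_result = sum_of_squares_numpy(data)
```

Both functions return the same value. The difference is where the per-element work happens.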

Easy to use

NumPy’s high-level syntax makes it accessible and productive for programmers from any background or experience level.

Try NumPy

Use the interactive shell to try NumPy in the browser

"""

To try the examples in the browser:

1. Type code in the input cell and press Shift + Enter to execute

2. Or copy and paste the code, then click the "Run" button in the toolbar

"""

# The standard way to import NumPy:

import numpy as np

# Create a 2-D array, set every second element in

# some rows and find max per row:

x = np.arange(15, dtype=np.int64).reshape(3, 5)

x[1:, ::2] = -99

x

# array([[  0,   1,   2,   3,   4],

#        [-99,   6, -99,   8, -99],

#        [-99,  11, -99,  13, -99]])

x.max(axis=1)

# array([ 4, 8, 13])

# Generate normally distributed random numbers:

rng = np.random.default_rng()

samples = rng.normal(size=2500)

samples

ECOSYSTEM

Nearly every scientist working in Python draws on the power of NumPy.

NumPy brings the computational power of languages like C and Fortran to Python, a language much easier to learn and use. With this power comes simplicity: a solution in NumPy is often clear and elegant.

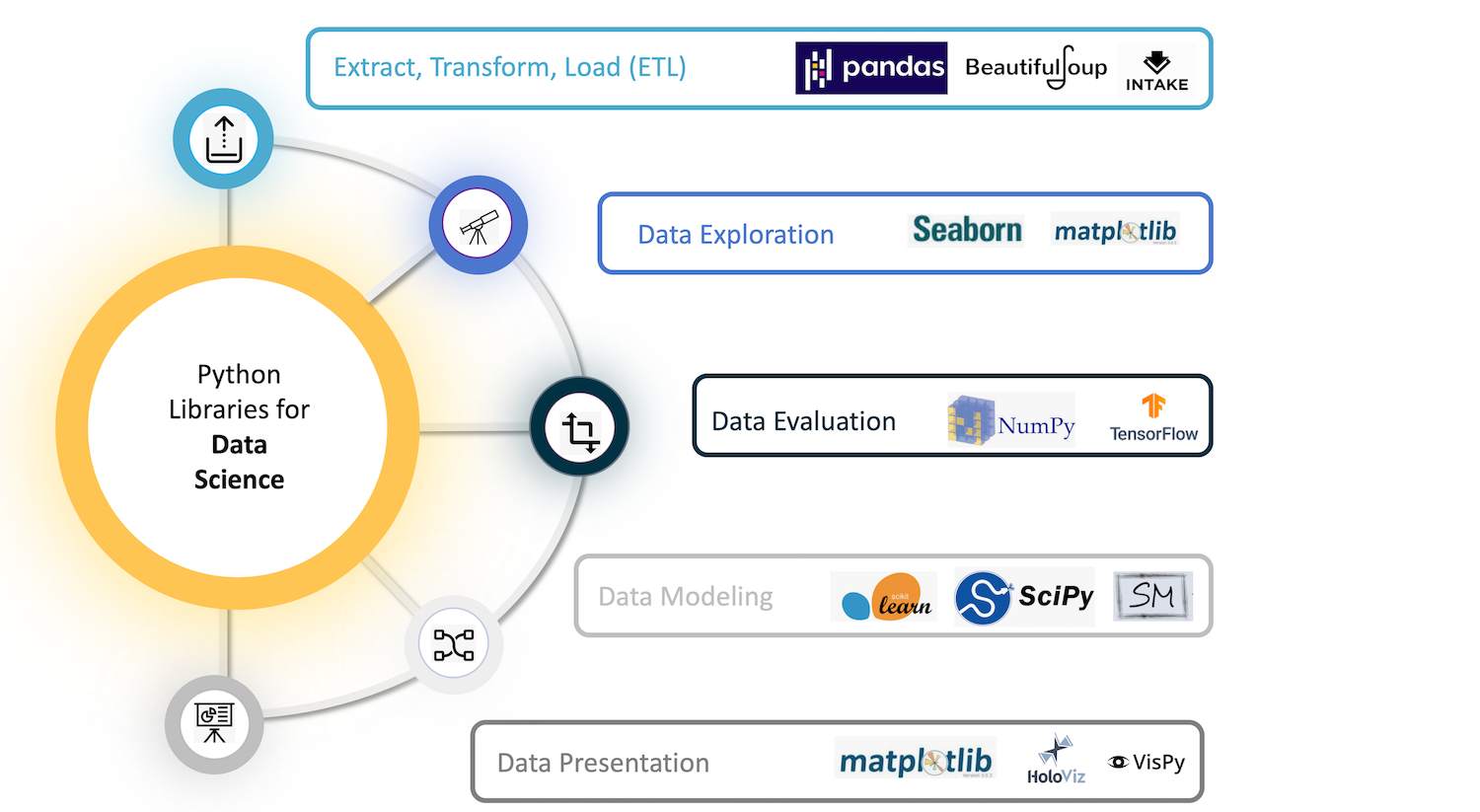

NumPy lies at the core of a rich ecosystem of data science libraries. A typical exploratory data science workflow might look like:

- Extract, Transform, Load: Pandas, Intake, PyJanitor

- Exploratory analysis: Jupyter, Seaborn, Matplotlib, Altair

- Model and evaluate: scikit-learn, statsmodels, PyMC3, spaCy

- Report in a dashboard: Dash, Panel, Voilà

For high data volumes, Dask and Ray are designed to scale. Stable deployments rely on data versioning (DVC), experiment tracking (MLflow), and workflow automation (Airflow, Dagster, and Prefect).

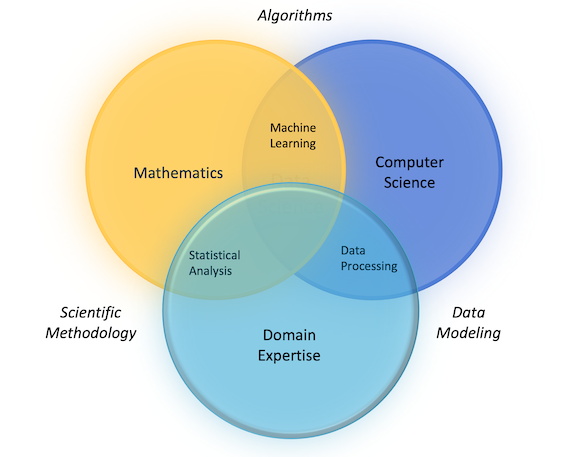

NumPy forms the basis of powerful machine learning libraries like scikit-learn and SciPy. As machine learning grows, so does the list of libraries built on NumPy. TensorFlow’s deep learning capabilities have broad applications — among them speech and image recognition, text-based applications, time-series analysis, and video detection. PyTorch, another deep learning library, is popular among researchers in computer vision and natural language processing.

Ensemble methods such as binning, bagging, stacking, and boosting are statistical techniques among the ML algorithms implemented by tools such as XGBoost, LightGBM, and CatBoost, the last of which is one of the fastest inference engines. Yellowbrick and Eli5 offer machine learning visualizations.
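To give a feel for one of these techniques, the sketch below implements a bare-bones form of bagging (bootstrap aggregating) with NumPy alone. It is an illustration under stated assumptions, not how XGBoost, LightGBM, or CatBoost work internally: the data is synthetic, the base model is a plain least-squares line, and `fit_least_squares` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Synthetic data (assumed for illustration): y = 2x + 1 plus noise.
x = rng.uniform(-1.0, 1.0, size=200)
y = 2.0 * x + 1.0 + rng.normal(scale=0.3, size=x.size)
X = np.column_stack([x, np.ones_like(x)])  # columns: slope term, intercept term

def fit_least_squares(X, y):
    """Return the least-squares coefficients for the linear model X @ coef."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

# Bagging: fit one model per bootstrap resample, then average the models.
n_models = 50
coefs = np.empty((n_models, X.shape[1]))
for i in range(n_models):
    idx = rng.integers(0, x.size, size=x.size)  # sample rows with replacement
    coefs[i] = fit_least_squares(X[idx], y[idx])

bagged_coef = coefs.mean(axis=0)  # close to [2, 1] for this data
```

Averaging over resamples reduces the variance of an unstable base model. With a base model as stable as a straight-line fit the benefit is small, so the example shows only the mechanics.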

NumPy is an essential component in the burgeoning Python visualization landscape, which includes Matplotlib, Seaborn, Plotly, Altair, Bokeh, HoloViz, VisPy, napari, and PyVista, to name a few.

NumPy’s accelerated processing of large arrays allows researchers to visualize datasets far larger than native Python could handle.
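One common pattern, sketched here with assumed sizes and ranges, is to aggregate a large point cloud into a fixed grid of counts before plotting. The plotting library then draws a small image instead of one marker per point.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

# One million 2-D points: too many to draw individually as markers.
points = rng.normal(size=(1_000_000, 2))

# Aggregate into a 200 x 200 grid of counts over a fixed window.
# Points that fall outside the window are not counted.
counts, x_edges, y_edges = np.histogram2d(
    points[:, 0],
    points[:, 1],
    bins=200,
    range=[[-4.0, 4.0], [-4.0, 4.0]],
)
```

The resulting `counts` array can be passed to an image-style plotting function, such as Matplotlib's `imshow`, to render the density.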