The world is relying on a flawed psychological test to fight racism

In 1998, the incoming freshman class at Yale University was shown a psychological test that claimed to reveal and measure unconscious racism. The implications were intensely personal. Even students who insisted they were egalitarian were found to have unconscious prejudices (or “implicit bias” in psychological lingo) that made them behave in small, but cumulatively significant, discriminatory ways. Mahzarin Banaji, one of the psychologists who designed the test and leader of the discussion with Yale’s freshmen, remembers the tumult it caused. “It was mayhem,” she wrote in a recent email to Quartz. “They were confused, they were irritated, they were thoughtful and challenged, and they formed groups to discuss it.”

Finally, psychologists had found a way to crack open people’s unconscious, racist minds. This apparently incredible insight has taken the test in question, the Implicit Association Test (IAT), from Yale’s freshmen to millions of people worldwide. Referencing the role of implicit bias in perpetuating the gender pay gap or racist police shootings is widely considered woke, while IAT-focused diversity training is now a litmus test for whether an organization is progressive.

This acclaimed and hugely influential test, though, has repeatedly fallen short of basic scientific standards.

There’s little doubt we all have some form of unconscious prejudice. Nearly all our thoughts and actions are influenced, at least in part, by unconscious impulses. There’s no reason prejudice should be any different.

But we don’t yet know how to accurately measure unconscious prejudice. We certainly don’t know how to reduce implicit bias, and we don’t know how to influence unconscious views to decrease racism or sexism. There are now thousands of workplace talks and police trainings and jury guidelines that focus on implicit bias, but we still have no strong scientific proof that these programs work.

The implicit bias narrative also lets us off the hook. We can’t feel as guilty or be held to account for racism that isn’t conscious. The forgiving notion of unconscious prejudice has become the go-to explanation for all manner of discrimination, but the shaky science behind the IAT suggests this theory isn’t simply easy, but false. And if implicit bias is a weak scapegoat, we must confront the troubling reality that society is still, disturbingly, all too consciously racist and sexist.

There are various psychological tests purporting to measure implicit bias; the IAT is by far the most widely used. When social psychologists Banaji (now at Harvard University) and Anthony Greenwald of the University of Washington first made the test public almost 20 years ago, the accompanying press release described it as revealing “the roots of” unconscious prejudice in 90-95% of people. It has been promoted as such in the years since then, most vigorously by “Project Implicit,” a nonprofit based at Harvard University and founded by the creators of the test, along with University of Virginia social psychologist Brian Nosek. Project Implicit’s stated aim is to “educate the public about hidden biases”; some 17 million implicit bias tests had been taken online by October 2015, courtesy of the nonprofit.

There are more than a dozen versions of the IAT, each designed to evaluate unconscious social attitudes towards a particular characteristic, such as weight, age, gender, sexual orientation, or race. They work by measuring how quick you are to associate certain words with certain groups.

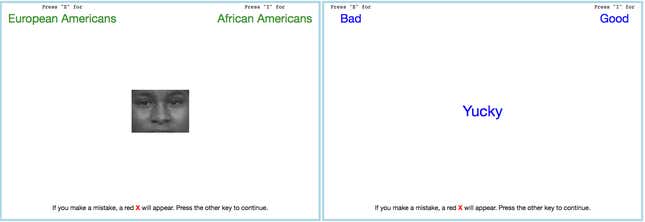

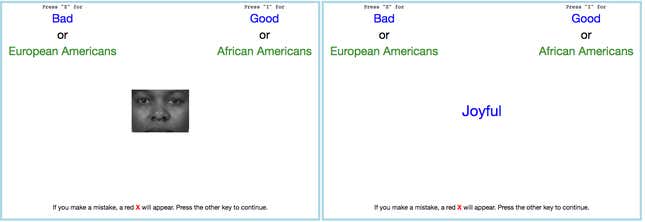

The test that has received the most attention, both within and outside psychology, is the black-white race IAT. It asks you to sort various items: Good words (e.g. appealing, excellent, joyful), bad words (e.g. poison, horrible), African-American faces, and European-American faces. In one stage (the order of these stages varies with each test), words flash by onscreen, and you have to identify them as “good” or “bad” as quickly as possible, by pressing “i” on the keyboard for good words and “e” for bad words. In another stage, faces appear, one at a time, and you have to identify them as African American or European American by pressing “i” or “e,” respectively.

Photo credit: Project Implicit

Then the test shows you both words and faces (separately, one at a time, but within the same stage). You’re told to hit “e” any time you see a European-American face or a good word, and “i” for an African-American face or a bad word. In yet another stage, you must hit “e” for African-American faces or good words, and “i” for European-American faces or bad words.

The slower you are and the more mistakes you make when asked to categorize African-American faces and good words using the same key, the higher your level of anti-black implicit bias—according to the test.
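The scoring idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Project Implicit's actual scoring algorithm, which the article does not describe. The function name `simple_iat_score`, the trial format, and the 600 ms error penalty are all hypothetical choices made for the example.

```python
from statistics import mean, stdev

def simple_iat_score(white_good_trials, black_good_trials, error_penalty_ms=600):
    """Toy latency-difference score (hypothetical, simplified).

    Each trial is a (latency_ms, correct) pair.
    white_good_trials: stage where European-American faces share a key with good words.
    black_good_trials: stage where African-American faces share a key with good words.
    A positive result means the second stage was slower or more error-prone.
    """
    def penalized(trials):
        # Assumption: a mistake is treated as extra response time.
        return [latency + (0 if correct else error_penalty_ms)
                for latency, correct in trials]

    a = penalized(white_good_trials)
    b = penalized(black_good_trials)
    # Scale the gap in mean latency by the spread of all responses,
    # so that generally slow responders are not scored as more biased.
    pooled_sd = stdev(a + b)
    return (mean(b) - mean(a)) / pooled_sd
```

In this sketch, a test-taker who is slower and makes more errors when African-American faces and good words share a key gets a higher positive score, which is the pattern the test interprets as anti-black implicit bias.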

“Implicit bias” became a buzzword largely thanks to claims that the IAT could measure unconscious prejudice. The IAT itself doesn’t purport to increase diversity or put an end to discriminatory managers. But it has certainly been deployed that way, partly due to its creators’ outreach. In 2006, Scientific American praised Banaji for telling investment bankers, media executives, and lawyers how their “buried biases” can cause “mistakes.” “Part of Mahzarin [Banaji]’s genius was to see the IAT’s potential impact on real-world issues,” Princeton University social psychologist Susan Fiske said at the time.

“There’s the idea that we can decrease biases by slightly overhyping the findings,” says Edouard Machery, professor at the Center for Philosophy of Science at the University of Pittsburgh. “I’m not sure it was intentional. Every scientist must persuade other people that what they do is worth doing.”

HR departments quickly picked up the theory, and implicit-bias workshops are now relied on by companies hoping to create more egalitarian workplaces. Google, Facebook, and other Silicon Valley giants proudly crow about their implicit-bias trainings. The results are underwhelming, at best. Facebook has made just incremental improvements in diversity; Google insists it’s trying but can’t show real results; and Pinterest found that unconscious bias training simply didn’t make a difference. Implicit bias workshops certainly didn’t influence the behavior of then-Google employee James Damore, who complained about the training days and wrote a scientifically ill-informed rant arguing that his female colleagues were biologically less capable of working at the company.

Silicon Valley companies aren’t the only ones working on their “implicit bias” problem. Police forces, The New York Times, countless private companies, US public school districts, and universities such as Harvard have also turned to implicit-bias training to address institutional inequality.

Pedestrians in midtown Manhattan.

Image: Kholood Eid for Quartz

There’s a typical format for workplace implicit-bias programs: Instructors first talk about how we all have unconscious prejudice. Then they run through related psychological studies—some of which, such as a commonly cited paper showing resumes with white names get more callbacks than those with non-white names, show prejudice rather than unconscious prejudice. Next, they have participants take the IAT, which purports to reveal their hidden biases, and conclude the program with discussions about how to be aware of and combat behavior driven by such biases.

The latest scientific research suggests there’s a very good reason why these well-meaning workshops have been so utterly ineffectual. A 2017 meta-analysis that looked at 494 previous studies (currently under peer review and not yet published in a journal) from several researchers, including Nosek, found that reducing implicit bias did not affect behavior. “Our findings suggest that changes in measured implicit bias are possible, but those changes do not necessarily translate into changes in explicit bias or behavior,” wrote the psychologists.

“I was pretty shocked that the meta-analysis found so little evidence of a change in behavior that corresponded with a change in implicit bias,” Patrick Forscher, psychology professor at the University of Arkansas and one of the co-authors of the meta-analysis, wrote in an email.

Forscher, who started graduate school believing that reducing implicit bias was a strong way of changing behavior and conducted research on how to do so, is now convinced that approach is misguided. “I currently believe that many (but not all) psychologists, in their desire to help solve social problems, have been way too overconfident in their interpretation of the evidence that they gather. I count myself in that number,” he wrote. “The impulse is understandable, but in the end it can do some harm by contributing to wasteful, and maybe even harmful policy.”

It’s highly plausible that the scientists who created the IAT, and now ardently defend it, believe their work will change the world for the better. Banaji sent me an email from a former student that compared her to Ta-Nehisi Coates, Bryan Stevenson, and Michelle Alexander “in elucidating the corrosive and terrifying vestiges of white supremacy in America.”

Greenwald explicitly discouraged me from writing this article. “Debates about scientific interpretation belong in scientific journals, not popular press,” he wrote. Banaji, Greenwald, and Nosek all declined to talk on the phone about their work, but answered most of my questions by email.

I saw a similar reluctance to criticize implicit bias among friends and colleagues. Taking the test, and buying into the concept of implicit bias, feels both open-minded and progressive.

I first took the IAT a few years ago, long before I was aware of these scientific disputes. It showed I had a moderate bias against African Americans and a slight bias towards associating men with careers and women with family. In other words, I told myself, I was both racist and sexist. It was shocking. Admitting this to myself also felt a little noble: I was recognizing my own involvement in structural inequalities.

Several others have told me they felt similarly when they received their own implicit-bias test results. One friend said her colleagues wouldn’t discuss diversity at all were it not for the implicit-bias workshops. So, as she so bluntly asked: Why was I stirring up shit?

One reason is that, in a world where a widely held conspiracy theory claims that liberals invented climate change, I’m deeply uncomfortable with failing to report on scientific findings simply because they’re politically inconvenient. Even if the IAT is eventually proven solid, there are currently heated academic debates on the subject, reaching levels of “personal antagonism,” says Forscher, and the public deserves to know about these scientific doubts.

There are also serious practical implications. Society is mired in prejudice, and implicit bias workshops attempting to solve the problem could be little more than an extremely well-funded but ineffectual distraction.

Finally, though there are plenty of good intentions behind the public embrace of implicit bias, this enthusiasm also conveniently avoids the uncomfortable alternative: If the science behind implicit bias is flawed, and unconscious prejudice isn’t a major driver of discrimination, then society is likely far more consciously prejudiced than we pretend.

In recent years, a series of studies have led to significant concerns about the IAT’s reliability and validity. These findings, raising basic scientific questions about what the test actually does, can explain why trainings based on the IAT have failed to change discriminatory behavior.

First, reliability: In psychology, a test has strong “test-retest reliability” when a user can retake it and get a roughly similar score. Perfect reliability is scored as a 1, and defined as when a group of people repeatedly take the same test and their scores are always ranked in the exact same order. It’s a tough ask. A psychological test is considered strong if it has a test-retest reliability of at least 0.7, and preferably over 0.8.

Current studies have found the race IAT to have a test-retest reliability score of 0.44, while the IAT overall is around 0.5; even the high end of that range is considered “unacceptable” in psychology. It means users get wildly different scores whenever they retake the test.
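To make the idea of test-retest reliability concrete, here is a minimal Python sketch that compares how a group's scores are ranked across two sessions. It follows the rank-order definition given above by computing a rank correlation. The function names are hypothetical, the sketch assumes no tied scores, and published reliability figures may be computed with other statistics.

```python
def ranks(values):
    # Rank each score from 1 (lowest) to n (highest).
    # Simplification: assumes no two people have the same score.
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result

def pearson(x, y):
    # Standard correlation coefficient between two equal-length lists.
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def retest_reliability(first_session, second_session):
    # Correlate the rank orderings of the same people across two sessions.
    return pearson(ranks(first_session), ranks(second_session))
```

If every person keeps the same position in the ranking on the retake, `retest_reliability` returns 1. If the ranking is shuffled between sessions, the value falls toward 0, which is the direction in which the IAT figures quoted above sit relative to the 0.7 threshold.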

Part (though not all) of these variations can be attributed to the “practice effect”: it’s easy to improve your score once you know how the test works. Psychologists typically counter the influence of “practice effects” by giving participants trial sessions before monitoring their scores, but this doesn’t help the IAT. Scores often continue to fluctuate after multiple sessions, and such a persistent practice effect is a serious concern. “For other aspects of psychology if you have a test that’s not replicated at 0.7, 0.8, you just don’t use it,” says Machery.

The second major concern is the IAT’s “validity,” a measure of how effective a test is at gauging what it aims to test. Validity is firmly established by showing that test results can predict related behaviors, and the creators of the IAT have long insisted their test can predict discriminatory behavior. This point is absolutely crucial: after all, if a test claiming to expose unconscious prejudice does not correlate with evidence of prejudice, there’s little reason to take it seriously.

In Blindspot, a 2013 book aimed at general audiences, Banaji and Greenwald wrote:

[T]he automatic White preference expressed on the Race IAT…predicts discriminatory behavior even among research participants who earnestly (and, we believe, honestly) espouse egalitarian beliefs. That last statement may sound like a self-contradiction, but it’s an empirical truth. Among research participants who describe themselves as racially egalitarian, the Race IAT has been shown, reliably and repeatedly, to predict discriminatory behavior that was observed in the research.

So it came as a major blow when four separate meta-analyses, undertaken between 2009 and 2015 and each examining between 46 and 167 individual studies, all showed the IAT to be a weak predictor of behavior. Two of the meta-analyses focus on the race IAT while two examine the IAT’s links with behavior more broadly, but all four show weak predictive abilities.

Proponents of the IAT tend to point to individual studies showing strong links between test scores and racist behavior. Opponents counter by highlighting those that, counterintuitively, show a link between biased IAT scores and less discriminatory behavior. They may quibble, but single studies are no longer considered compelling evidence in psychology. The field, wracked by a replication crisis that found key results of single studies often cannot be recreated, now acknowledges that any one study is fallible. “People [who continue to believe in the validity of the IAT] are looking at the one or two studies that seem to support their view,” says Machery.

The four meta-analyses undertaken so far suggest there’s little use for the IAT outside of academia. Forscher says that while the test may reflect a psychological process that’s interesting to researchers, he’s “not very confident at all” that it measures a thought process that causes real-life discrimination. “I don’t think that working scientists should completely abandon the IAT in their lab studies,” he wrote in an email. “At the same time, I don’t think that the race IAT should be used to claim that implicit bias is causing disparities in police use of force, for example.”

Machery argues that if the IAT cannot meaningfully predict behavior, the results of the test are largely irrelevant. He compares someone with a low anti-black IAT score but who behaves in a prejudiced way to someone who insists that they’re courageous but who behaves in a consistently cowardly manner. “You would not say that he’s explicitly courageous and implicitly a coward,” he says. “You would say he’s a coward.”

No psychologist or neuroscientist can convincingly point to a clear divide between conscious and unconscious thought. And so psychology’s attempt to solve discrimination by delineating between an amorphous collection of conscious and unconscious biases is both simplistic and misguided.

“I think a lot of habits we have work like muscle memory; we do them automatically without thinking about it explicitly,” says Luvell Anderson, philosophy professor at the University of Memphis. “But it’s not necessarily clear to me that those habits are unconscious in any deep or significant way.”

“Finding a way to measure attitudes that go around consciousness has always been a goal of social psychology,” adds Machery. “Again and again we fail.”

Several papers suggest the IAT does not measure truly unconscious thought. Even if people insist they’re egalitarian, they show awareness of their implicit biases, and seem able to predict the results they get from various IATs.

When Banaji and Greenwald first came up with the phrase “implicit bias,” they claimed it reflected thinking that is “unavailable to self-report or introspection.” The research showing that people are aware of their implicit biases suggests this definition is suspect.

Though there are countless articles and academic references claiming the test reveals unconscious thinking, Nosek says the IAT is not strictly about unconscious bias. “The use of the term ‘implicit’ in our field, for example, is deliberate to avoid specific commitment about consciousness,” he wrote in an email. “Implicit,” he explained, describes a “variety of concepts” including “unaware, unintentional, fast, efficient, unconscious.”

But, ultimately, the effort to avoid “commitment about consciousness” can easily be interpreted as an attempt to use scientific jargon to obscure meaning.

The psychologists behind the IAT have emphasized the importance of unconscious bias in part by minimizing the role of conscious prejudice.

In Blindspot, Greenwald and Banaji wrote:

[G]iven the relatively small proportion of people who are overtly prejudiced and how clearly it is established that automatic race preference predicts discrimination, it is reasonable to conclude not only that implicit bias is a cause of Black disadvantage but also that it plausibly plays a greater role than does explicit bias in explaining the discrimination that contributes to Black disadvantage.

In an email, though, Greenwald acknowledged that there’s currently no perfect assessment of explicit bias. The “relatively small proportion of people” referenced in the above quote, he wrote, are the few Americans who voiced support for segregation and disapproval of racial intermarriage, equal employment, and African-American presidential candidates in national surveys. These questionnaires cannot identify those who hide their prejudice and only express such views “in a private setting with like-minded others, or only to themselves in a private diary, or only in their thoughts,” added Greenwald. The number of people who keep their prejudices private “must be higher than the ‘small proportion’ in the quote from Blindspot,” he wrote. “But how much larger—is it 20%? 25%? More?”

Academics know that self-reported attitudes are not a strong assessment of beliefs. Forscher believes much of the early excitement around the IAT “came from a feeling that self-report measures are untrustworthy and that we need something better that gets at people’s ‘true’ attitudes.”

In Blindspot, Banaji and Greenwald estimate some 40% of white Americans are “uncomfortable egalitarians” who are more likely to help white people than black—in situations ranging from job interviews to first aid response—but are “earnestly” unaware of their prejudiced behavior.

It’s certainly plausible that some section of the population is prejudiced and completely oblivious to this fact. But there’s also a significant number of people who are prejudiced, know that they’re not always egalitarian, but don’t acknowledge their biases in psychological questionnaires or in conversation. Even if they don’t agree with explicitly bigoted views, most should recognize that they don’t behave in a completely race- or gender-blind manner. Many are prejudiced but hope to escape the label of “racist” or “sexist.” And the theory of implicit bias has handed them an excuse.

“It puts some space between the actor and the act,” says Anderson. “If you can say, ‘Yes, I did some act that might be judged racist or sexist or homophobic but I didn’t do so intentionally or knowingly, it was the result of some bias that I wasn’t explicitly aware of,’ that seems to work as a way of distancing oneself from full responsibility for the action.”

In the face of current evidence, Forscher wrote, “most implicit-bias researchers no longer believe that implicit measures are assessing an attitude that is more ‘true’ than self-report measures.” Indeed, the meta-analyses showed that the IAT is no better at predicting discriminatory behavior (including microaggressions) than explicit measures of bias, such as the Modern Racism Scale, which evaluates racism simply by asking participants to state their level of agreement with statements like, “Blacks are getting too demanding in their push for equal rights.”

Thanks to psychology’s focus on implicit bias, small discriminatory acts are all too often labelled as unconscious, offering a convenient scapegoat to those who claim they aren’t really prejudiced. This happens in academic literature, where researchers are quick to point to discrimination as signs of implicit bias, regardless of whether there’s any evidence to show that such behavior is unconscious. It’s also a disturbing feature of everyday conversations.

When I’ve experienced (subtler) forms of prejudice, such as a boyfriend who asked me to iron his shirts, a very senior editor who made sexual comments to me, and remarks apropos of nothing from male friends and acquaintances about my breasts, the perpetrators were quick to suggest that their behavior was utterly unintentional, that they were totally consciously unaware of their sexism.

Surely it shouldn’t take much reflection to recognize the prejudice in their actions. Anyone who carefully considers their behavior should be able to acknowledge that they don’t treat men and women perfectly equally. Their prejudice is not truly “introspectively unavailable” to them.

That, for me, raises the uncomfortable question: What about my own prejudices? After researching this article, I retook both the black-white race IAT and the gender-career IAT and was told I had no implicit biases. Given the significant scientific doubts about the test, I didn’t think I could take this as happy confirmation that I’m racism- and sexism-free.

It’s deeply upsetting to admit, but I don’t think my actions are perfectly egalitarian. And though it would be easy to blame this on societal influences, if I perpetuate inequalities in my behavior, then I bear some responsibility. This doesn’t feel as noble as my previous (since-questioned) realization that I have implicit biases. I feel disgusted with myself, and am aware that simply recognizing my own prejudiced behavior does nothing to help those who face systematic discrimination.

Perhaps, I suggested to Anderson, a person holding onto such insidious prejudices could be compared to a mother who insists that she loves her two children equally, but who, deep down, knows she acts with favoritism towards one of them. He agreed the analogy works: Many of those who insist they’re totally egalitarian know, if they’re really honest, they don’t treat everyone equally.

None of the implicit-bias training-program instructors I spoke with were able to point to definitive positive results from their workshops, and they were largely unaware of the scientific controversies.

Holly Brittingham, head of talent and development at FCB, one of the largest global advertising networks, says more than 1,100 of the company’s employees have gone through its implicit-bias training, though there’s been no significant change in diversity since the program started. “That’s really still a challenge,” she says. The workshop, she insists, is “rooted in neuroscience, so it’s got a lot of validity to it.”

At Fair and Impartial Policing, which has delivered implicit-bias training to hundreds of police forces in the US and Canada, criminologist Lorie Fridell did know about the shaky evidence but said she hoped psychologists would eventually find a stronger link between the IAT and behavior. Though the effects of her police-force training had not been studied, “everything in our training is based on science,” she added. After we spoke, Fridell sent me a company memo claiming the existing meta-analyses show mixed results, when in fact they all show a weak link between the IAT and behavior.

In 2014, following the shooting of Michael Brown, an unarmed black teenager in Ferguson, Missouri, the US Department of Justice set up a three-year, $4.75 million program to improve public relations with the police. The plan relied heavily on implicit-bias training. Though racist emails passed around the police department showed plenty of explicit prejudice in Ferguson, a statement published when the program launched announced plans to reduce implicit bias “where actual racism is not present.”

Phillip Atiba Goff, principal partner at the justice department program, professor in policing equity at John Jay College of Criminal Justice, and president of the New York-based Center for Policing Equity, says that though implicit bias is a feature of his work, his primary focus is behavior. “I’ve been black my entire life, with the possible exception of a week in college I took off,” says Goff. “I don’t care about the hearts and minds of the people who do racist behaviors towards me. I want the behaviors to stop. If you want to be a good scientist, there’s a difference between affecting bias and affecting the behavior.”

Why, then, bring in implicit bias at all? Goff’s work points to studies showing police officers with high anti-black IAT scores are quicker to shoot at African Americans. That finding, though, has been countered by research showing the exact opposite.

A police officer watches pedestrians in midtown Manhattan.

Image: Kholood Eid for Quartz

Nevertheless, Goff, who has developed implicit-bias police-training programs, insists the academic debates do not affect his own field research. Silicon Valley’s weak results, he says, can be explained simply: “The problems with the trainings is not the science, the problems with the trainings is the trainings.”

Imperfect scientific lab results, though, certainly cast doubt on how such theories are applied in the field. Plus, as Goff says later in our conversation, “Translating from the science to the field is incredibly difficult.”

Greenwald himself dismisses methods that claim to interrupt implicit biases, such as slowing down behavior so that the “conscious mind” can override unconscious impulses. “There is no scientific support for usefulness of these techniques,” he wrote in an email.

In public talks, Greenwald said he emphasizes ways of avoiding the effects of implicit bias. These methods, such as blind evaluations that obscure the race and gender of applicants, simply address prejudice, whether conscious or unconscious. Greenwald acknowledged that they do not focus on implicit bias: “The remedies I advocate are equally suitable for ALL forms of unintended discrimination,” he wrote in an email. “They are not limited to unintended discrimination due to implicit bias.” (“Unintended discrimination,” explained Greenwald, includes “institutional [structural] discrimination, ingroup favoritism, and implicit bias.”)

Both academic research on discrimination and workplace trainings could be considerably more powerful, argued several psychologists I spoke to, if they focused on behavior itself instead of trying to peer into the unconscious mind.

Behavioral targets will vary according to the institution. For example, diversity in Silicon Valley will never improve “as long as they continue to hire from the pools they hire from,” says Gregory Mitchell, a law professor at University of Virginia School of Law and co-author of several reports critical of the IAT. Meanwhile, he adds, “I suspect [police shootings have] much more to do with the local police policies and their training of officers than their implicit attitudes. When we portray police violence as a product of implicit bias without any real evidence to support that, we’re distracting ourselves from other possible causal factors.”

Hiring goals, diverse senior management, and penalties for those who repeatedly exhibit prejudiced behavior—rather than a soft talk about how we’re all biased but it’s not really our fault because it’s unconscious—would be effective alternative strategies for those serious about changing institutional inequality.

In a 2014 paper by Banaji, Greenwald, and Nosek, the authors seemed to acknowledge the concerns raised about the test: “IAT measures have two properties that render them problematic to use to classify persons as likely to engage in discrimination,” they wrote, pointing to the test’s poor predictive abilities and test-retest reliability.

But when I asked them directly, Greenwald and Banaji doubled down on their earlier claims. “The IAT can be used to select people who would be less likely than others to engage in discriminatory behavior,” wrote Greenwald in an email.

The meta-analyses and other psychologists I spoke to strongly disagree: “There is also little evidence that the IAT can meaningfully predict discrimination,” notes one paper, “and we thus strongly caution against any practical applications of the IAT that rest on this assumption.”

There remains the question of whether the IAT’s predictive abilities could be more meaningful in a broader context. Nosek emphasized that a weak link between the test and behavior is still a reliable correlation. The IAT “provides very little information about what the person is likely to do” for any single instance of real-life individual behavior, he wrote in an email, but the test’s predictive abilities could become more significant across large populations or periods of time.

To some extent, even if implicit bias has a large-scale impact, these possible cumulative effects are beside the point. The IAT is used in thousands of real-world workplace trainings to highlight individual discriminatory behavior; if the scientific evidence does not support this use, such practices urgently need to be revisited.

Nosek, whose Reproducibility Project played a key role in bringing the replication crisis to light, claims his implicit bias work demonstrates the standards he calls for across psychology. The research is transparent, the data widely available, and there haven’t been major findings that couldn’t be replicated, he noted in an email. Nosek also qualified the importance of meta-analyses as a standard of proof, writing, “If the literature is highly biased, the conclusions of a meta-analysis will be highly biased too.” Meta-analyses can contain errors and are certainly not perfect (as with all scientific research, the findings are iterative rather than definitive), but they provide a powerful summary of knowledge, and are one of the strongest forms of evidence in psychology.

Overwhelmingly, the replication crisis highlights the flaws in assuming that any scientific research implies that findings have been definitively “proven.” Scientific truths evolve as further evidence and context is gathered. That is precisely why academics should be extremely cautious in applying their work outside the lab when their research is in early stages. Scientists could well still figure out precisely how our unconscious makes us more prejudiced, and manage to reduce discriminatory behavior by tackling these unconscious biases. But they don’t have that knowledge yet.

One likely reason implicit-bias testing and training became so popular is that it’s socially unacceptable to be seen as prejudiced. Discrimination still clearly exists; we needed an explanation; implicit bias provided one. It’s personally convenient to recast subtle forms of prejudice as unconscious bias. That doesn’t make it true.

There are further points and counterpoints that could not be fully explored in this article. There’s the critique that the IAT measures awareness of social status rather than biases; the one that suggests any link between implicit bias and inequality could be explained by reverse causation, whereby a more unequal society leads to poorer IAT scores; and the one arguing the test became so popular in academia because of publication bias (any test that gives positive results tends to get more attention in the sciences, as it’s more likely to be accepted in journals than tests with negative or inconclusive results).

Implicit bias research is mired in uncertainties, and the existing evidence neither definitively proves nor disproves current theories on the subject. Calvin Lai, director of research at Harvard’s Project Implicit and professor of psychological and brain sciences at Washington University-Saint Louis, notes that it’s extremely difficult to prove a test predicts behavior, and future larger studies could well find stronger evidence to bolster the IAT. For example, scientific confidence in the big five personality traits—openness, conscientiousness, extroversion, agreeableness, and neuroticism—has grown as more studies have confirmed that tests assessing these characteristics can effectively predict behavior.

In the meantime, how should government and corporate implicit-bias programs respond to the scientific uncertainty surrounding the IAT?

Lai says he worries about “throwing out the baby with the bathwater.” One clear benefit of the IAT is it helps people realize that, regardless of what they tell themselves, they are not necessarily bastions of equality. The personal responsibility I and others felt in discovering our own implicit biases can help motivate more considered, unprejudiced behavior. “A lot of other evidence focuses on large, system-wide patterns and so it’s easy to tell yourself, ‘Well that’s everyone else,’” says Lai. “I think demonstrations like the IAT show that no, it’s not just everyone else. It’s you as well.”

Image: Pedestrians in midtown Manhattan. (Kholood Eid for Quartz)

Raising personal awareness with a highly flawed test, though, opens the door for those eager to resist such conversations to claim that, if the IAT itself isn’t valid, then prejudice itself is a myth.

If we really want to eliminate discrimination, discussions of implicit bias should play a smaller role in sparking personal responsibility. The theory should be presented with the appropriate caveats, and as only the beginning of a conversation. The current hype around implicit bias, which overstates its role in both causing and combating discriminatory behavior, is both unwarranted and unhelpful.

Instead of looking to implicit bias to eradicate prejudice in society, we should consider it an interesting but flawed tool. We need to acknowledge the limitations, to look for other tangible ways to reduce inequality, and to admit that our colleagues, our friends, and we ourselves might not just be implicitly biased, but might have explicitly racist and sexist tendencies. We should ask people to consciously recognize their prejudicial behavior, and take responsibility for it. And we certainly should stop assuming our unconscious will be the key to solving discrimination.

“A lot of folks see the IAT as a golden path to the unconscious, a tool that perfectly captures what’s going on behind the scenes and it’s not,” says Lai. “It’s a lot messier than that. The truth, as often, is a lot more complicated.”