Computers can now paint like Van Gogh and Picasso

Computers are learning how to write sonnets and compose classical music, and now they’re mastering another high art form: painting. Researchers from the University of Tübingen in Germany recently posted a paper to arXiv, “A Neural Algorithm of Artistic Style,” describing a new system that can interpret the styles of famous painters and turn a photograph into a digital painting in those styles.

The researchers’ system uses a deep artificial neural network, a form of machine learning loosely modeled on the way the brain picks out patterns. A deep neural network was also behind Google’s crazy Deep Dream system, which turned ordinary images into digital psychedelic fever dreams made up of dogs and eyeballs. The Tübingen team’s system builds on a similar idea, with the craziness toned down a little. Rather than being trained on each artist, it uses a network already trained to recognize objects in photos, and it captures how a given painting uses color, shape, line, and brushstroke by measuring which of the network’s visual features tend to show up together. It can then reinterpret a regular image in that painting’s style.
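To make “which features show up together” a bit more concrete: the paper represents style as a Gram matrix, which sums the products of every pair of feature channels over all positions in the image. The minimal sketch below assumes raw RGB color channels stand in for the network’s learned feature maps (an illustrative simplification, not the paper’s setup), and the function name `style_representation` is hypothetical. It shows why this kind of measurement captures style rather than layout: scrambling where the pixels sit leaves it unchanged.

```python
import numpy as np

def style_representation(image):
    """Gram matrix of an (H, W, C) array: channel-by-channel products
    summed over every spatial position. Raw color channels are a
    stand-in (assumption) for a neural network's feature maps."""
    features = image.reshape(-1, image.shape[-1]).T   # (C, H*W)
    return features @ features.T                       # (C, C)

rng = np.random.default_rng(0)
painting = rng.random((4, 4, 3))

# Shuffle the pixel positions; the Gram matrix only records which
# channels occur together, not where, so it does not change.
shuffled = rng.permutation(painting.reshape(-1, 3)).reshape(painting.shape)
print(np.allclose(style_representation(painting), style_representation(shuffled)))  # prints True
```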

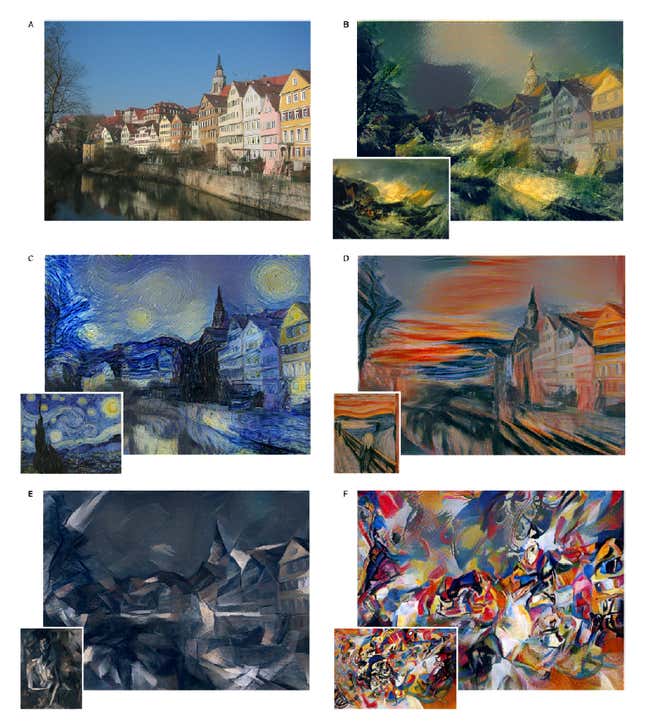

Image: arXiv/A Neural Algorithm of Artistic Style/Gatys, et al.

The main test was a picture of a row of houses in Tübingen overlooking the Neckar River. The researchers gave their system this photo along with an image of a painting representative of a famous artist’s style, and the system then tried to render the houses in that artist’s style. They tried a range of artists, including Van Gogh, Picasso, Turner, Edvard Munch, and Wassily Kandinsky, with varying degrees of success. In some cases the result looks very much like how that artist might have painted the row houses. But the system works solely from the single input painting, and not every Van Gogh looks like “The Starry Night.”
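The conversion itself is an optimization: starting from white noise, the paper adjusts the pixels of a new image until its content matches the photo and its style matches the painting. Below is a rough NumPy sketch of that loop under heavy simplifying assumptions. Raw RGB pixels stand in for the pretrained network’s features (so “style” here shrinks to color correlations), there is only one layer, plain gradient descent stands in for the optimizer the authors used, and the names `toy_transfer` and `loss_and_grad` and the default `alpha`, `beta`, and `lr` values are hypothetical.

```python
import numpy as np


def gram(f):
    """Channel-by-channel correlations; f has shape (N channels, M positions)."""
    return f @ f.T


def loss_and_grad(x, p, a_gram, alpha, beta):
    """Weighted content + style loss for one toy 'layer', plus its gradient in x."""
    n, m = x.shape
    diff = x - p                                      # content mismatch vs. the photo
    content = 0.5 * np.sum(diff ** 2)
    g_diff = gram(x) - a_gram                         # style mismatch vs. the painting
    style = np.sum(g_diff ** 2) / (4 * n**2 * m**2)
    grad = alpha * diff + beta * (g_diff @ x) / (n**2 * m**2)
    return alpha * content + beta * style, grad


def toy_transfer(photo, painting, alpha=1.0, beta=1e3, steps=500, lr=0.01, seed=0):
    """Hypothetical toy: raw RGB pixels stand in for a pretrained network's
    features. Assumes photo and painting are (H, W, C) arrays of the same shape."""
    c = photo.shape[-1]
    p = photo.reshape(-1, c).T                        # (C, H*W) "content features"
    a_gram = gram(painting.reshape(-1, c).T)          # style target from the painting
    x = np.random.default_rng(seed).random(p.shape)   # start from white noise
    history = []
    for _ in range(steps):
        loss, grad = loss_and_grad(x, p, a_gram, alpha, beta)
        history.append(loss)
        x = x - lr * grad                             # plain gradient descent step
    return x.T.reshape(photo.shape), history


rng = np.random.default_rng(1)
photo = rng.random((8, 8, 3))
painting = rng.random((8, 8, 3)) * np.array([1.0, 0.3, 0.3])  # a reddish palette
result, history = toy_transfer(photo, painting)
print(f"loss: {history[0]:.2f} -> {history[-1]:.2f}")
```

Setting `beta` to zero makes the image simply converge back to the photo; raising it pulls the colors toward the painting’s palette, a pixel-level echo of the sliding scale the researchers describe.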

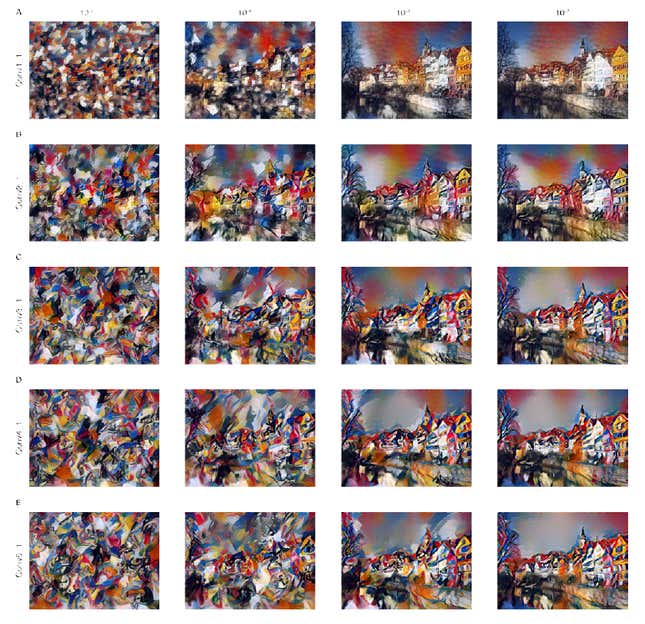

A sliding Kandinsky scale.

Image: arXiv/A Neural Algorithm of Artistic Style/Gatys, et al.

The system has a sliding scale that sets how much of the original photo is kept versus how strongly the artist’s style is applied. For example, the researchers ran a style meant to match Kandinsky’s work at several levels of intensity. As the style gets stronger, the image eventually dissolves into a series of unrecognizable color blotches, though Kandinsky may not have been entirely opposed to that.
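In the paper’s formulation, as we read it, this slider is the ratio between two weights, $\alpha$ and $\beta$, in a single objective that the generated image $\vec{x}$ is optimized to minimize, where $\vec{p}$ is the photograph and $\vec{a}$ is the painting. Here $F^l$ and $P^l$ are the network’s feature responses at layer $l$ for $\vec{x}$ and $\vec{p}$, $G^l$ and $A^l$ are the Gram matrices of $\vec{x}$ and $\vec{a}$ at that layer, $N_l$ is the number of filters, $M_l$ the number of positions, and $w_l$ a per-layer weight:

$$
\mathcal{L}_{\text{total}}(\vec{p}, \vec{a}, \vec{x}) = \alpha\,\mathcal{L}_{\text{content}}(\vec{p}, \vec{x}) + \beta\,\mathcal{L}_{\text{style}}(\vec{a}, \vec{x})
$$

$$
\mathcal{L}_{\text{content}} = \frac{1}{2}\sum_{i,j}\left(F^l_{ij} - P^l_{ij}\right)^2,
\qquad
G^l_{ij} = \sum_k F^l_{ik} F^l_{jk},
\qquad
\mathcal{L}_{\text{style}} = \sum_l w_l \frac{1}{4 N_l^2 M_l^2} \sum_{i,j}\left(G^l_{ij} - A^l_{ij}\right)^2
$$

Lowering $\alpha/\beta$ gives the style term more weight, so the result drifts further from the photo until little more than the painting’s colors and textures remain.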

This system, like many neural networks that attempt to mimic human creativity, raises the question of what it actually means to create. The team’s paper is under consideration for publication in Nature Communications, according to Motherboard. Right now it’s essentially a really, really complicated Instagram filter, adding a painting’s style to a photo, but it could pave the way to a computer vision system that truly understands how humans create. As the paper puts it: “Our work offers a path forward to an algorithmic understanding of how humans create and perceive artistic imagery.”

And there are other systems that are already learning how to create in much the same way humans are taught. The best musicians learn theory and styles, and then break the rules to create original works of art. MIT lecturer Donya Quick’s system is doing just that, going a step further than mimicking artistic styles and actually generating entirely new pieces of music (albeit still within conventional classical music forms).

Does this mean human creativity will one day be replaced by computer programs that can spit out art the way pharmacies spit out one-hour photos? Perhaps, but creating a computer as powerful and complex as the human brain is a daunting task that we’re quite far from completing. In the meantime, Instagram could definitely use a few new filters.