BaseModel vs HSTU for sequential recommendations

In February 2024, a preprint titled "Actions Speak Louder than Words: Trillion-Parameter Sequential Transducers for Generative Recommendations" [1] was posted to arXiv by Meta AI researchers. The preprint introduced a novel recommender called HSTU, short for “Hierarchical Sequential Transduction Units”, promising new state-of-the-art results on sequential recommendation tasks as well as scalability to exceptionally large datasets.

Overview of HSTU

HSTU is yet another attempt at adapting (modified) Transformers to generative recommendation, after DeepMind’s TIGER model (benchmarked in a previous post). The most interesting properties of Meta AI’s HSTU architecture are:

- Pointwise Aggregated Attention:
  - Uses a pointwise normalization mechanism instead of softmax normalization, making it suitable for non-stationary vocabularies in streaming settings.
  - Captures the intensity of user preferences and engagements effectively.
- Leveraging Sparsity:
  - An efficient attention kernel designed for GPUs transforms attention computation into grouped GEMMs.
  - Stochastic Length (SL) algorithmically increases the sparsity of user history sequences, reducing computational cost without degrading model quality.
- Memory Efficiency:
  - Reduces the number of linear layers outside of attention from six to two, significantly reducing activation memory usage.
  - Employs a simplified, fused design that reduces activation memory usage compared to standard Transformers.
- Training and Inference Efficiency:
  - Is up to 1.5x and 15.2x more efficient in training and inference, respectively, compared to Transformer++.
  - Allows networks over 2x deeper to be built, thanks to the reduced activation memory usage.
- Performance:
  - Outperforms standard Transformers and popular Transformer variants such as Transformer++ in ranking tasks.
  - Demonstrates significant improvements in both traditional sequential settings and industrial-scale streaming settings.
- Stochastic Length (SL):
  - Selects input sequences so as to maintain high sparsity and reduce training costs.
  - Significantly outperforms existing length extrapolation techniques, making it highly effective for large-scale recommendation systems.
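The Stochastic Length idea can be sketched in a few lines. This is a minimal sketch modeled on our reading of the SL rule in [1], not Meta AI's implementation: for a maximum sequence length N and a parameter α assumed to lie in (1, 2], histories no longer than N^(α/2) are kept whole; longer ones are kept whole with probability N^α / n² and otherwise replaced by an order-preserving random subsample of length N^(α/2). The function name `stochastic_length` and the uniform choice of subsample positions are our assumptions.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def stochastic_length(history: Sequence[T], max_len: int, alpha: float,
                      rng: random.Random) -> List[T]:
    """Hypothetical Stochastic Length (SL) sampler, modeled on our reading of [1].

    history: one user's interactions in chronological order.
    max_len: maximum sequence length N.
    alpha:   sparsity parameter, assumed to lie in (1, 2].
    """
    n = len(history)
    threshold = int(max_len ** (alpha / 2))  # N^(alpha/2), rounded down
    if n <= threshold:
        # Short histories are always kept in full.
        return list(history)
    # Long histories survive intact only with probability N^alpha / n^2.
    if rng.random() < max_len ** alpha / n ** 2:
        return list(history)
    # Otherwise keep a random subsample of length N^(alpha/2), preserving
    # chronological order (uniform sampling is our assumption).
    positions = sorted(rng.sample(range(n), threshold))
    return [history[i] for i in positions]


rng = random.Random(42)
long_history = list(range(100))
sampled = stochastic_length(long_history, max_len=100, alpha=1.6, rng=rng)
print(len(sampled))  # either 39 (subsampled) or 100 (kept whole)
```

Because most long histories are shortened while short ones are untouched, the expected attention cost per batch drops without discarding any user entirely.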

Details of HSTU Architecture

HSTU is built from a few interesting components:

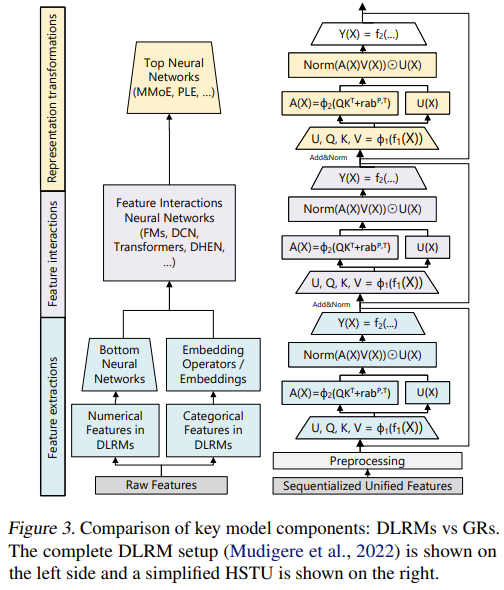

Source: [1]
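To make the pointwise aggregated attention more concrete, here is a minimal NumPy sketch of a single HSTU-style layer as we understand it from [1]: one linear projection followed by SiLU produces U, V, Q and K; attention weights are SiLU(QK^T) under a causal mask instead of a softmax; and the normalized attention output is gated elementwise by U before a second linear projection. The relative attention bias and the residual connection are omitted, the 1/n scaling of the attention weights is our assumption, and `hstu_layer` with its weight shapes is hypothetical.

```python
import numpy as np


def silu(x: np.ndarray) -> np.ndarray:
    """SiLU activation, x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def layer_norm(x: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Parameter-free layer normalization over the last axis."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def hstu_layer(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Hypothetical sketch of one HSTU-style layer (no relative bias, no residual).

    x:  (n, d) sequence of event embeddings.
    w1: (d, 4d) projection producing U, V, Q, K.
    w2: (d, d) output projection.
    """
    n, _ = x.shape
    u, v, q, k = np.split(silu(x @ w1), 4, axis=-1)
    # Pointwise aggregated attention: SiLU scores instead of a softmax,
    # scaled by 1/n (assumption) and masked so position i only sees j <= i.
    weights = silu(q @ k.T) / n
    weights = weights * np.tril(np.ones((n, n)))
    attended = weights @ v
    # Normalize, gate elementwise with U, then project.
    return (layer_norm(attended) * u) @ w2


rng = np.random.default_rng(0)
n, d = 6, 8
x = rng.normal(size=(n, d))
y = hstu_layer(x, rng.normal(size=(d, 4 * d)) * 0.1, rng.normal(size=(d, d)) * 0.1)
print(y.shape)  # (6, 8)
```

Since the attention weights are not normalized to sum to one, the magnitude of the aggregated output can grow with the number and strength of relevant past events, which is the property the preprint links to capturing engagement intensity.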

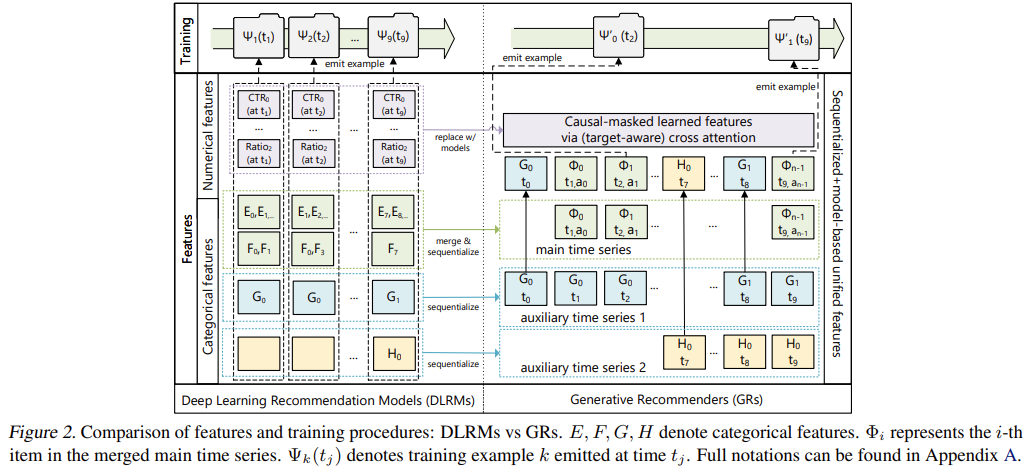

The HSTU model utilizes an intricate setup of representing categorical features as auxiliary events in a time-series.

This is best illustrated by the following diagram from the original preprint:

Source: [1]

On the left-hand side is a classic Deep Learning Recommender Model, while on the right side is the generative causal setup proposed by Meta AI. It can be seen how categorical features are transformed into auxiliary events which are then incorporated into the main time-series.
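A minimal sketch of folding categorical features into the event sequence, assuming features arrive as timestamped updates: each update becomes an auxiliary event in the same chronological stream as the engagements, and repeated values of a slowly changing feature are dropped so that only changes are emitted. The `Event` type, the tie-breaking rule (auxiliary events first at equal timestamps) and the compression rule are illustrative choices, not details taken from [1].

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Event:
    timestamp: int
    kind: str   # "item" for engagements, otherwise the categorical feature name
    value: str


def merge_auxiliary_events(
    engagements: List[Tuple[int, str]],
    feature_updates: List[Tuple[int, str, str]],
) -> List[Event]:
    """Hypothetical helper: fold feature updates into the engagement stream.

    engagements:     (timestamp, item_id) pairs.
    feature_updates: (timestamp, feature_name, value) triples.
    """
    events: List[Event] = []
    last_value: Dict[str, str] = {}
    for ts, name, value in sorted(feature_updates):
        # Emit an auxiliary event only when the feature value actually changes.
        if last_value.get(name) != value:
            events.append(Event(ts, name, value))
            last_value[name] = value
    events.extend(Event(ts, "item", item) for ts, item in engagements)
    # Python's sort is stable, so at equal timestamps the auxiliary events
    # (appended first) stay ahead of the engagements.
    return sorted(events, key=lambda e: e.timestamp)


stream = merge_auxiliary_events(
    engagements=[(1, "movie_a"), (3, "movie_b"), (5, "movie_c")],
    feature_updates=[(0, "city", "Paris"), (2, "city", "Paris"), (4, "city", "Lyon")],
)
for event in stream:
    print(event.timestamp, event.kind, event.value)
```

The repeated "Paris" update at t=2 is dropped, so the model only sees a feature event when the context actually changes.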

HSTU’s Benchmark Results

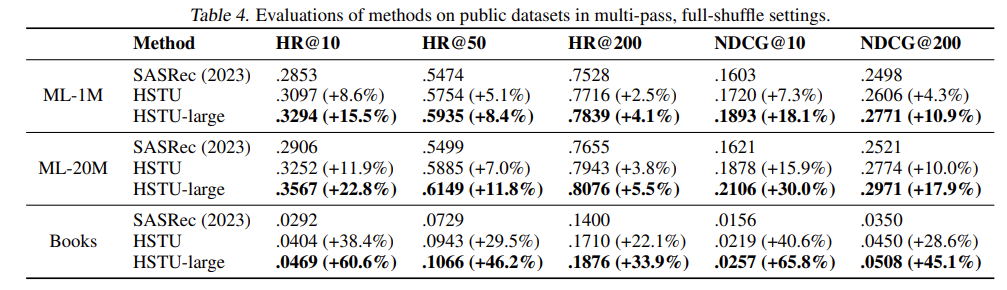

HSTU was benchmarked on 3 public datasets: MovieLens-1M, MovieLens-20M and Amazon Books.

HSTU outperformed prior strong baselines on the datasets used. Results below:

HSTU vs SASRec result comparison [1]

HSTU achieved significant improvements over the prior state of the art (SASRec) on all metrics across all datasets.

It is worth noting that, based on our own experiments at Synerise AI, MovieLens is an atypical dataset that should not be used as a benchmark for sequential recommendations because of its underlying data generation process. The temporal order in which movies are rated is only loosely correlated with the order of consumer choices: one may rate a movie seen a long time ago. Ratings are often submitted in short bursts, covering movies the reviewer watched sequentially before the review, sometimes many years in the past.
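One simple way to check for rating bursts in a dataset like MovieLens is to measure how often consecutive ratings by the same user are only seconds apart. The sketch below assumes ratings have already been parsed into `(user_id, item_id, unix_timestamp)` tuples (MovieLens-1M's `ratings.dat` stores `UserID::MovieID::Rating::Timestamp`); the function name and the 60-second default threshold are hypothetical choices, and this is not the analysis we ran internally.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def burst_fraction(ratings: Iterable[Tuple[int, int, int]],
                   max_gap_seconds: int = 60) -> float:
    """Share of consecutive same-user rating pairs at most max_gap_seconds apart.

    ratings: (user_id, item_id, unix_timestamp) tuples, in any order.
    The 60-second default is a hypothetical threshold.
    """
    timestamps: Dict[int, List[int]] = defaultdict(list)
    for user_id, _item_id, ts in ratings:
        timestamps[user_id].append(ts)
    close_pairs = total_pairs = 0
    for stamps in timestamps.values():
        stamps.sort()
        for prev, cur in zip(stamps, stamps[1:]):
            total_pairs += 1
            if cur - prev <= max_gap_seconds:
                close_pairs += 1
    return close_pairs / total_pairs if total_pairs else 0.0


toy = [(1, 10, 100), (1, 11, 110), (1, 12, 5000), (2, 20, 0), (2, 21, 30)]
print(burst_fraction(toy))  # 2 of the 3 consecutive pairs fall within 60 seconds
```

A high fraction suggests that the rating order reflects review sessions rather than the order in which items were consumed.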

BaseModel vs HSTU Performance

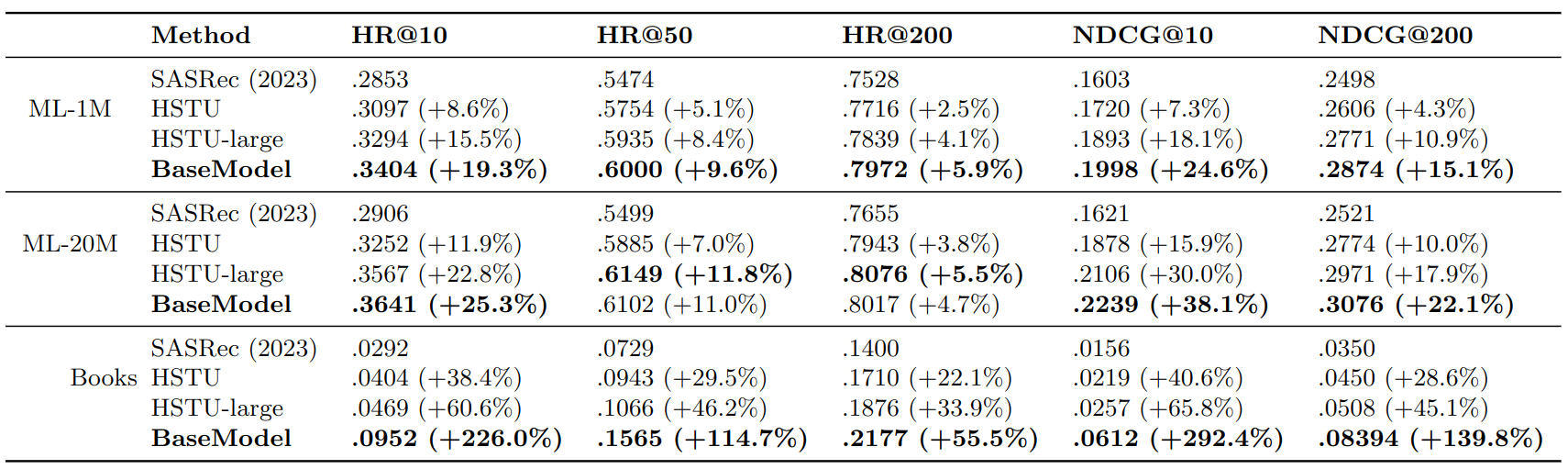

To evaluate BaseModel against HSTU, we replicated the exact data preparation, training, validation, and testing protocols described in the HSTU paper, and used the exact same implementations of the HitRate and NDCG metrics for consistency.
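For readers unfamiliar with this kind of protocol: sequential benchmarks in the SASRec line of work typically use a leave-one-out split, where each user's last interaction is the test target, the second-to-last is the validation target, and the remaining prefix is used for training. A minimal sketch, assuming that split (check [1] for the exact protocol) and a hypothetical `leave_one_out_split` helper:

```python
from typing import Dict, List, Sequence, Tuple

Split = Tuple[Dict[str, List[int]],
              Dict[str, Tuple[List[int], int]],
              Dict[str, Tuple[List[int], int]]]


def leave_one_out_split(user_sequences: Dict[str, Sequence[int]]) -> Split:
    """Hypothetical leave-one-out split over chronologically ordered sequences.

    Returns (train, valid, test): train maps user -> training prefix;
    valid/test map user -> (input prefix, held-out target item).
    """
    train, valid, test = {}, {}, {}
    for user, seq in user_sequences.items():
        if len(seq) < 3:
            # Too short to hold out two targets; use for training only.
            train[user] = list(seq)
            continue
        train[user] = list(seq[:-2])
        valid[user] = (list(seq[:-2]), seq[-2])
        test[user] = (list(seq[:-1]), seq[-1])
    return train, valid, test


train, valid, test = leave_one_out_split({"u1": [1, 2, 3, 4], "u2": [5, 6]})
print(test["u1"])  # ([1, 2, 3], 4)
```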

The comparison involved the following steps:

- Implementation of the exact data preparation protocol as per the HSTU paper

- Implementation of the exact training, validation, and testing protocol as per the HSTU paper

- Sourcing the exact implementations of the HitRate and NDCG metrics used in HSTU and incorporating them into BaseModel

- Model training and evaluation (on all 3 datasets)
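With a single held-out target per user, HitRate@K and NDCG@K reduce to simple functions of the target's rank among all candidate items. The sketch below uses those standard definitions; it is not the implementation sourced from HSTU, and the helper names are hypothetical. The rank counts items with a strictly higher score, so ties are resolved in the target's favor.

```python
import math
from typing import Dict, Sequence


def target_rank(scores: Sequence[float], target: int) -> int:
    """0-based rank of the target item: number of items scored strictly higher."""
    target_score = scores[target]
    return sum(1 for s in scores if s > target_score)


def hit_rate_at_k(rank: int, k: int) -> float:
    return 1.0 if rank < k else 0.0


def ndcg_at_k(rank: int, k: int) -> float:
    # One relevant item, so the ideal DCG is 1 and NDCG = 1 / log2(rank + 2).
    return 1.0 / math.log2(rank + 2) if rank < k else 0.0


def evaluate(ranks: Sequence[int], k: int) -> Dict[str, float]:
    """Average HitRate@K and NDCG@K over users (one held-out target each)."""
    return {
        f"HR@{k}": sum(hit_rate_at_k(r, k) for r in ranks) / len(ranks),
        f"NDCG@{k}": sum(ndcg_at_k(r, k) for r in ranks) / len(ranks),
    }


rank = target_rank([0.1, 0.9, 0.5, 0.3], target=2)  # one item scores higher: rank 1
print(evaluate([rank, 0, 7], k=5))
```

Small differences in such details (tie handling, whether the history items are excluded from ranking) can shift reported numbers, which is why reusing the exact metric code matters for a fair comparison.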

The entire process took 5 hours from start to finish. BaseModel used its default parameters for the Amazon Books dataset and slightly modified ones for the MovieLens datasets, to reflect their not-really-sequential structure. The results are as follows:

BaseModel vs HSTU and SASRec

Despite limited tuning of BaseModel’s parameters, the results are remarkable. On Amazon Books, BaseModel improved on SASRec’s results by +55.5% to +292.4% (depending on the metric), significantly outperforming HSTU.
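For reference, assuming the standard definition, these relative improvements are percentage changes over the SASRec value: +292.4% means the BaseModel value is about 3.9 times SASRec's, and +55.5% means about 1.56 times. A minimal helper, with hypothetical input values in the example:

```python
def relative_improvement(new: float, baseline: float) -> float:
    """Percentage change of `new` relative to `baseline` (standard definition, assumed)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline * 100.0


def ratio_from_improvement(improvement_pct: float) -> float:
    """Convert a percentage improvement back into a multiplicative ratio."""
    return 1.0 + improvement_pct / 100.0


print(relative_improvement(0.5, 0.25))  # 100.0 (hypothetical metric values)
print(round(ratio_from_improvement(292.4), 3))  # 3.924
```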

On MovieLens-1M, BaseModel had a clear advantage over both SASRec and HSTU; on MovieLens-20M, BaseModel and HSTU were tied, while SASRec remained far behind.

We have internally confirmed that MovieLens is a pathologically constructed dataset whose sequential/temporal structure does not correspond to typical sequential recommendation scenarios in other public and private datasets. We hypothesize that both BaseModel and HSTU come close to the maximum achievable scores on MovieLens-20M.

While exact HSTU training and inference times are not reported, the model is based on a modified Transformer architecture. Meta AI’s team has optimized the architecture significantly, allowing training to run 2-15x faster than Transformer++. Yet even with those optimizations, BaseModel’s training and inference processes are orders of magnitude faster.

Conclusion

The comparison between BaseModel and HSTU reveals substantial differences in their architectural choices and performance. While HSTU represents a notable advancement in generative retrieval recommender systems, BaseModel’s approach demonstrates superior efficiency and effectiveness in sequential recommendation tasks. In addition, we conclude that usage of MovieLens datasets should be discouraged for sequential recommendations, as the sequential/temporal information contained therein is very noisy.

We are continuously improving our methods to push the boundaries of what behavioral models can achieve, and comparisons with alternative approaches are a vital part of that work.

References

[1] Zhai et al., “Actions Speak Louder than Words: Trillion-Parameter Sequential Transducers for Generative Recommendations”, arXiv:2402.17152, https://arxiv.org/abs/2402.17152