Why encryption and online safety go hand-in-hand

Today, Oct. 21, is the first annual Global Encryption Day. Organized by the Global Encryption Coalition, the day highlights both the pressing need for greater data security and online privacy and the importance of encryption in protecting those interests. Amid devastating hacks and massive data breaches, there's never been a more urgent need to bolster our data security and online privacy, and encryption is a critical tool for doing so.

Yet encryption is under constant threat from governments both at home and abroad. To justify their demands that providers of messaging apps, social media, and other online services weaken their encryption, regulators often cite safety concerns, especially children’s safety. They depict encryption, and end-to-end encryption (E2EE) in particular, as something that exists in opposition to public safety. That’s because encryption “completely hinders” platforms and law enforcement from detecting harmful content, impermissibly shielding those responsible from accountability—or so the reasoning goes.

There’s just one problem with this claim: It’s not true. Last month, I published a draft paper analyzing the results of a research survey I conducted this spring that polled online service providers about their trust and safety practices. I found that not only can providers detect abuse on their platforms even in end-to-end encrypted environments, but they even prefer detection techniques that don’t require access to the contents of users’ files and communications.

Surveying trust and safety approaches

The 14 online services included in my analysis range in size from a few thousand to several billion users. Some of the services are end-to-end encrypted, some are not. Collectively, they cover a large proportion, perhaps the majority, of the world’s internet users. The survey questions addressed twelve types of online abuse, from child sex abuse imagery (CSAI) and other child safety offenses such as grooming and enticement (which the study calls “child sexual exploitation,” or CSE for short), to spam, phishing, malware, hate speech, and more.

The study draws a distinction between techniques that require a provider to have the technical capability to access the contents of users' files and communications at will, and those that do not. I call the first category "content-dependent" and the other "content-oblivious." Content-dependent techniques include automated systems for scanning all content uploaded or transmitted on a service (to detect CSAI or potentially copyright-infringing uploads, for example). Content-oblivious techniques include metadata-based tools (such as those for spotting spammy behavior) and user reports flagging abuse that the provider didn't or couldn't detect on its own (e.g., due to end-to-end encryption). And, no, empowering users to report abusive content does not compromise end-to-end encryption, despite what the investigative outlet ProPublica recently reported.
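To make the distinction concrete, here is a minimal Python sketch, assuming a hypothetical message log. The names (`MessageEvent`, `content_scan`, `looks_spammy`), the thresholds, and the digest blocklist are illustrative assumptions, not any surveyed provider's system. Exact SHA-256 matching stands in for the fuzzier image hashing that production scanners typically rely on.

```python
import hashlib
from dataclasses import dataclass

@dataclass
class MessageEvent:
    """Hypothetical log record for one send on a messaging service."""
    sender: str
    recipients: int   # how many accounts this send reached
    timestamp: float  # seconds since the epoch
    payload: bytes    # plaintext without E2EE; ciphertext with it

# Hypothetical blocklist: SHA-256 digests of files already known to be abusive.
KNOWN_BAD_DIGESTS = {hashlib.sha256(b"known bad file").hexdigest()}

def content_scan(event: MessageEvent) -> bool:
    """Content-dependent: flag a payload that exactly matches a known-bad digest."""
    return hashlib.sha256(event.payload).hexdigest() in KNOWN_BAD_DIGESTS

def looks_spammy(events: list[MessageEvent], sender: str,
                 window: float = 60.0, max_recipients: int = 100) -> bool:
    """Content-oblivious: flag a sender who reached more than max_recipients
    accounts within `window` seconds of their latest send. Never reads payload."""
    mine = [e for e in events if e.sender == sender]
    if not mine:
        return False
    latest = max(e.timestamp for e in mine)
    reached = sum(e.recipients for e in mine if latest - e.timestamp <= window)
    return reached > max_recipients

# Usage: five sends one second apart, 30 recipients each (150 in total).
burst = [MessageEvent("bot", 30, 1000.0 + i, b"") for i in range(5)]
print(looks_spammy(burst, "bot"))  # True
```

Under E2EE, `payload` holds ciphertext, so `content_scan` stops matching even a known-bad file, while `looks_spammy` gives the same answer either way because it never reads the payload.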

In recent years, end-to-end encryption's supposed impact on online child safety investigations has served as the cause célèbre for governments' calls to break E2EE. But that impact has been overstated. When government officials say that end-to-end encryption "completely hinders" or "wholly precludes" investigations, those statements reflect a misconception that content-dependent techniques are the only possible way to detect online abuse. This overlooks the availability, prevalence, and effectiveness of content-oblivious approaches.

Every provider I surveyed uses some combination of content-oblivious and content-dependent techniques to detect, prevent, and mitigate abuse. All employ some kind of abuse reporting; almost all have an in-app reporting feature. By contrast, fewer providers use metadata-based tools, automated content scanning, or other techniques to detect abuse.

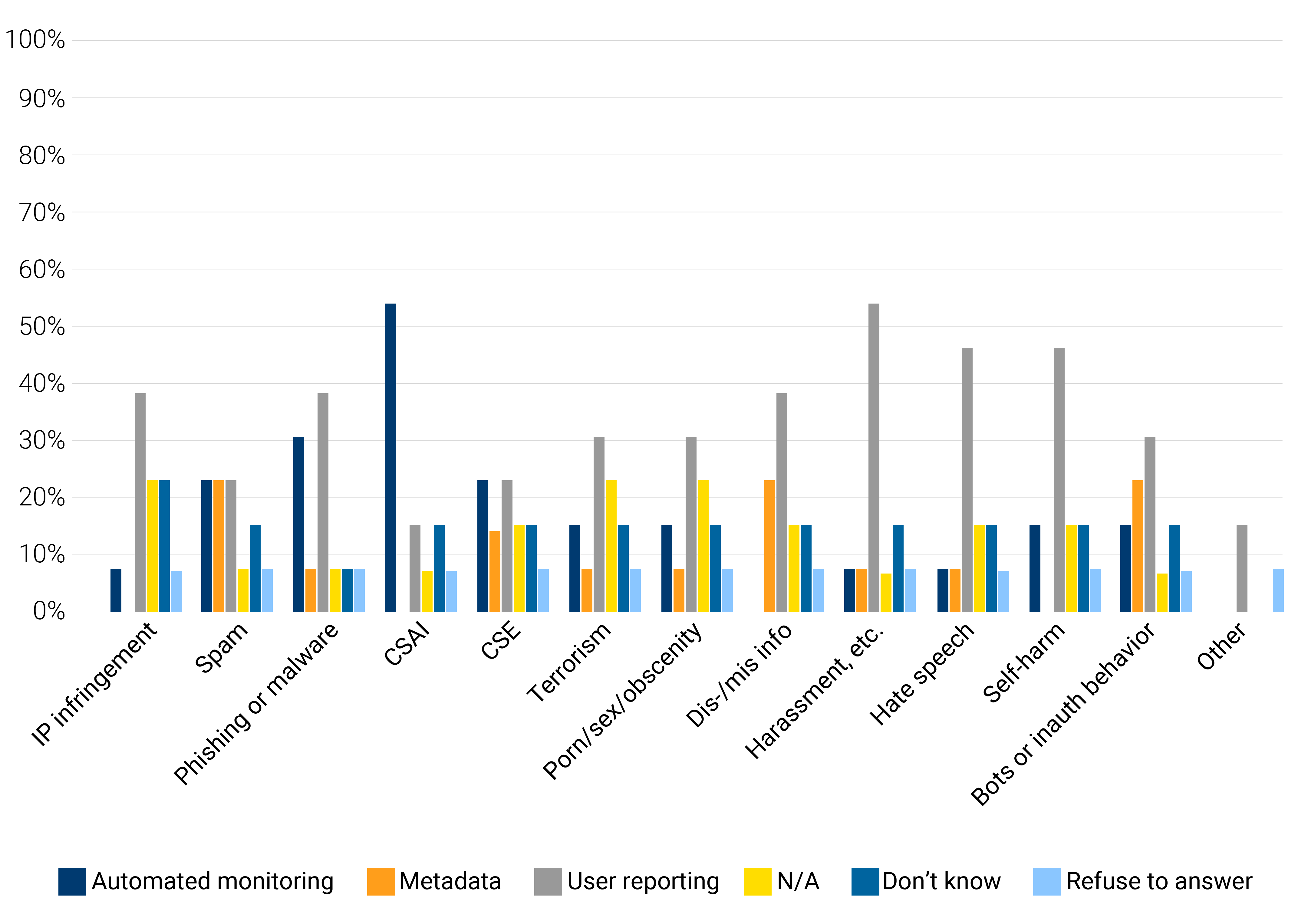

Which approach do providers think works the best against various types of abuse? Overall, the providers I surveyed deemed user reporting the most useful means of detecting nine of the twelve kinds of online abuse I asked about. There were three exceptions: CSAI, CSE, and spam.

[Figure: Techniques providers find most useful for detecting each type of abuse]

Policy implications

The usefulness of user reporting of abuse has important ramifications for encryption policy. If providers don’t find automated scanning to be very useful for detecting most types of abuse, then we can predict that the impact of end-to-end encryption on their trust and safety efforts may be less than assumed. Rather, E2EE’s impact on abuse detection will likely vary depending on the kind of abuse at issue.

The variance owes to an important difference between content-dependent and content-oblivious techniques. End-to-end encryption prevents outsiders (including the provider itself) from reading the contents of a user's file or message, meaning it hampers providers' content-dependent tools, but not content-oblivious ones. Automated scanning is affected by E2EE, but according to the survey participants, it is the best way to detect only a few sorts of abuse to begin with. User reporting, which is considered the most useful detection technique for most kinds of abuse, is wholly compatible with end-to-end encryption. And to the extent E2EE isn't hampering providers from finding harmful content, it shouldn't hinder criminal investigations either, since there are well-established processes for investigators to obtain that data from providers (as demonstrated by providers' transparency reports).
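The following toy Python sketch illustrates why user reporting survives E2EE. It assumes a one-time pad as a stand-in for a real end-to-end protocol such as the Signal Protocol, and the `Provider` class and its methods are hypothetical; it does not model any particular service's reporting feature. The provider relays only ciphertext, yet still receives a readable report because the recipient, who holds the key, chooses to send one.

```python
import secrets
from dataclasses import dataclass, field

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings (one-time-pad encryption and decryption)."""
    if len(a) != len(b):
        raise ValueError("one-time pad key must match message length")
    return bytes(x ^ y for x, y in zip(a, b))

@dataclass
class Provider:
    """Hypothetical service that relays messages but never holds message keys."""
    relayed: list[bytes] = field(default_factory=list)
    reports: list[tuple[str, bytes]] = field(default_factory=list)

    def relay(self, ciphertext: bytes) -> bytes:
        # The provider sees and stores only ciphertext, so it cannot scan the content.
        self.relayed.append(ciphertext)
        return ciphertext

    def receive_report(self, reporter: str, plaintext: bytes) -> None:
        # Plaintext reaches the provider only because a participant chose to send it.
        self.reports.append((reporter, plaintext))

# The key is known to sender and recipient only; it must be random and never reused.
message = b"abusive message"
key = secrets.token_bytes(len(message))

provider = Provider()
delivered = provider.relay(xor_bytes(message, key))  # sender encrypts on device
plaintext = xor_bytes(delivered, key)                # recipient decrypts on device
provider.receive_report("recipient", plaintext)      # recipient reports the abuse
```

The encryption itself is untouched when a report is filed; the report is simply a message the recipient sends to the provider.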

As noted, there are a few categories where user reporting was not deemed the most useful means of abuse detection: CSAI, CSE, and spam. For CSAI, survey participants strongly favor automated scanning, which implies that this is the area where E2EE's impact is largest. CSAI is unique in that regard, however. For CSE and spam, the providers I surveyed are ambivalent about what works best: content-dependent and content-oblivious techniques tied in the rankings. This suggests that E2EE affects CSE and spam detection less than CSAI.

Put simply, CSAI just isn’t like other kinds of abuse online—not even other kinds of child safety offenses. What works best against CSAI doesn’t work best against other abuse types, and vice versa. That means you can’t build a trust and safety program—or pass laws—based solely on the exigencies of fighting CSAI, as though it’s all the same problem requiring the same response. It’s not.

And yet, as I described earlier, regulators have made child safety the primary justification for their proposals to make encryption less effective. But end-to-end encryption can’t be reduced or turned off solely for CSAI or other specific kinds of harmful content. Weakening an encryption design in the name of detecting a particular kind of abuse also unavoidably reduces the security, privacy, and integrity of all other information encrypted with that same design. Weakening encryption thus poses massive dangers for everyone, not only at the individual level, but also for the economy and national security.
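The point that weakened access cannot be scoped to one category of content can be sketched in Python. This models a hypothetical key-escrow design, not any specific government proposal: every per-message key is also wrapped under a single escrow key. The cipher is a toy SHA-256 keystream chosen only so the sketch runs on the standard library; it is not a vetted construction. Note that `escrow_open` cannot take a content category as input, since whether a message is CSAI is unknowable until after it has been decrypted.

```python
import hashlib
import secrets
from dataclasses import dataclass

def keystream(key: bytes, nonce: bytes, n: int) -> bytes:
    """Toy keystream: SHA-256(key || nonce || counter) blocks. Illustration only."""
    out = bytearray()
    counter = 0
    while len(out) < n:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:n])

def xor_crypt(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt (the same XOR operation) under a key and a unique nonce."""
    return bytes(d ^ k for d, k in zip(data, keystream(key, nonce, len(data))))

@dataclass
class Envelope:
    nonce: bytes
    ciphertext: bytes
    wrapped_key: bytes  # hypothetical: the message key encrypted under the escrow key

# Hypothetical exceptional-access key held by a third party.
ESCROW_KEY = secrets.token_bytes(32)

def seal(message: bytes) -> tuple[bytes, Envelope]:
    """Encrypt with a fresh message key and also wrap that key for the escrow holder."""
    msg_key = secrets.token_bytes(32)
    nonce = secrets.token_bytes(16)
    env = Envelope(nonce,
                   xor_crypt(msg_key, nonce, message),
                   xor_crypt(ESCROW_KEY, nonce, msg_key))
    return msg_key, env

def escrow_open(env: Envelope) -> bytes:
    # There is no "only CSAI" parameter: the escrow key unwraps every message key.
    msg_key = xor_crypt(ESCROW_KEY, env.nonce, env.wrapped_key)
    return xor_crypt(msg_key, env.nonce, env.ciphertext)

_, medical = seal(b"lab results: positive")
_, banking = seal(b"PIN 4921")
print(escrow_open(medical), escrow_open(banking))
```

The same escrow key opens the medical note and the banking message alike; whoever obtains it, lawfully or not, can read everything sealed under the design.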

What’s worse, my survey results indicate that weakening encryption wouldn’t even yield a countervailing benefit proportional to these harms. Since end-to-end encryption doesn’t hinder the best tool for fighting most types of online abuse outside of CSAI, weakening it is largely a non sequitur. My study demonstrates that officials’ repeated claim that encryption wholly stymies investigations of online harms is simply false. Their calls to weaken encryption are ignorant at best and dangerously reckless at worst. Instead of decrying providers for encrypting their services, authorities worried about online harms should first sit down with those providers’ trust and safety teams to learn more about their efforts to protect their users and their capabilities for discovering abusive and criminal content even in the context of E2EE.

Encryption is vital to protecting our privacy and security, and there are ways to fight online abuse effectively that are compatible with encryption. Strong encryption is an enhancement, not an obstacle, to our safety both online and off. On this inaugural Global Encryption Day, I hope you’ll make the switch to using end-to-end encrypted services and encourage those you care about to do the same.

Riana Pfefferkorn is a research scholar at the Stanford Internet Observatory and a member of the Global Encryption Coalition.