Roy Spencer, PhD

Death Valley Temperatures, Part 3: Twelve Years of July Daily Tmax Estimates and the 134 deg. F Record

November 11th, 2024

Update (11/12/2024): New annotated version of Fig. 1 added. Corrected who the Greenland Ranch foreman was and associated correspondence. Will fix Fig. 2 (2021/2022) problem Wednesday morning.

Update (11/13/2024): Fixed Fig. 2

In Part 1 I claimed that using stations surrounding Death Valley is a good way to “fact check” warm season high temperatures (Tmax) at the Death Valley station, using a correction for elevation since all surrounding stations are at higher (and thus cooler) elevations. In July of each year, a large tropospheric ridge of high pressure makes the air mass in this region spatially uniform in temperature (at any given pressure altitude), and daily convective heating of the troposphere leads to a fairly predictable temperature lapse rate (the rate at which temperature falls off with height). This makes it possible to estimate Death Valley daytime temperatures from surrounding (cooler) stations even though those stations are thousands of feet higher in elevation than Greenland Ranch, which was 168 ft. below sea level.
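For a single surrounding station, this elevation correction reduces to one relation. Here is a sketch using assumed symbols: $T_s$ and $z_s$ are the station's Tmax and elevation, $z_{DV}$ is the Death Valley elevation, and $\Gamma$ is the lapse rate, which is negative because temperature falls with height. The second line is a purely illustrative example with a hypothetical station (not one used in this analysis) at 4,000 ft. reporting 108 deg. F, using $\Gamma = -0.004$ deg. F per ft. and Greenland Ranch's $z_{DV} = -168$ ft.:

```latex
\begin{aligned}
T_{DV} &= T_s + \Gamma\,(z_{DV} - z_s) \\
       &= 108 + (-0.004)(-168 - 4000) = 108 + 16.672 \approx 124.7\ ^{\circ}\mathrm{F}
\end{aligned}
```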

Lapse Rates Computed from Stations Surrounding Death Valley

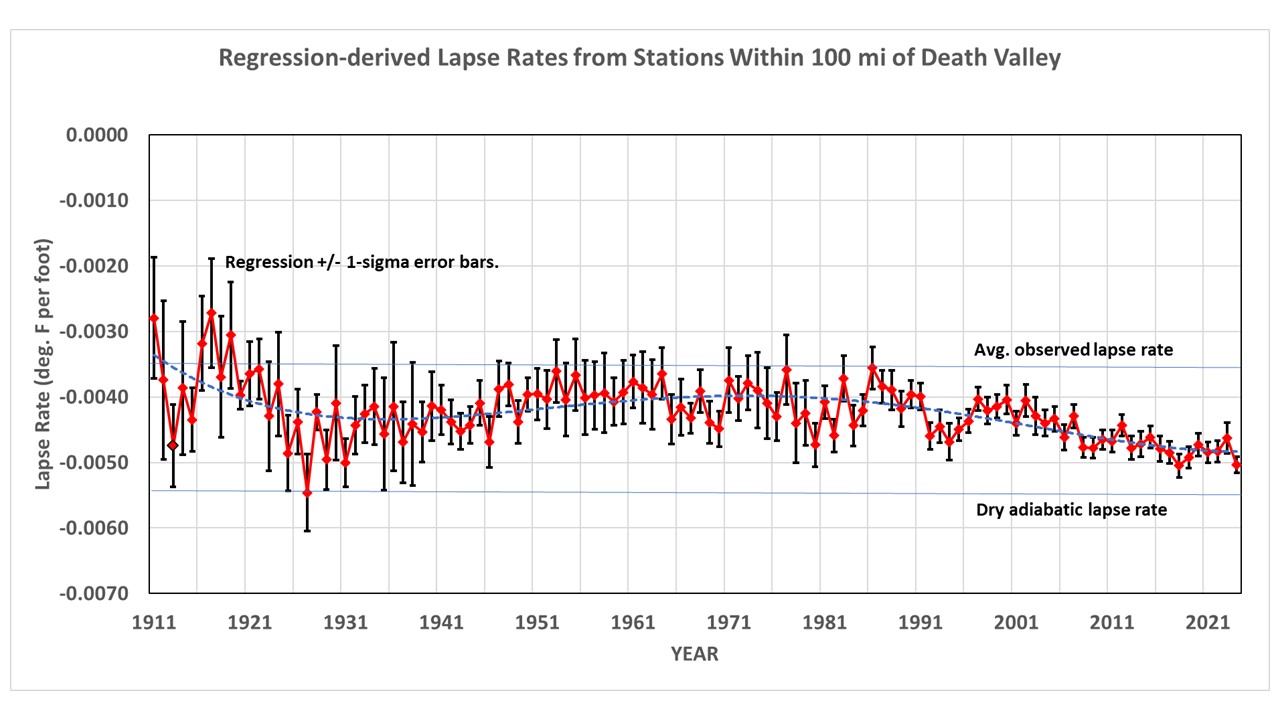

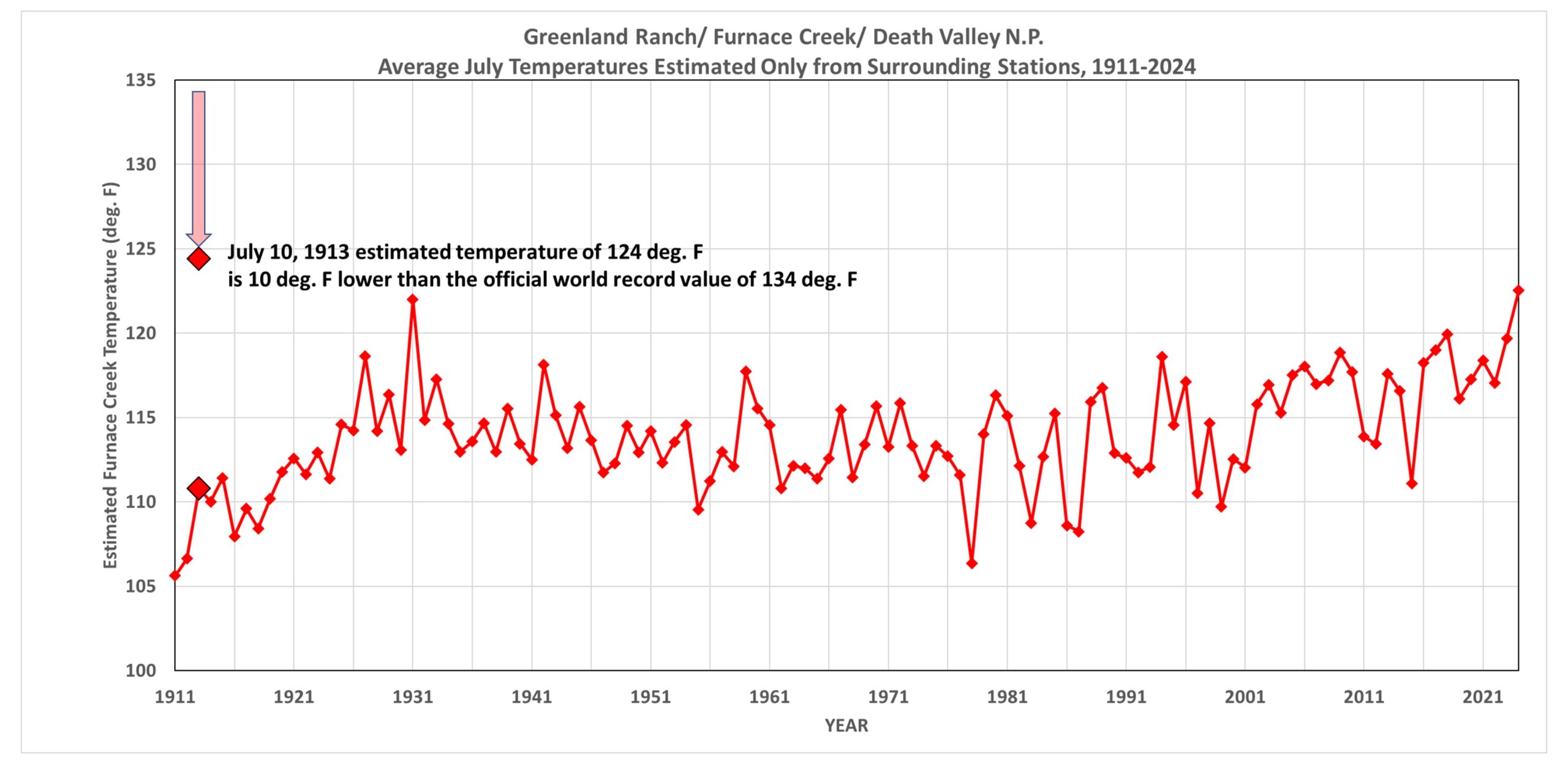

If I use all available GHCN daily stations within 100 miles of Greenland Ranch (aka Furnace Creek, aka Death Valley N.P.) in each July from 1911 to 2024 to compute the month-average lapse rate (excluding the Death Valley station(s)), I get the results in Fig. 1.

Fig. 1. Lower tropospheric temperature lapse rate estimated from all stations within 100 miles of Greenland Ranch, Death Valley, CA. The number of available stations for these calculations ranges from several in the early years to 25 or more in the later years. Here I will assume a constant lapse rate of -0.004 deg. F per ft. during the 20th Century. The 4th order polynomial fit to the data would be another way to represent how the lapse rate changes over time.

The computed lapse rates mostly fall between the dry adiabatic value and the U.S. standard atmosphere value (except in the early years). Given the few stations available in the early years, I will base the calculations that follow on an assumed lapse rate of -0.004 deg. F per ft. for the first half of the record. I will also assume that the observed steepening of the lapse rate after the 1980s is real, reaching -0.0048 deg. F per ft. in the early 2020s. In Part 1, I used the actual yearly values in Fig. 1 to estimate Death Valley temperatures. This time I’m using lapse rate values averaged over many years, keeping in mind that the early decades had few stations, so their values in Fig. 1 are more uncertain.
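To see where the assumed values sit relative to the two reference lapse rates, the references can be converted to the deg. F per ft. units used here. This is a sketch under stated assumptions: the dry adiabatic rate is taken as g/cp with an approximate cp of 1004 J/(kg K), and 6.5 K/km is the tropospheric lapse rate of the U.S. standard atmosphere. Signs are negative to match this post's convention.

```python
# Reference lapse rates converted to deg F per ft (negative = cooling with height).
G = 9.80665       # standard gravity, m/s^2
CP_DRY = 1004.0   # approximate specific heat of dry air at constant pressure, J/(kg K)
FT_PER_KM = 3280.84
F_PER_K = 1.8     # a 1 K temperature difference equals 1.8 deg F

def k_per_km_to_f_per_ft(rate_k_per_km):
    """Convert a lapse rate magnitude from K per km to deg F per ft."""
    return rate_k_per_km * F_PER_K / FT_PER_KM

dry_adiabatic = -k_per_km_to_f_per_ft(G / CP_DRY * 1000.0)  # g/cp, K/m -> K/km
us_standard = -k_per_km_to_f_per_ft(6.5)  # U.S. standard atmosphere troposphere

print(f"dry adiabatic: {dry_adiabatic:.5f} deg F per ft")  # -0.00536
print(f"US standard:   {us_standard:.5f} deg F per ft")    # -0.00357
```

Both assumed values, -0.004 and -0.0048 deg. F per ft., fall between these two references.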

Daily Estimated July Tmax at Death Valley: 2021-2024

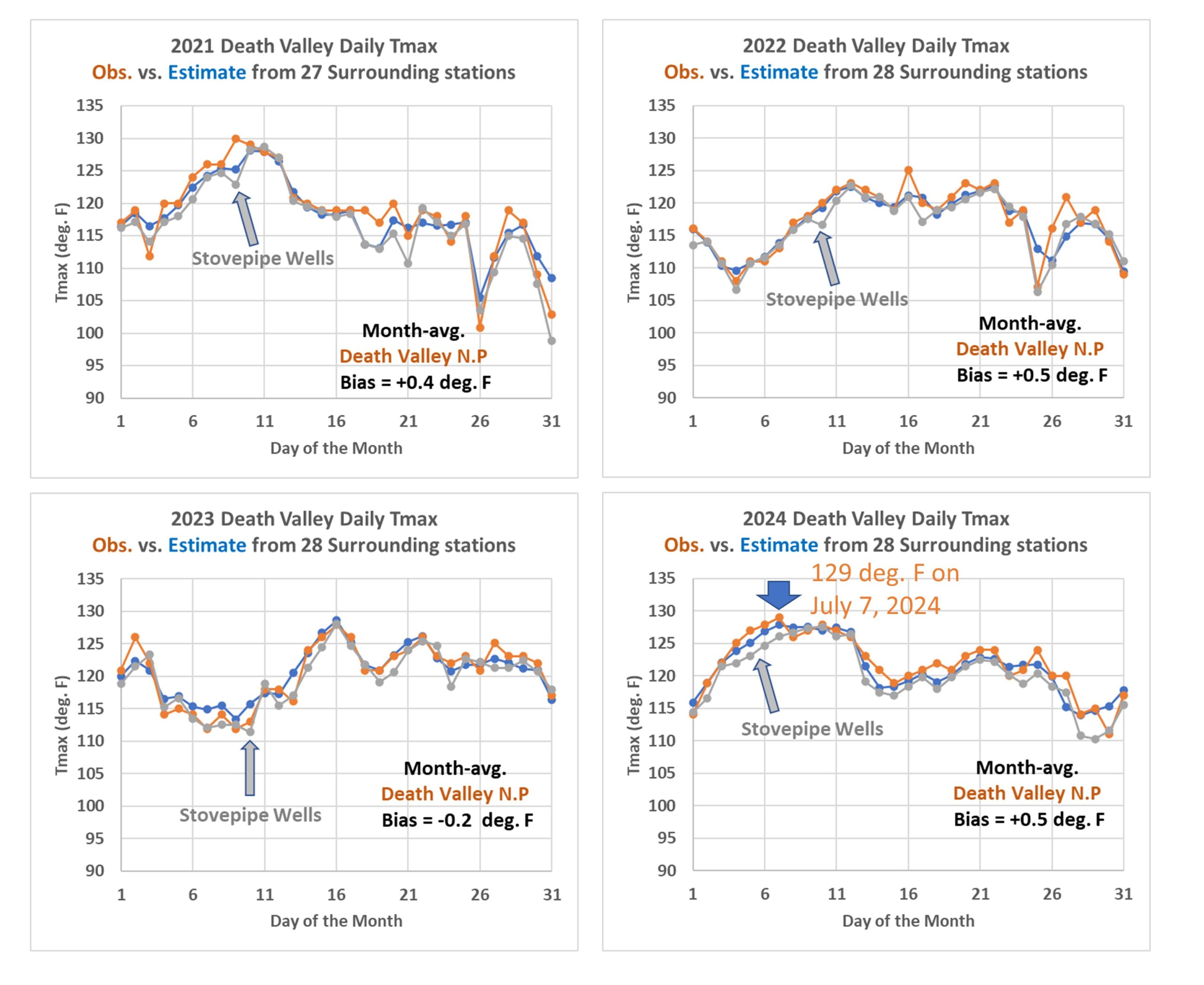

How accurately can we estimate daily Tmax in Death Valley from surrounding high-elevation stations? Fig. 2 compares the observed July daily Tmax at Death Valley N.P. (orange) in 2021, 2022, 2023 and 2024 with estimates based on the surrounding, higher-elevation stations (blue), assuming a lapse rate of -0.0048 deg. F per ft. (see Fig. 1).

Fig. 2. Daily estimated July Tmax temperatures for Death Valley N.P. from surrounding stations (blue) compared to those observed (orange, 194 ft. below sea level) for 2021, 2022, 2023 and 2024.

In each year the daily estimates from surrounding stations (blue) are reasonably close (within a couple of degrees) to the observed values at both Death Valley N.P. (orange) and the nearby Stovepipe Wells station. For example, on July 7, 2024 the observed “near record” value of 129 deg. F agrees well with the lapse-rate estimated value of 128 deg. F. Note there were many (27 of 28) stations within 100 miles of Death Valley available to make these estimates during these years.
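One plausible way to turn many surrounding stations into a single daily Death Valley estimate is to shift each station's Tmax to the target elevation and then average the results. The sketch below assumes equal station weights and the fixed -0.0048 deg. F per ft. lapse rate. The function name and data layout are hypothetical, and this is not necessarily how the estimates in Fig. 2 were computed.

```python
from statistics import mean

def estimate_daily_tmax(stations, target_elev_ft, lapse_f_per_ft=-0.0048):
    """Daily Death Valley Tmax estimates from surrounding stations.

    `stations` is a list of (elevation_ft, {day: tmax_f}) pairs (hypothetical
    layout). Each reported Tmax is shifted to the target elevation with a
    fixed lapse rate, then all stations reporting on a day are averaged with
    equal weight. Days with no reports are omitted from the result.
    """
    adjusted_by_day = {}
    for elev_ft, daily in stations:
        for day, tmax in daily.items():
            adjusted = tmax + lapse_f_per_ft * (target_elev_ft - elev_ft)
            adjusted_by_day.setdefault(day, []).append(adjusted)
    return {day: mean(vals) for day, vals in sorted(adjusted_by_day.items())}

# Made-up readings from two stations; target is Death Valley N.P. (-194 ft).
stations = [(1000, {7: 120.0, 8: 118.0}), (3000, {7: 110.4})]
est = estimate_daily_tmax(stations, target_elev_ft=-194)
print({d: round(t, 2) for d, t in est.items()})  # {7: 125.73, 8: 123.73}
```

Using a fixed lapse rate, rather than fitting a new regression each day, keeps a day's estimate from swinging with the slope of a noisy fit when only a few stations report.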

Daily Estimated July Tmax at Death Valley: 1935-1938

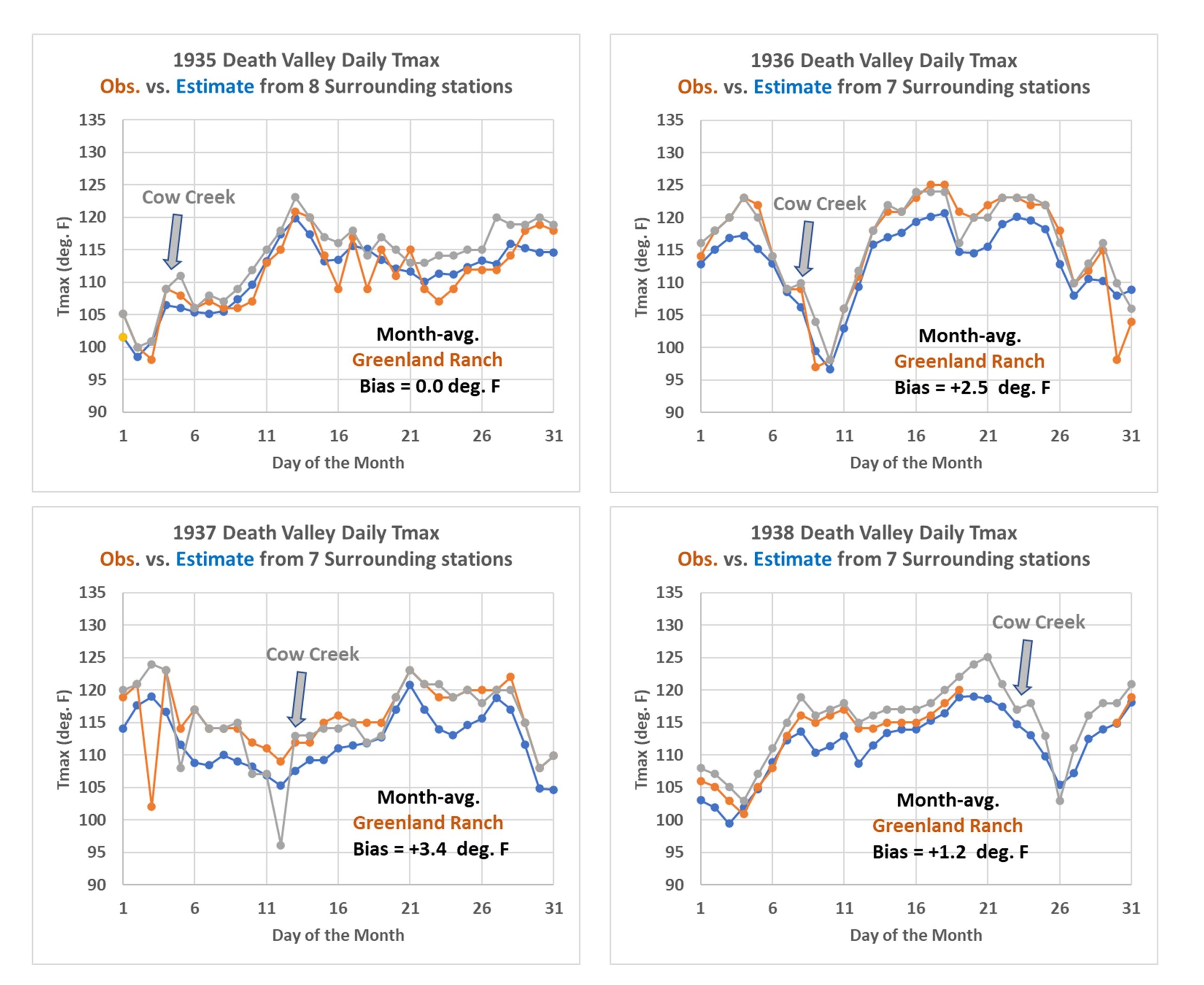

Next, let’s travel back to the 1930s, when there were fewer stations to do these estimates (Fig. 3).

Fig. 3. Daily estimated Tmax temperatures for Death Valley during 1935, 1936, 1937, 1938 from surrounding stations (blue) compared to those observed at Greenland Ranch (orange, 168 ft. below sea level) and Cow Creek (grey, 151 ft. below sea level).

Despite having only 7 or 8 stations from which to estimate Death Valley temperatures, the agreement is still reasonably good in 1935, with no apparent bias between the observed and estimated values. In the following 3 years, however, Greenland Ranch shows a 1-3 deg. F bias relative to the estimates. There are also a few low-temperature outliers in 1937-38 at Greenland Ranch and Cow Creek; I don’t know the reason for these.

Daily Estimated July Tmax at Death Valley: 1912-1915

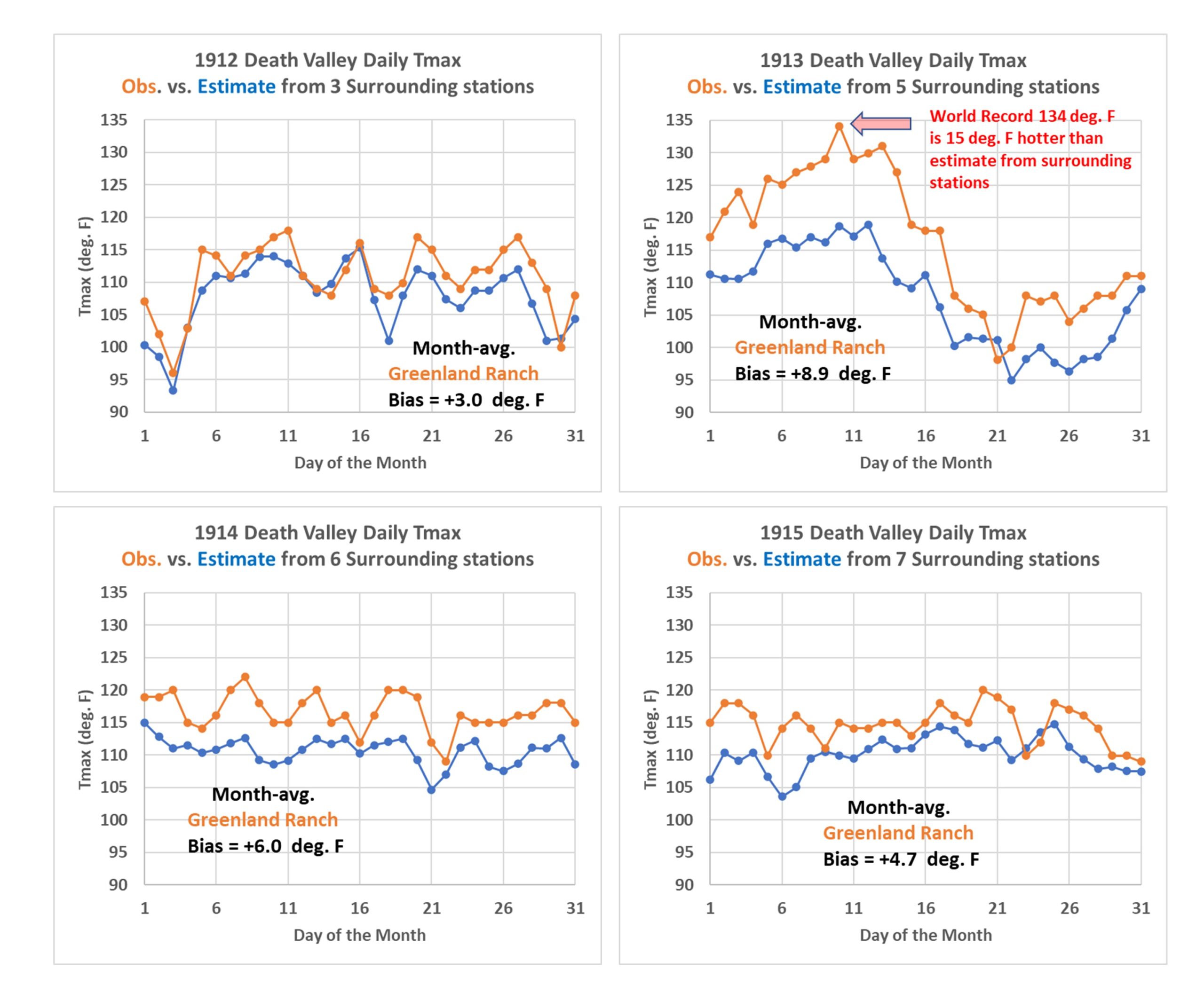

Finally we examine the period in question, when the 134 deg. F world record temperature was recorded on July 10, 1913 (Fig. 4).

Fig. 4. Daily estimated Tmax temperatures for Death Valley during 1912, 1913, 1914, 1915 from surrounding stations (blue) compared to those observed at Greenland Ranch (orange, 168 ft. below sea level).

During these years there were only 3 to 7 stations from which to compute Death Valley Tmax. In 1912, despite only 3 stations, the reported temperatures averaged only 3 deg. F above those estimated from surrounding stations. But in 1913 (the year of the record) the observations averaged an astounding 9 deg. F warmer than the surrounding 5 stations would have suggested. On July 10, the excess was 15 deg. F!
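Each yearly comparison above comes down to two numbers: the month-average excess of observed over estimated Tmax, and the largest single-day excess. A minimal sketch follows, assuming both series are `{day: tmax_f}` dicts (a hypothetical layout). The example numbers are made up for illustration and are not the 1913 data.

```python
from statistics import mean

def bias_summary(observed, estimated):
    """Month-average and largest daily excess of observed over estimated Tmax.

    Both arguments are {day: tmax_f} dicts (hypothetical layout); only days
    present in both are compared. Returns (mean_excess, worst_day, worst_excess).
    """
    days = sorted(set(observed) & set(estimated))
    if not days:
        raise ValueError("no days in common")
    excess = {d: observed[d] - estimated[d] for d in days}
    worst_day = max(excess, key=excess.get)
    return mean(excess.values()), worst_day, excess[worst_day]

# Made-up numbers for illustration only (not the 1913 record).
obs = {1: 120.0, 2: 125.0, 3: 118.0}
est = {1: 117.0, 2: 116.0, 3: 115.0}
print(bias_summary(obs, est))  # (5.0, 2, 9.0)
```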

That second week of July 1913 was indeed unusually hot. It was during this time that the ranch foreman (Oscar Denton), who was responsible for taking the temperature readings from an official instrument shelter provided by the U.S. Weather Bureau (USWB) in 1911, might have replaced the official values with values that more closely matched the heat he and his supervisor (Fred Corkill) were feeling on the veranda. The veranda was away from the USWB instrument shelter, which was sited next to an irrigated field. Bill Reid covers the details of the correspondence between Corkill and a USWB official in San Francisco regarding the shelter temperatures and how much cooler they were than those measured by a second thermometer farther away from the irrigated field. Reid believes (and I agree) that, at least for a time while Denton was responsible for tabulating the daily measurements, the shelter temperatures were replaced with readings from a separate thermometer of uncertain quality, and of uncertain siting with respect to hot, sun-exposed surfaces.

So, How Much Hot Bias Exists in the 134 deg. F “World Record”?

We will never know exactly how much warm bias exists in the world record value. But from comparison to the biases in 1912 and 1914, I would say 9 to 12 deg. F is a reasonable estimate.

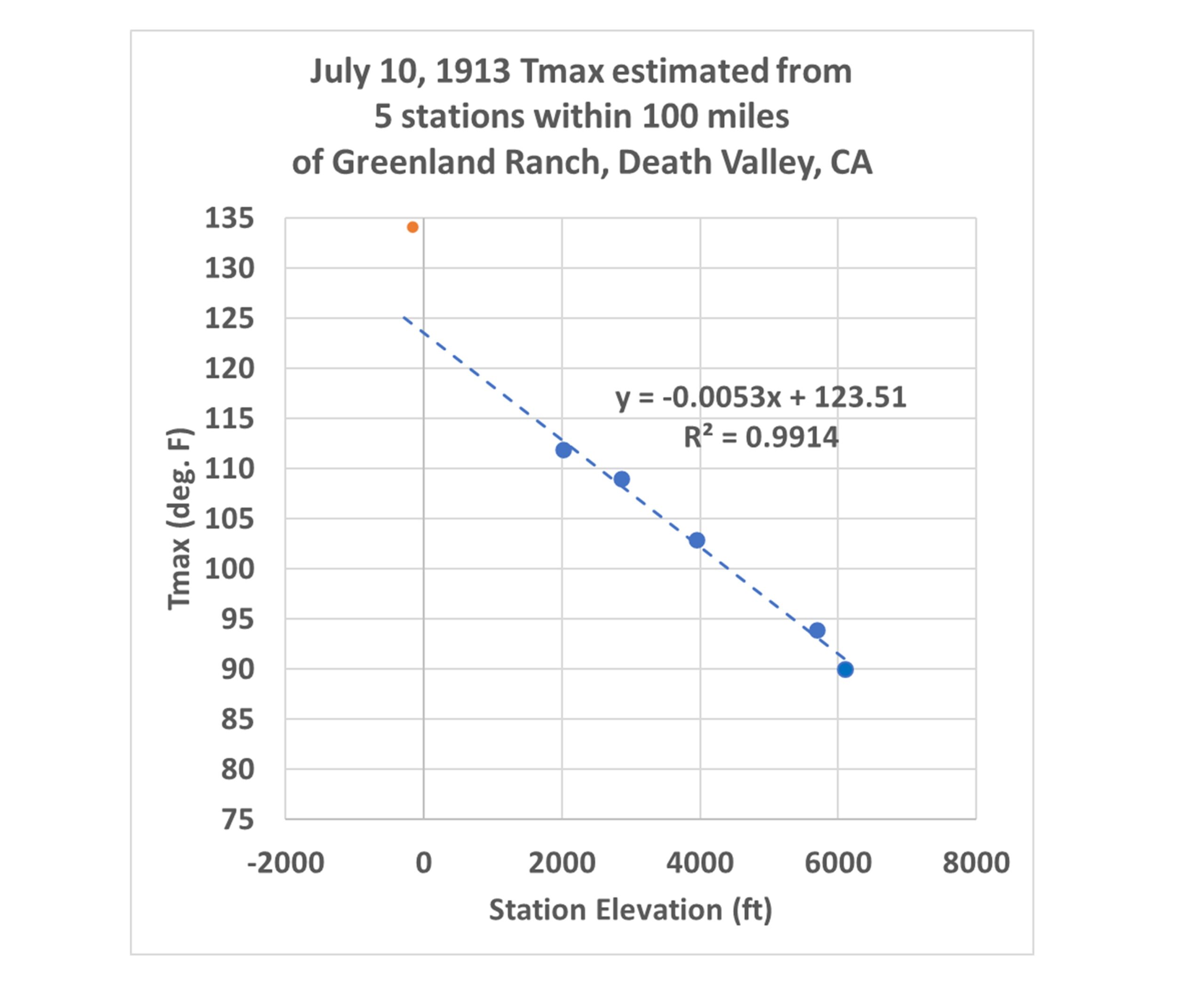

Of course, this might be adjusted somewhat if one assumes a slightly different lapse rate than the -0.004 deg. F per ft. I have assumed here (see Fig. 1). For instance, what if the air mass on July 10, 1913 had an exceptionally steep lapse rate, so that an even greater elevation adjustment was needed to estimate the hot temperature in Death Valley? If I use the lapse rate estimated from the 5 surrounding stations on July 10, 1913 (see Fig. 5), it is indeed “steeper”, at -0.0053 deg. F per ft. But even with that value, the estimated Death Valley temperature is still 10 deg. F cooler than the 134 deg. F recorded value, which is within the 9 to 12 deg. F bias range I mentioned above.

Fig. 5. The world record value of 134 deg. F (red) is 10 deg. F warmer than that suggested by the surrounding higher-elevation stations’ temperature variations with elevation on July 10, 1913.
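Why a steeper lapse rate warms the estimate can be sketched with assumed notation: the surrounding stations have mean elevation $\bar z$ and mean Tmax $\bar T$. An ordinary least-squares line passes through $(\bar z, \bar T)$. If the fixed-lapse-rate estimate averages the individually adjusted stations (an assumption), it has the same form. In either case, a change $\Delta\Gamma$ in the lapse rate shifts the Death Valley estimate by a fixed amount:

```latex
\begin{aligned}
\hat T_{DV} &= \bar T + \Gamma\,(z_{DV} - \bar z) \\
\Delta \hat T_{DV} &= \Delta\Gamma\,(z_{DV} - \bar z) \\
\Delta\Gamma &= -0.0053 - (-0.004) = -0.0013\ ^{\circ}\mathrm{F\,ft^{-1}}
\end{aligned}
```

Because the surrounding stations sit well above Death Valley ($z_{DV} < \bar z$), both factors of $\Delta\Gamma\,(z_{DV} - \bar z)$ are negative. The steeper lapse rate therefore raises the estimate by about 1.3 deg. F for every 1,000 ft. of mean elevation difference, with the exact change depending on the station elevations in Fig. 5.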

Conclusion

The 134 deg. F world record hottest temperature from Death Valley is likely around 10 deg. F too high, compared to elevation-adjusted temperatures from surrounding stations. The most likely cause is that the ranch foreman’s reported measurements were (shall we say) unacademically recorded. I find it rather remarkable that the world record hottest temperature from Death Valley was not revised many years ago, since the methods for “fact checking” the record are fairly simple, and based upon meteorological principles known for well over 50 years.


Death Valley Temperatures, Part II: Thoughts from William T. Reid

November 9th, 2024

NOTE: Since he has done extensive investigation into some implausibly hot temperatures reported in Death Valley, I asked Bill Reid to comment on my previous blog post where I maintain that the world record 134 deg. F highest recorded air temperature was likely biased warm by about 10 deg., and should not be accepted as a world record. What follows are Bill’s initial thoughts on the subject. Also, based upon his comments, I will likely update the charts found in my previous blog post with more realistic temperature lapse rate values in the early 20th Century when insufficient stations were available to determine accurate lapse rates.

by William T. Reid

A big thank you to Dr. Spencer for investigating the current (very dubious) world high-temperature record and for bringing attention to my Death Valley climate research. There are a handful of ways, both climatologically and meteorologically, to show that Greenland Ranch’s reported maximum of 134F on July 10, 1913, is likely not valid.

Dr. Spencer’s methodology here (comparing the Death Valley maximums to those at the closest surrounding stations, with adjustments for station elevation) is indeed a devastating blow to the authenticity of the suspect observations. What it basically demonstrates is that the lower troposphere was not hot enough to support temperatures much above 125F in July, 1913. I have compared regional maximums for all of the hottest summertime events since 1911. In practically all instances (in which the Greenland Ranch and Death Valley reports appear reasonable), ALL of the maximums at the closest surrounding stations lend support to the maximums for Death Valley.

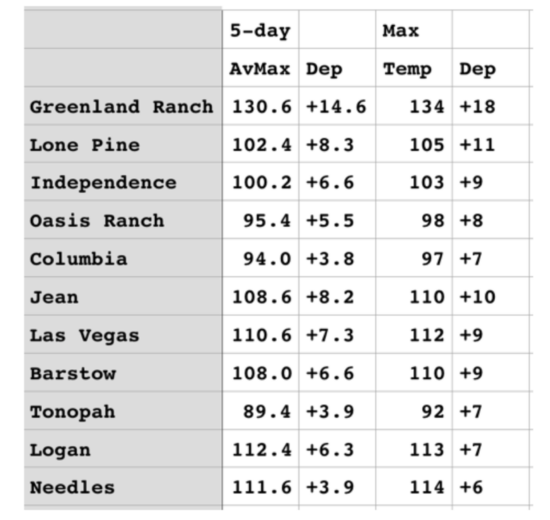

From July 7 to 14 of 1913, when eight consecutive afternoons had reported maximums of 127, 128, 129, 134, 129, 130, 131 and 127F in Death Valley, NONE of the maximums from the closest surrounding stations supported the Greenland Ranch maximums! During the hottest five-day stretch, maximums at the closest stations were only about +4 to +8 degrees F above average, while maximums at Greenland Ranch were nearly 15 degrees F above the July average. (see table)
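The hottest five-day stretch can be located directly from the eight Greenland Ranch maximums listed above. This small sketch (the function name is hypothetical) slides a five-day window over those values and keeps the warmest run of consecutive days:

```python
from statistics import mean

# Greenland Ranch daily maximums (deg F) for July 7-14, 1913, as listed above.
july_1913 = {7: 127, 8: 128, 9: 129, 10: 134, 11: 129, 12: 130, 13: 131, 14: 127}

def hottest_window(daily, length=5):
    """Return (first_day, last_day, mean) of the warmest run of `length` consecutive days."""
    days = sorted(daily)
    best = None
    for i in range(len(days) - length + 1):
        window = days[i:i + length]
        # Skip runs that contain a missing day.
        if window[-1] - window[0] != length - 1:
            continue
        avg = mean(daily[d] for d in window)
        if best is None or avg > best[2]:
            best = (window[0], window[-1], avg)
    return best

print(hottest_window(july_1913))  # (9, 13, 130.6)
```

On these values, the warmest five-day run is July 9-13, averaging 130.6 deg. F.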

Annual maximums at Greenland Ranch from 1911 to 1960 ranged from 120F to 127F, except for the 134F in 1913. If the reported maximums at Greenland Ranch in July, 1913, were authentic, then the maximums at the closest surrounding stations in that month would have been much higher than reported. In addition, numerous regional heat waves have been hotter than the one during the first half of July, 1913. Why have Death Valley maximums failed to exceed 130F in the interim when three days in July 1913 purportedly reached 134, 130 and 131F?

In his “bias” chart, Dr. Spencer notes the “substantial warm biases in the temperatures reported at Greenland Ranch in the first 10-15 years.” And, he mentions that the observer(s) may have been relying to some extent on thermometers other than the official instrumentation. I do think that the observer was comparing “household thermometer” readings with the official equipment on occasion from spring to summer of 1913. Higher readings off of the poorly-exposed thermometers near the ranch house and under the veranda were probably (and inappropriately) entered onto the official climate form. But, I have not uncovered much evidence of this particular type of deviation from standard observational procedures outside of 1913.

I would contend that the generally higher “bias” numbers in the early years are due primarily to changes at the closest area weather stations, which favored cooler maximums early on and warmer maximums later. For example, two of the closest stations to Greenland Ranch in 1913 were Independence and Lone Pine, in Owens Valley. In 1913, Owens River water was diverted to Los Angeles, and the Owens Valley gradually dried up. Summer maximums increased as Owens Lake evaporated, irrigation became impossible, farmland was abandoned, and desert-like conditions developed. (Roy’s note: The early years had very few stations within 100 miles of Death Valley, and the temperature lapse rates I computed from those few stations appear to be biased as a result. I will correct this in a future blog post, and will provide what should be better estimates of average July daily maximum Death Valley temperatures.)

Also, in the early decades of the 20th century, thermometer shelters were (almost invariably) sited above grass. This resulted in very conservative (i.e., coolish) maximums at desert stations. Low humidities promoted cooling due to evapotranspiration effects. In the early decades of the 20th century, desert weather stations were generally in towns, amidst shade trees and lawns. The resulting maximum temperature reports were very conservative. By mid-century and thereafter, the town weather stations were more likely to be at the airport or at a municipal utility site, fire station or equipment yard. Grass cover and shade trees were usually absent at these locales. Today, desert weather stations in towns and cities are (almost invariably!) above bare ground.

You can imagine the difference in maximums between desert stations above oft-irrigated grass and those above bare ground. (Roy’s note: In my experience, unless the vegetation area is rather large, and there is almost no wind, a weather station’s daily maximum temperature will still be largely determined by air flowing from the larger-scale desert surroundings. But note… this is different from, say, a poorly sited thermometer next to a brick wall or heat pump, where hot air from an isolated source can elevate the daily maximum temperature recorded.)

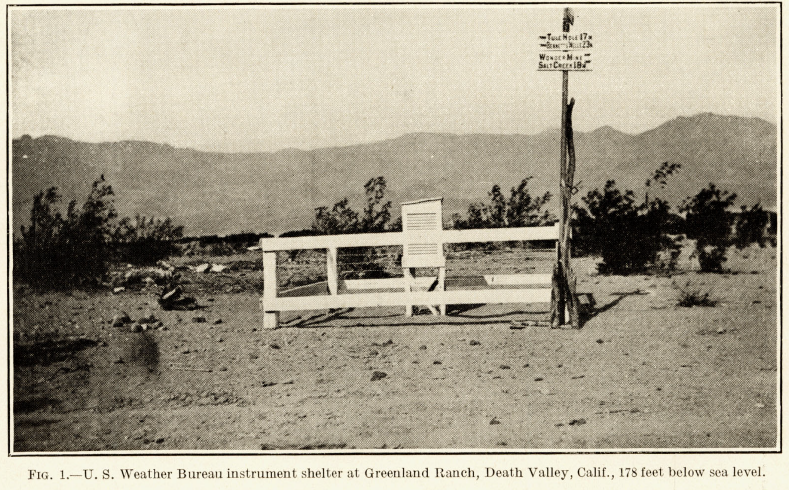

The Greenland Ranch station was originally sited above a patch of alfalfa grass, immediately adjacent to forty acres of cultivated and irrigated land.

It is my belief that the new observer in 1913 (Oscar Denton) was rather disillusioned with the conservative maximums from the official station above grass and next to the evaporatively-cooled farmland. I think he felt compelled to fudge the maximums upwards in 1913. Photographs of the Greenland Ranch weather station show that it was above bare ground by about 1920 (see example photo at top of post).


Death Valley World Record 134 deg. F is Biased ~10 deg. Too High

November 8th, 2024

Key Points:

- Over the years, a few commentators have argued that the world record highest temperature of 134 deg. F at Death Valley, CA recorded on July 10, 1913 is physically implausible.

- Here I show quantitatively that the 134 deg. F temperature is biased high, by about 10 deg. F.

- Extensive historical research by William T. Reid has suggested the person making the temperature observations at Greenland Ranch likely replaced the official measurements from a thermometer in a Stevenson screen shelter with other measurements made next to the adobe living quarters.

Update #1 (11/8/2024): Fixed a few typos.

Update #2 (11/8/2024): For those messaging me about Furnace Creek temperatures reaching 130 deg. F in recent years, see Bill Reid’s summary of side-by-side measurements made there [and reported at an AMS meeting] showing the non-aspirated “official” equipment produces temperatures 2-3 deg. too high during light wind conditions.

Update #3 (11/9/2024): Bill Reid corrected my use of the term “mountain” stations to describe those not in Death Valley. Many of those stations are at desert plateau sites, so I have changed the term to “higher elevation” stations. I have also asked Bill to provide additional thoughts on this issue, which will be the next blog post here.

Background

The “official” world record highest near-surface air temperature is 134 deg. F, recorded in Death Valley, California on July 10, 1913 at Greenland Ranch, an isolated location with no similarly sited stations for comparison (say, within 10 miles and below sea level). Greenland Ranch was a manmade oasis created by the Borax people around the turn of the 20th Century, with water piped in from a nearby mountain. It has a rich history, but always against a backdrop of oppressive summer heat that few visitors (or even workers) could endure.

As part of my new analyses of GHCN daily high and low temperatures, John Christy suggested I take a look at the Death Valley temperature data, and the record 134 deg. temperature in particular. Several people over the years have expressed concerns that the 134 deg. reading is implausibly high, but this has been difficult to prove. Both the World Meteorological Organization and NOAA’s National Weather Service continue to recognize 134 deg. F as the world record. It is quite likely that Death Valley remains the hottest location in North America; it’s the world record value that is in question here.

The most extensive meteorological and historical arguments against the record I’m aware of come from a series of blog posts by storm chaser, climatologist, and weather observer William T. Reid, re-posted at Weather Underground here, which I did not read until after I did the calculations which follow. I have since read some of what Bill has written, and I encourage anyone with an interest in history to read his extensive summaries (along with old photos) of Greenland Ranch, where the world record temperature was recorded. He has done considerable library research, and he found a letter from the ranch foreman expressing disappointment that the measurements from the instrumented shelter provided by the U.S. Weather Bureau in 1911 were so much lower than what he measured under his veranda. Bill suspects (and I agree) that the reported values for some period of time might well not have been from the instrumented shelter.

The method I will use to demonstrate the near-certainty of a high bias was also included in a limited fashion in Reid’s blog post (which contains a variety of meteorological arguments). I will apply the method to all years since 1911, and will show that the 134 deg. F record was approximately 10 deg. higher than what it should have been. The analyses I present are based upon the NOAA GHCN daily temperatures, with basic NOAA quality control procedures applied, thus they are from an “official” source. The GHCN dataset includes the 134 deg. F record high temperature from Death Valley.

How Can One Quality-Check the World Record Highest Temperature?

The main reason that the world record hottest temperature cannot be easily “fact-checked” is that there were no other weather stations in Death Valley at the time, and Greenland Ranch, being below sea level, is routinely tens of degrees F hotter than the higher-elevation stations, which are tens of miles away and thousands of feet higher.

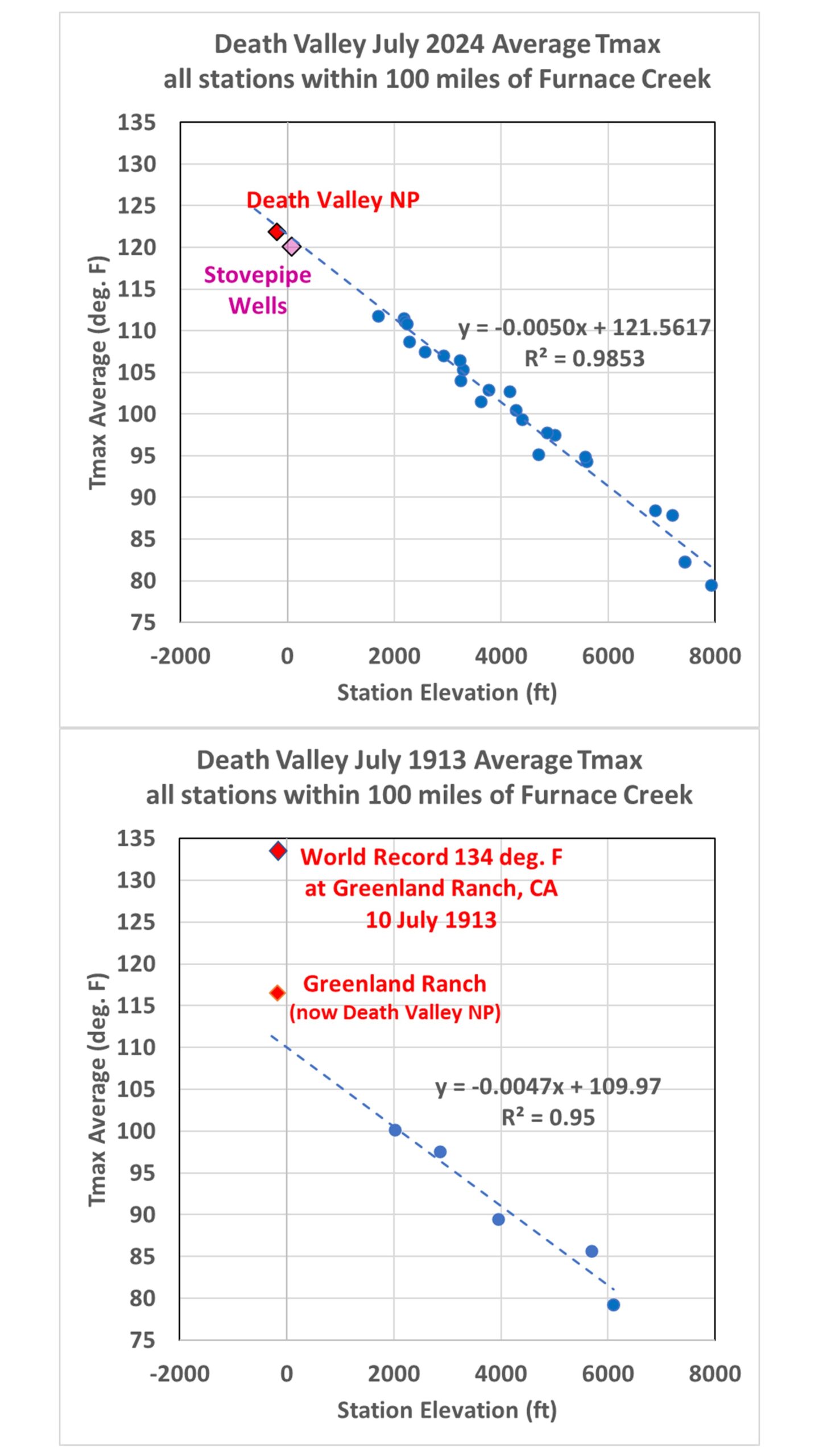

Yet, from a meteorological standpoint, Death Valley in the summer is the perfect place to quality-check those hot temperatures against more distant, higher-elevation stations. Before I explain the reasons why, let’s first look at how Death Valley air temperatures compare to higher-elevation stations during July of this year (2024, which had near-record high temperatures), as well as during July of 1913. Following is a plot (Fig. 1) of July-average high temperatures (Tmax) for all stations within 100 miles of the Furnace Creek station (previously “Greenland Ranch”, and today called Death Valley National Park [NP] station). Importantly, I’m plotting these average temperatures versus station elevation:

Fig. 1. July average maximum temperatures at stations within 100 miles of Furnace Creek, CA in 2024 (top) and 1913 (bottom), plotted against station elevation. The regression lines are fit to all stations except those near Greenland Ranch/Furnace Creek/Death Valley N.P.

Note the strong relationship between station elevation and temperature in the 2024 data (top), something William Reid also noted. Significantly, the two lowest-elevation stations (in Death Valley) in 2024 have temperatures which are very close to the regression line that relates how the July-average high temperatures vary with station altitude (the two Death Valley stations, located below sea level, are not included in the regression). But in 1913 (bottom plot), the Greenland Ranch value departs substantially from what would be expected from the surrounding stations.

Regression lines like those in Fig. 1 can be computed in the other years, too, and used to statistically estimate what temperature the Furnace Creek station in Death Valley should have measured, based upon the surrounding, higher-altitude stations. This method allows us to estimate the Death Valley temperatures in each year, which is shown next in Fig. 2. (Bill Reid correctly points out that in the plots which follow, the results in the early years are from very few stations, and I agree that I should probably have used regression-derived lapse rates averaged from other years with more stations.)

Fig. 2. Yearly estimates of July average temperature at Furnace Creek, CA based upon all available GHCN stations within 100 miles of Furnace Creek. Each yearly estimate is based upon the station data from that year. The indicated estimate on July 10, 1913 (the date of the world record 134 deg. F reported reading) is 10 deg. F cooler than the world record, at 124 deg. F. This 1-day estimate is based only upon the surrounding stations’ readings on that date. Note that the 2024 value is about 0.5 deg. F above the 1931 value, and that urban heat island effects (not accounted for here) might have biased the latter half of the record to the warm side.

Meteorological Justification for the Methodology

There are solid meteorological reasons why one can use fairly distant, higher-elevation stations to check Death Valley temperatures in July. (Remember, my formal training is as a meteorologist… I only work in climate because it pays better. I actually took some of the temperature measurements contained in the GHCN daily dataset during summers in the late 1970s when I interned at the National Weather Service Office in Sault Ste. Marie, Michigan).

In simple terms, daytime temperatures during the warm season in dry, semi-desert or desert climates vary with altitude in a predictable and repeatable manner, and with little change over substantial distances. Evidence of this is shown in Fig. 1. This is much less true during the cool season, at night, or during cloudy (or even rainy) weather. This makes Death Valley in July one of the best places on Earth for fact-checking of very warm daytime temperature values. This applies very well to the southwestern U.S. in the summer (at least before monsoon rains arrive), where a semi-permanent high pressure ridge in May-July gets set up every year, with slowly subsiding (sinking) air producing mostly clear skies. This kind of weather feature has a large and uniform regional extent (unlike low pressure troughs, which can be sharp with strong horizontal temperature changes). This is related to something called the “Rossby radius of deformation“.

Put another way, the warm, high pressure airmasses that settle in over the SW U.S. in July are spatially uniform, with strong daytime vertical mixing producing temperature lapse rates approaching the dry adiabatic value. This allows temperatures to be compared between stations up to (for example) 100 miles apart. The big differences in temperature between neighboring stations, then, are primarily due to altitude. Daytime temperatures in the summer in dry climates decrease rapidly with height (see Fig. 1), providing perfect meteorological conditions for the kind of comparison I’m describing here.

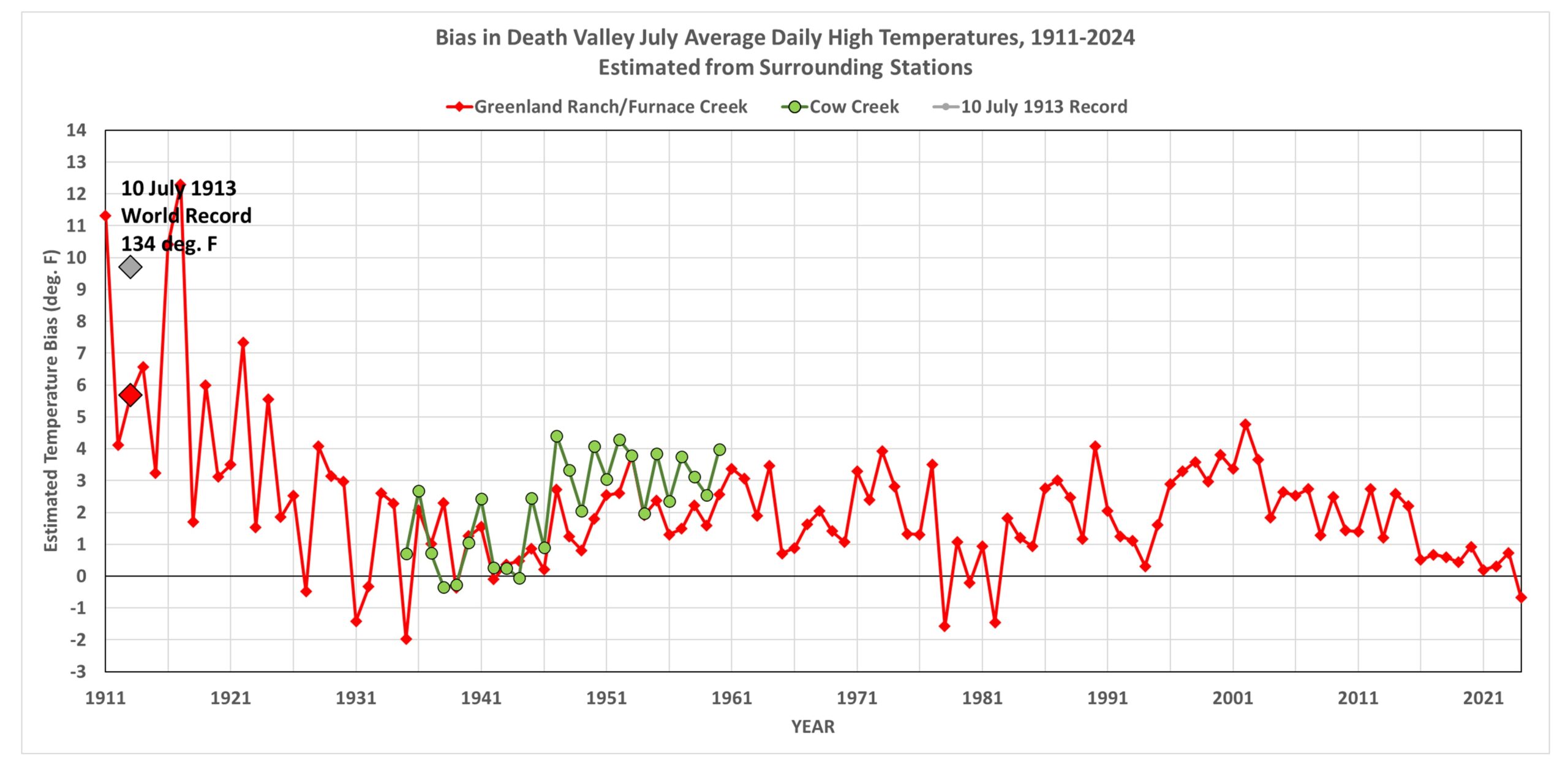

Estimated July Biases in the Greenland Ranch/Furnace Creek/Death Valley N.P. Record

Finally, we can examine the difference between the reported July average temperatures in Death Valley from the GHCN data and the estimates from the surrounding stations (Fig. 3).

Fig. 3. Estimated biases in the official GHCN July-average temperatures in Death Valley, CA based upon comparison to Death Valley estimates made from all stations within 100 miles of Furnace Creek. The 1-2 deg. F average bias over most of the record might well not be an actual bias in the official measurements, but instead a bias in the method described. UPDATE: I will be updating Figs. 2 and 3 in a future blog post; I need to use more realistic lapse rate values in the early 20th Century when there were few stations with which to compute the lapse rate.

Note the substantial warm biases in the temperatures reported at Greenland Ranch in the first 10-15 years after the USWB installed the instrumented shelter. Again, as Bill Reid surmises, the ranch foreman was likely reporting values from a different thermometer that had poor exposure, next to a building. Also shown in green are data from a nearby station (5 miles away, at essentially the same elevation) called Cow Creek. Note that in the most recent decade, the Death Valley temperature estimates from surrounding stations agree with those measured at the current Death Valley N.P. observation site.

[I have not looked at how increasing urban heat island effects would change the details in Figs. 1-3. To the extent that urbanization has made the higher-elevation stations warmer with time (I doubt urbanization is an issue at Furnace Creek), the method’s estimates will be correspondingly too warm. That would cause a spurious upward trend in Fig. 2 and reduce the computed biases in Fig. 3 over time, which is what we see.]

Conclusion

The bottom line is that I believe there to be sufficient quantitative evidence to say that the 134 deg. F world record hottest temperature, still recognized by the WMO and NWS, is as much as 10 deg. F too warm, likely due to observer error (which might well have been intentional). Again, for those interested in the history of Greenland Ranch (which includes the stories of those who died trying to escape the oppressive summer heat), read the fascinating history uncovered by Bill Reid, starting here.


Urban Legends of Climate Change: Palm Springs, California

November 5th, 2024

This is the first of what will likely be a series of posts regarding urban heat island (UHI) effects in daily record high temperatures. My previous UHI work used GHCN monthly average station data of “Tavg” (the average of the daily maximum [Tmax] and minimum [Tmin] temperatures). So, I’m moving from Tavg to Tmax (since record high temperatures are of so much interest), and from monthly to daily values (although I will also sometimes include monthly results to provide context).

This post is mostly a teaser. Toward the end I will describe a new dimension to our UHI work I’m just starting.

The 2024 Poster Child for U.S. Warming: Palm Springs, CA

I was guided by a Google search on U.S. record high temperatures for 2024, and it seems Palm Springs, California was the place to start.

With a name like “Palm Springs” this place sounds like a wonderful spot to lounge under palm trees and enjoy the cool, refreshing spring water that surrounds you. Instead, the location is largely a desert, with the original downtown spring spitting out 26 gallons a minute of hot water. The “palms” do exist… they are “desert palms”, naturally growing in clusters where groundwater from mountain snowmelt seeps up through fissures connected to the San Andreas fault.

Like all U.S. metropolitan areas, the population growth at Palm Springs in the last 100+ years has been rapid. Even in the last 50 years the population has nearly doubled. Natural desert surfaces have been replaced with pavement and rooftops, which reach higher temperatures than the original desert soil, and the “impervious” nature of artificial surfaces (little air content) means the heat is conducted downward, leading to long-term storage of excess heat energy and, on average, higher temperatures. More on “impervious” surfaces later….

The Palm Springs Airport Weather Observation Site

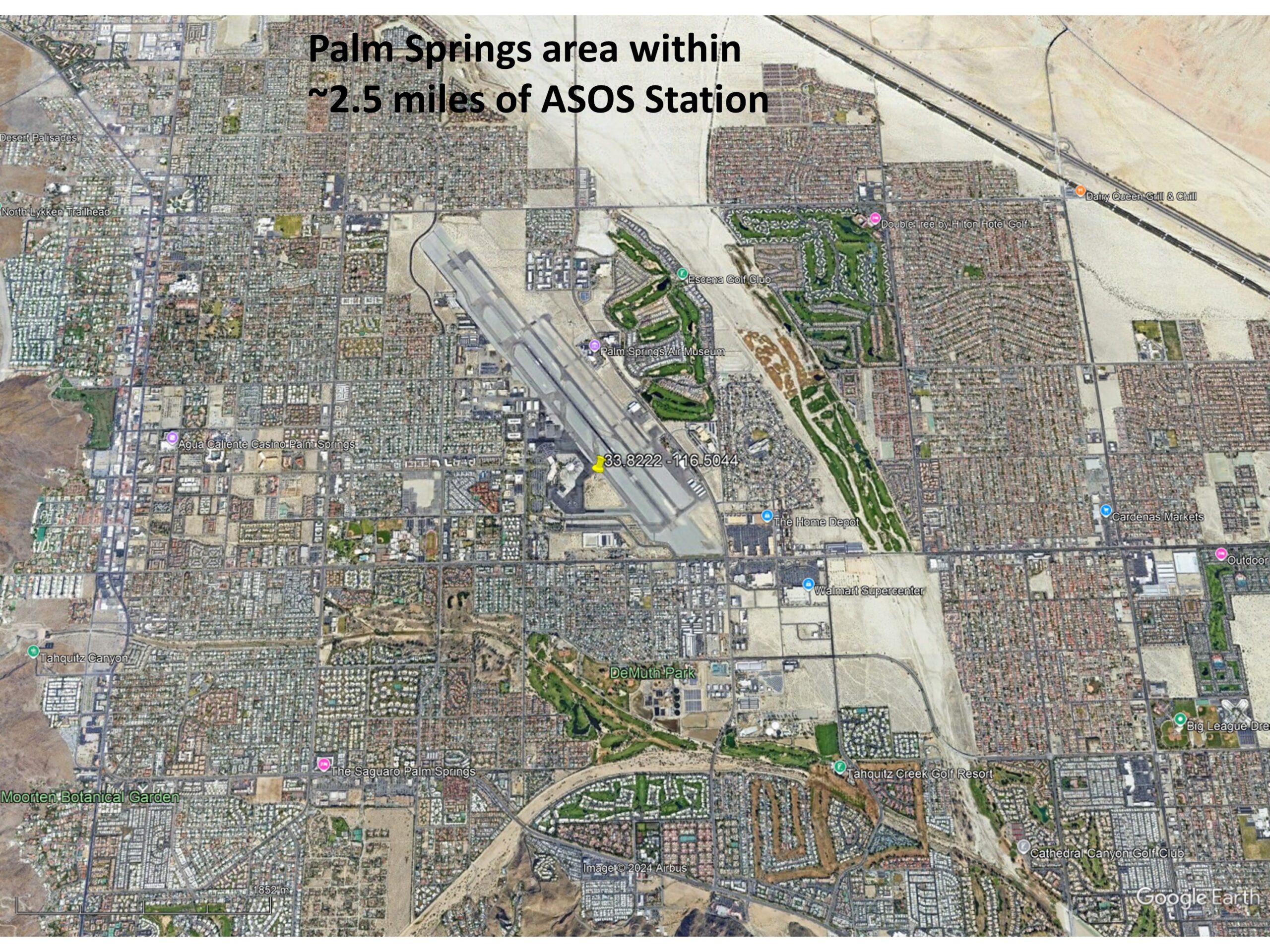

The following Google Earth image shows the current location of the official ASOS (Automated Surface Observing System) site at the Palm Springs Airport, which recorded an all-time record high temperature of 124 deg. F on July 5 of this year.

What is somewhat amusing is that ASOS meteorological instrument siting guidance favors natural surfaces for placement, but since most of these weather stations are at airports (and since they primarily support aviation weather needs, not climate monitoring needs), the “natural” location is usually right next to runways, aircraft, and paved roads.

The next Google Earth image is zoomed out to show the greater Palm Springs area, with the ASOS site in the center.

Record July Temperatures and Urbanization

It only makes sense that people want to know the temperature where they live, and most of the U.S. population resides in urban or suburban locations. Yet, the temperatures they experience are, probably without exception, higher than before people moved there and started building roads, buildings, and airports.

But what is misleading for those following the global warming narrative is that reports of record high temperatures at these locations almost always mention climate change as a cause, yet those reporting them have no way of knowing how much urbanization has contributed to those records. (Remember, even without global warming, high temperature records will continue to be broken as urbanization increases).

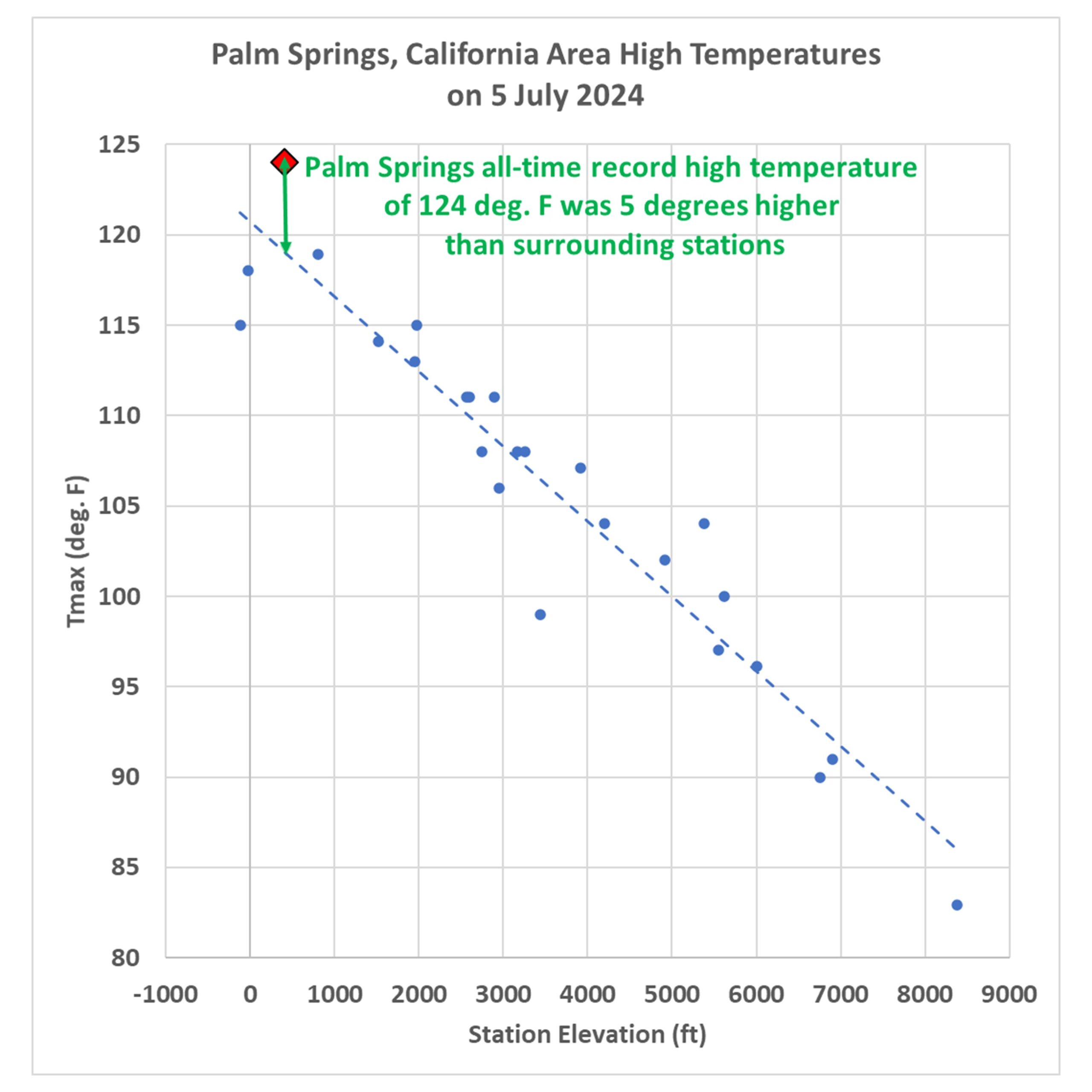

As mentioned above, on July 5, 2024 Palm Springs broke its all-time high temperature record, reaching 124 deg. F. There are 26 other daily GHCN stations within 40 miles of Palm Springs, all with varying levels of urbanization but, even more importantly, at very different elevations. If we plot the high temperatures reported for July 5 at those stations as a function of station elevation, we see that Palm Springs is an “outlier”, 5 deg. F warmer than expected for its elevation (the dashed regression line):

Now, keep in mind that many (if not most) of those 26 surrounding stations have their own levels of urbanization, making them hotter than they would be in the absence of pavement and roofs. So, that 5 deg. F excess is likely an underestimate of how much urban warming contributed to the Palm Springs record high temperature. Palm Springs was incorporated in 1938, and most population growth there has been since World War II.

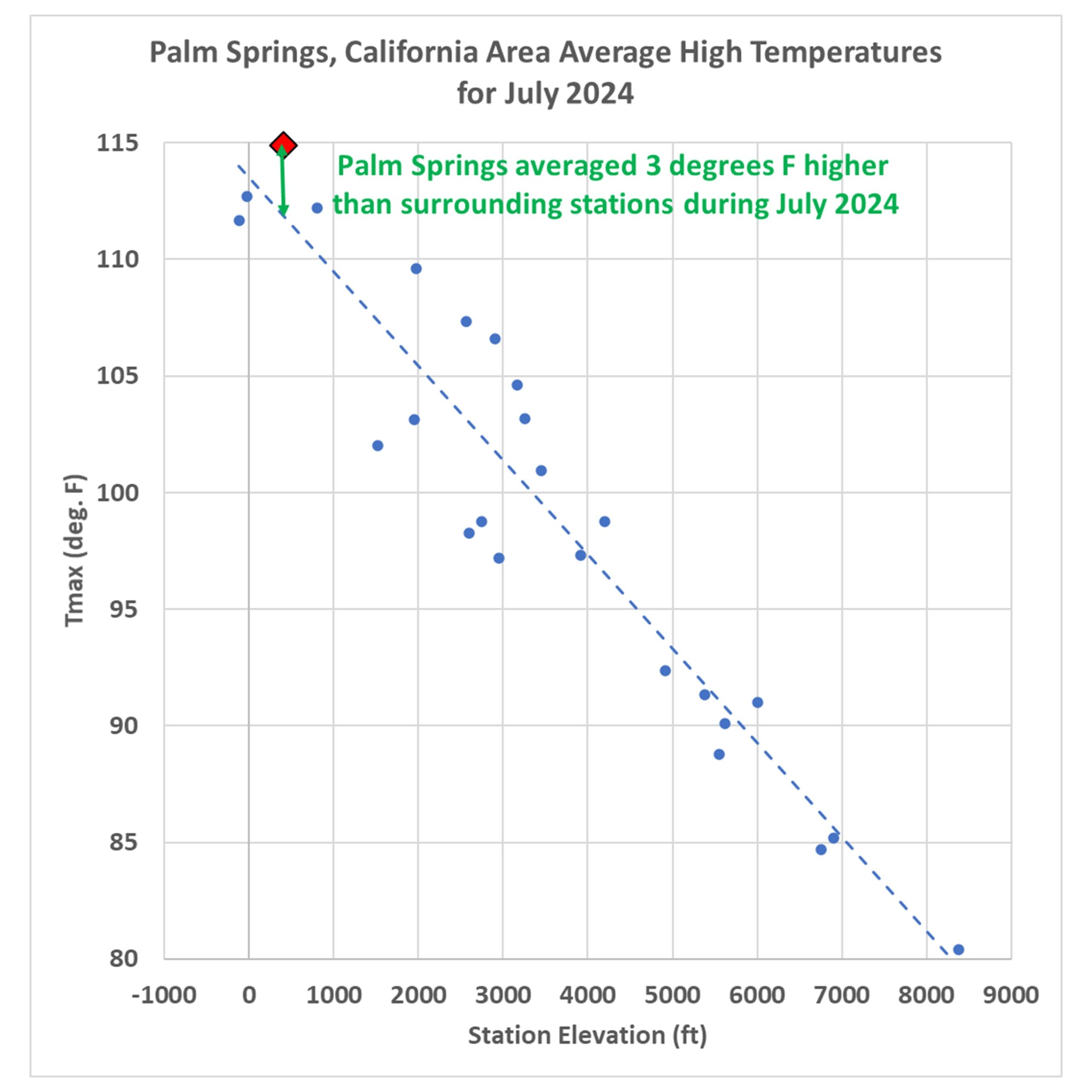

If you are curious how the previous plot looks for the average of all July temperatures, here it is:

For the month of July, Palm Springs averaged 3 deg. F warmer than the surrounding stations (after adjusting for elevation effects, and keeping in mind that most of the *other* stations likely have their own levels of urbanization).

Clearly, Palm Springs has had spurious warming influence from the airport and surrounding urbanization which did not exist 100 years ago. But how much?

Impervious Surface Data as a Surrogate for Urbanization

This blog post is a prelude to a new project we’ve started where we will compare daily (as well as monthly) temperatures to a relatively new USGS dataset of yearly impervious surface coverage from 1985 to 2023, based upon Landsat data. I had previously experimented with a “Built Up” dataset based upon Landsat data, but it turns out that was just buildings. The “impervious surface” dataset is what I believe will have the greatest direct physical connection to what causes most UHI warming: roads, parking lots, roofs, etc. I think this will produce more accurate results (despite being only ~40 years in length) than my population density work (which is, we hope, close to being accepted for publication).

Posted in Blog Article | 52 Comments »

UAH v6.1 Global Temperature Update for October, 2024: +0.73 deg. C After Truncation of the NOAA-19 Satellite Record

November 2nd, 2024

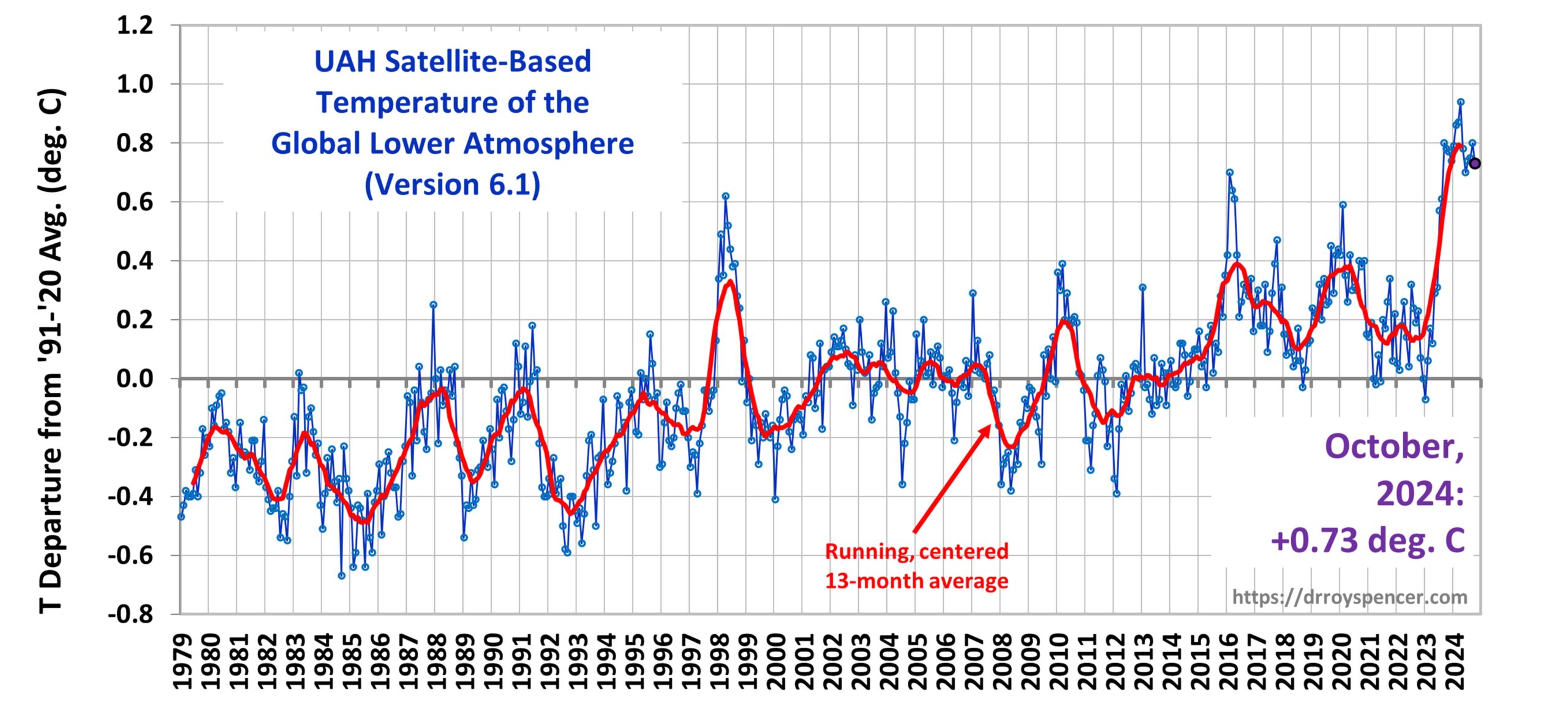

The Version 6.1 global average lower tropospheric temperature (LT) anomaly for October, 2024 was +0.73 deg. C departure from the 1991-2020 mean, down from the September, 2024 anomaly of +0.80 deg. C.

The new (Version 6.1) global area-averaged temperature trend (January 1979 through October 2024) is now +0.15 C/decade (+0.21 C/decade over land, +0.13 C/decade over oceans).

The previous (version 6.0) trends through September 2024 were +0.16 C/decade (global), +0.21 C/decade (land) and +0.14 C/decade (ocean).

The following provides background for the change leading to the new version (v6.1) of the UAH dataset.

NOTE: Snide comments which suggest someone has not read (and understood) that which follows will lead to comment privileges being revoked.

Key Points

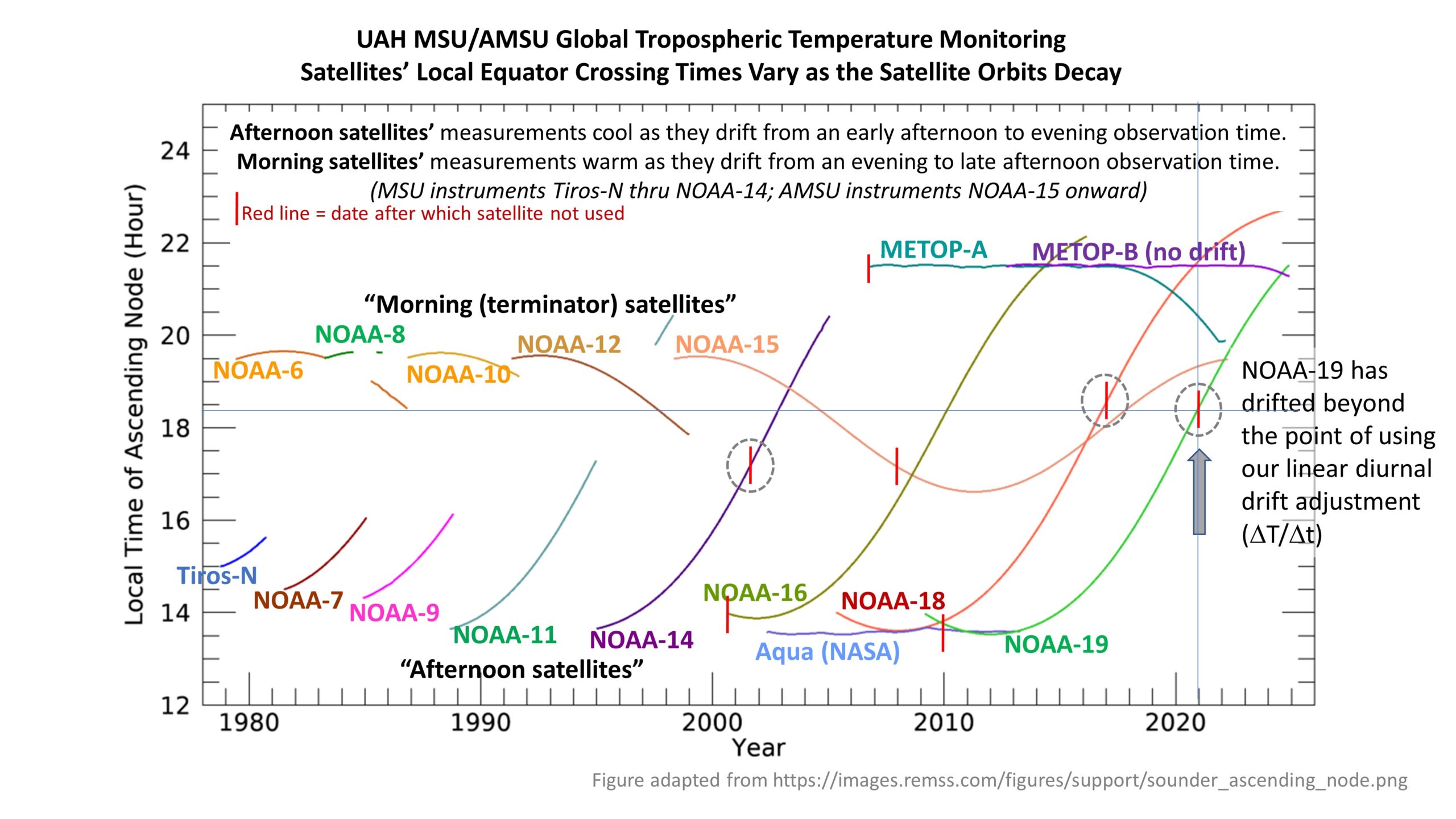

- The older NOAA-19 satellite has now drifted too far through the diurnal cycle for our drift correction methodology to provide useful adjustments. Therefore, we have decided to truncate the NOAA-19 data processing starting in 2021. This leaves Metop-B as the only satellite in the UAH dataset since that date. This truncation is consistent with those made to previous satellites after orbital drift began to impact temperature measurements.

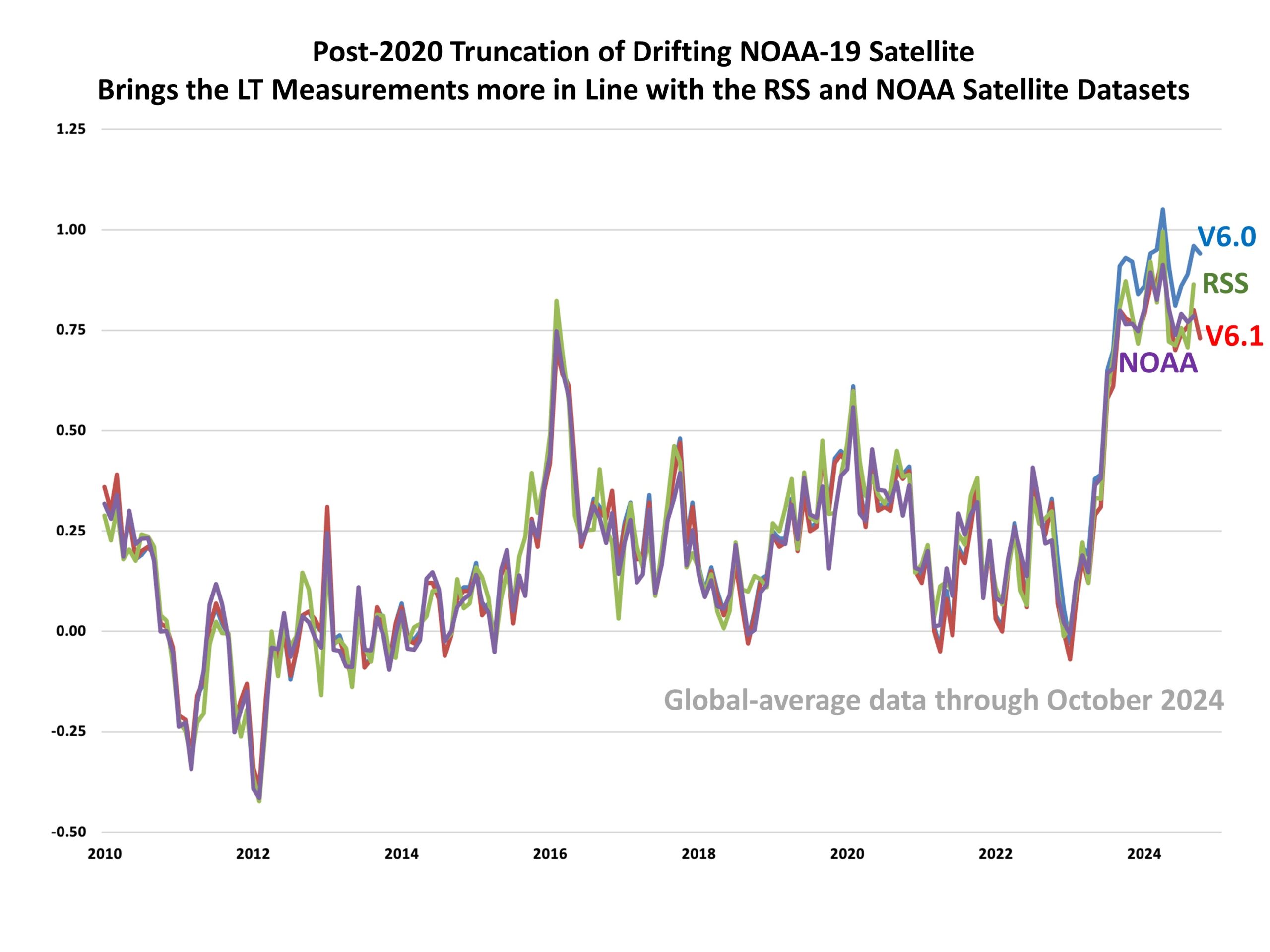

- This change reduces recent record global warmth only a little, bringing our calculated global temperatures more in line with the RSS and NOAA satellite datasets over the last 2-3 years.

- Despite the reduction in recent temperatures, the 1979-2024 trend is reduced by only 0.01 C/decade, from +0.16 C/decade to +0.15 C/decade. Recent warmth during 2023-2024 remains record-setting for the satellite era, with each month since October 2023 setting a record for that calendar month.

Background

Monitoring of global atmospheric deep-layer temperatures with satellite microwave radiometers (systems originally designed for daily global weather monitoring) has always required corrections and adjustments to the calibrated data to enable long-term trend detection. The most important of these corrections/adjustments are:

- Satellite calibration biases, requiring intercalibration between successively launched satellites during overlaps in operational coverage. These adjustments are typically tenths of a degree C.

- Drift of the orbits from their nominal sun-synchronous observation times, requiring empirical corrections from comparison of a drifting satellite to a non-drifting satellite (the UAH method), or from climate models (the Remote Sensing Systems [RSS] method, which I believe the NOAA dataset also uses). These corrections can reach 1 deg. C or more for the lower tropospheric (LT) temperature product, especially over land and during the summer.

- Correction for instrument body temperature effects on the calibrated temperature (an issue with only the older MSU instruments, which produced spurious warming).

- Orbital altitude decay adjustment for the multi-view angle version of the lower tropospheric (LT) product (no longer needed for the UAH dataset as of V6.0, which uses multiple channels instead of multiple angles from a single channel).

The second of these adjustments (diurnal drift) is the subject of the change made going from UAH v6.0 to v6.1. The following chart shows the equator crossing times (local solar time) for the various satellites making up the satellite temperature record. The drift of the satellites (except the non-drifting Aqua and MetOp satellites, which have fuel onboard to allow orbit maintenance) produces cooling for the afternoon satellites’ LT measurements as the afternoon observation transitions from early afternoon to evening. Drift of the morning satellites makes their LT temperatures warm as their evening observations transition to the late afternoon.

The red vertical lines indicate the dates after which a satellite’s data are no longer included in the v6.0 (UAH) processing, with the NOAA-19 truncation added for v6.1. Note that the NOAA-19 satellite has drifted further in local observation time than any of the previous afternoon satellites. The NOAA-19 local observation times have been running outside our training dataset which includes the assumption of a linear diurnal temperature drift with time. So we have decided it is now necessary to truncate the data from NOAA-19 starting in 2021, which we are now doing as of the October, 2024 update.

Thus begins Version 6.1 of our dataset, a name change meant to reduce confusion and indicate a significant change in our processing. As seen in the above figure, 2020 as the last year of NOAA-19 data inclusion is roughly consistent with the v6.0 cutoff times from the NOAA-18 and NOAA-14 (afternoon) satellites.

This type of change in our processing is analogous to changes we have made in previous years, after a few years of data being collected to firmly establish a problem exists. The time lag is necessary because we have previously found that two operating satellites in different orbits can diverge in their processed temperatures, only to converge again later. As will be shown below, we now have sufficient reason to truncate the NOAA-19 data record starting in 2021.

Why Do We Even Include a Satellite if it is Drifting in Local Observation Time?

The reasons why a diurnally drifting satellite is included in processing (with imperfect adjustments) are three-fold: (1) most satellites in the 1979-2024 period of record drifted, and so their inclusion was necessary to make a complete, intercalibrated satellite record of temperatures; (2) two operational satellites (usually one drifting much more than the other) provide more complete sampling during the month for our gridded dataset, which has 2.5 deg. lat/lon resolution; (3) having two (or sometimes 3) satellites allows monitoring of potential drifts, i.e., the time series of the difference between 2 satellite measurements should remain relatively stable over time.

Version 6.1 Brings the UAH Data closer to RSS and NOAA in the Last Few Years

Several people have noted that our temperature anomalies have been running warmer than those from the RSS or NOAA satellite products. It now appears this was due to the orbital drift of NOAA-19 beyond the useful range of our drift correction. The following plot (preliminary, provided to me by John Christy) shows that truncation of the NOAA-19 record now brings the UAH anomalies more in line with the RSS and NOAA products.

As can be seen, this change has lowered recent global-average temperatures considerably. For example, without truncation of NOAA-19, the October anomaly would have been +0.94 deg. C, but with only MetOp-B after 2020 it is now +0.73 deg. C.

The following table lists various regional Version 6.1 LT departures from the 30-year (1991-2020) average for the last 22 months (record highs are in red):

| YEAR | MO | GLOBE | NHEM. | SHEM. | TROPIC | USA48 | ARCTIC | AUST |
|---|---|---|---|---|---|---|---|---|
| 2023 | Jan | -0.07 | +0.06 | -0.21 | -0.42 | +0.14 | -0.11 | -0.45 |
| 2023 | Feb | +0.06 | +0.12 | +0.01 | -0.15 | +0.64 | -0.28 | +0.11 |
| 2023 | Mar | +0.17 | +0.21 | +0.14 | -0.18 | -1.35 | +0.15 | +0.57 |
| 2023 | Apr | +0.12 | +0.04 | +0.20 | -0.10 | -0.43 | +0.46 | +0.38 |
| 2023 | May | +0.29 | +0.16 | +0.42 | +0.33 | +0.38 | +0.54 | +0.13 |
| 2023 | Jun | +0.31 | +0.34 | +0.28 | +0.51 | -0.54 | +0.32 | +0.24 |
| 2023 | Jul | +0.57 | +0.60 | +0.55 | +0.83 | +0.28 | +0.81 | +1.49 |
| 2023 | Aug | +0.61 | +0.77 | +0.44 | +0.77 | +0.69 | +1.49 | +1.29 |
| 2023 | Sep | +0.80 | +0.83 | +0.77 | +0.82 | +0.28 | +1.12 | +1.15 |
| 2023 | Oct | +0.78 | +0.84 | +0.72 | +0.84 | +0.81 | +0.81 | +0.56 |
| 2023 | Nov | +0.77 | +0.87 | +0.67 | +0.87 | +0.52 | +1.07 | +0.28 |
| 2023 | Dec | +0.74 | +0.91 | +0.57 | +1.00 | +1.23 | +0.31 | +0.64 |
| 2024 | Jan | +0.79 | +1.01 | +0.57 | +1.18 | -0.19 | +0.39 | +1.10 |
| 2024 | Feb | +0.86 | +0.93 | +0.79 | +1.14 | +1.30 | +0.84 | +1.14 |
| 2024 | Mar | +0.87 | +0.95 | +0.80 | +1.24 | +0.23 | +1.05 | +1.27 |
| 2024 | Apr | +0.94 | +1.12 | +0.76 | +1.14 | +0.87 | +0.89 | +0.51 |
| 2024 | May | +0.78 | +0.78 | +0.79 | +1.20 | +0.06 | +0.23 | +0.53 |
| 2024 | Jun | +0.70 | +0.78 | +0.61 | +0.85 | +1.38 | +0.65 | +0.92 |
| 2024 | Jul | +0.74 | +0.86 | +0.62 | +0.97 | +0.42 | +0.58 | -0.13 |
| 2024 | Aug | +0.75 | +0.81 | +0.69 | +0.73 | +0.38 | +0.90 | +1.73 |
| 2024 | Sep | +0.80 | +1.03 | +0.56 | +0.80 | +1.28 | +1.49 | +0.96 |
| 2024 | Oct | +0.73 | +0.87 | +0.59 | +0.61 | +1.84 | +0.81 | +1.07 |

The full UAH Global Temperature Report, along with the LT global gridpoint anomaly image for October, 2024, and a more detailed analysis by John Christy, should be available within the next several days here. This could take a little longer this time due to the changes resulting from going from v6.0 to v6.1 of the dataset.

The monthly anomalies for various regions for the four deep layers we monitor from satellites will be available in the next several days (also possibly delayed):

Lower Troposphere:

http://vortex.nsstc.uah.edu/data/msu/v6.1/tlt/uahncdc_lt_6.1.txt

Mid-Troposphere:

http://vortex.nsstc.uah.edu/data/msu/v6.1/tmt/uahncdc_mt_6.1.txt

Tropopause:

http://vortex.nsstc.uah.edu/data/msu/v6.1/ttp/uahncdc_tp_6.1.txt

Lower Stratosphere:

http://vortex.nsstc.uah.edu/data/msu/v6.1/tls/uahncdc_ls_6.1.txt


A Demonstration: Low Correlations Do Not Necessarily Lead to Low Confidence in Data Regressions

October 28th, 2024

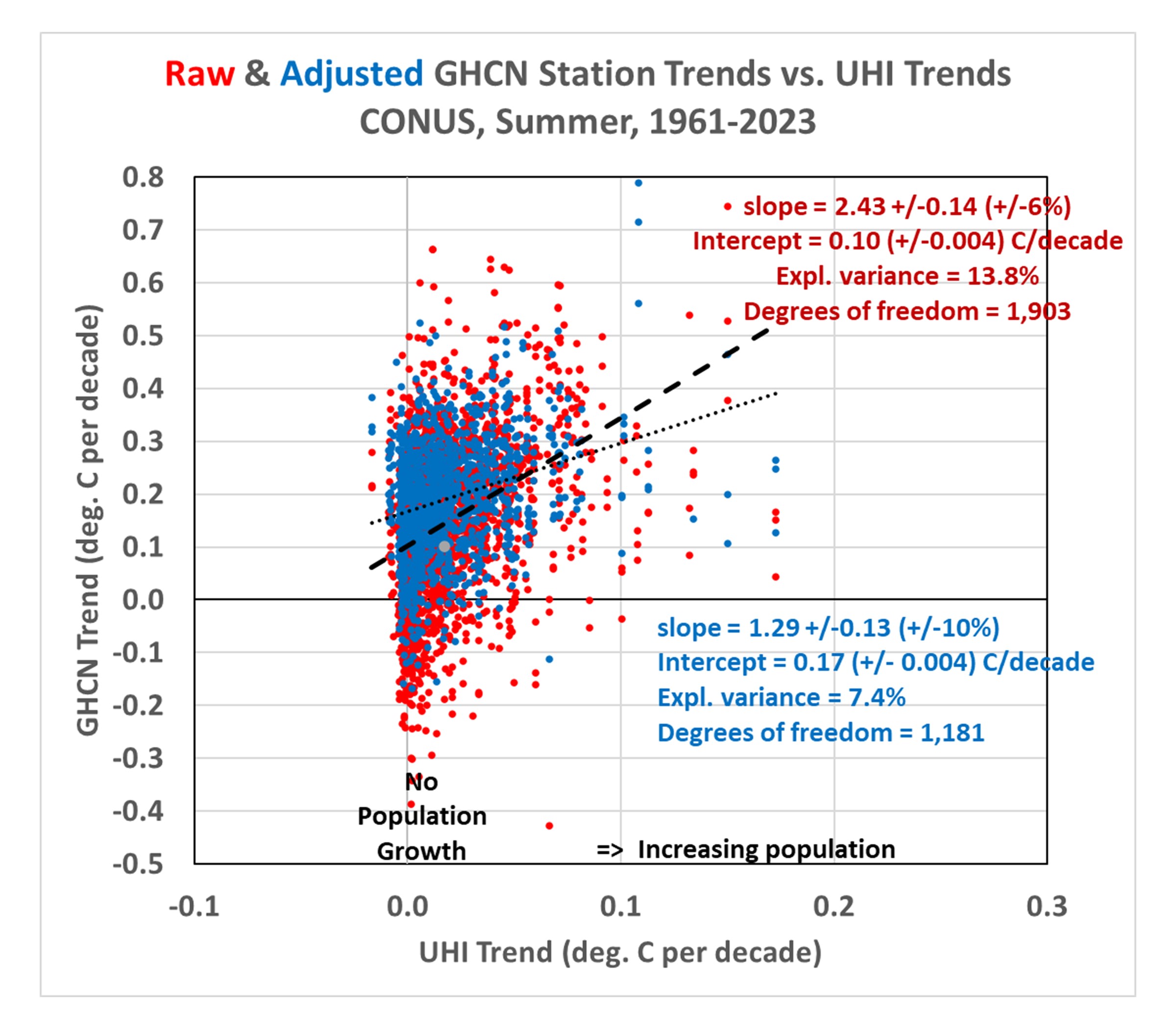

In a recent post I used our new Urban Heat Island (UHI) warming estimates at individual U.S. GHCN stations having at least 120 years of data to demonstrate that the homogenized (adjusted) GHCN data still contain substantial UHI effects. Therefore, spurious warming from UHI effects is inflating reported U.S. warming trends.

The data plots I presented had considerable scatter, though, leading to concerns that there is large uncertainty in my quantitative estimates of how much UHI warming remains in the GHCN data. So, I updated that post to include additional statistics of the regressions.

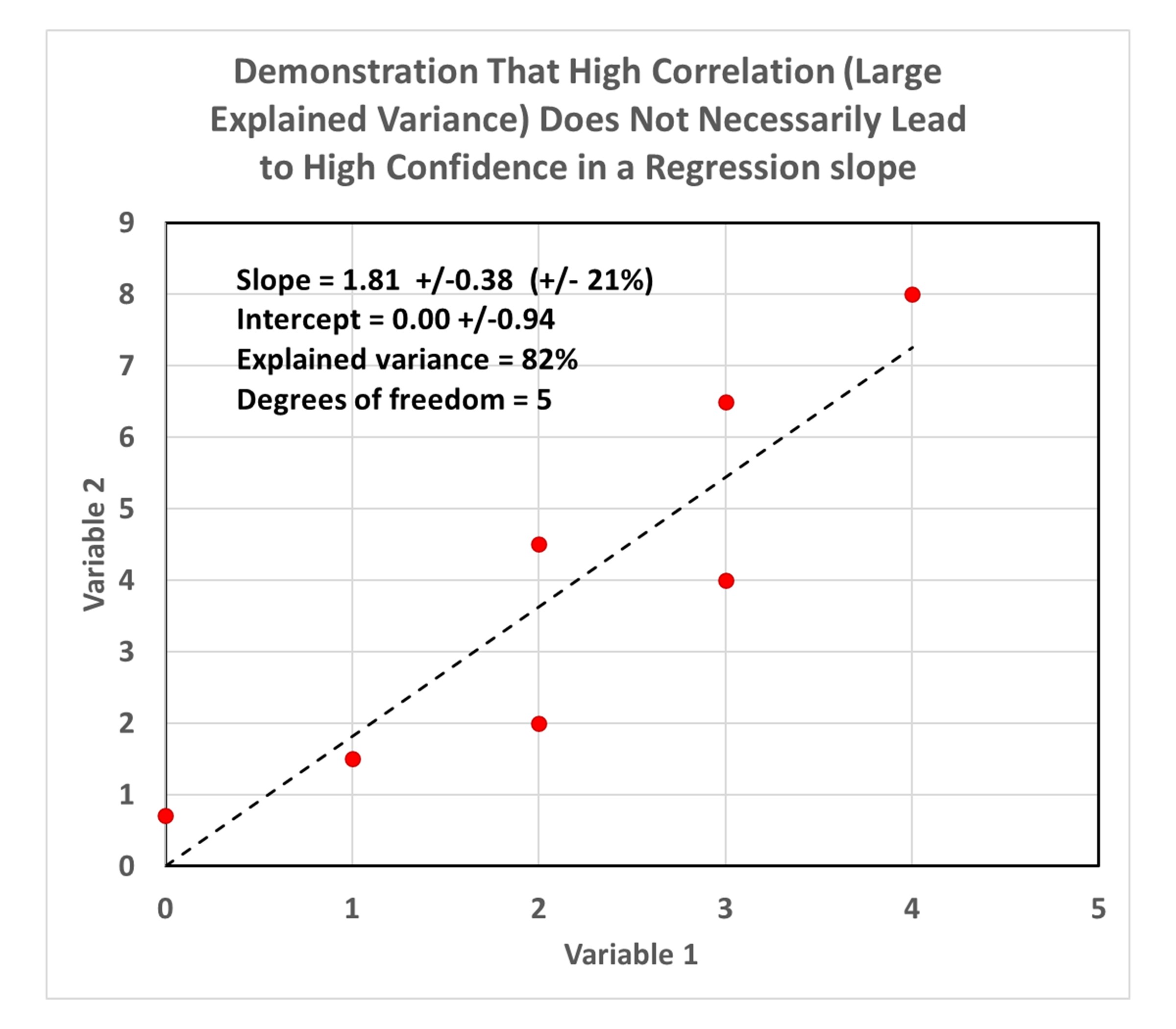

A Simple Example: High Correlation, But Low Confidence In the Regression Slope

The following plot of a small amount of data I created shows what looks like a pretty strong linear relationship between 2 variables, with a regression explained variance of 82% (correlation coefficient of 0.91).

But because there are so few data points, there is large statistical uncertainty in the resulting diagnosed regression slope (21% uncertainty), as well as the regression intercept (which is diagnosed as 0.0, but with an uncertainty of +/- 0.94).

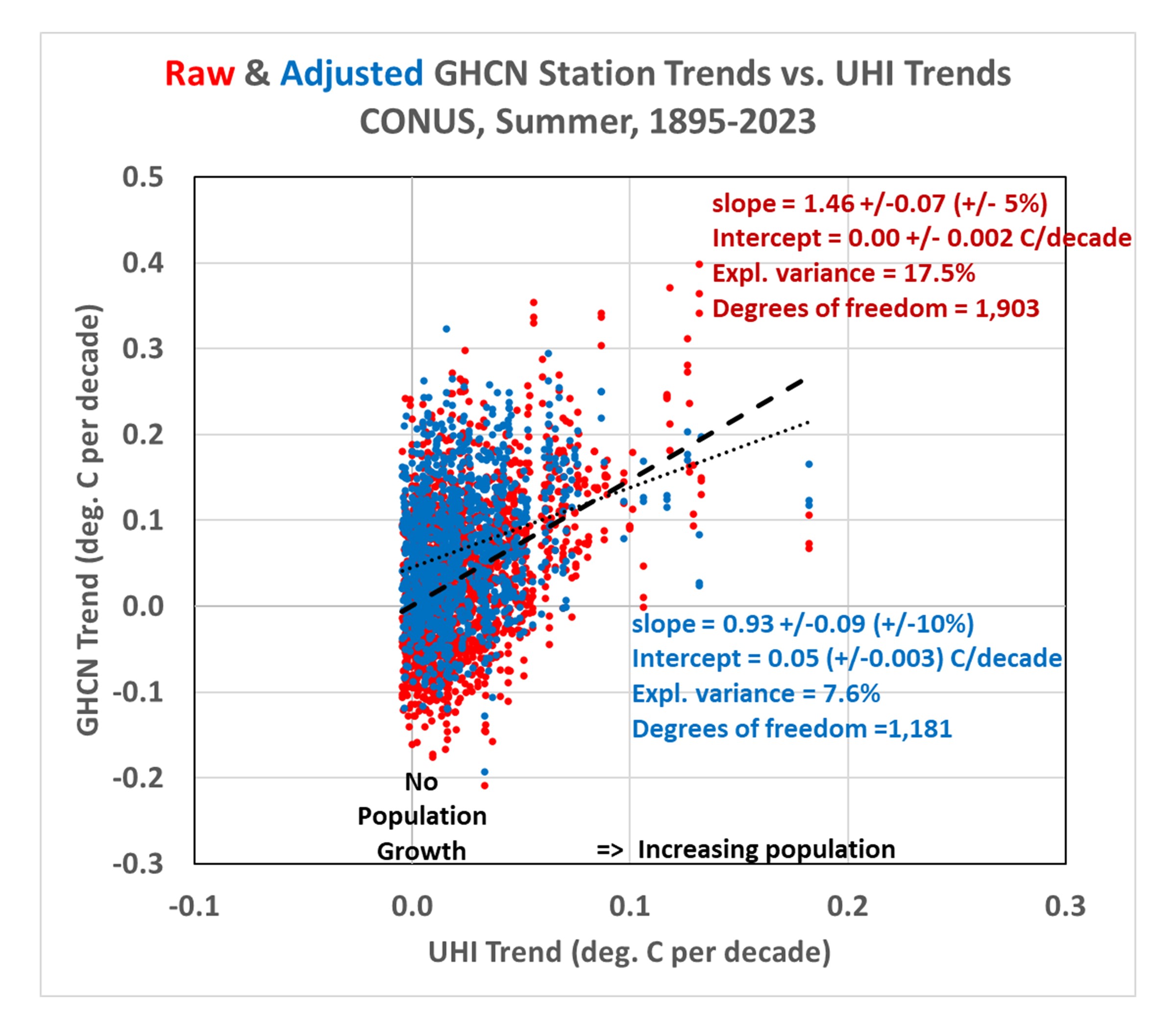

Now let’s look at the third data plot from my previous blog post, which demonstrated that there is UHI warming in not only the raw GHCN data, but in the homogenized data as well:

Importantly, even though the regression explained variances are rather low (17.5% for the raw data, 7.6% for the adjusted data), the confidence in the regression slopes is quite high (+/-5% for the raw GHCN regressions, and +/-10% for the homogenized GHCN regressions). Confidence is also high in the regression intercepts (+/-0.002 C/decade for the raw GHCN data, +/-0.003 C/decade for the homogenized GHCN data).

Compare these to the first plot above containing very few data points, which had a very high explained variance (82%) but a rather uncertain regression slope (+/- 21%).

The points I was making in my previous blog post depended upon both the regression slopes and the regression intercepts. The positive slopes demonstrated that the greater the population growth at GHCN stations, the greater the warming trend… not only in the raw data, but in the homogenized data as well. The regression intercepts of zero indicated that the data, taken as a whole, suggested zero warming trend (1895-2023) if the stations had not experienced population growth.

But it must be emphasized that these are all-station averages for the U.S., not area averages. It is “possible” that there has (by chance) actually been more climate warming at the locations where there has been more population growth. So it would be premature to claim there has been no warming trend in the U.S. after taking into account spurious UHI warming effects. I also showed that there has been warming if we look at more recent data (1961-2023).

But the main point of this post is to demonstrate that low correlations between two dataset variables do not necessarily mean low confidence in regression slopes (and intercepts). The confidence intervals also depend upon how much data are contained in the dataset.


As Retirement Approaches…

October 26th, 2024

Since I’ve been getting questions about my retirement plans, I decided it’s time to address what I know so far.

John Christy will be retiring from UAH in July 2026. Because my funding has been tied to his projects (including the Alabama Office of the State Climatologist, which he heads), there is a good chance I will also be retiring on or before that date.

The main issue with my continuing employment past his retirement date is the lack of funding from the federal government. We had a Department of Energy contract, but it is ending, and we have very few friends in Washington since we remain on the “wrong side” of the science. The peer review process (which determines what proposals the government will fund) has been stacked against us for many years, making it almost impossible to get funded to investigate the issues we believe are important to the climate debate.

It’s a little ironic that even though both John and I are “lukewarmers”, that’s just not alarmist enough for us to be allowed to play in the climate sandbox with the big dogs (sorry for the mixed metaphor).

John and I still need to discuss how to keep the monthly satellite temperature updates going (if possible). There are three of us contributing to this effort. Danny Braswell (retired from UAH, but working part time) has been trying to get the newer ATMS instruments folded into our processing, but downloading the historical data is taking forever due to NOAA limitations on the number of files that can be requested on a daily basis. Also, much of the software had to be rewritten to handle the differences between the scan geometries of the AMSU and ATMS instruments. John Christy is a good planner, and I’m hopeful we can work out something to keep the global temperature updates going, keeping in mind none of us is getting any younger.

On that subject, I am often asked if there are new, young researchers who can take our place. The problem is that their careers depend upon getting those same federal contracts we depended upon. Unfortunately, any projects that smell like climate skepticism are generally not funded, and young researchers will likely hurt their careers if they are considered to be replacements for John or me.

It has been many years since we received funding specifically to support the global temperature monitoring effort. The Remote Sensing Systems satellite temperature monitoring effort has much more funding success due to (in my opinion) Frank Wentz’s long-term, close friendship with one of NASA’s top managers. It helps to have friends in high places.

I will keep everyone updated as I learn more. Personally, I would like to continue the work I have started (especially the urban heat island work) if possible. Staying working, even part-time, helps keep me sane… I need to keep my mind active.

But advancing any science that doesn’t support global warming being a “crisis” remains an uphill battle. Several months before his death, Rush Limbaugh told me he thought we were losing that battle. But I’m willing to continue to fight it, anyway. I’m old enough to remember when the Soviet Union was believed to be an ever-present danger to the world that would never end, and yet it imploded. Maybe one day climate alarmism will suffer the same fate.


Urban Heat Island Effects Have Not Yet Been Removed from Official GHCN Warming Trends

October 25th, 2024

UPDATE (28 Oct. 2024): In response to concerns regarding the large amount of scatter in the data plots presented below, and the claims I make based upon those regressions, I have replaced all plots with versions that include additional regression statistics. These statistics demonstrate high confidence in the regression slopes and intercepts. What many people don’t realize is that diagnosed regression slopes (and regression intercepts) can have high confidence despite low correlations (large scatter) if there are many data points. I will be posting a new article today showing an example of this.

Our paper (co-authored by John Christy and Danny Braswell) on computing the urban heat island (UHI) effect as a function of population density (PD) is now in the final stages of review after a 3rd round of edits, and I’m hopeful it will be accepted for publication soon. So far, I’ve only used Tavg data (the average of daily maximum and minimum temperatures) in developing and testing the method, and the paper uses only contiguous U.S. summertime data (June, July, August), which is what I will address here.

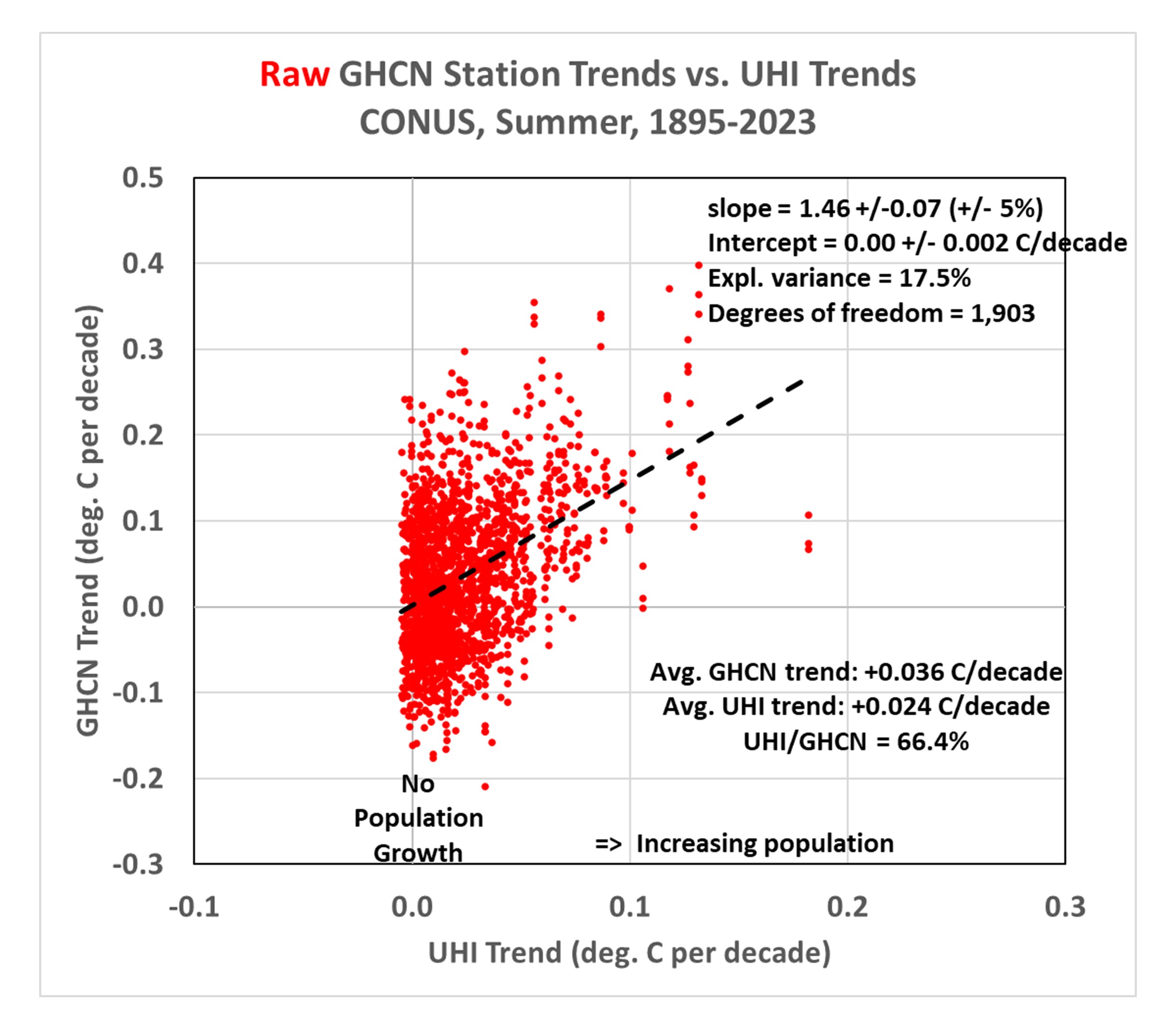

The method allows us to compute UHI trends using global gridded PD datasets that extend back to the 1800s. These UHI trends can then be compared to GHCN station temperature trends. If I do this for all U.S. GHCN stations having at least 120 years of complete monthly (June, July, or August) data out of 129 potential years during 1895-2023, the following plot shows some interesting results. (I begin with the “raw” data so we can then examine how homogenization changes the results.) Note the following plots have been updated to include regression statistics which demonstrate, despite low explained variances, the resulting regression slopes and intercepts have high confidence, due to the large number of data points (GHCN stations) contained in the plots.

- The greater a station’s population growth, the greater the observed warming trend. This is pretty convincing evidence that the raw GHCN data has substantial UHI effects impacting the computed trends (probably no surprise here). Note the UHI temperature trend averages 66% of the raw temperature trends.

- The regression line fit to the data intercepting zero shows that those stations with no population growth have, on average, no warming trend. While this might lead some to conclude there has been no net warming in the U.S. during 1895-2023, it must be remembered these are raw data, with no adjustments for time-of-observation (TOBS) changes or instrumentation type changes which might have biased most or all of the stations toward lower temperature trends.

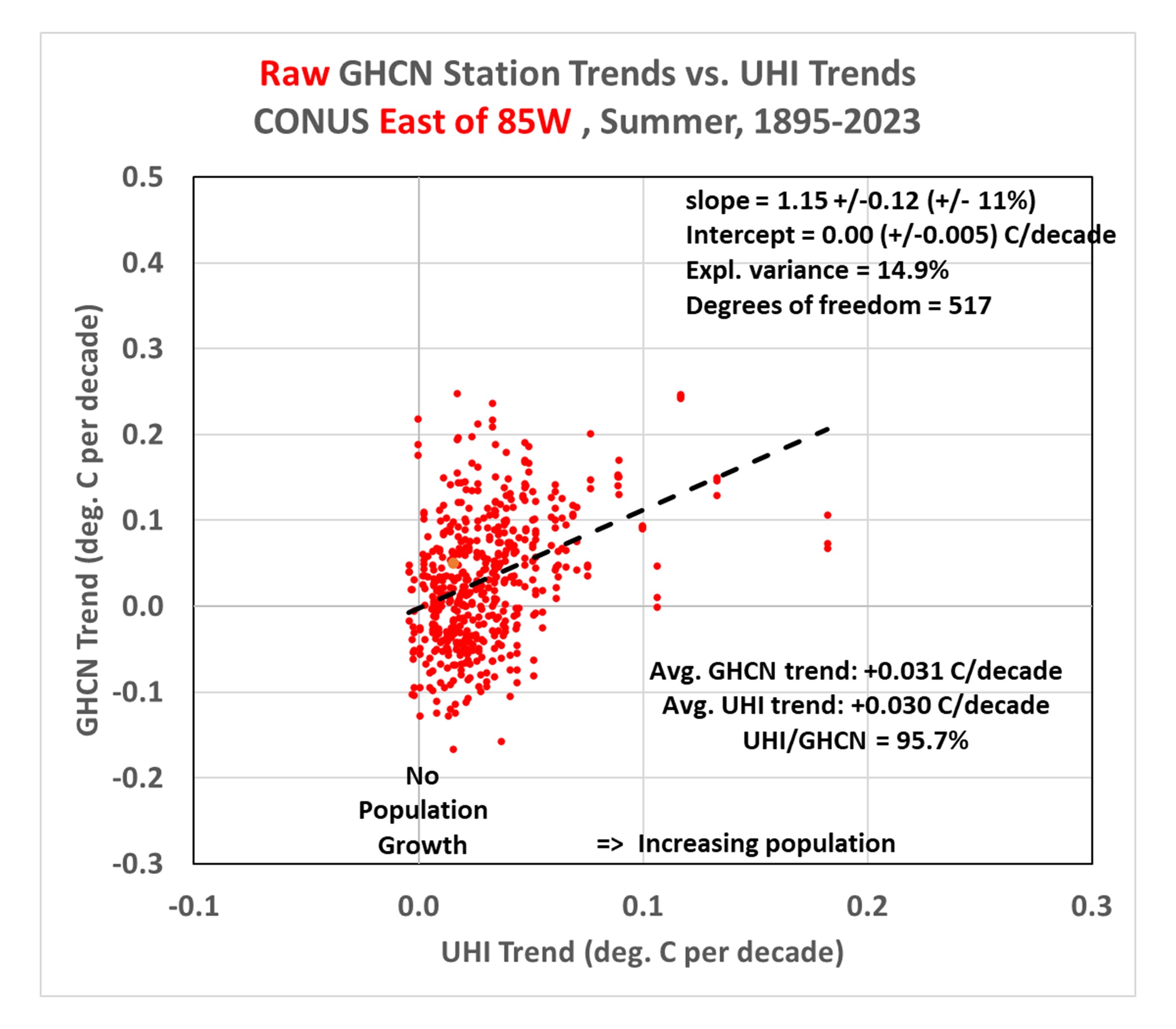

Since most of the rural stations (many of which have experienced little population growth) are in the western U.S., and there can be differences in actual temperature trends between the eastern and western U.S., let’s look at how things change if we examine just the eastern U.S. (Ohio to the Florida peninsula, eastward):

This shows the Eastern U.S. has features similar to the U.S. as a whole, with a regression line intercept of zero (again) indicating those stations with no population growth have (on average) no warming trend in the raw GHCN data. But now, amazingly, the average UHI trend is over 95% of the raw station trends (!) This would seemingly suggest essentially all of the reported warming during 1895-2023 over the eastern U.S. has been due to the urbanization effect… if there are no systematic biases in the raw Tavg data that would cause those trends to be biased low. Also, as will be discussed below, this is for the period 1895-2023… the results for more recent decades are somewhat different.

Homogenization of the GHCN Data Produces Some Strange Effects

Next, let’s look at how the adjusted (homogenized) GHCN temperature trends compare to the UHI warming trends. Recall that the Pairwise Homogenization Algorithm (PHA) used by NOAA to create the “adjusted” GHCN dataset (which is the basis for official temperature statistics coming from the government) identifies and adjusts for step-changes in time at individual stations by comparing their temperature time series to the time series from surrounding stations. If we plot the adjusted data trend along with the raw data trends, the following figure shows some curious changes.

Here’s what homogenization has done to the raw temperature data:

- Stations with no population growth (that had on average no warming trend) now have a warming trend. I can’t explain this. It might be the “urban blending” artifact of the PHA algorithm discussed by Katata et al. (2023, and references therein) whereby homogenization doesn’t adjust urban stations to “look like” rural stations, but instead tends to smooth out the differences between neighboring stations, causing a “bleeding” of urban effects into the rural stations.

- Stations with large population growth have had their warming trends reduced. This is the intended effect of homogenization.

- There still exists a UHI effect in the homogenized trends, but it has been reduced by about 50% compared to the raw trends. This suggests the PHA algorithm is only partially removing spurious warming signals from increasing urbanization.

- **Homogenization has caused the all-station average warming trend to nearly double (+89%), from +0.036 to +0.067 deg. C per decade.** I cannot explain this. It might be due to real effects from changes in instrumentation, the time-of-observation (TOBS) adjustment, an unintended artifact of the PHA algorithm, or some combination of all three.
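The core idea behind pairwise homogenization (comparing a station with its neighbors to find abrupt shifts) can be illustrated with a much simpler stand-in. The sketch below is NOT NOAA's PHA, which compares many station pairs and applies its own statistical tests. Instead it subtracts the neighbor average from the target record and searches for the single breakpoint that maximizes a two-sample t-statistic. `find_step_change`, `min_seg`, and the synthetic data are all assumptions for illustration.

```python
import random
from statistics import fmean, variance


def find_step_change(target, neighbors, min_seg=12):
    """Locate the single most likely step change in a station record.

    target: values for the station being tested (one per time step).
    neighbors: list of equally long series from surrounding stations.
    Returns (index where the shifted segment starts, estimated step size).
    """
    n = len(target)
    if min_seg < 2 or n < 2 * min_seg:
        raise ValueError("need min_seg >= 2 and at least 2 * min_seg values")
    # Reference = neighbor average; shared regional weather largely cancels.
    ref = [fmean(vals) for vals in zip(*neighbors)]
    diff = [t - r for t, r in zip(target, ref)]
    best_k, best_t = min_seg, -1.0
    for k in range(min_seg, n - min_seg + 1):
        a, b = diff[:k], diff[k:]
        se = (variance(a) / len(a) + variance(b) / len(b)) ** 0.5
        t = abs(fmean(b) - fmean(a)) / se if se > 0 else float("inf")
        if t > best_t:
            best_k, best_t = k, t
    step = fmean(diff[best_k:]) - fmean(diff[:best_k])
    return best_k, step


# Synthetic test: 5 neighbors share the target's "weather"; the target jumps +0.5 at i = 60.
rng = random.Random(1)
regional = [rng.gauss(0.0, 1.0) for _ in range(120)]
neighbors = [[r + rng.gauss(0.0, 0.1) for r in regional] for _ in range(5)]
target = [r + rng.gauss(0.0, 0.1) + (0.5 if i >= 60 else 0.0)
          for i, r in enumerate(regional)]
k, step = find_step_change(target, neighbors)
print(k, round(step, 2))
```

One relevant property of any neighbor-based approach: if the neighbors themselves carry a gradual urban signal, it is subtracted along with the regional weather, so it is not flagged as a step.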

Does This Mean Recent Warming In The U.S. Is Negligible?

Maybe not. While it does suggest problems with warming trends since 1895, if we examine the most recent period of warming (say, since 1961…a date I chose arbitrarily), we find considerably stronger warming trends.

Note that the GHCN trends since 1961 are nearly the same from raw (+0.192 C/decade) as from homogenized (+0.193 C/decade) data. The average UHI warming trend is only about 13% of the raw GHCN trend, and 10% of the homogenized trend, indicating little of the GHCN warming trend can be attributed to increases in population density.

But there still remains an urbanization signal in both the raw and adjusted data, as indicated by the non-zero regression slopes. One possible interpretation of these results is that, if the homogenization algorithm is distorting the station trends, and if we can use the raw GHCN data as a more accurate representation of reality, then the regression intercept of +0.10 deg. C/decade becomes the best estimate of the all-station average warming trend if NONE of the stations had any growth in population. That is little more than 50% of the homogenized data warming trend of +0.192 deg. C/decade.

What Does It All Mean?

First, there is evidence supporting the “urban blending” hypothesis of Katata et al., whereby the homogenization algorithm inadvertently blends urban station characteristics into rural temperature data. This appears to increase the all-station average temperature trend.

Second, homogenization appears to remove only about 50% of the UHI signal. Even after homogenization, GHCN temperature trends tend to be higher for stations with large population growth and lower for stations with little population growth. There is some evidence that truly rural stations would have only about 50% of the warming averaged across all U.S. stations, which is consistent with Anthony Watts’ estimates based upon restricting analysis to only those best-sited stations.

These results suggest there is now additional reason to distrust the official temperature trends reported for U.S. weather stations. They are, on average, too warm. By how much? That remains to be determined. Our method provides the first way (that I know of) to independently estimate the urban warming effect over time, albeit in an average sense (that is, it is accurate for the average across many stations, but its accuracy at individual stations is unknown). As my career winds down, I hope others in the future will extend this type of analysis.

[To see what the total UHI signal is in various calendar months around the world as of 2023, here are the hi-res images: Jan, Feb, Mar, Apr, May, Jun, Jul, Aug, Sep, Oct, Nov, Dec. More details of our method, along with links to monthly ArcGIS-format files of global UHI grids since 1800 (Version 0) are contained in my blog post from November, 2023.]


NC Floods, CA Drought, and The Role of Randomness

October 22nd, 2024

The recent devastating floods in western North Carolina were not unprecedented, but were certainly rare. A recent master’s thesis examining flood deposits in the banks of the French Broad River over the last 250-300 years found that a flood in 1769 produced water levels approximately as high as those reported in the recent flood from Hurricane Helene. So, yes, the flood was historic.

Like all severe weather events, the NC floods required a superposition of several contributing factors to make the event “severe”. In that case, producing the widespread 12-20 inch rainfall amounts required a strong hurricane combined with steering currents that carried it on a track producing the maximum amount of orographic uplift on the east side of the Smoky Mountains. Those steering currents also had to be strong enough that the hurricane penetrated far inland with little weakening.

Again, all severe weather events represent the somewhat random combining of amplifying components: In the case of Hurricane Helene, they produced the unlucky massive flooding result that the region had not seen in hundreds of years.

The Random Component of Precipitation Variability

The rare superposition of several rare contributing factors, or the more common superposition of more common factors, can be examined through statistics when one examines many events. For example, it has long been known that precipitation statistics gathered over many years exhibit a log-normal frequency distribution. Simply put, the lowest precipitation amounts are the most frequent, and the highest amounts are the least frequent. This is the statistical result of the superposition of contributing factors, such as (in the case of excessive rainfall) abundant humidity, an unstable air mass, low-level convergence of air, a stationary or slow-moving storm (in western NC, the mountains providing uplift are stationary), etc.

Extreme precipitation events are (of course) the rarest, and as such, they can exhibit somewhat weird behavior. This is why hydrologists disagree over the usefulness of the term “100-year flood”, since most weather records don’t even extend beyond 100 years. One would probably need 1,000 years of rainfall records to get a good estimate of what constitutes a 100-year event.

Simulating Extreme Rainfall Events through Statistics

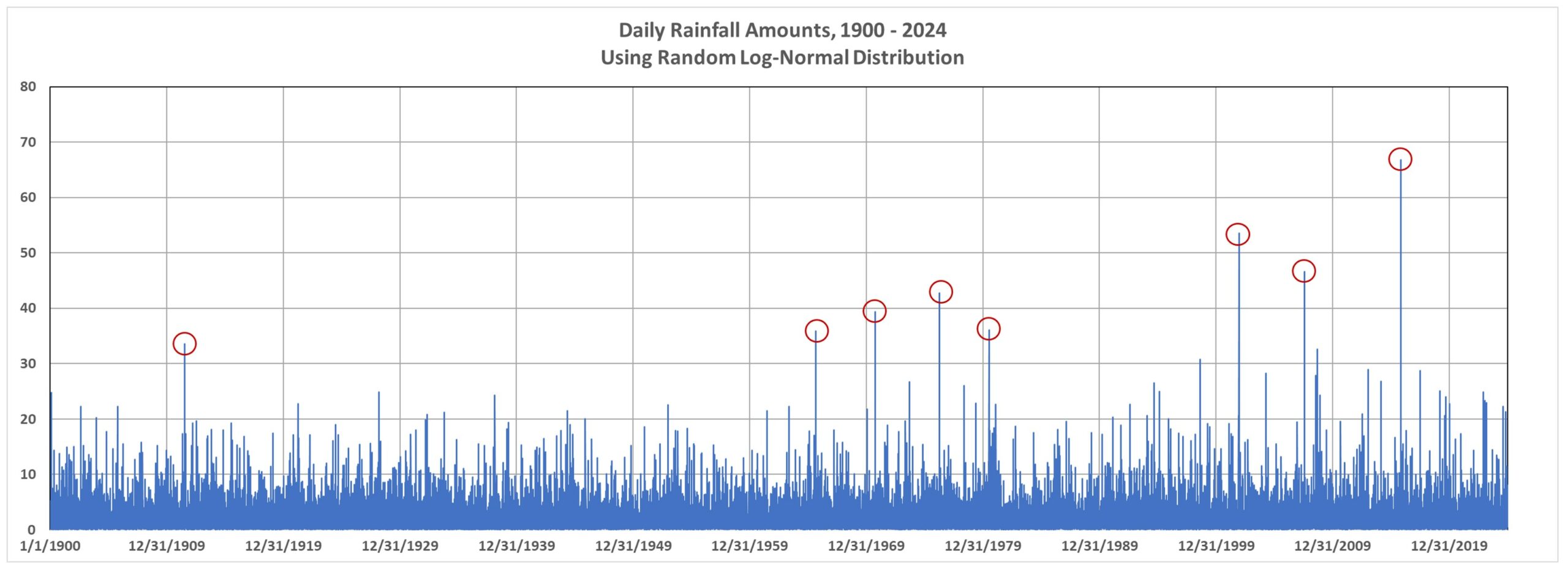

It is easy in Excel to make a simulated time series of rainfall totals having a log-normal distribution. For example, the following plot of hypothetical daily totals for the period 1900 through 2024 shows a seemingly increasing incidence of days with the heaviest rainfall (red circles). Could this be climate change?

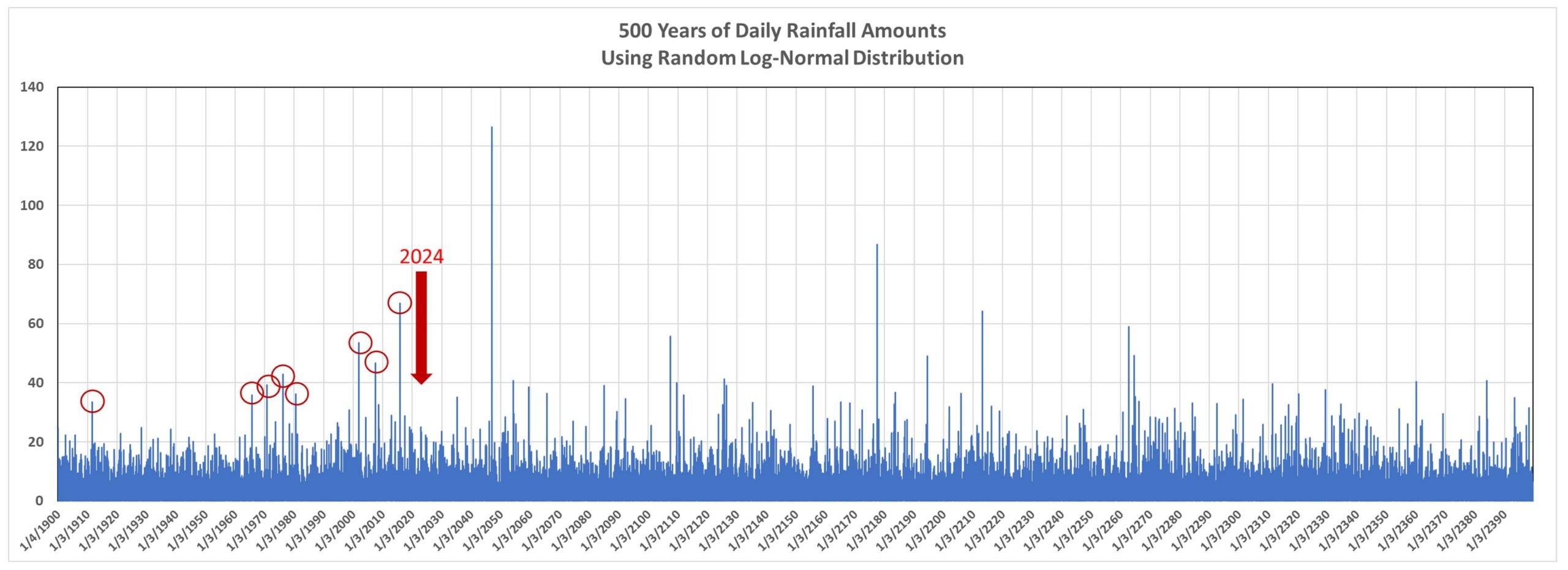

But remember, these are randomly generated numbers. Flipping a coin and sometimes getting 4 heads (or 4 tails) in a row doesn’t mean there is some underlying cause for getting the same result several times in a row. If we extend the above plot from 125 years to 500 years, we see (following plot) that there is no long-term increasing trend in heavy rainfall amounts:
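The Excel experiment is easy to reproduce in Python. This minimal sketch assumes every day is an independent log-normal draw with arbitrary parameters (real daily rainfall has many dry days, which it ignores); `heaviest_day_years` is a hypothetical helper. Since nothing changes over time by construction, any clustering of the heaviest days in particular decades is chance.

```python
import random


def heaviest_day_years(first_year, last_year, n_top=10, sigma=1.0, seed=0):
    """Simulate log-normal daily rainfall and return the years of the heaviest days.

    Every day is an independent draw from the same distribution, so there is
    no trend by construction; any apparent clustering is pure chance.
    """
    rng = random.Random(seed)
    days = []  # (rain amount, year) pairs
    for year in range(first_year, last_year + 1):
        for _ in range(365):  # leap days ignored for simplicity
            days.append((rng.lognormvariate(0.0, sigma), year))
    days.sort(reverse=True)  # heaviest first
    return sorted(year for _, year in days[:n_top])


recent = heaviest_day_years(1900, 2024)    # 125 years
long_run = heaviest_day_years(1525, 2024)  # 500 years
print(recent)
print(long_run)
```

Rerunning with different `seed` values shows the heaviest days landing in different decades each time.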

Black Swan Events

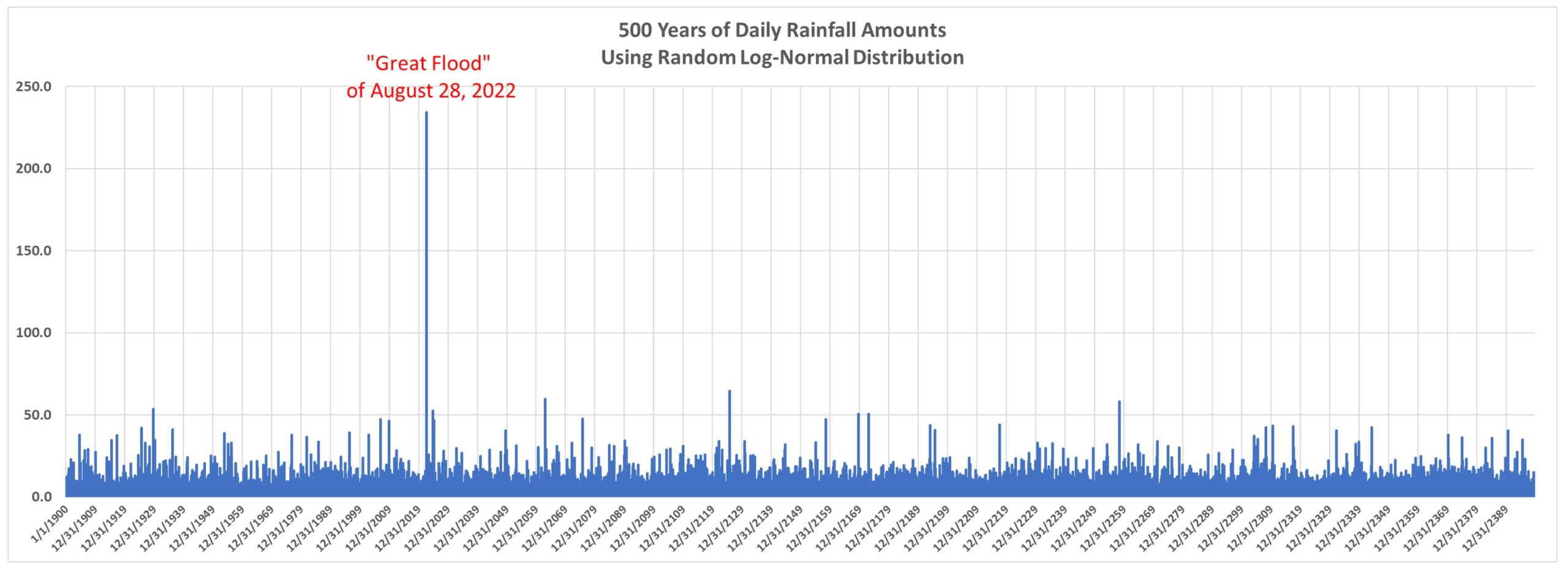

Or, how about this one, which I will call “The Great Flood of August 28, 2022”?:

Note that this event (generated with just log-normally distributed random numbers) far exceeds any other daily event in that 500-year plot.

The point here is that too often we tend to attribute severe weather events to some underlying cause that is emerging over time, such as global warming. And, I believe, some of the changes we have seen in nature are due to the (weak and largely benign) warming trend most regions of the world have experienced in the last 100 years.

But these events can occur without any underlying long-term change in the climate system. To attribute every change we see to global warming is just silly, especially when it comes to precipitation-related events, such as floods… or even drought.

A “Random Drought”

Now changing our daily random log-normal precipitation generator to a monthly time scale, we can look at how precipitation amounts change from decade to decade. Why monthly? Well, weather variations (and even climate cycles) tend to have preferred time scales. Several days for synoptic weather patterns, quasi-monthly for some kinds of persistent weather patterns, and even yearly or decadal for some natural internal climate cycles.

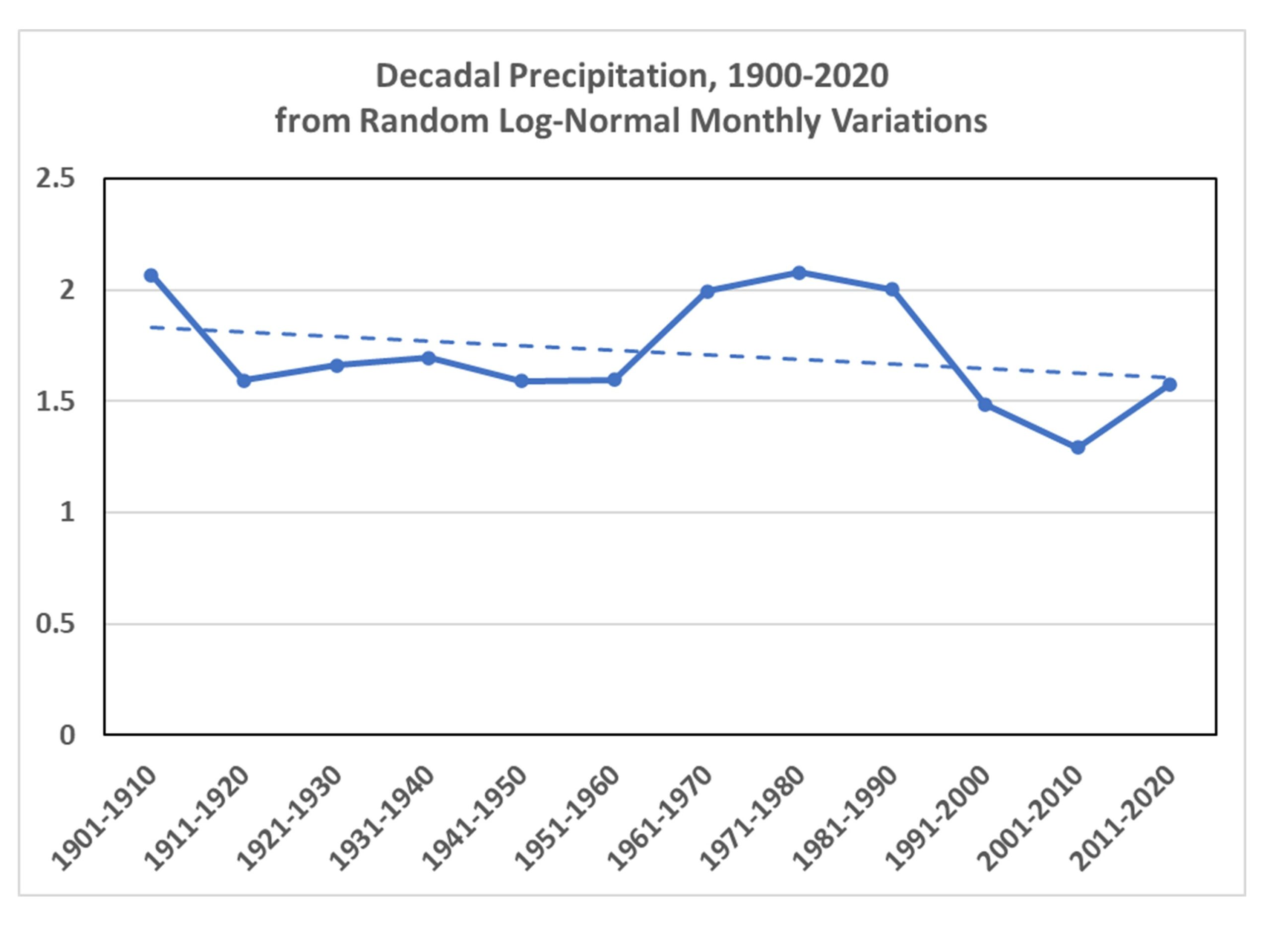

When I generate random log-normal time series at monthly time scales and compute decadal averages over the last 120 years, the long-term trend is seldom close to zero. Here’s one that shows low precipitation for the most recent three decades, purely through chance:

That looks like something we could attribute to drought in California, right? Yet, it’s just the result of random numbers.

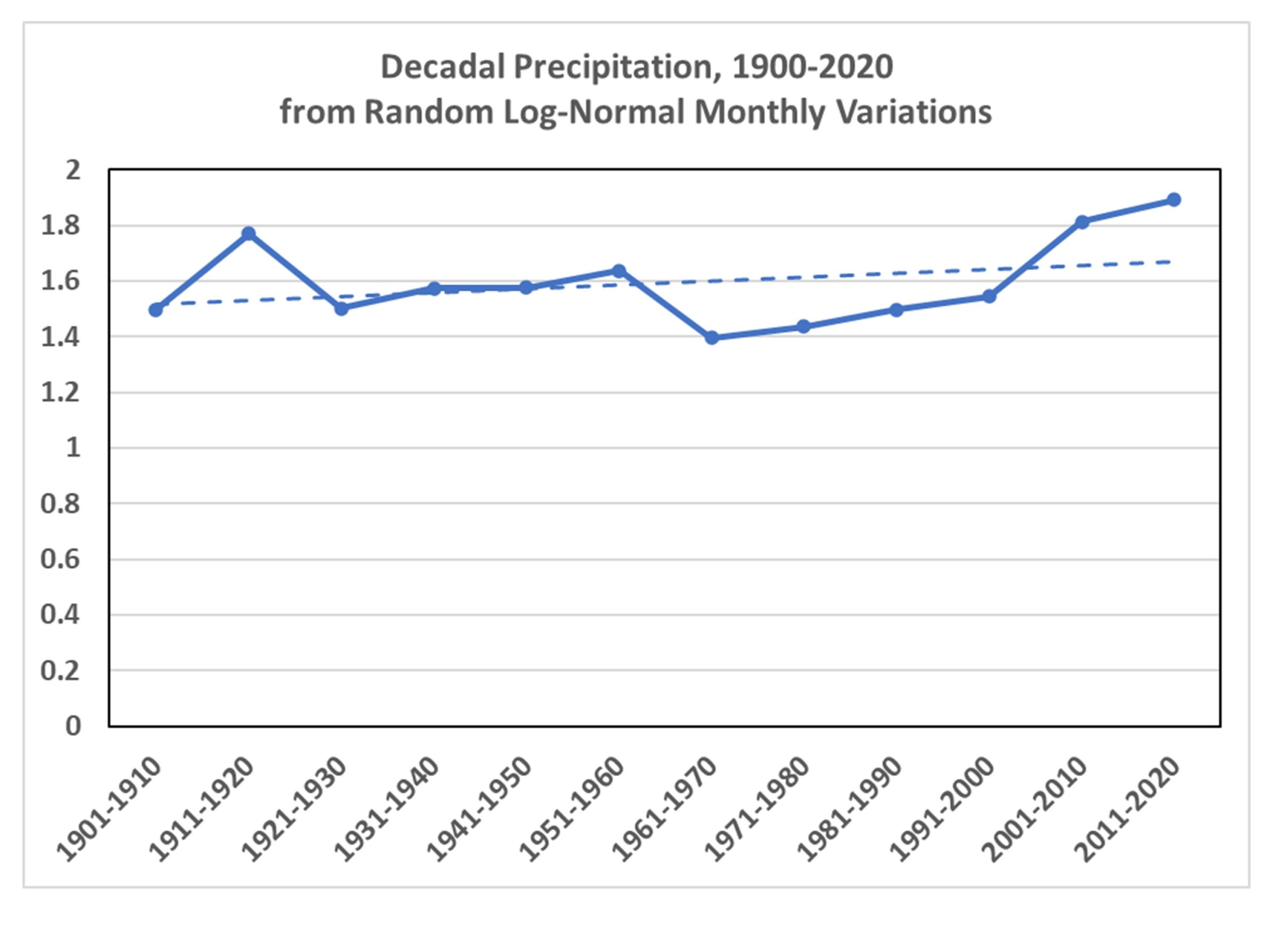

Or, we can choose one of the random simulations that has an increasing trend:

I’m sure someone could tie that to global warming.

A Final Word About 100-Year Flood Events

There seems to be some misunderstanding about 100-year events. These almost always apply to a specific location. So, you could have 100-year events every year in the U.S., and as long as they are in different locations, there is nothing unusual about it. A 100-year flood in western North Carolina this year could be followed by a 100-year flood in eastern North Carolina next year. That doesn’t mean 100-year floods are getting more frequent.
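The arithmetic behind this is simple if one assumes (unrealistically for neighboring sites) that locations and years are independent, each with a 1% annual chance of a 100-year event. `prob_at_least_one` is a hypothetical helper:

```python
def prob_at_least_one(annual_prob=0.01, n_sites=1, n_years=1):
    """Chance of at least one exceedance, assuming independent sites and years."""
    return 1.0 - (1.0 - annual_prob) ** (n_sites * n_years)


print(f"{prob_at_least_one():.3f}")             # 0.010 (one site, one year)
print(f"{prob_at_least_one(n_sites=100):.3f}")  # 0.634 (100 separate sites, one year)
```

Under that assumption, 100 separate locations would see at least one 100-year event in a given year about 63% of the time. The same figure applies to a single location over a 100-year span.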

I’m not claiming that all severe weather is due to randomness. Only that there is a huge random component to it, and that’s what makes attribution of any kind of severe weather event to climate change essentially impossible.


Florida Major Hurricanes, 1900-2024: What Do the Statistics Show?

October 7th, 2024

Florida residents must feel like they have been taking a beating from major hurricanes in recent years, but what do the data show?

The problem with human perception of such things is that the time scale of hurricane activity fluctuations is often longer than human experience. For example, a person born in the 1950s would have no memory of the beating Florida took in the 1940s from major hurricanes (a total of 5). But they would have many memories of the hurricane lull period of the 1970s and 1980s, each decade having only one major hurricane strike in Florida. Then, when an upswing in hurricane strikes occurs, it seems very unusual to them, and they assume that “hurricanes are getting worse”.

Another problem is that any statistics for an area as small as Florida, even over 100+ years, will be pretty noisy. Landfalling hurricanes for the eastern U.S. would be a better metric. And statistics for the entire Atlantic basin would be even better, except that satellite coverage didn’t start until the 1970s and hurricane intensity in remote areas before then would be poorly measured (or not measured at all).

Finally, tropical cyclone statistics for the entire tropics would be the best (if one were trying to determine whether climate change is affecting cyclone intensity or frequency). But satellite data for the global tropics is, again, limited to the period since the 1970s. Global tropical cyclone data before the 1970s is sketchy, at best.

So, keeping in mind that any trends we see for Florida are going to be strongly influenced by the “luck of the draw” and the quasi-random nature of hurricane tracks (hurricanes are steered by the large-scale flow of air in the mid-troposphere, at roughly 20,000 ft altitude), what do the statistics show for Florida major hurricane intensity and frequency since 1900?

Florida Major Hurricane Intensity & Number

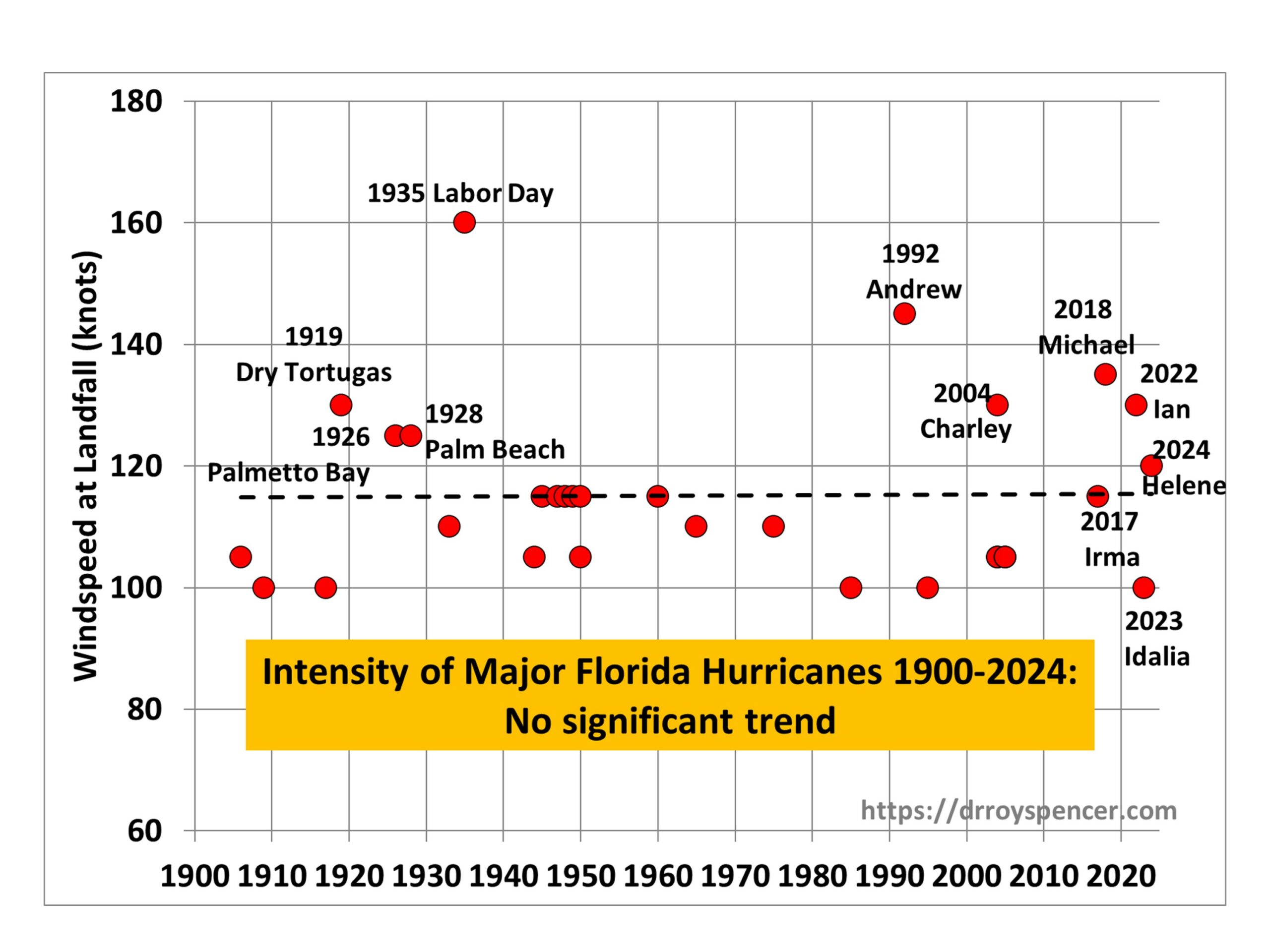

The following plot shows the intensity of major hurricanes (100 knots or greater maximum sustained wind speed) striking Florida since 1900, updated through recent (2024) Hurricane Helene:

As can be seen from the linear trend line, there has been no significant trend in the intensity of major hurricanes striking Florida since 1900.
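The post does not say how the trend line was computed; the minimal sketch below assumes an ordinary least-squares fit to (landfall year, maximum sustained wind in knots) pairs. The function name `linear_trend` is hypothetical. It also returns a t-ratio (slope divided by its standard error) as one simple way to judge significance, which assumes roughly independent, normally distributed residuals and ignores autocorrelation.

```python
import math


def linear_trend(xs, ys):
    """Ordinary least-squares fit y = intercept + slope * x.

    Returns (slope, intercept, t_ratio), where t_ratio is the slope divided
    by its standard error.
    """
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two equal-length sequences with at least 3 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares gives the standard error of the slope (n - 2 degrees of freedom).
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    se_slope = math.sqrt(sse / (n - 2) / sxx)
    t_ratio = slope / se_slope if se_slope > 0 else math.copysign(math.inf, slope)
    return slope, intercept, t_ratio
```

Called as `linear_trend(years, winds_kt)`, the slope comes out in knots per year. A |t_ratio| well below about 2 is a common rule of thumb for a trend that is not statistically significant at roughly the 5% level.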

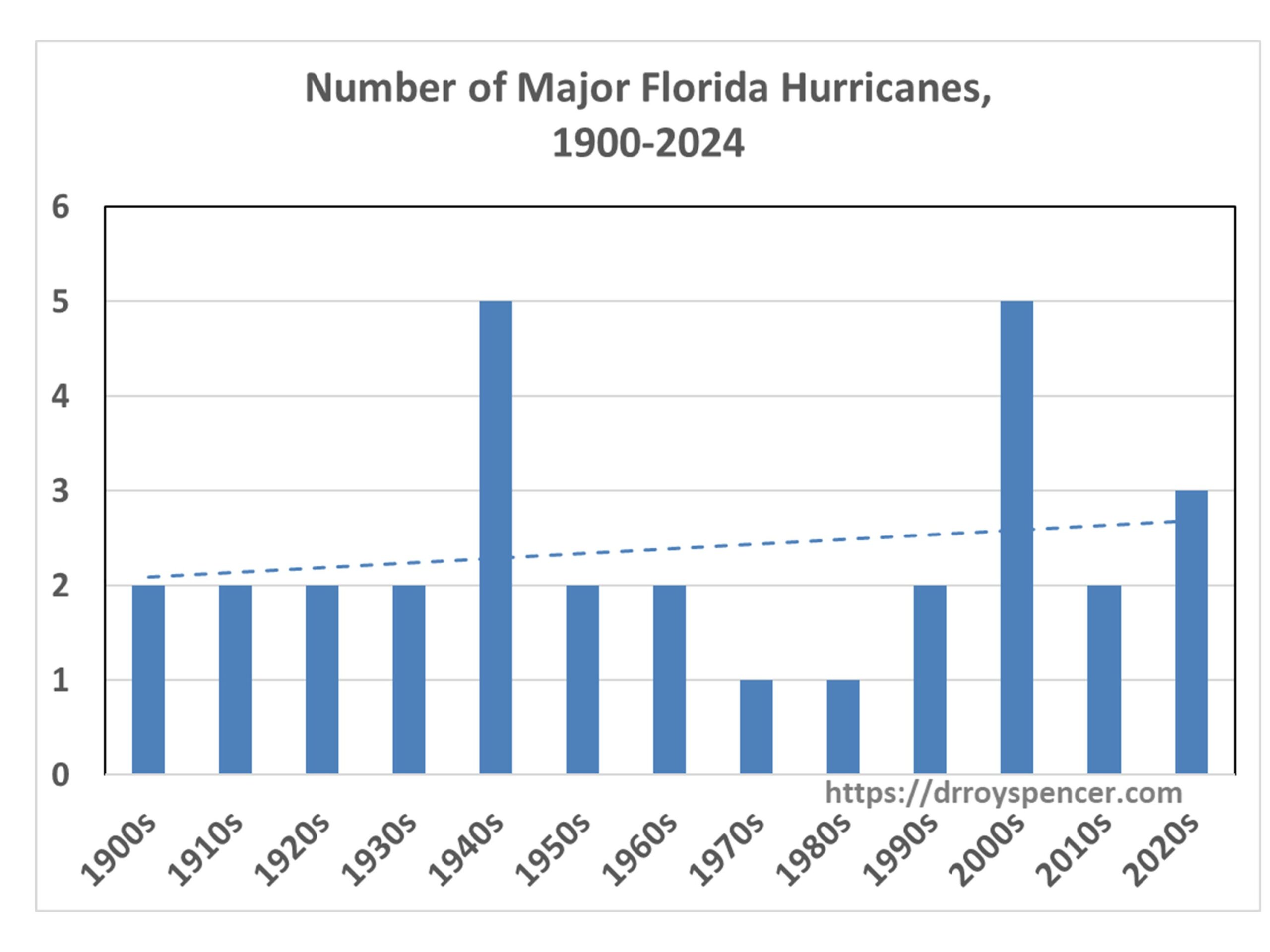

But what about the number of hurricanes? The next plot shows there has been a weak upward trend in the decadal totals of major hurricanes striking Florida since 1900:

Note that the 2020s total might well increase, since the end of the current (2024) hurricane season will be only half-way through the 2020s. Hurricane Milton has just been classified as a major hurricane, but in two days’ time it is expected to be under increasing wind shear, so it is not obvious it will strike Florida as a major hurricane. I therefore did not include it in the above charts.
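Here is a minimal sketch of how decadal totals like those in the second chart could be tallied, while flagging how many seasons each decade has had so far. The input is assumed to be a list of landfall years, one entry per major hurricane (for example, derived from NOAA's HURDAT2 best-track data, although the post does not say which dataset was used). The function name and its parameters are hypothetical.

```python
from collections import Counter


def decadal_totals(landfall_years, first_decade=1900, last_season=2024):
    """Tally landfalls by decade and note how many seasons each decade has had so far.

    landfall_years holds one entry per hurricane, so a season with two
    major-hurricane landfalls contributes that year twice.
    Years outside first_decade..last_season are ignored.
    """
    counts = Counter((year // 10) * 10 for year in landfall_years
                     if first_decade <= year <= last_season)
    last_decade = (last_season // 10) * 10
    table = {}
    for decade in range(first_decade, last_decade + 1, 10):
        # Full decades cover 10 seasons; the current decade covers fewer.
        seasons_covered = min(10, last_season - decade + 1)
        table[decade] = (counts[decade], seasons_covered)
    return table
```

With `last_season=2024`, the 2020s entry covers only 5 seasons, which is why its total cannot yet be compared directly with the full decades before it.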

The second chart above also shows that a native Floridian born in the 1960s or 1970s would indeed have experienced an increase in major hurricanes striking Florida during their lifetime. But their first couple of decades of personal experience would have occurred during a historic lull in hurricane activity.

Why Start In 1900?

There is reason to believe that the number and/or intensity of major hurricanes striking Florida in the early 1900s has been underestimated, which would bias the trends in the plots above upward, spuriously suggesting a long-term increase in activity. First of all, very few people lived along most of the Florida coastline in 1900. The population of Miami in 1896 was 444 persons. The intensity of a hurricane is based upon its maximum sustained 1-minute wind speed, which usually covers a very small area. Even with people now inhabiting much of the Florida coastline, it is rare for a coastal anemometer to measure the intensity that the National Hurricane Center assigns to a hurricane, because those winds cover such a small area. So, how could the intensity of some early-1900s hurricanes ever be known?

Evidence for Long-Term Hurricane Fluctuations Unrelated to Water Temperature

Modern concern centers on the possibility that warm sea surface temperatures from global warming caused by anthropogenic CO2 emissions are making hurricanes stronger or more frequent. But studies of sediments deposited in coastal lagoons along the Gulf coast and in the Caribbean by catastrophic hurricane landfalls show large fluctuations in activity on centennial to millennial time scales, even in the absence of the unusually warm sea surface temperatures measured today. (Example here.)

It should also be remembered that not long ago the U.S. experienced an “unprecedented” 11-year drought in major hurricane strikes. That significantly impacts our perception of what is “normal”. When the lull had reached 9 years, a NASA study found such an event was a 1-in-177-year occurrence. As I recall, that was increased to 1-in-250 years when the lull reached 11 years.

The point is that there is a huge amount of natural decadal- to centennial-scale variability in hurricane activity in Florida (or any other hurricane-prone state). But with increasing numbers of people believing that the government is somehow influencing hurricane activity (I’m seeing a lot of this on Twitter), I doubt that actual data will have much influence on them. As I approach 70 years on this Earth, I have noticed a long-term decline in critical thinking regarding weather, climate, and causation. I doubt that trend will change any time soon.

Posted in Blog Article | 892 Comments »