Pandas dataframe.drop_duplicates() (original) (raw)

Last Updated : 25 Nov, 2024

Pandas **drop_duplicates() method helps in removing duplicates from the Pandas Dataframe allows to remove duplicate rows from a DataFrame, either based on all columns or specific ones in python.

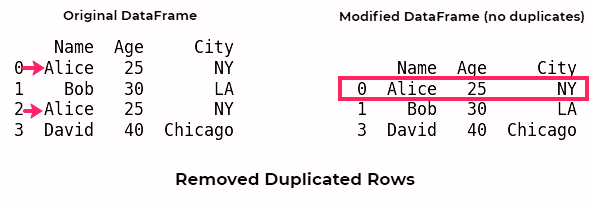

By default, drop_duplicates() scans the entire DataFrame for duplicate rows and removes all subsequent occurrences, retaining only the first instance being the simple and efficient method. Let’s see a quick example:

Python `

import pandas as pd data = { "Name": ["Alice", "Bob", "Alice", "David"], "Age": [25, 30, 25, 40], "City": ["NY", "LA", "NY", "Chicago"] } df = pd.DataFrame(data) display(df)

Removing duplicates

unique_df = df.drop_duplicates() display(unique_df)

`

**Output:

Pandas dataframe.drop_duplicates()

This example demonstrates how duplicate rows are removed while retaining the first occurrence using pandas.DataFrame.drop_duplicates() since it’s commonly used and recommended.

**dataframe.drop_duplicates() Syntax in Python :

**Syntax: DataFrame.drop_duplicates(subset=None, keep=’first’, inplace=False)

**Parameters:

- **subset: Subset takes a column or list of column label. It’s default value is none. After passing columns, it will consider them only for duplicates. ( Optional)

- **keep: keep is to control how to consider duplicate value. It has only three distinct value and default is ‘first’.

- If ‘**first‘, it considers first value as unique and rest of the same values as duplicate.

- If ‘**last‘, it considers last value as unique and rest of the same values as duplicate.

- If **False, it consider all of the same values as duplicates

- **inplace: Boolean values, removes rows with duplicates if True.

**Return type: DataFrame with removed duplicate rows depending on Arguments passed.

Python dataframe.drop_duplicates() : Examples

Duplicate rows can arise due to merging datasets, incorrect data entry, or other reasons. The drop_duplicates() **works by identifying duplicates based on all columns (default) or specified columns and removing them as per your requirements. Below, we are discussing examples of dataframe.drop_duplicates() method:

**1. Dropping Duplicates Based on Specific Columns

You can target duplicates in specific columns using the subset parameter. This helps when certain fields are more relevant for identifying duplicates.

Python `

import pandas as pd df = pd.DataFrame({ 'Name': ['Alice', 'Bob', 'Alice', 'David'], 'Age': [25, 30, 25, 40], 'City': ['NY', 'LA', 'SF', 'Chicago'] })

Drop duplicates based on the 'Name' column

result = df.drop_duplicates(subset=['Name']) print(result)

`

Output

Name Age City0 Alice 25 NY 1 Bob 30 LA 3 David 40 Chicago

Here, duplicates are removed based **solely on the Name column, ignoring the other fields. This is helpful when specific columns uniquely identify rows.

**2. Keeping the Last Occurrence

By default, drop_duplicates() retains the first occurrence of duplicates. However, you can retain the last duplicate instead using keep='last'.

Python `

import pandas as pd

df = pd.DataFrame({ 'Name': ['Alice', 'Bob', 'Alice', 'David'], 'Age': [25, 30, 25, 40], 'City': ['NY', 'LA', 'NY', 'Chicago'] })

Keep the last occurrence of duplicates

result = df.drop_duplicates(keep='last') print(result)

`

Output

Name Age City1 Bob 30 LA 2 Alice 25 NY 3 David 40 Chicago

The keep='last' parameter ensures the last occurrence of each duplicate is retained instead of the first.

**3. Dropping All Duplicates

To remove all rows with duplicates, use keep=False. This keeps only rows that are entirely unique.

Python `

import pandas as pd

df = pd.DataFrame({ 'Name': ['Alice', 'Bob', 'Alice', 'David'], 'Age': [25, 30, 25, 40], 'City': ['NY', 'LA', 'NY', 'Chicago'] })

Drop all duplicates

result = df.drop_duplicates(keep=False) print(result)

`

Output

Name Age City1 Bob 30 LA 3 David 40 Chicago

With keep=False, all occurrences of duplicate rows are removed, leaving only rows that are entirely unique across all columns.

4. Modifying the Original DataFrame Directly

To modify the original DataFrame directly without creating a new one, use inplace=True.

Python `

import pandas as pd

df = pd.DataFrame({ 'Name': ['Alice', 'Bob', 'Alice', 'David'], 'Age': [25, 30, 25, 40], 'City': ['NY', 'LA', 'NY', 'Chicago'] })

Modify the DataFrame in place

df.drop_duplicates(inplace=True) print(df)

`

Output

Name Age City0 Alice 25 NY 1 Bob 30 LA 3 David 40 Chicago

Using inplace=True modifies the original DataFrame directly, saving memory and avoiding the need to assign the result to a new variable.