Creating an OData API for StackOverflow including XML and JSON in 30 minutes

I emailed Jeff Atwood a one-line email last night: "You should make a StackOverflow API using OData." Then I realized that, as Linus says, talk is cheap, show me the code. So I created an initial prototype of a StackOverflow API using OData on an airplane. I allocated the whole 12-hour flight. Unfortunately it took 30 minutes, so I watched movies the rest of the time.

You can follow along and do this yourself if you like.

Preparation

Before I left for my flight, I downloaded two things.

First, I got Sam Saffron's "So Slow" StackOverflow SQL Server Importer. This is a little spike of Sam's that takes the 3 gigs of XML dump files from StackOverflow's monthly dump and imports them into SQL Server.

Second, I got the StackOverflow Monthly Dump. I downloaded it with uTorrent and unzipped it in preparation for the flight.

Importing into SQL Server

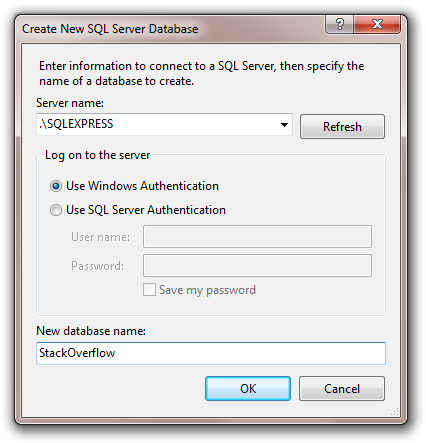

I went into Visual Studio 2010 (although I could have used 2008, I like the Entity Framework improvements in 2010 enough that it made this job easier). I right clicked on the Data Connections node in the Server Explorer and created a database in SQL Express called, ahem, "StackOverflow."

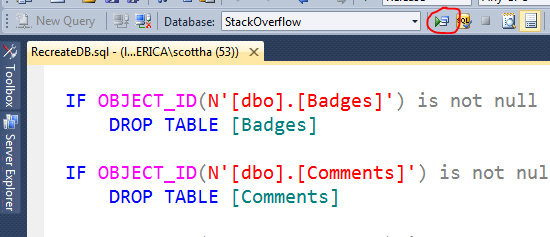

Next, I opened up Sam's RecreateDB.sql file from his project in Visual Studio (I avoid using SQL Server Management Studio when I can) and connected to the ".\SQLEXPRESS" instance, selected the new StackOverflow database and hit "execute."

One nit about Sam's SQL file: it creates tables that line up nicely with the dump, but it includes no referential integrity. The tables don't know about each other and there's no cardinality set up. I've overwritten the brain cells in my head that know how to do that stuff without Google Bing so I figured I'd deal with it later. You will too.

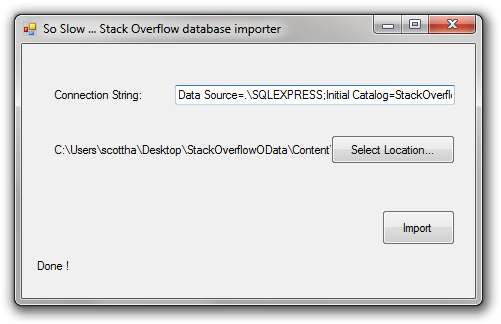

Next, I opened Sam's SoSlow application and ran it. Lovely little app that works as advertised with a gloriously intuitive user interface. I probably would have named the "Import" button something like "Release the Hounds!" but that's just me.

At this point I have a lovely database of a few hundred megs filled with StackOverflow's public data.

Making a Web Project and an Entity Model

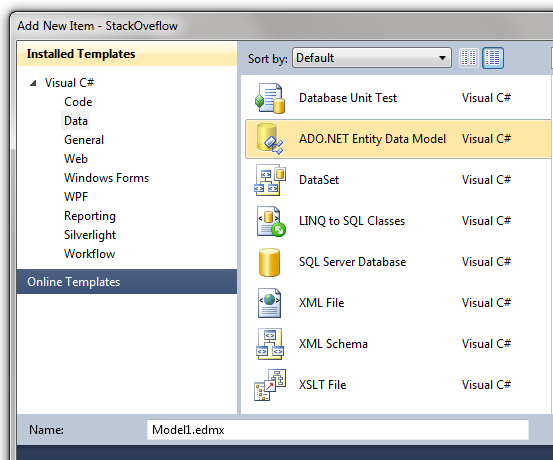

Now, from within Visual Studio I selected File | New Project | ASP.NET Web Application. Then I right clicked on the resulting project and selected Add | New Item, then clicked Data, then ADO.NET Entity Data Model.

What's the deal with that, Hanselman? You know StackOverflow uses LINQ to SQL? Have you finally sold out and are trying to force Entity Framework on us sneakily within this cleverly disguised blog post?

No. I used EF for a few reasons. One, it's fast enough (both at runtime and at design time) in Visual Studio 2010 that I don't notice the difference anymore. Two, I knew that the lack of formal referential integrity was going to be a problem (remember I mentioned that earlier?) and since LINQ to SQL is 1:1 physical/logical and EF offers flexible mapping, I figured it'd be easier with EF. Three, "WCF Data Services" (the data services formerly known as ADO.NET Data Services or "Astoria") maps nicely to EF.

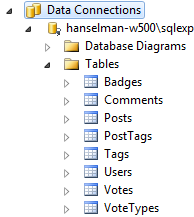

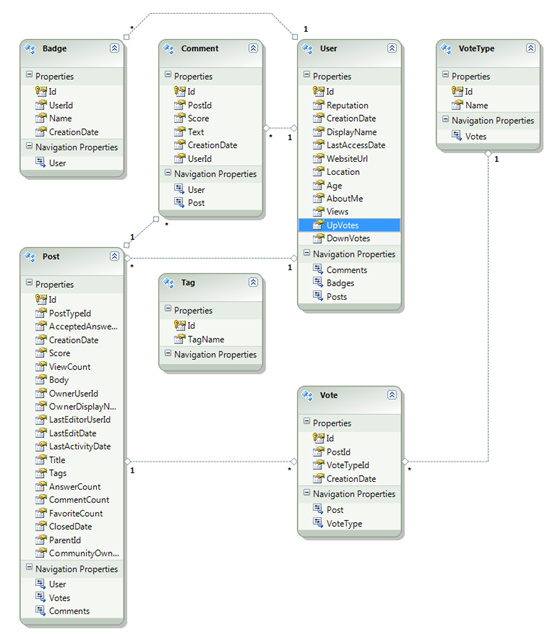

I named it StackOverflowEntities.edmx and selected "Update Model from Database" and selected all the tables just to get started. When the designer opened, I noticed there were no reference lines, just tables in islands by themselves.

So I was right about there being no relationships between the tables in SQL Server. If I was a smarter person, I'd have hooked up the SQL to include these relationships, but I figured I could add them here as well as a few other things that would make our OData Service more pleasant to use.

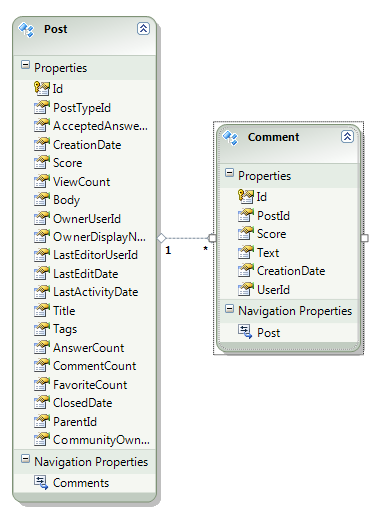

I started by looking at Posts and thinking that if I was looking at a Post in this API, I'd want to see Comments. So, I right-clicked on a Post and clicked Add | Association. The dialog took me a second to understand (I'd never seen it before) but then I realized that it was creating an English sentence at the bottom, so I just focused on getting that sentence correct.

In this case, "Post can have * (Many) instances of Comment. Use Post.Comments to access the Comment instances. Comment can have 1 (One) instance of Post. Use Comment.Post to access the Post instance." was exactly what I wanted. I also already had the foreign key properties, so I unchecked that option and clicked OK.


That got me here in the Designer. Note the line with the 1...* and the Comments Navigation Property on Post and the Post Navigation Property on Comment. That all came from that dialog.

Next, I figured since I didn't have it auto-generate the foreign key properties, I'd need to map them myself. I double clicked on the Association Line. I selected Post as the Principal and mapped its Id to the PostId property in Comments.


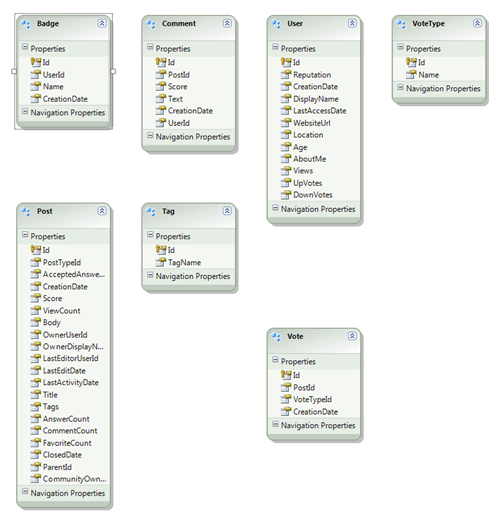

Having figured this out, I just did the same thing a bunch more times for the obvious stuff, as seen in this diagram where Users have Badges, and Posts have Votes, etc.

Now, let's make a service.

Creating an OData Service

Right-click on the Project in Solution Explorer and select Add | New Item | Web | WCF Data Service. I named mine Service.svc. All you technically need to do to have a full, working OData service is put your entity context class between the angle brackets of DataService<T> (here, DataService<StackOverflowEntities>) and include one line calling config.SetEntitySetAccessRule. Here's my initial minimal class. I added the SetEntitySetPageSize call after I tried to get all the posts. ;)

    public class Service : DataService<StackOverflowEntities>
    {
        // This method is called only once to initialize service-wide policies.
        public static void InitializeService(DataServiceConfiguration config)
        {
            // Expose every entity set as read-only.
            config.SetEntitySetAccessRule("*", EntitySetRights.AllRead);
            // Set a reasonable page size
            config.SetEntitySetPageSize("*", 25);
            config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
        }
    }

Expanding on this class, I added caching and an example Service Operation, as well as WCF Data Services support for JSONP. Note that the Service Operation is just an example there to show StackOverflow that they CAN have total control. Using OData doesn't mean checking a box and putting your database on the web. It means exposing specific entities with as much or as little granularity as you like. You can intercept queries, make custom behaviors (like the JSONP one), make custom Service Operations (they can include query strings, of course), and much more. OData supports JSON natively and will return JSON when an Accept header is set, but I added the JSONP support to allow cross-domain use of the service as well as allow the format parameter in the URL, which is preferred by many as it's just easier.

    using System.Data.Services;
    using System.Data.Services.Common;
    using System.Linq;
    using System.ServiceModel.Web;
    using System.Web;

    namespace StackOveflow
    {
        // JSONPSupportBehavior comes from the separate JSONP sample for WCF Data Services.
        [JSONPSupportBehavior]
        public class Service : DataService<StackOverflowEntities>
        {
            // This method is called only once to initialize service-wide policies.
            public static void InitializeService(DataServiceConfiguration config)
            {
                config.SetEntitySetAccessRule("*", EntitySetRights.AllRead);
                //This could be "*" and could also be ReadSingle, etc, etc.
                config.SetServiceOperationAccessRule("GetPopularPosts", ServiceOperationRights.AllRead);
                // Set a reasonable page size
                config.SetEntitySetPageSize("*", 25);
                config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
            }

            protected override void OnStartProcessingRequest(ProcessRequestArgs args)
            {
                base.OnStartProcessingRequest(args);

                //Cache for a minute based on querystring
                HttpContext context = HttpContext.Current;
                HttpCachePolicy c = context.Response.Cache;
                c.SetCacheability(HttpCacheability.ServerAndPrivate);
                c.SetExpires(context.Timestamp.AddSeconds(60));
                // Keep separate cache entries per representation and per query string.
                c.VaryByHeaders["Accept"] = true;
                c.VaryByHeaders["Accept-Charset"] = true;
                c.VaryByHeaders["Accept-Encoding"] = true;
                c.VaryByParams["*"] = true;
            }

            [WebGet]
            public IQueryable<Post> GetPopularPosts()
            {
                // Highest view counts first; the default ascending order
                // would return the least-viewed posts instead.
                var popularPosts = (from p in this.CurrentDataSource.Posts
                                    orderby p.ViewCount descending
                                    select p).Take(20);
                return popularPosts;
            }
        }
    }
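The post mentions that you can intercept queries. A minimal sketch of that idea follows, runnable on its own without the service. It assumes a stand-in `Post` class with a `Score` property (the real entity is generated from the .edmx) and an arbitrary rule that hides negatively scored posts. In the real service the method would sit on the `Service` class and carry WCF Data Services' `[QueryInterceptor("Posts")]` attribute; here `Where()` only imitates the composition the framework would do.

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;

// Stand-in for the generated entity; the property names are assumptions.
class Post
{
    public int Id { get; set; }
    public int Score { get; set; }
}

class InterceptorSketch
{
    // On the real Service class this would be decorated with
    // [QueryInterceptor("Posts")] from System.Data.Services.
    public static Expression<Func<Post, bool>> OnQueryPosts()
    {
        // Hypothetical policy: never expose posts with a negative score.
        return p => p.Score >= 0;
    }

    static void Main()
    {
        IQueryable<Post> posts = new[]
        {
            new Post { Id = 1, Score = 12 },
            new Post { Id = 2, Score = -3 },
        }.AsQueryable();

        // The framework would AND this predicate into every query on Posts;
        // applying it with Where() shows the same effect locally.
        foreach (Post p in posts.Where(OnQueryPosts()))
        {
            Console.WriteLine(p.Id);
        }
    }
}
```

Because the interceptor returns an expression rather than a filtered list, the restriction is composed into the query before it runs, so clients cannot bypass it with their own `$filter`.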

But what does this get us? So what?

Accessing StackOverflow's Data via OData

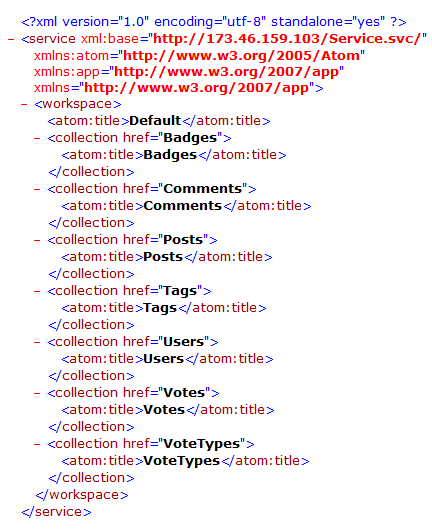

Well, if I hit http://mysite/service.svc I see this service. Note the relative HREFs.

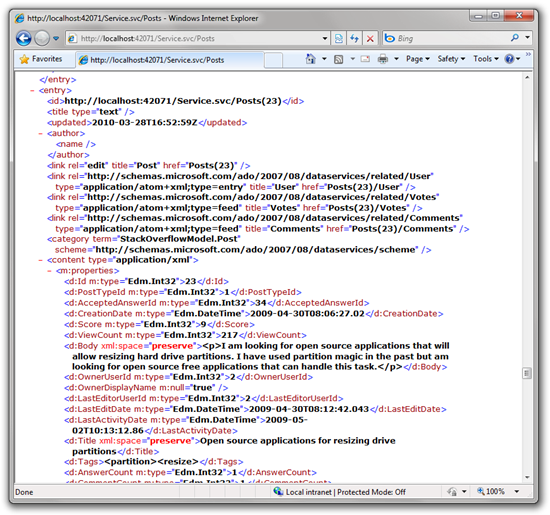

If I hit http://173.46.159.103/service.svc/Posts I get the posts (paged, as I mentioned). Look real close in there. Notice the link elements before the content? Notice the relative href="Posts(23)"?

Remember all those associations I set up before? Now I can see:

- Get a post: http://173.46.159.103/service.svc/Posts(23)/

- Votes on a post: http://173.46.159.103/service.svc/Posts(23)/Votes

- Get a post and get all the comments at the same time: http://173.46.159.103/service.svc/Posts(23)?$expand=Comments

- I can even navigate to a user's details off a comment off a post: http://173.46.159.103/service.svc/Posts(23)/Comments(55049)/User
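The addresses in the list above follow a regular pattern, so they can be composed from small pieces. Here is a rough sketch that only builds the strings and sends nothing; the `Entity` helper is a hypothetical name made up for illustration, and the base address is the post's test server, which may no longer be online.

```csharp
using System;

class NavigationUrls
{
    // Base address taken from the post.
    const string ServiceRoot = "http://173.46.159.103/service.svc";

    // Hypothetical helper: addresses one entity by key, e.g. Posts(23).
    public static string Entity(string entitySet, int key)
    {
        return entitySet + "(" + key + ")";
    }

    static void Main()
    {
        string post = ServiceRoot + "/" + Entity("Posts", 23);

        // A single post.
        Console.WriteLine(post);
        // Follow a navigation property created by an association.
        Console.WriteLine(post + "/Votes");
        // Return the post with its comments inlined.
        Console.WriteLine(post + "?$expand=Comments");
        // Chain navigations: post, then one comment, then its user.
        Console.WriteLine(post + "/" + Entity("Comments", 55049) + "/User");
    }
}
```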

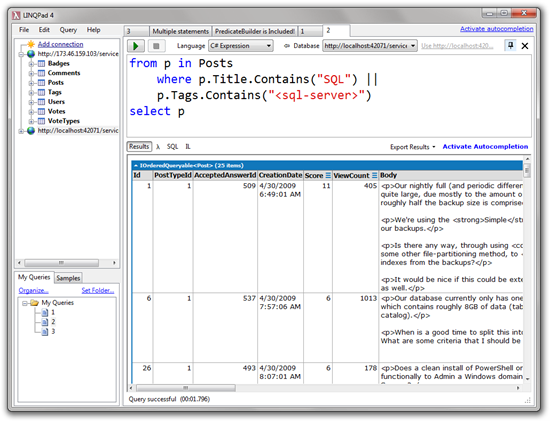

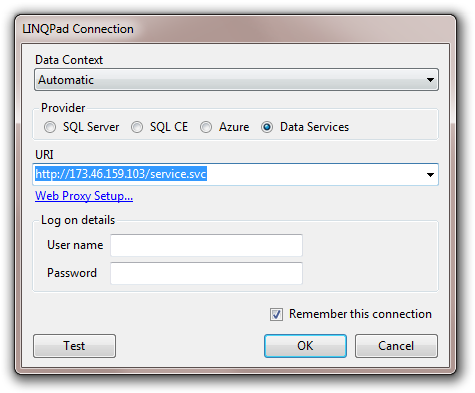

But that's just navigation. I can also do queries. Go download LINQPad Beta for .NET 4. Peep this. Click on Add Connection, and put in my little Orcsweb test server.

Disclaimer: This is a test server that Orcsweb may yank at any moment. Note also, that you can sign up for your own at http://www.vs2010host.com or find a host at ASP.NET or host your own OData in the cloud.

I put this in and hit OK.

Now I'm writing LINQ queries against StackOverflow over the web. No Twitter-style API, JSON or otherwise, can do this. StackOverflow data was meant for OData. The more I mess around with this, the more I realize it's true.

This LINQ query actually turns into this URL. Again, you don't need .NET for this, it's just HTTP:

Try the same thing with an Accept header of application/json, or just add $format=json to the URL.

It'll automatically return the same data as JSON or Atom, as you like.
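The two ways of asking for JSON can be sketched without touching the network. The class and method names below are made up for illustration. `WebRequest` is used to match the .NET 4 era of this post (newer code would more likely use `HttpClient`), and the `$format=json` form relies on the JSONP behavior added to this particular service.

```csharp
using System;
using System.Net;

class JsonRequestSketch
{
    // Address from the post; the test server may no longer be online.
    const string Posts = "http://173.46.159.103/service.svc/Posts";

    // Option 1: ask for JSON through content negotiation.
    public static HttpWebRequest ViaAcceptHeader()
    {
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create(Posts);
        request.Accept = "application/json";
        return request;
    }

    // Option 2: ask for JSON in the URL, which is easy to paste into a browser.
    public static HttpWebRequest ViaFormatParameter()
    {
        return (HttpWebRequest)WebRequest.Create(Posts + "?$format=json");
    }

    static void Main()
    {
        // Nothing is sent; the requests are only constructed and inspected.
        Console.WriteLine(ViaAcceptHeader().Accept);
        Console.WriteLine(ViaFormatParameter().RequestUri.Query);
    }
}
```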

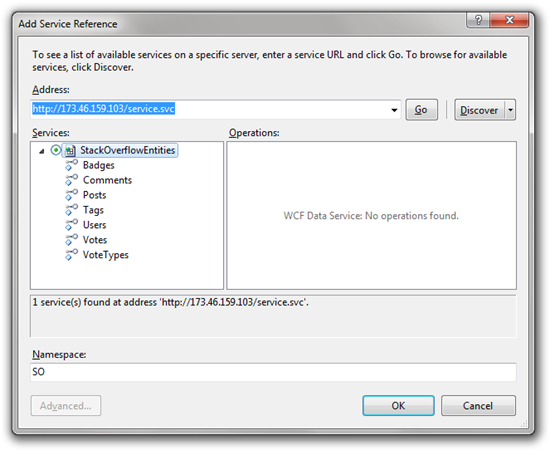

If you've got Visual Studio, just go bust out a Console App real quick. File | New Console App, then right-click in references and hit Add Service Reference. Put in http://173.46.159.103/service.svc and hit OK.

Try something like this. I put the URIs in comments to show you there's no trickery.

    using System;
    using System.Linq;

    class Program
    {
        static void Main(string[] args)
        {
            // StackOverflowEntities and Post are generated by Add Service Reference.
            StackOverflowEntities so = new StackOverflowEntities(new Uri("http://173.46.159.103/service.svc"));

            //{http://173.46.159.103/service.svc/Users()?$filter=substringof('Hanselman',DisplayName)}
            var user = from u in so.Users
                       where u.DisplayName.Contains("Hanselman")
                       select u;

            //{http://173.46.159.103/service.svc/Posts()?$filter=OwnerUserId eq 209}
            var posts =
                from p in so.Posts
                where p.OwnerUserId == user.Single().Id
                select p;

            foreach (Post p in posts)
            {
                Console.WriteLine(p.Body);
            }

            Console.ReadLine();
        }
    }
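The URIs in those comments can also be put together by hand, which is roughly what a client in any other language would do. This is a sketch under assumptions: `ContainsFilter` is a hypothetical helper, not part of any OData library, and it covers only the `Contains` to `substringof` case shown above. The exact percent-encoding that `Uri.EscapeDataString` produces differs between .NET versions, so no output is shown.

```csharp
using System;

class FilterUrlSketch
{
    const string ServiceRoot = "http://173.46.159.103/service.svc";

    // Hypothetical helper: writes property.Contains(value) the way it
    // appears in the $filter option, as substringof('value',Property).
    public static string ContainsFilter(string property, string value)
    {
        // OData string literals are single-quoted; embedded quotes are doubled.
        string literal = "'" + value.Replace("'", "''") + "'";
        string expression = "substringof(" + literal + "," + property + ")";
        return "$filter=" + Uri.EscapeDataString(expression);
    }

    static void Main()
    {
        // Users whose display name contains "Hanselman".
        Console.WriteLine(ServiceRoot + "/Users?" + ContainsFilter("DisplayName", "Hanselman"));

        // Posts owned by user 209, the id used in the post's comment.
        Console.WriteLine(ServiceRoot + "/Posts?$filter=" + Uri.EscapeDataString("OwnerUserId eq 209"));
    }
}
```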

I could keep going with examples in PHP, JavaScript, etc, but you get the point.

Conclusion

StackOverflow has always been incredibly open and generous with their data. I propose that an OData endpoint would give us much more flexible access to their data than a custom XML and/or JSON API that they'll need to be constantly rev'ing.

With a proprietary API, folks will rush to create StackOverflow clients in many languages, but that work is already done with OData including libraries for iPhone, PHP and Java. There's a growing list of OData SDKs that could all be used to talk to a service like this. I could load it into Excel using PowerPivot if I like as well.

Also, this service could totally be extended beyond this simple GET example. You can do complete CRUD with OData and it's not tied to .NET in any way. TweetDeck for StackOverflow perhaps?

I propose we encourage StackOverflow to put more than the 30 minutes that I have put into it and make a proper OData service for their data, rather than a custom API. I volunteer to help. If not, we can do it ourselves with their dump data (perhaps weekly if they can step it up?) and a cloud instance.

Thoughts?

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.