NazTok: An organized neo-Nazi TikTok network is getting millions of views

29 July 2024

By: Nathan Doctor, Guy Fiennes, and Ciarán O’Connor

Self-identified Nazis are openly promoting hate speech and real-world recruitment on TikTok. Not only is the platform failing to remove these videos and accounts, but its algorithm is amplifying their reach.

Content warning: This report includes examples of racist extremist content.

Summary

TikTok hosts hundreds of accounts that openly support Nazism and use the video app to promote their ideology and propaganda, as identified in the analysis laid out in this report. Our research began with one such account and expanded to a wider network of 200 accounts by examining the pro-Nazi accounts it followed and some of the accounts those accounts followed, as well as by searching for violative content and using TikTok’s algorithm. Accounts were included in this study if they produced several videos expressly promoting Nazism or if they featured Nazi symbols in their usernames, profile pictures, etc. Key findings include:

- Pro-Nazi content is receiving tens of millions of views on TikTok. This includes videos featuring Holocaust denial; glorification of Hitler, Nazi-era Germany, and Nazism as a solution to contemporary issues; support for white supremacist mass shooters; and livestreamed footage or recreations of these massacres. Self-identified Nazis discuss TikTok as an amenable platform for spreading their ideology, one that can be used to reach ‘much more people’, even if accounts are brand new or have a low following.

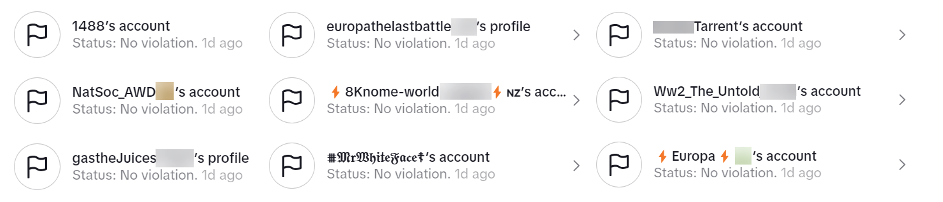

- TikTok is failing to take down violative videos and accounts, even when the content is reported by users. ISD reported 50 accounts in the network that violated TikTok’s community guidelines around hateful ideologies, promotion of violence, Holocaust denial, and other rules. The accounts, which collectively received over 6.2 million views, either promoted Nazism in their videos or featured hate speech alongside Nazi symbols in their profile pictures, usernames, nicknames or descriptions. After one day, all 50 accounts remained active, with all reports returning “no violation”.

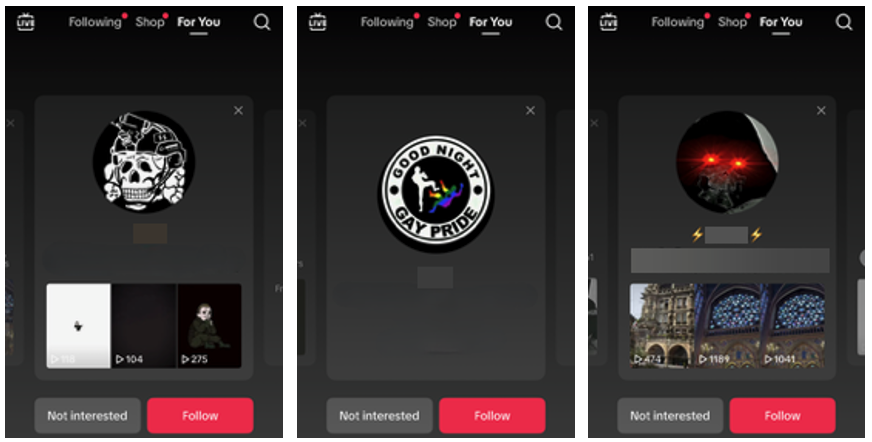

- Accounts and videos promoting Nazism are algorithmically amplified, with TikTok quickly recommending such content to users engaging with similar far-right hate speech. After viewing the pages of 10 accounts in the network and 10 videos total, a new dummy account scrolled through just three videos in TikTok’s For You Page (FYP) before receiving a recommendation for Nazi propaganda. For a longer-standing dummy account which has engaged with more such content, most videos in TikTok’s FYP promote Nazism or hate speech.

- These videos often feature AI-generated media. This content frequently denigrates minorities and promotes Nazism, including, for example, an AI-generated translation of a speech from Hitler which continues to go viral. Such content helps modernize Nazi propaganda and reach a broader international audience, and can be used to skirt platform moderation.

- The prominence of these videos and accounts is partly driven by cross-platform coordination. Self-identified Nazi activists on Telegram are seeking to reach wider audiences through short-form video content. They are coordinating an effort to “juice” videos through mass engagement, sharing downloadable videos, images, and sounds for posting on platforms like TikTok, and encouraging users to post certain kinds of propaganda, such as promotions of the neo-Nazi documentary Europa: The Last Battle.

Figure 1: Sample results of ISD’s mass report of pro-Nazi TikTok accounts.

Methodology

This report was built on a snowball approach to surface the wider network. Beginning with a single self-identified Nazi account on TikTok, we were able to find hundreds of similar users, often with well-known and explicit Nazi slogans or imagery. We collected a list of 200 accounts, particularly those actively posting content which was manually verified to promote Nazi ideology or right-wing extremism. We also followed dozens of these accounts to gain access to private videos and the content on channels set to private.

After discovering numerous accounts and videos using a newly created dummy account, we explored TikTok’s For You Page (FYP) and found that its algorithm immediately began recommending similar content from previously unsurfaced accounts. We discovered additional users by searching for the unique sounds and songs played on TikTok.

Our research found that Telegram channels were sometimes shared by accounts in the network. By following popular out-links, including in newly discovered Telegram feeds, we were able to develop a list of more than 100 pro-Nazi Telegram channels.
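The snowball step described in this section can be sketched in a few lines of code. This is only an illustration under stated assumptions, not ISD's actual tooling: `get_following` and `is_in_scope` are hypothetical callables (the latter stands in for the manual verification described above), the account names are placeholders, and the depth limit of two mirrors "the accounts it followed and some of the accounts those accounts followed".

```python
from collections import deque


def snowball(seed, get_following, is_in_scope, max_accounts=200, max_depth=2):
    """Breadth-first snowball sample starting from a single seed account.

    get_following and is_in_scope are hypothetical callables supplied by
    the caller; is_in_scope stands in for a manual review step.
    """
    included = []
    seen = {seed}
    queue = deque([(seed, 0)])
    while queue and len(included) < max_accounts:
        account, depth = queue.popleft()
        if not is_in_scope(account):
            continue  # out-of-scope accounts are neither kept nor expanded
        included.append(account)
        if depth == max_depth:
            continue  # stop expanding once the depth limit is reached
        for followed in get_following(account):
            if followed not in seen:
                seen.add(followed)
                queue.append((followed, depth + 1))
    return included


# Toy follow graph with placeholder names (not real accounts).
toy_follows = {
    "seed": ["a", "b"],
    "a": ["c", "seed"],
    "b": ["d"],
    "c": ["e"],
    "d": [],
    "e": [],
}
toy_in_scope = {"seed", "a", "c", "e"}

sample = snowball(
    "seed",
    lambda account: toy_follows.get(account, []),
    lambda account: account in toy_in_scope,
)
print(sample)
```

In this toy graph, account "e" is in scope but sits three hops from the seed, so it is not reached. This is the kind of gap that the additional discovery routes above (FYP recommendations, sound searches, out-links) help to close.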

The section below outlines the key findings from our research and is split into four parts – the first focuses on trends in the content shared by Nazis on TikTok, the second on their tactics, the third examines the impact of TikTok’s algorithm on their success and the fourth details how TikTok is responding to the issue.

Trends

Generative AI

The first trend we uncovered was the use of generative artificial intelligence (genAI) by the network, often to create dehumanizing caricatures of non-white racial and ethnic groups as violent or otherwise threatening to white communities. The effort is chiefly to modernize Nazi propaganda: one popular example is an AI-generated translation of a speech by Adolf Hitler, which remains prominent on TikTok. Clips of the audio were used in videos celebrating Joseph Goebbels, promoting the neo-Nazi documentary Europa: The Last Battle, or disseminating coded calls for violence against Jews and other pro-Nazi content, collectively receiving millions of views.

Figure 2: AI-generated propaganda. The top middle image was not created by AI but is exemplary of how speeches of Hitler are spread. The video in question (105k views) is backdropped with an AI-translated speech from Hitler set to Washing Machine Heart by Mitski, a particular TikTok Sound with 1.3m views. 109 is shorthand for the antisemitic claim that Jews have been expelled from 109 countries.

TikTok Sounds & Save Europe

Sounds and audio on TikTok remain a critical vulnerability that is routinely exploited by white supremacists. In 2021, ISD detailed how MGMT’s ‘Little Dark Age’ was highly popular in photo slideshows and compilations of prominent fascists from the 20th century. Songs like this become in-group references that other extremists recognize; they follow the format, create their own TikTok post using the song – usually without any explicit text reference to the extremist content – and contribute to the participatory trend. At its core, the use of music and Sounds as coded references is a tactic for evading TikTok content moderation.

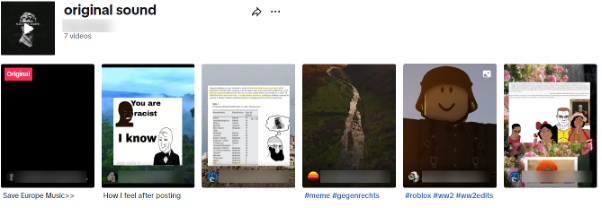

As documented in this Dispatch, this is still the case. Analysis of one account exclusively posting videos with music and visuals drawn from the anti-immigrant ‘Save Europe’ aesthetic illustrates how TikTok Sounds are being used to spread extremist content and ideologies. Music from the account (name redacted) is mostly in the style of 2000s techno and does not violate TikTok’s rules. But this music is commonly paired with memes and images that promote Nazism, fascism and white supremacism.

Figure 3: An original sound created by the aforementioned account was employed in 6 other videos, all promoting racism, Nazism or other far right discourse. The song was created just 6 days prior to the screengrab, and other original sounds from the account have been shared in hundreds of videos collectively.

In many cases, ISD researchers were able to surface content promoting Nazi ideology and glorifying far-right mass shooters more easily by navigating through relevant music than by searching relevant keywords. A search on TikTok for a song played by the Christchurch shooter as he drove away after killing 51 Muslim worshipers yielded 1,200 videos. Seven of the top 10 videos celebrated the attack, its motivation, or the shooter, or even recreated it in a video game.

These seven videos alone received a collective viewership of 1.5m views, and there are likely hundreds of other similar videos freely circulating on the platform. One video included a link in the comment section to footage of the massacre recorded by the attacker to explain a coded reference. We also surfaced several instances of the actual footage which, despite being distorted to evade moderation, was still recognizable.

In some cases, the music used in the videos was explicitly hateful, such as one sound used on 119 videos which included the lyrics: “Kill the f*****s and Jews.” Others included excerpts from speeches by Mussolini and Hitler. Though such speeches can have historical importance, they were mostly used to celebrate the leaders or their messages.

Tactics

Coded Language

Coded language serves as a covert means for users on TikTok to communicate and signal ideology to community members while evading detection by platform moderators. It is a key tactic by which extremist actors on TikTok express support for and propagate their ideologies, as noted in ISD’s 2021 Hatescape report. Nazi-coded emojis, acronyms, numbers, and imagery were frequently shared among accounts analyzed in this study, which likely contributed to TikTok’s slowness in catching violative extremist accounts.

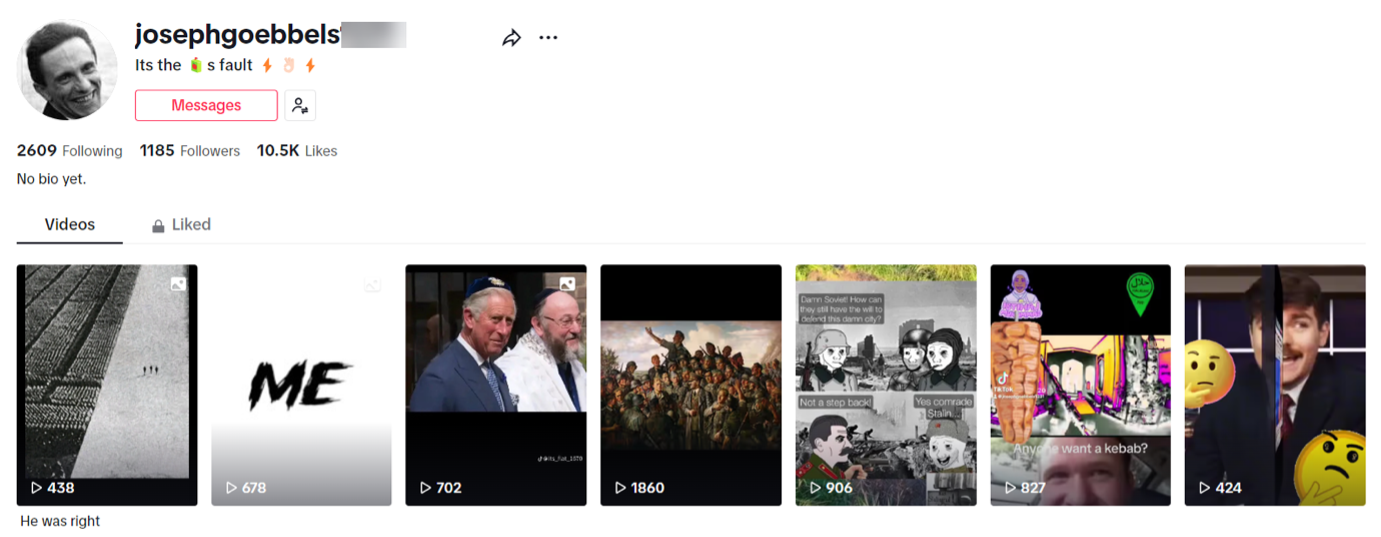

Figure 4: A TikTok account profile of @joseophgoebbels[numbers redacted], nicknamed “Its the 🧃s fault ⚡️👌🏻⚡️” shows how Nazi accounts openly signal ideology to one another and evade detection. The account’s 30 videos feature Holocaust denial, antisemitic conspiracies, celebration of the Christchurch shooting, and glorification of Hitler and Nazi-era Germany. Though the account was banned before this report’s completion, by 3 June 2024, its videos had received over 10k likes and roughly 87k views.

Image Obfuscation

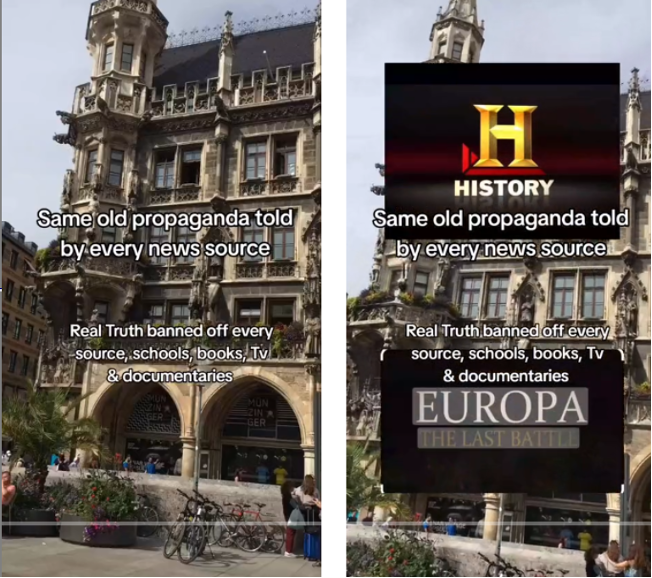

Another frequent tactic involves using innocuous or nostalgic images on videos and as thumbnails. These are often images of European architecture such as cathedrals, designed to evoke pride in European heritage while appearing mundane at first glance. However, these videos quickly transition to extremist content, including Nazi propaganda, hate speech targeting racial minorities, and other extremist material.

Figure 5: Two frames from the same video. Almost immediately after starting, an image promoting Europa: The Last Battle – a neo-Nazi documentary – appears on screen.

Cross-Platform Coordination

The effort to spread propaganda on TikTok is being coordinated on and off the platform by a network of self-identified Nazis. While the campaign includes efforts to “wake people up” on other platforms, such as X and Facebook, TikTok is commonly a focal point for discourse. As one account posted on Telegram: “We posted stuff on our brandnew (sic) tiktok account with 0 followers but had more views than you could ever have on bitchute or twitter. It just reaches much more people.” Another prominent neo-Nazi has frequently urged his thousands of Telegram followers to “juice” his TikTok videos to increase their viral potential.

The most clear-cut example of coordination was an “activist” Telegram channel with over 12k subscribers dedicated to ‘waking people up’ by promoting ‘Europa: The Last Battle’ – a neo-Nazi documentary commonly promoted to move users off TikTok and onto more explicitly Nazi propaganda. The channel features several posts encouraging users to promote the film – one tells subscribers to blanket TikTok with reaction videos to make the film go viral.

During ISD’s investigation, we found countless promotions for ‘Europa: The Last Battle’, including several videos with more than 100k views. TikTok searches for the film, as well as minor variations on its title, yield dozens of videos. Some posted clips using tweaked tags like #EuropaTheLastBattel. One account posting such snippets has received nearly 900k views on their videos, which include claims that the Rothschild family control the media and handpick presidents, as well as other false or antisemitic claims.

Coordination also takes place heavily on TikTok itself. One user posted that they were on their final strike while advertising their backup account. The account was banned soon after. Their new account reached nearly 100,000 total views across five videos within just three days of starting to post Nazi propaganda, including a video celebrating neo-Nazi organizer and alleged murderer Dmitry Borovikov.

This account and many others use a ‘follow-for-follow’ tactic, a common method for building a closely linked network with higher engagement and followings and for facilitating rapid growth. Dozens of accounts feature nearly identical usernames, profile photos, and numbers of followers and followings, indicating they are duplicate accounts from the same user.

Such tactics reveal why TikTok is failing to address the problem: the platform appears to take down accounts or videos individually rather than addressing a wider network as an aggregate. As a result, when users are banned, they are often able to re-create their accounts and experience rapid amplification of their new content.

Recruitment & Off-Platform User Journeys

The evidence of recruitment efforts and real-world harms in the pro-Nazi networks analyzed by ISD is alarming. Several real-world fascist or far-right organizations were found openly recruiting on the platform. A website of antisemitic flyers and instructions on how to print and distribute them was widely disseminated by the accounts monitored. Telegram channels featuring more violent and explicitly extremist discourse and calls to mobilize were commonly shared.

As a case study into how accounts propagate and recruit, ISD analyzed one account whose username contains an antisemitic slur, and its bio reads, “Armed Revolution now. Complete Annihilation of ✡️”. An example post includes the text: “The owners of the West (✡️) will soon have to answer for their crimes against us… These rats must be completely destroyed for their bloodline to end… the only answer is to get weapons and destroy them”, accompanied by a hateful remixed speech, which similarly calls to “kill every single Jew”, “learn to make bombs” and “stop relying on Jewish rats”. The account has 3.8k followers, 5k likes and has been active since 6 February 2023, on which date it published 37 videos. It has shared incomplete instructions to build improvised explosive devices, 3D-printed guns, and “napalm on a budget.” It has further sought to move users into a “secure groupchat” on Element and Tox, presumably where users could access the full instructions. Users in the comment sections indicated they had joined these off-platform groups, illustrating how TikTok is also used to coordinate and mobilize possible terror activity among neo-Nazis.

As of 6 June 2024, the account was following 10k users, many of whom appeared to share similar ideological inclinations. TikTok’s maximum follow limit is 10k, with a cap of 200 new follows per day. This demonstrates that the account was unusually dedicated to following self-identified Nazis and similar extremists on TikTok, likely to network with like-minded individuals; such behavior was not uncommon among the accounts analyzed. ISD reported the account for hate speech. TikTok found no violations, and the account remained active for over a week before eventually being banned.

Algorithmic Recommendations

Much of the content explored in this investigation was surfaced through TikTok’s algorithm, which provides a flow of pro-Nazi accounts and videos to the “For You Page” (FYP) of users. The FYP is a scrolling list of videos that appears by default when opening the app. Two dummy accounts created by ISD began receiving Nazi propaganda shortly after scrolling through similar content.

One account, created at the end of May 2024, took a similar approach to a 2021 Media Matters investigation. ISD watched 10 videos from the network of pro-Nazi users, occasionally clicking on comment sections but without engaging (e.g. liking, commenting or bookmarking), and viewed 10 accounts’ pages. After this superficial interaction, ISD scrolled through TikTok’s FYP, which almost immediately recommended Nazi propaganda. Within just three videos, the algorithm suggested a video featuring a World War II-era Nazi soldier overlaid with a chart of the US murder rate, broken down by race. While most of the early content on the FYP consisted of standard viral TikTok videos, mostly humorous in nature, it took less than 25 videos for an AI-translated speech from Hitler to appear, which was overlaid onto a recruitment poster for a white nationalist group.

ISD’s other account, created in early May and used throughout most of our investigation, found that most of its algorithmic recommendations consisted of such content. 7 out of 10 of its recently recommended videos came from self-identified Nazis or featured Nazi propaganda.

Figure 6: Dummy account’s recently recommended videos.

This dummy account followed several pro-Nazi users, mainly to identify private content and see videos on channels set to private. TikTok periodically recommended accounts to follow, which almost exclusively showed this account similar users. All 10 of the first accounts scrolled through incorporated Nazi symbols or keywords in their usernames or profile photos, or featured Nazi propaganda in their videos.

Figure 7: Examples of dummy account’s recommended accounts to follow. The skull face and “good night gay pride” (among other variants) are common symbols used by Nazis.

Recent reports by GNET have similarly pointed to the role of TikTok’s algorithm in spreading far-right extremist content in evident violation of platform policies, from the veneration of minor fascist ideologues to the adaptation of Eurocentric supremacist narratives in Southeast Asia.

Content Flagging & Platform Response

After identifying and recording 200 accounts, ISD reported a sample of 50 actors that violated TikTok’s community guidelines by dehumanizing and inciting violence against protected groups, promoting hateful ideologies, celebrating violent extremists, denying the Holocaust, and/or sharing materials that promote hate speech and hateful ideologies. We divided these users into two main categories:

- Users who posted several videos promoting Nazism, including the ideology, neo-Nazi mass shooters, Nazi leaders (e.g. Hitler, Goebbels, etc.), symbolism, recruitment and/or distribution of pro-Nazi materials (documentaries, flyers, etc.)

- Users who posted several videos featuring broader hate speech, provided that their accounts also featured known Nazi symbols or other flagrant signals of their ideology in the username, nickname, biography and/or profile picture.

ISD reported these 50 accounts for hate speech to assess how TikTok would moderate them. The platform found no violations, and all accounts remained active the next day. However, two weeks later, 15 of these accounts had been banned. After one month, almost half (23/50) of the accounts had been banned. This suggests that while the platform eventually removes violative content and channels, in many cases it does so only after accounts have had the opportunity to accumulate significant viewership. For example, the 23 banned accounts from the sample dataset managed to accrue at least 2 million views across their content prior to getting banned.
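The removal figures above can be laid out as simple proportions. The short sketch below uses only the counts reported in this section (0, 15 and 23 of 50); the variable and function names are illustrative.

```python
SAMPLE_SIZE = 50

# Number of reported accounts found banned at each check, as stated above.
banned_by_checkpoint = {"one day": 0, "two weeks": 15, "one month": 23}


def ban_rates(banned, total):
    """Return the share of the sample banned at each checkpoint."""
    return {label: count / total for label, count in banned.items()}


rates = ban_rates(banned_by_checkpoint, SAMPLE_SIZE)
for label, rate in rates.items():
    print(f"{label}: {rate:.0%} banned, {1 - rate:.0%} still active")
```

Read this way, more than half of the reported sample (27 of 50 accounts) was still active a month after being flagged.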

Conclusion

TikTok is failing to adequately and promptly take down accounts pushing pro-Nazi hate speech and propaganda. Although the platform manages to periodically take down accounts, this often comes weeks or months after flagrant rule-breaking activity, during which time the accounts are able to accrue significant viewership. Moreover, the networked nature of the campaign and its cross-platform coordination mean that after bans, accounts are able to re-emerge and rack up substantial audiences again and again.

One TikTok channel, for example, has been banned at least four times, posting after each ban on Telegram to follow their new TikTok account. Videos from the user, which center on promoting historical Nazi propaganda, collectively received 88.1k likes, based on screenshots shared to Telegram. Such activity is likely to continue as Nazis apply simple cost-benefit analysis and find that their measures to mitigate bans are successful and their content is reaching a sufficient audience to make the hassle of periodically creating new accounts worthwhile.

Success is not about rooting out all forms of Nazism the moment they appear on the platform but rather about sufficiently raising the barrier to entry. Instead, at present, self-identified Nazis are discussing TikTok as an amenable platform for spreading their ideology, especially when employing a series of countermeasures to evade moderation and amplify content as a network.

In essence, TikTok may not be equipped to handle the coordinated nature of hate speech propagation. The network of accounts explored in this study, which uses follow-for-follow tactics and organizes its efforts off-platform, shows a level of sophistication that individual account reviews will likely miss. This approach enables faster amplification of their content than they would otherwise achieve. Additionally, providing users with final warnings has repeatedly allowed accounts to activate their back-ups in advance and move their followers to new, warning-free accounts.