Measuring research impact: bibliometrics, social media, altmetrics, and the BJGP

INTRODUCTION

Social media platforms such as blogs, Twitter, Facebook, and article reference managers such as Mendeley are now being used to communicate and discuss research. Alternative metrics (‘altmetrics’), first described by Priem and colleagues,1 is a term used to describe ‘web-based metrics for the impact of scholarly material with an emphasis on social media outlets as a source of data’.2 These article-level metrics are increasingly being used in conjunction with traditional bibliometric methods, such as the Impact Factor (IF), the immediacy index, and citation counts, to assess the impact of journals and journal articles and the outputs of individual researchers.

A journal’s IF is the average number of citations received in a given Journal Citation Reports (JCR) year by the articles that the journal published in the preceding 2 years.3 The IF is not article specific, does not show the immediacy of the citation count, and may not be an appropriate or accurate means of assessing the overall impact of research in an article. The h-index4 is another measure that is frequently used to summarise an individual researcher’s citation record. There is a growing consensus that the IF as a single measure of quality is outdated. The San Francisco Declaration on Research Assessment (DORA)5 recommends eliminating the use of journal-based metrics in funding, appointment, and promotion decisions. It suggests that publishers should offer a range of performance measures to assess and evaluate scholarly output. Altmetrics have the potential to add another dimension to this.
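The two traditional measures described above can be expressed as a short sketch. The function names and the example figures are illustrative assumptions and are not taken from the JCR or from any real journal or researcher.

```python
def impact_factor(citations_in_jcr_year: int, citable_items_prev_two_years: int) -> float:
    """Two-year Impact Factor: citations received in the JCR year by items
    published in the preceding 2 years, divided by the number of citable
    items published in those 2 years."""
    if citable_items_prev_two_years <= 0:
        raise ValueError("the journal must have published at least one citable item")
    return citations_in_jcr_year / citable_items_prev_two_years


def h_index(citation_counts: list[int]) -> int:
    """Largest h such that h of the researcher's papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical figures, for illustration only.
example_if = impact_factor(citations_in_jcr_year=450, citable_items_prev_two_years=200)
example_h = h_index([10, 8, 5, 4, 3])
```

With these made-up numbers the journal-level figure is 2.25 and the researcher-level figure is 4. Neither value says anything about an individual article, which is the limitation that article-level metrics are intended to address.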

Traditional bibliometric analysis and peer review have formed the standard methods to assess the ‘scientific status of disciplines, research institutes and scientists’,6 and it is well known that research may be ‘lost’ unless it is published in a journal with a high IF. Databases such as PubMed and Thomson Reuters Web of Science are the most prominent means by which research gains ‘visibility’, and citation data from Web of Science underpin the IF. Online tools, such as social media, have the potential to increase this ‘visibility’.

BJGP ONLINE

The BJGP’s online readership is increasing every year with the introduction of a ‘paper short: web long’ publishing format, open access publishing, and more editorial attention to the traditional news media and newer social media, all of which have the potential to increase attention.7 At the end of 2013 the BJGP switched platforms and moved to its own URL, bjgp.org, which can be accessed directly from PubMed. This has corresponded with a sharp rise in direct downloads from bjgp.org. Direct access to bjgp.org articles from social media sites such as Twitter may further augment this trend.

High online visibility of an article has the potential to draw attention to the publishing journal, to the authors, as well as to the study itself. Online platforms have the capacity to introduce greater transparency into the research publication and dissemination process, because they provide a new opportunity for the data, analyses, and results to be exchanged, stored, and discussed,8 and for post-publication peer review.

MEASURING RESEARCH IMPACT

Peer review of research output has traditionally been used as the basis for research funding decisions by the Higher Education Funding Councils. In the new Research Excellence Framework (REF) 25% of the overall quality assessment is attributable to the impact of the research. It is possible that altmetrics could be used to provide additional indicators of impact.

A combined bibliometric indicator has been proposed to measure the impact of studies in the social sciences: the weighted sum of the IF and the number of citations of the article within a citation window.9 For short citation windows in particular, such alternative indicators can correlate more highly with long-term citation than the standard citation count does, and so may better predict eventual article impact. In the same way, altmetrics could potentially be incorporated in similar alternative indicators to aid quality assessment by peer review. This may be relevant to the more applied primary care research, including, perhaps, health services and health policy research, which is likely to have a wide citation window.
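As a rough illustration of what a weighted sum of this kind looks like, the sketch below combines a journal's IF with an article's early citation count. The function name, the default weight of 0.5, and the example values are assumptions made for illustration; the weighting used in the cited study is not reproduced here.

```python
def combined_indicator(journal_if: float, early_citations: int, if_weight: float = 0.5) -> float:
    """Weighted sum of the journal's IF and the article's citations within a
    short citation window. `if_weight` is a hypothetical weight between 0 and 1;
    the remaining weight is given to the early citation count."""
    if not 0.0 <= if_weight <= 1.0:
        raise ValueError("if_weight must lie between 0 and 1")
    return if_weight * journal_if + (1.0 - if_weight) * early_citations


# Hypothetical article: journal IF of 2.0 and 6 citations in the short window.
score = combined_indicator(journal_if=2.0, early_citations=6, if_weight=0.5)
```

An altmetric count could in principle be added as a further weighted term in the same way, although how such a term should be weighted is an open question.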

Additionally, metrics of social impact based on Twitter, and their correlation with traditional metrics of scientific impact, have been explored. One study examined whether these metrics are sensitive and specific enough to predict highly cited peer-reviewed articles in the Journal of Medical Internet Research (JMIR). It looked at all tweets containing links to JMIR articles within a certain time period; different metrics of social media activity were calculated and compared against citation rates from Scopus and Google Scholar for the linked articles. Predictions of highly cited articles from highly tweeted articles, based on Pearson and Spearman rank correlations, were validated. The study found that highly tweeted articles were also highly cited, with the highest calculated correlation coefficient being 0.69. From this it can be argued that:

‘Tweets can predict highly cited articles within the first 3 days of article publication. Social media activity either increases citations or reflects the underlying qualities of the article that also predict citations, but the true use of these metrics is to measure the distinct concept of social impact.’10
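The two correlation measures named above can be sketched in plain Python as follows. The tweet and citation counts are invented for illustration and are not data from the JMIR study; the helper names are likewise assumptions.

```python
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("samples must have the same length, with at least 2 values")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1 (smallest) upwards, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman rank correlation: the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical counts for five articles (not real data).
tweets = [2, 15, 7, 40, 1]
citations = [3, 9, 12, 30, 1]
rho = spearman(tweets, citations)
```

For these invented counts the rank correlation is approximately 0.9. A high coefficient of this kind shows association only; as the quotation notes, it cannot distinguish between tweets driving citations and both reflecting the underlying qualities of the article.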

However, it is important to note that JMIR is an IT journal, with readers who may be more likely to use social media platforms to share research. Being open access is advantageous to the BJGP because readers are more likely to disseminate open access articles to non-research users than articles that are not open access. Coverage in social media may help potential readers to find the work and then cite it. High-quality, timely articles are likely to be tweeted more and also (independently) cited more.

ALTMETRICS

The development of the concept of altmetrics has been accompanied by a growth in the diversity of web-based tools such as PLoS Article-Level Metrics (ALMs) that ‘provide a suite of established metrics that measure the overall performance and reach of published research articles’.6

Several studies have shown some relationship between altmetrics and previously established impact metrics such as citation analysis.11–13 However, more research is required, including amalgamating quantitative and qualitative studies.

Compared with downloads, citations are delayed by about 2 years, so download statistics and social media use may provide a useful indicator of eventual citations in advance and potentially a useful measure of the scientific value of a research paper.14 Additionally, social media ‘provides rapid dissemination and amplification of content and the ability to lead informal conversations and lead to increased readership and citation’.15 The use of online platforms provides an accessible means to read the most current research, guidelines, and journals, and make recommendations. This, in turn, allows readers to debate and even teach new findings before they emerge on citation registries. However, some prefer not to use social media because of concerns that they appear ‘unprofessional, or would post something incorrect or be misunderstood.’15

THE FUTURE

Altmetrics are new and changing indicators, and measurement is not standardised, making the choice of indicator challenging. However, it may be possible to select the sort of alternative metric to use depending on the type of impact being investigated. For example, if looking at scholarly impact, Mendeley readership or ‘F1000Prime’ reviews (recommendations from an online faculty of scientists on Faculty of 1000 — a publisher of services for life scientists and clinical researchers) can be used, whereas social media will look at public attention and engagement.

Bornmann16 also helpfully critiques the data quality and different aspects of altmetrics, looking at their advantages and disadvantages, concluding that ‘the use of altmetrics is becoming more and more popular and the (critical) discussions about possible application scenarios are increasing.’ Furthermore, he makes the valid point that ‘when altmetrics are used in research evaluation, they are in an informed peer review process, exactly like the traditional metrics.’ Importantly, altmetric data should not be used alone to make decisions about research funding; they should aid, not supersede, bibliometrics. Metrics in general should complement peer review, not trump it.

Altmetric counts cannot be used at present as a measurement of societal impact because more information is needed about user groups; for example, whether impact has been measured by citations in policy documents or guidelines, or used in healthcare commissioning decisions, rather than simply appearing on social media sites.17

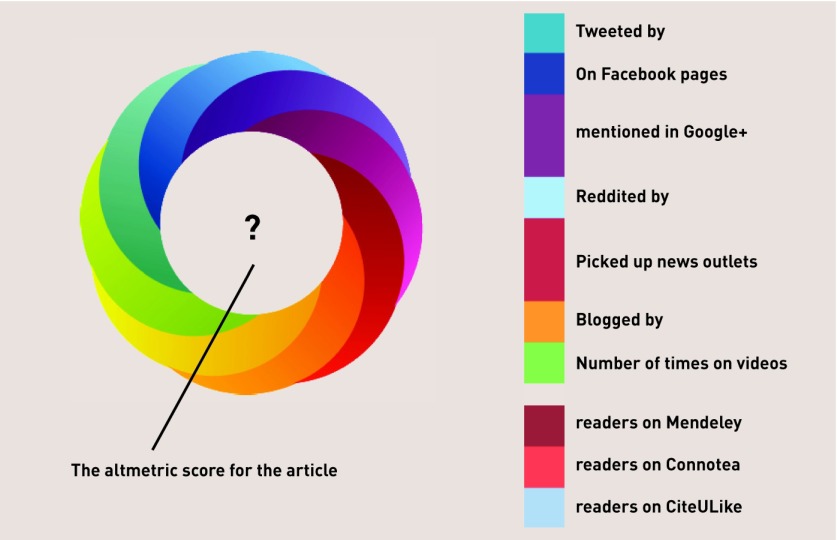

There is no conclusive evidence to link activity on social media platforms with citations or with the impact of an article. Nevertheless, social media may well have a role to play in academia in the future, and should not be ignored. Assessing the value and impact of scholarly work can be modernised, and altmetrics and social media potentially provide the tools to do this. The BJGP has recently started to use the Altmetric donut to indicate how much and what kind of attention each article has received on different platforms. Figure 1 illustrates this button or ‘donut’ and how the different sources can be represented. We envisage these new ways of measuring the effect that BJGP articles are having on their target communities as having an increasing role in the future.

Figure 1.

The Altmetric donut. The different colours represent the different sources mentioning an article. The Altmetric ‘score’ is a ‘measure derived from volume, sources and authors, each having an assigned value’. www.altmetric.com.

Acknowledgments

We are grateful to Euan Adie for valuable suggestions as well as Professor Roger Jones for his contributions, and the BJGP team for their comments.

Provenance

Freely submitted; externally peer reviewed.

Competing interests

The authors have declared no competing interests.

REFERENCES

- 1. Priem J, Taraborelli D, Groth P, Neylon C. altmetrics: a manifesto. 2010. http://altmetrics.org/manifesto/ (accessed 13 Nov 2015).

- 2. Shema H, Bar-Ilan J, Thelwall M. Do blog citations correlate with a higher number of future citations? Research blogs as a potential source for alternative metrics. J Assoc Inf Sci Technol. 2014;65(5):1018–1027.

- 3. McVeigh ME, Mann SJ. The journal impact factor denominator: defining citable (counted) items. JAMA. 2009;302(10):1107–1109. doi: 10.1001/jama.2009.1301.

- 4. Hirsch JE. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci USA. 2005;102(46):16569–16572. doi: 10.1073/pnas.0507655102.

- 5. San Francisco Declaration on Research Assessment. 2012. http://www.ascb.org/dora/ (accessed 13 Nov 2015).

- 6. van Weel C. Biomedical science matters for people — so its impact should be better assessed. Lancet. 2002;360(9339):1034–1035. doi: 10.1016/S0140-6736(02)11175-5.

- 7. Jones R, Green E, Hull C, et al. Making an impact: research, publications, and bibliometrics in the BJGP. Br J Gen Pract. 2012. doi: 10.3399/bjgp12X630214.

- 8. Alhoori H, Furuta R. Do altmetrics follow the crowd or does the crowd follow altmetrics? Proceedings of the 14th ACM/IEEE-CS Joint Conference on Digital Libraries; 8–12 September 2014; London. pp. 375–378.

- 9. Levitt JM, Thelwall M. A combined bibliometric indicator to predict article impact. Inform Process Manag. 2011;47(2):300–308.

- 10. Eysenbach G. Can tweets predict citations? Metrics of social impact based on Twitter and correlation with traditional metrics of scientific impact. J Med Internet Res. 2011;13(4):e123. doi: 10.2196/jmir.2012.

- 11. Kousha K, Thelwall M. Google book search: citation analysis for social science and the humanities. J Assoc Inf Sci Technol. 2009;60(8):1537–1549.

- 12. Costas R, Zahedi Z, Wouters P. Do ‘altmetrics’ correlate with citations? Extensive comparison of altmetric indicators with citations from a multidisciplinary perspective. J Assoc Inf Sci Technol. 2015;66(10):2003–2019.

- 13. Vaughan L, Shaw D. A new look at evidence of scholarly citation in citation indexes and from web sources. Scientometrics. 2008;74(2):317–330.

- 14. Perneger TV. Relation between online ‘hit counts’ and subsequent citations: prospective study of research papers in the BMJ. BMJ (Clinical Research Ed). 2004;329(7465):546–547. doi: 10.1136/bmj.329.7465.546.

- 15. Osterrieder A. The value and use of social media as communication tool in the plant sciences. Plant Methods. 2013;9(1):26. doi: 10.1186/1746-4811-9-26.

- 16. Bornmann L. Do altmetrics point to the broader impact of research? An overview of benefits and disadvantages of altmetrics. J Informetrics. 2014;8(4):895–903.

- 17. Liu J, Adie E. Five challenges in altmetrics: a toolmaker’s perspective. Bul Am Soc Info Sci Tech. 2013;39(4):31–34.