Notes from the first Ray meetup

Ray is beginning to be used to power large-scale, real-time AI applications.

August 15, 2018

Machine learning adoption is accelerating due to the growing number of large labeled data sets, languages aimed at data scientists (R, Julia, Python), frameworks (scikit-learn, PyTorch, TensorFlow, etc.), and tools for building infrastructure to support end-to-end applications. While some interesting applications of unsupervised learning are beginning to emerge, many current machine learning applications rely on supervised learning. In a recent series of posts, Ben Recht makes the case that some of the most interesting problems might actually fall under reinforcement learning (RL), specifically systems that are able to act based upon past data and do so in a way that is safe, robust, and reliable.

But first we need RL tools that are accessible to practitioners. Unlike supervised learning, RL has not had a good open source tool that makes it easy to experiment at scale. I think things are about to change. I was fortunate enough to receive an invite to the first meetup devoted to Ray, RISE Lab’s high-performance, distributed execution engine, which targets emerging AI applications, including those that rely on reinforcement learning. This was a small, invite-only affair held at OpenAI, and most of the attendees were interested in reinforcement learning.


Here’s a brief rundown of the program:

- Robert Nishihara and Philipp Moritz gave a brief overview and update on the Ray project, including a description of items on the near-term roadmap.

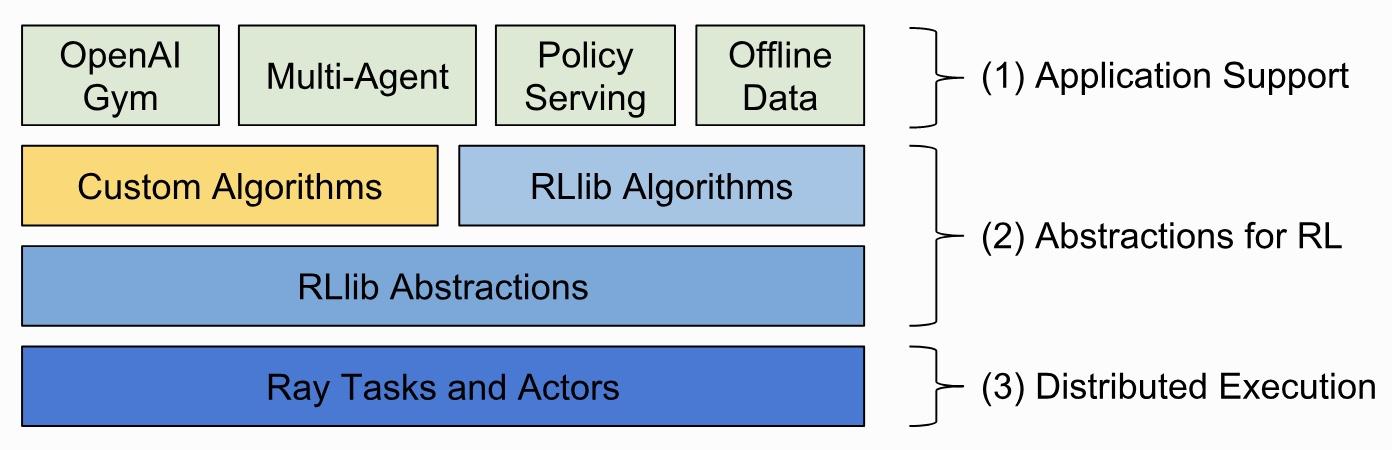

- Eric Liang and Richard Liaw gave a quick tour of two libraries built on top of Ray: RLlib (scalable reinforcement learning) and Tune (a hyperparameter optimization framework). They also pointed a to a recent ICML paper on RLlib. Both of these libraries are easily accessible to anyone familiar with Python, and both should prove popular among industrial data scientists.

Figure 1. RLlib and reinforcement learning. Image courtesy of RISE Lab.

- Eugene Vinitsky showed some amazing videos of how Ray is helping his team understand and predict traffic patterns in real time, and, in the process, helping researchers study large transportation networks. The videos were some of the best examples of the combination of IoT, sensor networks, and reinforcement learning that I’ve seen.

- Alex Bao of Ant Financial described three applications they’ve identified for Ray. I’m not sure I’m allowed to describe them here, but they were all very interesting and important use cases. The most important takeaway of the evening was that Ant Financial is already using Ray in production in two of the three use cases (and is close to deploying Ray to production for the third)! Given that Ant Financial is the largest unicorn company in the world, this is amazing validation for Ray.

With the buzz generated by the evening’s presentations and early production deployments beginning to happen, I expect Ray meetups to start springing up in other geographic areas. We are still in the early stages of adoption of machine learning technologies. The presentations at this meetup confirm that an accessible and scalable platform like Ray opens up many possible applications of reinforcement learning and online learning.

For more on Ray:

- Visit the project page on GitHub.

- Attend the upcoming three-hour tutorials on “Building reinforcement learning applications with Ray” at the Artificial Intelligence conference in San Francisco (September 5, 2018) and London (October 9, 2018).

- “Introducing RLlib: A composable and scalable reinforcement learning library”

- Robert Nishihara and Philipp Moritz on the O’Reilly Data Show podcast: “How Ray makes continuous learning accessible and easy to scale”