5 key things to know about the margin of error in election polls (original) (raw)

In presidential elections, even the smallest changes in horse-race poll results seem to become imbued with deep meaning. But they are often overstated. Pollsters disclose a margin of error so that consumers can have an understanding of how much precision they can reasonably expect. But cool-headed reporting on polls is harder than it looks, because some of the better-known statistical rules of thumb that a smart consumer might think apply are more nuanced than they seem. In other words, as is so often true in life, it’s complicated.

Here are some tips on how to think about a poll’s margin of error and what it means for the different kinds of things we often try to learn from survey data.

What is the margin of error anyway?

Because surveys only talk to a sample of the population, we know that the result probably won’t exactly match the “true” result that we would get if we interviewed everyone in the population. The margin of sampling error describes how close we can reasonably expect a survey result to fall relative to the true population value. A margin of error of plus or minus 3 percentage points at the 95% confidence level means that if we fielded the same survey 100 times, we would expect the result to be within 3 percentage points of the true population value 95 of those times.

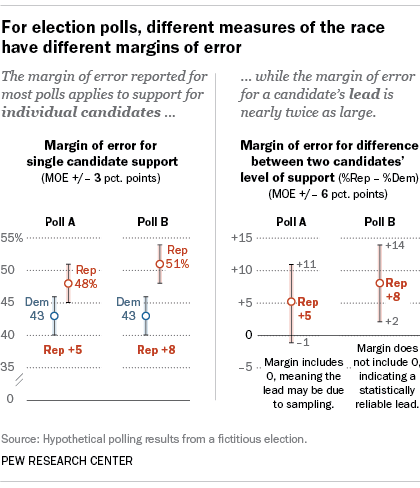

The margin of error that pollsters customarily report describes the amount of variability we can expect around an individual candidate’s level of support. For example, in the accompanying graphic, a hypothetical Poll A shows the Republican candidate with 48% support. A plus or minus 3 percentage point margin of error would mean that 48% Republican support is within the range of what we would expect if the true level of support in the full population lies somewhere 3 points in either direction – i.e., between 45% and 51%.

How do I know if a candidate’s lead is ‘outside the margin of error’?

News reports about polling will often say that a candidate’s lead is “outside the margin of error” to indicate that a candidate’s lead is greater than what we would expect from sampling error, or that a race is “a statistical tie” if it’s too close to call. It is not enough for one candidate to be ahead by more than the margin of error that is reported for individual candidates (i.e., ahead by more than 3 points, in our example). To determine whether or not the race is too close to call, we need to calculate a new margin of error for the difference between the two candidates’ levels of support. The size of this margin is generally about twice that of the margin for an individual candidate. The larger margin of error is due to the fact that if the Republican share is too high by chance, it follows that the Democratic share is likely too low, and vice versa.

For Poll A, the 3-percentage-point margin of error for each candidate individually becomes approximately a 6-point margin of error for the difference between the two. This means that although we have observed a 5-point lead for the Republican, we could reasonably expect their true position relative to the Democrat to lie somewhere between –1 and +11 percentage points. The Republican would need to be ahead by 6 percentage points or more for us to be confident that the lead is not simply the result of sampling error.

In Poll B, which also has a 3-point margin of error for each individual candidate and a 6-point margin for the difference, the Republican lead of 8 percentage points is large enough that it is unlikely to be due to sampling error alone.

How do I know if there has been a change in the race?

With new polling numbers coming out daily, it is common to see media reports that describe a candidate’s lead as growing or shrinking from poll to poll. But how can we distinguish real change from statistical noise? As with the difference between two candidates, the margin of error for the difference between two polls may be larger than you think.

In the example in our graphic, the Republican candidate moves from a lead of 5 percentage points in Poll A to a lead of 8 points in Poll B, for a net change of +3 percentage points. But taking into account sampling variability, the margin of error for that 3-point shift is plus or minus 8 percentage points. In other words, the shift that we have observed is statistically consistent with anything from a 5-point decline to an 11-point increase in the Republican’s position relative to the Democrat. This is not to say such large shifts are likely to have actually occurred (or that no change has occurred), but rather that we cannot reliably distinguish real change from noise based on just these two surveys. The level of observed change from one poll to the next would need to be quite large in order for us to say with confidence that a change in the horse-race margin is due to more than sampling variability.

Even when we do see large swings in support from one poll to the next, one should exercise caution in accepting them at face value. From Jan. 1, 2012, through the election in November, Huffpost Pollster listed 590 national polls on the presidential contest between Barack Obama and Mitt Romney. Using the traditional 95% threshold, we would expect 5% (about 30) of those polls to produce estimates that differ from the true population value by more than the margin of error. Some of these might be quite far from the truth.

Yet often these outlier polls end up receiving a great deal of attention because they imply a big change in the state of the race and tell a dramatic story. When confronted with a particularly surprising or dramatic result, it’s always best to be patient and see if it is replicated in subsequent surveys. A result that is inconsistent with other polling is not necessarily wrong, but real changes in the state of a campaign should show up in other surveys as well.

The amount of precision that can be expected for comparisons between two polls will depend on the details of the specific polls being compared. In practice, almost any two polls on their own will prove insufficient for reliably measuring a change in the horse race. But a series of polls showing a gradual increase in a candidate’s lead can often be taken as evidence for a real trend, even if the difference between individual surveys is within the margin of error. As a general rule, looking at trends and patterns that emerge from a number of different polls can provide more confidence than looking at only one or two.

How does the margin of error apply to subgroups?

Generally, the reported margin of error for a poll applies to estimates that use the whole sample (e.g., all adults, all registered voters or all likely voters who were surveyed). But polls often report on subgroups, such as young people, white men or Hispanics. Because survey estimates on subgroups of the population have fewer cases, their margins of error are larger – in some cases much larger.

A simple random sample of 1,067 cases has a margin of error of plus or minus 3 percentage points for estimates of overall support for individual candidates. For a subgroup such as Hispanics, who make up about 15% of the U.S. adult population, the sample size would be about 160 cases if represented proportionately. This would mean a margin of error of plus or minus 8 percentage points for individual candidates and a margin of error of plus or minus 16 percentage points for the difference between two candidates. In practice, some demographic subgroups such as minorities and young people are less likely to respond to surveys and need to be “weighted up,” meaning that estimates for these groups often rely on even smaller sample sizes. Some polling organizations, including Pew Research Center, report margins of error for subgroups or make them available upon request.

What determines the amount of error in survey estimates?

Many poll watchers know that the margin of error for a survey is driven primarily by the sample size. But there are other factors that also affect the variability of estimates. For public opinion polls, a particularly important contributor is weighting. Without adjustment, polls tend to overrepresent people who are easier to reach and underrepresent those types of people who are harder to interview. In order to make their results more representative pollsters weight their data so that it matches the population – usually based on a number of demographic measures. Weighting is a crucial step for avoiding biased results, but it also has the effect of making the margin of error larger. Statisticians call this increase in variability the design effect.

It is important that pollsters take the design effect into account when they report the margin of error for a survey. If they do not, they are claiming more precision than their survey actually warrants. Members of the American Association for Public Opinion Research’s Transparency Initiative (including Pew Research Center) are required to disclose how their weighting was performed and whether or not the reported margin of error accounts for the design effect.

It is also important to bear in mind that the sampling variability described by the margin of error is only one of many possible sources of error that can affect survey estimates. Different survey firms use different procedures or question wording that can affect the results. Certain kinds of respondents may be less likely to be sampled or respond to some surveys (for instance, people without internet access cannot take online surveys). Respondents might not be candid about controversial opinions when talking to an interviewer on the phone, or might answer in ways that present themselves in a favorable light (such as claiming to be registered to vote when they are not).

For election surveys in particular, estimates that look at “likely voters” rely on models and predictions about who will turn out to vote that may also introduce error. Unlike sampling error, which can be calculated, these other sorts of error are much more difficult to quantify and are rarely reported. But they are present nonetheless, and polling consumers should keep them in mind when interpreting survey results.