Fears grow about big tech guiding U.S. AI policy

Academics and industry experts fear that big tech companies are playing an outsized role in U.S. AI policy, as the federal government lacks regulations cementing AI safety guardrails to hold companies accountable.

While a handful of U.S. states have passed laws governing AI use, Congress has yet to pass such legislation. Without regulation, President Joe Biden's administration relies on companies voluntarily developing safe AI.

Bruce Schneier, an adjunct lecturer in public policy at Harvard Kennedy School, said his concern is that leading AI companies are "making the rules." He said there's "nothing magic about AI companies that makes them do things to the benefit of others" and that, because of its dangers, technology like AI needs to be regulated much like the airline and pharmaceutical industries.

"If you're an ax murderer and you're in charge of the murder laws, that'd be great, right?" Schneier said. "Do I want the tech companies writing the laws to regulate themselves? No. I don't want the ax murderers writing the murder code laws. It's just not good policy."

Gartner analyst Avivah Litan said the Biden administration's reliance on big tech to self-govern is concerning.

"The Biden administration, they're just listening to these big companies," Litan said. "They're built to be self-serving for profit -- they're not built to protect the public."

Academics lament reliance on big tech for safe AI use

Without regulation, the Biden administration cannot enforce AI safety measures and guardrails, meaning it's up to the companies to decide what steps to take. If companies get AI wrong, Schneier said, it's a technology that can be "very dangerous."

"The fact that these companies are unregulated is a disaster," Schneier said. "We don't want actual killer robots when we start regulating."

The idea that big tech is following its own rules for AI is "unacceptable," said Moshe Vardi, a computational engineering professor at Rice University. Vardi was one of more than 33,000 signatories on an open letter circulated in 2023 that called on companies to pause training large AI systems due to AI's risks.

Social media companies like Meta, operator of Facebook and Instagram, have demonstrated the risks of leaving the tech industry unregulated and without accountability, Vardi said. The social media giant knew its platforms harmed teens' mental health, he noted. Facebook whistleblower Frances Haugen testified before Congress about Facebook's lack of transparency on internal studies about social media's harms.

Vardi said his concern is that the same companies that ignored the risks of social media are now largely behind the use and deployment of massive AI systems. He said he's concerned that the tech industry faces no liability when harm occurs.

"They knew about the risks of too much screen time," Vardi said. "Now you take this industry that has a proven track record of being socially irresponsible ... they're rushing ahead into even more powerful technology. I know what is going to drive Silicon Valley is just profits, end of story. That's what scares me."

Holding the tech industry accountable should be a priority, he said.

"We have an industry that's so powerful, so rich and not accountable," Vardi said. "It's scary for democracy."

U.S. AI policy hangs on voluntary commitments

In July 2023, Biden secured voluntary commitments to the safe and secure development of AI from Google, Amazon, Microsoft, Meta, OpenAI, Anthropic and Inflection. Apple joined the list of companies committed to developing safe AI systems a year later.

Vardi said the voluntary commitments are vague and not enough to ensure safe AI. He added that he is skeptical of companies' "good intentions."

"Imagine that was big pharma," Vardi said. "Imagine that the Biden administration made voluntary commitments the only market safeguard. It would be laughable."

As Congress stalls on AI regulation, the Biden administration continues to rely on big tech's guidance, particularly as the global AI race escalates.

The White House hosted a roundtable with big tech companies on U.S. leadership in AI infrastructure earlier this month. To accelerate public-private collaboration on U.S. AI leadership, the Biden administration launched a new Task Force on AI Datacenter Infrastructure to help coordinate policy across government. "The Task Force will work with AI infrastructure leaders" to prioritize AI data center development, according to a news release. Tech companies deploying large AI systems need AI data centers to power large language models, and plan to build such data centers in multiple countries, not just the U.S.

The announcement sparked backlash from entities like the Athena Coalition, a group focused on empowering small businesses and challenging big tech companies. Emily Peterson-Cassin, director of corporate power at the nonprofit Demand Progress, pointed out that many industry participants in the White House roundtable are under federal investigation for anticompetitive business practices and "in some cases because of the way they are running their AI businesses."

"These are not the people we should trust to provide input on building responsible AI infrastructure that serves the public good," she said in a statement.

Big tech companies provide input not only to the Biden administration, but to different federal agencies as well.

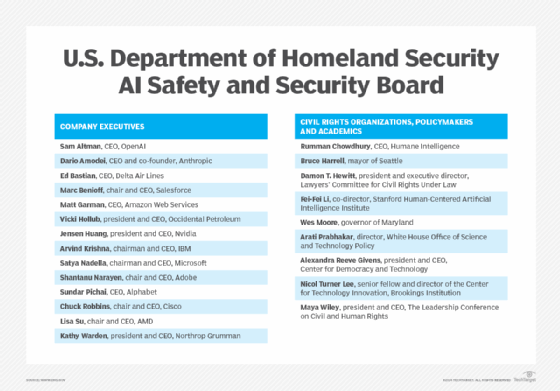

Multiple tech companies, including Nvidia, Google, Amazon, Microsoft and OpenAI, serve on the Department of Homeland Security's Artificial Intelligence Safety and Security Board to advise on secure AI deployment in critical infrastructure. They also participate in the Artificial Intelligence Safety Institute Consortium, companion to the U.S. Artificial Intelligence Safety Institute within the National Institute of Standards and Technology, to "enable the development and deployment of safe and trustworthy AI systems."

"They're not being leaned on," Schneier said of big tech companies. "They're actually making the rules."

CEOs of several big tech companies, including OpenAI, Microsoft and AWS, serve on the Department of Homeland Security's AI Safety and Security Board.

Big tech involvement in AI policy could harm small businesses

Gartner's Litan worries that big tech companies' involvement at the federal level might harm innovation in the startup community. For example, the 23-member AI Safety and Security Board includes CEOs from big-name companies and leadership from civil liberties organizations, but no one from smaller AI companies.

"There's no one representing innovation in startups," she said.

Litan also raised concerns about Biden's executive order on AI. It directs the Department of Commerce to use the Defense Production Act to compel AI systems developers to report information like AI safety tests, which she said could be anticompetitive for smaller businesses.

She said the Defense Production Act is being used to "squash innovation and competition" because the only companies that can afford to comply with the testing requirements are large companies.

"I think the administration is getting manipulated," she said.

Large companies might push for new rules and regulations that could disadvantage smaller businesses, said David Inserra, a fellow at libertarian think tank Cato Institute. He added that these regulations might create requirements that smaller companies cannot meet.

"My worry would be that we would be seeing a world where the rules are written in a way which maybe help current big actors or certain viewpoints," Inserra said.

Big tech companies outline work on voluntary commitments

TechTarget Editorial reached out to all seven companies that originally made voluntary commitments for an update on what steps the companies took to meet those commitments. The reported voluntary measures included internal and external testing of AI systems before release, enabling third-party reporting of AI vulnerabilities within the companies' systems, and creating technical measures to identify AI-generated content. Meta, Anthropic, Google and Inflection did not respond.

Amazon said it has embedded invisible watermarks by default into images generated by its Amazon Titan Image Generator. The company created AI service cards that provide details on its AI use and launched additional safeguard capabilities for Amazon Bedrock, its generative AI tool on AWS.

Meanwhile, Microsoft said it expanded its AI red team to identify and assess AI security vulnerabilities. In February, Microsoft released the Python Risk Identification Tool for generative AI, which helps developers find risks in their generative AI apps. The company also invested in tools to identify AI-generated audio and visual content. In a statement to TechTarget, Microsoft said it began automatically attaching "provenance metadata to images generated with OpenAI's Dall-E 3 model in our Azure OpenAI Service, Microsoft Designer and Microsoft Paint."

In a statement provided to TechTarget by OpenAI, the company described the White House's voluntary commitments as a "crucial first step toward our shared goal of promoting the development of safe, secure and trustworthy AI."

"These commitments have helped guide our work over the past year, and we continue to work alongside governments, civil society and other industry leaders to advance AI governance going forward," an OpenAI spokesperson said.

Makenzie Holland is a senior news writer covering big tech and federal regulation. Prior to joining TechTarget Editorial, she was a general assignment reporter for the Wilmington StarNews and a crime and education reporter at the Wabash Plain Dealer.