Object detection with Model Garden

This tutorial fine-tunes a RetinaNet with a ResNet-50 backbone from the TensorFlow Model Garden package (tensorflow-models) to detect three types of blood cells in the BCCD dataset. The RetinaNet is pretrained on COCO train2017 and evaluated on COCO val2017.

Model Garden contains a collection of state-of-the-art models, implemented with TensorFlow's high-level APIs. The implementations demonstrate the best practices for modeling, letting users take full advantage of TensorFlow for their research and product development.

This tutorial demonstrates how to:

- Use models from the TensorFlow Model Garden (TFM) package.

- Fine-tune a pre-trained RetinaNet with ResNet-50 as backbone for object detection.

- Export the tuned RetinaNet model.

Install necessary dependencies

pip install -U -q "tf-models-official"

Import required libraries

import os

import io

import pprint

import tempfile

import matplotlib

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from PIL import Image

from six import BytesIO

from IPython import display

from urllib.request import urlopen

2023-11-09 12:15:18.455434: E tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:9342] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered 2023-11-09 12:15:18.455488: E tensorflow/compiler/xla/stream_executor/cuda/cuda_fft.cc:609] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered 2023-11-09 12:15:18.455537: E tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:1518] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

Import required libraries from TensorFlow Models

import orbit

import tensorflow_models as tfm

from official.core import exp_factory

from official.core import config_definitions as cfg

from official.vision.serving import export_saved_model_lib

from official.vision.ops.preprocess_ops import normalize_image

from official.vision.ops.preprocess_ops import resize_and_crop_image

from official.vision.utils.object_detection import visualization_utils

from official.vision.dataloaders.tf_example_decoder import TfExampleDecoder

pp = pprint.PrettyPrinter(indent=4) # Set Pretty Print Indentation

print(tf.__version__) # Check the version of tensorflow used

%matplotlib inline

2.14.0

Custom dataset preparation for object detection

Models in the official repository (Model Garden) require data in the TFRecord format.

Please check this resource to learn more about TFRecords data format.
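To make the container format less abstract, here is a minimal pure-Python sketch of how a TFRecord file frames its records: an 8-byte little-endian length, a masked CRC-32C of that length, the payload, and a masked CRC-32C of the payload. This is an illustration only, not the TensorFlow implementation; the helper names (`crc32c`, `masked_crc`, `write_record`, `read_records`) are made up for this example. In the files used in this tutorial each payload would be a serialized `tf.train.Example`, whereas the sketch uses arbitrary bytes.

```python
import io
import struct

_MASK_DELTA = 0xA282EAD8  # constant used when masking the checksum


def _make_crc32c_table():
    """Builds the lookup table for CRC-32C (Castagnoli, reflected polynomial)."""
    table = []
    for i in range(256):
        crc = i
        for _ in range(8):
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
        table.append(crc)
    return table


_CRC32C_TABLE = _make_crc32c_table()


def crc32c(data: bytes) -> int:
    """Computes the CRC-32C checksum of `data`."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc = _CRC32C_TABLE[(crc ^ byte) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF


def masked_crc(data: bytes) -> int:
    """Rotates the checksum right by 15 bits and adds a constant (mod 2**32)."""
    crc = crc32c(data)
    return (((crc >> 15) | (crc << 17)) + _MASK_DELTA) & 0xFFFFFFFF


def write_record(stream, payload: bytes) -> None:
    """Appends one framed record to a binary stream."""
    header = struct.pack('<Q', len(payload))
    stream.write(header)
    stream.write(struct.pack('<I', masked_crc(header)))
    stream.write(payload)
    stream.write(struct.pack('<I', masked_crc(payload)))


def read_records(stream):
    """Yields payloads from a binary stream, verifying both checksums."""
    while True:
        header = stream.read(8)
        if not header:
            return
        (length,) = struct.unpack('<Q', header)
        (length_crc,) = struct.unpack('<I', stream.read(4))
        if length_crc != masked_crc(header):
            raise ValueError('corrupt length field')
        payload = stream.read(length)
        (payload_crc,) = struct.unpack('<I', stream.read(4))
        if payload_crc != masked_crc(payload):
            raise ValueError('corrupt payload')
        yield payload


# Round trip through an in-memory buffer.
buffer = io.BytesIO()
write_record(buffer, b'first example')
write_record(buffer, b'second example')
buffer.seek(0)
print(list(read_records(buffer)))
```

Each record therefore costs 16 bytes of framing on top of its payload, which is one reason the format suits large sequential reads.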

Upload your custom data to Drive or to the local disk of the notebook, then unzip it.

curl -L 'https://public.roboflow.com/ds/ZpYLqHeT0W?key=ZXfZLRnhsc' > './BCCD.v1-bccd.coco.zip'

unzip -q -o './BCCD.v1-bccd.coco.zip' -d './BCC.v1-bccd.coco/'

rm './BCCD.v1-bccd.coco.zip'

% Total % Received % Xferd Average Speed Time Time Time Current Dload Upload Total Spent Left Speed 100 892 100 892 0 0 3391 0 --:--:-- --:--:-- --:--:-- 3391 100 15.2M 100 15.2M 0 0 28.7M 0 --:--:-- --:--:-- --:--:-- 288M

CLI command to convert data (train data).

TRAIN_DATA_DIR='./BCC.v1-bccd.coco/train'

TRAIN_ANNOTATION_FILE_DIR='./BCC.v1-bccd.coco/train/_annotations.coco.json'

OUTPUT_TFRECORD_TRAIN='./bccd_coco_tfrecords/train'

# Need to provide

# 1. image_dir: where images are present

# 2. object_annotations_file: where annotations are listed in json format

# 3. output_file_prefix: where to write the converted TFRecord files

python -m official.vision.data.create_coco_tf_record --logtostderr \

--image_dir=${TRAIN_DATA_DIR} \

--object_annotations_file=${TRAIN_ANNOTATION_FILE_DIR} \

--output_file_prefix=$OUTPUT_TFRECORD_TRAIN \

--num_shards=1

2023-11-09 12:15:24.203902: E tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:9342] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered 2023-11-09 12:15:24.203959: E tensorflow/compiler/xla/stream_executor/cuda/cuda_fft.cc:609] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered 2023-11-09 12:15:24.203987: E tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:1518] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered 2023-11-09 12:15:27.899086: W tensorflow/core/common_runtime/gpu/gpu_device.cc:2211] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform. Skipping registering GPU devices... I1109 12:15:27.900500 140558803519296 create_coco_tf_record.py:502] writing to output path: ./bccd_coco_tfrecords/train I1109 12:15:28.110808 140558803519296 create_coco_tf_record.py:374] Building bounding box index. I1109 12:15:28.112704 140558803519296 create_coco_tf_record.py:385] 0 images are missing bboxes. 
I1109 12:15:28.376975 140558803519296 tfrecord_lib.py:168] On image 0 I1109 12:15:28.383184 140558803519296 tfrecord_lib.py:168] On image 100 I1109 12:15:28.388326 140558803519296 tfrecord_lib.py:168] On image 200 I1109 12:15:28.393295 140558803519296 tfrecord_lib.py:168] On image 300 I1109 12:15:28.398152 140558803519296 tfrecord_lib.py:168] On image 400 I1109 12:15:28.403067 140558803519296 tfrecord_lib.py:168] On image 500 I1109 12:15:28.407919 140558803519296 tfrecord_lib.py:168] On image 600 I1109 12:15:28.412595 140558803519296 tfrecord_lib.py:168] On image 700 I1109 12:15:28.438800 140558803519296 tfrecord_lib.py:180] Finished writing, skipped 6 annotations. I1109 12:15:28.445898 140558803519296 create_coco_tf_record.py:537] Finished writing, skipped 6 annotations.
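The log above ends with "skipped 6 annotations". A quick way to find candidates for skipping is to scan the COCO JSON before converting it. The sketch below is a hypothetical helper (`find_suspect_boxes` is not part of Model Garden). It assumes that an annotation is dropped when its box has non-positive width or height or extends past the image border; that criterion is an assumption about the converter, so treat the result as a hint rather than an exact prediction.

```python
import json


def find_suspect_boxes(coco):
    """Returns ids of annotations whose box is empty or leaves the image.

    `coco` is a parsed COCO annotation dict with 'images' and 'annotations'.
    Boxes use the COCO layout [x, y, width, height] in pixels.
    """
    sizes = {img['id']: (img['width'], img['height']) for img in coco['images']}
    suspect = []
    for ann in coco['annotations']:
        x, y, w, h = ann['bbox']
        img_w, img_h = sizes[ann['image_id']]
        if w <= 0 or h <= 0 or x + w > img_w or y + h > img_h:
            suspect.append(ann['id'])
    return suspect


# Made-up sample data; a real file would be loaded with json.load(open(path)).
sample = json.loads("""
{
  "images": [{"id": 1, "width": 640, "height": 480}],
  "annotations": [
    {"id": 10, "image_id": 1, "bbox": [10, 10, 50, 50]},
    {"id": 11, "image_id": 1, "bbox": [600, 10, 50, 50]},
    {"id": 12, "image_id": 1, "bbox": [10, 10, 0, 20]}
  ]
}
""")
print(find_suspect_boxes(sample))
```

For the training split, the same function could be applied to the parsed contents of './BCC.v1-bccd.coco/train/_annotations.coco.json'.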

CLI command to convert data (validation data).

VALID_DATA_DIR='./BCC.v1-bccd.coco/valid'

VALID_ANNOTATION_FILE_DIR='./BCC.v1-bccd.coco/valid/_annotations.coco.json'

OUTPUT_TFRECORD_VALID='./bccd_coco_tfrecords/valid'

python -m official.vision.data.create_coco_tf_record --logtostderr \

--image_dir=$VALID_DATA_DIR \

--object_annotations_file=$VALID_ANNOTATION_FILE_DIR \

--output_file_prefix=$OUTPUT_TFRECORD_VALID \

--num_shards=1

2023-11-09 12:15:29.695864: E tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:9342] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered 2023-11-09 12:15:29.695909: E tensorflow/compiler/xla/stream_executor/cuda/cuda_fft.cc:609] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered 2023-11-09 12:15:29.695940: E tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:1518] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered 2023-11-09 12:15:33.418966: W tensorflow/core/common_runtime/gpu/gpu_device.cc:2211] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform. Skipping registering GPU devices... I1109 12:15:33.419316 140466682771264 create_coco_tf_record.py:502] writing to output path: ./bccd_coco_tfrecords/valid I1109 12:15:33.426927 140466682771264 create_coco_tf_record.py:374] Building bounding box index. I1109 12:15:33.427194 140466682771264 create_coco_tf_record.py:385] 0 images are missing bboxes. I1109 12:15:33.632256 140466682771264 tfrecord_lib.py:168] On image 0 I1109 12:15:33.659518 140466682771264 tfrecord_lib.py:180] Finished writing, skipped 0 annotations. I1109 12:15:33.660955 140466682771264 create_coco_tf_record.py:537] Finished writing, skipped 0 annotations.

CLI command to convert data (test data).

TEST_DATA_DIR='./BCC.v1-bccd.coco/test'

TEST_ANNOTATION_FILE_DIR='./BCC.v1-bccd.coco/test/_annotations.coco.json'

OUTPUT_TFRECORD_TEST='./bccd_coco_tfrecords/test'

python -m official.vision.data.create_coco_tf_record --logtostderr \

--image_dir=$TEST_DATA_DIR \

--object_annotations_file=$TEST_ANNOTATION_FILE_DIR \

--output_file_prefix=$OUTPUT_TFRECORD_TEST \

--num_shards=1

2023-11-09 12:15:34.949263: E tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:9342] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered 2023-11-09 12:15:34.949311: E tensorflow/compiler/xla/stream_executor/cuda/cuda_fft.cc:609] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered 2023-11-09 12:15:34.949341: E tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:1518] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered 2023-11-09 12:15:38.592568: W tensorflow/core/common_runtime/gpu/gpu_device.cc:2211] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform. Skipping registering GPU devices... I1109 12:15:38.592885 140186454914880 create_coco_tf_record.py:502] writing to output path: ./bccd_coco_tfrecords/test I1109 12:15:38.596868 140186454914880 create_coco_tf_record.py:374] Building bounding box index. I1109 12:15:38.597061 140186454914880 create_coco_tf_record.py:385] 0 images are missing bboxes. I1109 12:15:38.788634 140186454914880 tfrecord_lib.py:168] On image 0 I1109 12:15:38.814095 140186454914880 tfrecord_lib.py:180] Finished writing, skipped 0 annotations. I1109 12:15:38.815249 140186454914880 create_coco_tf_record.py:537] Finished writing, skipped 0 annotations.

Configure the RetinaNet ResNet FPN COCO model for the custom dataset.

Dataset used for fine tuning the checkpoint is Blood Cells Detection (BCCD).

train_data_input_path = './bccd_coco_tfrecords/train-00000-of-00001.tfrecord'

valid_data_input_path = './bccd_coco_tfrecords/valid-00000-of-00001.tfrecord'

test_data_input_path = './bccd_coco_tfrecords/test-00000-of-00001.tfrecord'

model_dir = './trained_model/'

export_dir ='./exported_model/'
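The input paths above follow from the `--output_file_prefix` and `--num_shards` values passed to the converter. As a small sketch, the file names can be generated as shown below; the naming pattern is inferred from the paths used in this tutorial, and `shard_paths` is a hypothetical helper rather than a Model Garden function.

```python
def shard_paths(prefix, num_shards):
    """Lists the shard file names written for an output prefix."""
    return [
        f'{prefix}-{index:05d}-of-{num_shards:05d}.tfrecord'
        for index in range(num_shards)
    ]


print(shard_paths('./bccd_coco_tfrecords/train', 1))
```

With more than one shard, the list has one entry per shard, which helps when checking that every expected file was written.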

In Model Garden, the collections of parameters that define a model are called configs. Model Garden can create a config based on a known set of parameters via a factory.

Use the retinanet_resnetfpn_coco experiment configuration, as defined by tfm.vision.configs.retinanet.retinanet_resnetfpn_coco.

The configuration defines an experiment to train a RetinaNet with ResNet-50 as backbone and FPN as decoder. The default configuration is trained on COCO train2017 and evaluated on COCO val2017.

Other experiments are also available, such as retinanet_resnetfpn_coco, retinanet_spinenet_coco, fasterrcnn_resnetfpn_coco and more. One can switch to them by changing the experiment name argument to the get_exp_config function.

We are going to fine-tune the ResNet-50 backbone checkpoint which is already present in the default configuration.

exp_config = exp_factory.get_exp_config('retinanet_resnetfpn_coco')
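The idea of a config factory can be illustrated with a toy registry. The sketch below is not the Model Garden implementation: the classes `ToyModelConfig` and `ToyExperimentConfig`, the experiment name 'toy_detector', and all default values are invented for illustration. It only shows the pattern of registering a function under a name and getting a fresh, editable config back on each lookup.

```python
import dataclasses
from typing import Callable, Dict, List


@dataclasses.dataclass
class ToyModelConfig:
    # Placeholder defaults, not the values of any real experiment.
    num_classes: int = 2
    input_size: List[int] = dataclasses.field(
        default_factory=lambda: [128, 128, 3])


@dataclasses.dataclass
class ToyExperimentConfig:
    model: ToyModelConfig = dataclasses.field(default_factory=ToyModelConfig)
    train_steps: int = 100


_FACTORIES: Dict[str, Callable[[], ToyExperimentConfig]] = {}


def register_config_factory(name):
    """Decorator that stores a config-building function under `name`."""
    def decorator(factory):
        if name in _FACTORIES:
            raise ValueError(f'experiment {name!r} is already registered')
        _FACTORIES[name] = factory
        return factory
    return decorator


def get_exp_config(name):
    """Builds a fresh config for a registered experiment name."""
    if name not in _FACTORIES:
        raise KeyError(f'unknown experiment {name!r}')
    return _FACTORIES[name]()


@register_config_factory('toy_detector')
def toy_detector():
    return ToyExperimentConfig()


# Fetch the defaults, then override fields for a custom dataset.
config = get_exp_config('toy_detector')
config.model.num_classes = 3 + 1
config.model.input_size = [256, 256, 3]
print(dataclasses.asdict(config))
```

Because every lookup calls the factory again, overrides made on one config object do not leak into the next one.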

Adjust the model and dataset configurations so that they work with the custom dataset (in this case BCCD).

batch_size = 8

num_classes = 3

HEIGHT, WIDTH = 256, 256

IMG_SIZE = [HEIGHT, WIDTH, 3]

# Backbone config.

exp_config.task.freeze_backbone = False

exp_config.task.annotation_file = ''

# Model config.

exp_config.task.model.input_size = IMG_SIZE

exp_config.task.model.num_classes = num_classes + 1

exp_config.task.model.detection_generator.tflite_post_processing.max_classes_per_detection = exp_config.task.model.num_classes

# Training data config.

exp_config.task.train_data.input_path = train_data_input_path

exp_config.task.train_data.dtype = 'float32'

exp_config.task.train_data.global_batch_size = batch_size

exp_config.task.train_data.parser.aug_scale_max = 1.0

exp_config.task.train_data.parser.aug_scale_min = 1.0

# Validation data config.

exp_config.task.validation_data.input_path = valid_data_input_path

exp_config.task.validation_data.dtype = 'float32'

exp_config.task.validation_data.global_batch_size = batch_size

Adjust the trainer configuration.

logical_device_names = [logical_device.name for logical_device in tf.config.list_logical_devices()]

if 'GPU' in ''.join(logical_device_names):

    print('This may be broken in Colab.')

    device = 'GPU'

elif 'TPU' in ''.join(logical_device_names):

    print('This may be broken in Colab.')

    device = 'TPU'

else:

    print('Running on CPU is slow, so only train for a few steps.')

    device = 'CPU'

train_steps = 1000

exp_config.trainer.steps_per_loop = 100 # steps_per_loop = num_of_training_examples // train_batch_size

exp_config.trainer.summary_interval = 100

exp_config.trainer.checkpoint_interval = 100

exp_config.trainer.validation_interval = 100

exp_config.trainer.validation_steps = 100 # validation_steps = num_of_validation_examples // eval_batch_size

exp_config.trainer.train_steps = train_steps

exp_config.trainer.optimizer_config.warmup.linear.warmup_steps = 100

exp_config.trainer.optimizer_config.learning_rate.type = 'cosine'

exp_config.trainer.optimizer_config.learning_rate.cosine.decay_steps = train_steps

exp_config.trainer.optimizer_config.learning_rate.cosine.initial_learning_rate = 0.1

exp_config.trainer.optimizer_config.warmup.linear.warmup_learning_rate = 0.05

Running on CPU is slow, so only train for a few steps. 2023-11-09 12:15:40.194897: W tensorflow/core/common_runtime/gpu/gpu_device.cc:2211] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform. Skipping registering GPU devices...
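The trainer settings above combine a cosine decay with a linear warmup. The following is a minimal pure-Python sketch of that schedule, not the Model Garden implementation. It assumes that the warmup ramps linearly from warmup_learning_rate to the value the cosine schedule takes at warmup_steps; the function names `cosine_lr` and `lr_with_warmup` are made up for this example.

```python
import math


def cosine_lr(step, initial_lr=0.1, decay_steps=1000, alpha=0.0):
    """Cosine decay from `initial_lr` down to `alpha * initial_lr`."""
    step = min(step, decay_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
    return initial_lr * ((1.0 - alpha) * cosine + alpha)


def lr_with_warmup(step, warmup_steps=100, warmup_lr=0.05):
    """Linear warmup (assumed form) followed by the cosine schedule."""
    if step < warmup_steps:
        target = cosine_lr(warmup_steps)
        return warmup_lr + (target - warmup_lr) * step / warmup_steps
    return cosine_lr(step)


for step in (0, 50, 100, 200, 500, 1000):
    print(step, lr_with_warmup(step))
```

After the warmup, the values agree with the learning_rate entries in the training log further down (about 0.0976 at step 100 and about 0.0905 at step 200), which suggests the cosine part of the sketch matches the configured schedule.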

Print the modified configuration.

pp.pprint(exp_config.as_dict())

display.Javascript('google.colab.output.setIframeHeight("500px");')

{ 'runtime': { 'all_reduce_alg': None, 'batchnorm_spatial_persistent': False, 'dataset_num_private_threads': None, 'default_shard_dim': -1, 'distribution_strategy': 'mirrored', 'enable_xla': False, 'gpu_thread_mode': None, 'loss_scale': None, 'mixed_precision_dtype': 'bfloat16', 'num_cores_per_replica': 1, 'num_gpus': 0, 'num_packs': 1, 'per_gpu_thread_count': 0, 'run_eagerly': False, 'task_index': -1, 'tpu': None, 'tpu_enable_xla_dynamic_padder': None, 'use_tpu_mp_strategy': False, 'worker_hosts': None}, 'task': { 'allow_image_summary': False, 'annotation_file': '', 'differential_privacy_config': None, 'export_config': { 'cast_detection_classes_to_float': False, 'cast_num_detections_to_float': False, 'output_intermediate_features': False, 'output_normalized_coordinates': False}, 'freeze_backbone': False, 'init_checkpoint': 'gs://cloud-tpu-checkpoints/vision-2.0/resnet50_imagenet/ckpt-28080', 'init_checkpoint_modules': 'backbone', 'losses': { 'box_loss_weight': 50, 'focal_loss_alpha': 0.25, 'focal_loss_gamma': 1.5, 'huber_loss_delta': 0.1, 'l2_weight_decay': 0.0001, 'loss_weight': 1.0}, 'max_num_eval_detections': 100, 'model': { 'anchor': { 'anchor_size': 4.0, 'aspect_ratios': [0.5, 1.0, 2.0], 'num_scales': 3}, 'backbone': { 'resnet': { 'bn_trainable': True, 'depth_multiplier': 1.0, 'model_id': 50, 'replace_stem_max_pool': False, 'resnetd_shortcut': False, 'scale_stem': True, 'se_ratio': 0.0, 'stem_type': 'v0', 'stochastic_depth_drop_rate': 0.0}, 'type': 'resnet'}, 'decoder': { 'fpn': { 'fusion_type': 'sum', 'num_filters': 256, 'use_keras_layer': False, 'use_separable_conv': False}, 'type': 'fpn'}, 'detection_generator': { 'apply_nms': True, 'box_coder_weights': None, 'max_num_detections': 100, 'nms_iou_threshold': 0.5, 'nms_version': 'v2', 'pre_nms_score_threshold': 0.05, 'pre_nms_top_k': 5000, 'return_decoded': None, 'soft_nms_sigma': None, 'tflite_post_processing': { 'max_classes_per_detection': 4, 'max_detections': 200, 'nms_iou_threshold': 0.5, 
'nms_score_threshold': 0.1, 'normalize_anchor_coordinates': False, 'omit_nms': False, 'use_regular_nms': False}, 'use_class_agnostic_nms': False, 'use_cpu_nms': False}, 'head': { 'attribute_heads': [], 'num_convs': 4, 'num_filters': 256, 'share_classification_heads': False, 'share_level_convs': True, 'use_separable_conv': False}, 'input_size': [256, 256, 3], 'max_level': 7, 'min_level': 3, 'norm_activation': { 'activation': 'relu', 'norm_epsilon': 0.001, 'norm_momentum': 0.99, 'use_sync_bn': False}, 'num_classes': 4}, 'name': None, 'per_category_metrics': False, 'train_data': { 'apply_tf_data_service_before_batching': False, 'autotune_algorithm': None, 'block_length': 1, 'cache': False, 'cycle_length': None, 'decoder': { 'simple_decoder': { 'attribute_names': [ ], 'mask_binarize_threshold': None, 'regenerate_source_id': False}, 'type': 'simple_decoder'}, 'deterministic': None, 'drop_remainder': True, 'dtype': 'float32', 'enable_shared_tf_data_service_between_parallel_trainers': False, 'enable_tf_data_service': False, 'file_type': 'tfrecord', 'global_batch_size': 8, 'input_path': './bccd_coco_tfrecords/train-00000-of-00001.tfrecord', 'is_training': True, 'parser': { 'aug_policy': None, 'aug_rand_hflip': True, 'aug_scale_max': 1.0, 'aug_scale_min': 1.0, 'aug_type': None, 'match_threshold': 0.5, 'max_num_instances': 100, 'num_channels': 3, 'skip_crowd_during_training': True, 'unmatched_threshold': 0.5}, 'prefetch_buffer_size': None, 'seed': None, 'sharding': True, 'shuffle_buffer_size': 10000, 'tf_data_service_address': None, 'tf_data_service_job_name': None, 'tfds_as_supervised': False, 'tfds_data_dir': '', 'tfds_name': '', 'tfds_skip_decoding_feature': '', 'tfds_split': '', 'trainer_id': None, 'weights': None}, 'use_coco_metrics': True, 'use_wod_metrics': False, 'validation_data': { 'apply_tf_data_service_before_batching': False, 'autotune_algorithm': None, 'block_length': 1, 'cache': False, 'cycle_length': None, 'decoder': { 'simple_decoder': { 'attribute_names': [ 
], 'mask_binarize_threshold': None, 'regenerate_source_id': False}, 'type': 'simple_decoder'}, 'deterministic': None, 'drop_remainder': True, 'dtype': 'float32', 'enable_shared_tf_data_service_between_parallel_trainers': False, 'enable_tf_data_service': False, 'file_type': 'tfrecord', 'global_batch_size': 8, 'input_path': './bccd_coco_tfrecords/valid-00000-of-00001.tfrecord', 'is_training': False, 'parser': { 'aug_policy': None, 'aug_rand_hflip': False, 'aug_scale_max': 1.0, 'aug_scale_min': 1.0, 'aug_type': None, 'match_threshold': 0.5, 'max_num_instances': 100, 'num_channels': 3, 'skip_crowd_during_training': True, 'unmatched_threshold': 0.5}, 'prefetch_buffer_size': None, 'seed': None, 'sharding': True, 'shuffle_buffer_size': 10000, 'tf_data_service_address': None, 'tf_data_service_job_name': None, 'tfds_as_supervised': False, 'tfds_data_dir': '', 'tfds_name': '', 'tfds_skip_decoding_feature': '', 'tfds_split': '', 'trainer_id': None, 'weights': None} }, 'trainer': { 'allow_tpu_summary': False, 'best_checkpoint_eval_metric': '', 'best_checkpoint_export_subdir': '', 'best_checkpoint_metric_comp': 'higher', 'checkpoint_interval': 100, 'continuous_eval_timeout': 3600, 'eval_tf_function': True, 'eval_tf_while_loop': False, 'loss_upper_bound': 1000000.0, 'max_to_keep': 5, 'optimizer_config': { 'ema': None, 'learning_rate': { 'cosine': { 'alpha': 0.0, 'decay_steps': 1000, 'initial_learning_rate': 0.1, 'name': 'CosineDecay', 'offset': 0}, 'type': 'cosine'}, 'optimizer': { 'sgd': { 'clipnorm': None, 'clipvalue': None, 'decay': 0.0, 'global_clipnorm': None, 'momentum': 0.9, 'name': 'SGD', 'nesterov': False}, 'type': 'sgd'}, 'warmup': { 'linear': { 'name': 'linear', 'warmup_learning_rate': 0.05, 'warmup_steps': 100}, 'type': 'linear'} }, 'preemption_on_demand_checkpoint': True, 'recovery_begin_steps': 0, 'recovery_max_trials': 0, 'steps_per_loop': 100, 'summary_interval': 100, 'train_steps': 1000, 'train_tf_function': True, 'train_tf_while_loop': True, 
'validation_interval': 100, 'validation_steps': 100, 'validation_summary_subdir': 'validation'} } <IPython.core.display.Javascript object>

Set up the distribution strategy.

if exp_config.runtime.mixed_precision_dtype == tf.float16:

    tf.keras.mixed_precision.set_global_policy('mixed_float16')

if 'GPU' in ''.join(logical_device_names):

    distribution_strategy = tf.distribute.MirroredStrategy()

elif 'TPU' in ''.join(logical_device_names):

    tf.tpu.experimental.initialize_tpu_system()

    tpu = tf.distribute.cluster_resolver.TPUClusterResolver(tpu='/device:TPU_SYSTEM:0')

    distribution_strategy = tf.distribute.experimental.TPUStrategy(tpu)

else:

    print('Warning: this will be really slow.')

    distribution_strategy = tf.distribute.OneDeviceStrategy(logical_device_names[0])

print('Done')

Warning: this will be really slow. Done

Create the Task object (tfm.core.base_task.Task) from the config_definitions.TaskConfig.

The Task object has all the methods necessary for building the dataset, building the model, and running training & evaluation. These methods are driven by tfm.core.train_lib.run_experiment.

with distribution_strategy.scope():

    task = tfm.core.task_factory.get_task(exp_config.task, logging_dir=model_dir)

Visualize a batch of the data.

for images, labels in task.build_inputs(exp_config.task.train_data).take(1):

    print()

    print(f'images.shape: {str(images.shape):16} images.dtype: {images.dtype!r}')

    print(f'labels.keys: {labels.keys()}')

images.shape: (8, 256, 256, 3) images.dtype: tf.float32 labels.keys: dict_keys(['cls_targets', 'box_targets', 'anchor_boxes', 'cls_weights', 'box_weights', 'image_info'])

Create a category index dictionary to map the labels to the corresponding label names.

category_index = {

    1: {

        'id': 1,

        'name': 'Platelets'

    },

    2: {

        'id': 2,

        'name': 'RBC'

    },

    3: {

        'id': 3,

        'name': 'WBC'

    }

}

tf_ex_decoder = TfExampleDecoder()
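Instead of writing the category index by hand, it could also be derived from the "categories" list of a COCO annotation file. The sketch below uses a hypothetical helper, `build_category_index`, and made-up sample data. It assumes that id 0 is reserved for the background, which is what the `num_classes + 1` setting earlier suggests; adjust `background_id` if a dataset numbers its classes differently.

```python
def build_category_index(coco, background_id=0):
    """Maps category id to {'id', 'name'}, leaving out the background id."""
    return {
        category['id']: {'id': category['id'], 'name': category['name']}
        for category in coco['categories']
        if category['id'] != background_id
    }


# Made-up sample in the layout of a COCO "categories" list.
sample_coco = {
    'categories': [
        {'id': 0, 'name': 'cells'},
        {'id': 1, 'name': 'Platelets'},
        {'id': 2, 'name': 'RBC'},
        {'id': 3, 'name': 'WBC'},
    ]
}
print(build_category_index(sample_coco))
```

Deriving the index from the annotation file keeps label names in sync with the ids stored in the TFRecords.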

Helper function for visualizing the results from TFRecords.

Use visualize_boxes_and_labels_on_image_array from visualization_utils to draw bounding boxes on the image.

def show_batch(raw_records, num_of_examples):

    plt.figure(figsize=(20, 20))

    use_normalized_coordinates = True

    min_score_thresh = 0.30

    for i, serialized_example in enumerate(raw_records):

        # One subplot per example, laid out in a single row.

        plt.subplot(1, num_of_examples, i + 1)

        decoded_tensors = tf_ex_decoder.decode(serialized_example)

        image = decoded_tensors['image'].numpy().astype('uint8')

        # Ground-truth boxes get a score of 1 so that all of them are drawn.

        scores = np.ones(shape=(len(decoded_tensors['groundtruth_boxes'])))

        visualization_utils.visualize_boxes_and_labels_on_image_array(

            image,

            decoded_tensors['groundtruth_boxes'].numpy(),

            decoded_tensors['groundtruth_classes'].numpy().astype('int'),

            scores,

            category_index=category_index,

            use_normalized_coordinates=use_normalized_coordinates,

            max_boxes_to_draw=200,

            min_score_thresh=min_score_thresh,

            agnostic_mode=False,

            instance_masks=None,

            line_thickness=4)

        plt.imshow(image)

        plt.axis('off')

        plt.title(f'Image-{i+1}')

    plt.show()

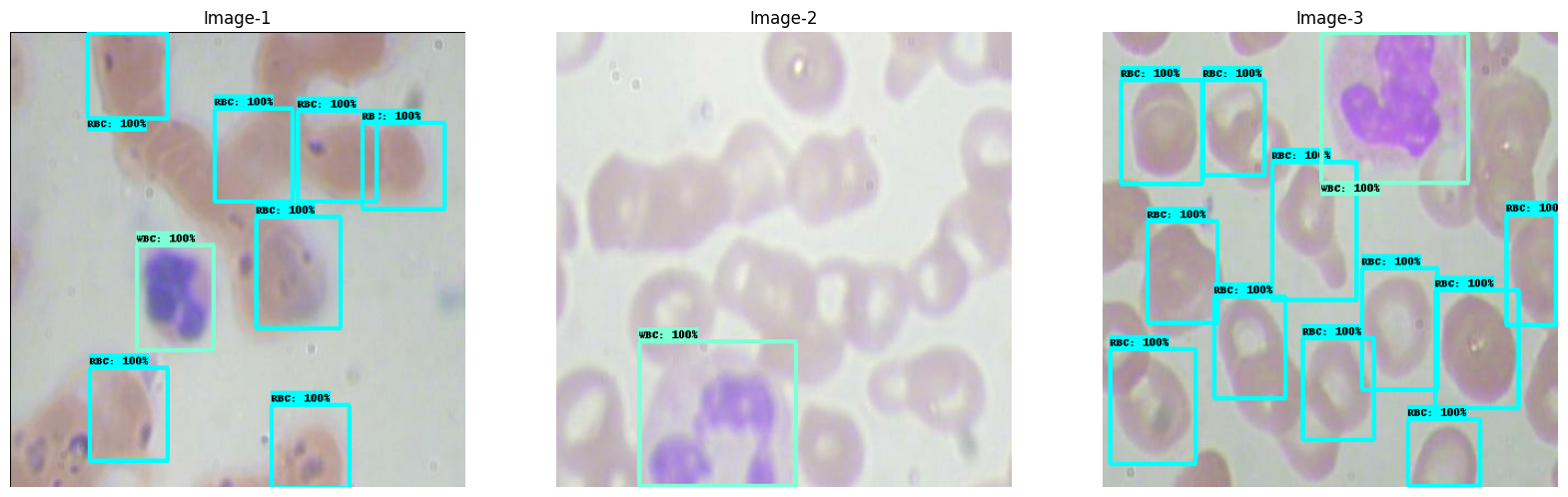

Visualization of train data

The bounding box detection has two components:

- Class label of the object detected (e.g. RBC).

- Percentage of match between predicted and ground truth bounding boxes.

buffer_size = 20

num_of_examples = 3

raw_records = tf.data.TFRecordDataset(

exp_config.task.train_data.input_path).shuffle(

buffer_size=buffer_size).take(num_of_examples)

show_batch(raw_records, num_of_examples)

Train and evaluate.

We follow the COCO challenge tradition and evaluate the accuracy of object detection based on mAP (mean Average Precision). Please check here for a detailed explanation of how the evaluation metrics for the detection task are computed.

IoU is defined as the area of the intersection divided by the area of the union of a predicted bounding box and a ground truth bounding box.
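The IoU definition can be written out in a few lines. This is a minimal pure-Python sketch, assuming boxes are given as [ymin, xmin, ymax, xmax]; the function name `iou` is chosen for this example and is not a Model Garden API. The list of thresholds reproduces the "IoU=0.50:0.95" range shown in the COCO evaluation output, which steps from 0.50 to 0.95 in increments of 0.05.

```python
def iou(box_a, box_b):
    """Intersection over union of two [ymin, xmin, ymax, xmax] boxes."""
    inter_h = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_w = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_h * inter_w
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# The ten IoU thresholds averaged over by the primary COCO AP metric.
coco_iou_thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]

prediction = [0.0, 0.0, 2.0, 2.0]
ground_truth = [1.0, 1.0, 3.0, 3.0]
overlap = iou(prediction, ground_truth)  # 1 / 7: intersection 1, union 7
print(overlap, [t for t in coco_iou_thresholds if overlap >= t])
```

A prediction counts as a match at a given threshold only when its IoU with a ground truth box reaches that threshold, so AP at IoU=0.75 is a stricter measure than AP at IoU=0.50.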

model, eval_logs = tfm.core.train_lib.run_experiment(

distribution_strategy=distribution_strategy,

task=task,

mode='train_and_eval',

params=exp_config,

model_dir=model_dir,

run_post_eval=True)

restoring or initializing model...

INFO:tensorflow:Customized initialization is done through the passed init_fn.

INFO:tensorflow:Customized initialization is done through the passed init_fn.

train | step: 0 | training until step 100...

2023-11-09 12:15:53.688817: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

train | step: 100 | steps/sec: 0.7 | output:

{'box_loss': 0.02193972,

'cls_loss': 0.6757131,

'learning_rate': 0.09755283,

'model_loss': 1.7726997,

'total_loss': 2.8906868,

'training_loss': 2.8906868}

saved checkpoint to ./trained_model/ckpt-100.

eval | step: 100 | running 100 steps of evaluation...

2023-11-09 12:18:11.406759: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=0.42s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.000

eval | step: 100 | steps/sec: 10.1 | eval time: 9.9 sec | output:

{'AP': 0.0,

'AP50': 0.0,

'AP75': 0.0,

'APl': 0.0,

'APm': 0.0,

'APs': 0.0,

'ARl': 0.0,

'ARm': 0.0,

'ARmax1': 0.0,

'ARmax10': 0.0,

'ARmax100': 0.0,

'ARs': 0.0,

'box_loss': 18.058144,

'cls_loss': 11249.595,

'model_loss': 12152.503,

'steps_per_second': 10.0835173990815,

'total_loss': 12153.81,

'validation_loss': 12153.81}
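As a sanity check on the logged numbers, the values at step 100 are consistent with model_loss = cls_loss + box_loss_weight * box_loss, using the box_loss_weight of 50 from the printed configuration. The sketch below only re-does that arithmetic on values copied from the log. Reading the gap between total_loss and model_loss as a weight regularization term is an assumption, not something the log states.

```python
import math

BOX_LOSS_WEIGHT = 50  # 'box_loss_weight' in the printed configuration


def model_loss(cls_loss, box_loss, box_loss_weight=BOX_LOSS_WEIGHT):
    """Combines classification and box regression losses."""
    return cls_loss + box_loss_weight * box_loss


# Values copied from the step-100 train and eval outputs above.
train_100 = {'box_loss': 0.02193972, 'cls_loss': 0.6757131,
             'model_loss': 1.7726997, 'total_loss': 2.8906868}
eval_100 = {'box_loss': 18.058144, 'cls_loss': 11249.595,
            'model_loss': 12152.503, 'total_loss': 12153.81}

for name, logs in (('train', train_100), ('eval', eval_100)):
    recomputed = model_loss(logs['cls_loss'], logs['box_loss'])
    matches = math.isclose(recomputed, logs['model_loss'], rel_tol=1e-5)
    # Assumed to be the regularization term; the log does not label it.
    remainder = logs['total_loss'] - logs['model_loss']
    print(name, recomputed, matches, remainder)
```

The very large validation loss at this step comes almost entirely from cls_loss, which drops sharply in the evaluations that follow.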

train | step: 100 | training until step 200...

train | step: 200 | steps/sec: 0.7 | output:

{'box_loss': 0.008522138,

'cls_loss': 0.50075334,

'learning_rate': 0.090450846,

'model_loss': 0.92686033,

'total_loss': 2.2234242,

'training_loss': 2.2234242}

saved checkpoint to ./trained_model/ckpt-200.

eval | step: 200 | running 100 steps of evaluation...

2023-11-09 12:20:26.584188: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=0.61s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.011

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.034

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.003

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.010

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.059

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.004

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.029

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.157

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.049

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.177

eval | step: 200 | steps/sec: 13.2 | eval time: 7.6 sec | output:

{'AP': 0.010944664,

'AP50': 0.03427069,

'AP75': 0.0026895092,

'APl': 0.05921977,

'APm': 0.01015669,

'APs': 0.0,

'ARl': 0.17723133,

'ARm': 0.048520368,

'ARmax1': 0.0035891088,

'ARmax10': 0.029232906,

'ARmax100': 0.15689641,

'ARs': 0.0,

'box_loss': 0.009609308,

'cls_loss': 4.135805,

'model_loss': 4.61627,

'steps_per_second': 13.19492688372793,

'total_loss': 5.900796,

'validation_loss': 5.900796}

train | step: 200 | training until step 300...

train | step: 300 | steps/sec: 0.8 | output:

{'box_loss': 0.00830541,

'cls_loss': 0.4176439,

'learning_rate': 0.07938927,

'model_loss': 0.8329147,

'total_loss': 2.1065934,

'training_loss': 2.1065934}

saved checkpoint to ./trained_model/ckpt-300.

eval | step: 300 | running 100 steps of evaluation...

2023-11-09 12:22:38.377585: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=1.35s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.016

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.069

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.002

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.013

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.009

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.032

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.063

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.141

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.100

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.124

eval | step: 300 | steps/sec: 12.5 | eval time: 8.0 sec | output:

{'AP': 0.016204117,

'AP50': 0.06889212,

'AP75': 0.0020602986,

'APl': 0.009428242,

'APm': 0.013351731,

'APs': 0.0,

'ARl': 0.123588346,

'ARm': 0.10017456,

'ARmax1': 0.03215091,

'ARmax10': 0.063206546,

'ARmax100': 0.14125936,

'ARs': 0.0,

'box_loss': 0.00870863,

'cls_loss': 0.5394852,

'model_loss': 0.97491676,

'steps_per_second': 12.503431188892941,

'total_loss': 2.2379956,

'validation_loss': 2.2379956}

train | step: 300 | training until step 400...

train | step: 400 | steps/sec: 0.8 | output:

{'box_loss': 0.008178472,

'cls_loss': 0.39109412,

'learning_rate': 0.06545085,

'model_loss': 0.8000177,

'total_loss': 2.05375,

'training_loss': 2.05375}

saved checkpoint to ./trained_model/ckpt-400.

eval | step: 400 | running 100 steps of evaluation...

2023-11-09 12:24:51.305428: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=1.32s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.170

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.417

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.080

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.126

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.164

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.139

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.211

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.289

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.223

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.265

eval | step: 400 | steps/sec: 12.5 | eval time: 8.0 sec | output:

{'AP': 0.16998358,

'AP50': 0.41680792,

'AP75': 0.07954546,

'APl': 0.16424511,

'APm': 0.12631872,

'APs': 0.0,

'ARl': 0.26493624,

'ARm': 0.22271821,

'ARmax1': 0.13854705,

'ARmax10': 0.2114396,

'ARmax100': 0.2887498,

'ARs': 0.0,

'box_loss': 0.007682202,

'cls_loss': 0.44814157,

'model_loss': 0.8322517,

'steps_per_second': 12.476437706321125,

'total_loss': 2.0770469,

'validation_loss': 2.0770469}

train | step: 400 | training until step 500...

train | step: 500 | steps/sec: 0.8 | output:

{'box_loss': 0.008003844,

'cls_loss': 0.37224656,

'learning_rate': 0.049999997,

'model_loss': 0.77243865,

'total_loss': 2.0097175,

'training_loss': 2.0097175}

saved checkpoint to ./trained_model/ckpt-500.

eval | step: 500 | running 100 steps of evaluation...

2023-11-09 12:27:03.978075: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=1.31s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.144

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.409

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.018

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.068

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.146

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.141

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.177

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.249

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.163

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.228

eval | step: 500 | steps/sec: 12.6 | eval time: 8.0 sec | output:

{'AP': 0.14389119,

'AP50': 0.40903544,

'AP75': 0.01820877,

'APl': 0.14554419,

'APm': 0.06774697,

'APs': 0.0,

'ARl': 0.22750455,

'ARm': 0.16317539,

'ARmax1': 0.14100254,

'ARmax10': 0.17656359,

'ARmax100': 0.24859329,

'ARs': 0.0,

'box_loss': 0.008371367,

'cls_loss': 0.46203443,

'model_loss': 0.8806029,

'steps_per_second': 12.550521613819056,

'total_loss': 2.110864,

'validation_loss': 2.110864}

train | step: 500 | training until step 600...

train | step: 600 | steps/sec: 0.8 | output:

{'box_loss': 0.0075427825,

'cls_loss': 0.36228406,

'learning_rate': 0.034549143,

'model_loss': 0.739423,

'total_loss': 1.9641409,

'training_loss': 1.9641409}

saved checkpoint to ./trained_model/ckpt-600.

eval | step: 600 | running 100 steps of evaluation...

2023-11-09 12:29:16.661897: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=1.26s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.205

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.494

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.074

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.165

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.210

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.178

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.249

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.304

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.288

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.281

eval | step: 600 | steps/sec: 12.3 | eval time: 8.1 sec | output:

{'AP': 0.20549679,

'AP50': 0.49406928,

'AP75': 0.074281454,

'APl': 0.20956378,

'APm': 0.16475147,

'APs': 0.0,

'ARl': 0.2809654,

'ARm': 0.28829592,

'ARmax1': 0.17803712,

'ARmax10': 0.24863629,

'ARmax100': 0.3042056,

'ARs': 0.0,

'box_loss': 0.007942874,

'cls_loss': 0.3965486,

'model_loss': 0.79369235,

'steps_per_second': 12.345320504588557,

'total_loss': 2.013407,

'validation_loss': 2.013407}

train | step: 600 | training until step 700...

train | step: 700 | steps/sec: 0.8 | output:

{'box_loss': 0.007070377,

'cls_loss': 0.34681392,

'learning_rate': 0.02061074,

'model_loss': 0.7003328,

'total_loss': 1.91634,

'training_loss': 1.91634}

saved checkpoint to ./trained_model/ckpt-700.

eval | step: 700 | running 100 steps of evaluation...

2023-11-09 12:31:29.396952: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=1.25s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.266

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.548

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.195

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.211

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.320

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.199

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.293

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.354

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.314

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.339

eval | step: 700 | steps/sec: 12.4 | eval time: 8.0 sec | output:

{'AP': 0.26574683,

'AP50': 0.54845077,

'AP75': 0.19461285,

'APl': 0.3196148,

'APm': 0.21140255,

'APs': 0.0,

'ARl': 0.33888888,

'ARm': 0.31413966,

'ARmax1': 0.19884779,

'ARmax10': 0.29322097,

'ARmax100': 0.35407078,

'ARs': 0.0,

'box_loss': 0.006610607,

'cls_loss': 0.35634473,

'model_loss': 0.68687505,

'steps_per_second': 12.437609994369083,

'total_loss': 1.8996844,

'validation_loss': 1.8996844}

train | step: 700 | training until step 800...

train | step: 800 | steps/sec: 0.8 | output:

{'box_loss': 0.006680321,

'cls_loss': 0.3391388,

'learning_rate': 0.009549147,

'model_loss': 0.673155,

'total_loss': 1.8838484,

'training_loss': 1.8838484}

saved checkpoint to ./trained_model/ckpt-800.

eval | step: 800 | running 100 steps of evaluation...

2023-11-09 12:33:41.871412: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=1.21s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.270

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.551

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.228

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.238

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.293

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.203

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.292

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.355

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.330

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.310

eval | step: 800 | steps/sec: 12.2 | eval time: 8.2 sec | output:

{'AP': 0.27034393,

'AP50': 0.55116975,

'AP75': 0.22773828,

'APl': 0.29330468,

'APm': 0.23779006,

'APs': 0.0,

'ARl': 0.30956283,

'ARm': 0.33016625,

'ARmax1': 0.2025409,

'ARmax10': 0.29244527,

'ARmax100': 0.35519278,

'ARs': 0.0,

'box_loss': 0.006337466,

'cls_loss': 0.3529123,

'model_loss': 0.6697856,

'steps_per_second': 12.213763576089187,

'total_loss': 1.8787861,

'validation_loss': 1.8787861}

train | step: 800 | training until step 900...

train | step: 900 | steps/sec: 0.8 | output:

{'box_loss': 0.006552434,

'cls_loss': 0.3347254,

'learning_rate': 0.002447176,

'model_loss': 0.6623471,

'total_loss': 1.8704419,

'training_loss': 1.8704419}

saved checkpoint to ./trained_model/ckpt-900.

eval | step: 900 | running 100 steps of evaluation...

2023-11-09 12:35:54.334538: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=1.22s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.265

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.563

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.146

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.261

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.306

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.197

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.290

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.348

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.344

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.326

eval | step: 900 | steps/sec: 12.2 | eval time: 8.2 sec | output:

{'AP': 0.26503274,

'AP50': 0.5632806,

'AP75': 0.1462794,

'APl': 0.30633608,

'APm': 0.26065546,

'APs': 0.0,

'ARl': 0.3263206,

'ARm': 0.34426433,

'ARmax1': 0.19695416,

'ARmax10': 0.28974226,

'ARmax100': 0.3476218,

'ARs': 0.0,

'box_loss': 0.006341949,

'cls_loss': 0.3344063,

'model_loss': 0.6515038,

'steps_per_second': 12.173977857621107,

'total_loss': 1.8589785,

'validation_loss': 1.8589785}

train | step: 900 | training until step 1000...

train | step: 1000 | steps/sec: 0.8 | output:

{'box_loss': 0.0064173574,

'cls_loss': 0.33288643,

'learning_rate': 0.0,

'model_loss': 0.6537543,

'total_loss': 1.8610404,

'training_loss': 1.8610404}

saved checkpoint to ./trained_model/ckpt-1000.

eval | step: 1000 | running 100 steps of evaluation...

2023-11-09 12:38:06.900922: W tensorflow/core/framework/dataset.cc:959] Input of GeneratorDatasetOp::Dataset will not be optimized because the dataset does not implement the AsGraphDefInternal() method needed to apply optimizations.

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=1.22s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.258

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.554

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.137

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.247

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.298

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.193

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.287

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.344

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.329

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.324

eval | step: 1000 | steps/sec: 11.6 | eval time: 8.6 sec | output:

{'AP': 0.25785798,

'AP50': 0.5541018,

'AP75': 0.13742597,

'APl': 0.29831147,

'APm': 0.24709457,

'APs': 0.0,

'ARl': 0.32358834,

'ARm': 0.3290108,

'ARmax1': 0.19289383,

'ARmax10': 0.28744015,

'ARmax100': 0.3443296,

'ARs': 0.0,

'box_loss': 0.006123655,

'cls_loss': 0.3268734,

'model_loss': 0.6330561,

'steps_per_second': 11.647036187140033,

'total_loss': 1.8402674,

'validation_loss': 1.8402674}

eval | step: 1000 | running 100 steps of evaluation...

creating index...

index created!

creating index...

index created!

Running per image evaluation...

Evaluate annotation type bbox

DONE (t=1.21s).

Accumulating evaluation results...

DONE (t=0.06s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.258

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.554

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.137

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.247

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.298

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.193

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.287

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.344

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.329

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.324

eval | step: 1000 | steps/sec: 12.1 | eval time: 8.2 sec | output:

{'AP': 0.25785798,

'AP50': 0.5541018,

'AP75': 0.13742597,

'APl': 0.29831147,

'APm': 0.24709457,

'APs': 0.0,

'ARl': 0.32358834,

'ARm': 0.3290108,

'ARmax1': 0.19289383,

'ARmax10': 0.28744015,

'ARmax100': 0.3443296,

'ARs': 0.0,

'box_loss': 0.006123655,

'cls_loss': 0.3268734,

'model_loss': 0.6330561,

'steps_per_second': 12.140613310097448,

'total_loss': 1.8402674,

'validation_loss': 1.8402674}
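The evaluation dictionaries logged above can be condensed into a short comparison across checkpoints. The sketch below is a minimal illustration and not part of the original tutorial: it copies the 'AP' and 'AP50' values (rounded to three decimals) for steps 300 to 1000 into a hypothetical dictionary named logged_metrics and reports the step with the highest value of each metric.

```python
# AP and AP50 values copied (rounded) from the evaluation logs above.
# The names `logged_metrics` and `best_step_by` are illustrative only.
logged_metrics = {
    300: {'AP': 0.016, 'AP50': 0.069},
    400: {'AP': 0.170, 'AP50': 0.417},
    500: {'AP': 0.144, 'AP50': 0.409},
    600: {'AP': 0.205, 'AP50': 0.494},
    700: {'AP': 0.266, 'AP50': 0.548},
    800: {'AP': 0.270, 'AP50': 0.551},
    900: {'AP': 0.265, 'AP50': 0.563},
    1000: {'AP': 0.258, 'AP50': 0.554},
}


def best_step_by(metrics, key):
  """Returns the step whose logged value for `key` is highest."""
  return max(metrics, key=lambda step: metrics[step][key])


best_ap_step = best_step_by(logged_metrics, 'AP')
best_ap50_step = best_step_by(logged_metrics, 'AP50')
print('best AP at step', best_ap_step)
print('best AP50 at step', best_ap50_step)
```

In this run AP peaks at step 800 and AP50 at step 900, while the final checkpoint at step 1000 is slightly lower on both, so the last checkpoint is not necessarily the best one. Differences of this size may well be within run-to-run noise.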

Load the logs in TensorBoard.

%load_ext tensorboard

%tensorboard --logdir './trained_model/'
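One logged training output worth checking is learning_rate. The values printed during training (0.06545085 at step 400, 0.049999997 at step 500, down to 0.0 at step 1000) are consistent with a cosine decay from 0.1 over 1000 steps. Both numbers are inferred from the log values rather than read from the training configuration, which is outside this section, so treat the sketch below as a sanity check under that assumption. Any warmup phase in the early steps is ignored.

```python
import math

# Assumed schedule parameters, inferred from the logged learning rates.
INITIAL_LEARNING_RATE = 0.1
DECAY_STEPS = 1000


def cosine_decay(step):
  """Cosine decay from INITIAL_LEARNING_RATE to 0 over DECAY_STEPS."""
  progress = min(step, DECAY_STEPS) / DECAY_STEPS
  return INITIAL_LEARNING_RATE * 0.5 * (1.0 + math.cos(math.pi * progress))


# Learning rates copied from the training logs above.
logged = {400: 0.06545085, 500: 0.049999997, 600: 0.034549143,
          700: 0.02061074, 800: 0.009549147, 900: 0.002447176, 1000: 0.0}

for step, logged_lr in logged.items():
  print(step, round(cosine_decay(step), 6), logged_lr)
```

If the two printed columns disagree for a different run, the schedule or its parameters likely differ from the ones assumed here.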

Saving and exporting the trained model.

The keras.Model object returned by train_lib.run_experiment expects the data to be normalized by the dataset loader using the same mean and standard deviation statistics as in preprocess_ops.normalize_image(image, offset=MEAN_RGB, scale=STDDEV_RGB). The export function handles those details, so you can pass tf.uint8 images and get correct results.

export_saved_model_lib.export_inference_graph(

input_type='image_tensor',

batch_size=1,

input_image_size=[HEIGHT, WIDTH],

params=exp_config,

checkpoint_path=tf.train.latest_checkpoint(model_dir),

export_dir=export_dir)

WARNING:tensorflow:Skipping full serialization of Keras layer <official.vision.modeling.retinanet_model.RetinaNetModel object at 0x7f29ec799a90>, because it is not built.

WARNING:tensorflow:Skipping full serialization of Keras layer <official.vision.modeling.layers.detection_generator.MultilevelDetectionGenerator object at 0x7f2a30060940>, because it is not built.

INFO:tensorflow:Assets written to: ./exported_model/assets
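The normalization that the exported model takes care of can be sketched per pixel in plain Python. This is only an illustration of "subtract the mean, divide by the standard deviation" and not the library implementation; the constants below are the commonly used ImageNet statistics on the 0-255 scale and are an assumption here, so check preprocess_ops for the values your installed version uses. The function name normalize_pixel is hypothetical.

```python
# Assumed per-channel statistics on the 0-255 scale (common ImageNet
# values); verify against preprocess_ops in your installed version.
MEAN_RGB = (0.485 * 255, 0.456 * 255, 0.406 * 255)
STDDEV_RGB = (0.229 * 255, 0.224 * 255, 0.225 * 255)


def normalize_pixel(rgb, offset=MEAN_RGB, scale=STDDEV_RGB):
  """Subtracts the per-channel mean and divides by the per-channel stddev."""
  return tuple((value - mean) / std
               for value, mean, std in zip(rgb, offset, scale))


# A pixel equal to the channel means maps to zero in every channel.
print(normalize_pixel(MEAN_RGB))
# A pure white uint8 pixel maps to a positive value in every channel.
print(normalize_pixel((255, 255, 255)))
```

Feeding already normalized images to the exported model would apply this step twice, which is why the serving signature is meant to receive raw tf.uint8 images.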

Inference from trained model

def load_image_into_numpy_array(path):
  """Load an image from file into a numpy array.

  Puts image into numpy array to feed into tensorflow graph.
  Note that by convention we put it into a numpy array with shape
  (batch, height, width, channels), where batch=1 and channels=3 for RGB.

  Args:
    path: the file path or http(s) URL of the image

  Returns:
    uint8 numpy array with shape (1, img_height, img_width, 3)
  """
  if path.startswith('http'):
    # Remote image: download the raw bytes first.
    image_data = urlopen(path).read()
  else:
    # Local path: read the bytes and close the file handle afterwards.
    with tf.io.gfile.GFile(path, 'rb') as f:
      image_data = f.read()
  # Force 3 channels so the reshape below also works for grayscale or RGBA files.
  image = Image.open(BytesIO(image_data)).convert('RGB')
  (im_width, im_height) = image.size
  return np.array(image.getdata()).reshape(
      (1, im_height, im_width, 3)).astype(np.uint8)

def build_inputs_for_object_detection(image, input_image_size):
  """Builds Object Detection model inputs for serving."""
  image, _ = resize_and_crop_image(
      image,
      input_image_size,
      padded_size=input_image_size,
      aug_scale_min=1.0,
      aug_scale_max=1.0)
  return image
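With aug_scale_min and aug_scale_max both set to 1.0, no random scale jitter is applied, so the helper above should reduce to an aspect-preserving resize followed by padding up to padded_size. The sketch below reproduces only that geometry in plain Python, as an approximation of what resize_and_crop_image does in this configuration. The function name and the 480x640 and 256x256 sizes are hypothetical example values and are not taken from the tutorial.

```python
def resized_and_padded_shape(image_hw, desired_hw):
  """Returns ((scaled_h, scaled_w), (pad_bottom, pad_right)).

  The image is scaled by a single factor so that it fits inside
  `desired_hw`, then padded at the bottom and right to fill it.
  """
  height, width = image_hw
  desired_h, desired_w = desired_hw
  # One scale for both axes keeps the aspect ratio unchanged.
  scale = min(desired_h / height, desired_w / width)
  scaled_h, scaled_w = round(height * scale), round(width * scale)
  return (scaled_h, scaled_w), (desired_h - scaled_h, desired_w - scaled_w)


# Hypothetical sizes: a 480x640 (height x width) image into a 256x256 input.
scaled, padding = resized_and_padded_shape((480, 640), (256, 256))
print(scaled, padding)
```

For these example sizes the image is scaled to 192x256 and padded with 64 rows at the bottom, so the tensor passed to the model always has the fixed input size.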

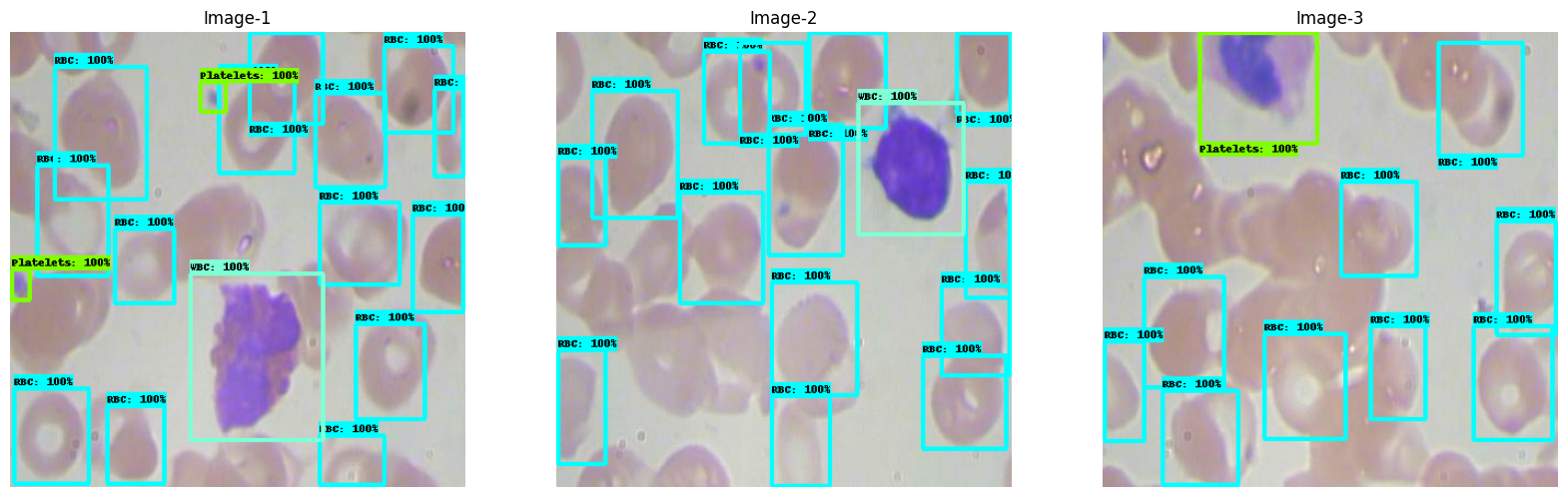

Visualize test data.

num_of_examples = 3

test_ds = tf.data.TFRecordDataset(
    './bccd_coco_tfrecords/test-00000-of-00001.tfrecord').take(
        num_of_examples)
show_batch(test_ds, num_of_examples)

Importing SavedModel.

imported = tf.saved_model.load(export_dir)

model_fn = imported.signatures['serving_default']

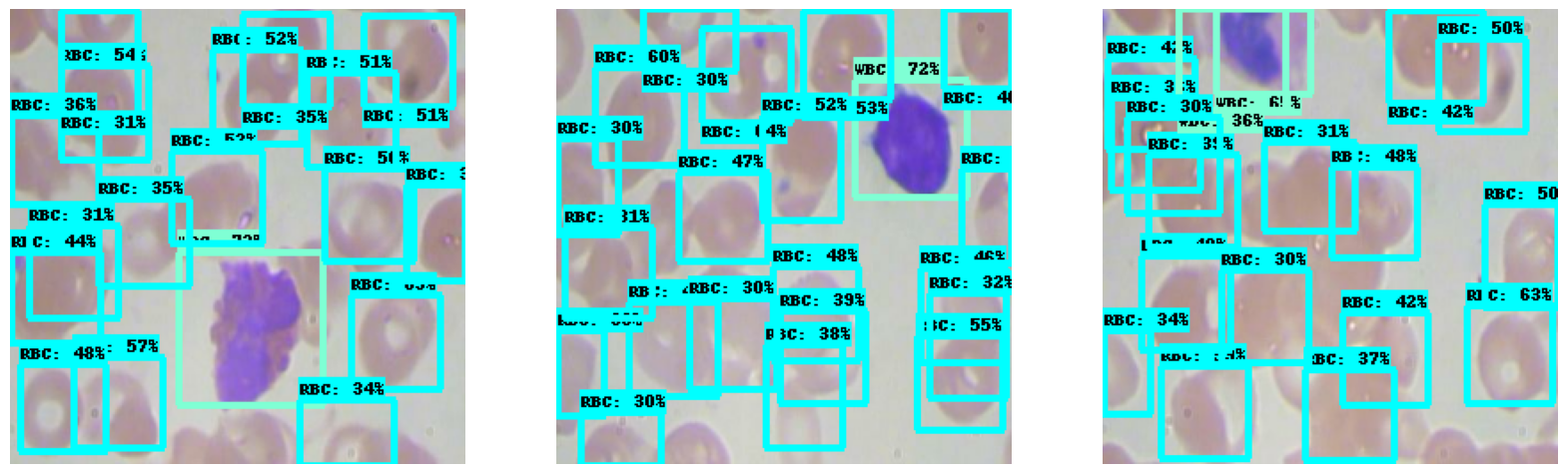

Visualize predictions.

input_image_size = (HEIGHT, WIDTH)
plt.figure(figsize=(20, 20))
min_score_thresh = 0.30  # Lower this minimum score threshold to see bounding boxes of all confidences.

for i, serialized_example in enumerate(test_ds):
  plt.subplot(1, num_of_examples, i + 1)
  decoded_tensors = tf_ex_decoder.decode(serialized_example)
  image = build_inputs_for_object_detection(decoded_tensors['image'], input_image_size)
  image = tf.expand_dims(image, axis=0)
  image = tf.cast(image, dtype=tf.uint8)
  image_np = image[0].numpy()
  result = model_fn(image)
  # Draws the boxes onto image_np in place.
  visualization_utils.visualize_boxes_and_labels_on_image_array(
      image_np,
      result['detection_boxes'][0].numpy(),
      result['detection_classes'][0].numpy().astype(int),
      result['detection_scores'][0].numpy(),
      category_index=category_index,
      use_normalized_coordinates=False,
      max_boxes_to_draw=200,
      min_score_thresh=min_score_thresh,
      agnostic_mode=False,
      instance_masks=None,
      line_thickness=4)
  plt.imshow(image_np)
  plt.axis('off')

plt.show()
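The min_score_thresh argument above only controls which boxes are drawn. When the detections are needed as data rather than as an overlay, a similar threshold can be applied directly to the model outputs. The sketch below assumes the boxes, classes and scores for one image have already been converted to Python lists (for example with .numpy().tolist() on result['detection_boxes'][0] and its siblings). The function name, the sample values, the box layout and the label map are made up for illustration.

```python
def filter_detections(boxes, classes, scores, min_score_thresh=0.30):
  """Keeps detections whose score exceeds `min_score_thresh`."""
  return [
      {'box': box, 'class_id': class_id, 'score': score}
      for box, class_id, score in zip(boxes, classes, scores)
      if score > min_score_thresh
  ]


# Made-up outputs for one image; boxes are assumed to be
# [ymin, xmin, ymax, xmax] in pixels.
boxes = [[10.0, 20.0, 60.0, 80.0],
         [30.0, 40.0, 90.0, 120.0],
         [0.0, 0.0, 5.0, 5.0]]
classes = [2, 3, 1]
scores = [0.82, 0.41, 0.05]
# Hypothetical label map; the tutorial's category_index plays this role.
label_names = {1: 'Platelets', 2: 'RBC', 3: 'WBC'}

kept = filter_detections(boxes, classes, scores)
for detection in kept:
  print(label_names[detection['class_id']], detection['score'], detection['box'])
```

Raising min_score_thresh trades recall for precision, so the value that looks best in the plots is not automatically the right one for downstream counting or measurement.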

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. For details, see the Google Developers Site Policies.

Last updated 2023-11-09 UTC.