Inside Intel's Secret Overclocking Lab: The Tools and Team Pushing CPUs to New Limits

'Safe' Overclocking Voltages and Techniques

How to Void Your Warranty as Safely as Possible

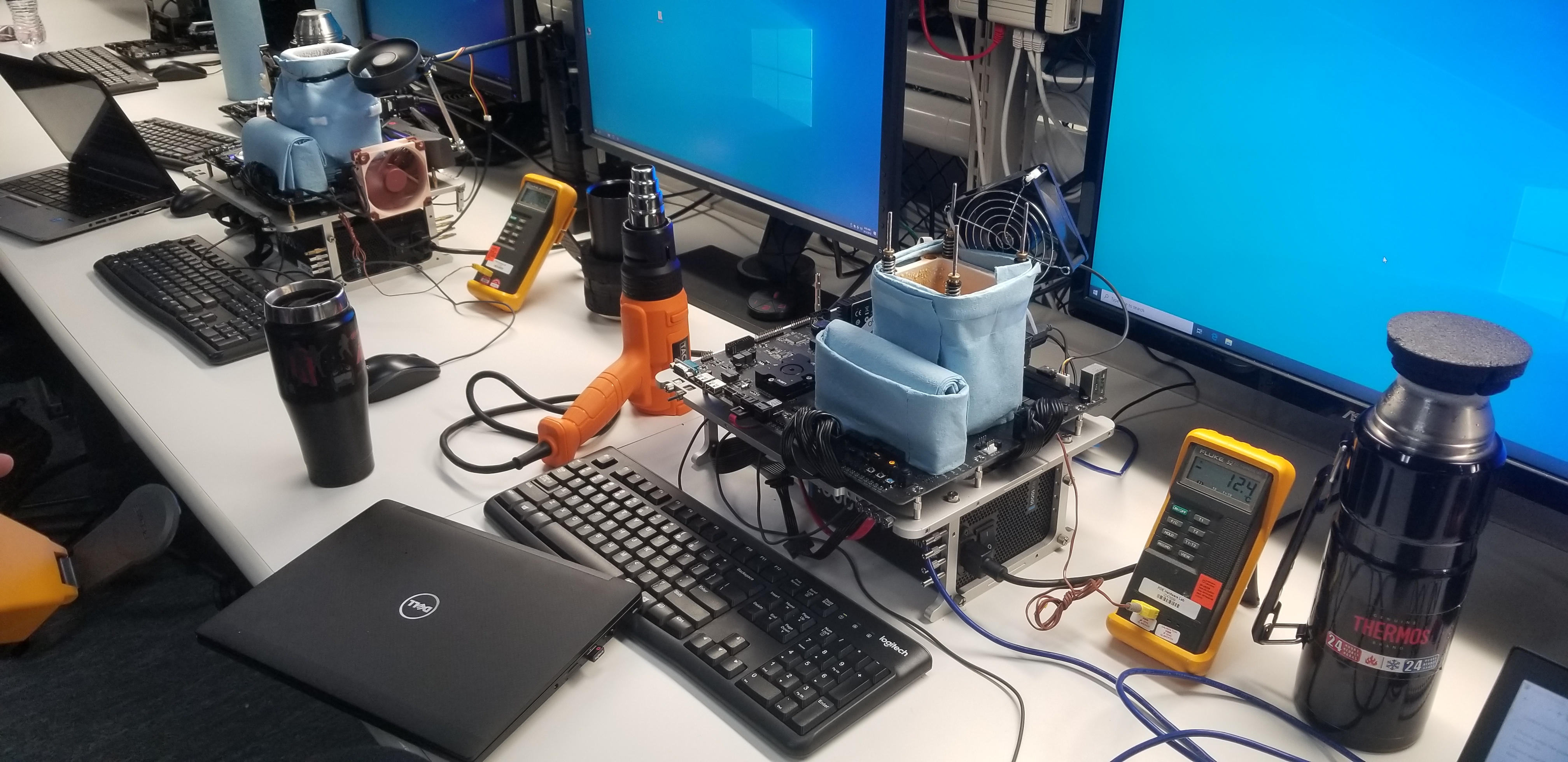

(Image credit: Tom's Hardware)

Intel spends a tremendous amount of time and treasure ensuring that its chips will run beyond the rated speed, thus delivering some value for the extra dollars you plunk down for an overclockable K-Series chip. However, in spite of what some might think given Intel's spate of overclocking-friendly features and software, we have to remember that, unless you pay an additional fee for an insurance policy, overclocking voids your warranty.

The reason behind that is simple, but the physics are mind-bogglingly complex. Every semiconductor process has a point on its voltage/frequency curve beyond which a processor will wear out at an untenable rate. One of the main wear mechanisms is electromigration (the gradual displacement of metal atoms in the chip's electrical pathways by the flow of electrons), which leads to premature chip death once it progresses far enough. Some factors are known to increase the rate of wear, such as the higher current and thermal density that come as a result of overclocking.

All this means that, like the carton of milk in your refrigerator, your chip has an expiration date. It's the job of semiconductor engineers to predict that expiration date and control it with some accuracy, but Intel specs lifespan at out-of-the-box settings. Because increasing frequency through overclocking requires pumping more power through the chip, which generates more heat, higher frequencies typically result in faster aging and a shorter lifespan.

In other words, all bets are off for Intel's failure rate predictions once you start bumping up the voltage. But there are settings and techniques that overclockers can use to minimize the impact of overclocking, and if done correctly, premature chip death from overclocking isn't a common occurrence.

Because Intel doesn’t cover overclocking with its warranty, the company doesn't specify what it would consider 'safe' voltages or settings.

But we're in a lab with what are arguably some of the smartest overclockers in the world, and these engineers spend their time analyzing failure rate data (and its relationship to the voltage/frequency curve) that will never be shared with the public.

We're aware that, due to company policy, the engineers couldn't give us an official answer to the basic question of what is considered a safe voltage, but that didn't stop us from asking what voltages and settings the lab members use in their own home machines. Given that, for a living, they study data that quantifies life expectancy at given temperatures and voltages... Well, connect the dots.

Speaking as enthusiasts, the engineers told us they feel perfectly fine running their Coffee Lake chips at home at 1.4V with conventional cooling, which is higher than the 1.35V we typically recommend as the 'safe' ceiling in our reviews. For Skylake-X, the team says they run their personal machines anywhere from 1.4V to 1.425V if they can keep it cool enough, with the latter portion of the statement being strongly emphasized.

At home, the lab engineers consider a load temperature above 80C to be a red alert, meaning that's the no-fly zone, but temps that remain steady in the mid-70s are considered safe. The team also strongly recommends using adaptive voltage targets for overclocking, leaving C-States enabled, and using AVX offsets to keep temperatures in check during AVX-heavy workloads.
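To make those rules of thumb concrete, here is a minimal Python sketch that sorts a single load reading into 'ok', 'caution', or 'red alert'. The function name, the dictionary keys, the choice to read "mid-70s" as 75C, and the decision to treat any voltage above the quoted figure as a red alert are all our own assumptions for illustration. The numbers are the engineers' personal habits as relayed above, not Intel specifications.

```python
# Figures the lab engineers quoted for their own home machines.
# These are anecdotes from the article, not official Intel limits.
VOLTAGE_CEILING_V = {"coffee_lake": 1.40, "skylake_x": 1.425}
RED_ALERT_TEMP_C = 80.0  # load temps above this are the "no-fly zone"
STEADY_TEMP_C = 75.0     # assumption: "mid-70s" is read here as 75C


def classify_reading(family: str, vcore_v: float, load_temp_c: float) -> str:
    """Classify one load reading as 'ok', 'caution', or 'red alert'.

    Hypothetical helper: treating a voltage above the quoted figure as a
    red alert is our assumption, not something the engineers stated.
    """
    ceiling = VOLTAGE_CEILING_V[family]
    if load_temp_c > RED_ALERT_TEMP_C or vcore_v > ceiling:
        return "red alert"
    if load_temp_c > STEADY_TEMP_C:
        return "caution"
    return "ok"
```

A reading such as `classify_reading("coffee_lake", 1.38, 82.0)` lands in the red-alert bucket on temperature alone, which mirrors the engineers' emphasis that the voltage figures only apply if the chip stays cool enough.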

As Ragland explained, the amount of time a processor stays in elevated temperature and voltage states has the biggest impact on lifespan. You can control the temperature of your chip with better cooling, which increases lifespan as long as the voltage is held constant. Under that condition, each successive drop in temperature results in a non-linear increase in life expectancy, so the 'first drop' in temps from 90C to 80C yields a huge increase in chip longevity. In turn, colder chips run faster at lower voltages, so dropping the temperature significantly with a beefier cooling solution also lets you lower the voltage, which helps on the voltage axis as well.
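Intel didn't share its lifetime models with us, but the non-linear relationship between temperature and life expectancy can be illustrated with the Arrhenius equation, a textbook reliability model for thermally driven wear. The sketch below is only that: the function name is ours, and the 0.7 eV activation energy is an illustrative assumption rather than a figure from Intel's data.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_life_ratio(temp_hot_c: float, temp_cool_c: float,
                         activation_energy_ev: float = 0.7) -> float:
    """Estimate how many times longer a part lasts at temp_cool_c than at
    temp_hot_c, with voltage held constant.

    Generic Arrhenius acceleration factor. The default activation energy
    is an assumed, illustrative value, not Intel's.
    """
    t_hot_k = temp_hot_c + 273.15
    t_cool_k = temp_cool_c + 273.15
    exponent = (activation_energy_ev / BOLTZMANN_EV_PER_K) * (
        1.0 / t_cool_k - 1.0 / t_hot_k
    )
    return math.exp(exponent)


if __name__ == "__main__":
    # Compare the 90C -> 80C drop with a larger 90C -> 70C drop.
    print(arrhenius_life_ratio(90.0, 80.0))
    print(arrhenius_life_ratio(90.0, 70.0))
```

Because temperature sits inside an exponential, the estimated gain compounds as the chip gets cooler rather than growing in a straight line. Under these assumptions, the 10C drop from 90C to 80C already lengthens the estimate by a bit less than a factor of two.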

In the end, though, voltage is the hardest variable to contain. Ragland pointed out that voltages are really the main limiter that prevents Intel from warrantying overclocked processors, as higher voltages definitely reduce the lifespan of a processor.

But Ragland has some advice: "As an overclocker, if you manage these two [voltage and temperature], but especially think about 'time in state' or 'time at high voltage,' you can make your part last quite a while if you just think about that. It's the person that sets their system up at elevated voltages and just leaves it there 24/7 [static overclock], that's the person that is going to burn that system out faster than someone who uses the normal turbo algorithms to do their overclocking so that when the system is idle your frequency drops and your voltage drops with it. So, there's a reason we don't warranty it, but there's also a way that overclockers can manage it and be a little safer."

That means manipulating the turbo boost ratio is much safer than assigning a static clock ratio via multipliers. As an additional note, you should shoot for idle temperatures below 30C, though that isn't much of a problem if you overclock via the normal turbo algorithms as described by Ragland.
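Ragland's 'time at high voltage' idea is easy to picture with a little arithmetic. The Python sketch below totals the hours a chip spends at or above a chosen voltage in two made-up daily profiles: one static overclock pinned at 1.4V, and one turbo-based overclock that idles at a lower voltage for most of the day. The function name, the sample profiles, and the 1.35V threshold are all hypothetical and chosen only for illustration.

```python
def time_at_high_voltage(samples, threshold_v):
    """Sum the hours spent at or above threshold_v.

    samples is a list of (duration_hours, vcore_v) pairs. Hypothetical
    helper for illustration only.
    """
    return sum(hours for hours, vcore_v in samples if vcore_v >= threshold_v)


# Made-up daily profiles; the idle voltage and the hours are assumptions.
static_day = [(24.0, 1.40)]                # static overclock, pinned 24/7
adaptive_day = [(20.0, 0.80), (4.0, 1.40)]  # voltage drops when idle

if __name__ == "__main__":
    print(time_at_high_voltage(static_day, 1.35))
    print(time_at_high_voltage(adaptive_day, 1.35))
```

Both profiles hit the same peak voltage, but in this example the static setup spends the entire day there while the turbo-based setup spends only the four hours it is actually under load.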

Feedback

Hanging out with Intel's OC lab team was certainly a learning experience. The engineers have a passion for their work that's impossible to fake: Once you start talking shop you can get a real sense of a person's passion, or lack thereof, for their craft. From our meeting, we get the sense that Intel's OC lab crew members measure up to any definition of true tech enthusiasts, and we got the very real impression this is more than "just a job" to them.

Like employees at any company, there are certain things the engineers simply aren't allowed to answer, but they were forthright about what they could share and what they couldn't. We're accustomed to slippery non-answer answers to our questions from media-trained representatives (from pretty much every company) when a simple "I can't answer that" would suffice. There were plenty of "I can't answer that" responses during our visit, but we appreciate the honesty.

We peppered the team with questions, but they also asked us plenty of questions. The team was almost as interested in our observations and our take on the state of the enthusiast market as we were interested in their work, which is refreshing. We had a Q&A session where we were free to give feedback, and while Ragland obviously can't make the big C-suite-level decisions, his team sits at the nexus of the company's overclocking efforts, so we hope some of our feedback is taken upstream.

In our minds, overclocking began all those years ago as a way for enthusiasts to get more for their dollar. Sure, it's a wonderful hobby, but the underlying concept is simple: Buy a cheaper chip and spend some time tuning to unlock the performance of a more expensive model. Unfortunately, over the years, Intel's segmentation practices have turned overclocking into a premium-driven affair, with prices for overclockable chips taking some of the shine off the extra value. Those same practices have filtered out to motherboard makers, too. We should expect to pay extra for the premium components required to unlock the best of overclocking, but in many cases, the "overclocking tax" has reached untenable levels.

While segmentation is good for profits, it also leaves Intel ripe for disruption. That disruptor is AMD, which freely allows overclocking on every one of its new chips.

Unfortunately, we can't rationally expect Intel to suddenly unlock every chip and abandon a segmentation policy that has generated billions of dollars in revenue, but there are reasonable steps it could take to improve its value proposition.

Case in point: Intel's policy of restricting overclocking to pricey Z-series motherboards. AMD allows overclocking on nearly all of its more value-centric platforms (A-series excluded), which makes overclocking more accessible to mainstream users. We feel very strongly that Intel should unlock overclocking on its downstream B- and H-series chipsets to open up overclocking to a broader audience.

Intel's team expressed concern that the power delivery subsystems on many of these downstream motherboards aren't suitable for overclocking, which is a fair observation. However, there is surely at least some headroom for tuning, and we bandied about our suggestion of opening up some level of restricted overclocking on those platforms. Remember, back in the Sandy Bridge era, Intel restricted overclocking to four bins (400 MHz), so there is an established method to expose at least some headroom. We're told our feedback will be shared upstream, and hopefully it is considered. We'd like to see Intel become more competitive in this area, as that would benefit enthusiasts on both sides of the ball.

We also suggested that Intel take a more dynamic approach to its auto-overclocking mechanisms. AMD's Precision Boost Overdrive (PBO) opened up overclocking to less-knowledgeable users by creating a one-click tool to auto-overclock your system. Intel's relatively new Intel Performance Maximizer (IPM) is also a great one-click tool that accomplishes many of the same goals, but it is based on static overclock settings that don't automatically adapt in real time to the chip's properties or changes in thermal conditions. Instead, you have to re-run the utility. We'd like to see a more dynamic approach taken, and we're told that Intel is already evaluating that type of methodology.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.