Easier and nicer to use Mobile Web applications

Winning users over with more attractive and more flexible mobile web applications

Dave Raggett, W3C/Canon

Position paper for W3C Mobile Web Initiative Workshop, 18-19th November 2004. This paper reflects the personal views of the author and should not be taken to represent those of either W3C or Canon.

Contents:

- current user experience

- multimodal applications

- skin-able user interfaces

- considerations for effective deployment

- suggested next steps

- acknowledgements

Current User Experience

Whilst most recent phones are able to resize images, there remain many problems with the user experience. Web pages designed for PDAs often don't resize well for the smaller displays common on phones. A two-column table designed for PDAs looks terrible on a phone when the words in the second column are split across lines. See Figs 1 and 2.

Page designs aimed at bigger displays often don't scale well as the display size reduces. In Fig 3 we see how graphics can become hidden under the text. A solution is for the designer to provide different layouts as appropriate to the device characteristics. In Fig 4 we see the same page as in Fig 3, but this time using a mobile browser that supports CSS media queries. This involves a few extra lines in the style sheet and is much easier than server-side adaptation.
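To make the idea concrete, the following is a minimal Python sketch of how a browser might decide which style rules to apply when some of them are guarded by a width condition, in the manner of a CSS rule block wrapped in `@media (max-width: 240px) { ... }`. The `Rule` class, the `#sidebar` selector and the 240 pixel threshold are all assumptions made for illustration; they are not taken from the pages shown in the figures, and a real browser evaluates many more media features than width.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """One style rule, optionally guarded by a width condition (hypothetical model)."""
    selector: str
    declaration: str
    # Plays the role of "@media (max-width: Npx)"; None means the rule always applies.
    max_width: Optional[int] = None


def applicable_rules(rules, device_width):
    """Return the rules that a browser with the given viewport width would apply."""
    return [r for r in rules if r.max_width is None or device_width <= r.max_width]


# Assumed example sheet: a side-by-side layout that collapses on narrow screens.
SHEET = [
    Rule("#sidebar", "float: left; width: 30%"),
    Rule("#sidebar", "float: none; width: 100%", max_width=240),
]


def layout_for(device_width):
    """Resolve the sheet for one device; later rules override earlier ones, as in CSS."""
    chosen = {}
    for rule in applicable_rules(SHEET, device_width):
        chosen[rule.selector] = rule.declaration
    return chosen
```

With these assumed values, `layout_for(176)` selects the single-column declaration while `layout_for(640)` keeps the floated sidebar, and the same page is served to both devices.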

Other variations across widely available mobile browsers include support for CSS positioning, and basic scripting support for features like document.write(). To add to this, it can be tricky to reliably determine key device characteristics for use in server-side scripts. This creates considerable challenges for web application designers, and is a major factor in accounting for the paucity of effective mobile content.

Multimodal Applications

In the right circumstances, speech recognition is a lot more convenient than keystrokes on the phone's limited keypad. Speech can be used for commands, filling out forms and for search, e.g. in a ring-tone application, for supplying the name of an artist or a track. For moderate vocabularies, device-based speech recognition is practical and offers the lowest latency. Network-based recognition allows for the use of more powerful recognizers and larger vocabularies. A major consideration when large grammar files are involved is that these can take much longer to transfer to the device than it takes the device to transfer the raw speech data to the server. This is likely to be the case for grammars for large data sets on network hosts. We are therefore likely to see applications using a mix of embedded and network-based speech recognition.
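The trade-off between downloading a grammar and uploading speech can be checked with some simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the grammar size, speech bit rate and link speeds used in the usage note are assumed figures rather than measurements.

```python
def transfer_seconds(size_bytes, link_bits_per_second):
    """Time to move a payload over a link, ignoring latency and protocol overhead."""
    return size_bytes * 8 / link_bits_per_second


def cheaper_to_upload_speech(grammar_bytes, utterance_seconds,
                             speech_bits_per_second,
                             downlink_bps, uplink_bps):
    """True when sending the raw utterance is quicker than fetching the grammar."""
    grammar_time = transfer_seconds(grammar_bytes, downlink_bps)
    # Size of the encoded utterance, derived from its duration and bit rate.
    speech_bytes = utterance_seconds * speech_bits_per_second / 8
    speech_time = transfer_seconds(speech_bytes, uplink_bps)
    return speech_time < grammar_time
```

Assuming a 500,000 byte grammar over a 40 kbit/s downlink, the download takes 100 seconds, whereas a 3 second utterance encoded at 13 kbit/s and sent over a 20 kbit/s uplink takes about 2 seconds. For a 1,000 byte grammar the comparison reverses, which is consistent with using embedded recognition for small vocabularies and network recognition for large ones.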

|

|---|

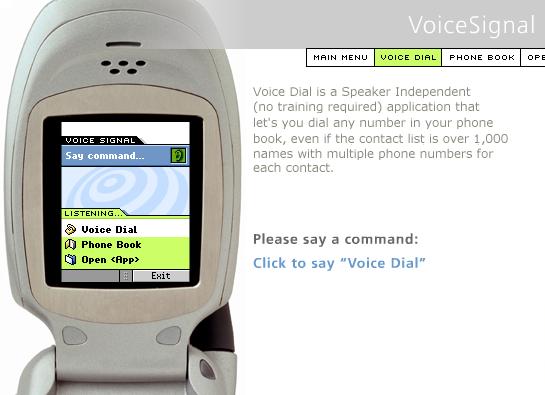

| Fig 5: VoiceSignal's embedded multimodal apps |

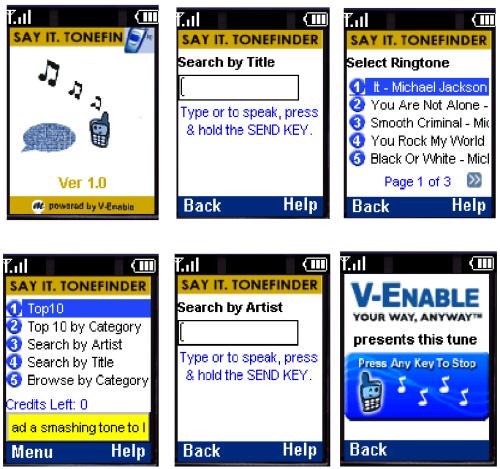

The following sequence of screenshots shows a multimodal application being used to search for ringtones. You can search by voice or by using the phone's keypad. The application is based upon uploading the raw speech signal to the server using the data connection.

Fig 6: V-Enable's ringtone application (networked speech)

Effective use of speech recognition relies on the application being designed to direct the user to respond appropriately. The user is guided through a combination of visual and aural prompts. You may need to view the screen to see what you can say. An animated icon together with an audible beep may indicate when the application expects you to start speaking. On some devices, you may then need to press a button to help the device to ignore background noise, and as a means to stretch battery life.

| | Text Hits | Speech Hits | % Speech |
| --- | --- | --- | --- |
| Artist Name | 17207 | 31940 | 64% |
| Title Name | 5830 | 9657 | 62% |

Fig 7: User preferences for searching with keypad vs speech from a sample size of 240 thousand users for V-Enable's "SayItToneFinder"
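The percentage column in Fig 7 appears to be the speech hits expressed as a share of all hits for that kind of search. The short sketch below reproduces the published values from the hit counts; the function name is hypothetical, and the use of truncation rather than rounding is an inference from the numbers (rounding would give 65% for artist names).

```python
def speech_share_percent(text_hits, speech_hits):
    """Speech searches as a whole-number percentage of all searches (truncated)."""
    return (100 * speech_hits) // (text_hits + speech_hits)


# Hit counts copied from Fig 7: (text hits, speech hits).
FIG7 = {
    "Artist Name": (17207, 31940),
    "Title Name": (5830, 9657),
}

shares = {name: speech_share_percent(*hits) for name, hits in FIG7.items()}
```

In both rows roughly five searches out of every eight were made by voice.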

Skin-able User Interfaces

Multimodal interaction is expected to provide significant benefits over mono-modal interaction, for instance, allowing end-users to use speech when their hands and eyes are busy. Speech input may be faster and more convenient than text entry on cell phone keypads. In other circumstances, the reverse may hold when social or environmental factors preclude the use of speech. An important principle is that users should be free to choose which modes they want to use according to their needs, while designers should be empowered to provide an effective user experience for whichever choice is made.

One way to support this is through the idea of user interface skins that can be changed as needed to suit user preferences and device capabilities. The visual appearance and the details of how you interact with an application can vary strikingly from one skin to the next. This approach is increasingly commonplace for desktop applications, and relies on a clean separation between the user interface and the application.

What is needed to enable skin-able user interfaces for mobile web applications? Style sheets make it easy to change the visual appearance, but currently don't cover the way users interact with applications. In principle, it would be easy to extend style sheets to describe interaction behavior. A study by the author has shown that a handful of extensions to CSS would be more than sufficient to describe the sequence of prompts and anticipated user responses, and to allow for a flexible mix of user and application directed interaction. CSS Media Queries would allow the designer to tailor the interaction to match the user preferences and device capabilities. If a consensus can't be reached around extending CSS, then a further possibility is a new XML interaction sheet language. A demonstrator for both approaches is in preparation.
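As a rough illustration of the separation this implies, the sketch below models two interchangeable skins for the same form field and picks one according to device capabilities and user preference. It is not the CSS extension or the XML interaction sheet language referred to above; the `Skin` class, the capability names and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Skin:
    """An interaction skin: what the device must offer and how it prompts the user."""
    name: str
    needs: frozenset   # capabilities the device must provide
    prompts: dict      # field name -> prompt shown or spoken to the user


# Assumed skins for a ringtone search form with a single "artist" field.
SKINS = [
    Skin("speech", frozenset({"microphone", "speaker"}),
         {"artist": "Say the artist's name after the beep."}),
    Skin("keypad", frozenset({"keypad"}),
         {"artist": "Type the artist's name."}),
]


def choose_skin(device_capabilities, user_preference=None):
    """Pick the user's preferred skin if the device supports it, else the first usable one."""
    usable = [s for s in SKINS if s.needs <= set(device_capabilities)]
    if not usable:
        raise LookupError("no skin fits this device")
    for skin in usable:
        if skin.name == user_preference:
            return skin
    return usable[0]
```

The application itself only knows about the `artist` field. A user on a fully equipped phone who prefers not to speak can call `choose_skin(capabilities, "keypad")`, and a device without a microphone falls back to the keypad skin automatically.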

W3C's Scalable Vector Graphics (SVG) markup language is proving increasingly popular for mobile devices, with lots of potential for creating attractive user interfaces for mobile web applications. There is now interest in being able to use SVG for skin-able user interfaces. This is based upon a means to bind application markup to controls written in SVG. The glue is XBL (the extensible binding language). By changing the bindings, designers can radically alter the appearance and behavior of their application. Further study is needed to exploit SVG in multimodal user interfaces, but in principle, XBL could be combined with extensions to CSS to provide really compelling user interfaces.

Considerations for Effective Deployment

The previous sections have looked at issues with the current user experience for mobile web applications, and at opportunities for offering a more compelling experience with skin-able multimodal user interfaces. This should help to boost ARPU (average revenue per user), with benefits to network operators, content developers and device vendors. What are the considerations for phased deployment of new capabilities for mobile web applications and what are the implications for the technical standards?

When only relatively few people have devices that support the new capabilities, there is a reduced incentive to develop content for such devices. This is greatly eased if the same content can be used for both old and new devices. The great majority of people will have the same experience as before, while the lucky few will benefit from a richer experience. The history of the World Wide Web has shown the importance of backwards compatibility, and this lesson is still relevant to current work on evolving the mobile web.

If standards are not backwards compatible, it takes a lot longer to grow adoption. First, people have to be given a compelling reason to upgrade, and second, it takes time to reach a critical mass of users that in turn catalyzes the growth of content. Few people are likely to upgrade their devices if there is only a very small amount of applicable content. This creates a chicken and egg situation. It can be circumvented by providing other reasons to upgrade, e.g. changes in fashion, and other improvements that are of immediate value, but this requires considerable faith and patience from the device vendors and network operators.

A further consideration is to build upon what people already know and are experienced with. This greatly expands the pool of would-be content developers, helping to boost adoption rates enormously. Finally, the technical approach must be attractive to the companies that create the devices and network infrastructure. This is where an incremental phased approach is helpful. Evolutionary extensions to existing capabilities are preferable to revolutionary changes. Backwards compatible extensions to XHTML and CSS are thus more likely to succeed than new languages.

Suggested Next Steps

Two things could be done to quickly improve the end-user experience. First, better information should be made available about device characteristics and the level of support for standards, together with a reliable means to exploit this within scripts. Second, Web content should identify whether it is designed for use on mobile devices, so that such content can be readily found using Web search engines. This would make it easier for a much broader pool of content developers to reach such devices.

The next step would be to bring mobile browsers up to a common level where they all support CSS media queries and CSS positioning. This would make it easier for designers to adapt the page layout to match the capabilities of a particular device, without the additional complexity of server-side adaptation.

Work on skin-able user interfaces and multimodal interaction should be focused on end-user needs, and on ensuring that the standards can be readily deployed on a wide scale. W3C is already involved in standards work on multimodal interaction and device independence, but complementary work is needed in several areas. One such area is content labeling, as mentioned above. Another is concerned with how devices identify and gain access to services, e.g. for network-based speech services, and more generally, for sessions and device coordination.

The Web is potentially much, much more than just browsing HTML documents, and we should be looking for ways to unlock this potential. For instance, what would be needed for a multimodal service for browsing voice mail, where you can view the list of messages while you are listening to your messages?

Whilst a complicated solution could be fashioned using a Web server to couple the XHTML browser on the device with a VoiceXML browser in the network, a much simpler solution can be envisioned where the device uses SIP control messages to control the voice channel, and Web Services to update the displayed list of messages. This would be practical if the corresponding resources could be exposed as simple components for use in Web applications, whether through scripting or standardized markup.

One promising avenue is to allow for applications involving multiple devices in peer-to-peer communications. A couple of examples include peer-to-peer connections between a camera phone and a printer, and between a phone and a projector for business presentations. Today, these can be realized with some effort via manually installed Java applications, but we lack the standards to do this via simple web applications.

Another avenue is to look at opportunities for augmenting human to human communications. For instance, allowing a customer service agent to talk you through product descriptions pushed to your phone during the conversation. When looking for a hotel room, you could view pictures of the rooms and the nearby amenities, and as a result provide additional information to help the customer service agent refine the list of suggested hotels. Both avenues could be addressed by new standards work aimed at enabling the _Ubiquitous Web!_

Acknowledgements

The following mobile browsers were used in preparing this document:

- Access NetFront browser: http://www.access-netfront.com/

- Openwave Systems' phone browser: http://www.openwave.com/

- Opera Software's Opera browser: http://www.opera.com/

Websites used for preparing the figures:

- Fig 1 & 2: http://www.wacklepedia.com/pdahotspots/pda_hotspots.htm

- Fig 3 & 4: http://epona.net/

- Fig 5: http://www.voicesignal.com/

- Fig 6 & 7: http://www.v-enable.com/

- Fig 8: http://www.smartphone2000.com/