V-BEs: Visually-Grounded Library of Behaviors for Manipulating Diverse Objects across Diverse Configurations and Views (original) (raw)

V-BEs

Overview of method

Given an input RGB-D image IvI_vIv of the scene and an object 3D bounding box mathrmo\mathrm{o}mathrmo, the selector mathrmG\mathrm{G}mathrmG predicts the probability of successfully manipulating the object when applying each behavior on the object. This is done by using geometry-aware recurrent neural networks (GRNNs) to convert the image to a 3D scene representation mathbfM′\mathbf{M}'mathbfM′, cropping the representation to the object using the provided 3D bounding box, and computing the cosine simularity between this object representation mathbfF(Iv,mathrmo;phi)\mathbf{F}(I_v, \mathrm{o}; \phi)mathbfF(Iv,mathrmo;phi) and learned behavioral keys kappai\kappa_ikappai. for each behavior pii\pi_ipii in the library. The behavior with the highest predicted success probability is then executed in the environment. We train the selector using interaction labels collected by running behaviors on random training objects and recording the binary success or failure outcomes of the executions.

Paradigm 1: In contrast to state-to-action or image-to-action mapping, the proposed framework decomposes a policy into a behavior selection module and a library of behaviors to select from. The decomposition enables these modules to work on different representation: the selection module operates in a semantically-rich visual feature space, while the behaviors operates in an abstract object state space that facilitate efficient policy learning.

Quantitative Results

Our method out-performs various baselines and ablations in simulated pushing and grasping tasks.

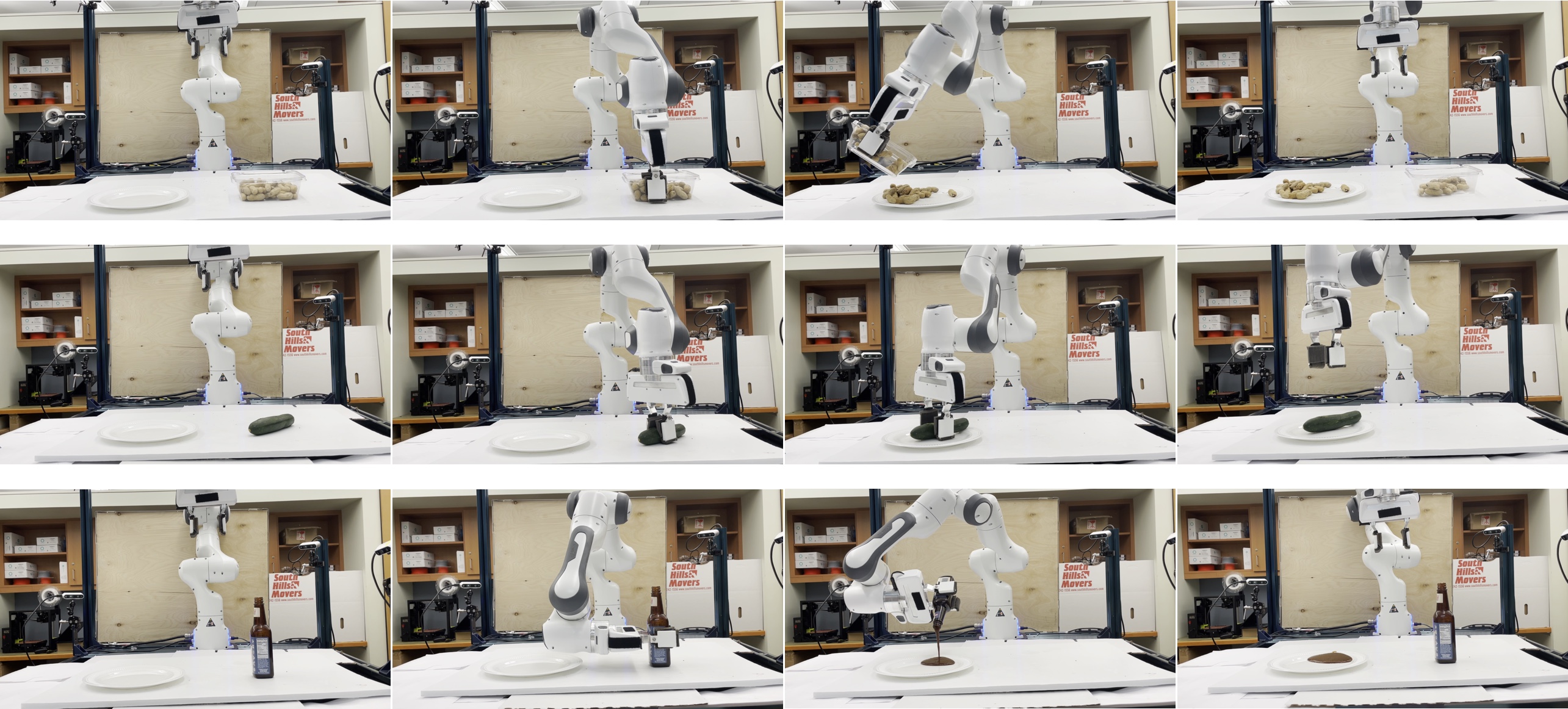

We also perform real robot experiment in a setup where the robot needs to execute various skills to transport diverse rigid, granular, and liquid objects to a plate.