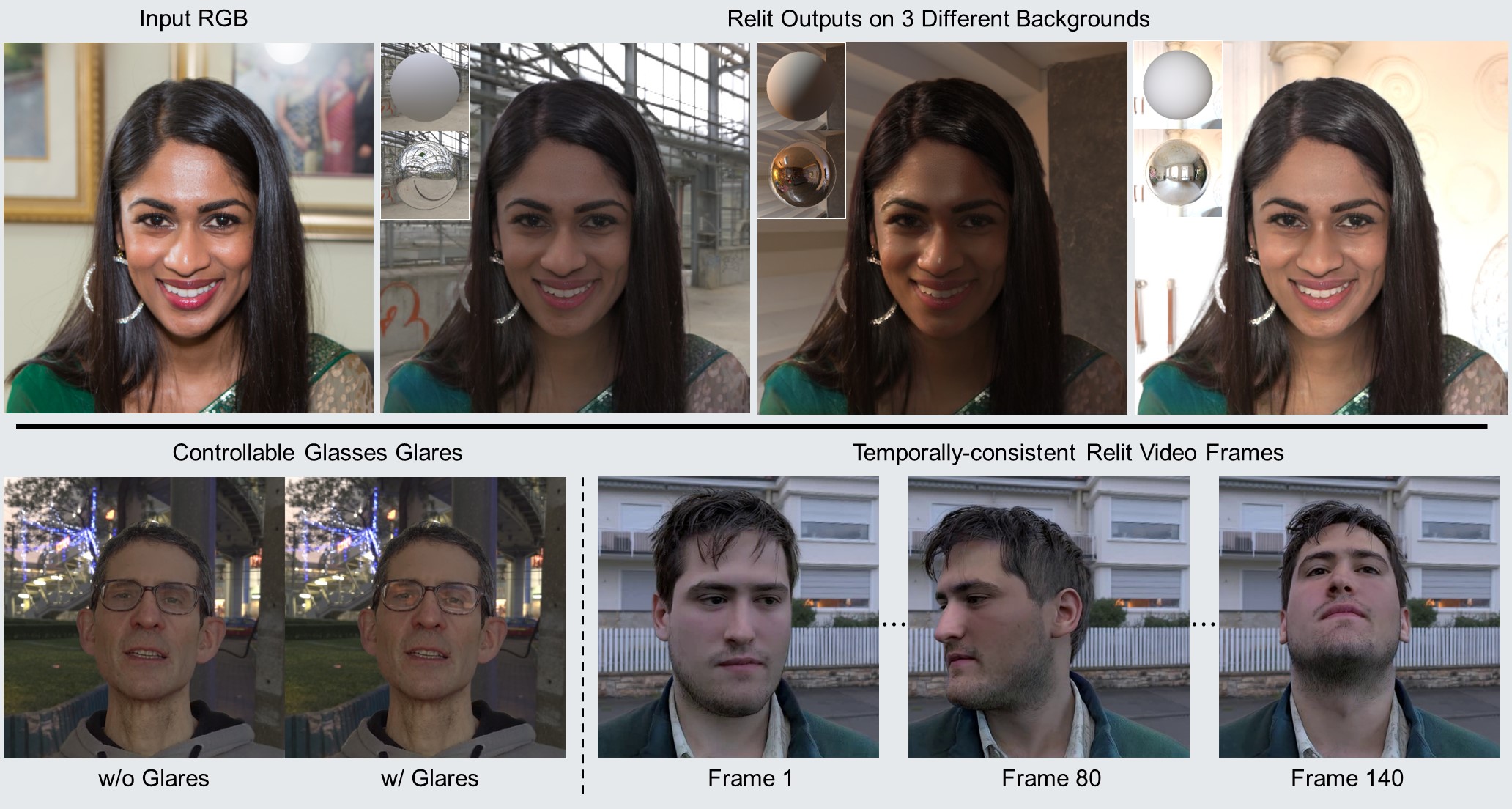

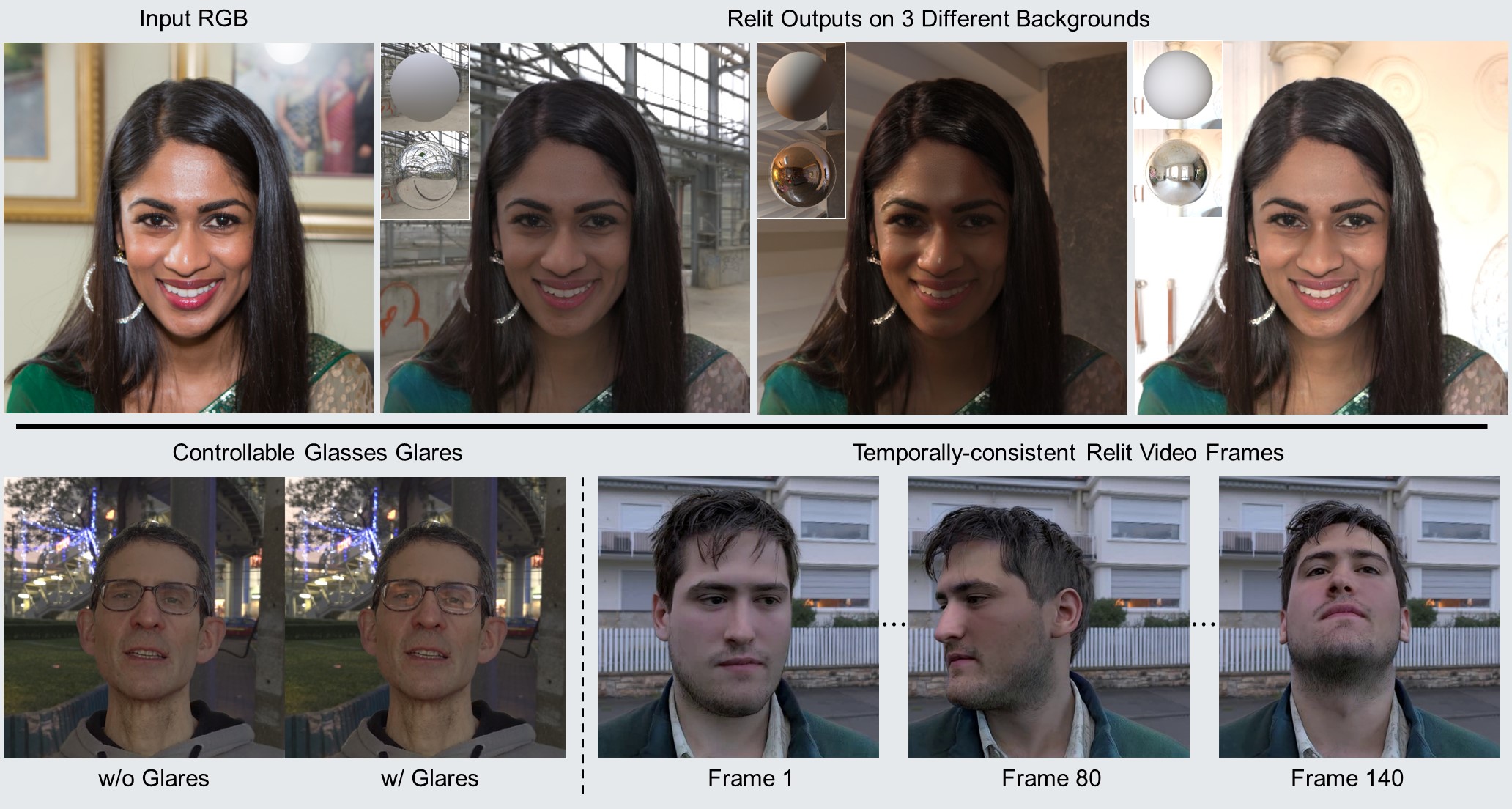

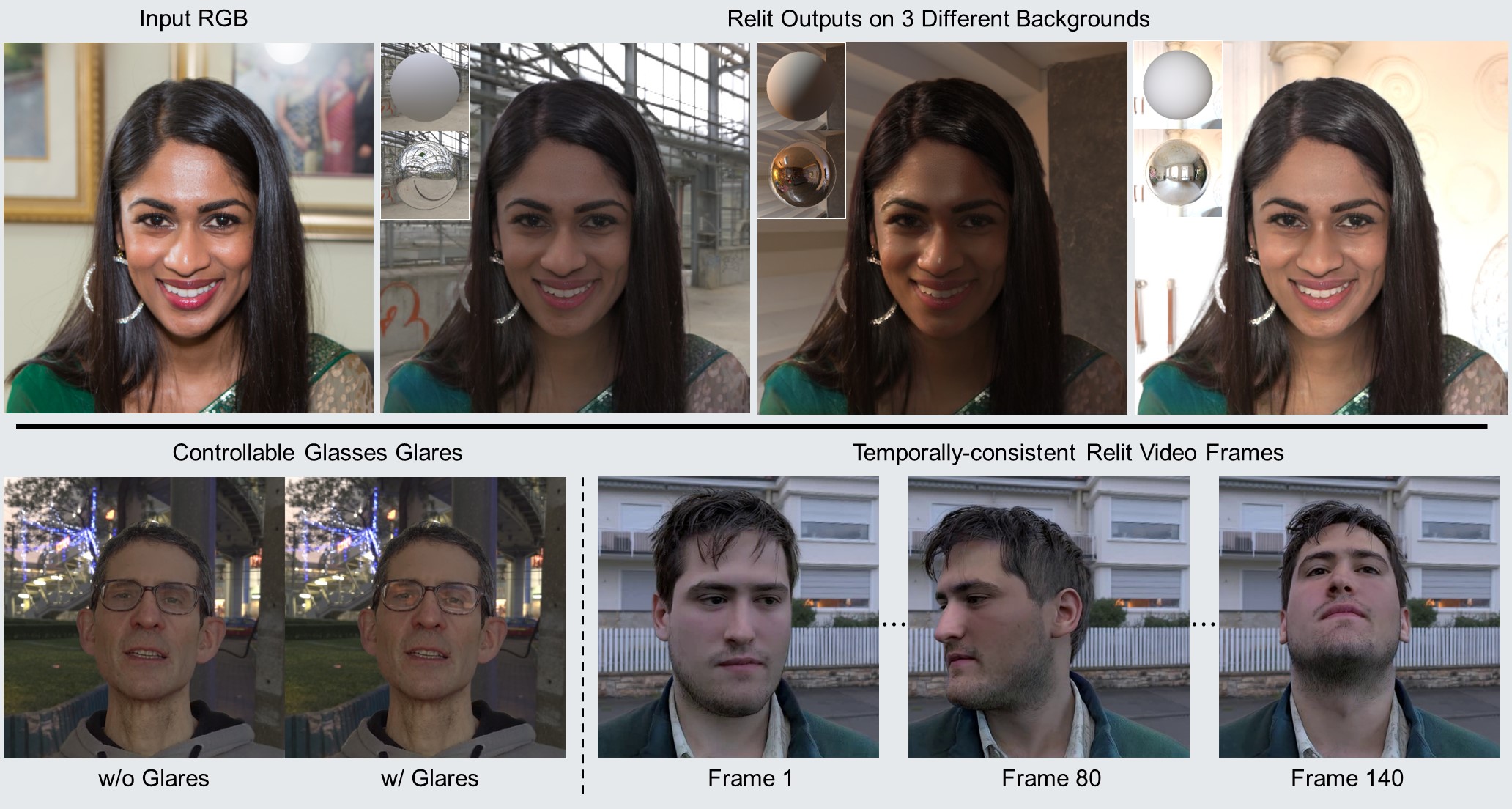

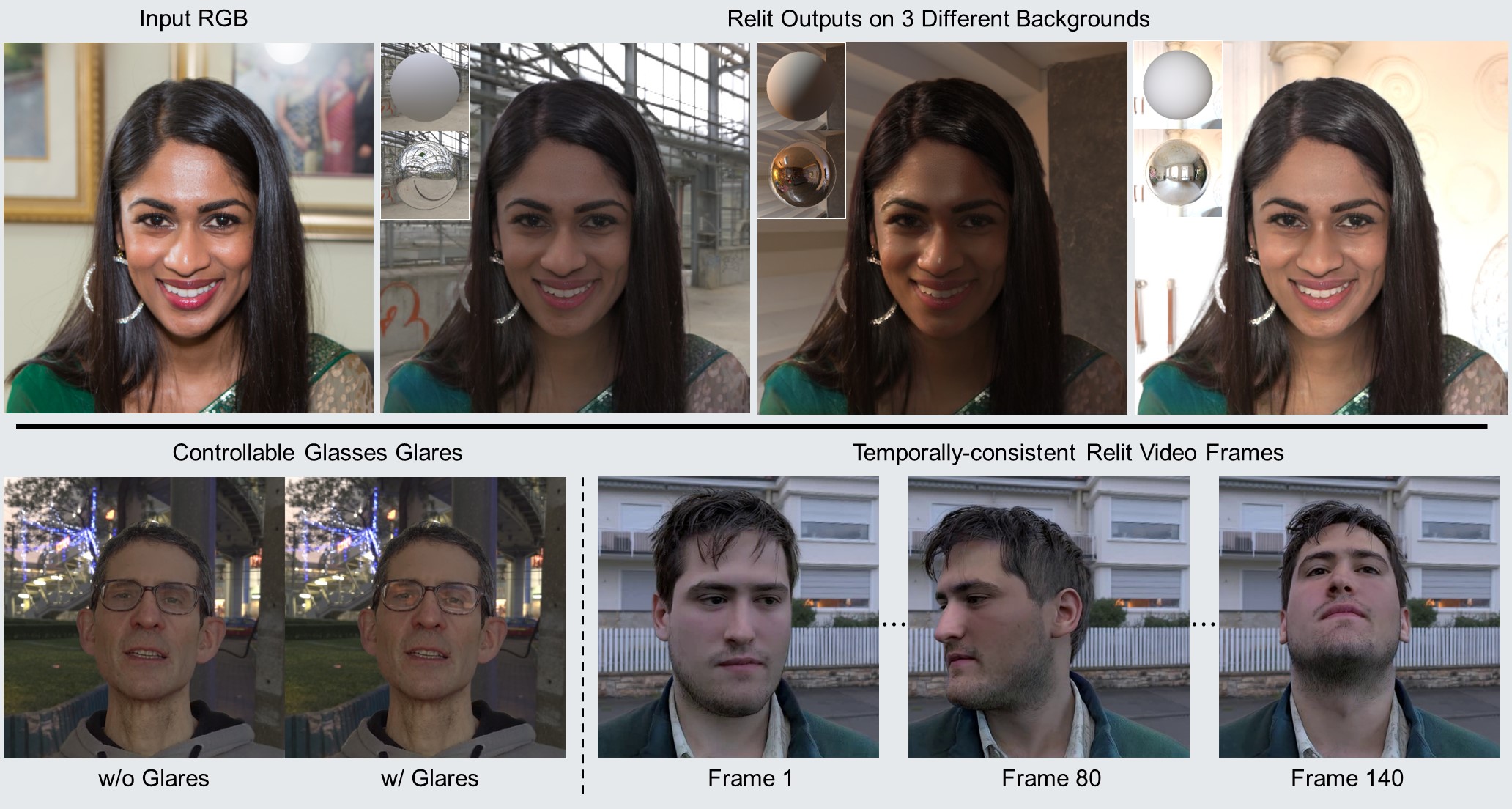

Learning to Relight Portrait Images via a Virtual Light Stage and Synthetic-to-Real Adaptation
Yu-Ying Yeh, Koki Nagano, Sameh Khamis, Jan Kautz, Ming-Yu Liu, Ting-Chun Wang
SIGGRAPH Asia, 2022
project page / arXiv / video
We propose a single-image portrait relighting method trained with our rendered dataset and synthetic-to-real adaptation to achieve high photorealism without using light stage data. Our method can also handle eyeglasses and support video relighting.

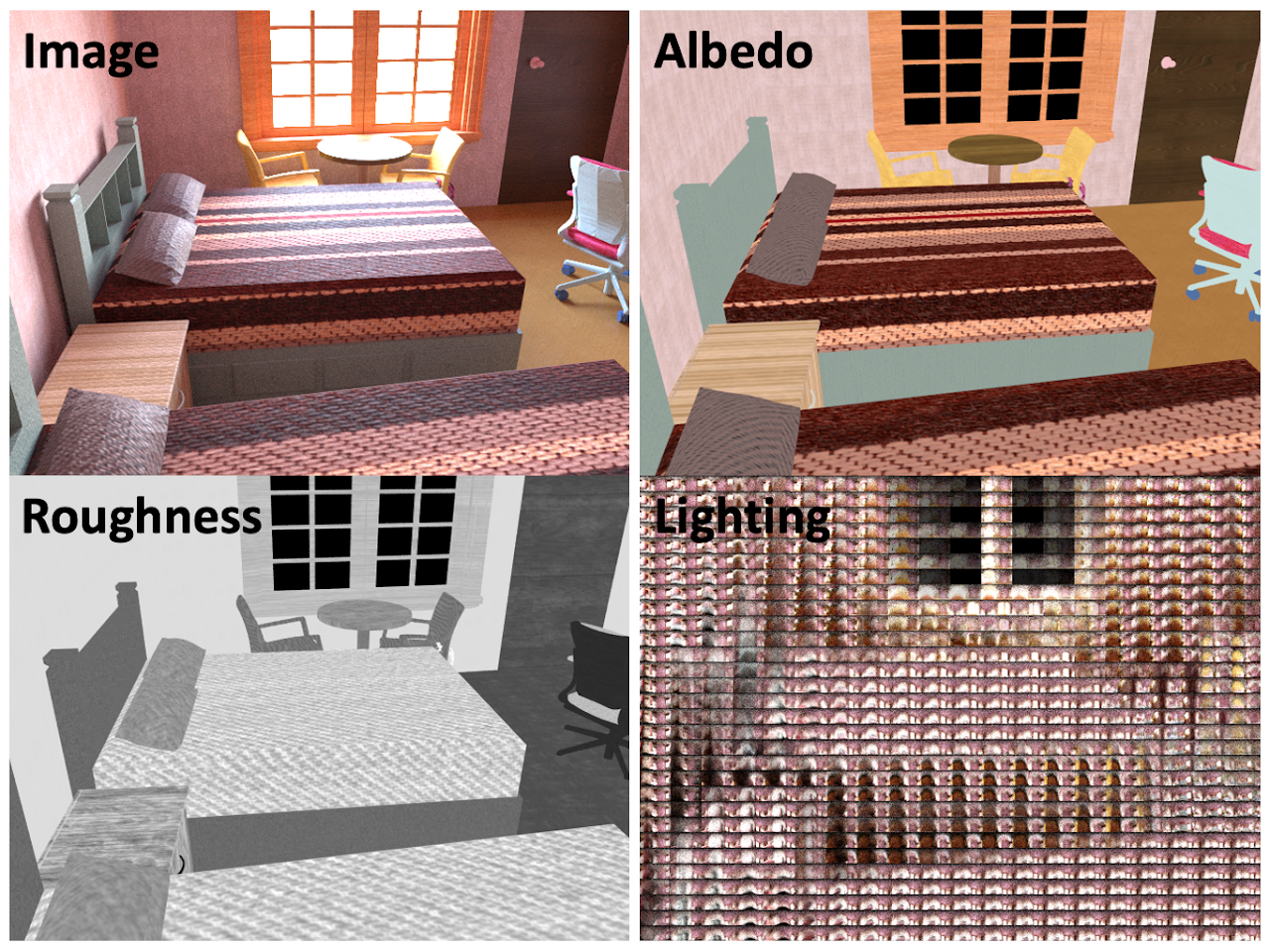

PhotoScene: Photorealistic Material and Lighting Transfer for Indoor Scenes
Yu-Ying Yeh, Zhengqin Li, Yannick Hold-Geoffroy, Rui Zhu, Zexiang Xu, Miloš Hašan, Kalyan Sunkavalli, Manmohan Chandraker
CVPR, 2022
project page / arXiv / cvpr paper / code
Transfers high-quality procedural materials and lighting from images to reconstructed 3D indoor scene geometry, enabling photorealistic 3D content creation for digital twins.

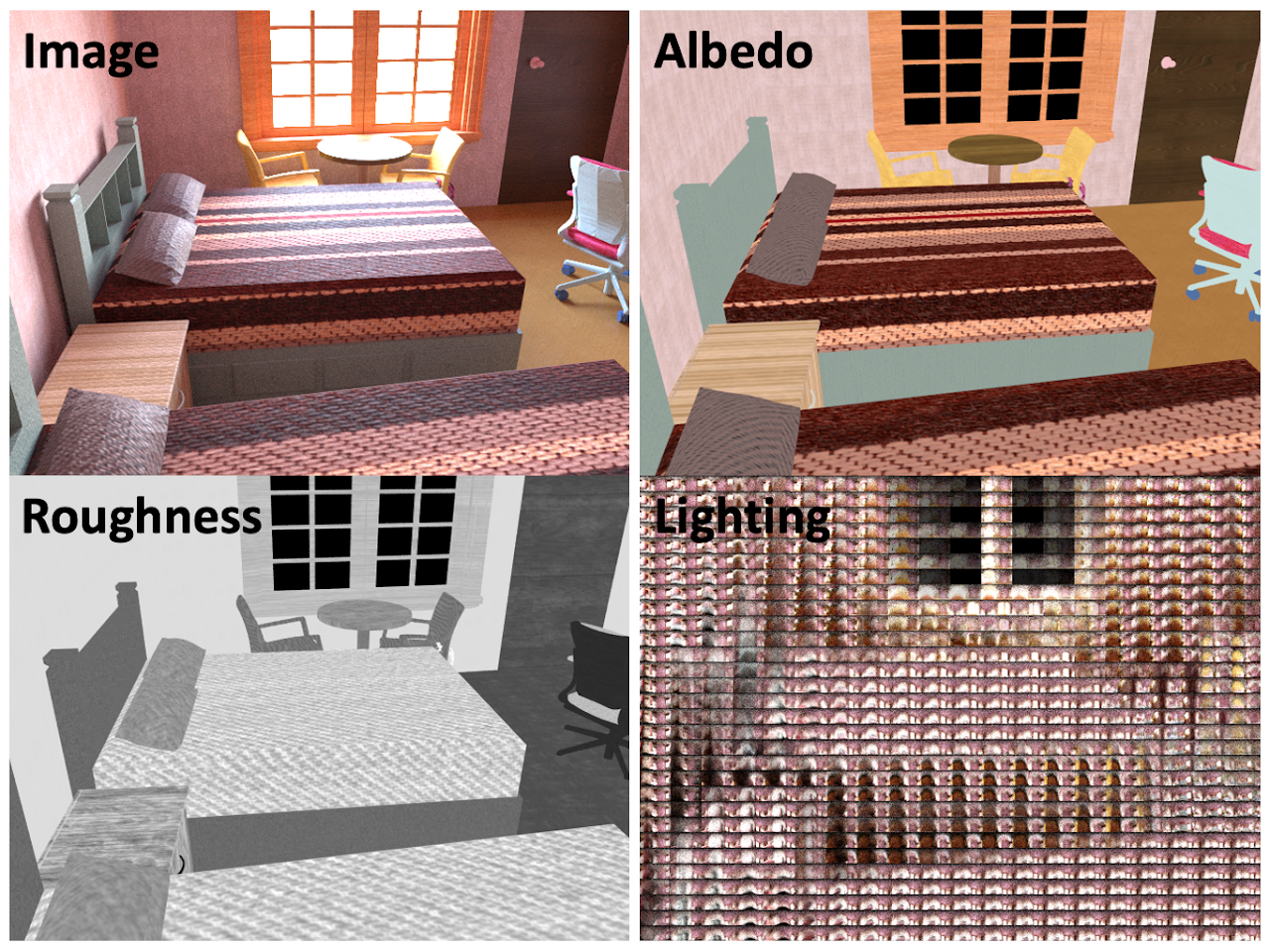

OpenRooms: An Open Framework for Photorealistic Indoor Scene Datasets
Zhengqin Li, Ting-Wei Yu, Shen Sang, Sarah Wang, Meng Song, Yuhan Liu, Yu-Ying Yeh, Rui Zhu, Nitesh Gundavarapu, Jia Shi, Sai Bi, Zexiang Xu, Hong-Xing Yu, Kalyan Sunkavalli, Miloš Hašan, Ravi Ramamoorthi, Manmohan Chandraker
CVPR, 2021 (Oral Presentation)
project page / arXiv
An open framework that creates OpenRooms, a large-scale photorealistic indoor scene dataset, from ScanNet, a publicly available dataset of video scans.

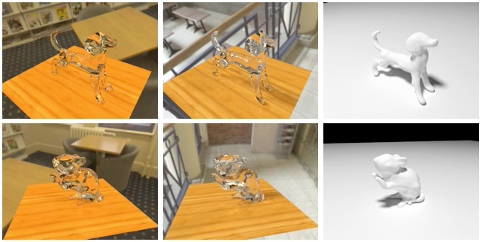

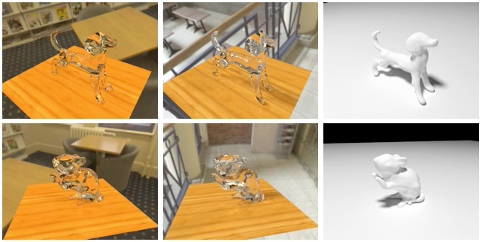

Through the Looking Glass: Neural 3D Reconstruction of Transparent Shapes
Yu-Ying Yeh*, Zhengqin Li*, Manmohan Chandraker (*equal contributions)
CVPR, 2020 (Oral Presentation)
project page / arXiv / code / dataset / real data
Transparent shape reconstruction from multiple images captured with a mobile phone.

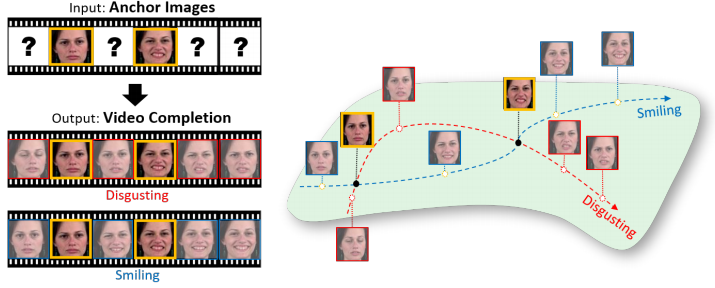

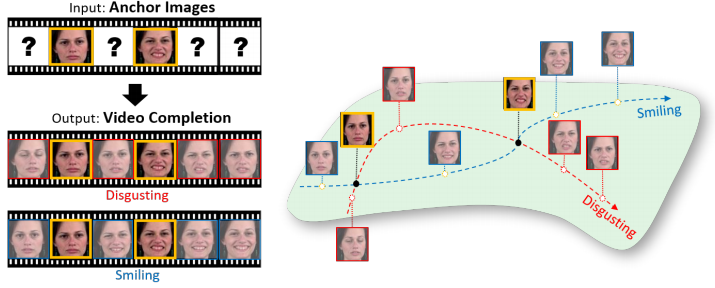

Static2Dynamic: Video Inference from a Deep Glimpse
Yu-Ying Yeh, Yen-Cheng Liu, Wei-Chen Chiu, Yu-Chiang Frank Wang
IEEE Transactions on Emerging Topics in Computational Intelligence, 2020
paper / bibtex
Video generation, interpolation, inpainting, and prediction given a set of anchor frames.

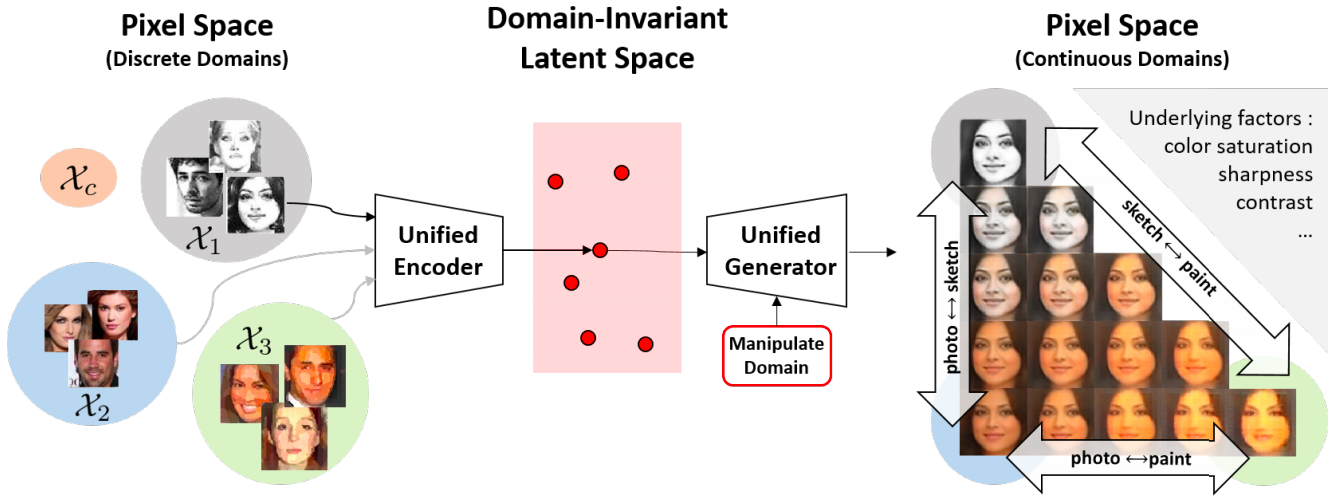

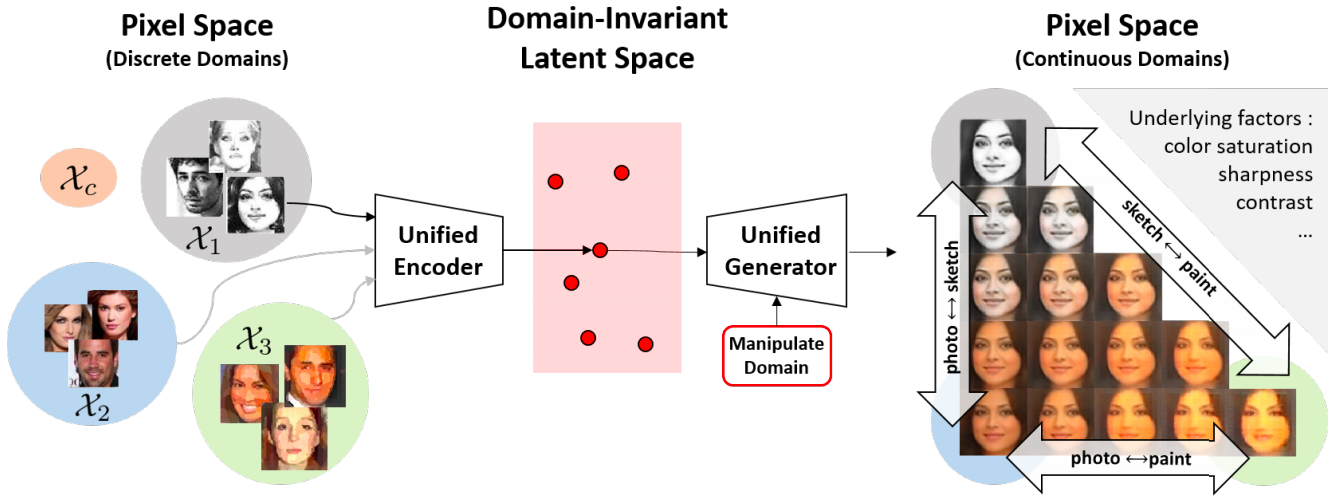

A Unified Feature Disentangler for Multi-Domain Image Translation and Manipulation
Alexander Liu, Yen-Cheng Liu, Yu-Ying Yeh, Yu-Chiang Frank Wang
NeurIPS, 2018
arXiv / bibtex / code
A novel, unified deep learning framework that learns domain-invariant representations from data across multiple domains.

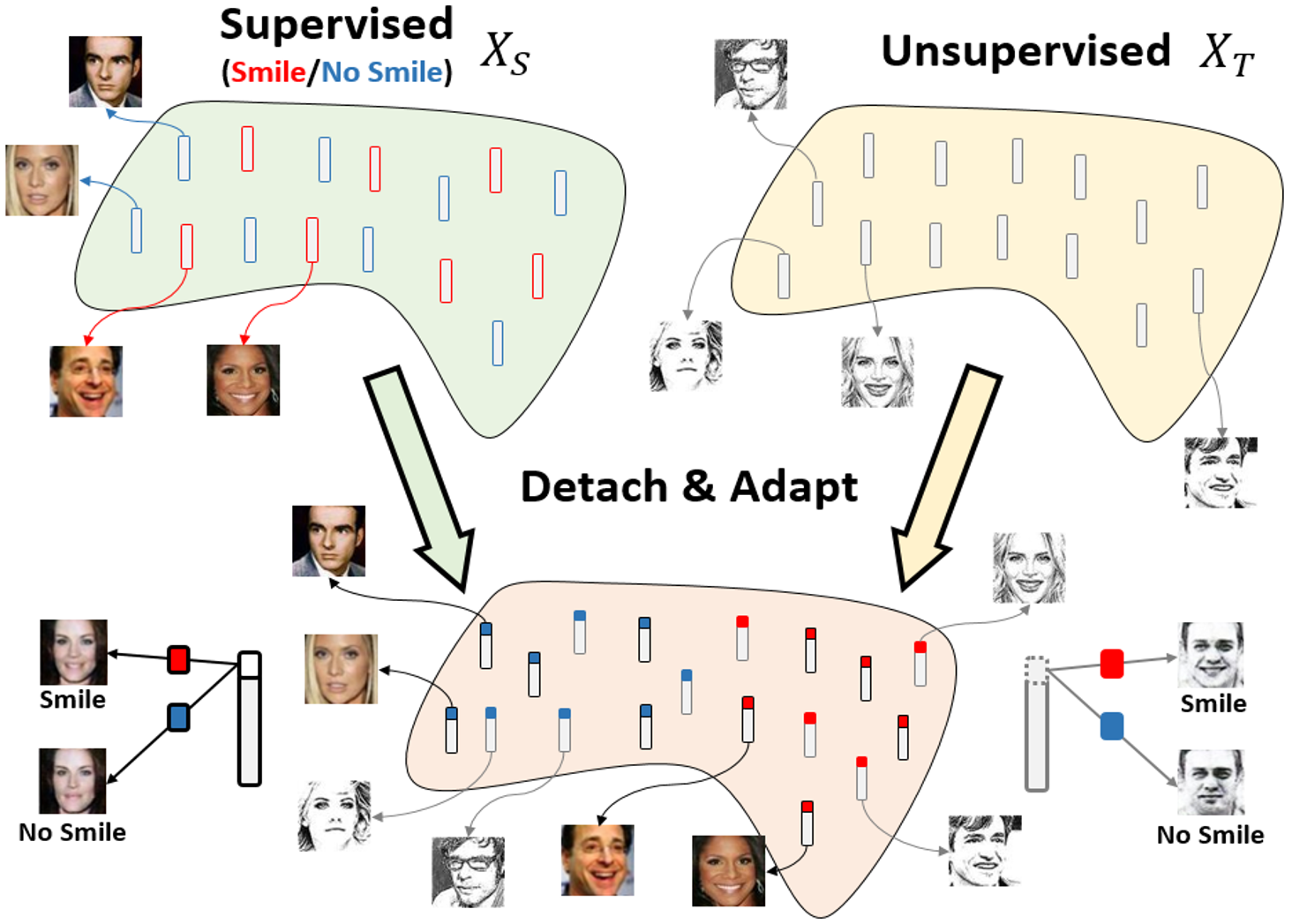

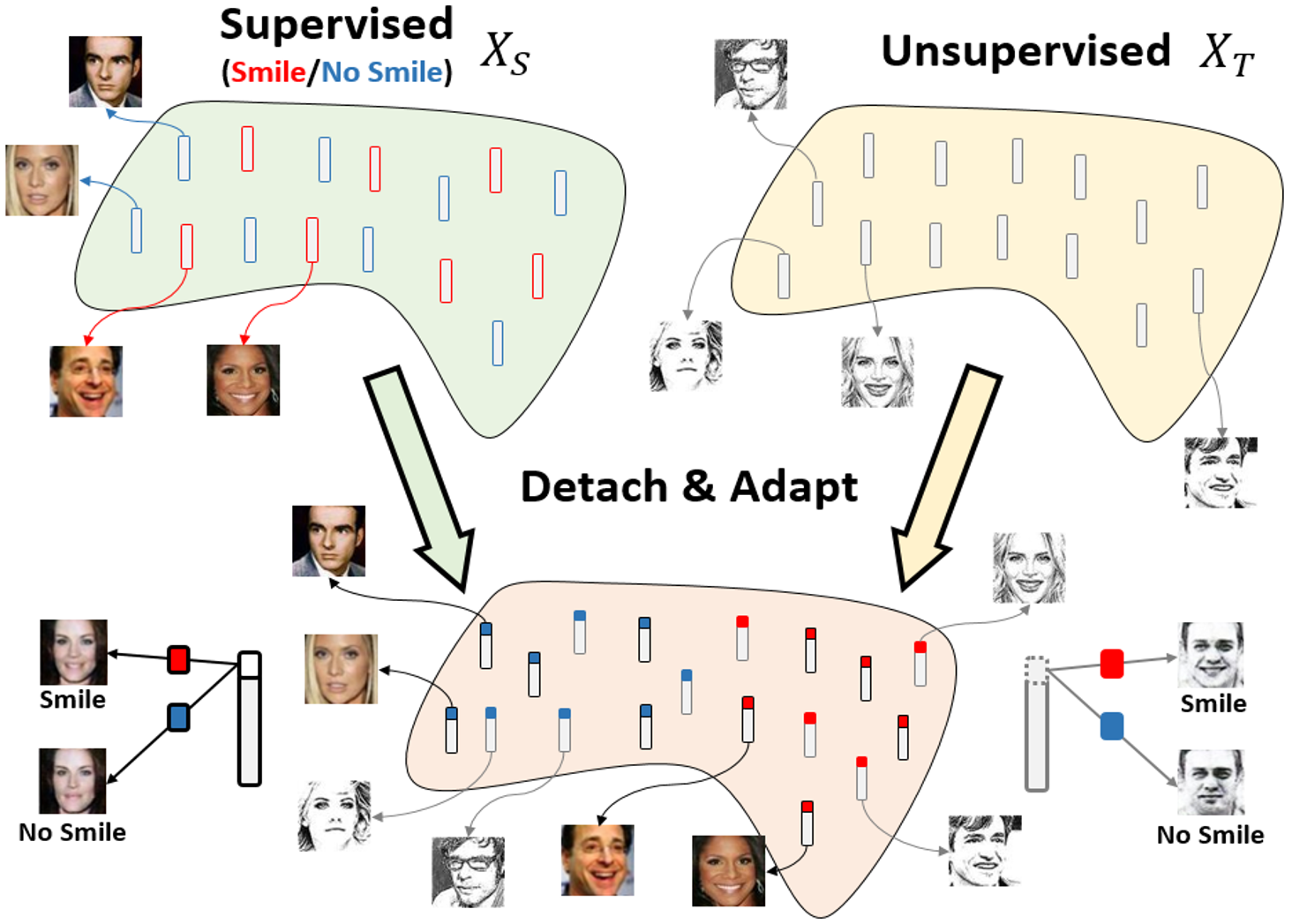

Detach and Adapt: Learning Cross-Domain Disentangled Deep Representation
Yen-Cheng Liu, Yu-Ying Yeh, Tzu-Chien Fu, Wei-Chen Chiu, Sheng-De Wang, Yu-Chiang Frank Wang
CVPR, 2018 (Spotlight Presentation)
paper / bibtex / code / presentation
Feature disentanglement for cross-domain data, enabling image translation and manipulation from a labeled source domain to an unlabeled target domain.