ZenML - MLOps framework for infrastructure agnostic ML pipelines

Trusted by 1,000s of top companies to standardize their MLOps workflows

Speed

Iterate at warp speed

Local to cloud seamlessly. Jupyter to production pipelines in minutes. Smart caching accelerates iterations everywhere. Rapidly experiment with ML and GenAI models.

Observability

Auto-track everything

Automatic logging of code, data, and LLM prompts. Version control for ML and GenAI workflows. Focus on innovation, not bookkeeping.

Scale

Limitless Scaling

Scale to major clouds or K8s effortlessly. 50+ MLOps and LLMOps integrations. From small models to large language models, grow seamlessly.

Flexibility

Backend flexibility, zero lock-in

Switch backends freely. Deploy classical ML or LLMs with equal ease. Adapt your LLMOps stack as needs evolve.

Reusability

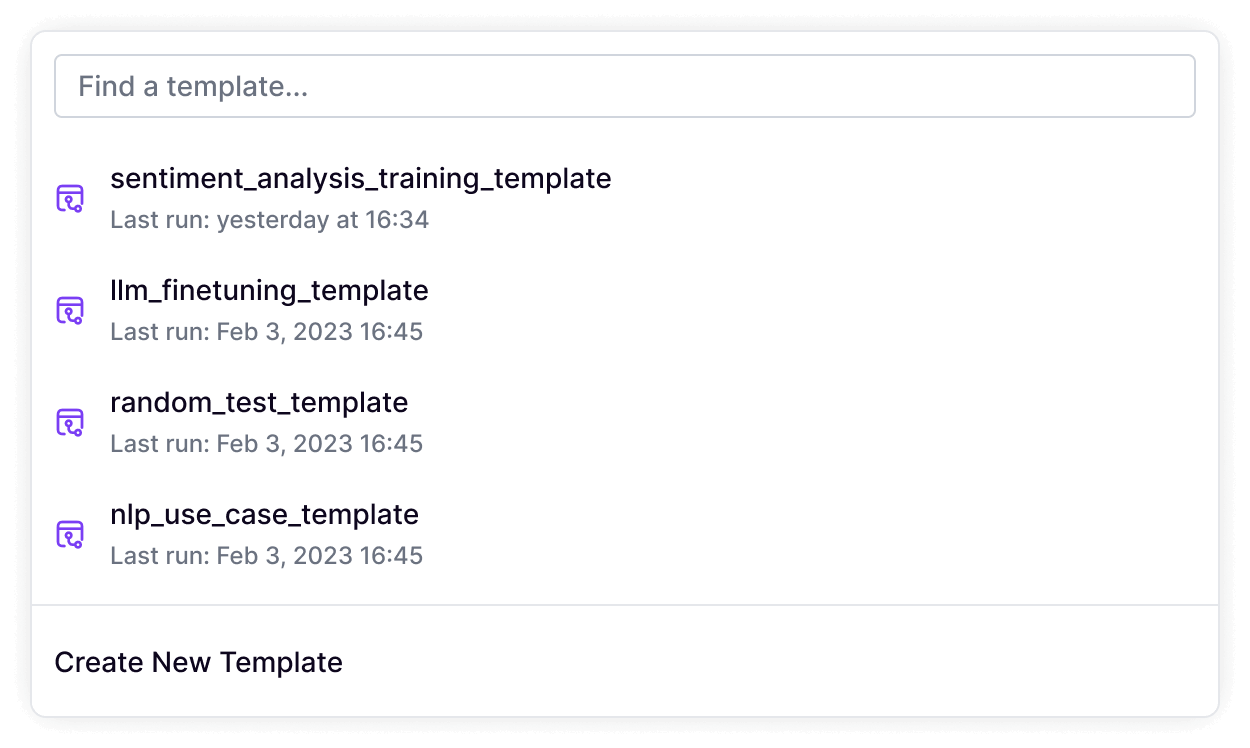

Shared ML building blocks

Team-wide templates for steps and pipelines. Collective expertise, accelerated development.

Optimization

Streamline cloud expenses

Stop overpaying on cloud compute. Clear view of resource usage across ML and GenAI projects.

Governance

Built-in compliance & security

Comply with EU AI Act & US AI Executive Order. One-view ML infrastructure oversight. Built-in security best practices.

"ZenML offers the capability to build end-to-end ML workflows that seamlessly integrate with various components of the ML stack, such as different providers, data stores, and orchestrators. This enables teams to accelerate their time to market by bridging the gap between data scientists and engineers, while ensuring consistent implementation regardless of the underlying technology."

Harold Giménez

SVP R&D at HashiCorp

"ZenML's automatic logging and containerization have transformed our MLOps pipeline. We've drastically reduced environment inconsistencies and can now reproduce any experiment with just a few clicks. It's like having a DevOps engineer built into our ML framework."

Liza Bykhanova

Data Scientist at Competera

"Many, many teams still struggle with managing models, datasets, code, and monitoring as they deploy ML models into production. ZenML provides a solid toolkit for making that easy in the Python ML world"

Chris Manning

Professor of Linguistics and CS at Stanford

"ZenML has transformed how we manage our GPU resources. The automatic deployment and shutdown of GPU instances have significantly reduced our cloud costs. We're no longer paying for idle GPUs, and our team can focus on model development instead of infrastructure management. It's made our ML operations much more efficient and cost-effective."

Christian Versloot

Data Technologist at Infoplaza

"After a benchmark on several solutions, we choose ZenML for its stack flexibility and its incremental process. We started from small local pipelines and gradually created more complex production ones. It was very easy to adopt."

Clément Depraz

Data Scientist at Brevo

"ZenML allowed us a fast transition between dev to prod. It’s no longer the big fish eating the small fish – it’s the fast fish eating the slow fish."

François Serra

ML Engineer / ML Ops / ML Solution architect at ADEO Services

"ZenML allows you to quickly and responsibly go from POC to production ML systems while enabling reproducibility, flexibitiliy, and above all, sanity."

Goku Mohandas

Founder of MadeWithML

"ZenML's approach to standardization and reusability has been a game-changer for our ML teams. We've significantly reduced development time with shared components, and our cross-team collaboration has never been smoother. The centralized asset management gives us the visibility and control we needed to scale our ML operations confidently."

Maximillian Baluff

Lead AI Engineer at IT4IPM

"With ZenML, we're no longer tied to a single cloud provider. The flexibility to switch backends between AWS and GCP has been a game-changer for our team."

Dragos Ciupureanu

VP of Engineering at Koble

"ZenML allows orchestrating ML pipelines independent of any infrastructure or tooling choices. ML teams can free their minds of tooling FOMO from the fast-moving MLOps space, with the simple and extensible ZenML interface. No more vendor lock-in, or massive switching costs!"

Richard Socher

Former Chief Scientist Salesforce and Founder of You.com

"Thanks to ZenML we've set up a pipeline where before we had only Jupyter notebooks. It helped us tremendously with data and model versioning and we really look forward to any future improvements!"

Francesco Pudda

Machine Learning Engineer at WiseTech Global

Using ZenML

It's extremely simple to plug in ZenML

Just add Python decorators to your existing code and see the magic happen

Automatically track experiments in your experiment tracker

Return pythonic objects and have them versioned automatically

Track model metadata and lineage

Switch easily between local and cloud orchestration

Define data dependencies and modularize your entire codebase

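```python
import mlflow
import pandas as pd
import pydantic
import transformers
from zenml import Model, pipeline, step
from zenml.config import ResourceSettings

# read_data, client, train_model, and on_failure_hook are placeholders
# that your own code is expected to provide.

@step(experiment_tracker="mlflow")
def read_data_from_snowflake(config: pydantic.BaseModel) -> pd.DataFrame:
    df = read_data(client.get_secret("snowflake_credentials"))
    # log_metric expects a number, so log the row count rather than the shape tuple
    mlflow.log_metric("data_rows", df.shape[0])
    return df


@step(
    settings={"resources": ResourceSettings(memory="240GB")},
    model=Model(name="my_model", model_registry="mlflow"),
)
def my_trainer(df: pd.DataFrame) -> transformers.AutoModel:
    tokenizer, model = train_model(df)
    return model


@pipeline(active_stack="databricks_stack", on_failure=on_failure_hook)
def my_pipeline():
    df = read_data_from_snowflake()
    my_trainer(df)


my_pipeline()
```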

Remove sensitive information from your code

Choose resources abstracted from infrastructure

Works for any framework - classical ML or LLMs

Easily define alerts for observability
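To make the "add a decorator and have returned objects versioned" idea more concrete, here is a minimal plain-Python sketch. It is not ZenML's implementation or API: `tracked_step` and `ARTIFACT_VERSIONS` are hypothetical names invented for this illustration, and the in-memory dictionary stands in for whatever artifact store a real setup would use.

```python
import functools
import hashlib
import pickle
from typing import Any, Callable, Dict, List

# Hypothetical in-memory store: function name -> content hashes, one per distinct output.
ARTIFACT_VERSIONS: Dict[str, List[str]] = {}


def tracked_step(func: Callable[..., Any]) -> Callable[..., Any]:
    """Record a new version whenever the wrapped function returns new content."""

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        result = func(*args, **kwargs)
        # Hash the serialized return value to identify its content.
        digest = hashlib.sha256(pickle.dumps(result)).hexdigest()
        versions = ARTIFACT_VERSIONS.setdefault(func.__name__, [])
        if digest not in versions:
            versions.append(digest)
        return result

    return wrapper


@tracked_step
def load_numbers(scale: int) -> List[int]:
    return [scale * i for i in range(3)]


load_numbers(1)
load_numbers(1)  # identical output, so no new version is recorded
load_numbers(2)  # different output, so a second version is recorded
```

The existing function body stays untouched; only the decorator is added, which is the property the snippet above is advertising.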

No compliance headaches

Your VPC, your data

ZenML is a metadata layer on top of your existing infrastructure, meaning all data and compute stays on your side.

Looking to Get Ahead in MLOps & LLMOps?

Subscribe to the ZenML newsletter and receive regular product updates, tutorials, examples, and more.

Support

Frequently asked questions

Everything you need to know about the product.

What is the difference between ZenML and other machine learning orchestrators?

Unlike other machine learning pipeline frameworks, ZenML does not take an opinion on the orchestration layer. You start writing locally, and then deploy your pipeline on an orchestrator defined in your MLOps stack. ZenML supports many orchestrators natively, and can easily be extended to others. Read more about why you might want to write your machine learning pipelines in a platform-agnostic way here.
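As a rough illustration of what "no opinion on the orchestration layer" can look like, the sketch below defines a pipeline once and runs it through two interchangeable runners. It is plain Python rather than ZenML code: `Runner`, `LocalRunner`, `ThreadedRunner`, and `run_pipeline` are hypothetical names made up for this example, and the thread pool merely stands in for a remote backend.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Protocol

Step = Callable[[int], int]


class Runner(Protocol):
    def run(self, steps: List[Step], value: int) -> int: ...


class LocalRunner:
    """Runs every step in the calling thread."""

    def run(self, steps: List[Step], value: int) -> int:
        for step in steps:
            value = step(value)
        return value


class ThreadedRunner:
    """Stand-in for a remote backend: each step is submitted to a worker thread."""

    def run(self, steps: List[Step], value: int) -> int:
        with ThreadPoolExecutor(max_workers=1) as pool:
            for step in steps:
                value = pool.submit(step, value).result()
        return value


def double(x: int) -> int:
    return x * 2


def add_one(x: int) -> int:
    return x + 1


# The pipeline definition never mentions where it will run.
PIPELINE: List[Step] = [double, add_one]


def run_pipeline(runner: Runner, value: int) -> int:
    return runner.run(PIPELINE, value)


local_result = run_pipeline(LocalRunner(), 3)
threaded_result = run_pipeline(ThreadedRunner(), 3)
```

Both calls execute the same step list; only the runner passed in changes, which mirrors the idea of swapping the orchestrator without rewriting the pipeline.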

Does ZenML integrate with my MLOps stack (cloud, ML libraries, other tools etc.)?

As long as you're working in Python, you can leverage the entire ecosystem. In terms of machine learning infrastructure, ZenML pipelines can already be deployed on Kubernetes, AWS SageMaker, GCP Vertex AI, Kubeflow, Apache Airflow, and many more. Artifact, secrets, and container storage are also supported for all major cloud providers.

Does ZenML help in GenAI / LLMOps use-cases?

Yes! ZenML is fully compatible with LLM workloads and is intended to be used to productionalize LLM applications. There are examples in the ZenML projects repository that showcase our integrations with LlamaIndex, OpenAI, and LangChain. Check them out here!

How can I build my MLOps/LLMOps platform using ZenML?

The best way is to start simple. The starter and production guides walk you through how to build a minimal cloud MLOps stack. You can then extend it with the numerous other components, such as experiment trackers, model deployers, model registries, and more!

What is the difference between the open source and Pro product?

ZenML is and always will be open source at its heart. The core framework is freely available on GitHub, and you can run and manage it in-house without using the Pro product. ZenML Pro includes a managed version of the open-source product, along with some Pro-only features aimed at collaboration for companies that are scaling their ML efforts. You can see a more detailed comparison here.

Still not clear? Ask us on Slack

Start Your Free Trial Now

No new paradigms - Bring your own tools and infrastructure

No data leaves your servers, we only track metadata

Free trial included - no strings attached, cancel anytime
