Softplus — PyTorch 2.7 documentation

class torch.nn.Softplus(beta=1.0, threshold=20.0)

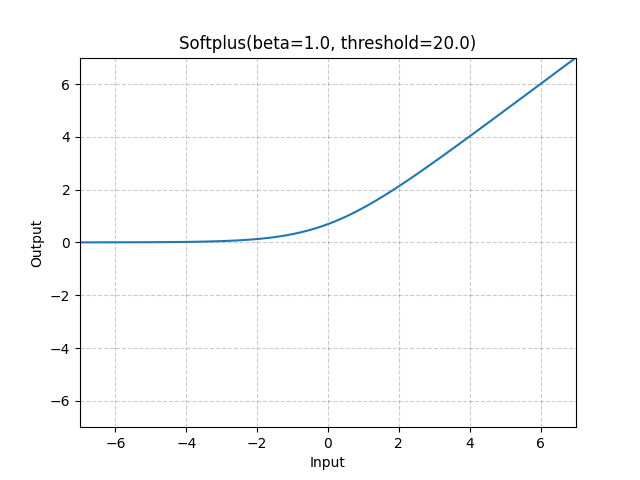

Applies the Softplus function element-wise.

$$\text{Softplus}(x) = \frac{1}{\beta} * \log(1 + \exp(\beta * x))$$

Softplus is a smooth approximation to the ReLU function and can be used to constrain the output of a model to always be positive.
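
As a minimal sketch of the positivity use case, the snippet below appends `nn.Softplus` to a linear layer so that the result can be read as a positive quantity such as a standard deviation. The name `scale_head` and the layer sizes are illustrative assumptions, not part of the PyTorch API.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # reproducible weights and inputs

# Hypothetical head that predicts a positive scale (e.g. a standard deviation).
scale_head = nn.Sequential(
    nn.Linear(4, 1),  # unconstrained real-valued output
    nn.Softplus(),    # maps it to a positive value
)

x = torch.randn(8, 4)
scale = scale_head(x)

# Every prediction is positive, whatever the sign of the linear output.
all_positive = bool((scale > 0).all())
```

In finite precision, very negative inputs can underflow to exactly zero, so adding a small epsilon may be worthwhile when a strict lower bound is required.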

For numerical stability the implementation reverts to the linear function when $\text{input} \times \beta > \text{threshold}$.
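
The formula and the linear shortcut described above can be sketched in plain Python. This is an illustrative scalar reference, not PyTorch's actual kernel; `softplus_reference` is a hypothetical helper name, and the use of `math.log1p` is a choice made for this sketch.

```python
import math


def softplus_reference(x: float, beta: float = 1.0, threshold: float = 20.0) -> float:
    """Scalar Softplus with the linear shortcut (illustrative only)."""
    if x * beta > threshold:
        # Above the threshold the exact value differs from x by less than
        # exp(-threshold) / beta, so return x and avoid overflow in exp().
        return x
    return math.log1p(math.exp(beta * x)) / beta


small = softplus_reference(0.0)     # equals log(2)
large = softplus_reference(1000.0)  # linear branch: returns the input unchanged
```

Without the shortcut, `math.exp(1000.0)` would raise `OverflowError`, which is the kind of failure the threshold is meant to avoid.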

Parameters

- beta (float) – the $\beta$ value for the Softplus formulation. Default: 1

- threshold (float) – inputs for which $\text{input} \times \beta$ exceeds this value revert to a linear function. Default: 20
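
To illustrate the two parameters, the following sketch compares `nn.Softplus` with the formula evaluated directly. The input values and the choice of `beta=2.0` and `threshold=5.0` are arbitrary assumptions made for demonstration.

```python
import torch
import torch.nn as nn

x = torch.tensor([-1.0, 0.0, 1.0, 10.0])

# A larger beta gives a sharper curve that follows ReLU more closely.
m = nn.Softplus(beta=2.0, threshold=5.0)
out = m(x)

# Direct evaluation of (1 / beta) * log(1 + exp(beta * x)).
direct = torch.log1p(torch.exp(2.0 * x)) / 2.0

# For x = 10, beta * x = 20 exceeds the threshold of 5, so the module
# returns the input itself instead of evaluating the formula.
passes_through = bool(out[3] == x[3])
matches_formula = torch.allclose(out[:3], direct[:3])
```

Lowering `threshold` widens the range of inputs that take the linear branch; the default of 20 keeps the approximation error negligible in single precision.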

Shape:

- Input: $(*)$, where $*$ means any number of dimensions.

- Output: $(*)$, same shape as the input.

Examples:

    import torch
    import torch.nn as nn

    m = nn.Softplus()
    input = torch.randn(2)
    output = m(input)