SequentialLR

class torch.optim.lr_scheduler.SequentialLR(optimizer, schedulers, milestones, last_epoch=-1)

Contains a list of schedulers expected to be called sequentially during the optimization process.

Specifically, the milestones determine which scheduler is active at a given epoch: the first scheduler runs until the first milestone, the second takes over from that milestone until the next one, and so on.

Parameters

- optimizer (Optimizer) – Wrapped optimizer.

- schedulers (list) – List of chained schedulers.

- milestones (list) – List of integers giving the epochs at which the next scheduler takes over. It must contain one fewer entry than schedulers.

- last_epoch (int) – The index of last epoch. Default: -1.
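The relationship between `schedulers` and `milestones` can be sketched in plain Python. This is only an illustration of the selection rule, not the library's source; the helper name `active_scheduler_index` and the two-milestone list are hypothetical and chosen for the example.

```python
from bisect import bisect_right


def active_scheduler_index(milestones, epoch):
    """Return the position in `schedulers` of the scheduler active at `epoch`."""
    # bisect_right counts how many milestones are <= epoch, so an epoch that
    # equals a milestone already belongs to the next scheduler.
    return bisect_right(milestones, epoch)


# Hypothetical setup: three schedulers separated by two milestones.
milestones = [20, 50]
for epoch in (0, 19, 20, 49, 50, 99):
    print(epoch, active_scheduler_index(milestones, epoch))
```

With N milestones the index ranges from 0 to N, which is why N + 1 schedulers are needed.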

Example

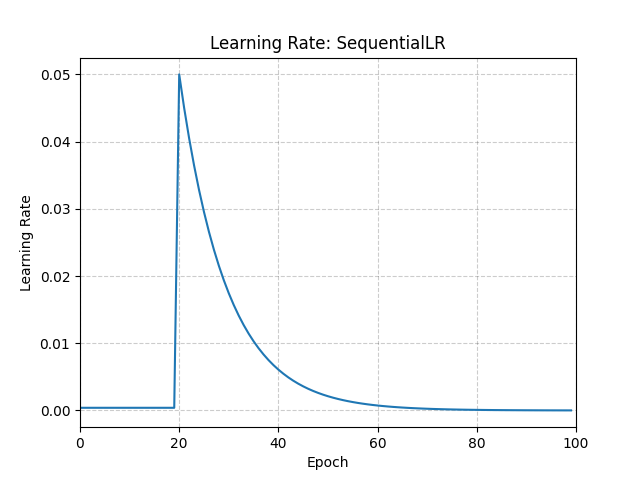

    from torch.optim.lr_scheduler import ConstantLR, ExponentialLR, SequentialLR

    # Assuming optimizer uses lr = 0.05 for all groups
    # lr = 0.005     if epoch == 0
    # lr = 0.005     if epoch == 1
    # lr = 0.005     if epoch == 2
    # ...
    # lr = 0.05      if epoch == 20
    # lr = 0.045     if epoch == 21
    # lr = 0.0405    if epoch == 22
    scheduler1 = ConstantLR(optimizer, factor=0.1, total_iters=20)
    scheduler2 = ExponentialLR(optimizer, gamma=0.9)
    scheduler = SequentialLR(
        optimizer,
        schedulers=[scheduler1, scheduler2],
        milestones=[20],
    )
    for epoch in range(100):
        train(...)
        validate(...)
        scheduler.step()
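The learning rates listed in the example can be checked by hand with a small closed-form sketch. It does not use PyTorch; the function `expected_lr` and its defaults are hypothetical and simply mirror the example's settings (base learning rate 0.05, `factor=0.1`, `total_iters=20`, `gamma=0.9`, milestone 20).

```python
def expected_lr(epoch, base_lr=0.05, factor=0.1, total_iters=20,
                gamma=0.9, milestone=20):
    """Closed-form learning rate for the ConstantLR -> ExponentialLR example."""
    if epoch < milestone:
        # ConstantLR phase: base_lr is scaled by `factor` until total_iters.
        return base_lr * factor if epoch < total_iters else base_lr
    # ExponentialLR phase: starts from base_lr at the milestone and is
    # multiplied by gamma once per epoch afterwards.
    return base_lr * gamma ** (epoch - milestone)


for epoch in (0, 1, 2, 19, 20, 21, 22):
    print(f"epoch {epoch:>2}: lr = {expected_lr(epoch):.4f}")
```

The jump from 0.005 back to 0.05 at epoch 20 reflects the second scheduler starting from the optimizer's initial learning rate rather than from the value the first scheduler left behind.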

get_last_lr()

Return last computed learning rate by current scheduler.

Return type

list[float]

get_lr()

Compute learning rate using chainable form of the scheduler.

Return type

list[float]

load_state_dict(state_dict)

Load the scheduler’s state.

Parameters

- state_dict (dict) – Scheduler state. Should be an object returned from a call to state_dict().

recursive_undo(sched=None)

Recursively undo any step performed by the initialisation of schedulers.

state_dict()

Return the state of the scheduler as a dict.

It contains an entry for every variable in self.__dict__ which is not the optimizer. The wrapped scheduler states will also be saved.

Return type

dict[str, Any]

step()

Perform a step.