Quickstart

This guide will walk you through:

- Defining a task in a simple YAML format

- Provisioning a cluster and running a task

- Using the core SkyPilot CLI commands

Complete the installation instructions before continuing with this guide.

Hello, SkyPilot

Let’s define our very first task, a simple Hello, SkyPilot! program.

Create a directory from anywhere on your machine:

$ mkdir hello-sky
$ cd hello-sky

Copy the following YAML into a hello_sky.yaml file:

resources:
  # Optional; if left out, automatically pick the cheapest cloud.
  cloud: aws
  # 8x NVIDIA A100 GPU
  accelerators: A100:8

# Working directory (optional) containing the project codebase.
# Its contents are synced to ~/sky_workdir/ on the cluster.
workdir: .

# Typical use: pip install -r requirements.txt
# Invoked under the workdir (i.e., can use its files).
setup: |
  echo "Running setup."

# Typical use: make use of resources, such as running training.
# Invoked under the workdir (i.e., can use its files).
run: |
  echo "Hello, SkyPilot!"
  conda env list

This defines a task with the following components:

- resources: cloud resources the task must be run on (e.g., accelerators, instance type, etc.)

- workdir: the working directory containing project code that will be synced to the provisioned instance(s)

- setup: commands that must be run before the task is executed (invoked under workdir)

- run: commands that run the actual task (invoked under workdir)

All these fields are optional.
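The file can also be created directly from the shell. The sketch below writes a trimmed copy of the same task definition with a heredoc; it is only a convenience and assumes the current directory is the hello-sky directory created earlier.

```shell
# Write the task definition from this guide into hello_sky.yaml.
# The quoted 'EOF' delimiter keeps the shell from expanding anything inside.
cat > hello_sky.yaml <<'EOF'
resources:
  # Optional; if left out, automatically pick the cheapest cloud.
  cloud: aws
  # 8x NVIDIA A100 GPU
  accelerators: A100:8

# Working directory (optional) containing the project codebase.
workdir: .

setup: |
  echo "Running setup."

run: |
  echo "Hello, SkyPilot!"
  conda env list
EOF

# Quick sanity check: print the top-level keys that were written.
grep -E '^[a-z]+:' hello_sky.yaml
```

Because every field is optional, any of the top-level sections can be deleted from the heredoc and the file remains a valid task definition.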

To launch a cluster and run a task, use sky launch:

$ sky launch -c mycluster hello_sky.yaml

Tip

The first run may take a few minutes. Feel free to read ahead in this guide.

Tip

You can use the -c flag to give the cluster an easy-to-remember name. If not specified, a name is autogenerated.

If the cluster name is an existing cluster shown in sky status, the cluster will be reused.

The sky launch command does much of the heavy lifting:

- selects an appropriate cloud and VM based on the specified resource constraints;

- provisions (or reuses) a cluster on that cloud;

- syncs up the workdir;

- executes the setup commands; and

- executes the run commands.

In a few minutes, the cluster will finish provisioning and the task will be executed. The outputs will show Hello, SkyPilot! and the list of installed Conda environments.

Execute a task on an existing cluster

Once you have an existing cluster, use sky exec to execute a task on it:

$ sky exec mycluster hello_sky.yaml

The sky exec command is more lightweight; it

- syncs up the workdir (so that the task may use updated code); and

- executes the run commands.

Provisioning and setup commands are skipped.

Bash commands are also supported, such as:

$ sky exec mycluster python train_cpu.py
$ sky exec mycluster --gpus=A100:8 python train_gpu.py

For interactive/monitoring commands, such as htop or gpustat -i, use ssh instead (see below) to avoid job submission overheads.

View all clusters

Use sky status to see all clusters (across regions and clouds) in a single table:

$ sky status

This may show multiple clusters, if you have created several:

NAME       LAUNCHED    RESOURCES                          COMMAND                            STATUS
mygcp      1 day ago   1x GCP(n1-highmem-8)               sky launch -c mygcp --cloud gcp    STOPPED
mycluster  4 mins ago  1x AWS(p4d.24xlarge, {'A100': 8})  sky exec mycluster hello_sky.yaml  UP

See here for a list of all possible cluster states.
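When scripting around this table, it can help to pull out the clusters in a given state. The helper below is a minimal sketch, not part of SkyPilot: clusters_with_status is a hypothetical name, and it assumes the human-readable output is column-aligned with a header row that starts with NAME and contains a STATUS column, as in the example above. The real output may contain extra lines or columns, so treat the parsing as illustrative.

```shell
# Print the names of clusters whose STATUS column equals the given value.
# Reads a column-aligned status table on stdin.
# Usage: sky status | clusters_with_status UP
clusters_with_status() {
  awk -v want="$1" '
    # Header row: remember the character offset where the STATUS column starts.
    /^NAME[ \t]/ { pos = index($0, "STATUS"); next }
    # Data rows: read the first word at that offset and compare it.
    pos > 0 && NF > 0 {
      split(substr($0, pos), f, " ")
      if (f[1] == want) print $1
    }
  '
}
```

Locating the column by its offset in the header, rather than by field number, keeps the helper working even though columns such as LAUNCHED and COMMAND contain spaces.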

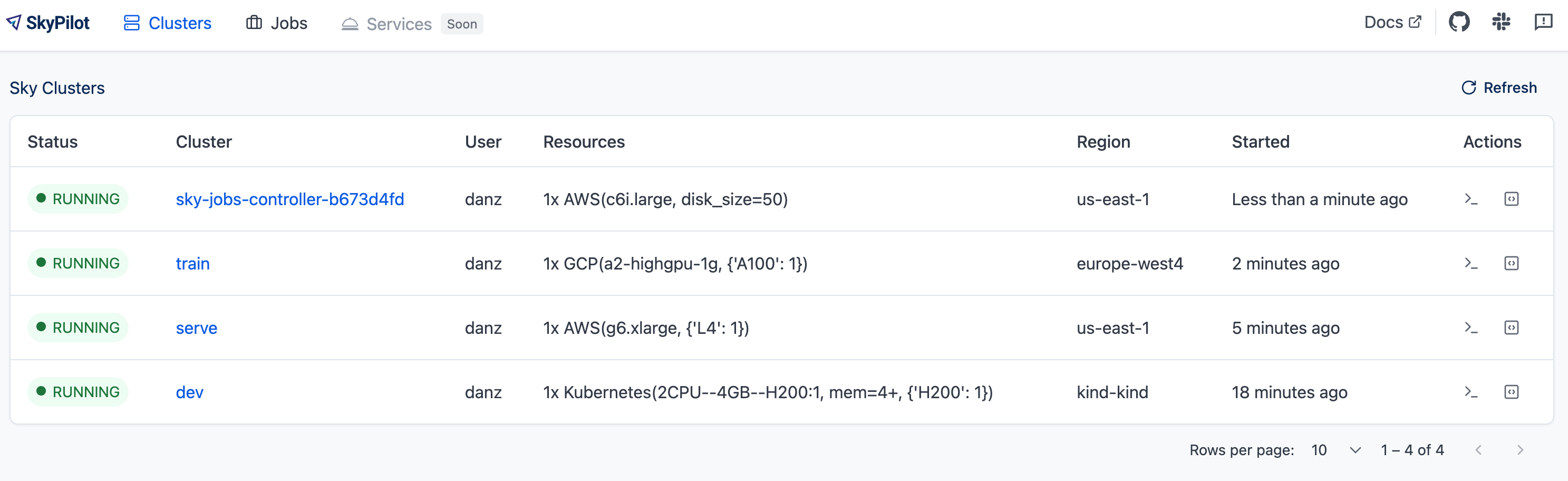

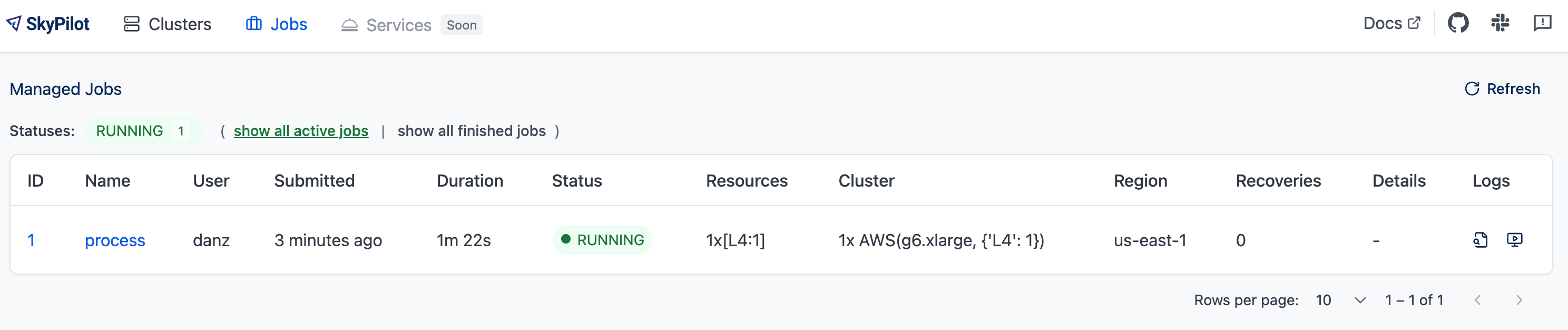

Access the dashboard

SkyPilot offers a dashboard for all clusters and jobs launched with SkyPilot. To open it, run sky dashboard, which automatically opens a browser tab.

If you installed SkyPilot from source, do the following before starting the API server:

- Run the following commands to generate the dashboard build:

# Install all dependencies
$ npm --prefix sky/dashboard install

# Build
$ npm --prefix sky/dashboard run build

- Start the dashboard with sky dashboard.

The clusters page example:

The managed jobs page example:

SSH into clusters

Simply run ssh <cluster_name> to log into a cluster:

$ ssh mycluster

Multi-node clusters work too:

# Assuming 3 nodes.

# Head node.
$ ssh mycluster

# Worker nodes.
$ ssh mycluster-worker1
$ ssh mycluster-worker2

This works because appropriate entries are added to ~/.ssh/config.
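To see what those entries resolve to without reading the config file by hand, OpenSSH can print the effective settings for a host. The following is a minimal sketch, assuming an OpenSSH client recent enough to support ssh -G; ssh_target is a hypothetical helper name, and the selection of fields is only a suggestion.

```shell
# Show the connection details that ssh would use for a host alias,
# as resolved from ~/.ssh/config. No connection is made.
# Usage: ssh_target mycluster
ssh_target() {
  # ssh -G prints one "keyword value" pair per line, with lowercase keywords.
  ssh -G "$1" | awk '
    $1 == "hostname" || $1 == "user" || $1 == "port" || $1 == "identityfile"
  '
}
```

The same check works for worker aliases such as mycluster-worker1.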

Because SkyPilot exposes SSH access to clusters, they can easily be used inside tools such as Visual Studio Code Remote.

Transfer files

After a task’s execution, use rsync or scp to download files (e.g., checkpoints):

$ rsync -Pavz mycluster:/remote/source /local/dest # copy from remote VM

For uploading files to the cluster, see Syncing Code and Artifacts.
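When only some of the remote files are needed (for example, checkpoint files but not logs), rsync's include/exclude filters can narrow the download. The sketch below wraps the rsync command shown above; fetch_matching is a hypothetical helper, and the directory and pattern in the usage comment are placeholders rather than paths SkyPilot creates.

```shell
# Download only files matching a pattern from a directory on the cluster.
# Usage: fetch_matching <cluster> <remote_dir> <pattern> <local_dir>
fetch_matching() {
  local cluster="$1" remote_dir="$2" pattern="$3" local_dir="$4"
  mkdir -p "$local_dir"
  # Filter order matters: descend into every directory, keep files that
  # match the pattern, then exclude everything else.
  rsync -Pavz --prune-empty-dirs \
    --include='*/' --include="$pattern" --exclude='*' \
    "$cluster:$remote_dir/" "$local_dir/"
}

# Example (placeholder paths; a relative remote path is resolved
# against the remote home directory):
#   fetch_matching mycluster sky_workdir/checkpoints '*.pt' ./checkpoints
```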

Stop/terminate a cluster

When you are done, stop the cluster with sky stop:

$ sky stop mycluster

To terminate a cluster instead, run sky down:

$ sky down mycluster

Note

Stopping a cluster preserves data on the attached disks: billing for the instances stops, but the disks are still charged. Those disks will be reattached when restarting the cluster.

Terminating a cluster will delete all associated resources (all billing stops), and any data on the attached disks will be lost. Terminated clusters cannot be restarted.
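The choice between the two commands comes down to whether the disk contents are still needed. As a small illustration, the hypothetical helper below (finish_cluster is not a SkyPilot command) maps that decision onto sky stop and sky down.

```shell
# Stop or terminate a cluster depending on whether its disks are still needed.
# Usage: finish_cluster <cluster_name> keep|discard
finish_cluster() {
  local name="$1" mode="$2"
  case "$mode" in
    keep)
      # Disks are preserved (and still billed); instances stop billing.
      sky stop "$name"
      ;;
    discard)
      # All resources are deleted; the cluster cannot be restarted.
      sky down "$name"
      ;;
    *)
      echo "usage: finish_cluster <cluster_name> keep|discard" >&2
      return 2
      ;;
  esac
}
```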

Find more commands that manage the lifecycle of clusters in the CLI reference.

Scaling out

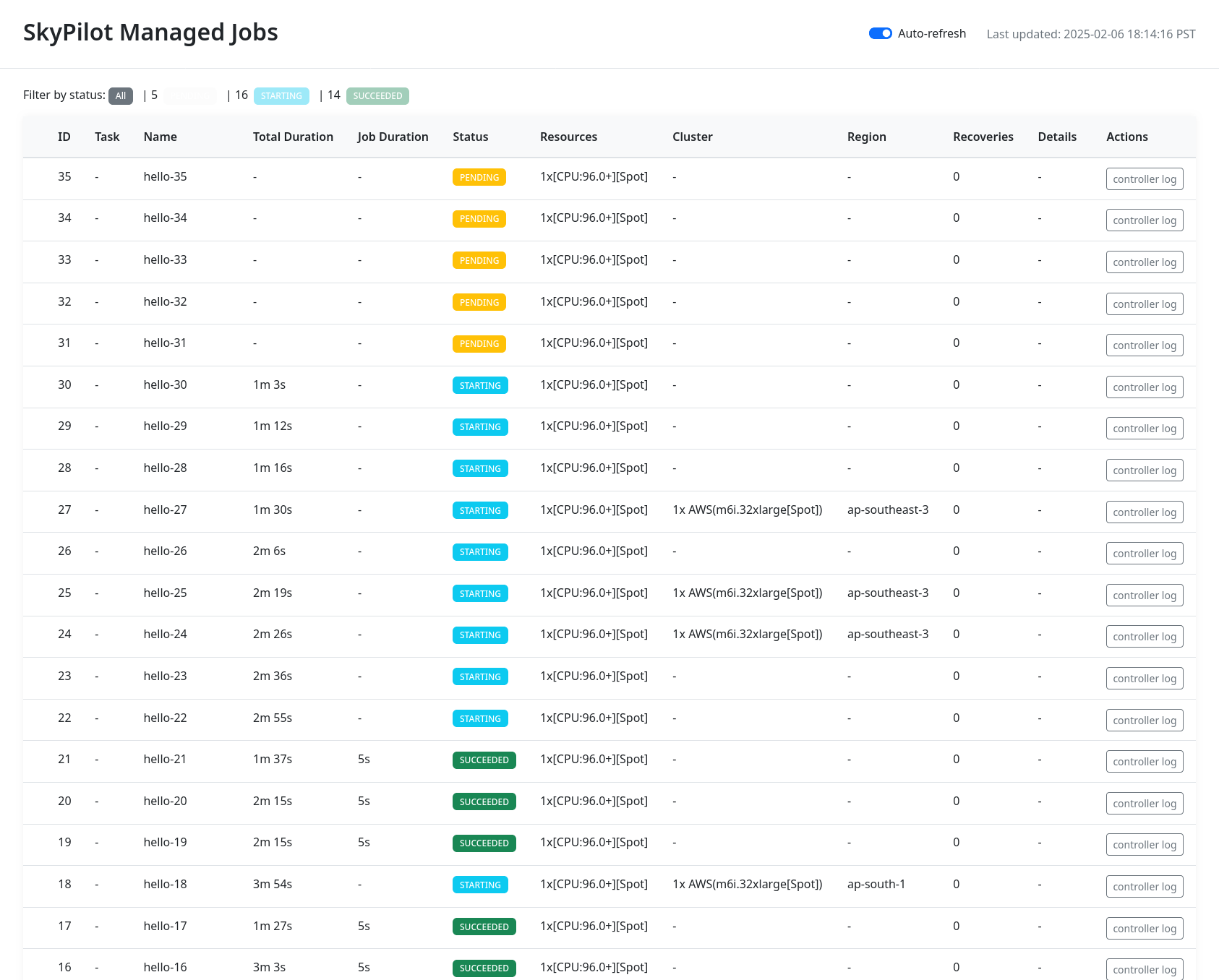

So far, we have used SkyPilot’s CLI to submit work to and interact with a single cluster. When you are ready to scale out (e.g., run 10s, 100s, or 1000s of jobs), use managed jobs to run on auto-managed clusters, or even spot instances.

$ for i in $(seq 100) # launch 100 jobs
    do sky jobs launch --use-spot --detach-run --yes -n hello-$i hello_sky.yaml
  done
...
$ sky jobs dashboard # check the jobs status

SkyPilot can support thousands of managed jobs running at once.
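A batch launched with numbered names can be cleaned up the same way it was created. The sketch below is an assumption-laden counterpart to the launch loop: cancel_named_jobs is a hypothetical helper, and it relies on sky jobs cancel accepting --name and --yes, which is worth confirming with sky jobs cancel --help for your installed version.

```shell
# Cancel managed jobs named <prefix>-1 ... <prefix>-<count>.
# Usage: cancel_named_jobs hello 100
cancel_named_jobs() {
  local prefix="$1" count="$2" i
  for i in $(seq "$count"); do
    # --yes skips the confirmation prompt so the loop can run unattended.
    sky jobs cancel --yes --name "$prefix-$i"
  done
}
```

Running sky jobs queue before and after gives a command-line view of which jobs are still active.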

Asynchronous execution

All SkyPilot CLI commands and APIs are asynchronous requests, i.e., you can interrupt them at any time and let them run in the background. For example, if you interrupt the sky launch command with Ctrl+C, the cluster will keep provisioning in the background:

$ sky launch -c mycluster hello_sky.yaml
^C
⚙︎ Request will continue running asynchronously.
├── View logs: sky api logs 73d316ac
├── Or, visit: http://127.0.0.1:46580/api/stream?request_id=73d316ac
└── To cancel the request, run: sky api cancel 73d316ac

See more details in Asynchronous Execution.
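If the terminal output was captured, the request ID can be recovered from it and passed back to sky api logs or sky api cancel. The helper below is a minimal sketch: extract_request_id is a hypothetical name, and it assumes the hint line has the form shown above, with a lowercase hexadecimal ID.

```shell
# Read captured CLI output on stdin and print the first request ID
# found in a "sky api logs <id>" hint line.
extract_request_id() {
  sed -n 's/.*sky api logs \([0-9a-f][0-9a-f]*\).*/\1/p' | head -n 1
}

# Example, assuming the output was saved to launch.log (a placeholder name):
#   id=$(extract_request_id < launch.log)
#   sky api logs "$id"      # follow the request
#   sky api cancel "$id"    # or cancel it
```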

Next steps

Congratulations! In this quickstart, you have launched a cluster, run a task, and interacted with SkyPilot’s CLI.

Next steps:

- Adapt Tutorial: AI Training to start running your own project on SkyPilot!

- See the Task YAML reference, CLI reference, and more examples.

- Set up SkyPilot for a multi-user team: Team Deployment.

We invite you to explore SkyPilot’s unique features in the rest of the documentation.