Text2Video-Zero (original) (raw)

Text2Video-Zero: Text-to-Image Diffusion Models are Zero-Shot Video Generators is by Levon Khachatryan, Andranik Movsisyan, Vahram Tadevosyan, Roberto Henschel, Zhangyang Wang, Shant Navasardyan, Humphrey Shi.

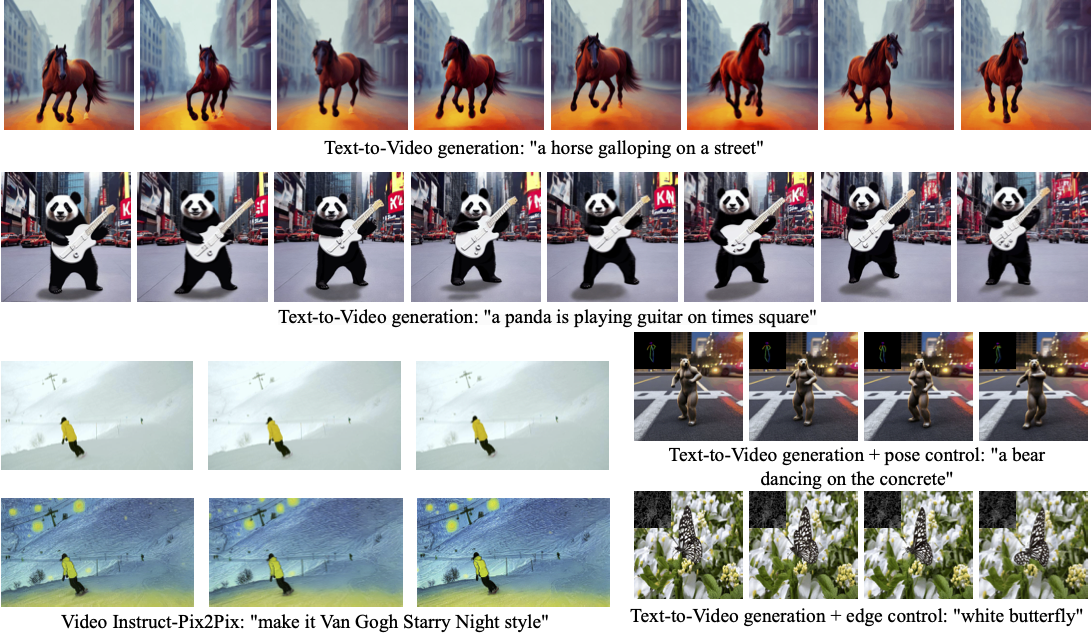

Text2Video-Zero enables zero-shot video generation using either:

- A textual prompt

- A prompt combined with guidance from poses or edges

- Video Instruct-Pix2Pix (instruction-guided video editing)

Results are temporally consistent and closely follow the guidance and textual prompts.

The abstract from the paper is:

Recent text-to-video generation approaches rely on computationally heavy training and require large-scale video datasets. In this paper, we introduce a new task of zero-shot text-to-video generation and propose a low-cost approach (without any training or optimization) by leveraging the power of existing text-to-image synthesis methods (e.g., Stable Diffusion), making them suitable for the video domain. Our key modifications include (i) enriching the latent codes of the generated frames with motion dynamics to keep the global scene and the background time consistent; and (ii) reprogramming frame-level self-attention using a new cross-frame attention of each frame on the first frame, to preserve the context, appearance, and identity of the foreground object. Experiments show that this leads to low overhead, yet high-quality and remarkably consistent video generation. Moreover, our approach is not limited to text-to-video synthesis but is also applicable to other tasks such as conditional and content-specialized video generation, and Video Instruct-Pix2Pix, i.e., instruction-guided video editing. As experiments show, our method performs comparably or sometimes better than recent approaches, despite not being trained on additional video data.

You can find additional information about Text2Video-Zero on the project page, paper, and original codebase.

Usage example

Text-To-Video

To generate a video from prompt, run the following Python code:

import torch from diffusers import TextToVideoZeroPipeline import imageio

model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5" pipe = TextToVideoZeroPipeline.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

prompt = "A panda is playing guitar on times square" result = pipe(prompt=prompt).images result = [(r * 255).astype("uint8") for r in result] imageio.mimsave("video.mp4", result, fps=4)

You can change these parameters in the pipeline call:

- Motion field strength (see the paper, Sect. 3.3.1):

motion_field_strength_xandmotion_field_strength_y. Default:motion_field_strength_x=12,motion_field_strength_y=12

TandT'(see the paper, Sect. 3.3.1)t0andt1in the range{0, ..., num_inference_steps}. Default:t0=45,t1=48

- Video length:

video_length, the number of frames video_length to be generated. Default:video_length=8

We can also generate longer videos by doing the processing in a chunk-by-chunk manner:

import torch from diffusers import TextToVideoZeroPipeline import numpy as np

model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

pipe = TextToVideoZeroPipeline.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

seed = 0

video_length = 24

chunk_size = 8

prompt = "A panda is playing guitar on times square"

result = [] chunk_ids = np.arange(0, video_length, chunk_size - 1) generator = torch.Generator(device="cuda") for i in range(len(chunk_ids)): print(f"Processing chunk {i + 1} / {len(chunk_ids)}") ch_start = chunk_ids[i] ch_end = video_length if i == len(chunk_ids) - 1 else chunk_ids[i + 1]

frame_ids = [0] + list(range(ch_start, ch_end))

generator.manual_seed(seed)

output = pipe(prompt=prompt, video_length=len(frame_ids), generator=generator, frame_ids=frame_ids)

result.append(output.images[1:])result = np.concatenate(result) result = [(r * 255).astype("uint8") for r in result] imageio.mimsave("video.mp4", result, fps=4)

SDXL Support

In order to use the SDXL model when generating a video from prompt, use the TextToVideoZeroSDXLPipeline pipeline:

import torch from diffusers import TextToVideoZeroSDXLPipeline

model_id = "stabilityai/stable-diffusion-xl-base-1.0" pipe = TextToVideoZeroSDXLPipeline.from_pretrained( model_id, torch_dtype=torch.float16, variant="fp16", use_safetensors=True ).to("cuda")

Text-To-Video with Pose Control

To generate a video from prompt with additional pose control

- Download a demo video

from huggingface_hub import hf_hub_download

filename = "assets/poses_skeleton_gifs/dance1_corr.mp4"

repo_id = "PAIR/Text2Video-Zero"

video_path = hf_hub_download(repo_type="space", repo_id=repo_id, filename=filename) - Read video containing extracted pose images

from PIL import Image

import imageio

reader = imageio.get_reader(video_path, "ffmpeg")

frame_count = 8

pose_images = [Image.fromarray(reader.get_data(i)) for i in range(frame_count)]

To extract pose from actual video, read ControlNet documentation. - Run

StableDiffusionControlNetPipelinewith our custom attention processor

import torch

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

from diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-openpose", torch_dtype=torch.float16)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

model_id, controlnet=controlnet, torch_dtype=torch.float16

).to("cuda")

pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

pipe.controlnet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

latents = torch.randn((1, 4, 64, 64), device="cuda", dtype=torch.float16).repeat(len(pose_images), 1, 1, 1)

prompt = "Darth Vader dancing in a desert"

result = pipe(prompt=[prompt] * len(pose_images), image=pose_images, latents=latents).images

imageio.mimsave("video.mp4", result, fps=4)

SDXL Support

Since our attention processor also works with SDXL, it can be utilized to generate a video from prompt using ControlNet models powered by SDXL:

import torch

from diffusers import StableDiffusionXLControlNetPipeline, ControlNetModel

from diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

controlnet_model_id = 'thibaud/controlnet-openpose-sdxl-1.0'

model_id = 'stabilityai/stable-diffusion-xl-base-1.0'

controlnet = ControlNetModel.from_pretrained(controlnet_model_id, torch_dtype=torch.float16)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

model_id, controlnet=controlnet, torch_dtype=torch.float16

).to('cuda')

pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

pipe.controlnet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

latents = torch.randn((1, 4, 128, 128), device="cuda", dtype=torch.float16).repeat(len(pose_images), 1, 1, 1)

prompt = "Darth Vader dancing in a desert"

result = pipe(prompt=[prompt] * len(pose_images), image=pose_images, latents=latents).images

imageio.mimsave("video.mp4", result, fps=4)

Text-To-Video with Edge Control

To generate a video from prompt with additional Canny edge control, follow the same steps described above for pose-guided generation using Canny edge ControlNet model.

Video Instruct-Pix2Pix

To perform text-guided video editing (with InstructPix2Pix):

- Download a demo video

from huggingface_hub import hf_hub_download

filename = "assets/pix2pix video/camel.mp4"

repo_id = "PAIR/Text2Video-Zero"

video_path = hf_hub_download(repo_type="space", repo_id=repo_id, filename=filename) - Read video from path

from PIL import Image

import imageio

reader = imageio.get_reader(video_path, "ffmpeg")

frame_count = 8

video = [Image.fromarray(reader.get_data(i)) for i in range(frame_count)] - Run

StableDiffusionInstructPix2PixPipelinewith our custom attention processor

import torch

from diffusers import StableDiffusionInstructPix2PixPipeline

from diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

model_id = "timbrooks/instruct-pix2pix"

pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=3))

prompt = "make it Van Gogh Starry Night style"

result = pipe(prompt=[prompt] * len(video), image=video).images

imageio.mimsave("edited_video.mp4", result, fps=4)

DreamBooth specialization

Methods Text-To-Video, Text-To-Video with Pose Control and Text-To-Video with Edge Controlcan run with custom DreamBooth models, as shown below forCanny edge ControlNet model andAvatar style DreamBooth model:

- Download a demo video

from huggingface_hub import hf_hub_download

filename = "assets/canny_videos_mp4/girl_turning.mp4"

repo_id = "PAIR/Text2Video-Zero"

video_path = hf_hub_download(repo_type="space", repo_id=repo_id, filename=filename) - Read video from path

from PIL import Image

import imageio

reader = imageio.get_reader(video_path, "ffmpeg")

frame_count = 8

canny_edges = [Image.fromarray(reader.get_data(i)) for i in range(frame_count)] - Run

StableDiffusionControlNetPipelinewith custom trained DreamBooth model

import torch

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

from diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

model_id = "PAIR/text2video-zero-controlnet-canny-avatar"

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

model_id, controlnet=controlnet, torch_dtype=torch.float16

).to("cuda")

pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

pipe.controlnet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

latents = torch.randn((1, 4, 64, 64), device="cuda", dtype=torch.float16).repeat(len(canny_edges), 1, 1, 1)

prompt = "oil painting of a beautiful girl avatar style"

result = pipe(prompt=[prompt] * len(canny_edges), image=canny_edges, latents=latents).images

imageio.mimsave("video.mp4", result, fps=4)

You can filter out some available DreamBooth-trained models with this link.

Make sure to check out the Schedulers guide to learn how to explore the tradeoff between scheduler speed and quality, and see the reuse components across pipelines section to learn how to efficiently load the same components into multiple pipelines.

TextToVideoZeroPipeline

class diffusers.TextToVideoZeroPipeline

( vae: AutoencoderKL text_encoder: CLIPTextModel tokenizer: CLIPTokenizer unet: UNet2DConditionModel scheduler: KarrasDiffusionSchedulers safety_checker: StableDiffusionSafetyChecker feature_extractor: CLIPImageProcessor requires_safety_checker: bool = True )

__call__

( prompt: typing.Union[str, typing.List[str]] video_length: typing.Optional[int] = 8 height: typing.Optional[int] = None width: typing.Optional[int] = None num_inference_steps: int = 50 guidance_scale: float = 7.5 negative_prompt: typing.Union[str, typing.List[str], NoneType] = None num_videos_per_prompt: typing.Optional[int] = 1 eta: float = 0.0 generator: typing.Union[torch._C.Generator, typing.List[torch._C.Generator], NoneType] = None latents: typing.Optional[torch.Tensor] = None motion_field_strength_x: float = 12 motion_field_strength_y: float = 12 output_type: typing.Optional[str] = 'tensor' return_dict: bool = True callback: typing.Optional[typing.Callable[[int, int, torch.Tensor], NoneType]] = None callback_steps: typing.Optional[int] = 1 t0: int = 44 t1: int = 47 frame_ids: typing.Optional[typing.List[int]] = None ) → TextToVideoPipelineOutput

Parameters

- prompt (

strorList[str], optional) — The prompt or prompts to guide image generation. If not defined, you need to passprompt_embeds. - video_length (

int, optional, defaults to 8) — The number of generated video frames. - height (

int, optional, defaults toself.unet.config.sample_size * self.vae_scale_factor) — The height in pixels of the generated image. - width (

int, optional, defaults toself.unet.config.sample_size * self.vae_scale_factor) — The width in pixels of the generated image. - num_inference_steps (

int, optional, defaults to 50) — The number of denoising steps. More denoising steps usually lead to a higher quality image at the expense of slower inference. - guidance_scale (

float, optional, defaults to 7.5) — A higher guidance scale value encourages the model to generate images closely linked to the textpromptat the expense of lower image quality. Guidance scale is enabled whenguidance_scale > 1. - negative_prompt (

strorList[str], optional) — The prompt or prompts to guide what to not include in video generation. If not defined, you need to passnegative_prompt_embedsinstead. Ignored when not using guidance (guidance_scale < 1). - num_videos_per_prompt (

int, optional, defaults to 1) — The number of videos to generate per prompt. - eta (

float, optional, defaults to 0.0) — Corresponds to parameter eta (η) from the DDIM paper. Only applies to the DDIMScheduler, and is ignored in other schedulers. - generator (

torch.GeneratororList[torch.Generator], optional) — A torch.Generator to make generation deterministic. - latents (

torch.Tensor, optional) — Pre-generated noisy latents sampled from a Gaussian distribution, to be used as inputs for video generation. Can be used to tweak the same generation with different prompts. If not provided, a latents tensor is generated by sampling using the supplied randomgenerator. - output_type (

str, optional, defaults to"np") — The output format of the generated video. Choose between"latent"and"np". - return_dict (

bool, optional, defaults toTrue) — Whether or not to return aTextToVideoPipelineOutput instead of a plain tuple. - callback (

Callable, optional) — A function that calls everycallback_stepssteps during inference. The function is called with the following arguments:callback(step: int, timestep: int, latents: torch.Tensor). - callback_steps (

int, optional, defaults to 1) — The frequency at which thecallbackfunction is called. If not specified, the callback is called at every step. - motion_field_strength_x (

float, optional, defaults to 12) — Strength of motion in generated video along x-axis. See thepaper, Sect. 3.3.1. - motion_field_strength_y (

float, optional, defaults to 12) — Strength of motion in generated video along y-axis. See thepaper, Sect. 3.3.1. - t0 (

int, optional, defaults to 44) — Timestep t0. Should be in the range [0, num_inference_steps - 1]. See thepaper, Sect. 3.3.1. - t1 (

int, optional, defaults to 47) — Timestep t0. Should be in the range [t0 + 1, num_inference_steps - 1]. See thepaper, Sect. 3.3.1. - frame_ids (

List[int], optional) — Indexes of the frames that are being generated. This is used when generating longer videos chunk-by-chunk.

The output contains a ndarray of the generated video, when output_type != "latent", otherwise a latent code of generated videos and a list of bools indicating whether the corresponding generated video contains “not-safe-for-work” (nsfw) content..

The call function to the pipeline for generation.

backward_loop

( latents timesteps prompt_embeds guidance_scale callback callback_steps num_warmup_steps extra_step_kwargs cross_attention_kwargs = None ) → latents

Parameters

- latents — Latents at time timesteps[0].

- timesteps — Time steps along which to perform backward process.

- prompt_embeds — Pre-generated text embeddings.

- guidance_scale — A higher guidance scale value encourages the model to generate images closely linked to the text

promptat the expense of lower image quality. Guidance scale is enabled whenguidance_scale > 1. - callback (

Callable, optional) — A function that calls everycallback_stepssteps during inference. The function is called with the following arguments:callback(step: int, timestep: int, latents: torch.Tensor). - callback_steps (

int, optional, defaults to 1) — The frequency at which thecallbackfunction is called. If not specified, the callback is called at every step. - extra_step_kwargs — Extra_step_kwargs.

- cross_attention_kwargs — A kwargs dictionary that if specified is passed along to the

AttentionProcessoras defined inself.processor. - num_warmup_steps — number of warmup steps.

Latents of backward process output at time timesteps[-1].

Perform backward process given list of time steps.

encode_prompt

( prompt device num_images_per_prompt do_classifier_free_guidance negative_prompt = None prompt_embeds: typing.Optional[torch.Tensor] = None negative_prompt_embeds: typing.Optional[torch.Tensor] = None lora_scale: typing.Optional[float] = None clip_skip: typing.Optional[int] = None )

Parameters

- prompt (

strorList[str], optional) — prompt to be encoded - device — (

torch.device): torch device - num_images_per_prompt (

int) — number of images that should be generated per prompt - do_classifier_free_guidance (

bool) — whether to use classifier free guidance or not - negative_prompt (

strorList[str], optional) — The prompt or prompts not to guide the image generation. If not defined, one has to passnegative_prompt_embedsinstead. Ignored when not using guidance (i.e., ignored ifguidance_scaleis less than1). - prompt_embeds (

torch.Tensor, optional) — Pre-generated text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, text embeddings will be generated frompromptinput argument. - negative_prompt_embeds (

torch.Tensor, optional) — Pre-generated negative text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, negative_prompt_embeds will be generated fromnegative_promptinput argument. - lora_scale (

float, optional) — A LoRA scale that will be applied to all LoRA layers of the text encoder if LoRA layers are loaded. - clip_skip (

int, optional) — Number of layers to be skipped from CLIP while computing the prompt embeddings. A value of 1 means that the output of the pre-final layer will be used for computing the prompt embeddings.

Encodes the prompt into text encoder hidden states.

forward_loop

( x_t0 t0 t1 generator ) → x_t1

Parameters

- x_t0 — Latent code at time t0.

- t0 — Timestep at t0.

- t1 — Timestamp at t1.

- generator (

torch.GeneratororList[torch.Generator], optional) — A torch.Generator to make generation deterministic.

Forward process applied to x_t0 from time t0 to t1.

Perform DDPM forward process from time t0 to t1. This is the same as adding noise with corresponding variance.

TextToVideoZeroSDXLPipeline

class diffusers.TextToVideoZeroSDXLPipeline

( vae: AutoencoderKL text_encoder: CLIPTextModel text_encoder_2: CLIPTextModelWithProjection tokenizer: CLIPTokenizer tokenizer_2: CLIPTokenizer unet: UNet2DConditionModel scheduler: KarrasDiffusionSchedulers image_encoder: CLIPVisionModelWithProjection = None feature_extractor: CLIPImageProcessor = None force_zeros_for_empty_prompt: bool = True add_watermarker: typing.Optional[bool] = None )

__call__

( prompt: typing.Union[str, typing.List[str]] prompt_2: typing.Union[str, typing.List[str], NoneType] = None video_length: typing.Optional[int] = 8 height: typing.Optional[int] = None width: typing.Optional[int] = None num_inference_steps: int = 50 denoising_end: typing.Optional[float] = None guidance_scale: float = 7.5 negative_prompt: typing.Union[str, typing.List[str], NoneType] = None negative_prompt_2: typing.Union[str, typing.List[str], NoneType] = None num_videos_per_prompt: typing.Optional[int] = 1 eta: float = 0.0 generator: typing.Union[torch._C.Generator, typing.List[torch._C.Generator], NoneType] = None frame_ids: typing.Optional[typing.List[int]] = None prompt_embeds: typing.Optional[torch.Tensor] = None negative_prompt_embeds: typing.Optional[torch.Tensor] = None pooled_prompt_embeds: typing.Optional[torch.Tensor] = None negative_pooled_prompt_embeds: typing.Optional[torch.Tensor] = None latents: typing.Optional[torch.Tensor] = None motion_field_strength_x: float = 12 motion_field_strength_y: float = 12 output_type: typing.Optional[str] = 'tensor' return_dict: bool = True callback: typing.Optional[typing.Callable[[int, int, torch.Tensor], NoneType]] = None callback_steps: int = 1 cross_attention_kwargs: typing.Optional[typing.Dict[str, typing.Any]] = None guidance_rescale: float = 0.0 original_size: typing.Optional[typing.Tuple[int, int]] = None crops_coords_top_left: typing.Tuple[int, int] = (0, 0) target_size: typing.Optional[typing.Tuple[int, int]] = None t0: int = 44 t1: int = 47 )

Parameters

- prompt (

strorList[str], optional) — The prompt or prompts to guide the image generation. If not defined, one has to passprompt_embeds. instead. - prompt_2 (

strorList[str], optional) — The prompt or prompts to be sent to thetokenizer_2andtext_encoder_2. If not defined,promptis used in both text-encoders - video_length (

int, optional, defaults to 8) — The number of generated video frames. - height (

int, optional, defaults to self.unet.config.sample_size * self.vae_scale_factor) — The height in pixels of the generated image. - width (

int, optional, defaults to self.unet.config.sample_size * self.vae_scale_factor) — The width in pixels of the generated image. - num_inference_steps (

int, optional, defaults to 50) — The number of denoising steps. More denoising steps usually lead to a higher quality image at the expense of slower inference. - denoising_end (

float, optional) — When specified, determines the fraction (between 0.0 and 1.0) of the total denoising process to be completed before it is intentionally prematurely terminated. As a result, the returned sample will still retain a substantial amount of noise as determined by the discrete timesteps selected by the scheduler. The denoising_end parameter should ideally be utilized when this pipeline forms a part of a “Mixture of Denoisers” multi-pipeline setup, as elaborated in Refining the Image Output - guidance_scale (

float, optional, defaults to 7.5) — Guidance scale as defined in Classifier-Free Diffusion Guidance.guidance_scaleis defined aswof equation 2. of Imagen Paper. Guidance scale is enabled by settingguidance_scale > 1. Higher guidance scale encourages to generate images that are closely linked to the textprompt, usually at the expense of lower image quality. - negative_prompt (

strorList[str], optional) — The prompt or prompts not to guide the image generation. If not defined, one has to passnegative_prompt_embedsinstead. Ignored when not using guidance (i.e., ignored ifguidance_scaleis less than1). - negative_prompt_2 (

strorList[str], optional) — The prompt or prompts not to guide the image generation to be sent totokenizer_2andtext_encoder_2. If not defined,negative_promptis used in both text-encoders - num_videos_per_prompt (

int, optional, defaults to 1) — The number of videos to generate per prompt. - eta (

float, optional, defaults to 0.0) — Corresponds to parameter eta (η) in the DDIM paper: https://huggingface.co/papers/2010.02502. Only applies to schedulers.DDIMScheduler, will be ignored for others. - generator (

torch.GeneratororList[torch.Generator], optional) — One or a list of torch generator(s)to make generation deterministic. - frame_ids (

List[int], optional) — Indexes of the frames that are being generated. This is used when generating longer videos chunk-by-chunk. - prompt_embeds (

torch.Tensor, optional) — Pre-generated text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, text embeddings will be generated frompromptinput argument. - negative_prompt_embeds (

torch.Tensor, optional) — Pre-generated negative text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, negative_prompt_embeds will be generated fromnegative_promptinput argument. - pooled_prompt_embeds (

torch.Tensor, optional) — Pre-generated pooled text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, pooled text embeddings will be generated frompromptinput argument. - negative_pooled_prompt_embeds (

torch.Tensor, optional) — Pre-generated negative pooled text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, pooled negative_prompt_embeds will be generated fromnegative_promptinput argument. - latents (

torch.Tensor, optional) — Pre-generated noisy latents, sampled from a Gaussian distribution, to be used as inputs for image generation. Can be used to tweak the same generation with different prompts. If not provided, a latents tensor will ge generated by sampling using the supplied randomgenerator. - motion_field_strength_x (

float, optional, defaults to 12) — Strength of motion in generated video along x-axis. See thepaper, Sect. 3.3.1. - motion_field_strength_y (

float, optional, defaults to 12) — Strength of motion in generated video along y-axis. See thepaper, Sect. 3.3.1. - output_type (

str, optional, defaults to"pil") — The output format of the generate image. Choose betweenPIL:PIL.Image.Imageornp.array. - return_dict (

bool, optional, defaults toTrue) — Whether or not to return a~pipelines.stable_diffusion_xl.StableDiffusionXLPipelineOutputinstead of a plain tuple. - callback (

Callable, optional) — A function that will be called everycallback_stepssteps during inference. The function will be called with the following arguments:callback(step: int, timestep: int, latents: torch.Tensor). - callback_steps (

int, optional, defaults to 1) — The frequency at which thecallbackfunction will be called. If not specified, the callback will be called at every step. - cross_attention_kwargs (

dict, optional) — A kwargs dictionary that if specified is passed along to theAttentionProcessoras defined underself.processorindiffusers.cross_attention. - guidance_rescale (

float, optional, defaults to 0.7) — Guidance rescale factor proposed by Common Diffusion Noise Schedules and Sample Steps are Flawedguidance_scaleis defined asφin equation 16. ofCommon Diffusion Noise Schedules and Sample Steps are Flawed. Guidance rescale factor should fix overexposure when using zero terminal SNR. - original_size (

Tuple[int], optional, defaults to (1024, 1024)) — Iforiginal_sizeis not the same astarget_sizethe image will appear to be down- or upsampled.original_sizedefaults to(width, height)if not specified. Part of SDXL’s micro-conditioning as explained in section 2.2 ofhttps://huggingface.co/papers/2307.01952. - crops_coords_top_left (

Tuple[int], optional, defaults to (0, 0)) —crops_coords_top_leftcan be used to generate an image that appears to be “cropped” from the positioncrops_coords_top_leftdownwards. Favorable, well-centered images are usually achieved by settingcrops_coords_top_leftto (0, 0). Part of SDXL’s micro-conditioning as explained in section 2.2 ofhttps://huggingface.co/papers/2307.01952. - target_size (

Tuple[int], optional, defaults to (1024, 1024)) — For most cases,target_sizeshould be set to the desired height and width of the generated image. If not specified it will default to(width, height). Part of SDXL’s micro-conditioning as explained in section 2.2 of https://huggingface.co/papers/2307.01952. - t0 (

int, optional, defaults to 44) — Timestep t0. Should be in the range [0, num_inference_steps - 1]. See thepaper, Sect. 3.3.1. - t1 (

int, optional, defaults to 47) — Timestep t0. Should be in the range [t0 + 1, num_inference_steps - 1]. See thepaper, Sect. 3.3.1.

Function invoked when calling the pipeline for generation.

backward_loop

( latents timesteps prompt_embeds guidance_scale callback callback_steps num_warmup_steps extra_step_kwargs add_text_embeds add_time_ids cross_attention_kwargs = None guidance_rescale: float = 0.0 ) → latents

Parameters

- latents — Latents at time timesteps[0].

- timesteps — Time steps along which to perform backward process.

- prompt_embeds — Pre-generated text embeddings.

- guidance_scale — A higher guidance scale value encourages the model to generate images closely linked to the text

promptat the expense of lower image quality. Guidance scale is enabled whenguidance_scale > 1. - callback (

Callable, optional) — A function that calls everycallback_stepssteps during inference. The function is called with the following arguments:callback(step: int, timestep: int, latents: torch.Tensor). - callback_steps (

int, optional, defaults to 1) — The frequency at which thecallbackfunction is called. If not specified, the callback is called at every step. - extra_step_kwargs — Extra_step_kwargs.

- cross_attention_kwargs — A kwargs dictionary that if specified is passed along to the

AttentionProcessoras defined inself.processor. - num_warmup_steps — number of warmup steps.

latents of backward process output at time timesteps[-1]

Perform backward process given list of time steps

encode_prompt

( prompt: str prompt_2: typing.Optional[str] = None device: typing.Optional[torch.device] = None num_images_per_prompt: int = 1 do_classifier_free_guidance: bool = True negative_prompt: typing.Optional[str] = None negative_prompt_2: typing.Optional[str] = None prompt_embeds: typing.Optional[torch.Tensor] = None negative_prompt_embeds: typing.Optional[torch.Tensor] = None pooled_prompt_embeds: typing.Optional[torch.Tensor] = None negative_pooled_prompt_embeds: typing.Optional[torch.Tensor] = None lora_scale: typing.Optional[float] = None clip_skip: typing.Optional[int] = None )

Parameters

- prompt (

strorList[str], optional) — prompt to be encoded - prompt_2 (

strorList[str], optional) — The prompt or prompts to be sent to thetokenizer_2andtext_encoder_2. If not defined,promptis used in both text-encoders - device — (

torch.device): torch device - num_images_per_prompt (

int) — number of images that should be generated per prompt - do_classifier_free_guidance (

bool) — whether to use classifier free guidance or not - negative_prompt (

strorList[str], optional) — The prompt or prompts not to guide the image generation. If not defined, one has to passnegative_prompt_embedsinstead. Ignored when not using guidance (i.e., ignored ifguidance_scaleis less than1). - negative_prompt_2 (

strorList[str], optional) — The prompt or prompts not to guide the image generation to be sent totokenizer_2andtext_encoder_2. If not defined,negative_promptis used in both text-encoders - prompt_embeds (

torch.Tensor, optional) — Pre-generated text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, text embeddings will be generated frompromptinput argument. - negative_prompt_embeds (

torch.Tensor, optional) — Pre-generated negative text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, negative_prompt_embeds will be generated fromnegative_promptinput argument. - pooled_prompt_embeds (

torch.Tensor, optional) — Pre-generated pooled text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, pooled text embeddings will be generated frompromptinput argument. - negative_pooled_prompt_embeds (

torch.Tensor, optional) — Pre-generated negative pooled text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, pooled negative_prompt_embeds will be generated fromnegative_promptinput argument. - lora_scale (

float, optional) — A lora scale that will be applied to all LoRA layers of the text encoder if LoRA layers are loaded. - clip_skip (

int, optional) — Number of layers to be skipped from CLIP while computing the prompt embeddings. A value of 1 means that the output of the pre-final layer will be used for computing the prompt embeddings.

Encodes the prompt into text encoder hidden states.

forward_loop

( x_t0 t0 t1 generator ) → x_t1

Parameters

- x_t0 — Latent code at time t0.

- t0 — Timestep at t0.

- t1 — Timestamp at t1.

- generator (

torch.GeneratororList[torch.Generator], optional) — A torch.Generator to make generation deterministic.

Forward process applied to x_t0 from time t0 to t1.

Perform DDPM forward process from time t0 to t1. This is the same as adding noise with corresponding variance.

TextToVideoPipelineOutput

class diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero.TextToVideoPipelineOutput

( images: typing.Union[typing.List[PIL.Image.Image], numpy.ndarray] nsfw_content_detected: typing.Optional[typing.List[bool]] )

Parameters

- images (

[List[PIL.Image.Image],np.ndarray]) — List of denoised PIL images of lengthbatch_sizeor NumPy array of shape(batch_size, height, width, num_channels). - nsfw_content_detected (

[List[bool]]) — List indicating whether the corresponding generated image contains “not-safe-for-work” (nsfw) content orNoneif safety checking could not be performed.

Output class for zero-shot text-to-video pipeline.