Aligning object templates to a point cloud — Point Cloud Library 0.0 documentation (original) (raw)

This tutorial gives an example of how some of the tools covered in the other tutorials can be combined to solve a higher level problem — aligning a previously captured model of an object to some newly captured data. In this specific example, we’ll take a depth image that contains a person and try to fit some previously captured templates of their face; this will allow us to determine the position and orientation of the face in the scene.

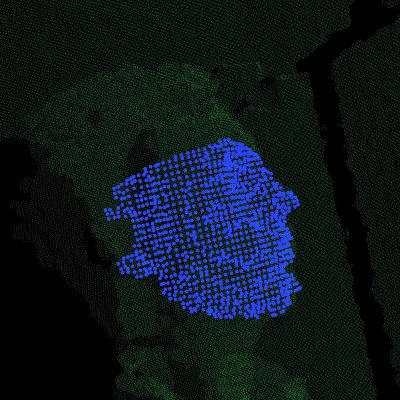

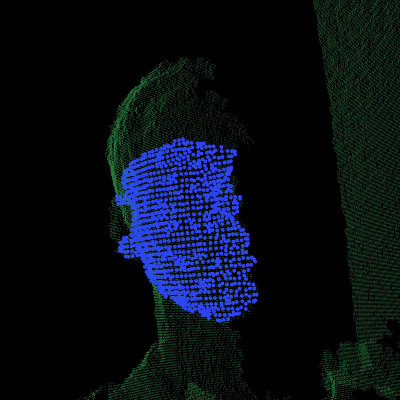

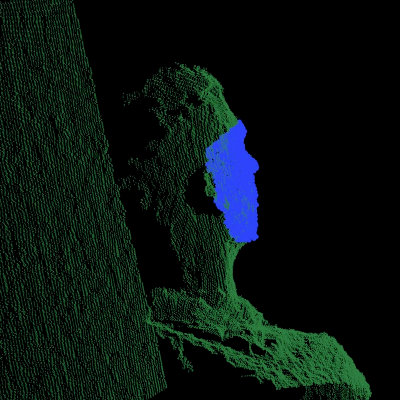

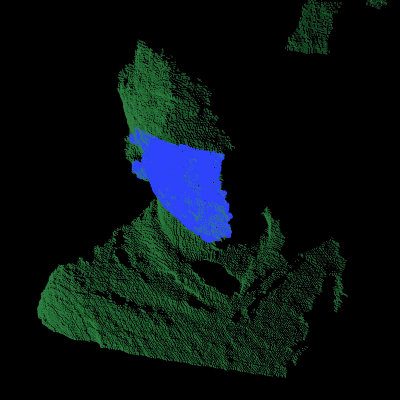

We can use the code below to fit a template of a person’s face (the blue points) to a new point cloud (the green points).

The code

First, download the datasets from github.com/PointCloudLibrary/data/tree/master/tutorials/template_alignment/and extract the files.

Next, copy and paste the following code into your editor and save it as template_alignment.cpp (or download the source file here).

1#include 2#include 3#include 4#include <Eigen/Core> 5#include <pcl/memory.h> 6#include <pcl/pcl_macros.h> 7#include <pcl/point_types.h> 8#include <pcl/point_cloud.h> 9#include <pcl/io/pcd_io.h> 10#include <pcl/kdtree/kdtree_flann.h> 11#include <pcl/filters/passthrough.h> 12#include <pcl/filters/voxel_grid.h> 13#include <pcl/features/normal_3d.h> 14#include <pcl/features/fpfh.h> 15#include <pcl/registration/ia_ransac.h> 16 17class FeatureCloud 18{ 19 public: 20 // A bit of shorthand 21 typedef pcl::PointCloudpcl::PointXYZ PointCloud; 22 typedef pcl::PointCloudpcl::Normal SurfaceNormals; 23 typedef pcl::PointCloudpcl::FPFHSignature33 LocalFeatures; 24 typedef pcl::search::KdTreepcl::PointXYZ SearchMethod; 25 26 FeatureCloud () : 27 search_method_xyz_ (new SearchMethod), 28 normal_radius_ (0.02f), 29 feature_radius_ (0.02f) 30 {} 31 32 // Process the given cloud 33 void 34 setInputCloud (PointCloud::Ptr xyz) 35 { 36 xyz_ = xyz; 37 processInput (); 38 } 39 40 // Load and process the cloud in the given PCD file 41 void 42 loadInputCloud (const std::string &pcd_file) 43 { 44 xyz_ = PointCloud::Ptr (new PointCloud); 45 pcl::io::loadPCDFile (pcd_file, *xyz_); 46 processInput (); 47 } 48 49 // Get a pointer to the cloud 3D points 50 PointCloud::Ptr 51 getPointCloud () const 52 { 53 return (xyz_); 54 } 55 56 // Get a pointer to the cloud of 3D surface normals 57 SurfaceNormals::Ptr 58 getSurfaceNormals () const 59 { 60 return (normals_); 61 } 62 63 // Get a pointer to the cloud of feature descriptors 64 LocalFeatures::Ptr 65 getLocalFeatures () const 66 { 67 return (features_); 68 } 69 70 protected: 71 // Compute the surface normals and local features 72 void 73 processInput () 74 { 75 computeSurfaceNormals (); 76 computeLocalFeatures (); 77 } 78 79 // Compute the surface normals 80 void 81 computeSurfaceNormals () 82 { 83 normals_ = SurfaceNormals::Ptr (new SurfaceNormals); 84 85 pcl::NormalEstimation<pcl::PointXYZ, pcl::Normal> norm_est; 86 norm_est.setInputCloud (xyz_); 87 norm_est.setSearchMethod (search_method_xyz_); 88 norm_est.setRadiusSearch (normal_radius_); 89 norm_est.compute (*normals_); 90 } 91 92 // Compute the local feature descriptors 93 void 94 computeLocalFeatures () 95 { 96 features_ = LocalFeatures::Ptr (new LocalFeatures); 97 98 pcl::FPFHEstimation<pcl::PointXYZ, pcl::Normal, pcl::FPFHSignature33> fpfh_est; 99 fpfh_est.setInputCloud (xyz_); 100 fpfh_est.setInputNormals (normals_); 101 fpfh_est.setSearchMethod (search_method_xyz_); 102 fpfh_est.setRadiusSearch (feature_radius_); 103 fpfh_est.compute (features_); 104 } 105 106 private: 107 // Point cloud data 108 PointCloud::Ptr xyz_; 109 SurfaceNormals::Ptr normals_; 110 LocalFeatures::Ptr features_; 111 SearchMethod::Ptr search_method_xyz_; 112 113 // Parameters 114 float normal_radius_; 115 float feature_radius_; 116}; 117 118class TemplateAlignment 119{ 120 public: 121 122 // A struct for storing alignment results 123 struct Result 124 { 125 float fitness_score; 126 Eigen::Matrix4f final_transformation; 127 PCL_MAKE_ALIGNED_OPERATOR_NEW 128 }; 129 130 TemplateAlignment () : 131 min_sample_distance_ (0.05f), 132 max_correspondence_distance_ (0.01f0.01f), 133 nr_iterations_ (500) 134 { 135 // Initialize the parameters in the Sample Consensus Initial Alignment (SAC-IA) algorithm 136 sac_ia_.setMinSampleDistance (min_sample_distance_); 137 sac_ia_.setMaxCorrespondenceDistance (max_correspondence_distance_); 138 sac_ia_.setMaximumIterations (nr_iterations_); 139 } 140 141 // Set the given cloud as the target to which the templates will be aligned 142 void 143 setTargetCloud (FeatureCloud &target_cloud) 144 { 145 target_ = target_cloud; 146 sac_ia_.setInputTarget (target_cloud.getPointCloud ()); 147 sac_ia_.setTargetFeatures (target_cloud.getLocalFeatures ()); 148 } 149 150 // Add the given cloud to the list of template clouds 151 void 152 addTemplateCloud (FeatureCloud &template_cloud) 153 { 154 templates_.push_back (template_cloud); 155 } 156 157 // Align the given template cloud to the target specified by setTargetCloud () 158 void 159 align (FeatureCloud &template_cloud, TemplateAlignment::Result &result) 160 { 161 sac_ia_.setInputSource (template_cloud.getPointCloud ()); 162 sac_ia_.setSourceFeatures (template_cloud.getLocalFeatures ()); 163 164 pcl::PointCloudpcl::PointXYZ registration_output; 165 sac_ia_.align (registration_output); 166 167 result.fitness_score = (float) sac_ia_.getFitnessScore (max_correspondence_distance_); 168 result.final_transformation = sac_ia_.getFinalTransformation (); 169 } 170 171 // Align all of template clouds set by addTemplateCloud to the target specified by setTargetCloud () 172 void 173 alignAll (std::vector<TemplateAlignment::Result, Eigen::aligned_allocator > &results) 174 { 175 results.resize (templates_.size ()); 176 for (std::size_t i = 0; i < templates_.size (); ++i) 177 { 178 align (templates_[i], results[i]); 179 } 180 } 181 182 // Align all of template clouds to the target cloud to find the one with best alignment score 183 int 184 findBestAlignment (TemplateAlignment::Result &result) 185 { 186 // Align all of the templates to the target cloud 187 std::vector<Result, Eigen::aligned_allocator > results; 188 alignAll (results); 189 190 // Find the template with the best (lowest) fitness score 191 float lowest_score = std::numeric_limits::infinity (); 192 int best_template = 0; 193 for (std::size_t i = 0; i < results.size (); ++i) 194 { 195 const Result &r = results[i]; 196 if (r.fitness_score < lowest_score) 197 { 198 lowest_score = r.fitness_score; 199 best_template = (int) i; 200 } 201 } 202 203 // Output the best alignment 204 result = results[best_template]; 205 return (best_template); 206 } 207 208 private: 209 // A list of template clouds and the target to which they will be aligned 210 std::vector templates_; 211 FeatureCloud target_; 212 213 // The Sample Consensus Initial Alignment (SAC-IA) registration routine and its parameters 214 pcl::SampleConsensusInitialAlignment<pcl::PointXYZ, pcl::PointXYZ, pcl::FPFHSignature33> sac_ia_; 215 float min_sample_distance_; 216 float max_correspondence_distance_; 217 int nr_iterations_; 218}; 219 220// Align a collection of object templates to a sample point cloud 221int 222main (int argc, char **argv) 223{ 224 if (argc < 3) 225 { 226 printf ("No target PCD file given!\n"); 227 return (-1); 228 } 229 230 // Load the object templates specified in the object_templates.txt file 231 std::vector object_templates; 232 std::ifstream input_stream (argv[1]); 233 object_templates.resize (0); 234 std::string pcd_filename; 235 while (input_stream.good ()) 236 { 237 std::getline (input_stream, pcd_filename); 238 if (pcd_filename.empty () || pcd_filename.at (0) == '#') // Skip blank lines or comments 239 continue; 240 241 FeatureCloud template_cloud; 242 template_cloud.loadInputCloud (pcd_filename); 243 object_templates.push_back (template_cloud); 244 } 245 input_stream.close (); 246 247 // Load the target cloud PCD file 248 pcl::PointCloudpcl::PointXYZ::Ptr cloud (new pcl::PointCloudpcl::PointXYZ); 249 pcl::io::loadPCDFile (argv[2], *cloud); 250 251 // Preprocess the cloud by... 252 // ...removing distant points 253 const float depth_limit = 1.0; 254 pcl::PassThroughpcl::PointXYZ pass; 255 pass.setInputCloud (cloud); 256 pass.setFilterFieldName ("z"); 257 pass.setFilterLimits (0, depth_limit); 258 pass.filter (*cloud); 259 260 // ... and downsampling the point cloud 261 const float voxel_grid_size = 0.005f; 262 pcl::VoxelGridpcl::PointXYZ vox_grid; 263 vox_grid.setInputCloud (cloud); 264 vox_grid.setLeafSize (voxel_grid_size, voxel_grid_size, voxel_grid_size); 265 //vox_grid.filter (*cloud); // Please see this http://www.pcl-developers.org/Possible-problem-in-new-VoxelGrid-implementation-from-PCL-1-5-0-td5490361.html 266 pcl::PointCloudpcl::PointXYZ::Ptr tempCloud (new pcl::PointCloudpcl::PointXYZ); 267 vox_grid.filter (*tempCloud); 268 cloud = tempCloud; 269 270 // Assign to the target FeatureCloud 271 FeatureCloud target_cloud; 272 target_cloud.setInputCloud (cloud); 273 274 // Set the TemplateAlignment inputs 275 TemplateAlignment template_align; 276 for (std::size_t i = 0; i < object_templates.size (); ++i) 277 { 278 template_align.addTemplateCloud (object_templates[i]); 279 } 280 template_align.setTargetCloud (target_cloud); 281 282 // Find the best template alignment 283 TemplateAlignment::Result best_alignment; 284 int best_index = template_align.findBestAlignment (best_alignment); 285 const FeatureCloud &best_template = object_templates[best_index]; 286 287 // Print the alignment fitness score (values less than 0.00002 are good) 288 printf ("Best fitness score: %f\n", best_alignment.fitness_score); 289 290 // Print the rotation matrix and translation vector 291 Eigen::Matrix3f rotation = best_alignment.final_transformation.block<3,3>(0, 0); 292 Eigen::Vector3f translation = best_alignment.final_transformation.block<3,1>(0, 3); 293 294 printf ("\n"); 295 printf (" | %6.3f %6.3f %6.3f | \n", rotation (0,0), rotation (0,1), rotation (0,2)); 296 printf ("R = | %6.3f %6.3f %6.3f | \n", rotation (1,0), rotation (1,1), rotation (1,2)); 297 printf (" | %6.3f %6.3f %6.3f | \n", rotation (2,0), rotation (2,1), rotation (2,2)); 298 printf ("\n"); 299 printf ("t = < %0.3f, %0.3f, %0.3f >\n", translation (0), translation (1), translation (2)); 300 301 // Save the aligned template for visualization 302 pcl::PointCloudpcl::PointXYZ transformed_cloud; 303 pcl::transformPointCloud (*best_template.getPointCloud (), transformed_cloud, best_alignment.final_transformation); 304 pcl::io::savePCDFileBinary ("output.pcd", transformed_cloud); 305 306 return (0); 307}

The explanation

Now, let’s break down the code piece by piece.

We’ll start by examining the FeatureCloud class. This class is defined in order to provide a convenient method for computing and storing point clouds with local feature descriptors for each point.

The constructor creates a new KdTreeFLANN object and initializes the radius parameters that will be used when computing surface normals and local features.

FeatureCloud () :

search_method_xyz_ (new SearchMethod),

normal_radius_ (0.02f),

feature_radius_ (0.02f)

{}Then we define methods for setting the input cloud, either by passing a shared pointer to a PointCloud or by providing the name of a PCD file to load. In either case, after setting the input, processInput is called, which will compute the local feature descriptors as described later.

// Process the given cloud

void

setInputCloud (PointCloud::Ptr xyz)

{

xyz_ = xyz;

processInput ();

}

// Load and process the cloud in the given PCD file

void

loadInputCloud (const std::string &pcd_file)

{

xyz_ = PointCloud::Ptr (new PointCloud);

pcl::io::loadPCDFile (pcd_file, *xyz_);

processInput ();

}We also define some public accessor methods that can be used to get shared pointers to the points, surface normals, and local feature descriptors.

// Get a pointer to the cloud 3D points

PointCloud::Ptr

getPointCloud () const

{

return (xyz_);

}

// Get a pointer to the cloud of 3D surface normals

SurfaceNormals::Ptr

getSurfaceNormals () const

{

return (normals_);

}

// Get a pointer to the cloud of feature descriptors

LocalFeatures::Ptr

getLocalFeatures () const

{

return (features_);

}Next we define the method for processing the input point cloud, which first computes the cloud’s surface normals and then computes its local features.

// Compute the surface normals and local features

void

processInput ()

{

computeSurfaceNormals ();

computeLocalFeatures ();

}We use PCL’s NormalEstimation class to compute the surface normals. To do so, we must specify the input point cloud, the KdTree to use when searching for neighboring points, and the radius that defines each point’s neighborhood. We then compute the surface normals and store them in a member variable for later use.

// Compute the surface normals

void

computeSurfaceNormals ()

{

normals_ = SurfaceNormals::Ptr (new SurfaceNormals);

pcl::NormalEstimation<pcl::PointXYZ, pcl::Normal> norm_est;

norm_est.setInputCloud (xyz_);

norm_est.setSearchMethod (search_method_xyz_);

norm_est.setRadiusSearch (normal_radius_);

norm_est.compute (*normals_);

}Similarly, we use PCL’s FPFHEstimation class to compute “Fast Point Feature Histogram” descriptors from the input point cloud and its surface normals.

// Compute the local feature descriptors

void

computeLocalFeatures ()

{

features_ = LocalFeatures::Ptr (new LocalFeatures);

pcl::FPFHEstimation<pcl::PointXYZ, pcl::Normal, pcl::FPFHSignature33> fpfh_est;

fpfh_est.setInputCloud (xyz_);

fpfh_est.setInputNormals (normals_);

fpfh_est.setSearchMethod (search_method_xyz_);

fpfh_est.setRadiusSearch (feature_radius_);

fpfh_est.compute (*features_);

}The methods described above serve to encapsulate the work needed to compute feature descriptors and store them with their corresponding 3D point cloud.

Now we’ll examine the TemplateAlignment class, which as the name suggests, will be used to perform template alignment (also referred to as template fitting/matching/registration). A template is typically a small group of pixels or points that represents a known part of a larger object or scene. By registering a template to a new image or point cloud, you can determine the position and orientation of the object that the template represents.

We start by defining a structure to store the alignment results. It contains a floating point value that represents the “fitness” of the alignment (a lower number means a better alignment) and a transformation matrix that describes how template points should be rotated and translated in order to best align with the points in the target cloud.

Note

Because we are including an Eigen::Matrix4f in this struct, we need to include the EIGEN_MAKE_ALIGNED_OPERATOR_NEW macro, which will overload the struct’s “operator new” so that it will generate 16-bytes-aligned pointers. If you’re curious, you can find more information about this issue here. For convenience, there is a redefinition of the macro in memory.h, aptly named PCL_MAKE_ALIGNED_OPERATOR_NEW which will let us for example call pcl::make_shared to create a shared_ptr of over-aligned classes.

// A struct for storing alignment results

struct Result

{

float fitness_score;

Eigen::Matrix4f final_transformation;

PCL_MAKE_ALIGNED_OPERATOR_NEW

};In the constructor, we initialize the SampleConsensusInitialAlignment (SAC-IA) object that we’ll be using to perform the alignment, providing values for each of its parameters. (Note: the maximum correspondence distance is actually specified as squared distance; for this example, we’ve decided to truncate the error with an upper limit of 1 cm, so we pass in 0.01 squared.)

TemplateAlignment () :

min_sample_distance_ (0.05f),

max_correspondence_distance_ (0.01f*0.01f),

nr_iterations_ (500)

{

// Initialize the parameters in the Sample Consensus Initial Alignment (SAC-IA) algorithm

sac_ia_.setMinSampleDistance (min_sample_distance_);

sac_ia_.setMaxCorrespondenceDistance (max_correspondence_distance_);

sac_ia_.setMaximumIterations (nr_iterations_);

}Next we define a method for setting the target cloud (i.e., the cloud to which the templates will be aligned), which sets the inputs of SAC-IA alignment algorithm.

// Set the given cloud as the target to which the templates will be aligned

void

setTargetCloud (FeatureCloud &target_cloud)

{

target_ = target_cloud;

sac_ia_.setInputTarget (target_cloud.getPointCloud ());

sac_ia_.setTargetFeatures (target_cloud.getLocalFeatures ());

}We then define a method for specifying which template or templates to attempt to align. Each call to this method will add the given template cloud to an internal vector of FeatureClouds and store them for future use.

// Add the given cloud to the list of template clouds

void

addTemplateCloud (FeatureCloud &template_cloud)

{

templates_.push_back (template_cloud);

}Next we define our alignment method. This method takes a template as input and aligns it to the target cloud that was specified by calling setInputTarget. It works by setting the given template as the SAC-IA algorithm’s source cloud and then calling its align method to align the source to the target. Note that the align method requires us to pass in a point cloud that will store the newly aligned source cloud, but we can ignore this output for our application. Instead, we call SAC-IA’s accessor methods to get the alignment’s fitness score and final transformation matrix (the rigid transformation from the source cloud to the target), and we output them as a Result struct.

// Align the given template cloud to the target specified by setTargetCloud ()

void

align (FeatureCloud &template_cloud, TemplateAlignment::Result &result)

{

sac_ia_.setInputSource (template_cloud.getPointCloud ());

sac_ia_.setSourceFeatures (template_cloud.getLocalFeatures ());

pcl::PointCloud<pcl::PointXYZ> registration_output;

sac_ia_.align (registration_output);

result.fitness_score = (float) sac_ia_.getFitnessScore (max_correspondence_distance_);

result.final_transformation = sac_ia_.getFinalTransformation ();

}Because this class is designed to work with multiple templates, we also define a method for aligning all of the templates to the target cloud and storing the results in a vector of Result structs.

// Align all of template clouds set by addTemplateCloud to the target specified by setTargetCloud ()

void

alignAll (std::vector<TemplateAlignment::Result, Eigen::aligned_allocator<Result> > &results)

{

results.resize (templates_.size ());

for (std::size_t i = 0; i < templates_.size (); ++i)

{

align (templates_[i], results[i]);

}

}Finally, we define a method that will align all of the templates to the target cloud and return the index of the best match and its corresponding Result struct.

// Align all of template clouds to the target cloud to find the one with best alignment score

int

findBestAlignment (TemplateAlignment::Result &result)

{

// Align all of the templates to the target cloud

std::vector<Result, Eigen::aligned_allocator<Result> > results;

alignAll (results);

// Find the template with the best (lowest) fitness score

float lowest_score = std::numeric_limits<float>::infinity ();

int best_template = 0;

for (std::size_t i = 0; i < results.size (); ++i)

{

const Result &r = results[i];

if (r.fitness_score < lowest_score)

{

lowest_score = r.fitness_score;

best_template = (int) i;

}

}

// Output the best alignment

result = results[best_template];

return (best_template);

}Now that we have a class that handles aligning object templates, we’ll apply it to the the problem of face alignment. In the supplied data files, we’ve included six template point clouds that we created from different views of a person’s face. Each one was downsampled to a spacing of 5mm and manually cropped to include only points from the face. In the following code, we show how to use our TemplateAlignment class to locate the position and orientation of the person’s face in a new cloud.

First, we load the object template clouds. We’ve stored our templates as .PCD files, and we’ve listed their names in a file called object_templates.txt. Here, we read in each file name, load it into a FeatureCloud, and store the FeatureCloud in a vector for later.

// Load the object templates specified in the object_templates.txt file std::vector object_templates; std::ifstream input_stream (argv[1]); object_templates.resize (0); std::string pcd_filename; while (input_stream.good ()) { std::getline (input_stream, pcd_filename); if (pcd_filename.empty () || pcd_filename.at (0) == '#') // Skip blank lines or comments continue;

FeatureCloud template_cloud;

template_cloud.loadInputCloud (pcd_filename);

object_templates.push_back (template_cloud);} input_stream.close ();

Next we load the target cloud (from the filename supplied on the command line).

// Load the target cloud PCD file pcl::PointCloudpcl::PointXYZ::Ptr cloud (new pcl::PointCloudpcl::PointXYZ); pcl::io::loadPCDFile (argv[2], *cloud);

We then perform a little pre-processing on the data to get it ready for alignment. The first step is to filter out any background points. In this example we assume the person we’re trying to align to will be less than 1 meter away, so we apply a pass-through filter, filtering on the “z” field (i.e., depth) with limits of 0 to 1.

Note

This is application and data dependent. You may need to tune the threshold (or drop this filter entirely) to make it work with your data.

// Preprocess the cloud by... // ...removing distant points const float depth_limit = 1.0; pcl::PassThroughpcl::PointXYZ pass; pass.setInputCloud (cloud); pass.setFilterFieldName ("z"); pass.setFilterLimits (0, depth_limit); pass.filter (*cloud);

We also downsample the point cloud with a spacing of 5mm, which reduces the amount of computation that’s required.

// ... and downsampling the point cloud const float voxel_grid_size = 0.005f; pcl::VoxelGridpcl::PointXYZ vox_grid; vox_grid.setInputCloud (cloud); vox_grid.setLeafSize (voxel_grid_size, voxel_grid_size, voxel_grid_size); //vox_grid.filter (*cloud); // Please see this http://www.pcl-developers.org/Possible-problem-in-new-VoxelGrid-implementation-from-PCL-1-5-0-td5490361.html pcl::PointCloudpcl::PointXYZ::Ptr tempCloud (new pcl::PointCloudpcl::PointXYZ); vox_grid.filter (*tempCloud); cloud = tempCloud;

And after the pre-processing is finished, we create our target FeatureCloud.

// Assign to the target FeatureCloud FeatureCloud target_cloud; target_cloud.setInputCloud (cloud);

Next, we initialize our TemplateAlignment object. For this, we need to add each of our template clouds and set the target cloud.

// Set the TemplateAlignment inputs TemplateAlignment template_align; for (std::size_t i = 0; i < object_templates.size (); ++i) { template_align.addTemplateCloud (object_templates[i]); } template_align.setTargetCloud (target_cloud);

Now that our TemplateAlignment object is initialized, we’re ready call the findBestAlignment method to determine which template best fits the given target cloud. We store the alignment results in best_alignment.

// Find the best template alignment TemplateAlignment::Result best_alignment; int best_index = template_align.findBestAlignment (best_alignment); const FeatureCloud &best_template = object_templates[best_index];

Next we output the results. Looking at the fitness score (best_alignment.fitness_score) gives us an idea of how successful the alignment was, and looking at the transformation matrix (best_alignment.final_transformation) tells us the position and orientation of the object we aligned to in the target cloud. Specifically, because it’s a rigid transformation, it can be decomposed into a 3-dimensional translation vector  and a 3 x 3 rotation matrix

and a 3 x 3 rotation matrix  as follows:

as follows:

![T = \left[ \begin{array}{cccc}

& & & t_x \\

& R & & t_y \\

& & & t_z \\

0 & 0 & 0 & 1 \end{array} \right]](https://pcl.readthedocs.io/projects/tutorials/en/master/_images/math/64202bcd0d366f90022e9b1faabc419b7219e320.png)

// Print the alignment fitness score (values less than 0.00002 are good) printf ("Best fitness score: %f\n", best_alignment.fitness_score);

// Print the rotation matrix and translation vector Eigen::Matrix3f rotation = best_alignment.final_transformation.block<3,3>(0, 0); Eigen::Vector3f translation = best_alignment.final_transformation.block<3,1>(0, 3);

printf ("\n"); printf (" | %6.3f %6.3f %6.3f | \n", rotation (0,0), rotation (0,1), rotation (0,2)); printf ("R = | %6.3f %6.3f %6.3f | \n", rotation (1,0), rotation (1,1), rotation (1,2)); printf (" | %6.3f %6.3f %6.3f | \n", rotation (2,0), rotation (2,1), rotation (2,2)); printf ("\n"); printf ("t = < %0.3f, %0.3f, %0.3f >\n", translation (0), translation (1), translation (2));

Finally, we take the best fitting template, apply the transform that aligns it to the target cloud, and save the aligned template out as a .PCD file so that we can visualize it later to see how well the alignment worked.

// Save the aligned template for visualization pcl::PointCloudpcl::PointXYZ transformed_cloud; pcl::transformPointCloud (*best_template.getPointCloud (), transformed_cloud, best_alignment.final_transformation); pcl::io::savePCDFileBinary ("output.pcd", transformed_cloud);

Compiling and running the program

Add the following lines to your CMakeLists.txt file:

1cmake_minimum_required(VERSION 3.5 FATAL_ERROR) 2 3project(template_alignment) 4 5find_package(PCL 1.2 REQUIRED) 6 7add_executable (template_alignment template_alignment.cpp) 8target_link_libraries (template_alignment ${PCL_LIBRARIES})

After you have made the executable, you can run it like so:

$ ./template_alignment data/object_templates.txt data/person.pcd

After a few seconds, you will see output similar to:

Best fitness score: 0.000009

| 0.834 0.295 0.466 |R = | -0.336 0.942 0.006 | | -0.437 -0.162 0.885 |

t = < -0.373, -0.097, 0.087 >

You can also use the pcl_viewer utility to visualize the aligned template and overlay it against the target cloud by running the following command:

$ pcl_viewer data/person.pcd output.pcd

The clouds should look something like this: