ReLU6 — PyTorch 2.7 documentation

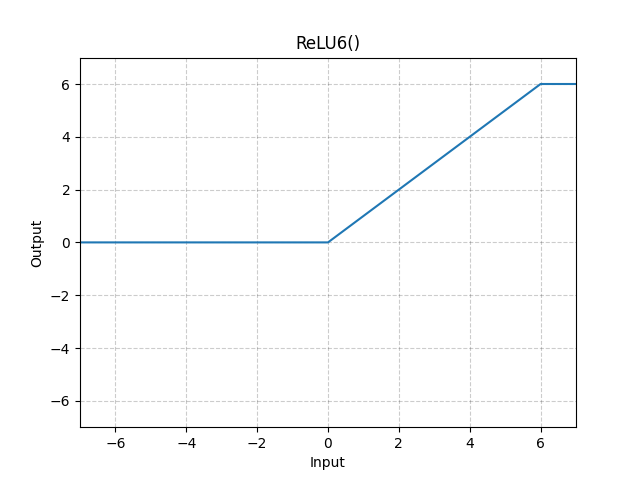

class torch.nn.ReLU6(inplace=False)

Applies the ReLU6 function element-wise.

$$\text{ReLU6}(x) = \min(\max(0, x), 6)$$
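As a quick check of the formula, the sketch below compares `nn.ReLU6` with an explicit clamp to the range [0, 6]. The input values are illustrative assumptions, chosen to cover all three regions of the function (below 0, between 0 and 6, above 6).

```python
import torch
import torch.nn as nn

# Hand-picked values: negative, zero, inside the range, at the cap, above the cap.
x = torch.tensor([-3.0, 0.0, 2.5, 6.0, 9.0])

relu6_out = nn.ReLU6()(x)

# Direct transcription of min(max(0, x), 6) using torch.clamp.
manual_out = torch.clamp(x, min=0.0, max=6.0)

print(relu6_out.tolist())   # [0.0, 0.0, 2.5, 6.0, 6.0]
print(manual_out.tolist())  # [0.0, 0.0, 2.5, 6.0, 6.0]
```

Negative inputs map to 0, inputs above 6 are capped at 6, and everything in between passes through unchanged.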

Parameters

inplace (bool) – if True, performs the operation in-place, overwriting the input tensor instead of allocating a new one. Default: False
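The effect of `inplace` can be seen in a minimal sketch like the one below. The tensor values and variable names are illustrative assumptions, not part of the original documentation.

```python
import torch
import torch.nn as nn

x = torch.tensor([-1.0, 3.0, 8.0])

# Default (inplace=False): a new tensor is returned and x is left unchanged.
y = nn.ReLU6()(x)
x_after_default = x.tolist()  # [-1.0, 3.0, 8.0]

# inplace=True: the values of x itself are overwritten,
# and the returned tensor shares x's storage.
z = nn.ReLU6(inplace=True)(x)
x_after_inplace = x.tolist()  # [0.0, 3.0, 6.0]

print(y.tolist())                      # [0.0, 3.0, 6.0]
print(x_after_default)                 # [-1.0, 3.0, 8.0]
print(x_after_inplace)                 # [0.0, 3.0, 6.0]
print(z.data_ptr() == x.data_ptr())    # True
```

In-place mode avoids allocating an output tensor but destroys the original input values, so it only fits cases where the input is not needed afterwards.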

Shape:

- Input: $(*)$, where $*$ means any number of dimensions.

- Output: $(*)$, same shape as the input.
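The shape contract can be checked with a short sketch such as the following. The specific shapes, including the 0-dimensional scalar case, are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

m = nn.ReLU6()

# Arbitrary example shapes: scalar, vector, matrix, and an image-like batch.
shapes = [(), (5,), (2, 3), (4, 3, 8, 8)]

results = []
for shape in shapes:
    x = torch.randn(shape) * 10  # scale up so some values fall outside [0, 6]
    out = m(x)
    # Record the output shape and the observed value range.
    results.append((tuple(out.shape), out.min().item(), out.max().item()))
    print(shape, "->", tuple(out.shape))
```

Because the function is applied element-wise, each output has the same shape as its input, and every output value lies in [0, 6].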

Examples:

    import torch
    import torch.nn as nn

    m = nn.ReLU6()
    input = torch.randn(2)
    output = m(input)