SELU — PyTorch 2.7 documentation

class torch.nn.SELU(inplace=False)

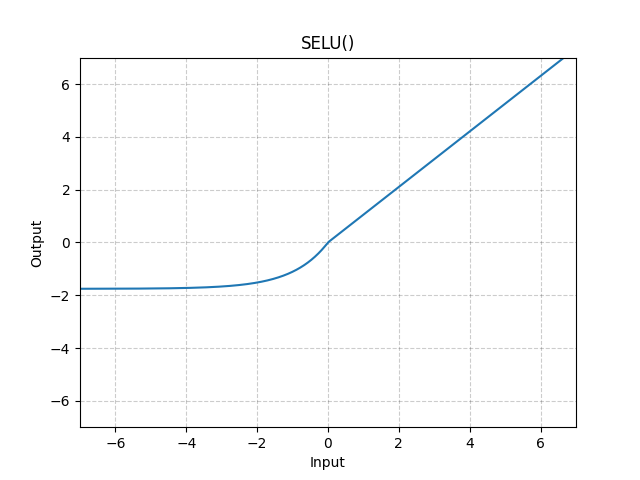

Applies the Scaled Exponential Linear Unit (SELU) function element-wise.

$$\text{SELU}(x) = \text{scale} * (\max(0, x) + \min(0, \alpha * (\exp(x) - 1)))$$

with $\alpha = 1.6732632423543772848170429916717$ and $\text{scale} = 1.0507009873554804934193349852946$.
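As a plain-Python sketch of the formula (not PyTorch's actual kernel), the helpers below evaluate SELU for a single float using the two constants; `selu` and `selu_piecewise` are hypothetical names chosen for illustration. The piecewise form makes explicit that positive inputs are scaled linearly, while negative inputs saturate toward $-\text{scale} \cdot \alpha \approx -1.7581$.

```python
import math

# Constants copied from the SELU definition.
ALPHA = 1.6732632423543772848170429916717
SCALE = 1.0507009873554804934193349852946


def selu(x: float) -> float:
    """Literal transcription of scale * (max(0, x) + min(0, alpha * (exp(x) - 1)))."""
    # Note: math.exp raises OverflowError for x above roughly 709.
    return SCALE * (max(0.0, x) + min(0.0, ALPHA * (math.exp(x) - 1.0)))


def selu_piecewise(x: float) -> float:
    """Equivalent piecewise form: scale * x if x > 0, else scale * alpha * (exp(x) - 1)."""
    if x > 0:
        return SCALE * x
    # math.expm1 computes exp(x) - 1 accurately for x close to 0.
    return SCALE * ALPHA * math.expm1(x)


for v in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"SELU({v:+.1f}) = {selu(v):+.6f}")
```

The two forms agree because, for $x > 0$, $\alpha(\exp(x) - 1) > 0$ so the `min` term vanishes, and for $x \le 0$ the `max` term vanishes. The piecewise version never evaluates `exp` for positive inputs, so it does not overflow there.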

More details can be found in the paper Self-Normalizing Neural Networks (Klambauer et al., 2017).
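One property derived in that paper is that these two constants make zero mean and unit variance a fixed point: if the input to SELU is standard normal, the output again has mean 0 and variance 1. The plain-Python sketch below checks this numerically with a trapezoidal integral over $[-12, 12]$. The integration range, step count, and helper names are our own choices, and the check covers only this single-activation property, not the paper's full argument for deep networks.

```python
import math

ALPHA = 1.6732632423543772848170429916717
SCALE = 1.0507009873554804934193349852946


def selu(x: float) -> float:
    # Piecewise form of the SELU definition.
    return SCALE * x if x > 0 else SCALE * ALPHA * math.expm1(x)


def standard_normal_pdf(x: float) -> float:
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def selu_moments(lo: float = -12.0, hi: float = 12.0, steps: int = 24_000):
    """Trapezoidal estimates of E[SELU(Z)] and E[SELU(Z)^2] for Z ~ N(0, 1)."""
    h = (hi - lo) / steps
    first = second = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        weight = 0.5 if i in (0, steps) else 1.0  # trapezoid end-point weights
        p = weight * standard_normal_pdf(x)
        y = selu(x)
        first += y * p
        second += y * y * p
    return first * h, second * h


mean, second_moment = selu_moments()
variance = second_moment - mean ** 2
print(f"mean = {mean:.6f}, variance = {variance:.6f}")
```

The normal density is below $10^{-31}$ outside $[-12, 12]$, so truncating the integral there has no visible effect on the result.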

Parameters

inplace (bool, optional) – if True, performs the operation in-place, overwriting the input tensor. Default: False
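A short sketch of what the `inplace` flag changes, assuming a standard PyTorch install (the tensor values are arbitrary): with the default `inplace=False` the layer returns a new tensor and leaves its input alone, while `inplace=True` writes the result into the input's own storage, saving an allocation at the cost of losing the original values.

```python
import torch
import torch.nn as nn

x = torch.tensor([-2.0, -0.5, 0.0, 1.5])
original = x.clone()  # keep a copy to compare against

# Default: out-of-place, so x is left unchanged.
y = nn.SELU()(x)
print(torch.equal(x, original))  # checks that the input is untouched

# inplace=True: the result is written into x's own storage.
z = nn.SELU(inplace=True)(x)
print(z.data_ptr() == x.data_ptr())  # checks that z and x share memory
print(torch.allclose(x, y))  # x now holds the activated values
```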

Shape:

- Input: (*), where * means any number of dimensions.

- Output: (*), same shape as the input.
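To illustrate the shape contract, the sketch below (assuming a standard PyTorch install; tensor sizes and seed are arbitrary) applies `nn.SELU` to a 4-D tensor and to a 0-dimensional tensor, and compares the result with the SELU formula evaluated through `torch.where`. `torch.nn.functional.selu` is the functional form of the same operation.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

ALPHA = 1.6732632423543772848170429916717
SCALE = 1.0507009873554804934193349852946

torch.manual_seed(0)
m = nn.SELU()

x = torch.randn(2, 3, 4, 5)  # any number of dimensions
y = m(x)
print(y.shape)  # same shape as the input

# The SELU formula, applied element by element.
manual = SCALE * torch.where(x > 0, x, ALPHA * (torch.exp(x) - 1))
print(torch.allclose(y, manual, atol=1e-6))
print(torch.allclose(y, F.selu(x)))  # functional form gives the same values

scalar = torch.tensor(-1.0)  # a 0-dimensional tensor also works
print(m(scalar).shape)
```

The `atol=1e-6` tolerance absorbs float32 rounding differences between the hand-written expression and the built-in kernel for inputs close to 0.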

Examples:

>>> import torch
>>> import torch.nn as nn
>>> m = nn.SELU()
>>> input = torch.randn(2)
>>> output = m(input)
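As a further usage sketch, SELU is typically stacked after linear layers. The example below builds a hypothetical 8-layer, 256-unit MLP without biases and draws each weight from a zero-mean normal with variance `1 / fan_in`, which matches the weight initialization assumed by the self-normalizing analysis. The depth, width, batch size, and seed are arbitrary choices; with them, the output statistics should stay close to mean 0 and standard deviation 1.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
width, depth = 256, 8  # hypothetical sizes for illustration

layers = []
for _ in range(depth):
    linear = nn.Linear(width, width, bias=False)
    # Zero-mean weights with variance 1 / fan_in; fan_in equals width here.
    nn.init.normal_(linear.weight, mean=0.0, std=width ** -0.5)
    layers += [linear, nn.SELU()]
net = nn.Sequential(*layers)

with torch.no_grad():
    x = torch.randn(4096, width)  # standard-normal inputs
    out = net(x)

print(f"mean={out.mean().item():.3f}  std={out.std().item():.3f}")
```

Biases are disabled so that every pre-activation is a zero-mean weighted sum, which keeps the sketch close to the setting analysed in the paper.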