SiLU — PyTorch 2.7 documentation

class torch.nn.SiLU(inplace=False)

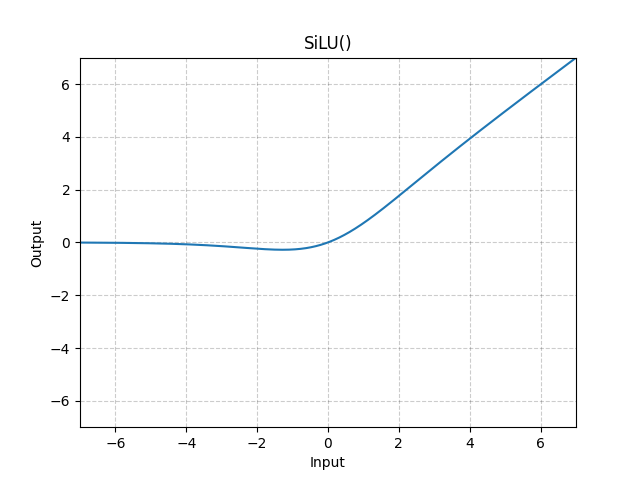

Applies the Sigmoid Linear Unit (SiLU) function, element-wise.

The SiLU function is also known as the swish function.

$$\text{silu}(x) = x * \sigma(x), \text{ where } \sigma(x) \text{ is the logistic sigmoid.}$$
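The definition above can be checked numerically. The following is a minimal sketch, assuming `torch.sigmoid` as the logistic sigmoid; the variable names and the tolerance are illustrative choices, not part of the original page.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed only so the sketch is repeatable

x = torch.randn(5)

# Module form: the class documented on this page.
silu = nn.SiLU()
module_out = silu(x)

# Manual form: x multiplied element-wise by the logistic sigmoid of x.
manual_out = x * torch.sigmoid(x)

# The two results should agree up to floating-point rounding
# (the tolerance here is an illustrative assumption).
matches = torch.allclose(module_out, manual_out, atol=1e-6)
print(matches)
```

Because the sigmoid of 0 is 0.5, an input of 0 maps to 0, and large positive inputs pass through almost unchanged.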

Shape:

- Input: $(*)$, where $*$ means any number of dimensions.

- Output: $(*)$, same shape as the input.
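The shape contract above can be illustrated with inputs of different ranks. This is a small sketch; the specific shapes are hypothetical and chosen only for demonstration.

```python
import torch
import torch.nn as nn

m = nn.SiLU()

# Hypothetical shapes for illustration: a scalar, a vector, and a 4-D batch.
shapes = [(), (3,), (2, 3, 4, 5)]

output_shapes = []
for shape in shapes:
    x = torch.randn(shape)
    y = m(x)
    # SiLU is element-wise, so the output keeps the input's shape.
    output_shapes.append(tuple(y.shape))
    print(shape, "->", tuple(y.shape))
```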

Examples:

    import torch
    import torch.nn as nn

    m = nn.SiLU()
    input = torch.randn(2)  # random 1-D input with 2 elements
    output = m(input)       # same shape as input
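The class signature lists an `inplace` argument that defaults to `False`, but the page does not describe it. The sketch below assumes it behaves like the `inplace` flag on other PyTorch activation modules, where `inplace=True` overwrites the input tensor instead of allocating a new one. The input values are illustrative.

```python
import torch
import torch.nn as nn

x = torch.tensor([-1.0, 0.0, 1.0])

# Compute the expected values before x is overwritten.
expected = x * torch.sigmoid(x)

# Assumption: inplace=True writes the result into the input tensor.
m = nn.SiLU(inplace=True)
out = m(x)

# The returned tensor shares memory with x, and x now holds silu(x).
same_storage = out.data_ptr() == x.data_ptr()
overwritten = torch.allclose(x, expected)
print(same_storage, overwritten)
```

In-place operation can save memory, but the original input values are lost afterward, so keep a copy if they are needed later.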
