pulseaudio – Arun Raghavan

https://arunraghavan.net
Open source hacker

Asymptotic: A 2023 Review
https://arunraghavan.net/2024/03/asymptotic-a-2023-review/
Tue, 19 Mar 2024 14:54:45 +0000

It’s been a busy several months, but now that we have some breathing room, I wanted to take stock of what we have done over the last year or so.

This is a good thing for most people and companies to do of course, but being a scrappy, (questionably) young organisation, it’s doubly important for us to introspect. This allows us to both recognise our achievements and ensure that we are accomplishing what we have set out to do.

One thing that is clear to me is that we have been lagging in writing about some of the interesting things that we have had the opportunity to work on, so you can expect to see some more posts expanding on what you find below, as well as some of the newer work that we have begun.

(note: I write about our open source contributions below, but needless to say, none of it is possible without the collaboration, input, and reviews of members of the community)

WHIP/WHEP client and server for GStreamer

If you’re in the WebRTC world, you likely have not missed the excitement around standardisation of HTTP-based signalling protocols, culminating in the WHIP and WHEP specifications.

Tarun has been driving our client and server implementations for both these protocols, and in the process has been refactoring some of the webrtcsink and webrtcsrc code to make it easier to add more signaller implementations. You can find out more about this work in his talk at GstConf 2023, and we’ll be writing more about the ongoing effort here as well.

Low-latency embedded audio with PipeWire

Some of our work involves implementing a framework for very low-latency audio processing on an embedded device. PipeWire is a good fit for this sort of application, but we have had to implement a couple of features to make it work.

It turns out that doing timer-based scheduling can be more CPU intensive than ALSA period interrupts at low latencies, so we implemented an IRQ-based scheduling mode for PipeWire. This is now used by default when a pro-audio profile is selected for an ALSA device.
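To put rough numbers on the latencies involved: a period of N frames at a given sample rate holds N / rate seconds of audio, and an interrupt-driven device wakes the graph roughly once per period. The helper below is only illustrative arithmetic. The period sizes and the 48 kHz rate are assumed values, not figures from the project described here.

```python
def period_stats(period_frames: int, rate_hz: int) -> tuple[float, float]:
    """Return (period duration in milliseconds, periods per second)."""
    duration_ms = 1000.0 * period_frames / rate_hz
    wakeups_per_s = rate_hz / period_frames
    return duration_ms, wakeups_per_s


# Assumed example period sizes at an assumed 48 kHz sample rate.
for frames in (1024, 256, 64):
    ms, wakeups = period_stats(frames, 48000)
    print(f"{frames:5d} frames: {ms:6.2f} ms per period, {wakeups:7.1f} wakeups/s")
```

Smaller periods mean lower latency but many more wakeups per second, which is why the cost of each wakeup starts to matter.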

In addition to this, we also implemented rate adaptation for USB gadget devices using the USB Audio Class “feedback control” mechanism. This allows USB gadget devices to adapt their playback/capture rates to the graph’s rate _without_ having to perform resampling on the device, saving valuable CPU and latency.
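As a rough illustration of what the feedback mechanism carries: the device reports the rate it wants as a fixed-point number of samples per (micro)frame, and the host adjusts how much data it sends. The sketch below encodes such a value, assuming the 10.14 format for full-speed links (1 ms frames) and the 16.16 format for high-speed links (125 µs microframes) as I understand them from the USB 2.0 specification. The function name is hypothetical, and this is not PipeWire or kernel code.

```python
def feedback_value(rate_hz: float, high_speed: bool) -> int:
    """Encode a desired sample rate as a fixed-point samples-per-interval value."""
    if high_speed:
        samples_per_interval = rate_hz / 8000.0  # 8000 microframes per second
        frac_bits = 16                           # assumed 16.16 fixed point
    else:
        samples_per_interval = rate_hz / 1000.0  # 1000 frames per second
        frac_bits = 14                           # assumed 10.14 fixed point
    return round(samples_per_interval * (1 << frac_bits))


print(hex(feedback_value(48000, high_speed=False)))
print(hex(feedback_value(48000, high_speed=True)))
# A device whose clock runs slightly fast would ask for a little more data.
print(hex(feedback_value(48000 * 1.0005, high_speed=True)))
```

Because the rate is negotiated this way, neither side has to resample to absorb the clock difference.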

There is likely still some room to optimise things, so expect to hear more on this front soon.

Compress offload in PipeWire

Sanchayan has written about the work we did to add support in PipeWire for offloading compressed audio. This is something we explored in PulseAudio (there’s even an implementation out there), but it’s a testament to the PipeWire design that we were able to get this done without any protocol changes.

This should be useful in various embedded devices that have both the hardware and firmware to make use of this power-saving feature.

GStreamer LC3 encoder and decoder

Tarun wrote a GStreamer plugin implementing the LC3 codec using the liblc3 library. This is the primary codec for next-generation wireless audio devices implementing the Bluetooth LE Audio specification. The plugin is upstream and can be used to encode and decode LC3 data already, but will likely be more useful when the existing Bluetooth plugins to talk to Bluetooth devices get LE audio support.

QUIC plugins for GStreamer

Sanchayan implemented a QUIC source and sink plugin in Rust, allowing us to start experimenting with the next generation of network transports. For the curious, the plugins sit on top of the Quinn implementation of the QUIC protocol.

There is a merge request open that should land soon, and we’re already seeing folks using these plugins.

AWS S3 plugins

We’ve been fleshing out the AWS S3 plugins over the years, and we’ve added a new awss3putobjectsink. This provides a better way to push small or sparse data to S3 (subtitles, for example), without potentially losing data in case of a pipeline crash.

We also expect this to look a little more like multifilesink, allowing us to arbitrarily split up data and write it to S3 directly as multiple objects.

Update to webrtc-audio-processing

We also updated the webrtc-audio-processing library, based on more recent upstream libwebrtc. This is one of those things that becomes surprisingly hard as you get into it: packaging an API-unstable library correctly, while supporting a plethora of operating system and architecture combinations.

Clients

We can’t always speak publicly of the work we are doing with our clients, but there have been a few interesting developments we can speak about (and have).

Both Sanchayan and I spoke a bit about our work with WebRTC-as-a-service provider, Daily. My talk at the GStreamer Conference was a summary of the work I wrote about previously, covering what we learned while building Daily’s live streaming, recording, and other backend services. There were other clients we worked with during the year with similar experiences.

Sanchayan spoke about the interesting approach to building SIP support that we took for Daily. This was a pretty fun project, allowing us to build a modern server-side SIP client with GStreamer and SIP.js.

An ongoing project we are working on is building AES67 support using GStreamer for FreeSWITCH, which essentially allows bridging low-latency network audio equipment with existing SIP and related infrastructure.

As you might have noticed from previous sections, we are also working on a low-latency audio appliance using PipeWire.

Retrospective

All in all, we’ve had a reasonably productive 2023. There are things I know we can do better in our upstream efforts to help move merge requests and issues, and I hope to address this in 2024.

We have ideas for larger projects that we would like to take on. Some of these we might be able to find clients who would be willing to pay for. For the ideas that we think are useful but may not find any funding, we will continue to spend our spare time to push forward.

If you made it this far, thank you, and look out for more updates!

Update from the PipeWire hackfest
https://arunraghavan.net/2018/10/update-from-the-pipewire-hackfest/
Wed, 31 Oct 2018 15:49:24 +0000

As the third and final day of the PipeWire hackfest draws to a close, I thought I’d summarise some of my thoughts on the goings-on and the future.

Thanks

Before I get into the details, I want to send out a big thank you to:

- Christian Schaller for all the hard work of organising the event and Wim Taymans for the work on PipeWire so far (and in the future)

- The GNOME Foundation, for sponsoring the event as a whole

- Qualcomm, who are funding my presence at the event

- Collabora, for sponsoring dinner on Monday

- Everybody who attended and participated, for their time and thoughtful comments

Background

For those of you who are not familiar with it, PipeWire (previously Pinos, previously PulseVideo) was Wim’s effort at providing secure, multi-program access to video devices (like webcams, or the desktop for screen capture). As he went down that rabbit hole, he wrote SPA, a lightweight general-purpose framework for representing a streaming graph, and this led to the idea of expanding the project to include support for low latency audio.

The Linux userspace audio story has, for the longest time, consisted of two top-level components: PulseAudio which handles consumer audio (power efficiency, wide range of arbitrary hardware), and JACK which deals with pro audio (low latency, high performance). Consolidating this into a good out-of-the-box experience for all use-cases has been a long-standing goal for myself and others in the community that I have spoken to.

An Opportunity

From a PulseAudio perspective, it has been hard to achieve the 1-to-few millisecond latency numbers that would be absolutely necessary for professional audio use-cases. A lot of work has gone into improving this situation, most recently with David Henningsson’s shared-ringbuffer channels that made client/server communication more efficient.

At the same time, application sandboxing frameworks such as Flatpak have added security requirements that were not accounted for when PulseAudio was written. Examples include choosing which devices an application has access to (or can even know of), or which applications can act as control entities (set routing etc., enable/disable devices). Some work has gone into this: Ahmed Darwish did some key work to get memfd support in PulseAudio, and Wim has prototyped an access-control mechanism module to enable a Flatpak portal for sound.

All this said, there are still fundamental limitations in architectural decisions in PulseAudio that would require significant plumbing to address. With Wim’s work on PipeWire and his extensive background with GStreamer and PulseAudio itself, I think we have an opportunity to revisit some of those decisions with the benefit of a decade’s worth of learning deploying PulseAudio in various domains starting from desktops/laptops to phones, cars, robots, home audio, telephony systems and a lot more.

Key Ideas

There are some core ideas of PipeWire that I am quite excited about.

The first of these is the graph. Like JACK, the entities that participate in the data flow are represented by PipeWire as nodes in a graph, and routing between nodes is very flexible — you can route applications to playback devices and capture devices to applications, but you can also route applications to other applications, and this is notionally the same thing.

The second idea is a bit more radical — PipeWire itself only “runs” the graph. The actual connections between nodes are created and managed by a “session manager”. This allows us to completely separate the data flow from policy, which means we could write completely separate policy for desktop use cases vs. specific embedded use cases. I’m particularly excited to see this be scriptable in a higher-level language, which is something Bastien has already started work on!
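The split between running the graph and deciding what the graph looks like can be sketched in a few lines. This is a toy model only: the class, the node kinds, and the policy function are all hypothetical names made up for illustration, and none of it reflects PipeWire’s real API.

```python
from dataclasses import dataclass, field


@dataclass
class Graph:
    """Stores nodes and links only; it makes no routing decisions itself."""
    nodes: dict[str, str] = field(default_factory=dict)  # name -> kind
    links: set[tuple[str, str]] = field(default_factory=set)

    def add_node(self, name: str, kind: str) -> None:
        self.nodes[name] = kind

    def link(self, source: str, sink: str) -> None:
        if source not in self.nodes or sink not in self.nodes:
            raise KeyError("both ends of a link must be nodes in the graph")
        self.links.add((source, sink))


def desktop_policy(graph: Graph, new_node: str) -> None:
    """Hypothetical policy: route a new playback stream to the first playback device."""
    if graph.nodes[new_node] != "playback-stream":
        return
    for name, kind in graph.nodes.items():
        if kind == "playback-device":
            graph.link(new_node, name)
            return


graph = Graph()
graph.add_node("speakers", "playback-device")
graph.add_node("music-player", "playback-stream")
desktop_policy(graph, "music-player")

# Routing one application to another is the same operation as routing to a device.
graph.add_node("recorder", "capture-stream")
graph.link("music-player", "recorder")
print(sorted(graph.links))
```

Swapping `desktop_policy` for an embedded-specific function changes the routing behaviour without touching `Graph`, which is the separation described above.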

A powerful idea in PulseAudio was rewinding — the ability to send out huge buffers to the device, but the flexibility to rewind that data when things changed (a new stream got added, or the stream moved, or the volume changed). While this is great for power saving, it is a significant amount of complexity in the code. In addition, with some filters in the data path, rewinding can break the algorithm by introducing non-linearity. PipeWire doesn’t support rewinds, and we will need to find a good way to manage latencies to account for low power use cases. One example is that we could have the session manager bump up the device latency when we know latency doesn’t matter (Android does this when the screen is off).

There are a bunch of other things that are in the process of being fleshed out, like being able to represent the hardware as a graph as well, to have a clearer idea of what is going on within a node. More updates as these things are more concrete.

The Way Forward

There is a good summary by Christian about our discussion about what is missing and how we can go about trying to make a smooth transition for PulseAudio users. There is, of course, a lot to do, and my ideal outcome is that we one day flip a switch and nobody knows that we have done so.

In practice, we’ll need to figure out how to make this transition seamless for most people, while folks with custom setups will need to be given a long runway and clear documentation to know what to do. It’s way too early to talk about this in more specifics, however.

Configuration

One key thing that PulseAudio does right (I know there are people who disagree!) is having a custom configuration that automagically works on a lot of Intel HDA-based systems. We’ve been wondering how to deal with this in PipeWire, and the path we think makes sense is to transition to ALSA UCM configuration. This is not as flexible as we need it to be, but I’d like to extend it for that purpose if possible. This would ideally also help consolidate the various methods of configuration being used by the various Linux userspaces.

To that end, I’ve started trying to get a UCM setup on my desktop that PulseAudio can use, and be functionally equivalent to what we do with our existing configuration. There are missing bits and bobs, and I’m currently focusing on the ones related to hardware volume control. I’ll write about this in the future as the effort expands out to other hardware.

Onwards and upwards

The transition to PipeWire is unlikely to be quick, completely painless, or free of contention. For those who are worried about the future, know that any switch is still a long way away. In the meantime, however, constructive feedback and comments are welcome.

A Late GUADEC 2017 Post
https://arunraghavan.net/2017/09/a-late-guadec-2017-post/
Mon, 04 Sep 2017 03:20:14 +0000

It’s been a little over a month since I got back from Manchester, and this post should’ve come out earlier but I’ve been swamped.

The conference was absolutely lovely, and the organisation was 110% on point (serious kudos, I know first hand how hard that is). Others on Planet GNOME have written extensively about the talks, the social events, and everything in between that made it a great experience. What I would like to write about is why this year’s GUADEC was special to me.

GNOME turning 20 years old is obviously a large milestone, and one of the main reasons I wanted to make sure I was at Manchester this year. There were many occasions to take stock of how far we had come, where we are, and most importantly, to reaffirm who we are, and why we do what we do.

And all of this made me think of my own history with GNOME. In 2002/2003, Nat and Miguel came down to Bangalore to talk about some of the work they were doing. I know I wasn’t the only one who found their energy infectious, and at Linux Bangalore 2003, they got on stage, just sat down, and started hacking up a GtkMozEmbed-based browser. The idea itself was fun, but what I took away — and I know I wasn’t the only one — is the sheer inclusive joy they shared in creating something and sharing that with their audience.

For all of us working on GNOME in whatever way we choose to contribute, there is the immediate gratification of shaping this project, as well as the larger ideological underpinning of making everyone’s experience talking to their computers better and free-er.

But I think it is also important to remember that all our efforts to make our community an inviting and inclusive space have a deep impact across the world. So much so that complete strangers from around the world are able to feel a sense of belonging to something much larger than themselves.

I am excited about everything we will achieve in the next 20 years.

(thanks go out to the GNOME Foundation for helping me attend GUADEC this year)

Sponsored by GNOME!

Beamforming in PulseAudio
https://arunraghavan.net/2016/06/beamforming-in-pulseaudio/
Wed, 29 Jun 2016 05:22:12 +0000

In case you missed it, we got PulseAudio 9.0 out the door, with the echo cancellation improvements that I wrote about. Now is probably a good time for me to make good on my promise to expand upon the subject of beamforming.

As with the last post, I’d like to shout out to the wonderful folks at Aldebaran Robotics who made this work possible!

Beamforming

Beamforming as a concept is used in various aspects of signal processing including radio waves, but I’m going to be talking about it only as applied to audio. The basic idea is that if you have a number of microphones (a mic array) in some known arrangement, it is possible to “point” or steer the array in a particular direction, so sounds coming from that direction are made louder, while sounds from other directions are rendered softer (attenuated).

Practically speaking, it should be easy to see the value of this on a laptop, for example, where you might want to focus a mic array to point in front of the laptop, where the user probably is, and suppress sounds that might be coming from other locations. You can see an example of this in the webcam below. Notice the grilles on either side of the camera — there is a microphone behind each of these.

Webcam with 2 mics

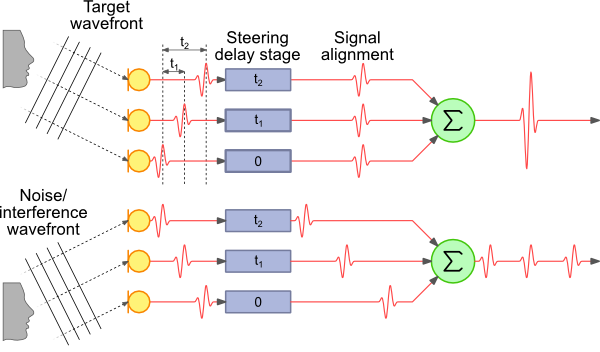

This raises the question of how this effect is achieved. The simplest approach is called “delay-sum beamforming”. The key idea in this approach is that if we have an array of microphones that we want to steer at a particular angle, the sound we want to steer at will reach each microphone at a different time. This is illustrated below. The image is taken from this great article describing the principles and math in a lot more detail.

Delay-sum beamforming

In this figure, you can see that the sound from the source we want to listen to reaches the top-most microphone slightly before the next one, which in turn captures the audio slightly before the bottom-most microphone. If we know the distance between the microphones and the angle to which we want to steer the array, we can calculate the additional distance the sound has to travel to each microphone.

The speed of sound in air is roughly 340 m/s, and thus we can also calculate how much of a delay occurs between the same sound reaching each microphone. The signal at the first two microphones is delayed using this information, so that we can line up the signal from all three. Then we take the sum of the signal from all three (actually the average, but that’s not too important).

The signal from the direction we’re pointing in is going to be strongly correlated, so it will turn out loud and clear. Signals from other directions will end up being attenuated because they will only occur in one of the mics at a given point in time when we’re summing the signals — look at the noise wavefront in the illustration above as an example.
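The delay calculation and the summing step can be sketched as follows. This is a minimal illustration that assumes a linear array, a far-field (plane wave) source, and whole-sample delays. The function names are hypothetical and this is not the code in the PulseAudio module.

```python
import math

SPEED_OF_SOUND = 340.0  # m/s, the rough figure used in the text


def steering_delays(mic_positions_m, angle_deg):
    """Delay (in seconds) to apply to each mic to line up a wave from angle_deg.

    mic_positions_m: positions along the array axis, in metres.
    angle_deg: steering angle measured from straight ahead.
    """
    sin_a = math.sin(math.radians(angle_deg))
    # Arrival time of the wavefront at each mic, relative to the array origin.
    arrivals = [-p * sin_a / SPEED_OF_SOUND for p in mic_positions_m]
    latest = max(arrivals)
    # Delay the mics that hear the sound early so they match the latest one.
    return [latest - t for t in arrivals]


def delay_and_sum(channels, delays_samples):
    """Average the channels after delaying each by a whole number of samples."""
    n = len(channels[0])
    out = [0.0] * n
    for samples, delay in zip(channels, delays_samples):
        for i in range(delay, n):
            out[i] += samples[i - delay] / len(channels)
    return out


# Two mics 4 cm apart, steered 60 degrees off-centre.
for d in steering_delays([-0.02, 0.02], 60.0):
    print(f"{d * 1e6:.1f} us")
```

For that two-microphone example the mic nearer the source needs roughly 102 µs of delay, which is not a whole number of samples at common sample rates.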

Implementation

(this section is a bit more technical than the rest of the article, feel free to skim through or skip ahead to the next section if it’s not your cup of tea!)

The devil is, of course, in the details. Given the microphone geometry and steering direction, calculating the expected delays is relatively easy. We capture audio at a fixed sample rate; let’s assume this is 32000 samples per second, or 32 kHz. That translates to one sample every 31.25 µs. So if we want to delay our signal by 125 µs, we can just add a buffer of 4 samples (4 × 31.25 = 125). Sound travels about 4.25 cm in that time, so this is not an unrealistic example.

Now, instead, assume the signal needs to be delayed by 80 µs. This translates to 2.56 samples. We’re working in the digital domain — the mic has already converted the analog vibrations in the air into digital samples that have been provided to the CPU. This means that our buffer delay can either be 2 samples or 3, not 2.56. We need another way to add a fractional delay (else we’ll end up with errors in the sum).
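The arithmetic in the two examples above can be checked with a few lines of code. The function name is made up for illustration.

```python
SAMPLE_RATE_HZ = 32000


def delay_in_samples(delay_us: float, rate_hz: int = SAMPLE_RATE_HZ) -> tuple[int, float]:
    """Split a delay in microseconds into whole samples and a fractional remainder."""
    samples = delay_us * rate_hz / 1e6
    whole = int(samples)
    return whole, samples - whole


for delay_us in (125, 80):
    whole, frac = delay_in_samples(delay_us)
    print(f"{delay_us} us = {whole} whole samples + {frac:.2f} of a sample")
```

The whole part can be handled by a plain buffer, while the fractional part is what needs a filter.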

There is a fair amount of academic work describing methods to perform filtering on a sample to provide a fractional delay. One common way is to apply an FIR filter. However, to keep things simple, the method I chose was the Thiran approximation: the literature suggests that it performs the task reasonably well, and has the advantage of not having to spend a whole lot of CPU cycles first transforming to the frequency domain (which an FIR filter requires) (edit: converting to the frequency domain isn’t necessary, thanks to the folks who pointed this out).
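For readers who want to see what a Thiran filter looks like, here is a minimal sketch of the first-order case, which is an all-pass filter with a single coefficient a = (1 − D) / (1 + D) for a delay of D samples. This is a textbook form written for illustration, with hypothetical function names. It is not the code in the PulseAudio module, and whether the module uses a first-order filter is not stated in this post.

```python
def thiran1_coefficient(delay: float) -> float:
    """Coefficient of a first-order Thiran all-pass for a delay of `delay` samples."""
    if delay <= 0:
        raise ValueError("delay must be positive for a stable filter")
    return (1.0 - delay) / (1.0 + delay)


def fractional_delay(samples, whole: int, frac: float):
    """Delay `samples` by roughly whole + frac samples.

    The whole part is a plain buffer of zeros; the fractional part uses
    y[n] = a*x[n] + x[n-1] - a*y[n-1].
    """
    a = thiran1_coefficient(frac)
    buffered = [0.0] * whole + list(samples)
    out = []
    x_prev = y_prev = 0.0
    for x in buffered:
        y = a * x + x_prev - a * y_prev
        out.append(y)
        x_prev, y_prev = x, y
    return out


# The 2.56-sample example from above: 2 buffered samples plus a 0.56-sample filter.
print(fractional_delay([1.0, 1.0, 1.0, 1.0], whole=2, frac=0.56))
```

The filter is recursive (IIR), so the delay it produces is only approximately constant across frequencies, and it is most accurate at low frequencies.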

I’ve implemented all of this as a separate module in PulseAudio as a beamformer filter module.

Now it’s time for a confession. I’m a plumber, not a DSP ninja. My delay-sum beamformer doesn’t do a very good job. I suspect part of it is the limitation of the delay-sum approach, partly the use of an IIR filter (which the Thiran approximation is), and it’s also entirely possible there is a bug in my fractional delay implementation. Reviews and suggestions are welcome!

A Better Implementation

The astute reader has, by now, realised that we are already doing a bunch of processing on incoming audio during voice calls. I’ve written in the previous article about how the webrtc-audio-processing engine provides echo cancellation, automatic gain control, voice activity detection, and a bunch of other features.

Another feature that the library provides is — you guessed it — beamforming. The engineers at Google (who clearly are DSP ninjas) have a pretty good beamformer implementation, and this is also available via module-echo-cancel. You do need to configure the microphone geometry yourself (which means you have to manually load the module at the moment). Details are on our wiki (thanks to Tanu for that!).

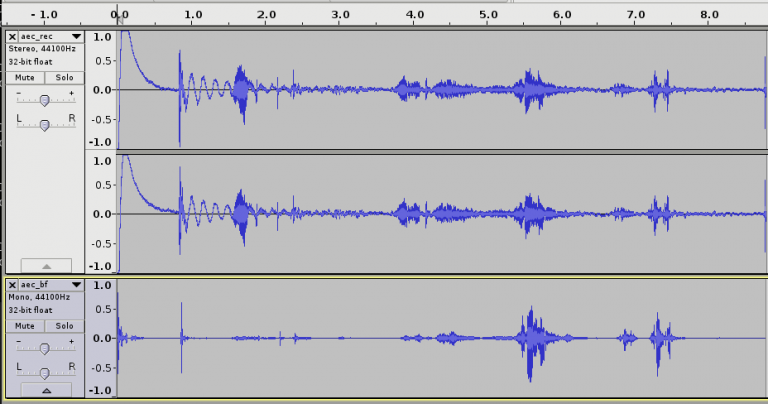

How well does this work? Let me show you. The image below is me talking to my laptop, which has two microphones about 4cm apart, on either side of the webcam, above the screen. First I move to the right of the laptop (about 60°, assuming straight ahead is 0°). Then I move to the left by about the same amount (the second speech spike). And finally I speak from the center (a couple of times, since I get distracted by my phone).

The upper section represents the microphone input — you’ll see two channels, one corresponding to each mic. The bottom part is the processed version, with echo cancellation, gain control, noise suppression, etc. and beamforming.

WebRTC beamforming

You can also listen to the actual recordings …

https://arunraghavan.net/wp-content/uploads/aec_rec.mp3

… and the processed output.

https://arunraghavan.net/wp-content/uploads/aec_bf.mp3

Feels like black magic, doesn’t it?

Finishing thoughts

The webrtc-audio-processing-based beamforming is already available for you to use. The downside is that you need to load the module manually, rather than have this automatically plugged in when needed (because we don’t have a way to store and retrieve the mic geometry). At some point, I would really like to implement a configuration framework within PulseAudio to allow users to set configuration from some external UI and have that be picked up as needed.

Nicolas Dufresne has done some work to wrap the webrtc-audio-processing library functionality in a GStreamer element (and this is in master now). Adding support for beamforming to the element would also be good to have.

The module-beamformer bits should be a good starting point for folks who want to wrap their own beamforming library and have it used in PulseAudio. Feel free to get in touch with me if you need help with that.

Audio Devices and Configuration
https://arunraghavan.net/2016/01/audio-devices-and-configuration/
Tue, 12 Jan 2016 09:54:58 +0000

This one’s going to be a bit of a long post. You might want to grab a cup of coffee before you jump in!

Over the last few years, I’ve spent some time getting PulseAudio up and running on a few Android-based phones. There was the initial Galaxy Nexus port, a proof-of-concept port of Firefox OS (git) to use PulseAudio instead of AudioFlinger on a Nexus 4, and most recently, a port of Firefox OS to use PulseAudio on the first gen Moto G and last year’s Sony Xperia Z3 Compact (git).

The process so far has been largely manual and painstaking, and I’ve been trying to make that easier. But before I talk about the how of that, let’s see how all this works in the first place.

The Problem

If you have managed to get by without having to dig into this dark pit, the porting process can be something of an exercise in masochism. More so if you’re in my shoes and don’t have access to any of the documentation for the audio hardware. Hardware vendors and OEMs usually don’t share these specifications unless under NDA, which is hard to set up as someone just hacking on this stuff as an experiment or for fun in their spare time.

Broadly, the task involves looking at how the device is set up on Android, and then replicating that process using the standard ALSA library, which is what PulseAudio uses (this works because both the Android and generic Linux userspace talk to the same ALSA-based kernel audio drivers).

Android’s configuration

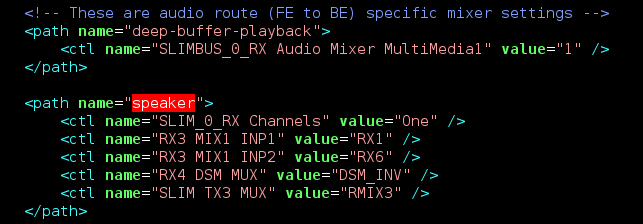

First, you look at the Android audio HAL code for the device you’re porting, and the corresponding mixer paths XML configuration. Between the two of these, you get a description of how you can configure the hardware to play back audio in various use cases (music, tones, voice calls), and how to route the audio (headphones, headset, speakers, Bluetooth).

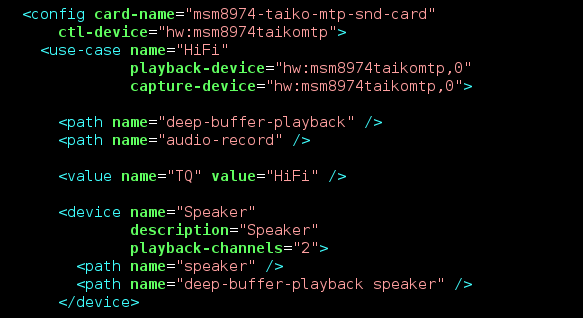

Snippet from mixer paths XML

In this example, there is one path that describes how to set up the hardware for “deep buffer playback” (used for music, where you can buffer a bunch of data and let the CPU go to sleep). The next path, “speaker”, tells us how to set up the routing to play audio out of the speaker.

These strings are not well-defined, so different hardware uses different path names and combinations to set up the hardware. The XML configuration also does not tell us a number of things, such as what format the hardware supports or what ALSA device to use. All of this information is embedded in the audio HAL code.
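To make the shape of this file concrete, here is a small sketch that reads a mixer paths document into a dictionary. The `<mixer>`, `<path>` and `<ctl>` element names follow the usual layout of these files, but the control names and values in the embedded sample are invented for illustration and do not come from any real device.

```python
import xml.etree.ElementTree as ET

# Hypothetical excerpt: control names and values are made up for illustration.
MIXER_PATHS_XML = """
<mixer>
  <path name="deep-buffer-playback">
    <ctl name="Example Playback Mixer" value="1" />
  </path>
  <path name="speaker">
    <ctl name="Example Speaker Switch" value="On" />
    <ctl name="Example Speaker Volume" value="80" />
  </path>
</mixer>
"""


def parse_paths(xml_text: str) -> dict[str, list[tuple[str, str]]]:
    """Map each path name to its list of (control name, value) pairs."""
    root = ET.fromstring(xml_text)
    paths = {}
    for path in root.findall("path"):
        controls = [(ctl.get("name"), ctl.get("value")) for ctl in path.findall("ctl")]
        paths[path.get("name")] = controls
    return paths


for name, controls in parse_paths(MIXER_PATHS_XML).items():
    print(name, controls)
```

This sketch ignores details that real files can contain, such as default control values outside any path and paths that reference other paths.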

Configuring with ALSA

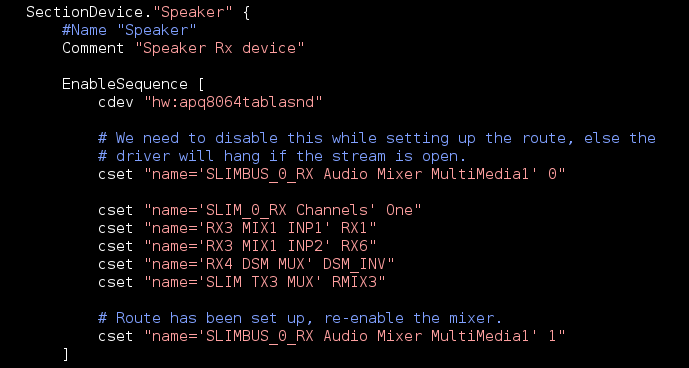

Next, you need to translate this configuration into something PulseAudio will understand. The preferred method for this is ALSA’s UCM, which describes how to set up the hardware for each use case it supports, and how to configure the routing in each of those use cases.

Snippet from UCM

This is a snippet from the “hi-fi” use case, which is the UCM use case roughly corresponding to “deep buffer playback” in the previous section. Within that, we’re looking at the “speaker device” and you can see the same mixer controls as in the previous XML file are toggled. This file does have some additional information — for example, this snippet specifies what ALSA device should be used to toggle mixer controls (“hw:apq8064tablasnd”).

Doing the Porting

Typically, I start with the “hi-fi” use case, which is what you would normally use for music playback (and could likely use for tones and such as well). Getting the “phone” use case working is usually much more painful. In addition to setting up the audio hardware similar to the “hi-fi” use case, it involves talking to the modem, for which there isn’t a standard method across Android devices. To complicate things, the modem firmware can be extremely sensitive to the order/timing of setup, often with no means of debugging (a.k.a. fun times!).

When there is a new Android version, I need to look at all the changes in the HAL and the XML file, redo the translation to UCM, and then test everything again.

This is clearly repetitive work, and I know I’m not the only one having to do it. Hardware vendors often face the same challenge when supporting the same devices on multiple platforms — Android’s HAL usually uses the XML config I showed above, ChromeOS’s CrAS and PulseAudio use ALSA UCM, Intel uses the parameter framework with its own XML format.

Introducing xml2ucm

With this background, when I started looking at the Z3 Compact port last year, I decided to write a tool to make this and future ports easier. That tool is creatively named xml2ucm.

As we saw, the ALSA UCM configuration contains more information than the XML file. It contains a description of the playback and mixer devices to use, as well as some information about configuration (channel count, primarily). This information is usually hardcoded in the audio HAL on Android.

To deal with this, I introduced a small configuration file that provides the additional information required to perform the translation. The idea is that you write this configuration once, and can more or less perform the translation automatically. If the HAL or the XML file changes, it should be easy to implement that as a change in the configuration and just regenerate the UCM files.

Example xml2ucm configuration

This example shows how the Android XML like in the snippet above can be converted to the corresponding UCM configuration. Once I had the code done, porting all the hi-fi bits on the Xperia Z3 Compact took about 30 minutes. The results of this are available as a more complete example: the mixer paths XML, the config XML, and the generated UCM.
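The core of such a translation can be sketched as a function that turns a list of mixer controls into a UCM device section. This is a toy version written for illustration and is not how xml2ucm itself works. The function name is hypothetical, the control name in the usage example is invented, and turning every control to "0" in the disable sequence is a simplifying assumption. The mixer device name is the one mentioned in the UCM snippet above.

```python
def ucm_device(name: str, card: str, controls: list[tuple[str, str]],
               off_value: str = "0") -> str:
    """Render a UCM SectionDevice block for the given (control, value) pairs."""

    def sequence(values):
        lines = [f'\t\tcdev "{card}"']
        lines += [f"\t\tcset \"name='{ctl}' {val}\"" for ctl, val in values]
        return "\n".join(lines)

    enable = sequence(controls)
    # Assumption: disabling a device means setting each control to off_value.
    disable = sequence([(ctl, off_value) for ctl, _ in controls])
    return (
        f'SectionDevice."{name}" {{\n'
        f"\tEnableSequence [\n{enable}\n\t]\n"
        f"\tDisableSequence [\n{disable}\n\t]\n"
        "}\n"
    )


print(ucm_device("Speaker", "hw:apq8064tablasnd", [("Example Speaker Switch", "1")]))
```

The extra arguments (`card` here) stand in for the information that the Android XML lacks and that the small configuration file supplies.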

What’s next

One big missing piece here is voice calls. I spent some time trying to get voice calls working on the two phones I had available to me (the Moto G and the Z3 Compact), but this is quite challenging without access to hardware documentation and I ran out of spare time to devote to the problem. It would be nice to have a complete working example for a device, though.

There are other configuration mechanisms out there — notably Intel’s parameter framework. It would be interesting to add support for that as well. Ideally, the code could be extended to build a complete model of the audio routing/configuration, and generate any of the configuration that is supported.

I’d like this tool to be generally useful, so feel free to post comments and suggestions on GitHub or just get in touch.

p.s. Thanks go out to Abhinav for all the Haskell help!

A Quick Update
https://arunraghavan.net/2016/01/a-quick-update/
Mon, 04 Jan 2016 09:58:53 +0000

Happy 2016 everyone!

While I did mention a while back (almost two years ago, wow) that I was taking a break, I realised recently that I hadn’t posted an update from when I started again.

For the last year and a half, I’ve been providing freelance consulting around PulseAudio, GStreamer, and various other directly and tangentially related projects. There’s a brief list of the kind of work I’ve been involved in.

If you’re looking for help with PulseAudio, GStreamer, multimedia middleware or anything else you might’ve come across on this blog, do get in touch!

PulseAudio 7.1 is out
https://arunraghavan.net/2015/10/pulseaudio-7-1-is-out/
Fri, 30 Oct 2015 13:31:51 +0000

We just rolled out a minor bugfix release. Quick changelog:

- Fix a crasher when using srbchannel

- Fix a build system typo that caused symlinks to turn up in /

- Make Xonar cards work better

- Other minor bug fixes and improvements

More details on the mailing list.

Thanks to everyone who contributed with bug reports and testing. What isn’t generally visible is that a lot of this happens behind the scenes downstream on distribution bug trackers, IRC, and so forth.

PSA: Breaking webrtc-audio-processing API
https://arunraghavan.net/2015/10/psa-breaking-webrtc-audio-processing-api/
Wed, 28 Oct 2015 17:35:41 +0000

I know it’s been ages, but I am now working on updating the webrtc-audio-processing library. You might remember this as the code that we split off from the webrtc.org code to use in the PulseAudio echo cancellation module.

This is basically just the AudioProcessing module, bundled as a standalone library so that we can use the fantastic AEC, AGC, and noise suppression implementation from that code base. For packaging simplicity, I made a copy of the necessary code, and wrote an autotools-based build system around that.

Now since I last copied the code, the library API has changed a bit — nothing drastic, just a few minor cleanups and removed API. This wouldn’t normally be a big deal since this code isn’t actually published as external API — it’s mostly embedded in the Chromium and Firefox trees, probably other projects too.

Since we are exposing a copy of this code as a standalone library, this means that there are two options — we could (a) just break the API, and all dependent code needs to be updated to be able to use the new version, or (b) write a small wrapper to try to maintain backwards compatibility.

I’m inclined to just break API and release a new version of the library which is not backwards compatible. My rationale for this is that I’d like to keep the code as close to what is upstream as possible, and over time it could become painful to maintain a bunch of backwards-compatibility code.

A nicer solution would be to work with upstream to make it possible to build the AudioProcessing module as a standalone library. While the folks upstream seemed amenable to the idea when this came up a few years ago, nobody has stepped up to actually do the work for this. In the mean time, a number of interesting features have been added to the module, and it would be good to pull this in to use in PulseAudio and any other projects using this code (more about this in a follow-up post).

So if you’re using webrtc-audio-processing, be warned that the next release will probably break API, and you’ll need to update your code. I’ll try to publish a quick update guide when releasing the code, but if you want to look at the current API, take a look at the current audio_processing.h.

p.s.: If you do use webrtc-audio-processing as a dependency, I’d love to hear about it. As far as I know, PulseAudio is the only user of this library at the moment.

]]> https://arunraghavan.net/2015/10/psa-breaking-webrtc-audio-processing-api/feed/ 3 1586 GUADEC 2015 https://arunraghavan.net/2015/08/guadec-2015/https://arunraghavan.net/2015/08/guadec-2015/#comments Fri, 21 Aug 2015 06:21:27 +0000 http://arunraghavan.net/?p=1582 This one’s a bit late, for reasons that’ll be clear enough later in this post. I had the happy opportunity to go to GUADEC in Gothenburg this year (after missing the last two, unfortunately). It was a great, well-organised event, and I felt super-charged again, meeting all the people making GNOME better every day.

GUADEC picnic @ Gothenburg

I presented a status update of what we’ve been up to in the PulseAudio world in the past few years. Amazingly, all the videos are up already, so you can catch up with anything that you might have missed here.

We also had a meeting of PulseAudio developers, at which a number of interesting topics of discussion came up (I’ll try to summarise my notes in a separate post).

A bunch of other interesting discussions happened in the hallways, and I’ll write about that if my investigations take me some place interesting.

Now the downside — I ended up missing the BoF part of GUADEC, and all of the GStreamer hackfest in Montpellier after. As it happens, I contracted dengue and I’m still recovering from this. Fortunately it was the lesser (non-haemorrhagic) version without any complications, so now it’s just a matter of resting till I’ve recuperated completely.

Nevertheless, the first part of the trip was great, and I’d like to thank the GNOME Foundation for sponsoring my travel and stay, without which I would have missed out on all the GUADEC fun this year.

Sponsored by GNOME!

]]> https://arunraghavan.net/2015/08/guadec-2015/feed/ 2 1582 GNOME Asia 2015 https://arunraghavan.net/2015/05/gnome-asia-2015/https://arunraghavan.net/2015/05/gnome-asia-2015/#comments Wed, 20 May 2015 08:08:10 +0000 http://arunraghavan.net/?p=1572 I was in Depok, Indonesia last week to speak at GNOME Asia 2015. It was a great experience — the organisers did a fantastic job and as a bonus, the venue was incredibly pretty!

View from our room

My talk was about the GNOME audio stack, and my original intention was to talk a bit about the APIs, how to use them, and how to choose which to use. After the first day, though, I felt like a more high-level view of the pieces would be more useful to the audience, so I adjusted the focus a bit. My slides are up here.

Nirbheek and I then spent a couple of days going down to Yogyakarta to cycle around, visit some temples, and sip some fine hipster coffee.

All in all, it was a week well spent. I’d like to thank the GNOME Foundation for helping me get to the conference!

Sponsored by GNOME!

]]> https://arunraghavan.net/2015/05/gnome-asia-2015/feed/ 2 1572 Notes from the PulseAudio Mini Summit 2014 https://arunraghavan.net/2014/11/notes-from-the-pulseaudio-mini-summit-2014/https://arunraghavan.net/2014/11/notes-from-the-pulseaudio-mini-summit-2014/#comments Tue, 04 Nov 2014 16:49:23 +0000 http://arunraghavan.net/?p=1541 The third week of October was quite action-packed, with a whole bunch of conferences happening in Düsseldorf. The Linux audio developer community as well as the PulseAudio developers each had a whole day of discussions related to a wide range of topics. I’ll be summarising the events of the PulseAudio mini summit day here. The discussion was split into two parts, the first half of the day with just the current core developers and the latter half with members of the community participating as well.

I’d like to thank the Linux Foundation for sparing us a room to carry out these discussions — it’s fantastic that we are able to colocate such meetings with a bunch of other conferences, making it much easier than it would otherwise be for all of us to converge to a single place, hash out ideas, and generally have a good time in real life as well!

Happy faces — incontrovertible proof that everyone loves PulseAudio!

With a whole day of discussions, this is clearly going to be a long post, so you might want to grab a coffee now. :)

Release plan

We have a few blockers for 6.0, and some pending patches to merge (mainly HSP support). Once this is done, we can proceed to our standard freeze → release candidate → stable process.

Build simplification for BlueZ HFP/HSP backends

For simplifying packaging, it would be nice to be able to build all the available BlueZ module backends in one shot. There wasn’t much opposition to this idea, and David (Henningsson) said he might look at this. (as I update this before posting, he already has)

srbchannel plans

We briefly discussed plans around the recently introduced shared ringbuffer channel code for communication between PulseAudio clients and the server. We talked about the performance benefits, and future plans such as direct communication between the client and server-side I/O threads.

Routing framework patches

Tanu (Kaskinen) has a long-standing set of patches to add a generic routing framework to PulseAudio, developed notably by Jaska Uimonen, Janos Kovacs, and other members of the Tizen IVI team. This work adds a set of new concepts that we’ve not been entirely comfortable merging into the core. To unblock these patches, it was agreed that doing this work in a module and using a protocol extension API would be more beneficial. (Tanu later did a demo of the CLI extensions that have been made for the new routing concepts)

module-device-manager

As a consequence of the discussion around the routing framework, David mentioned that he’d like to take forward Colin’s priority list work in the mean time. Based on our discussions, it looked like it would be possible to extend module-device-manager to make it port aware and get the kind of functionality we want (the ability to have a priority-order list of devices). David was to look into this.
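As a rough illustration of the priority-list idea (this is not how module-device-manager is implemented), the sketch below walks a user-ordered list of device/port pairs and picks the first one that is currently available. The device and port names are made up for the example.

```python
from typing import Optional, Sequence, Set, Tuple

# A routing target is a (device name, port name) pair; both are hypothetical labels.
Target = Tuple[str, str]


def pick_target(priority: Sequence[Target], available: Set[Target]) -> Optional[Target]:
    """Return the highest-priority target that is currently available, if any."""
    for target in priority:
        if target in available:
            return target
    return None


# The user's preferred order, most preferred first.
priority = [
    ("usb-headset", "headset-output"),
    ("builtin", "headphones"),
    ("builtin", "speaker"),
]

# The USB headset is unplugged, so the built-in headphones win.
available = {("builtin", "headphones"), ("builtin", "speaker")}
print(pick_target(priority, available))
```

Making the list port aware simply means the entries are (device, port) pairs rather than devices alone, so that plugging in headphones can change the outcome even though the card stays the same.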

Module writing infrastructure

Relatedly, we discussed the need to export the PA internal headers to allow externally built modules. We agreed that this would be okay to have if it was made abundantly clear that this API would have absolutely no stability guarantees, and is mostly meant to simplify packaging for specialised distributions.

Which led us to the other bit of infrastructure required to write modules more easily — making our protocol extension mechanism more generic. Currently, we have a static list of protocol extensions in our core. Changing this requires exposing our pa_tagstruct structure as public API, which we haven’t done. If we don’t want to do that, then we would expose a generic “throw this blob across the protocol” mechanism and leave it to the module/library to take care of marshalling/unmarshalling.
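To make the “throw this blob across the protocol” option a bit more concrete, here is a minimal sketch in which the core only frames an extension name and an opaque payload, and the module does its own marshalling. The wire layout and all names here are invented for illustration; this is not PulseAudio’s native protocol or the pa_tagstruct format.

```python
import struct
from typing import Tuple

# Hypothetical frame header: name length (uint16) and payload length (uint32),
# both in network byte order.
_HEADER = "!HI"


def pack_blob(extension: str, payload: bytes) -> bytes:
    """Frame an opaque payload for a named extension."""
    name = extension.encode("utf-8")
    return struct.pack(_HEADER, len(name), len(payload)) + name + payload


def unpack_blob(frame: bytes) -> Tuple[str, bytes]:
    """Inverse of pack_blob; the payload is returned untouched."""
    name_len, payload_len = struct.unpack_from(_HEADER, frame, 0)
    offset = struct.calcsize(_HEADER)
    name = frame[offset:offset + name_len].decode("utf-8")
    payload = frame[offset + name_len:offset + name_len + payload_len]
    return name, payload


# Module side: the module (not the core) decides what the payload means.
payload = struct.pack("!II", 7, 3)  # e.g. a made-up (group id, stream count) pair
frame = pack_blob("example-ext", payload)

name, body = unpack_blob(frame)
group_id, stream_count = struct.unpack("!II", body)
print(name, group_id, stream_count)
```

The trade-off is the one described above: nothing about the core’s internal serialisation has to become public API, at the cost of every module carrying its own marshalling code.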

Resampler quality evaluation

Alexander shared a number of his findings about resampler quality on PulseAudio, vs. those found on Windows and Mac OS. Some questions were asked about other parameters, such as relative CPU consumption, etc. There was also some discussion on how to try to carry this work to a conclusion, but no clear answer emerged.

It was also agreed on the basis of this work that support for libsamplerate and ffmpeg could be phased out after deprecation.

Addition of a “hi-fi” mode

The discussion came around to the possibility of having a mode where (if the hardware supports it), PulseAudio just plays out samples without resampling, conversion, etc. This has been brought up in the past for “audiophile” use cases where the card supports 88.2/96 kHz and higher sample rates.

No objections were raised to having such a mode — I’d like to take this up at some point of time.
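The sample-rate half of such a mode could be decided roughly as follows. This is only a sketch under simple assumptions: the function name, the fallback rate, and the idea of a flat set of supported rates are all hypothetical, and sample format and channel map conversion are ignored entirely.

```python
from typing import Set, Tuple


def choose_device_rate(stream_rate: int,
                       supported_rates: Set[int],
                       default_rate: int = 48000) -> Tuple[int, bool]:
    """Return (device_rate, needs_resampling) for a single stream.

    If the hardware can run at the stream's own rate, use it and skip
    resampling; otherwise fall back to the default rate and resample.
    """
    if stream_rate in supported_rates:
        return stream_rate, False
    return default_rate, True


card_rates = {44100, 48000, 88200, 96000}
print(choose_device_rate(96000, card_rates))  # hardware can play this directly
print(choose_device_rate(22050, card_rates))  # needs resampling to the default rate
```

With more than one stream playing at once the decision is less clear-cut, since the device can only run at one rate at a time.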

LFE channel module

Alexander has some code for filtering low frequencies for the LFE channel, currently as a virtual sink, that could eventually be integrated into the core.

rtkit

David raised a question about the current status of rtkit and whether it needs to exist, and if so, where. Lennart brought up the fact that rtkit currently does not work on systemd+cgroups based setups (I don’t seem to have why in my notes, and I don’t recall off the top of my head).

The conclusion of the discussion was that some alternate policy method for deciding RT privileges, possibly within systemd, would be needed, but for now rtkit should be used (and fixed!)

kdbus/memfd

Discussions came up about the possibility of using kdbus and/or memfd for the PulseAudio transport. This is interesting to me, but there doesn’t seem to be an immediately clear benefit over our SHM mechanism in terms of performance, and some work to evaluate how this could be used, and what the benefit would be, needs to be done.

ALSA controls spanning multiple outputs

David has now submitted patches for controls that affect multiple outputs (such as “Headphone+LO”). These are currently being discussed.

Audio groups

Tanu would like to add code to support collecting audio streams into “audio groups” to apply collective policy to them. I am supposed to help review this, and Colin mentioned that module-stream-restore already uses similar concepts.

Stream and device objects

Tanu proposed the addition of new objects to represent streams and devices. There didn’t seem to be consensus on adding these, but there was agreement on a clear need to consolidate common code from the sink-input/source-output and sink/source implementations. The idea was that having a common parent object for each pair might be one way to do this. I volunteered to help with this if someone’s taking it up.

Filter sinks

Alexander brought up the need for a filter API in PulseAudio, and this is something I really would like to have. I am supposed to sketch out an API (though implementing this is non-trivial and will likely take time).

Dynamic PCM for HDMI

David plans to see if we can use profile availability to help determine when an HDMI device is actually available.

Browser volumes

The usability of flat-volumes for browser use cases (where the volume of streams can be controlled programmatically) was discussed, and my patch to allow optional opt-out by a stream from participating in flat volumes came up. Tanu and I are to continue the discussion already on the mailing list to come up with a solution for this.

Handling bad rewinding code

Alexander raised concerns about the quality of rewinding code in some of our filter modules. The agreement was that we needed better documentation on handling rewinds, including how to explicitly not allow rewinds in a sink. The example virtual sink/source code also needs to be adjusted accordingly.

BlueZ native backend

Wim Taymans’ work on adding back HSP support to PulseAudio came up. Since the meeting, I’ve reviewed and merged this code with the change we want. Speaking to Luiz Augusto von Dentz from the BlueZ side, something we should also be able to add back is for PulseAudio to act as an HSP headset (using the same approach as for HSP gateway support).

Containers and PA

Takashi Iwai raised a question about what a good way to run PA in a container was. The suggestion was that a tunnel sink would likely be the best approach.

Common ALSA configuration

Based on discussion from the previous day at the Linux Audio mini-summit, I’m supposed to look at the possibility of consolidating the various mixer configuration formats we currently have to deal with (primarily UCM and its implementations, and Android’s XML format).

(thanks to Tanu, David and Peter for reviewing this)

]]> https://arunraghavan.net/2014/11/notes-from-the-pulseaudio-mini-summit-2014/feed/ 17 1541 Four years https://arunraghavan.net/2014/02/four-years/https://arunraghavan.net/2014/02/four-years/#comments Sun, 02 Feb 2014 14:34:50 +0000 http://arunraghavan.net/?p=1458 Four years and what seems like a lifetime ago, I jumped aboard the ship Collabora Multimedia, and set sail for adventure and lands unknown. We sailed through strange new seas, to exotic lands, defeated many monsters, and, I feel, had some positive impact on the world around us. Last Friday, on my request, I got dropped back at the shore.

I’ve had an insanely fun time at Collabora, working with absurdly talented and dedicated people. Nevertheless, I’ve come to the point where I feel like I need something of a break. I’m not sure what’s next, other than a month or two of rest and relaxation — reading, cycling, travel, and catching up with some of the things I’ve been promising to do if only I had more time. Yes, that includes PulseAudio and GStreamer hacking as well. :-)

And there’ll be more updates and blog posts too!

]]> https://arunraghavan.net/2014/02/four-years/feed/ 6 1458 PulseAudio 4.0 and Skype https://arunraghavan.net/2013/08/pulseaudio-4-0-and-skype/https://arunraghavan.net/2013/08/pulseaudio-4-0-and-skype/#comments Fri, 02 Aug 2013 16:10:26 +0000 http://arunraghavan.net/?p=1442 This is a public service announcement for packagers and users of Skype and PulseAudio 4.0.

In PulseAudio 4.0, we added some code to allow us to deal with automatic latency adjustment more gracefully, particularly for latency requests under ~80 ms. This exposed a bug in Skype that breaks audio in interesting ways (no sound, choppy sound, playback happens faster than it should).

We’ve spoken to the Skype developers about this problem and they have been investigating it. In the mean time, we suggest that users and packagers work around the problem as described below.

If you are packaging Skype for your distribution, you need to change the Exec line in your Skype .desktop file as follows:

Exec=env PULSE_LATENCY_MSEC=60 skype %U

If you are a user, and your distribution doesn’t already carry this fix (as of about a week ago, Ubuntu does, and as of ~1 hour from now, Gentoo will), you need to launch Skype from the command line as follows:

$ PULSE_LATENCY_MSEC=60 skype

If you’re not sure if you’re hit by this bug, you’re probably not. :-)
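For packagers who would rather script the .desktop change than edit the file by hand, something along these lines would do it. This is a minimal sketch: the helper name is made up, and it only handles plain `Exec=` lines in the file contents passed to it.

```python
def add_latency_env(desktop_text: str, latency_msec: int = 60) -> str:
    """Prefix every Exec= line with `env PULSE_LATENCY_MSEC=<n>`.

    Lines that already set PULSE_LATENCY_MSEC are left alone, so running
    this twice does not stack prefixes.
    """
    prefix = f"env PULSE_LATENCY_MSEC={latency_msec} "
    out = []
    for line in desktop_text.splitlines():
        if line.startswith("Exec=") and "PULSE_LATENCY_MSEC=" not in line:
            line = "Exec=" + prefix + line[len("Exec="):]
        out.append(line)
    return "\n".join(out) + "\n"


original = "[Desktop Entry]\nName=Skype\nExec=skype %U\n"
print(add_latency_env(original), end="")
```

The function works on text rather than on a path, so where the Skype .desktop file actually lives on a given distribution is left to the caller.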

]]> https://arunraghavan.net/2013/08/pulseaudio-4-0-and-skype/feed/ 52 1442 PulseAudio 4.0 and more https://arunraghavan.net/2013/06/pulseaudio-4-0-and-more/https://arunraghavan.net/2013/06/pulseaudio-4-0-and-more/#comments Tue, 04 Jun 2013 02:45:41 +0000 http://arunraghavan.net/?p=1433 And we’re back … PulseAudio 4.0 is out! There’s both a short and super-detailed changelog in the release notes. For the lazy, this release brings a bunch of Bluetooth stability updates, better low latency handling, performance improvements, and a whole lot more. :)

One interesting thing is that for this release, we kept a parallel next branch open while master was frozen for stabilising and releasing. As a result, we’re already well on our way to 5.0 with 52 commits since 4.0 already merged into master.

And finally, I’m excited to announce PulseAudio is going to be carrying out two great projects this summer, as part of the Google Summer of Code! We are going to have Alexander Couzens (lynxis) working on a rewrite of module-tunnel using libpulse, mentored by Tanu Kaskinen. In addition to this, Damir Jelić (poljar) will be working on improvements to resampling, mentored by Peter Meerwald.

That’s just some of the things to look forward to in coming months. I’ve got a few more things I’d like to write about, but I’ll save that for another post.

]]> https://arunraghavan.net/2013/06/pulseaudio-4-0-and-more/feed/ 1 1433 PulseAudio in GSoC 2013 https://arunraghavan.net/2013/04/pulseaudio-in-gsoc-2013/https://arunraghavan.net/2013/04/pulseaudio-in-gsoc-2013/#respond Thu, 11 Apr 2013 11:34:48 +0000 http://arunraghavan.net/?p=1426 That’s right: PulseAudio will be participating in the Google Summer of Code again this year! We had a great set of students and projects last year, and you’ve already seen some of their work in the last release.

There are some more details on how to get involved on the mailing list. We’re looking forward to having another set of smart and enthusiastic new contributors this year!

p.s.: Mentors and students from other organisations (GStreamer and BlueZ, for example), do feel free to get in touch with us if you have ideas for projects related to PulseAudio that overlap with those other projects.

]]> https://arunraghavan.net/2013/04/pulseaudio-in-gsoc-2013/feed/ 0 1426 PulseAudio 3.0 https://arunraghavan.net/2012/12/pulseaudio-3-0/https://arunraghavan.net/2012/12/pulseaudio-3-0/#comments Tue, 18 Dec 2012 07:57:46 +0000 http://arunraghavan.net/?p=1412 Yay, we just released PulseAudio 3.0! I’m not going to rehash the changelog that you can find in the release announcement as well as the longer release notes.

I would like to thank the 36 contributors over the last 6 months who have made this release what it is and continue to demonstrate what a vibrant community we have!

]]> https://arunraghavan.net/2012/12/pulseaudio-3-0/feed/ 2 1412 PulseConf 2012: Report https://arunraghavan.net/2012/11/pulseconf-2012-report/https://arunraghavan.net/2012/11/pulseconf-2012-report/#comments Tue, 06 Nov 2012 11:04:22 +0000 http://arunraghavan.net/?p=1380 For those of you who missed my previous updates, we organised a PulseAudio miniconference in Copenhagen, Denmark last week. The organisation of all this was spearheaded by ALSA and PulseAudio hacker, David Henningsson. The good folks organising the Ubuntu Developer Summit / Linaro Connect were kind enough to allow us to colocate this event. A big thanks to both of them for making this possible!

The room where the first PulseAudio conference took place

The conference was attended by the four current active PulseAudio developers: Colin Guthrie, Tanu Kaskinen, David Henningsson, and myself. We were joined by long-time contributors Janos Kovacs and Jaska Uimonen from Intel, Luke Yelavich, Conor Curran and Michał Sawicz.

We started the conference at around 9:30 am on November 2nd, and actually managed to keep to the final schedule(!), so I’m going to break this report down into sub-topics for each item which will hopefully make for easier reading than an essay. I’ve also put up some photos from the conference on the Google+ event.

Mission and Vision

We started off with a broad topic — what each of our personal visions/goals for the project are. Interestingly, two main themes emerged: having the most seamless desktop user experience possible, and making sure we are well-suited to the embedded world.

Most of us expressed interest in making sure that users of various desktops had a smooth, hassle-free audio experience. In the ideal case, they would never need to find out what PulseAudio is!

Orthogonally, a number of us are also very interested in making PulseAudio a strong contender in the embedded space (mobile phones, tablets, set top boxes, cars, and so forth). While we already find PulseAudio being used in some of these, there are areas where we can do better (more in later topics).

There was some reservation expressed about other, less-used features such as network playback being ignored because of this focus. The conclusion after some discussion was that this would not be the case, as a number of embedded use-cases do make use of these and other “fringe” features.

Increasing patch bandwidth

Contributors to PulseAudio will be aware that our patch queue has been growing for the last few months due to lack of developer time. We discussed several ways to deal with this problem, the most promising of which was a periodic triage meeting.

We will be setting up a rotating schedule where each of us will organise a meeting every 2 weeks (the period might change as we implement things) where we can go over outstanding patches and hopefully clear backlog. Colin has agreed to set up the first of these.

Routing infrastructure

Next on the agenda was a presentation by Janos Kovacs about the work they’ve been doing at Intel with enhancing the PulseAudio’s routing infrastructure. These are being built from the perspective of IVI systems (i.e., cars) which typically have fairly complex use cases involving multiple concurrent devices and users. The slides for the talk will be put up here shortly (edit: slides are now available).

The talk was mingled with a Q&A type discussion with Janos and Jaska. The first item of discussion was consolidating Colin’s priority-based routing ideas into the proposed infrastructure. The broad thinking was that the ideas were broadly compatible and should be implementable in the new model.

There was also some discussion on merging the module-combine-sink functionality into PulseAudio’s core, in order to make 1:N routing easier. Some alternatives using the module-filter-* modules were proposed. Further discussion will likely be required before this is resolved.

The next steps for this work are for Jaska and Janos to break up the code into smaller logical bits so that we can start to review the concepts and code in detail and work towards eventually merging as much as makes sense upstream.

Low latency

This session was taken up against the background of improving latency for games on the desktop (although it does have other applications). The indicated required latency for games was given as 16 ms (corresponding to a frame rate of 60 fps). A number of ideas to deal with the problem were brought up.

Firstly, it was suggested that the maxlength buffer attribute when setting up streams could be used to signal a hard limit on stream latency — the client signals that it will prefer an underrun, over a latency above maxlength.
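To put numbers on this: at 60 fps a frame lasts 1000 / 60 ≈ 16.7 ms, which is where the roughly 16 ms figure comes from. The buffer attributes (including maxlength) are expressed in bytes, so a latency target has to be converted using the stream’s sample spec. The helper below is a back-of-the-envelope sketch with made-up function names, not libpulse API.

```python
def frame_period_ms(fps: float) -> float:
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def latency_to_bytes(latency_ms: int, rate: int, channels: int, bytes_per_sample: int) -> int:
    """Convert a latency in milliseconds to a byte count, rounded down to whole audio frames."""
    frame_size = channels * bytes_per_sample
    frames = rate * latency_ms // 1000
    return frames * frame_size


print(round(frame_period_ms(60), 2))       # about 16.67 ms per video frame
print(latency_to_bytes(16, 48000, 2, 2))   # 16 ms of 48 kHz stereo 16-bit audio: 3072 bytes
```

A client wanting this hard limit would request a maxlength of around that many bytes, accepting that it may see underruns instead of extra latency.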

Another long-standing item was to investigate the cause of underruns as we lower latency on the stream — David has already begun taking this up on the LKML.

Finally, another long-standing issue is the buffer attribute adjustment done during stream setup. This is not very well-suited to low-latency applications. David and I will be looking at this in coming days.

Merging per-user and system modes

Tanu led the topic of finding a way to deal with use-cases such as mpd or multi-user systems, where access to the PulseAudio daemon of the active user by another user might be desired. Multiple suggestions were put forward, though a definite conclusion was not reached, as further thought is required.

Tanu’s suggestion was a split between a per-user daemon to manage tasks such as per-user configuration, and a system-wide daemon to manage the actual audio resources. The rationale being that the hardware itself is a common resource and could be handled by a non-user-specific daemon instance. This approach has the advantage of having a single entity in charge of the hardware, which keeps a part of the implementation simpler. The disadvantage is that we will either sacrifice security (arbitrary users can “eavesdrop” using the machine’s mic), or security infrastructure will need to be added to decide what users are allowed what access.

I suggested that since these are broadly fringe use-cases, we should document how users can configure the system by hand for these purposes, the crux of the argument being that our architecture should be dictated by the main use-cases, and not the ancillary ones. The disadvantage of this approach is, of course, that configuration is harder for the minority that wishes multi-user access to the hardware.

Colin suggested a mechanism for users to be able to request access from an “active” PulseAudio daemon, which could trigger approval by the corresponding “active” user. The communication mechanism could be the D-Bus system bus between user daemons, and Ștefan Săftescu’s Google Summer of Code work to allow desktop notifications to be triggered from PulseAudio could be used to get to request authorisation.

David suggested that we could use the per-user/system-wide split, modified somewhat to introduce the concept of a “system-wide” card. This would be a device that is configured as being available to the whole system, and thus explicitly marked as not having any privacy guarantees.

In both the above cases, discussion continued about deciding how the access control would be handled, and this remains open.

We will be continuing to look at this problem until consensus emerges.

Improving (laptop) surround sound

The next topic dealt with being able to deal with laptops with a built-in 2.1 channel set up. The background of this is that there are a number of laptops with stereo speakers and a subwoofer. These are usually used as stereo devices with the subwoofer implicitly being fed data by the audio controller in some hardware-dependent way.

The possibility of exposing this hardware more accurately was discussed. Some investigation is required to see how things are currently exposed for various hardware (my MacBook Pro exposes the subwoofer as a surround control, for example). We need to deal with correctly exposing the hardware at the ALSA layer, and then using that correctly in PulseAudio profiles.

This led to a discussion of how we could handle profiles for these. Ideally, we would have a stereo profile with the hardware dealing with upmixing, and a 2.1 profile that would be automatically triggered when a stream with an LFE channel was presented. This is a general problem while dealing with surround output on HDMI as well, and needs further thought as it complicates routing.

Testing

I gave a rousing speech about writing more tests using some of the new improvements to our testing framework. Much cheering and acknowledgement ensued.

Ed.: some literary liberties might have been taken in this section

Unified cross-distribution ALSA configuration

I missed a large part of this unfortunately, but the crux of the discussion was around unifying cross-distribution sound configuration for those who wish to disable PulseAudio.

Base volumes

The next topic we took up was base volumes, and whether they are useful to most end users. For those unfamiliar with the concept, we sometimes see sinks/sources which support volume controls going above 0 dB (which is the no-attenuation point). We provide the maximum allowed gain in ALSA as the maximum volume, and suggest that UIs show a marker for the base volume.
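For readers less used to thinking in decibels, the sketch below shows the usual amplitude convention (gain = 10^(dB/20)): 0 dB leaves the signal untouched, anything above it amplifies, anything below attenuates. The function names are my own, and this deliberately says nothing about how PulseAudio maps dB values onto its internal volume scale.

```python
import math


def db_to_linear(db: float) -> float:
    """Amplitude gain factor for a level in dB (0 dB maps to 1.0)."""
    return 10 ** (db / 20)


def linear_to_db(gain: float) -> float:
    """Inverse of db_to_linear, for gain > 0."""
    return 20 * math.log10(gain)


def is_amplifying(db: float) -> bool:
    """True for settings above the no-attenuation point."""
    return db > 0


for level in (-12.0, 0.0, 6.0):
    print(level, round(db_to_linear(level), 3), is_amplifying(level))
```

The base volume marker is essentially a way of showing where that 0 dB point falls on a slider whose maximum is higher.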

It was felt that this concept was irrelevant, and probably confusing to most end users, and that we should suggest that UIs do not show this information any more.

Relatedly, it was decided that having a per-port maximum volume configuration would be useful, so as to allow users to deal with hardware where the output might get too loud.

Devices with dynamic capabilities (HDMI)

Our next topic of discussion was finding a way to deal with devices such as those HDMI ports where the capabilities of the device could change at run time (for example, when you plug out a monitor and plug in a home theater receiver).

A few ideas to deal with this were discussed, and the best one seemed to be David’s proposal to always have a separate card for each HDMI device. The addition of dynamic profiles could then be exploited to only make profiles available when an actual device is plugged in (and conversely removed when the device is plugged out).

Splitting of configuration

It was suggested that we could split our current configuration files into three categories: core, policy and hardware adaptation. This was met with approval all-around, and the pre-existing ability to read configuration from subdirectories could be reused.

Another feature that was desired was the ability to ship multiple configurations for different hardware adaptations with a single package and have the correct one selected based on the hardware being run on. We did not know of a standard, architecture-independent way to determine hardware adaptation, so it was felt that the first step toward solving this problem would be to find or create such a mechanism. This could then be used either to set up configuration correctly in early boot, or by PulseAudio to do runtime configuration selection.

Relatedly, moving all distributed configuration to /usr/share/..., with overrides in /etc/pulse/... and $HOME, was suggested.
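The override scheme amounts to a search path where earlier directories win. A minimal sketch of that lookup, with a hypothetical helper name and with the directories supplied by the caller rather than hard-coded:

```python
from pathlib import Path
from typing import Iterable, Optional


def resolve_config(name: str, search_dirs: Iterable[Path]) -> Optional[Path]:
    """Return the first existing file called `name`; earlier directories take precedence."""
    for directory in search_dirs:
        candidate = directory / name
        if candidate.is_file():
            return candidate
    return None


# Intended ordering (the concrete paths are up to the distribution):
#   1. per-user override directory under $HOME
#   2. system override directory under /etc/pulse
#   3. distributed defaults under /usr/share
```

This picks one whole file per name; merging individual settings across layers would need more machinery than this.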

Better drain/underrun reporting

David volunteered to implement a per-sink-input timer for accurately determining when drain was completed, rather than waiting for the period of the entire buffer as we currently do. Unsurprisingly, no objections were raised to this solution to the long-standing issue.
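The gain from a per-sink-input timer is easy to see with some arithmetic: the time needed to drain depends on how much data is actually queued, not on the size of the whole buffer. The sketch below uses a made-up helper and example numbers; it is not the proposed implementation.

```python
def drain_time_usec(queued_bytes: int, rate: int, channels: int, bytes_per_sample: int) -> int:
    """Microseconds needed to play out `queued_bytes` at the given sample spec."""
    byte_rate = rate * channels * bytes_per_sample
    return queued_bytes * 1_000_000 // byte_rate


# 48 kHz stereo 16-bit audio, with a (hypothetical) 64 KiB buffer.
whole_buffer = drain_time_usec(64 * 1024, 48000, 2, 2)
actually_queued = drain_time_usec(3072, 48000, 2, 2)
print(whole_buffer, actually_queued)
```

With these numbers, waiting for the entire buffer takes roughly a third of a second, while the queued data is gone after 16 ms.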

In a similar vein, redefining the underflow event to mean a real device underflow (rather than the client-side buffer running empty) was suggested. After some discussion, we agreed that a separate event for device underruns would likely be better.

Beer

We called it a day at this point and dispersed beer-wards.

Our valiant attendees after a day of plotting the future of PulseAudio

User experience

David very kindly invited us to spend a day after the conference hacking at his house in Lund, Sweden, just a short hop away from Copenhagen. We spent a short while in the morning talking about one last item on the agenda — helping to build a more seamless user experience. The idea was to figure out some tools to help users with problems quickly converge on what problem they might be facing (or help developers do the same). We looked at the Ubuntu apport audio debugging tool that David has written, and will try to adopt it for more general use across distributions.

Hacking

The rest of the day was spent in more discussions on topics from the previous day, poring over code for some specific problems, and rolling out the first release candidate for the upcoming 3.0 release.

And cut!

I am very happy that this conference happened, and am looking forward to being able to do it again next year. As you can see from the length of this post, there are a lot of things happening in this part of the stack, and lots more yet to come. It was excellent meeting all the fellow PulseAudio hackers, and my thanks to all of them for making it.

Finally, I wouldn’t be sitting here writing this report without support from Collabora, who sponsored my travel to the conference, so it’s fitting that I end this with a shout-out to them. :)

]]> https://arunraghavan.net/2012/11/pulseconf-2012-report/feed/ 8 1380 grsec and PulseAudio (and Gentoo) https://arunraghavan.net/2012/10/grsec-and-pulseaudio/https://arunraghavan.net/2012/10/grsec-and-pulseaudio/#comments Tue, 30 Oct 2012 08:49:50 +0000 http://arunraghavan.net/?p=1366 This problem seems to bite some of our hardened users a couple of times a year, so I thought I’d blog about it. If you are using grsec and PulseAudio, you must not enable CONFIG_GRKERNSEC_SYSFS_RESTRICT in your kernel, else autodetection of your cards will fail.

PulseAudio’s module-udev-detect needs to access /sys to discover what cards are available on the system, and that kernel option disallows this for anyone but root.
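If you want a quick way to check whether this is what is biting you, something like the following might help. It is only a sketch: it assumes that sound devices show up under /sys/class/sound and that the restriction surfaces as an error when a non-root user tries to list that directory. The function name is made up for illustration, and this is not how module-udev-detect itself does its discovery.

```python
import os


def can_list_sound_devices(sysfs_sound_dir="/sys/class/sound"):
    """Return True if the current user can list entries under sysfs_sound_dir.

    The default path is an assumption about where the kernel exposes
    sound devices in sysfs.
    """
    try:
        os.listdir(sysfs_sound_dir)
    except OSError:
        # Covers permission errors as well as a missing directory.
        return False
    return True


if __name__ == "__main__":
    if can_list_sound_devices():
        print("sysfs sound directory is readable by this user")
    else:
        print("cannot read the sysfs sound directory as this user")
```

Run it as the same user that runs PulseAudio; a failure there while root succeeds would point towards restricted sysfs access.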

]]> https://arunraghavan.net/2012/10/grsec-and-pulseaudio/feed/ 4 1366 PulseConf Schedule https://arunraghavan.net/2012/10/pulseconf-schedule/https://arunraghavan.net/2012/10/pulseconf-schedule/#respond Mon, 29 Oct 2012 12:45:47 +0000 http://arunraghavan.net/?p=1360 David has now published a tentative schedule for the PulseAudio Mini-conference (I’m just going to call it PulseConf — so much easier on the tongue).

For the lazy, these are some of the topics we’ll be covering:

- Vision and mission — where we are and where we want to be

- Improving our patch review process

- Routing infrastructure

- Improving low latency behaviour

- Revisiting system- and user-modes

- Devices with dynamic capabilities

- Improving surround sound behaviour

- Separating configuration for hardware adaptation

- Better drain/underrun reporting behaviour

Phew — and there are more topics that we probably will not have time to deal with!

For those of you who cannot attend, the Linaro Connect folks (who are graciously hosting us) are planning on running Google+ Hangouts for their sessions. Hopefully we should be able to do the same for our proceedings. Watch this space for details!

p.s.: A big thank you to my employer Collabora for sponsoring my travel to the conference.

]]> https://arunraghavan.net/2012/10/pulseconf-schedule/feed/ 0 1360 PulseConf! https://arunraghavan.net/2012/10/pulseconf/https://arunraghavan.net/2012/10/pulseconf/#respond Thu, 04 Oct 2012 08:41:01 +0000 http://arunraghavan.net/?p=1356 For those of you who missed it, your friendly neighbourhood PulseAudio hackers are converging on Copenhagen in a month to discuss, plan and hack on the future of PulseAudio.

We’re doing this for the first time, so I’m super-excited! David has posted details so if this is of interest to you, you should definitely join us!