CMU Panoptic Dataset (original) (raw)

| | Massively Multiview System 480 VGA camera views 30+ HD views 10 RGB-D sensors Hardware-based sync Calibration Interesting Scenes with Labels Multiple people Socially interacting groups 3D body pose 3D facial landmarks Transcripts + speaker ID | | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

Dataset Size

Currently, 65 sequences (5.5 hours) and 1.5 millions of 3D skeletons are available.

License

CMU Panoptic Studio dataset is shared only for research purposes, and this cannot be used for any commercial purposes. The dataset or its modified version cannot be redistributed without permission from dataset organizers.

What's New

| Apr. 2019 | Social Signal Prediction paper got accepted to CVPR 2019. Code and data will be available |

|---|---|

| Apr. 2019 | Monocular Total Capture (MTC) paper got accepted to CVPR 2019. Code and data are availablie: link |

| Jul. 2018 | 3D Face and Hand keypoint data are available for sequences. Check the Range-of-motion sequence. |

| May. 2018 | PointCloud DB from 10 Kinects (with corresponding 41 RGB videos) is available (6+ hours of data): PtCloudDB. |

| Mar. 2018 | Total body motion capture paper will be presented in CVPR 2018: Project page. |

| Dec. 2017 | Hand Keypoint Dataset Page has been added. More data will be coming soon. |

| Jun. 2017 | We organize a tutorial in conjunction with CVPR 2017: "DIY A Multiview Camera System: Panoptic Studio Teardown" |

| Jun. 2017 | Hand keypoint detection and reconstruction paper will be presented in CVPR 2017: Project page. |

| Dec. 2016 | Panoptic Studio is featured on The Verge. You can also see the video version here. |

| Dec. 2016 | The social interaction capture paper (extended version of ICCV15) is available on arXiv. |

| Sep. 2016 | The CMU PanopticStudio Dataset is now publicly released. Currently, 480 VGA videos, 31 HD videos, 3D body pose, and calibration data are available. Dense point cloud (from 10 Kinects) and 3D face reconstruction will be available soon. Please contact Hanbyul Joo and Tomas Simon for any issue of our dataset. |

| Sep. 2016 | The PanopticStudio Toolbox is available on GitHub. |

| Aug. 2016 | Our dataset website is open. Dataset and tools will be available soon. |

Dataset Examples

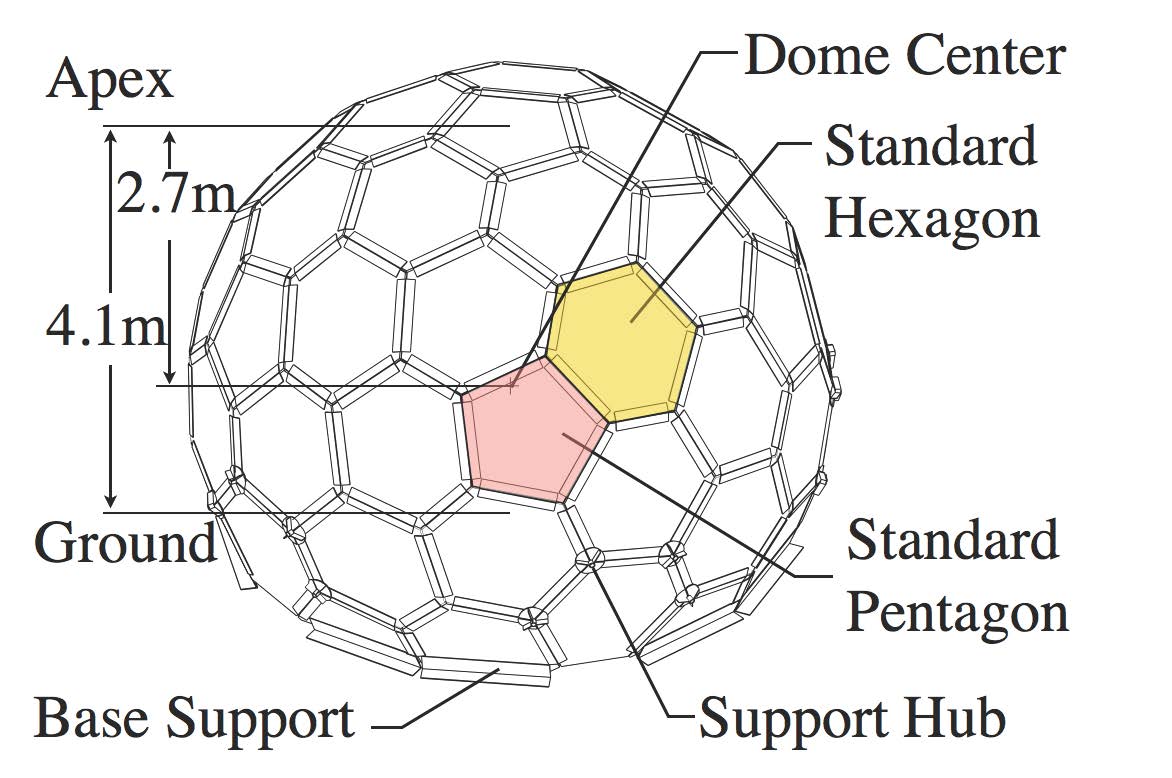

System Description

We keep upgrading our system. Currently our system has the following hardware setup:

- 480 VGA cameras, 640 x 480 resolution, 25 fps, synchronized among themselves using a hardware clock

- 31 HD cameras, 1920 x 1080 resolution, 30 fps, synchronized among themselves using a hardware clock, timing aligned with VGA cameras

- 10 Kinect Ⅱ Sensors. 1920 x 1080 (RGB), 512 x 424 (depth), 30 fps, timing aligned among themselves and other sensors

- 5 DLP Projectors. synchronized with HD cameras

The following figure shows the dimension of our system.

References

By using the dataset, you agree to cite at least one of the following papers.

@article{Joo_2017_TPAMI, title={Panoptic Studio: A Massively Multiview System for Social Interaction Capture}, author={Joo, Hanbyul and Simon, Tomas and Li, Xulong and Liu, Hao and Tan, Lei and Gui, Lin and Banerjee, Sean and Godisart, Timothy Scott and Nabbe, Bart and Matthews, Iain and Kanade, Takeo and Nobuhara, Shohei and Sheikh, Yaser}, journal={IEEE Transactions on Pattern Analysis and Machine Intelligence}, year={2017} }

@article{Simon_2017_CVPR, title={Hand Keypoint Detection in Single Images using Multiview Bootstrapping}, author={Simon, Tomas and Joo, Hanbyul and Sheikh, Yaser}, journal={CVPR}, year={2017} }

@InProceedings{Joo_2015_ICCV, title = {Panoptic Studio: A Massively Multiview System for Social Motion Capture}, author = {Joo, Hanbyul and Liu, Hao and Tan, Lei and Gui, Lin and Nabbe, Bart and Matthews, Iain and Kanade, Takeo and Nobuhara, Shohei and Sheikh, Yaser}, booktitle = {The IEEE International Conference on Computer Vision (ICCV)}, year = {2015} }

Acknowledgement

This research is supported by the National Science Foundation under Grants No. 1353120 and 1029679, and in part using an ONR grant 11628301.