Operations - MATLAB & Simulink

Develop custom deep learning functions

For most tasks, you can use built-in layers. If there is not a built-in layer that you need for your task, then you can define your own custom layer. You can define custom layers with learnable and state parameters. After you define a custom layer, you can check that the layer is valid, GPU compatible, and outputs correctly defined gradients. To learn more, see Define Custom Deep Learning Layers. For a list of supported layers, see List of Deep Learning Layers.

Use deep learning operations to develop MATLAB® code for custom layers, training loops, and model functions.

Functions

| Function | Description |
|---|---|
| dlarray | Deep learning array for customization |
| dims | Data format of dlarray object |
| finddim | Find dimensions with specified label |
| stripdims | Remove dlarray data format |
| extractdata | Extract data from dlarray |
| isdlarray | Check if object is dlarray (Since R2020b) |
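
As a rough illustration of how the `dlarray` utility functions fit together, the following sketch creates a formatted array and inspects it. It assumes Deep Learning Toolbox is installed; the array sizes and the `"SSCB"` (spatial, spatial, channel, batch) format are arbitrary choices made for this example.

```matlab
% Illustrative sizes: 28-by-28 spatial, 3 channels, 16 observations.
X = dlarray(rand(28,28,3,16), "SSCB");

fmt  = dims(X);           % data format of X as a character vector
sIdx = finddim(X, "S");   % indices of the spatial dimensions
bIdx = finddim(X, "B");   % index of the batch dimension

Y   = stripdims(X);       % same data, but without a data format
raw = extractdata(X);     % underlying numeric array

disp(fmt)
disp(sIdx)
disp(isdlarray(X))        % true for X, false for raw
```

Stripping the format is useful when a custom operation needs plain indexing semantics, while `extractdata` leaves the `dlarray` type entirely, so the result no longer takes part in automatic differentiation.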

| Function | Description |
|---|---|
| dlconv | Deep learning convolution |
| dltranspconv | Deep learning transposed convolution |
| lstm | Long short-term memory |
| gru | Gated recurrent unit |
| attention | Dot-product attention (Since R2022b) |
| embed | Embed discrete data (Since R2020b) |
| fullyconnect | Sum all weighted input data and apply a bias |
| dlode45 | Deep learning solution of nonstiff ordinary differential equation (ODE) (Since R2021b) |
| batchnorm | Normalize data across all observations for each channel independently |
| crosschannelnorm | Cross channel square-normalize using local responses |
| groupnorm | Normalize data across grouped subsets of channels for each observation independently (Since R2020b) |
| instancenorm | Normalize across each channel for each observation independently (Since R2021a) |
| layernorm | Normalize data across all channels for each observation independently (Since R2021a) |
| avgpool | Pool data to average values over spatial dimensions |
| maxpool | Pool data to maximum value |
| maxunpool | Unpool the output of a maximum pooling operation |
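
A minimal sketch of chaining a few of these operations follows. The filter count, pooling size, output size, and random initialization are assumptions chosen only to make the shapes easy to follow; they are not recommended settings.

```matlab
% Illustrative input: 28-by-28 images, 3 channels, 16 observations.
X = dlarray(rand(28,28,3,16), "SSCB");

% Convolution weights: filterHeight x filterWidth x numChannels x numFilters.
convWeights = dlarray(0.01*randn(5,5,3,8));
convBias    = dlarray(zeros(8,1));
Y = dlconv(X, convWeights, convBias, 'Padding', 'same');

% 2-by-2 max pooling with stride 2 halves each spatial dimension.
P = maxpool(Y, 2, 'Stride', 2);

% Fully connect: weights are outputSize x inputSize, where inputSize is the
% product of the spatial and channel sizes (14*14*8 here).
fcWeights = dlarray(0.01*randn(10, 14*14*8));
fcBias    = dlarray(zeros(10,1));
Z = fullyconnect(P, fcWeights, fcBias);

disp(size(Y))
disp(size(P))
disp(size(Z))
disp(dims(Z))
```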

| Function | Description |
|---|---|
| relu | Apply rectified linear unit activation |
| leakyrelu | Apply leaky rectified linear unit activation |
| gelu | Apply Gaussian error linear unit (GELU) activation (Since R2022b) |
| softmax | Apply softmax activation to channel dimension |
| sigmoid | Apply sigmoid activation |
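
The activation functions can be tried on a small formatted array, as in the sketch below. The input values and the leaky ReLU scale factor of 0.1 are arbitrary choices for illustration.

```matlab
% 2 channels ("C") by 3 observations ("B"); values chosen for illustration.
X = dlarray([-2 0 3; 1 -1 2], "CB");

R = relu(X);             % negative entries become zero
L = leakyrelu(X, 0.1);   % negative entries are scaled by 0.1
S = sigmoid(X);          % element-wise, values in (0,1)
P = softmax(X);          % normalizes over the channel dimension

% Each observation (column) of the softmax output sums to 1.
colSums = extractdata(sum(P, 1));
disp(colSums)
```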

| Function | Description |
|---|---|
| crossentropy | Cross-entropy loss for classification tasks |
| indexcrossentropy | Index cross-entropy loss for classification tasks (Since R2024b) |
| l1loss | L1 loss for regression tasks (Since R2021b) |
| l2loss | L2 loss for regression tasks (Since R2021b) |
| huber | Huber loss for regression tasks (Since R2021a) |
| ctc | Connectionist temporal classification (CTC) loss for unaligned sequence classification (Since R2021a) |
| mse | Half mean squared error |
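
As a hedged sketch of how the loss functions are called, the example below computes a classification loss and a few regression losses on random data. The data, class count, and batch size are made up for illustration, and default options are used throughout.

```matlab
% Classification: 3 classes, 4 observations (illustrative random scores).
scores = dlarray(randn(3,4), "CB");
Y = softmax(scores);        % predicted probabilities
I = eye(3);
T = I(:, [1 2 3 1]);        % one-hot targets, one column per observation
lossCE = crossentropy(Y, T);

% Regression: 1 response, 4 observations (illustrative random data).
Yr = dlarray(rand(1,4), "CB");
Tr = rand(1,4);
lossMSE   = mse(Yr, Tr);    % half mean squared error
lossL1    = l1loss(Yr, Tr);
lossHuber = huber(Yr, Tr);

disp(lossCE)
disp(lossMSE)
```

Each call returns a scalar `dlarray`, which is the form that gradient computation inside a custom training loop expects.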

| Function | Description |
|---|---|
| dlaccelerate | Accelerate deep learning function for custom training loops (Since R2021a) |
| AcceleratedFunction | Accelerated deep learning function (Since R2021a) |
| clearCache | Clear accelerated deep learning function trace cache (Since R2021a) |
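
The following sketch shows one way the acceleration functions might be used together. The function `lossAndGradient` is a hypothetical example function, not part of the toolbox, and the script assumes that local functions are placed at the end of the file.

```matlab
x = dlarray(randn(10,1));

% Wrap the function in an AcceleratedFunction object and start from an
% empty trace cache.
accfun = dlaccelerate(@lossAndGradient);
clearCache(accfun);

% Repeated calls with inputs of the same size and type can reuse a cached trace.
[loss1, grad1] = dlfeval(accfun, x);
[loss2, grad2] = dlfeval(accfun, x);

% HitRate reports the percentage of calls that reused a cached trace.
disp(accfun.HitRate)

% Hypothetical example function: sum of squared ReLU outputs and its gradient.
function [loss, grad] = lossAndGradient(x)
    loss = sum(relu(x).^2);
    grad = dlgradient(loss, x);
end
```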

Topics

Automatic Differentiation

- Automatic Differentiation Background

  Learn how automatic differentiation works.

- Use Automatic Differentiation In Deep Learning Toolbox

  How to use automatic differentiation in deep learning.

- List of Functions with dlarray Support

  View the list of functions that support dlarray objects.

- Define Custom Deep Learning Operations

  Learn how to define custom deep learning operations.

- Specify Custom Operation Backward Function

  This example shows how to define the SReLU operation as a differentiable function and specify a custom backward function.

- Train Model Using Custom Backward Function

  This example shows how to train a deep learning model that contains an operation with a custom backward function.

- Create Bidirectional LSTM (BiLSTM) Function

  This example shows how to create a bidirectional long short-term memory (BiLSTM) function for custom deep learning functions. (Since R2023b)
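
For orientation, automatic differentiation in this workflow typically pairs `dlfeval` with `dlgradient`: the function is evaluated through `dlfeval` so that operations on `dlarray` inputs are traced, and `dlgradient` is called inside that function. The sketch below uses Rosenbrock's function as an illustrative objective; the evaluation point is arbitrary, and the local function is assumed to sit at the end of a script file.

```matlab
x0 = dlarray([-1; 2]);

% dlfeval enables tracing so that dlgradient can be called inside the function.
[fval, gradval] = dlfeval(@rosenbrock, x0);

disp(fval)      % 104
disp(gradval)   % [396; 200]

% Rosenbrock's function and its gradient with respect to x.
function [y, dydx] = rosenbrock(x)
    y = 100*(x(2) - x(1).^2).^2 + (1 - x(1)).^2;
    dydx = dlgradient(y, x);
end
```

Calling `dlgradient` outside a function evaluated by `dlfeval` results in an error, because no trace is being recorded at that point.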

Model Functions

- Train Network Using Model Function

  This example shows how to create and train a deep learning network by using functions rather than a layer graph or a dlnetwork.

- Update Batch Normalization Statistics Using Model Function

  This example shows how to update the network state in a network defined as a function.

- Make Predictions Using Model Function

  This example shows how to make predictions using a model function by splitting data into mini-batches.

- Initialize Learnable Parameters for Model Function

  Learn how to initialize learnable parameters for custom training loops using a model function.
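
To make the idea of a model function concrete, here is a minimal sketch of a two-layer classifier defined as a function over a structure of learnable parameters. The names `model` and `modelLoss`, the layer sizes, the initialization scale, and the learning rate are all assumptions made for this illustration; they are not taken from the linked examples.

```matlab
% Learnable parameters stored in a struct (illustrative sizes and scaling).
parameters.fc1.Weights = dlarray(0.01*randn(8,4));
parameters.fc1.Bias    = dlarray(zeros(8,1));
parameters.fc2.Weights = dlarray(0.01*randn(3,8));
parameters.fc2.Bias    = dlarray(zeros(3,1));

% Random data: 4 features, 16 observations, 3 classes.
X = dlarray(rand(4,16), "CB");
labels = randi(3, 1, 16);
T = double((1:3)' == labels);   % one-hot targets, 3-by-16

% Evaluate the loss and its gradients with respect to every parameter.
[loss, gradients] = dlfeval(@modelLoss, parameters, X, T);

% One plain gradient descent step (learning rate 0.01 is an assumption).
parameters = dlupdate(@(p,g) p - 0.01*g, parameters, gradients);

% Hypothetical model function: fully connect, ReLU, fully connect, softmax.
function Y = model(parameters, X)
    Y = fullyconnect(X, parameters.fc1.Weights, parameters.fc1.Bias);
    Y = relu(Y);
    Y = fullyconnect(Y, parameters.fc2.Weights, parameters.fc2.Bias);
    Y = softmax(Y);
end

% Hypothetical model loss function for use with dlfeval.
function [loss, gradients] = modelLoss(parameters, X, T)
    Y = model(parameters, X);
    loss = crossentropy(Y, T);
    gradients = dlgradient(loss, parameters);
end
```

The gradients come back in a structure with the same fields as `parameters`, which is what lets `dlupdate` apply the update to each field in turn.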

Deep Learning Function Acceleration

- Deep Learning Function Acceleration for Custom Training Loops

  Accelerate model functions and model loss functions for custom training loops by caching and reusing traces.

- Accelerate Custom Training Loop Functions

  This example shows how to accelerate deep learning custom training loop and prediction functions.

- Check Accelerated Deep Learning Function Outputs

  This example shows how to check that the outputs of accelerated functions match the outputs of the underlying function.

- Evaluate Performance of Accelerated Deep Learning Function

  This example shows how to evaluate the performance gains of using an accelerated function.

Related Information

Featured Examples

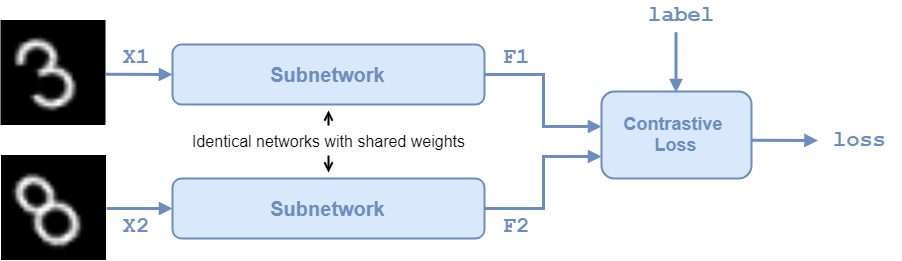

Train a Twin Network for Dimensionality Reduction

Train a twin neural network with shared weights to compare handwritten digits using dimensionality reduction.

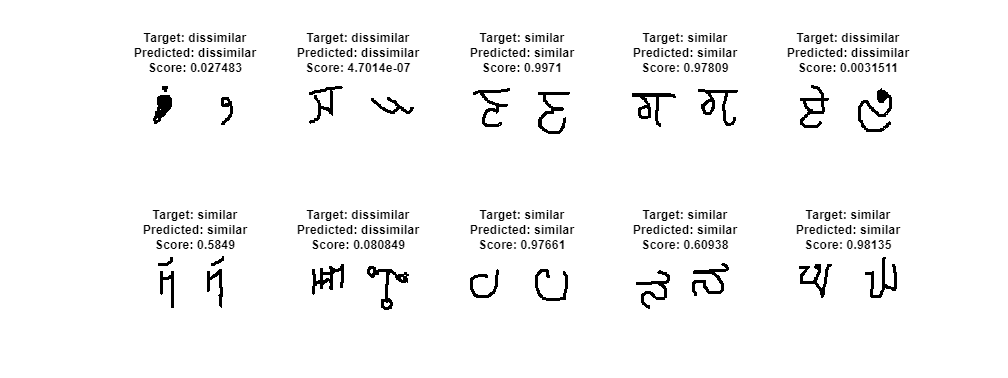

Train a Twin Neural Network to Compare Images

Train a twin neural network with shared weights to identify similar images of handwritten characters.

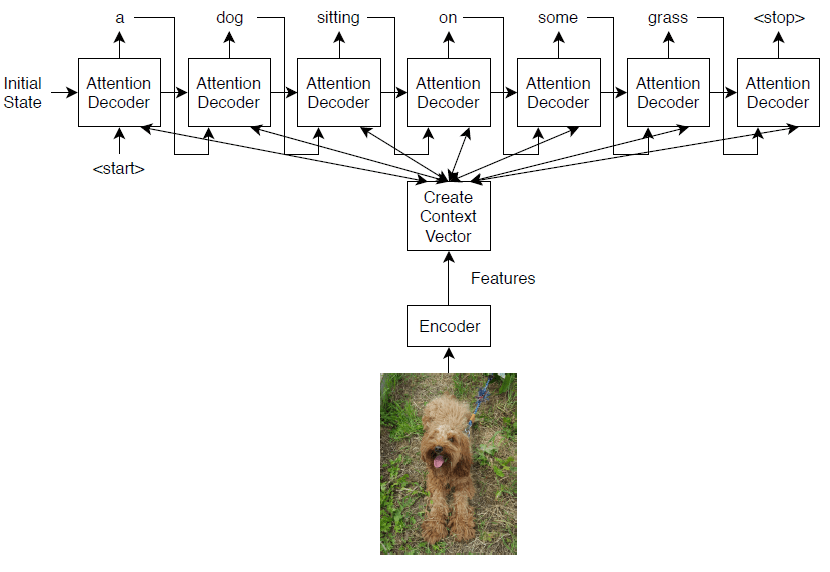

Image Captioning Using Attention

Train a deep learning model for image captioning using attention.

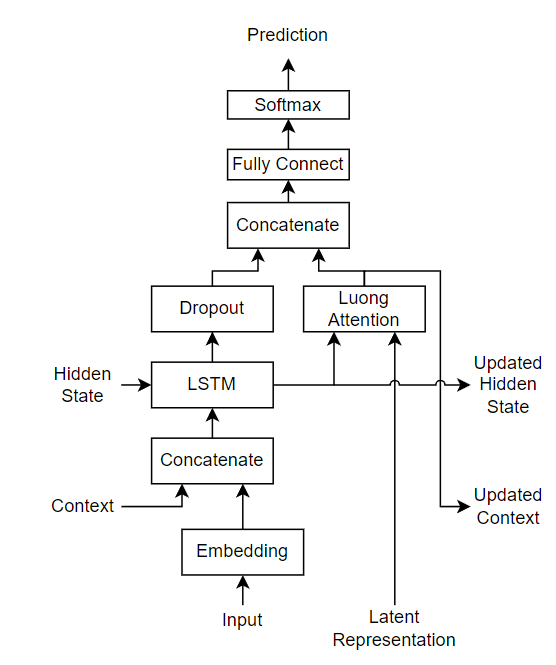

Sequence-to-Sequence Translation Using Attention

Convert decimal strings to Roman numerals using a recurrent sequence-to-sequence encoder-decoder model with attention.

Solve PDE Using Physics-Informed Neural Network

Train a physics-informed neural network (PINN) to predict the solutions of a partial differential equation (PDE).

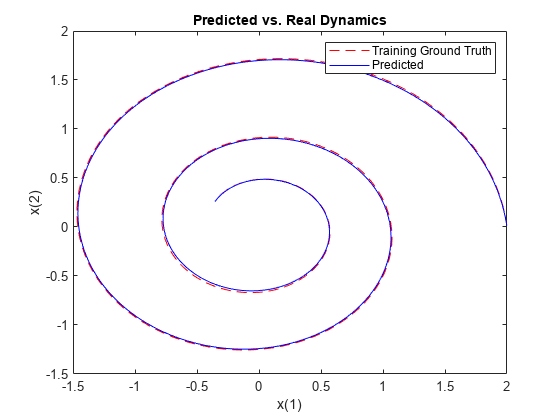

Dynamical System Modeling Using Neural ODE

Train a neural network with neural ordinary differential equations (ODEs) to learn the dynamics of a physical system.

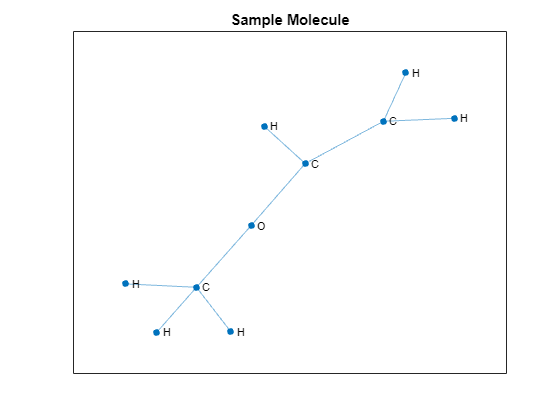

Node Classification Using Graph Convolutional Network

Classify nodes in a graph using a graph convolutional network (GCN).

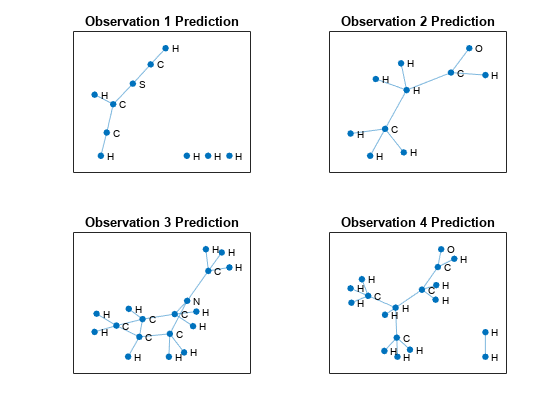

Multilabel Graph Classification Using Graph Attention Networks

Classify graphs that have multiple independent labels using graph attention networks (GATs).

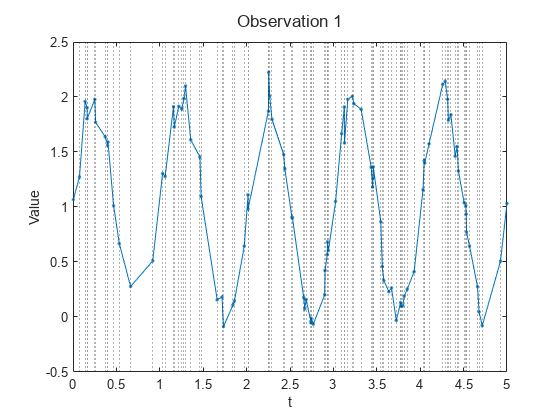

Train Latent ODE Network with Irregularly Sampled Time-Series Data

Train a latent ordinary differential equation (ODE) autoencoder with time-series data that is sampled at irregular time intervals.

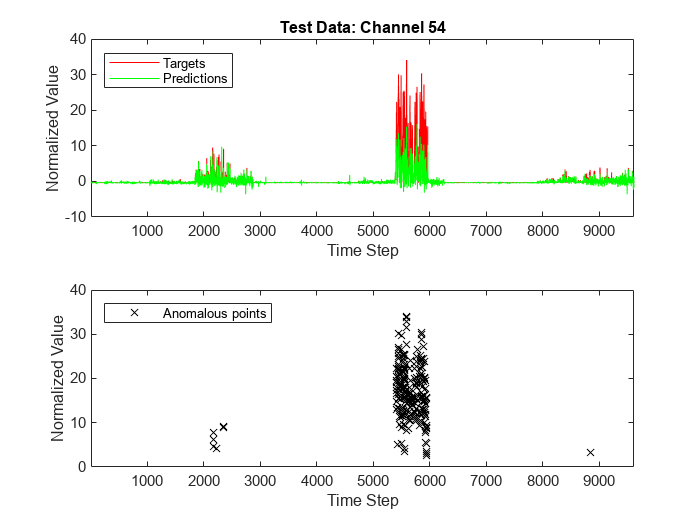

Multivariate Time Series Anomaly Detection Using Graph Neural Network

Detect anomalies in multivariate time series data using a graph neural network (GNN).