Custom Training Using Automatic Differentiation

Train deep learning networks using custom training loops

If the trainingOptions function does not provide the training options that you need for your task, or you have a loss function that the trainnet function does not support, then you can define a custom training loop. For models that cannot be specified as networks of layers, you can define the model as a function. To learn more, see Define Custom Training Loops, Loss Functions, and Networks.
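
As a rough illustration of what such a loop can look like, the following sketch trains a small network on toy regression data. The data, layer sizes, learning rate, and the helper name `modelLoss` are assumptions chosen for illustration only; the sketch combines `dlfeval`, `dlgradient`, and `adamupdate` in the usual pattern and is not a definitive implementation.

```matlab
% Minimal custom training loop sketch on hypothetical toy regression data.
rng(0);
X = dlarray(rand(3,64,"single"),"CB");    % 3 features, 64 observations
T = dlarray(sum(extractdata(X),1),"CB");  % toy target: sum of the features

layers = [
    featureInputLayer(3)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(1)];
net = dlnetwork(layers);

avgGrad = [];      % Adam state: moving average of gradients
avgSqGrad = [];    % Adam state: moving average of squared gradients
learnRate = 0.01;  % assumed value, not tuned

for iteration = 1:100
    % Evaluate the loss and gradients with automatic differentiation enabled.
    [loss,gradients] = dlfeval(@modelLoss,net,X,T);

    % Update the learnable parameters using Adam.
    [net,avgGrad,avgSqGrad] = adamupdate(net,gradients, ...
        avgGrad,avgSqGrad,iteration,learnRate);
end

% Hypothetical model loss function. In a script, local functions go at the
% end of the file.
function [loss,gradients] = modelLoss(net,X,T)
    Y = forward(net,X);                          % training-mode forward pass
    loss = mse(Y,T);                             % half mean squared error
    gradients = dlgradient(loss,net.Learnables); % gradients w.r.t. learnables
end
```

The call to `dlgradient` must happen inside a function evaluated by `dlfeval`, which is why the loss and gradient computation live together in one helper.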

Functions

| Function | Description |
|---|---|
| dlnetwork | Deep learning neural network |
| imagePretrainedNetwork | Pretrained neural network for images (Since R2024a) |
| resnetNetwork | 2-D residual neural network (Since R2024a) |
| resnet3dNetwork | 3-D residual neural network (Since R2024a) |
| addLayers | Add layers to neural network |
| removeLayers | Remove layers from neural network |
| replaceLayer | Replace layer in neural network |
| connectLayers | Connect layers in neural network |
| disconnectLayers | Disconnect layers in neural network |
| addInputLayer | Add input layer to network (Since R2022b) |
| initialize | Initialize learnable and state parameters of neural network (Since R2021a) |
| networkDataLayout | Deep learning network data layout for learnable parameter initialization (Since R2022b) |
| setL2Factor | Set L2 regularization factor of layer learnable parameter |
| getL2Factor | Get L2 regularization factor of layer learnable parameter |
| setLearnRateFactor | Set learn rate factor of layer learnable parameter |
| getLearnRateFactor | Get learn rate factor of layer learnable parameter |

| Function | Description |
|---|---|
| plot | Plot neural network architecture |
| summary | Print network summary (Since R2022b) |
| analyzeNetwork | Analyze deep learning network architecture |
| checkLayer | Check validity of custom or function layer |
| isequal | Check equality of neural networks (Since R2021a) |
| isequaln | Check equality of neural networks ignoring NaN values (Since R2021a) |

| Function | Description |
|---|---|
| forward | Compute deep learning network output for training |
| predict | Compute deep learning network output for inference |
| adamupdate | Update parameters using adaptive moment estimation (Adam) |
| rmspropupdate | Update parameters using root mean squared propagation (RMSProp) |
| sgdmupdate | Update parameters using stochastic gradient descent with momentum (SGDM) |
| lbfgsupdate | Update parameters using limited-memory BFGS (L-BFGS) (Since R2023a) |
| lbfgsState | State of limited-memory BFGS (L-BFGS) solver (Since R2023a) |
| dlupdate | Update parameters using custom function |
| trainingProgressMonitor | Monitor and plot training progress for deep learning custom training loops (Since R2022b) |
| updateInfo | Update information values for custom training loops (Since R2022b) |
| recordMetrics | Record metric values for custom training loops (Since R2022b) |
| groupSubPlot | Group metrics in training plot (Since R2022b) |
| deep.gpu.deterministicAlgorithms | Set determinism of deep learning operations on the GPU to get reproducible results (Since R2024b) |
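
The monitoring functions in the table above are typically used together inside the training loop. The sketch below assumes a placeholder loss value (a decaying exponential standing in for a real model loss) and an arbitrary iteration count; only the calls to `trainingProgressMonitor`, `recordMetrics`, and `updateInfo` are the point of the example.

```matlab
numIterations = 50;  % assumed value for illustration

% Create a monitor that tracks one metric and one information field.
monitor = trainingProgressMonitor( ...
    Metrics="Loss", ...
    Info="Iteration", ...
    XLabel="Iteration");

iteration = 0;
while iteration < numIterations && ~monitor.Stop
    iteration = iteration + 1;

    % Placeholder loss: replace with the loss returned by your model
    % loss function.
    loss = exp(-iteration/10);

    % Record the metric, update the information field, and set progress.
    recordMetrics(monitor,iteration,Loss=loss);
    updateInfo(monitor,Iteration=iteration + " of " + numIterations);
    monitor.Progress = 100*iteration/numIterations;
end
```

Checking `monitor.Stop` in the loop condition lets the Stop button in the training progress window end the loop early.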

| Function | Description |
|---|---|
| padsequences | Pad or truncate sequence data to same length (Since R2021a) |
| minibatchqueue | Create mini-batches for deep learning (Since R2020b) |
| onehotencode | Encode data labels into one-hot vectors (Since R2020b) |
| onehotdecode | Decode probability vectors into class labels (Since R2020b) |
| next | Obtain next mini-batch of data from minibatchqueue (Since R2020b) |
| reset | Reset minibatchqueue to start of data (Since R2020b) |
| shuffle | Shuffle data in minibatchqueue (Since R2020b) |
| hasdata | Determine if minibatchqueue can return mini-batch (Since R2020b) |
| partition | Partition minibatchqueue (Since R2020b) |
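
The mini-batch functions in the table above usually appear together in the data-handling part of a custom training loop. The following sketch assumes random in-memory image data with three classes and a hypothetical helper named `preprocessMiniBatch`; it shows one common way to combine `minibatchqueue`, `shuffle`, `hasdata`, `next`, and `onehotencode`.

```matlab
% Hypothetical data: 100 grayscale 28-by-28 images with 3 classes.
XData = rand(28,28,1,100,"single");
TData = categorical(randi(3,100,1),1:3);

dsX = arrayDatastore(XData,IterationDimension=4);
dsT = arrayDatastore(TData);
ds = combine(dsX,dsT);

mbq = minibatchqueue(ds, ...
    MiniBatchSize=32, ...
    MiniBatchFcn=@preprocessMiniBatch, ...
    MiniBatchFormat=["SSCB" ""]);

numEpochs = 2;
for epoch = 1:numEpochs
    shuffle(mbq);               % reset the queue and shuffle the data
    while hasdata(mbq)
        [X,T] = next(mbq);      % X is a formatted dlarray, T is unformatted
        % ... evaluate the model loss and update the parameters here ...
    end
end

% Hypothetical preprocessing helper: concatenate the observations and
% one-hot encode the labels along the first dimension.
function [X,T] = preprocessMiniBatch(dataX,dataT)
    X = cat(4,dataX{:});
    T = cat(2,dataT{:});
    T = onehotencode(T,1);
end
```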

| Function | Description |
|---|---|
| dlarray | Deep learning array for customization |
| dlgradient | Compute gradients for custom training loops using automatic differentiation |
| dljacobian | Jacobian matrix deep learning operation (Since R2024b) |
| dldivergence | Divergence of deep learning data (Since R2024b) |
| dllaplacian | Laplacian of deep learning data (Since R2024b) |
| dlfeval | Evaluate deep learning model for custom training loops |
| dims | Data format of dlarray object |
| finddim | Find dimensions with specified label |
| stripdims | Remove dlarray data format |
| extractdata | Extract data from dlarray |
| isdlarray | Check if object is dlarray (Since R2020b) |
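
To make the roles of `dlarray`, `dlfeval`, and `dlgradient` concrete, here is a small sketch that differentiates a sum of squares and then inspects the format of a labeled array. The function name `sumOfSquares` and the array sizes are assumptions for illustration.

```matlab
% Differentiate y = sum(x.^2) with respect to x.
x = dlarray([1 2 3]);
[y,dydx] = dlfeval(@sumOfSquares,x);
disp(extractdata(dydx))   % the derivative is 2*x, that is, [2 4 6]

% Inspect the data format of a formatted dlarray.
X = dlarray(rand(28,28,1,16),"SSCB");
fmt = dims(X);               % 'SSCB'
batchDim = finddim(X,"B");   % index of the batch dimension, here 4
Xplain = stripdims(X);       % same data without dimension labels

% Hypothetical helper: dlgradient must be called inside a function that
% dlfeval evaluates, and it differentiates a scalar.
function [y,dydx] = sumOfSquares(x)
    y = sum(x.^2);
    dydx = dlgradient(y,x);
end
```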

| Function | Description |
|---|---|
| crossentropy | Cross-entropy loss for classification tasks |
| indexcrossentropy | Index cross-entropy loss for classification tasks (Since R2024b) |
| l1loss | L1 loss for regression tasks (Since R2021b) |
| l2loss | L2 loss for regression tasks (Since R2021b) |
| huber | Huber loss for regression tasks (Since R2021a) |
| ctc | Connectionist temporal classification (CTC) loss for unaligned sequence classification (Since R2021a) |
| mse | Half mean squared error |

| Function | Description |
|---|---|
| dlaccelerate | Accelerate deep learning function for custom training loops (Since R2021a) |
| AcceleratedFunction | Accelerated deep learning function (Since R2021a) |
| clearCache | Clear accelerated deep learning function trace cache (Since R2021a) |
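
A rough sketch of how the acceleration functions above fit together follows. It assumes a trivial hypothetical function, `sumOfSquares`, in place of a real model loss function; in practice you would accelerate the function that you pass to `dlfeval` in the training loop.

```matlab
x = dlarray(rand(10,1));

% Wrap the function in an AcceleratedFunction object and clear any
% previously cached traces before reuse.
accfun = dlaccelerate(@sumOfSquares);
clearCache(accfun);

% Repeated calls with inputs of the same size and type can reuse the
% cached trace instead of retracing the function.
for i = 1:5
    [y,dydx] = dlfeval(accfun,x);
end

% The HitRate property reports how often cached traces were reused.
disp(accfun.HitRate)

% Hypothetical function to accelerate.
function [y,dydx] = sumOfSquares(x)
    y = sum(x.^2,"all");
    dydx = dlgradient(y,x);
end
```

Acceleration pays off mainly when the same function is called many times with inputs of matching size and format, as in a training loop.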

Topics

Custom Training Loops

- Train Deep Learning Model in MATLAB

  Learn how to train deep learning models in MATLAB®.

- Define Custom Training Loops, Loss Functions, and Networks

  Learn how to define and customize deep learning training loops, loss functions, and models.

- Train Network Using Custom Training Loop

  This example shows how to train a network that classifies handwritten digits with a custom learning rate schedule.

- Train Sequence Classification Network Using Custom Training Loop

  This example shows how to train a network that classifies sequences with a custom learning rate schedule.

- Specify Training Options in Custom Training Loop

  Learn how to specify common training options in a custom training loop.

- Define Model Loss Function for Custom Training Loop

  Learn how to define a model loss function for a custom training loop.

- Update Batch Normalization Statistics in Custom Training Loop

  This example shows how to update the network state in a custom training loop.

- Make Predictions Using dlnetwork Object

  This example shows how to make predictions using a dlnetwork object by looping over mini-batches.

- Monitor Custom Training Loop Progress

  Track and plot custom training loop progress.

- Compare Custom Solvers Using Custom Training Loop

  This example shows how to train a deep learning network with different custom solvers and compare their accuracies.

- Multiple-Input and Multiple-Output Networks

  Learn how to define and train deep learning networks with multiple inputs or multiple outputs.

- Train Network with Multiple Outputs

  This example shows how to train a deep learning network with multiple outputs that predict both labels and angles of rotations of handwritten digits.

- Train Network in Parallel with Custom Training Loop

  This example shows how to set up a custom training loop to train a network in parallel.

- Run Custom Training Loops on a GPU and in Parallel

  Speed up custom training loops by running on a GPU, in parallel using multiple GPUs, or on a cluster.

- Detect Issues During Deep Neural Network Training

  This example shows how to automatically detect issues while training a deep neural network.

- Speed Up Deep Neural Network Training

  Learn how to accelerate deep neural network training.

Automatic Differentiation

- Automatic Differentiation Background

  Learn how automatic differentiation works.

- Deep Learning Data Formats

  Learn about deep learning data formats.

- List of Functions with dlarray Support

  View the list of functions that support dlarray objects.

- Use Automatic Differentiation In Deep Learning Toolbox

  How to use automatic differentiation in deep learning.

Generative Adversarial Networks

- Train Generative Adversarial Network (GAN)

  This example shows how to train a generative adversarial network to generate images.

- Train Conditional Generative Adversarial Network (CGAN)

  This example shows how to train a conditional generative adversarial network to generate images.

- Train Wasserstein GAN with Gradient Penalty (WGAN-GP)

  This example shows how to train a Wasserstein generative adversarial network with a gradient penalty (WGAN-GP) to generate images.

Graph Neural Networks

- Multivariate Time Series Anomaly Detection Using Graph Neural Network

  This example shows how to detect anomalies in multivariate time series data using a graph neural network (GNN).

- Node Classification Using Graph Convolutional Network

  This example shows how to classify nodes in a graph using a graph convolutional network (GCN).

- Multilabel Graph Classification Using Graph Attention Networks

  This example shows how to classify graphs that have multiple independent labels using graph attention networks (GATs).

Deep Learning Function Acceleration

- Deep Learning Function Acceleration for Custom Training Loops

  Accelerate model functions and model loss functions for custom training loops by caching and reusing traces.

- Accelerate Custom Training Loop Functions

  This example shows how to accelerate deep learning custom training loop and prediction functions.

- Check Accelerated Deep Learning Function Outputs

  This example shows how to check that the outputs of accelerated functions match the outputs of the underlying function.

- Evaluate Performance of Accelerated Deep Learning Function

  This example shows how to evaluate the performance gains of using an accelerated function.

Related Information

Featured Examples

Generate Images Using Diffusion

Generate new images using a diffusion model. (Since R2023b)

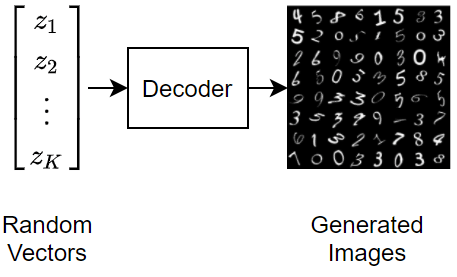

Train Variational Autoencoder (VAE) to Generate Images

Train a deep learning variational autoencoder (VAE) to generate images.

Train Fast Style Transfer Network

Train a network to transfer the style of an image to a second image. The network is based on the architecture defined in [1].

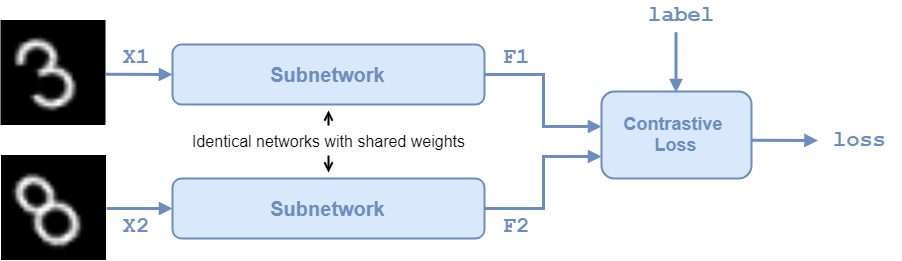

Train a Twin Network for Dimensionality Reduction

Train a twin neural network with shared weights to compare handwritten digits using dimensionality reduction.

Train Bayesian Neural Network

Train a Bayesian neural network (BNN) for image regression using Bayes by Backpropagation.

Train Image Classification Network Robust to Adversarial Examples

Train a neural network that is robust to adversarial examples using fast gradient sign method (FGSM) adversarial training.

Train Robust Deep Learning Network with Jacobian Regularization

Train a neural network that is robust to adversarial examples using a Jacobian regularization scheme.

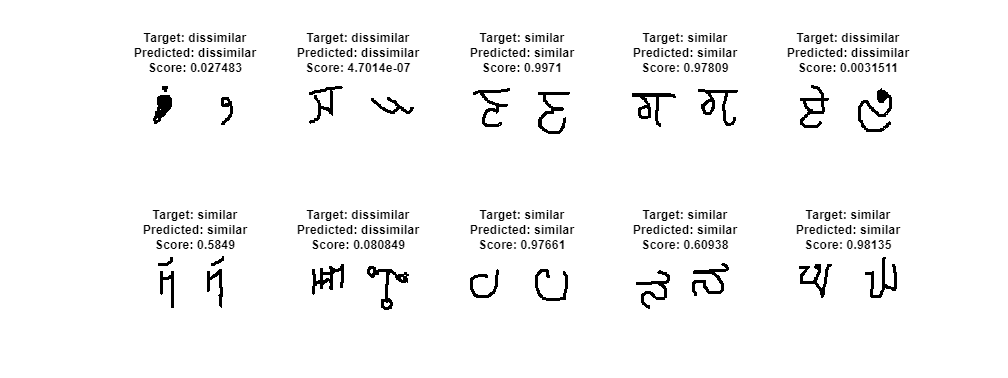

Train a Twin Neural Network to Compare Images

Train a twin neural network with shared weights to identify similar images of handwritten characters.

Train Network Using Cyclical Learning Rate for Snapshot Ensembling

Train a network to classify images of objects using a cyclical learning rate schedule and snapshot ensembling for better test accuracy. In the example, you learn how to use a cosine function for the learning rate schedule, take snapshots of the network during training to create a model ensemble, and add L2-norm regularization (weight decay) to the training loss.

Train Network Using Federated Learning

Train a network using federated learning. Federated learning is a technique that enables you to train a network in a distributed, decentralized way [1].

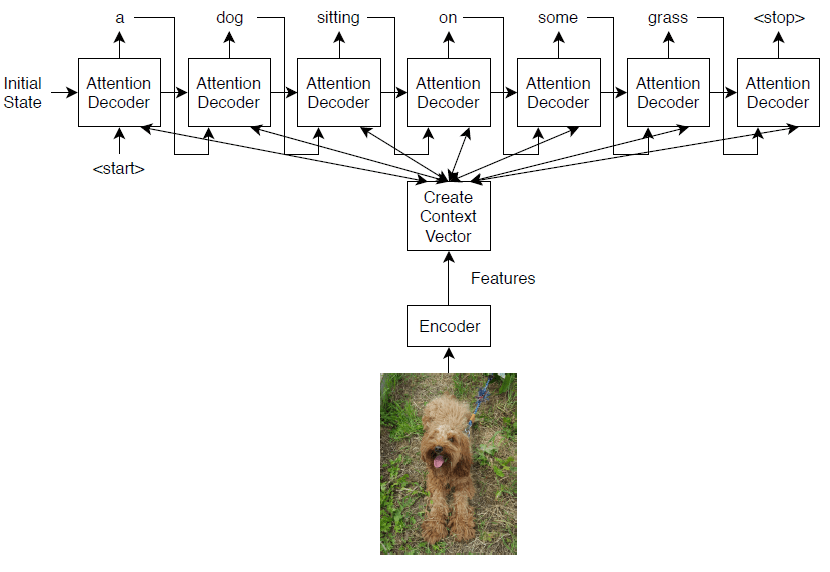

Image Captioning Using Attention

Train a deep learning model for image captioning using attention.